DiffuEraser: A Diffusion Model for Video Inpainting

DiffuEraser is a diffusion model for video inpainting that outperforms the state-of-the-art model ProPainter in both content completeness and temporal consistency while maintaining acceptable efficiency.

Update Log

- 2025.01.20: Release inference code.

TODO

- Release training code.

- Release HuggingFace/ModelScope demo.

- Release Gradio demo.

Results

More results will be displayed on the project page.

https://github.com/user-attachments/assets/b59d0b88-4186-4531-8698-adf6e62058f8

Method Overview

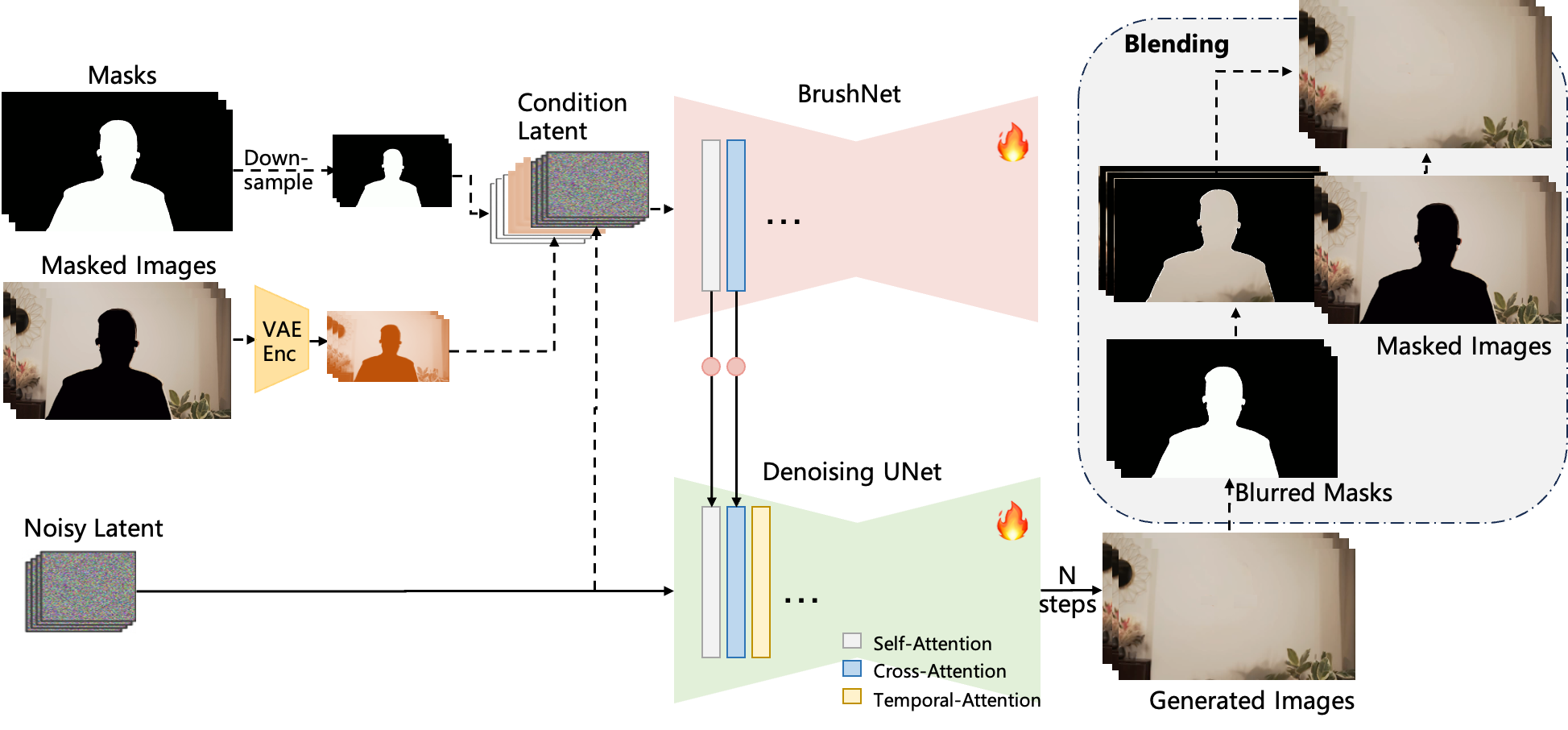

Our network is inspired by BrushNet and AnimateDiff. The architecture comprises the primary denoising UNet and an auxiliary BrushNet branch. Features extracted by the BrushNet branch pass through a zero convolution block and are then integrated into the denoising UNet layer by layer. The denoising UNet performs the denoising process to generate the final output. To enhance temporal consistency, temporal attention layers are added after both the self-attention and cross-attention layers. After denoising, the generated images are blended with the input masked images using blurred masks.

We incorporate prior information to provide initialization and weak conditioning, which helps mitigate noisy artifacts and suppress hallucinations.

Additionally, to improve temporal consistency during long-sequence inference, we expand the temporal receptive fields of both the prior model and DiffuEraser, and further enhance consistency by leveraging the temporal smoothing capabilities of Video Diffusion Models. Please read the paper for details.

Getting Started

Installation

Clone Repo

    git clone https://github.com/lixiaowen-xw/DiffuEraser.git

Create Conda Environment and Install Dependencies

    # create new anaconda env
    conda create -n diffueraser python=3.9.19
    conda activate diffueraser

    # install python dependencies
    pip install -r requirements.txt
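Before moving on, you can sanity-check the environment. The bash function below is a hypothetical helper, not part of the repository: it assumes conda exposes the active environment name in `CONDA_DEFAULT_ENV` and that `python` resolves to that environment's interpreter.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the DiffuEraser repo): check that the conda
# env created above is active and that `python` is a 3.9.x interpreter.
check_env() {
  local want_env="${1:-diffueraser}" ver

  # conda sets CONDA_DEFAULT_ENV to the name of the active environment.
  if [[ "${CONDA_DEFAULT_ENV:-}" != "$want_env" ]]; then
    echo "active conda env is '${CONDA_DEFAULT_ENV:-none}', expected '$want_env'" >&2
    return 1
  fi

  # `python --version` prints e.g. "Python 3.9.19".
  ver="$(python --version 2>&1)"
  if [[ "$ver" != "Python 3.9."* ]]; then
    echo "expected Python 3.9.x, got: $ver" >&2
    return 1
  fi
  echo "ok: $want_env ($ver)"
}
```

For example, run `conda activate diffueraser && check_env` after sourcing the function.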

Prepare pretrained models

Weights will be placed under the ./weights directory.

- Download our pretrained models from Hugging Face or ModelScope to the weights folder.

- Download the pretrained weights of the base model and other components:

    - stable-diffusion-v1-5. The full folder is over 30 GB. To save storage space, you can download only the necessary files (about 4 GB): feature_extractor, model_index.json, safety_checker, scheduler, text_encoder, and tokenizer.

    - PCM_Weights

    - propainter

    - sd-vae-ft-mse
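If you only want the necessary stable-diffusion-v1-5 files, a download along these lines may work. This is a sketch under assumptions: it uses `huggingface-cli download` from the huggingface_hub package, the repository id `stable-diffusion-v1-5/stable-diffusion-v1-5` is an assumption (point `SD15_REPO` at whichever SD1.5 copy you normally use), and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fetch only the SD1.5 components listed above.
# ASSUMPTIONS: `huggingface-cli` (huggingface_hub package) is installed, and
# SD15_REPO names the SD1.5 repository you want to download from.
SD15_REPO="${SD15_REPO:-stable-diffusion-v1-5/stable-diffusion-v1-5}"

download_sd15_minimal() {
  local dest="${1:-./weights/stable-diffusion-v1-5}"
  # Patterns are quoted so the shell passes them to the CLI unexpanded;
  # unet/vae are skipped because DiffuEraser ships its own unet_main and
  # sd-vae-ft-mse is downloaded separately.
  huggingface-cli download "$SD15_REPO" \
    --include "feature_extractor/*" "model_index.json" "safety_checker/*" \
              "scheduler/*" "text_encoder/*" "tokenizer/*" \
    --local-dir "$dest"
}
```

For example, `download_sd15_minimal ./weights/stable-diffusion-v1-5` places the files where the directory structure below expects them.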

The directory structure will be arranged as:

    weights
       |- diffuEraser
          |-brushnet
          |-unet_main
       |- stable-diffusion-v1-5
          |-feature_extractor
          |-...
       |- PCM_Weights
          |-sd15
       |- propainter
          |-ProPainter.pth
          |-raft-things.pth
          |-recurrent_flow_completion.pth
       |- sd-vae-ft-mse
          |-diffusion_pytorch_model.bin
          |-...
       |- README.md
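Once everything is downloaded, a quick check against the layout above can save a failed first run. `check_weights` is a hypothetical helper: it checks the paths shown in the tree plus the stable-diffusion-v1-5 files named in the download list, and it does not check the `...` entries.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report entries of the expected weights layout that are
# missing. The "..." entries in the tree are not checked.
check_weights() {
  local root="${1:-./weights}" missing=0 p
  local expected=(
    "diffuEraser/brushnet"
    "diffuEraser/unet_main"
    "stable-diffusion-v1-5/feature_extractor"
    "stable-diffusion-v1-5/model_index.json"
    "stable-diffusion-v1-5/safety_checker"
    "stable-diffusion-v1-5/scheduler"
    "stable-diffusion-v1-5/text_encoder"
    "stable-diffusion-v1-5/tokenizer"
    "PCM_Weights/sd15"
    "propainter/ProPainter.pth"
    "propainter/raft-things.pth"
    "propainter/recurrent_flow_completion.pth"
    "sd-vae-ft-mse/diffusion_pytorch_model.bin"
  )
  for p in "${expected[@]}"; do
    if [[ ! -e "$root/$p" ]]; then
      echo "missing: $root/$p" >&2
      missing=1
    fi
  done
  if (( missing == 0 )); then
    echo "all expected weights found under $root"
  fi
  return "$missing"
}
```

For example, `check_weights ./weights` lists anything missing on stderr and returns non-zero if the layout is incomplete.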

Main Inference

We provide some examples in the examples folder.

Run the following commands to try it out:

    cd DiffuEraser
    python run_diffueraser.py

The results will be saved in the results folder.

To test your own videos, replace input_video and input_mask in run_diffueraser.py with your own files. The first inference run may take a long time.

input_video and input_mask must have the same frame rate. We currently support only MP4 videos as input, not split frames. You can convert frames to a video with ffmpeg:

    ffmpeg -i image%03d.jpg -c:v libx264 -r 25 output.mp4
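If your frames were not captured at 25 fps, it may be safer to set the input frame rate explicitly and reuse the same value for the mask frames. A minimal sketch with a hypothetical function name: `-framerate` is ffmpeg's input option for image sequences, and the `yuv420p` pixel format is an addition that is not in the command above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: encode an image sequence (e.g. image%03d.jpg) as MP4 at
# an explicit frame rate. Use the SAME fps for video frames and mask frames.
frames_to_mp4() {
  local pattern="$1" fps="$2" out="$3"
  # -framerate is an input option: it tells the image-sequence reader how many
  # frames per second to assume (its default is 25).
  # yuv420p output keeps the H.264 file playable in common decoders.
  ffmpeg -y -framerate "$fps" -i "$pattern" -c:v libx264 -pix_fmt yuv420p "$out"
}

# Example (paths are placeholders):
#   frames_to_mp4 'frames/%05d.jpg' 30 video.mp4
#   frames_to_mp4 'masks/%05d.png'  30 mask.mp4
```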

Notice: If the frame rate of the mask video is not consistent with that of the input video, do not convert the mask video's frame rate to match; doing so leads to errors caused by frame misalignment.
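To catch a mismatch before a long run, you can compare the two frame rates with ffprobe. These helpers are hypothetical and not part of the repository; they assume ffprobe (bundled with ffmpeg) and awk are on your PATH.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: compare the frame rates of the input video and the
# mask video before starting inference.

# Print the first video stream's frame rate as a fraction, e.g. "25/1".
video_fps() {
  ffprobe -v error -select_streams v:0 \
    -show_entries stream=r_frame_rate \
    -of default=noprint_wrappers=1:nokey=1 "$1"
}

# Exit 0 if two "num/den" rates are equal (cross-multiplied, so 25/1 == 50/2).
fps_equal() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    split(a, x, "/"); split(b, y, "/")
    if (x[2] == "") x[2] = 1
    if (y[2] == "") y[2] = 1
    exit !(x[1] * y[2] == y[1] * x[2])
  }'
}

# Exit 0 when both files report the same frame rate; otherwise explain, exit 1.
same_fps() {
  local a b
  a="$(video_fps "$1")" || return 2
  b="$(video_fps "$2")" || return 2
  if fps_equal "$a" "$b"; then
    return 0
  fi
  echo "frame-rate mismatch: $1 is $a fps, $2 is $b fps" >&2
  return 1
}
```

For example, `same_fps video.mp4 mask.mp4 && python run_diffueraser.py` only starts inference when the rates agree.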

The table below shows the estimated GPU memory requirements and inference time at different resolutions:

| Resolution | GPU Memory | Inference Time (250 frames ≈ 10 s of video, on an L20) |
|---|---|---|
| 1280 x 720 | 33 GB | 314 s |
| 960 x 540 | 20 GB | 175 s |
| 640 x 360 | 12 GB | 92 s |
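As a rough guide, you could map available GPU memory to the largest resolution in the table. The helpers below are hypothetical; the thresholds are the table's estimates for 250-frame clips on an L20 and may differ for other clip lengths or GPUs, and reading the table's "G" as GiB (nvidia-smi reports MiB) is an assumption.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: suggest a resolution from the memory table above.

# Largest listed resolution whose estimated memory fits in $1 (integer GiB).
pick_resolution() {
  local mem_gb="$1"
  if (( mem_gb >= 33 )); then
    echo "1280x720"
  elif (( mem_gb >= 20 )); then
    echo "960x540"
  elif (( mem_gb >= 12 )); then
    echo "640x360"
  else
    echo "${mem_gb}G is below the smallest listed setting (640x360, 12G)" >&2
    return 1
  fi
}

# Total memory of GPU 0 in whole GiB (nvidia-smi reports MiB; rounded down).
gpu_mem_gb() {
  local mib
  mib="$(nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits -i 0)" || return 1
  echo $(( mib / 1024 ))
}
```

For example, `pick_resolution "$(gpu_mem_gb)"` prints the suggested resolution for GPU 0.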

Citation

If you find our repo useful for your research, please consider citing our paper:

    @misc{li2025diffueraserdiffusionmodelvideo,
      title={DiffuEraser: A Diffusion Model for Video Inpainting},
      author={Xiaowen Li and Haolan Xue and Peiran Ren and Liefeng Bo},
      year={2025},
      eprint={2501.10018},
      archivePrefix={arXiv},
      primaryClass={cs.CV},
      url={https://arxiv.org/abs/2501.10018},
    }

License

This repository uses ProPainter as the prior model. Users must comply with ProPainter's license when using this code, or replace ProPainter with another prior model.

This project is licensed under the Apache License Version 2.0 except for the third-party components listed below.

Acknowledgement

This code is based on BrushNet, ProPainter and AnimateDiff. The example videos come from Pexels, DAVIS, SA-V and DanceTrack. Thanks for their awesome work.