Spaces:

Running

on

L40S

Running

on

L40S

Migrated from GitHub

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +2 -0

- LICENSE +202 -0

- ORIGINAL_README.md +171 -0

- assets/DiffuEraser_pipeline.png +0 -0

- diffueraser/diffueraser.py +432 -0

- diffueraser/pipeline_diffueraser.py +1349 -0

- examples/example1/mask.mp4 +0 -0

- examples/example1/video.mp4 +0 -0

- examples/example2/mask.mp4 +3 -0

- examples/example2/video.mp4 +0 -0

- examples/example3/mask.mp4 +0 -0

- examples/example3/video.mp4 +3 -0

- libs/brushnet_CA.py +939 -0

- libs/transformer_temporal.py +375 -0

- libs/unet_2d_blocks.py +0 -0

- libs/unet_2d_condition.py +1359 -0

- libs/unet_3d_blocks.py +2463 -0

- libs/unet_motion_model.py +975 -0

- propainter/RAFT/__init__.py +2 -0

- propainter/RAFT/corr.py +111 -0

- propainter/RAFT/datasets.py +235 -0

- propainter/RAFT/demo.py +79 -0

- propainter/RAFT/extractor.py +267 -0

- propainter/RAFT/raft.py +146 -0

- propainter/RAFT/update.py +139 -0

- propainter/RAFT/utils/__init__.py +2 -0

- propainter/RAFT/utils/augmentor.py +246 -0

- propainter/RAFT/utils/flow_viz.py +132 -0

- propainter/RAFT/utils/flow_viz_pt.py +118 -0

- propainter/RAFT/utils/frame_utils.py +137 -0

- propainter/RAFT/utils/utils.py +82 -0

- propainter/core/dataset.py +232 -0

- propainter/core/dist.py +47 -0

- propainter/core/loss.py +180 -0

- propainter/core/lr_scheduler.py +112 -0

- propainter/core/metrics.py +571 -0

- propainter/core/prefetch_dataloader.py +125 -0

- propainter/core/trainer.py +509 -0

- propainter/core/trainer_flow_w_edge.py +380 -0

- propainter/core/utils.py +371 -0

- propainter/inference.py +520 -0

- propainter/model/__init__.py +1 -0

- propainter/model/canny/canny_filter.py +256 -0

- propainter/model/canny/filter.py +288 -0

- propainter/model/canny/gaussian.py +116 -0

- propainter/model/canny/kernels.py +690 -0

- propainter/model/canny/sobel.py +263 -0

- propainter/model/misc.py +131 -0

- propainter/model/modules/base_module.py +131 -0

- propainter/model/modules/deformconv.py +54 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

examples/example2/mask.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

examples/example3/video.mp4 filter=lfs diff=lfs merge=lfs -text

|

LICENSE

ADDED

|

@@ -0,0 +1,202 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

|

| 2 |

+

Apache License

|

| 3 |

+

Version 2.0, January 2004

|

| 4 |

+

http://www.apache.org/licenses/

|

| 5 |

+

|

| 6 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 7 |

+

|

| 8 |

+

1. Definitions.

|

| 9 |

+

|

| 10 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 11 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 12 |

+

|

| 13 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 14 |

+

the copyright owner that is granting the License.

|

| 15 |

+

|

| 16 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 17 |

+

other entities that control, are controlled by, or are under common

|

| 18 |

+

control with that entity. For the purposes of this definition,

|

| 19 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 20 |

+

direction or management of such entity, whether by contract or

|

| 21 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 22 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 23 |

+

|

| 24 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 25 |

+

exercising permissions granted by this License.

|

| 26 |

+

|

| 27 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 28 |

+

including but not limited to software source code, documentation

|

| 29 |

+

source, and configuration files.

|

| 30 |

+

|

| 31 |

+

"Object" form shall mean any form resulting from mechanical

|

| 32 |

+

transformation or translation of a Source form, including but

|

| 33 |

+

not limited to compiled object code, generated documentation,

|

| 34 |

+

and conversions to other media types.

|

| 35 |

+

|

| 36 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 37 |

+

Object form, made available under the License, as indicated by a

|

| 38 |

+

copyright notice that is included in or attached to the work

|

| 39 |

+

(an example is provided in the Appendix below).

|

| 40 |

+

|

| 41 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 42 |

+

form, that is based on (or derived from) the Work and for which the

|

| 43 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 44 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 45 |

+

of this License, Derivative Works shall not include works that remain

|

| 46 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 47 |

+

the Work and Derivative Works thereof.

|

| 48 |

+

|

| 49 |

+

"Contribution" shall mean any work of authorship, including

|

| 50 |

+

the original version of the Work and any modifications or additions

|

| 51 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 52 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 53 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 54 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 55 |

+

means any form of electronic, verbal, or written communication sent

|

| 56 |

+

to the Licensor or its representatives, including but not limited to

|

| 57 |

+

communication on electronic mailing lists, source code control systems,

|

| 58 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 59 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 60 |

+

excluding communication that is conspicuously marked or otherwise

|

| 61 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 62 |

+

|

| 63 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 64 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 65 |

+

subsequently incorporated within the Work.

|

| 66 |

+

|

| 67 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 68 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 69 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 70 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 71 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 72 |

+

Work and such Derivative Works in Source or Object form.

|

| 73 |

+

|

| 74 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 75 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 76 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 77 |

+

(except as stated in this section) patent license to make, have made,

|

| 78 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 79 |

+

where such license applies only to those patent claims licensable

|

| 80 |

+

by such Contributor that are necessarily infringed by their

|

| 81 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 82 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 83 |

+

institute patent litigation against any entity (including a

|

| 84 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 85 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 86 |

+

or contributory patent infringement, then any patent licenses

|

| 87 |

+

granted to You under this License for that Work shall terminate

|

| 88 |

+

as of the date such litigation is filed.

|

| 89 |

+

|

| 90 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 91 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 92 |

+

modifications, and in Source or Object form, provided that You

|

| 93 |

+

meet the following conditions:

|

| 94 |

+

|

| 95 |

+

(a) You must give any other recipients of the Work or

|

| 96 |

+

Derivative Works a copy of this License; and

|

| 97 |

+

|

| 98 |

+

(b) You must cause any modified files to carry prominent notices

|

| 99 |

+

stating that You changed the files; and

|

| 100 |

+

|

| 101 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 102 |

+

that You distribute, all copyright, patent, trademark, and

|

| 103 |

+

attribution notices from the Source form of the Work,

|

| 104 |

+

excluding those notices that do not pertain to any part of

|

| 105 |

+

the Derivative Works; and

|

| 106 |

+

|

| 107 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 108 |

+

distribution, then any Derivative Works that You distribute must

|

| 109 |

+

include a readable copy of the attribution notices contained

|

| 110 |

+

within such NOTICE file, excluding those notices that do not

|

| 111 |

+

pertain to any part of the Derivative Works, in at least one

|

| 112 |

+

of the following places: within a NOTICE text file distributed

|

| 113 |

+

as part of the Derivative Works; within the Source form or

|

| 114 |

+

documentation, if provided along with the Derivative Works; or,

|

| 115 |

+

within a display generated by the Derivative Works, if and

|

| 116 |

+

wherever such third-party notices normally appear. The contents

|

| 117 |

+

of the NOTICE file are for informational purposes only and

|

| 118 |

+

do not modify the License. You may add Your own attribution

|

| 119 |

+

notices within Derivative Works that You distribute, alongside

|

| 120 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 121 |

+

that such additional attribution notices cannot be construed

|

| 122 |

+

as modifying the License.

|

| 123 |

+

|

| 124 |

+

You may add Your own copyright statement to Your modifications and

|

| 125 |

+

may provide additional or different license terms and conditions

|

| 126 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 127 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 128 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 129 |

+

the conditions stated in this License.

|

| 130 |

+

|

| 131 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 132 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 133 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 134 |

+

this License, without any additional terms or conditions.

|

| 135 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 136 |

+

the terms of any separate license agreement you may have executed

|

| 137 |

+

with Licensor regarding such Contributions.

|

| 138 |

+

|

| 139 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 140 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 141 |

+

except as required for reasonable and customary use in describing the

|

| 142 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 143 |

+

|

| 144 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 145 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 146 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 147 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 148 |

+

implied, including, without limitation, any warranties or conditions

|

| 149 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 150 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 151 |

+

appropriateness of using or redistributing the Work and assume any

|

| 152 |

+

risks associated with Your exercise of permissions under this License.

|

| 153 |

+

|

| 154 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 155 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 156 |

+

unless required by applicable law (such as deliberate and grossly

|

| 157 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 158 |

+

liable to You for damages, including any direct, indirect, special,

|

| 159 |

+

incidental, or consequential damages of any character arising as a

|

| 160 |

+

result of this License or out of the use or inability to use the

|

| 161 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 162 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 163 |

+

other commercial damages or losses), even if such Contributor

|

| 164 |

+

has been advised of the possibility of such damages.

|

| 165 |

+

|

| 166 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 167 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 168 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 169 |

+

or other liability obligations and/or rights consistent with this

|

| 170 |

+

License. However, in accepting such obligations, You may act only

|

| 171 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 172 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 173 |

+

defend, and hold each Contributor harmless for any liability

|

| 174 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 175 |

+

of your accepting any such warranty or additional liability.

|

| 176 |

+

|

| 177 |

+

END OF TERMS AND CONDITIONS

|

| 178 |

+

|

| 179 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 180 |

+

|

| 181 |

+

To apply the Apache License to your work, attach the following

|

| 182 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 183 |

+

replaced with your own identifying information. (Don't include

|

| 184 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 185 |

+

comment syntax for the file format. We also recommend that a

|

| 186 |

+

file or class name and description of purpose be included on the

|

| 187 |

+

same "printed page" as the copyright notice for easier

|

| 188 |

+

identification within third-party archives.

|

| 189 |

+

|

| 190 |

+

Copyright [yyyy] [name of copyright owner]

|

| 191 |

+

|

| 192 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 193 |

+

you may not use this file except in compliance with the License.

|

| 194 |

+

You may obtain a copy of the License at

|

| 195 |

+

|

| 196 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 197 |

+

|

| 198 |

+

Unless required by applicable law or agreed to in writing, software

|

| 199 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 200 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 201 |

+

See the License for the specific language governing permissions and

|

| 202 |

+

limitations under the License.

|

ORIGINAL_README.md

ADDED

|

@@ -0,0 +1,171 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<div align="center">

|

| 2 |

+

|

| 3 |

+

<h1>DiffuEraser: A Diffusion Model for Video Inpainting</h1>

|

| 4 |

+

|

| 5 |

+

<div>

|

| 6 |

+

Xiaowen Li

|

| 7 |

+

Haolan Xue

|

| 8 |

+

Peiran Ren

|

| 9 |

+

Liefeng Bo

|

| 10 |

+

</div>

|

| 11 |

+

<div>

|

| 12 |

+

Tongyi Lab, Alibaba Group

|

| 13 |

+

</div>

|

| 14 |

+

|

| 15 |

+

<div>

|

| 16 |

+

<strong>TECHNICAL REPORT</strong>

|

| 17 |

+

</div>

|

| 18 |

+

|

| 19 |

+

<div>

|

| 20 |

+

<h4 align="center">

|

| 21 |

+

<a href="https://lixiaowen-xw.github.io/DiffuEraser-page" target='_blank'>

|

| 22 |

+

<img src="https://img.shields.io/badge/%F0%9F%8C%B1-Project%20Page-blue">

|

| 23 |

+

</a>

|

| 24 |

+

<a href="https://arxiv.org/abs/2501.10018" target='_blank'>

|

| 25 |

+

<img src="https://img.shields.io/badge/arXiv-2501.10018-B31B1B.svg">

|

| 26 |

+

</a>

|

| 27 |

+

</h4>

|

| 28 |

+

</div>

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

</div>

|

| 34 |

+

|

| 35 |

+

DiffuEraser is a diffusion model for video inpainting, which outperforms state-of-the-art model Propainter in both content completeness and temporal consistency while maintaining acceptable efficiency.

|

| 36 |

+

|

| 37 |

+

---

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

## Update Log

|

| 41 |

+

- *2025.01.20*: Release inference code.

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

## TODO

|

| 45 |

+

- [ ] Release training code.

|

| 46 |

+

- [ ] Release HuggingFace/ModelScope demo.

|

| 47 |

+

- [ ] Release gradio demo.

|

| 48 |

+

|

| 49 |

+

|

| 50 |

+

## Results

|

| 51 |

+

More results will be displayed on the project page.

|

| 52 |

+

|

| 53 |

+

https://github.com/user-attachments/assets/b59d0b88-4186-4531-8698-adf6e62058f8

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

## Method Overview

|

| 59 |

+

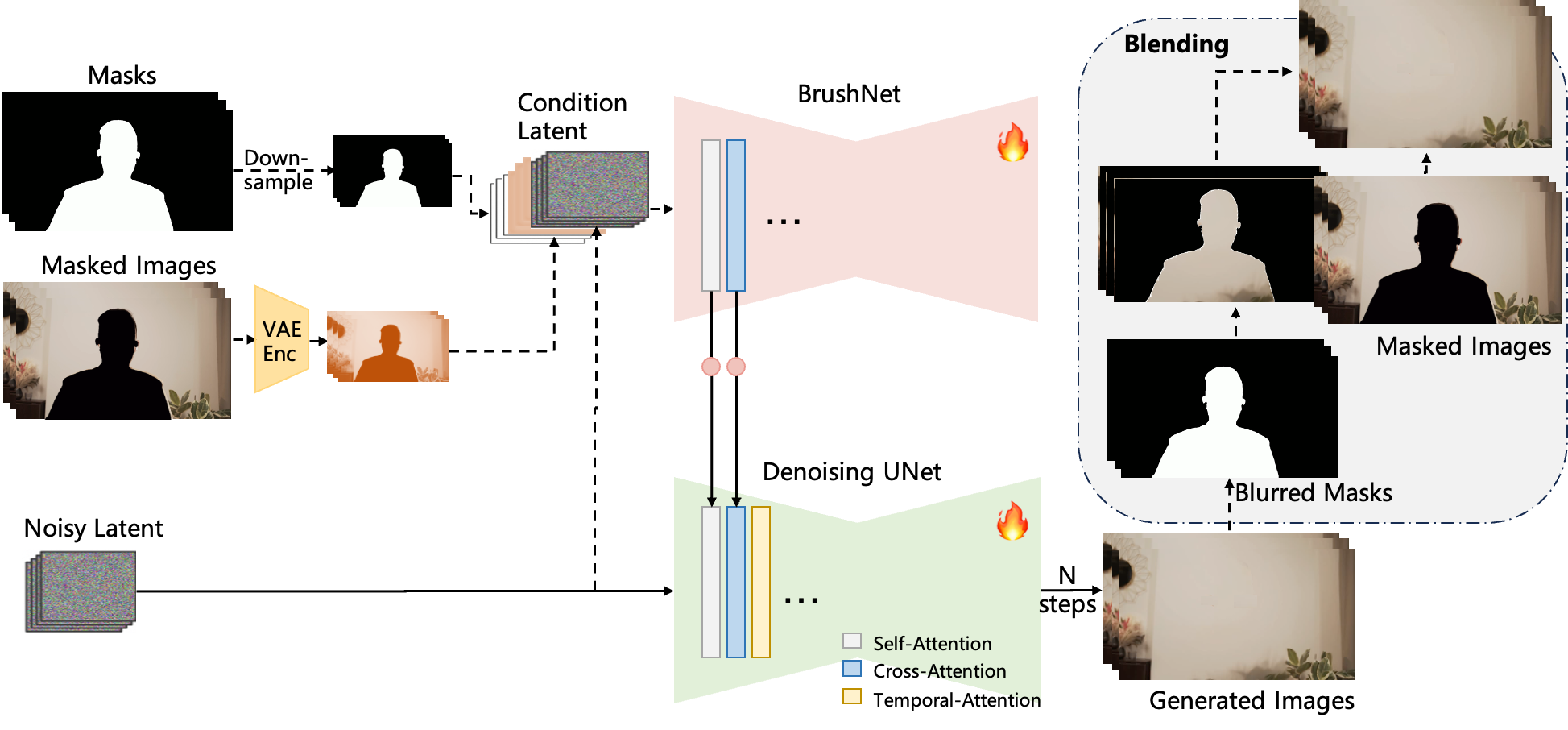

Our network is inspired by [BrushNet](https://github.com/TencentARC/BrushNet) and [Animatediff](https://github.com/guoyww/AnimateDiff). The architecture comprises the primary `denoising UNet` and an auxiliary `BrushNet branch`. Features extracted by BrushNet branch are integrated into the denoising UNet layer by layer after a zero convolution block. The denoising UNet performs the denoising process to generate the final output. To enhance temporal consistency, `temporal attention` mechanisms are incorporated following both self-attention and cross-attention layers. After denoising, the generated images are blended with the input masked images using blurred masks.

|

| 60 |

+

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

We incorporate `prior` information to provide initialization and weak conditioning, which helps mitigate noisy artifacts and suppress hallucinations.

|

| 64 |

+

Additionally, to improve temporal consistency during long-sequence inference, we expand the `temporal receptive fields` of both the prior model and DiffuEraser, and further enhance consistency by leveraging the temporal smoothing capabilities of Video Diffusion Models. Please read the paper for details.

|

| 65 |

+

|

| 66 |

+

|

| 67 |

+

## Getting Started

|

| 68 |

+

|

| 69 |

+

#### Installation

|

| 70 |

+

|

| 71 |

+

1. Clone Repo

|

| 72 |

+

|

| 73 |

+

```bash

|

| 74 |

+

git clone https://github.com/lixiaowen-xw/DiffuEraser.git

|

| 75 |

+

```

|

| 76 |

+

|

| 77 |

+

2. Create Conda Environment and Install Dependencies

|

| 78 |

+

|

| 79 |

+

```bash

|

| 80 |

+

# create new anaconda env

|

| 81 |

+

conda create -n diffueraser python=3.9.19

|

| 82 |

+

conda activate diffueraser

|

| 83 |

+

# install python dependencies

|

| 84 |

+

pip install -r requirements.txt

|

| 85 |

+

```

|

| 86 |

+

|

| 87 |

+

#### Prepare pretrained models

|

| 88 |

+

Weights will be placed under the `./weights` directory.

|

| 89 |

+

1. Download our pretrained models from [Hugging Face](https://huggingface.co/lixiaowen/diffuEraser) or [ModelScope](https://www.modelscope.cn/xingzi/diffuEraser.git) to the `weights` folder.

|

| 90 |

+

2. Download pretrained weight of based models and other components:

|

| 91 |

+

- [stable-diffusion-v1-5](https://huggingface.co/stable-diffusion-v1-5/stable-diffusion-v1-5) . The full folder size is over 30 GB. If you want to save storage space, you can download only the necessary files: feature_extractor, model_index.json, safety_checker, scheduler, text_encoder, and tokenizer,about 4GB.

|

| 92 |

+

- [PCM_Weights](https://huggingface.co/wangfuyun/PCM_Weights)

|

| 93 |

+

- [propainter](https://github.com/sczhou/ProPainter/releases/tag/v0.1.0)

|

| 94 |

+

- [sd-vae-ft-mse](https://huggingface.co/stabilityai/sd-vae-ft-mse)

|

| 95 |

+

|

| 96 |

+

|

| 97 |

+

The directory structure will be arranged as:

|

| 98 |

+

```

|

| 99 |

+

weights

|

| 100 |

+

|- diffuEraser

|

| 101 |

+

|-brushnet

|

| 102 |

+

|-unet_main

|

| 103 |

+

|- stable-diffusion-v1-5

|

| 104 |

+

|-feature_extractor

|

| 105 |

+

|-...

|

| 106 |

+

|- PCM_Weights

|

| 107 |

+

|-sd15

|

| 108 |

+

|- propainter

|

| 109 |

+

|-ProPainter.pth

|

| 110 |

+

|-raft-things.pth

|

| 111 |

+

|-recurrent_flow_completion.pth

|

| 112 |

+

|- sd-vae-ft-mse

|

| 113 |

+

|-diffusion_pytorch_model.bin

|

| 114 |

+

|-...

|

| 115 |

+

|- README.md

|

| 116 |

+

```

|

| 117 |

+

|

| 118 |

+

#### Main Inference

|

| 119 |

+

We provide some examples in the [`examples`](./examples) folder.

|

| 120 |

+

Run the following commands to try it out:

|

| 121 |

+

```shell

|

| 122 |

+

cd DiffuEraser

|

| 123 |

+

python run_diffueraser.py

|

| 124 |

+

```

|

| 125 |

+

The results will be saved in the `results` folder.

|

| 126 |

+

To test your own videos, please replace the `input_video` and `input_mask` in run_diffueraser.py . The first inference may take a long time.

|

| 127 |

+

|

| 128 |

+

The `frame rate` of input_video and input_mask needs to be consistent. We currently only support `mp4 video` as input intead of split frames, you can convert frames to video using ffmepg:

|

| 129 |

+

```shell

|

| 130 |

+

ffmpeg -i image%03d.jpg -c:v libx264 -r 25 output.mp4

|

| 131 |

+

```

|

| 132 |

+

Notice: Do not convert the frame rate of mask video if it is not consitent with that of the input video, which would lead to errors due to misalignment.

|

| 133 |

+

|

| 134 |

+

|

| 135 |

+

Blow shows the estimated GPU memory requirements and inference time for different resolution:

|

| 136 |

+

|

| 137 |

+

| Resolution | Gpu Memeory | Inference Time(250f(~10s), L20) |

|

| 138 |

+

| :--------- | :---------: | :-----------------------------: |

|

| 139 |

+

| 1280 x 720 | 33G | 314s |

|

| 140 |

+

| 960 x 540 | 20G | 175s |

|

| 141 |

+

| 640 x 360 | 12G | 92s |

|

| 142 |

+

|

| 143 |

+

|

| 144 |

+

## Citation

|

| 145 |

+

|

| 146 |

+

If you find our repo useful for your research, please consider citing our paper:

|

| 147 |

+

|

| 148 |

+

```bibtex

|

| 149 |

+

@misc{li2025diffueraserdiffusionmodelvideo,

|

| 150 |

+

title={DiffuEraser: A Diffusion Model for Video Inpainting},

|

| 151 |

+

author={Xiaowen Li and Haolan Xue and Peiran Ren and Liefeng Bo},

|

| 152 |

+

year={2025},

|

| 153 |

+

eprint={2501.10018},

|

| 154 |

+

archivePrefix={arXiv},

|

| 155 |

+

primaryClass={cs.CV},

|

| 156 |

+

url={https://arxiv.org/abs/2501.10018},

|

| 157 |

+

}

|

| 158 |

+

```

|

| 159 |

+

|

| 160 |

+

|

| 161 |

+

## License

|

| 162 |

+

This repository uses [Propainter](https://github.com/sczhou/ProPainter) as the prior model. Users must comply with [Propainter's license](https://github.com/sczhou/ProPainter/blob/main/LICENSE) when using this code. Or you can use other model to replace it.

|

| 163 |

+

|

| 164 |

+

This project is licensed under the [Apache License Version 2.0](./LICENSE) except for the third-party components listed below.

|

| 165 |

+

|

| 166 |

+

|

| 167 |

+

## Acknowledgement

|

| 168 |

+

|

| 169 |

+

This code is based on [BrushNet](https://github.com/TencentARC/BrushNet), [Propainter](https://github.com/sczhou/ProPainter) and [Animatediff](https://github.com/guoyww/AnimateDiff). The example videos come from [Pexels](https://www.pexels.com/), [DAVIS](https://davischallenge.org/), [SA-V](https://ai.meta.com/datasets/segment-anything-video) and [DanceTrack](https://dancetrack.github.io/). Thanks for their awesome works.

|

| 170 |

+

|

| 171 |

+

|

assets/DiffuEraser_pipeline.png

ADDED

|

diffueraser/diffueraser.py

ADDED

|

@@ -0,0 +1,432 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import gc

|

| 2 |

+

import copy

|

| 3 |

+

import cv2

|

| 4 |

+

import os

|

| 5 |

+

import numpy as np

|

| 6 |

+

import torch

|

| 7 |

+

import torchvision

|

| 8 |

+

from einops import repeat

|

| 9 |

+

from PIL import Image, ImageFilter

|

| 10 |

+

from diffusers import (

|

| 11 |

+

AutoencoderKL,

|

| 12 |

+

DDPMScheduler,

|

| 13 |

+

UniPCMultistepScheduler,

|

| 14 |

+

LCMScheduler,

|

| 15 |

+

)

|

| 16 |

+

from diffusers.schedulers import TCDScheduler

|

| 17 |

+

from diffusers.image_processor import PipelineImageInput, VaeImageProcessor

|

| 18 |

+

from diffusers.utils.torch_utils import randn_tensor

|

| 19 |

+

from transformers import AutoTokenizer, PretrainedConfig

|

| 20 |

+

|

| 21 |

+

from libs.unet_motion_model import MotionAdapter, UNetMotionModel

|

| 22 |

+

from libs.brushnet_CA import BrushNetModel

|

| 23 |

+

from libs.unet_2d_condition import UNet2DConditionModel

|

| 24 |

+

from diffueraser.pipeline_diffueraser import StableDiffusionDiffuEraserPipeline

|

| 25 |

+

|

| 26 |

+

|

| 27 |

+

checkpoints = {

|

| 28 |

+

"2-Step": ["pcm_{}_smallcfg_2step_converted.safetensors", 2, 0.0],

|

| 29 |

+

"4-Step": ["pcm_{}_smallcfg_4step_converted.safetensors", 4, 0.0],

|

| 30 |

+

"8-Step": ["pcm_{}_smallcfg_8step_converted.safetensors", 8, 0.0],

|

| 31 |

+

"16-Step": ["pcm_{}_smallcfg_16step_converted.safetensors", 16, 0.0],

|

| 32 |

+

"Normal CFG 4-Step": ["pcm_{}_normalcfg_4step_converted.safetensors", 4, 7.5],

|

| 33 |

+

"Normal CFG 8-Step": ["pcm_{}_normalcfg_8step_converted.safetensors", 8, 7.5],

|

| 34 |

+

"Normal CFG 16-Step": ["pcm_{}_normalcfg_16step_converted.safetensors", 16, 7.5],

|

| 35 |

+

"LCM-Like LoRA": [

|

| 36 |

+

"pcm_{}_lcmlike_lora_converted.safetensors",

|

| 37 |

+

4,

|

| 38 |

+

0.0,

|

| 39 |

+

],

|

| 40 |

+

}

|

| 41 |

+

|

| 42 |

+

def import_model_class_from_model_name_or_path(pretrained_model_name_or_path: str, revision: str):

|

| 43 |

+

text_encoder_config = PretrainedConfig.from_pretrained(

|

| 44 |

+

pretrained_model_name_or_path,

|

| 45 |

+

subfolder="text_encoder",

|

| 46 |

+

revision=revision,

|

| 47 |

+

)

|

| 48 |

+

model_class = text_encoder_config.architectures[0]

|

| 49 |

+

|

| 50 |

+

if model_class == "CLIPTextModel":

|

| 51 |

+

from transformers import CLIPTextModel

|

| 52 |

+

|

| 53 |

+

return CLIPTextModel

|

| 54 |

+

elif model_class == "RobertaSeriesModelWithTransformation":

|

| 55 |

+

from diffusers.pipelines.alt_diffusion.modeling_roberta_series import RobertaSeriesModelWithTransformation

|

| 56 |

+

|

| 57 |

+

return RobertaSeriesModelWithTransformation

|

| 58 |

+

else:

|

| 59 |

+

raise ValueError(f"{model_class} is not supported.")

|

| 60 |

+

|

| 61 |

+

def resize_frames(frames, size=None):

|

| 62 |

+

if size is not None:

|

| 63 |

+

out_size = size

|

| 64 |

+

process_size = (out_size[0]-out_size[0]%8, out_size[1]-out_size[1]%8)

|

| 65 |

+

frames = [f.resize(process_size) for f in frames]

|

| 66 |

+

else:

|

| 67 |

+

out_size = frames[0].size

|

| 68 |

+

process_size = (out_size[0]-out_size[0]%8, out_size[1]-out_size[1]%8)

|

| 69 |

+

if not out_size == process_size:

|

| 70 |

+

frames = [f.resize(process_size) for f in frames]

|

| 71 |

+

|

| 72 |

+

return frames

|

| 73 |

+

|

| 74 |

+

def read_mask(validation_mask, fps, n_total_frames, img_size, mask_dilation_iter, frames):

|

| 75 |

+

cap = cv2.VideoCapture(validation_mask)

|

| 76 |

+

if not cap.isOpened():

|

| 77 |

+

print("Error: Could not open mask video.")

|

| 78 |

+

exit()

|

| 79 |

+

mask_fps = cap.get(cv2.CAP_PROP_FPS)

|

| 80 |

+

if mask_fps != fps:

|

| 81 |

+

cap.release()

|

| 82 |

+

raise ValueError("The frame rate of all input videos needs to be consistent.")

|

| 83 |

+

|

| 84 |

+

masks = []

|

| 85 |

+

masked_images = []

|

| 86 |

+

idx = 0

|

| 87 |

+

while True:

|

| 88 |

+

ret, frame = cap.read()

|

| 89 |

+

if not ret:

|

| 90 |

+

break

|

| 91 |

+

if(idx >= n_total_frames):

|

| 92 |

+

break

|

| 93 |

+

mask = Image.fromarray(frame[...,::-1]).convert('L')

|

| 94 |

+

if mask.size != img_size:

|

| 95 |

+

mask = mask.resize(img_size, Image.NEAREST)

|

| 96 |

+

mask = np.asarray(mask)

|

| 97 |

+

m = np.array(mask > 0).astype(np.uint8)

|

| 98 |

+

m = cv2.erode(m,

|

| 99 |

+

cv2.getStructuringElement(cv2.MORPH_RECT, (3, 3)),

|

| 100 |

+

iterations=1)

|

| 101 |

+

m = cv2.dilate(m,

|

| 102 |

+

cv2.getStructuringElement(cv2.MORPH_RECT, (3, 3)),

|

| 103 |

+

iterations=mask_dilation_iter)

|

| 104 |

+

|

| 105 |

+

mask = Image.fromarray(m * 255)

|

| 106 |

+

masks.append(mask)

|

| 107 |

+

|

| 108 |

+

masked_image = np.array(frames[idx])*(1-(np.array(mask)[:,:,np.newaxis].astype(np.float32)/255))

|

| 109 |

+

masked_image = Image.fromarray(masked_image.astype(np.uint8))

|

| 110 |

+

masked_images.append(masked_image)

|

| 111 |

+

|

| 112 |

+

idx += 1

|

| 113 |

+

cap.release()

|

| 114 |

+

|

| 115 |

+

return masks, masked_images

|

| 116 |

+

|

| 117 |

+

def read_priori(priori, fps, n_total_frames, img_size):

|

| 118 |

+

cap = cv2.VideoCapture(priori)

|

| 119 |

+

if not cap.isOpened():

|

| 120 |

+

print("Error: Could not open video.")

|

| 121 |

+

exit()

|

| 122 |

+

priori_fps = cap.get(cv2.CAP_PROP_FPS)

|

| 123 |

+

if priori_fps != fps:

|

| 124 |

+

cap.release()

|

| 125 |

+

raise ValueError("The frame rate of all input videos needs to be consistent.")

|

| 126 |

+

|

| 127 |

+

prioris=[]

|

| 128 |

+

idx = 0

|

| 129 |

+

while True:

|

| 130 |

+

ret, frame = cap.read()

|

| 131 |

+

if not ret:

|

| 132 |

+

break

|

| 133 |

+

if(idx >= n_total_frames):

|

| 134 |

+

break

|

| 135 |

+

img = Image.fromarray(frame[...,::-1])

|

| 136 |

+

if img.size != img_size:

|

| 137 |

+

img = img.resize(img_size)

|

| 138 |

+

prioris.append(img)

|

| 139 |

+

idx += 1

|

| 140 |

+

cap.release()

|

| 141 |

+

|

| 142 |

+

os.remove(priori) # remove priori

|

| 143 |

+

|

| 144 |

+

return prioris

|

| 145 |

+

|

| 146 |

+

def read_video(validation_image, video_length, nframes, max_img_size):

|

| 147 |

+

vframes, aframes, info = torchvision.io.read_video(filename=validation_image, pts_unit='sec', end_pts=video_length) # RGB

|

| 148 |

+

fps = info['video_fps']

|

| 149 |

+

n_total_frames = int(video_length * fps)

|

| 150 |

+

n_clip = int(np.ceil(n_total_frames/nframes))

|

| 151 |

+

|

| 152 |

+

frames = list(vframes.numpy())[:n_total_frames]

|

| 153 |

+

frames = [Image.fromarray(f) for f in frames]

|

| 154 |

+

max_size = max(frames[0].size)

|

| 155 |

+

if(max_size<256):

|

| 156 |

+

raise ValueError("The resolution of the uploaded video must be larger than 256x256.")

|

| 157 |

+

if(max_size>4096):

|

| 158 |

+

raise ValueError("The resolution of the uploaded video must be smaller than 4096x4096.")

|

| 159 |

+

if max_size>max_img_size:

|

| 160 |

+

ratio = max_size/max_img_size

|

| 161 |

+

ratio_size = (int(frames[0].size[0]/ratio),int(frames[0].size[1]/ratio))

|

| 162 |

+

img_size = (ratio_size[0]-ratio_size[0]%8, ratio_size[1]-ratio_size[1]%8)

|

| 163 |

+

resize_flag=True

|

| 164 |

+

elif (frames[0].size[0]%8==0) and (frames[0].size[1]%8==0):

|

| 165 |

+

img_size = frames[0].size

|

| 166 |

+

resize_flag=False

|

| 167 |

+

else:

|

| 168 |

+

ratio_size = frames[0].size

|

| 169 |

+

img_size = (ratio_size[0]-ratio_size[0]%8, ratio_size[1]-ratio_size[1]%8)

|

| 170 |

+

resize_flag=True

|

| 171 |

+

if resize_flag:

|

| 172 |

+

frames = resize_frames(frames, img_size)

|

| 173 |

+

img_size = frames[0].size

|

| 174 |

+

|

| 175 |

+

return frames, fps, img_size, n_clip, n_total_frames

|

| 176 |

+

|

| 177 |

+

|

| 178 |

+

class DiffuEraser:

|

| 179 |

+

def __init__(

|

| 180 |

+

self, device, base_model_path, vae_path, diffueraser_path, revision=None,

|

| 181 |

+

ckpt="Normal CFG 4-Step", mode="sd15", loaded=None):

|

| 182 |

+

self.device = device

|

| 183 |

+

|

| 184 |

+

## load model

|

| 185 |

+

self.vae = AutoencoderKL.from_pretrained(vae_path)

|

| 186 |

+

self.noise_scheduler = DDPMScheduler.from_pretrained(base_model_path,

|

| 187 |

+

subfolder="scheduler",

|

| 188 |

+

prediction_type="v_prediction",

|

| 189 |

+

timestep_spacing="trailing",

|

| 190 |

+

rescale_betas_zero_snr=True

|

| 191 |

+

)

|

| 192 |

+

self.tokenizer = AutoTokenizer.from_pretrained(

|

| 193 |

+

base_model_path,

|

| 194 |

+

subfolder="tokenizer",

|

| 195 |

+

use_fast=False,

|

| 196 |

+

)

|

| 197 |

+

text_encoder_cls = import_model_class_from_model_name_or_path(base_model_path,revision)

|

| 198 |

+

self.text_encoder = text_encoder_cls.from_pretrained(

|

| 199 |

+

base_model_path, subfolder="text_encoder"

|

| 200 |

+

)

|

| 201 |

+

self.brushnet = BrushNetModel.from_pretrained(diffueraser_path, subfolder="brushnet")

|

| 202 |

+

self.unet_main = UNetMotionModel.from_pretrained(

|

| 203 |

+

diffueraser_path, subfolder="unet_main",

|

| 204 |

+

)

|

| 205 |

+

|

| 206 |

+

## set pipeline

|

| 207 |

+

self.pipeline = StableDiffusionDiffuEraserPipeline.from_pretrained(

|

| 208 |

+

base_model_path,

|

| 209 |

+

vae=self.vae,

|

| 210 |

+

text_encoder=self.text_encoder,

|

| 211 |

+

tokenizer=self.tokenizer,

|

| 212 |

+

unet=self.unet_main,

|

| 213 |

+

brushnet=self.brushnet

|

| 214 |

+

).to(self.device, torch.float16)

|

| 215 |

+

self.pipeline.scheduler = UniPCMultistepScheduler.from_config(self.pipeline.scheduler.config)

|

| 216 |

+

self.pipeline.set_progress_bar_config(disable=True)

|

| 217 |

+

|

| 218 |

+

self.noise_scheduler = UniPCMultistepScheduler.from_config(self.pipeline.scheduler.config)

|

| 219 |

+

self.vae_scale_factor = 2 ** (len(self.vae.config.block_out_channels) - 1)

|

| 220 |

+

self.image_processor = VaeImageProcessor(vae_scale_factor=self.vae_scale_factor, do_convert_rgb=True)

|

| 221 |

+

|

| 222 |

+

## use PCM

|

| 223 |

+

self.ckpt = ckpt

|

| 224 |

+

PCM_ckpts = checkpoints[ckpt][0].format(mode)

|

| 225 |

+

self.guidance_scale = checkpoints[ckpt][2]

|

| 226 |

+

if loaded != (ckpt + mode):

|

| 227 |

+

self.pipeline.load_lora_weights(

|

| 228 |

+

"weights/PCM_Weights", weight_name=PCM_ckpts, subfolder=mode

|

| 229 |

+

)

|

| 230 |

+

loaded = ckpt + mode

|

| 231 |

+

|

| 232 |

+

if ckpt == "LCM-Like LoRA":

|

| 233 |

+

self.pipeline.scheduler = LCMScheduler()

|

| 234 |

+

else:

|

| 235 |

+

self.pipeline.scheduler = TCDScheduler(

|

| 236 |

+

num_train_timesteps=1000,

|

| 237 |

+

beta_start=0.00085,

|

| 238 |

+

beta_end=0.012,

|

| 239 |

+

beta_schedule="scaled_linear",

|

| 240 |

+

timestep_spacing="trailing",

|

| 241 |

+

)

|

| 242 |

+

self.num_inference_steps = checkpoints[ckpt][1]

|

| 243 |

+

self.guidance_scale = 0

|

| 244 |

+

|

| 245 |

+

def forward(self, validation_image, validation_mask, priori, output_path,

|

| 246 |

+

max_img_size = 1280, video_length=2, mask_dilation_iter=4,

|

| 247 |

+

nframes=22, seed=None, revision = None, guidance_scale=None, blended=True):

|

| 248 |

+

validation_prompt = "" #

|

| 249 |

+

guidance_scale_final = self.guidance_scale if guidance_scale==None else guidance_scale

|

| 250 |

+

|

| 251 |

+

if (max_img_size<256 or max_img_size>1920):

|

| 252 |

+

raise ValueError("The max_img_size must be larger than 256, smaller than 1920.")

|

| 253 |

+

|

| 254 |

+

################ read input video ################

|

| 255 |

+

frames, fps, img_size, n_clip, n_total_frames = read_video(validation_image, video_length, nframes, max_img_size)

|

| 256 |

+

video_len = len(frames)

|

| 257 |

+

|

| 258 |

+

################ read mask ################

|

| 259 |

+

validation_masks_input, validation_images_input = read_mask(validation_mask, fps, video_len, img_size, mask_dilation_iter, frames)

|

| 260 |

+

|

| 261 |

+

################ read priori ################

|

| 262 |

+

prioris = read_priori(priori, fps, n_total_frames, img_size)

|

| 263 |

+

|

| 264 |

+

## recheck

|

| 265 |

+

n_total_frames = min(min(len(frames), len(validation_masks_input)), len(prioris))

|

| 266 |

+

if(n_total_frames<22):

|

| 267 |

+

raise ValueError("The effective video duration is too short. Please make sure that the number of frames of video, mask, and priori is at least greater than 22 frames.")

|

| 268 |

+

validation_masks_input = validation_masks_input[:n_total_frames]

|

| 269 |

+

validation_images_input = validation_images_input[:n_total_frames]

|

| 270 |

+

frames = frames[:n_total_frames]

|

| 271 |

+

prioris = prioris[:n_total_frames]

|

| 272 |

+

|

| 273 |

+

prioris = resize_frames(prioris)

|

| 274 |

+

validation_masks_input = resize_frames(validation_masks_input)

|

| 275 |

+

validation_images_input = resize_frames(validation_images_input)

|

| 276 |

+

resized_frames = resize_frames(frames)

|

| 277 |

+

|

| 278 |

+

##############################################

|

| 279 |

+

# DiffuEraser inference

|

| 280 |

+

##############################################

|

| 281 |

+

print("DiffuEraser inference...")

|

| 282 |

+

if seed is None:

|

| 283 |

+

generator = None

|

| 284 |

+

else:

|

| 285 |

+

generator = torch.Generator(device=self.device).manual_seed(seed)

|

| 286 |

+

|

| 287 |

+

## random noise

|

| 288 |

+

real_video_length = len(validation_images_input)

|

| 289 |

+

tar_width, tar_height = validation_images_input[0].size

|

| 290 |

+

shape = (

|

| 291 |

+

nframes,

|

| 292 |

+

4,

|

| 293 |

+

tar_height//8,

|

| 294 |

+

tar_width//8

|

| 295 |

+

)

|

| 296 |

+

if self.text_encoder is not None:

|

| 297 |

+

prompt_embeds_dtype = self.text_encoder.dtype

|

| 298 |

+

elif self.unet_main is not None:

|

| 299 |

+

prompt_embeds_dtype = self.unet_main.dtype

|

| 300 |

+

else:

|

| 301 |

+

prompt_embeds_dtype = torch.float16

|

| 302 |

+

noise_pre = randn_tensor(shape, device=torch.device(self.device), dtype=prompt_embeds_dtype, generator=generator)

|

| 303 |

+

noise = repeat(noise_pre, "t c h w->(repeat t) c h w", repeat=n_clip)[:real_video_length,...]

|

| 304 |

+

|

| 305 |

+

################ prepare priori ################

|

| 306 |

+

images_preprocessed = []

|

| 307 |

+

for image in prioris:

|

| 308 |

+

image = self.image_processor.preprocess(image, height=tar_height, width=tar_width).to(dtype=torch.float32)

|

| 309 |

+

image = image.to(device=torch.device(self.device), dtype=torch.float16)

|

| 310 |

+

images_preprocessed.append(image)

|

| 311 |

+

pixel_values = torch.cat(images_preprocessed)

|

| 312 |

+

|

| 313 |

+

with torch.no_grad():

|

| 314 |

+

pixel_values = pixel_values.to(dtype=torch.float16)

|

| 315 |

+

latents = []

|

| 316 |

+

num=4

|

| 317 |

+

for i in range(0, pixel_values.shape[0], num):

|

| 318 |

+

latents.append(self.vae.encode(pixel_values[i : i + num]).latent_dist.sample())

|

| 319 |

+

latents = torch.cat(latents, dim=0)

|

| 320 |

+

latents = latents * self.vae.config.scaling_factor #[(b f), c1, h, w], c1=4

|

| 321 |

+

torch.cuda.empty_cache()

|

| 322 |

+

timesteps = torch.tensor([0], device=self.device)

|

| 323 |

+

timesteps = timesteps.long()

|

| 324 |

+

|

| 325 |

+

validation_masks_input_ori = copy.deepcopy(validation_masks_input)

|

| 326 |

+

resized_frames_ori = copy.deepcopy(resized_frames)

|

| 327 |

+

################ Pre-inference ################

|

| 328 |

+

if n_total_frames > nframes*2: ## do pre-inference only when number of input frames is larger than nframes*2

|

| 329 |

+

## sample

|

| 330 |

+

step = n_total_frames / nframes

|

| 331 |

+

sample_index = [int(i * step) for i in range(nframes)]

|

| 332 |

+

sample_index = sample_index[:22]

|

| 333 |

+

validation_masks_input_pre = [validation_masks_input[i] for i in sample_index]

|

| 334 |

+

validation_images_input_pre = [validation_images_input[i] for i in sample_index]

|

| 335 |

+

latents_pre = torch.stack([latents[i] for i in sample_index])

|

| 336 |

+

|

| 337 |

+

## add proiri

|

| 338 |

+

noisy_latents_pre = self.noise_scheduler.add_noise(latents_pre, noise_pre, timesteps)

|

| 339 |

+

latents_pre = noisy_latents_pre

|

| 340 |

+

|

| 341 |

+

with torch.no_grad():

|

| 342 |

+

latents_pre_out = self.pipeline(

|

| 343 |

+

num_frames=nframes,

|

| 344 |

+

prompt=validation_prompt,

|

| 345 |

+

images=validation_images_input_pre,

|

| 346 |

+

masks=validation_masks_input_pre,

|

| 347 |

+

num_inference_steps=self.num_inference_steps,

|

| 348 |

+

generator=generator,

|

| 349 |

+