Added chat_template

#1

by

shizhediao2

- opened

- README.md +62 -158

- added_tokens.json +0 -3

- config.json +14 -14

- generation_config.json +2 -1

- images/instruct_performance.png +0 -0

- images/performance1.png +0 -0

- images/performance2.png +0 -0

- instruct_performance.png +0 -0

- tokenizer.model → model-00001-of-00002.safetensors +2 -2

- model.safetensors → model-00002-of-00002.safetensors +2 -2

- model.safetensors.index.json +618 -0

- modeling_hymba.py +137 -177

- setup.sh +0 -44

- special_tokens_map.json +0 -30

- tokenizer.json +0 -0

- tokenizer_config.json +0 -52

README.md

CHANGED

|

@@ -1,201 +1,105 @@

|

|

| 1 |

---

|

| 2 |

-

|

| 3 |

-

- nvidia/Hymba-1.5B-Base

|

| 4 |

-

library_name: transformers

|

| 5 |

-

license: other

|

| 6 |

-

license_name: nvidia-open-model-license

|

| 7 |

-

license_link: https://developer.download.nvidia.com/licenses/nvidia-open-model-license-agreement-june-2024.pdf

|

| 8 |

-

pipeline_tag: text-generation

|

| 9 |

---

|

|

|

|

| 10 |

|

| 11 |

-

#

|

| 12 |

|

| 13 |

-

|

| 14 |

-

💾 <a href="https://github.com/NVlabs/hymba">Github</a>   |    📄 <a href="https://arxiv.org/abs/2411.13676">Paper</a> |    📜 <a href="https://developer.nvidia.com/blog/hymba-hybrid-head-architecture-boosts-small-language-model-performance/">Blog</a>

|

| 15 |

-

</p>

|

| 16 |

|

| 17 |

-

## Model Overview

|

| 18 |

|

| 19 |

-

|

| 20 |

-

|

| 21 |

-

|

| 22 |

-

Hymba-1.5B-Instruct is capable of many complex and important tasks like math reasoning, function calling, and role playing.

|

| 23 |

-

|

| 24 |

-

This model is ready for commercial use.

|

| 25 |

-

|

| 26 |

-

**Model Developer:** NVIDIA

|

| 27 |

-

|

| 28 |

-

**Model Dates:** Hymba-1.5B-Instruct was trained between September 4, 2024 and November 10th, 2024.

|

| 29 |

-

|

| 30 |

-

**License:**

|

| 31 |

-

This model is released under the [NVIDIA Open Model License Agreement](https://developer.download.nvidia.com/licenses/nvidia-open-model-license-agreement-june-2024.pdf).

|

| 32 |

-

|

| 33 |

-

|

| 34 |

-

## Model Architecture

|

| 35 |

-

|

| 36 |

-

> ⚡️ We've released a minimal implementation of Hymba on GitHub to help developers understand and implement its design principles in their own models. Check it out! [barebones-hymba](https://github.com/NVlabs/hymba/tree/main/barebones_hymba).

|

| 37 |

-

>

|

| 38 |

-

|

| 39 |

-

Hymba-1.5B-Instruct has a model embedding size of 1600, 25 attention heads, and an MLP intermediate dimension of 5504, with 32 layers in total, 16 SSM states, 3 full attention layers, the rest are sliding window attention. Unlike the standard Transformer, each attention layer in Hymba has a hybrid combination of standard attention heads and Mamba heads in parallel. Additionally, it uses Grouped-Query Attention (GQA) and Rotary Position Embeddings (RoPE).

|

| 40 |

-

|

| 41 |

-

Features of this architecture:

|

| 42 |

-

|

| 43 |

-

- Fuse attention heads and SSM heads within the same layer, offering parallel and complementary processing of the same inputs.

|

| 44 |

|

| 45 |

<div align="center">

|

| 46 |

<img src="https://huggingface.co/nvidia/Hymba-1.5B-Instruct/resolve/main/images/module.png" alt="Hymba Module" width="600">

|

| 47 |

</div>

|

| 48 |

|

| 49 |

-

- Introduce meta tokens that are prepended to the input sequences and interact with all subsequent tokens, thus storing important information and alleviating the burden of "forced-to-attend" in attention

|

| 50 |

|

| 51 |

-

- Integrate with cross-layer KV sharing and global-local attention to further boost memory and computation efficiency

|

| 52 |

|

| 53 |

<div align="center">

|

| 54 |

<img src="https://huggingface.co/nvidia/Hymba-1.5B-Instruct/resolve/main/images/macro_arch.png" alt="Hymba Model" width="600">

|

| 55 |

</div>

|

| 56 |

|

| 57 |

|

| 58 |

-

## Performance Highlights

|

| 59 |

-

|

| 60 |

-

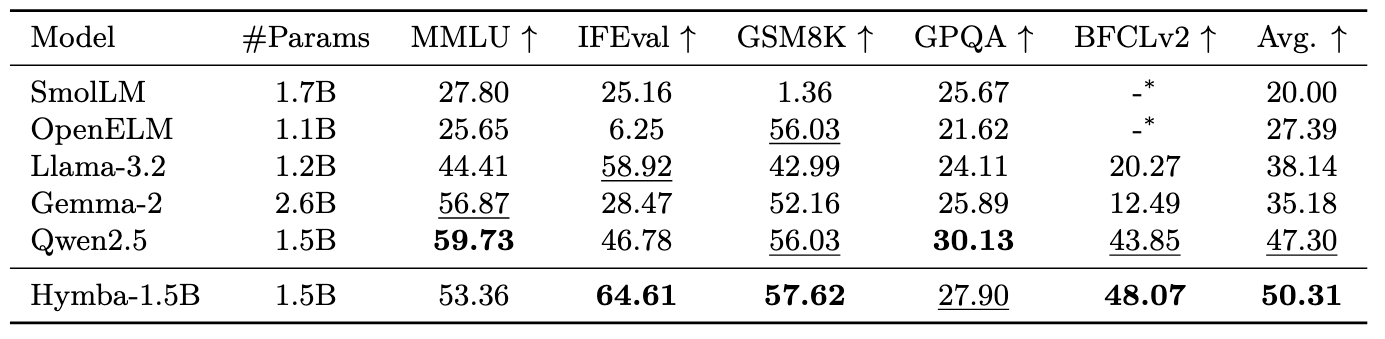

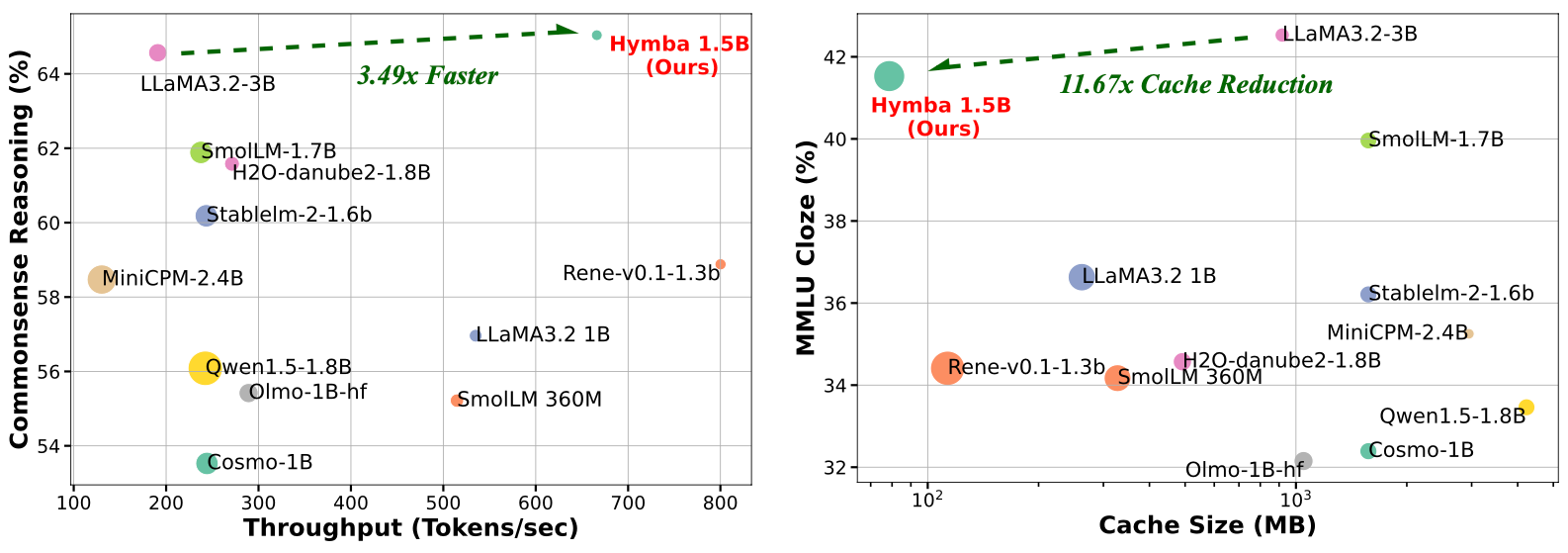

- Hymba-1.5B-Instruct outperforms popular small language models and achieves the highest average performance across all tasks.

|

| 61 |

-

|

| 62 |

|

| 63 |

<div align="center">

|

| 64 |

-

<img src="https://huggingface.co/nvidia/Hymba-1.5B-Instruct/resolve/main/images/

|

| 65 |

</div>

|

| 66 |

|

| 67 |

|

| 68 |

-

|

| 69 |

-

|

| 70 |

-

### Step 1: Environment Setup

|

| 71 |

-

|

| 72 |

-

Since Hymba-1.5B-Instruct employs [FlexAttention](https://pytorch.org/blog/flexattention/), which relies on Pytorch2.5 and other related dependencies, we provide two ways to setup the environment:

|

| 73 |

|

| 74 |

-

- **[Local install]** Install the related packages using our provided `setup.sh` (support CUDA 12.1/12.4):

|

| 75 |

|

| 76 |

-

|

| 77 |

-

|

| 78 |

-

|

| 79 |

-

```

|

| 80 |

|

| 81 |

-

- **[Docker]** A docker image is provided with all of Hymba's dependencies installed. You can download our docker image and start a container using the following commands:

|

| 82 |

-

```

|

| 83 |

-

docker pull ghcr.io/tilmto/hymba:v1

|

| 84 |

-

docker run --gpus all -v /home/$USER:/home/$USER -it ghcr.io/tilmto/hymba:v1 bash

|

| 85 |

-

```

|

| 86 |

|

|

|

|

| 87 |

|

| 88 |

-

|

| 89 |

-

After setting up the environment, you can use the following script to chat with our Model

|

| 90 |

|

| 91 |

-

|

| 92 |

-

from transformers import AutoModelForCausalLM, AutoTokenizer, StopStringCriteria, StoppingCriteriaList

|

| 93 |

-

import torch

|

| 94 |

|

| 95 |

-

|

| 96 |

-

repo_name = "nvidia/Hymba-1.5B-Instruct"

|

| 97 |

-

|

| 98 |

-

tokenizer = AutoTokenizer.from_pretrained(repo_name, trust_remote_code=True)

|

| 99 |

-

model = AutoModelForCausalLM.from_pretrained(repo_name, trust_remote_code=True)

|

| 100 |

-

model = model.cuda().to(torch.bfloat16)

|

| 101 |

-

|

| 102 |

-

# Chat with Hymba

|

| 103 |

-

prompt = input()

|

| 104 |

-

|

| 105 |

-

messages = [

|

| 106 |

-

{"role": "system", "content": "You are a helpful assistant."}

|

| 107 |

-

]

|

| 108 |

-

messages.append({"role": "user", "content": prompt})

|

| 109 |

-

|

| 110 |

-

# Apply chat template

|

| 111 |

-

tokenized_chat = tokenizer.apply_chat_template(messages, tokenize=True, add_generation_prompt=True, return_tensors="pt").to('cuda')

|

| 112 |

-

stopping_criteria = StoppingCriteriaList([StopStringCriteria(tokenizer=tokenizer, stop_strings="</s>")])

|

| 113 |

-

outputs = model.generate(

|

| 114 |

-

tokenized_chat,

|

| 115 |

-

max_new_tokens=256,

|

| 116 |

-

do_sample=False,

|

| 117 |

-

temperature=0.7,

|

| 118 |

-

use_cache=True,

|

| 119 |

-

stopping_criteria=stopping_criteria

|

| 120 |

-

)

|

| 121 |

-

input_length = tokenized_chat.shape[1]

|

| 122 |

-

response = tokenizer.decode(outputs[0][input_length:], skip_special_tokens=True)

|

| 123 |

-

|

| 124 |

-

print(f"Model response: {response}")

|

| 125 |

|

|

|

|

|

|

|

|

|

|

| 126 |

```

|

| 127 |

|

| 128 |

-

|

| 129 |

|

| 130 |

```

|

| 131 |

-

|

| 132 |

-

|

| 133 |

-

|

| 134 |

-

<extra_id_1>User

|

| 135 |

-

<tool> ... </tool>

|

| 136 |

-

<context> ... </context>

|

| 137 |

-

{prompt}

|

| 138 |

-

<extra_id_1>Assistant

|

| 139 |

-

<toolcall> ... </toolcall>

|

| 140 |

-

<extra_id_1>Tool

|

| 141 |

-

{tool response}

|

| 142 |

-

<extra_id_1>Assistant\n

|

| 143 |

```

|

| 144 |

|

|

|

|

| 145 |

|

| 146 |

-

|

| 147 |

-

|

| 148 |

-

|

| 149 |

-

[LMFlow](https://github.com/OptimalScale/LMFlow) is a complete pipeline for fine-tuning large language models.

|

| 150 |

-

The following steps provide an example of how to fine-tune the `Hymba-1.5B-Base` models using LMFlow.

|

| 151 |

|

| 152 |

-

|

| 153 |

-

|

| 154 |

-

```

|

| 155 |

-

docker pull ghcr.io/tilmto/hymba:v1

|

| 156 |

-

docker run --gpus all -v /home/$USER:/home/$USER -it ghcr.io/tilmto/hymba:v1 bash

|

| 157 |

-

```

|

| 158 |

-

2. Install LMFlow

|

| 159 |

-

|

| 160 |

-

```

|

| 161 |

-

git clone https://github.com/OptimalScale/LMFlow.git

|

| 162 |

-

cd LMFlow

|

| 163 |

-

conda create -n lmflow python=3.9 -y

|

| 164 |

-

conda activate lmflow

|

| 165 |

-

conda install mpi4py

|

| 166 |

-

pip install -e .

|

| 167 |

-

```

|

| 168 |

-

|

| 169 |

-

3. Fine-tune the model using the following command.

|

| 170 |

-

|

| 171 |

-

```

|

| 172 |

-

cd LMFlow

|

| 173 |

-

bash ./scripts/run_finetune_hymba.sh

|

| 174 |

-

```

|

| 175 |

-

|

| 176 |

-

With LMFlow, you can also fine-tune the model on your custom dataset. The only thing you need to do is transform your dataset into the [LMFlow data format](https://optimalscale.github.io/LMFlow/examples/DATASETS.html).

|

| 177 |

-

In addition to full-finetuniing, you can also fine-tune hymba efficiently with [DoRA](https://arxiv.org/html/2402.09353v4), [LoRA](https://github.com/OptimalScale/LMFlow?tab=readme-ov-file#lora), [LISA](https://github.com/OptimalScale/LMFlow?tab=readme-ov-file#lisa), [Flash Attention](https://github.com/OptimalScale/LMFlow/blob/main/readme/flash_attn2.md), and other acceleration techniques.

|

| 178 |

-

For more details, please refer to the [LMFlow for Hymba](https://github.com/OptimalScale/LMFlow/tree/main/experimental/Hymba) documentation.

|

| 179 |

-

|

| 180 |

-

## Limitations

|

| 181 |

-

The model was trained on data that contains toxic language, unsafe content, and societal biases originally crawled from the internet. Therefore, the model may amplify those biases and return toxic responses especially when prompted with toxic prompts. The model may generate answers that may be inaccurate, omit key information, or include irrelevant or redundant text producing socially unacceptable or undesirable text, even if the prompt itself does not include anything explicitly offensive.

|

| 182 |

-

|

| 183 |

-

The testing suggests that this model is susceptible to jailbreak attacks. If using this model in a RAG or agentic setting, we recommend strong output validation controls to ensure security and safety risks from user-controlled model outputs are consistent with the intended use cases.

|

| 184 |

|

| 185 |

-

|

| 186 |

-

|

| 187 |

-

|

|

|

|

| 188 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 189 |

|

| 190 |

-

## Citation

|

| 191 |

-

```

|

| 192 |

-

@misc{dong2024hymbahybridheadarchitecturesmall,

|

| 193 |

-

title={Hymba: A Hybrid-head Architecture for Small Language Models},

|

| 194 |

-

author={Xin Dong and Yonggan Fu and Shizhe Diao and Wonmin Byeon and Zijia Chen and Ameya Sunil Mahabaleshwarkar and Shih-Yang Liu and Matthijs Van Keirsbilck and Min-Hung Chen and Yoshi Suhara and Yingyan Lin and Jan Kautz and Pavlo Molchanov},

|

| 195 |

-

year={2024},

|

| 196 |

-

eprint={2411.13676},

|

| 197 |

-

archivePrefix={arXiv},

|

| 198 |

-

primaryClass={cs.CL},

|

| 199 |

-

url={https://arxiv.org/abs/2411.13676},

|

| 200 |

-

}

|

| 201 |

```

|

|

|

|

| 1 |

---

|

| 2 |

+

{}

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 3 |

---

|

| 4 |

+

# Hymba: A Hybrid-head Architecture for Small Language Models

|

| 5 |

|

| 6 |

+

[[Slide](https://docs.google.com/presentation/d/1uidqBfDy8a149yE1-AKtNnPm1qwa01hp8sOj3_KAoMI/edit#slide=id.g2f73b22dcb8_0_1017)][Technical Report] **!!! This huggingface repo is still under development.**

|

| 7 |

|

| 8 |

+

Developed by Deep Learning Efficiency Research (DLER) team at NVIDIA Research.

|

|

|

|

|

|

|

| 9 |

|

|

|

|

| 10 |

|

| 11 |

+

## Hymba: A Novel LM Architecture

|

| 12 |

+

- Fuse attention heads and SSM heads within the same layer, offering parallel and complementary processing of the same inputs

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 13 |

|

| 14 |

<div align="center">

|

| 15 |

<img src="https://huggingface.co/nvidia/Hymba-1.5B-Instruct/resolve/main/images/module.png" alt="Hymba Module" width="600">

|

| 16 |

</div>

|

| 17 |

|

| 18 |

+

- Introduce meta tokens that are prepended to the input sequences and interact with all subsequent tokens, thus storing important information and alleviating the burden of "forced-to-attend" in attention

|

| 19 |

|

| 20 |

+

- Integrate with cross-layer KV sharing and global-local attention to further boost memory and computation efficiency

|

| 21 |

|

| 22 |

<div align="center">

|

| 23 |

<img src="https://huggingface.co/nvidia/Hymba-1.5B-Instruct/resolve/main/images/macro_arch.png" alt="Hymba Model" width="600">

|

| 24 |

</div>

|

| 25 |

|

| 26 |

|

| 27 |

+

## Hymba: Performance Highlights

|

| 28 |

+

- [Hymba-1.5B-Base](https://huggingface.co/nvidia/Hymba-1.5B): Outperform all sub-2B public models, e.g., matching Llama 3.2 3B’s commonsense reasoning accuracy, being 3.49× faster, and reducing cache size by 11.7×

|

|

|

|

|

|

|

| 29 |

|

| 30 |

<div align="center">

|

| 31 |

+

<img src="https://huggingface.co/nvidia/Hymba-1.5B-Instruct/resolve/main/images/performance1.png" alt="Compare with SoTA Small LMs" width="600">

|

| 32 |

</div>

|

| 33 |

|

| 34 |

|

| 35 |

+

- Hymba-1.5B-Instruct: Outperform SOTA small LMs.

|

|

|

|

|

|

|

|

|

|

|

|

|

| 36 |

|

|

|

|

| 37 |

|

| 38 |

+

<div align="center">

|

| 39 |

+

<img src="https://huggingface.co/nvidia/Hymba-1.5B-Instruct/resolve/main/images/instruct_performance.png" alt="Compare with SoTA Small LMs" width="600">

|

| 40 |

+

</div>

|

|

|

|

| 41 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 42 |

|

| 43 |

+

## Hymba-1.5B-Instruct: Model Usage

|

| 44 |

|

| 45 |

+

We release our Hymba-1.5B-Instruct model and offer the instructions to use our model as follows.

|

|

|

|

| 46 |

|

| 47 |

+

### Step 1: Environment Setup

|

|

|

|

|

|

|

| 48 |

|

| 49 |

+

Since our model employs [FlexAttention](https://pytorch.org/blog/flexattention/), which relies on Pytorch2.5 and other related dependencies, we provide three ways to set up the environment:

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 50 |

|

| 51 |

+

- **[Pip]** Install the related packages using our provided `requirement.txt`:

|

| 52 |

+

```

|

| 53 |

+

pip install -r https://huggingface.co/nvidia/Hymba-1.5B-Instruct/resolve/main/requirements.txt

|

| 54 |

```

|

| 55 |

|

| 56 |

+

- **[Docker]** We have prepared a docker image with all of Hymba's dependencies installed. You can download our docker image and start a container using the following commands:

|

| 57 |

|

| 58 |

```

|

| 59 |

+

wget http://10.137.9.244:8000/hymba_docker.tar

|

| 60 |

+

docker load -i hymba.tar

|

| 61 |

+

docker run --security-opt seccomp=unconfined --gpus all -v /home/$USER:/home/$USER -it hymba:v1 bash

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 62 |

```

|

| 63 |

|

| 64 |

+

- **[Internal Only]** If you are an internal user from NVIDIA and are using the ORD cluster, you can use our prepared `sqsh` file to apply for an interactive node:

|

| 65 |

|

| 66 |

+

```

|

| 67 |

+

srun -A nvr_lpr_llm --partition interactive --time 4:00:00 --gpus 8 --container-image /lustre/fsw/portfolios/nvr/users/yongganf/docker/megatron_py25.sqsh --container-mounts=$HOME:/home,/lustre:/lustre --pty bash

|

| 68 |

+

```

|

|

|

|

|

|

|

| 69 |

|

| 70 |

+

### Step 2: Chat with Hymba

|

| 71 |

+

After setting up the environment, you can use the following script to chat with our Model

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 72 |

|

| 73 |

+

```

|

| 74 |

+

from transformers import LlamaTokenizer, AutoModelForCausalLM, AutoTokenizer, AutoModel

|

| 75 |

+

from huggingface_hub import login

|

| 76 |

+

import torch

|

| 77 |

|

| 78 |

+

login()

|

| 79 |

+

|

| 80 |

+

# Load LLaMA2's tokenizer

|

| 81 |

+

tokenizer = LlamaTokenizer.from_pretrained("meta-llama/Llama-2-7b")

|

| 82 |

+

|

| 83 |

+

# Load Hymba-1.5B

|

| 84 |

+

model = AutoModelForCausalLM.from_pretrained("nvidia/Hymba-1.5B-Instruct", trust_remote_code=True).cuda().to(torch.bfloat16)

|

| 85 |

+

|

| 86 |

+

# Chat with our model

|

| 87 |

+

def chat_with_model(prompt, model, tokenizer, max_length=64):

|

| 88 |

+

inputs = tokenizer(prompt, return_tensors="pt").to('cuda')

|

| 89 |

+

outputs = model.generate(inputs.input_ids, max_length=max_length, do_sample=False, temperature=0.7, use_cache=True)

|

| 90 |

+

response = tokenizer.decode(outputs[0], skip_special_tokens=True)

|

| 91 |

+

return response

|

| 92 |

+

|

| 93 |

+

print("Chat with the model (type 'exit' to quit):")

|

| 94 |

+

while True:

|

| 95 |

+

print("User:")

|

| 96 |

+

prompt = input()

|

| 97 |

+

if prompt.lower() == "exit":

|

| 98 |

+

break

|

| 99 |

+

|

| 100 |

+

# Get the model's response

|

| 101 |

+

response = chat_with_model(prompt, model, tokenizer)

|

| 102 |

+

|

| 103 |

+

print(f"Model: {response}")

|

| 104 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 105 |

```

|

added_tokens.json

DELETED

|

@@ -1,3 +0,0 @@

|

|

| 1 |

-

{

|

| 2 |

-

"[PAD]": 32000

|

| 3 |

-

}

|

|

|

|

|

|

|

|

|

|

|

|

config.json

CHANGED

|

@@ -15,6 +15,14 @@

|

|

| 15 |

"conv_dim": {

|

| 16 |

"0": 3200,

|

| 17 |

"1": 3200,

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 18 |

"10": 3200,

|

| 19 |

"11": 3200,

|

| 20 |

"12": 3200,

|

|

@@ -25,7 +33,6 @@

|

|

| 25 |

"17": 3200,

|

| 26 |

"18": 3200,

|

| 27 |

"19": 3200,

|

| 28 |

-

"2": 3200,

|

| 29 |

"20": 3200,

|

| 30 |

"21": 3200,

|

| 31 |

"22": 3200,

|

|

@@ -36,15 +43,8 @@

|

|

| 36 |

"27": 3200,

|

| 37 |

"28": 3200,

|

| 38 |

"29": 3200,

|

| 39 |

-

"3": 3200,

|

| 40 |

"30": 3200,

|

| 41 |

-

"31": 3200

|

| 42 |

-

"4": 3200,

|

| 43 |

-

"5": 3200,

|

| 44 |

-

"6": 3200,

|

| 45 |

-

"7": 3200,

|

| 46 |

-

"8": 3200,

|

| 47 |

-

"9": 3200

|

| 48 |

},

|

| 49 |

"eos_token_id": 2,

|

| 50 |

"global_attn_idx": [

|

|

@@ -160,7 +160,7 @@

|

|

| 160 |

"mamba_expand": 2,

|

| 161 |

"mamba_inner_layernorms": true,

|

| 162 |

"mamba_proj_bias": false,

|

| 163 |

-

"max_position_embeddings":

|

| 164 |

"memory_tokens_interspersed_every": 0,

|

| 165 |

"mlp_hidden_act": "silu",

|

| 166 |

"model_type": "hymba",

|

|

@@ -171,18 +171,18 @@

|

|

| 171 |

"num_key_value_heads": 5,

|

| 172 |

"num_mamba": 1,

|

| 173 |

"num_memory_tokens": 128,

|

| 174 |

-

"orig_max_position_embeddings":

|

| 175 |

"output_router_logits": false,

|

| 176 |

"pad_token_id": 0,

|

| 177 |

"rms_norm_eps": 1e-06,

|

| 178 |

"rope": true,

|

| 179 |

"rope_theta": 10000.0,

|

| 180 |

-

"rope_type":

|

| 181 |

"router_aux_loss_coef": 0.001,

|

| 182 |

-

"seq_length":

|

| 183 |

"sliding_window": 1024,

|

| 184 |

"tie_word_embeddings": true,

|

| 185 |

-

"torch_dtype": "

|

| 186 |

"transformers_version": "4.44.0",

|

| 187 |

"use_cache": false,

|

| 188 |

"use_mamba_kernels": true,

|

|

|

|

| 15 |

"conv_dim": {

|

| 16 |

"0": 3200,

|

| 17 |

"1": 3200,

|

| 18 |

+

"2": 3200,

|

| 19 |

+

"3": 3200,

|

| 20 |

+

"4": 3200,

|

| 21 |

+

"5": 3200,

|

| 22 |

+

"6": 3200,

|

| 23 |

+

"7": 3200,

|

| 24 |

+

"8": 3200,

|

| 25 |

+

"9": 3200,

|

| 26 |

"10": 3200,

|

| 27 |

"11": 3200,

|

| 28 |

"12": 3200,

|

|

|

|

| 33 |

"17": 3200,

|

| 34 |

"18": 3200,

|

| 35 |

"19": 3200,

|

|

|

|

| 36 |

"20": 3200,

|

| 37 |

"21": 3200,

|

| 38 |

"22": 3200,

|

|

|

|

| 43 |

"27": 3200,

|

| 44 |

"28": 3200,

|

| 45 |

"29": 3200,

|

|

|

|

| 46 |

"30": 3200,

|

| 47 |

+

"31": 3200

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 48 |

},

|

| 49 |

"eos_token_id": 2,

|

| 50 |

"global_attn_idx": [

|

|

|

|

| 160 |

"mamba_expand": 2,

|

| 161 |

"mamba_inner_layernorms": true,

|

| 162 |

"mamba_proj_bias": false,

|

| 163 |

+

"max_position_embeddings": 1024,

|

| 164 |

"memory_tokens_interspersed_every": 0,

|

| 165 |

"mlp_hidden_act": "silu",

|

| 166 |

"model_type": "hymba",

|

|

|

|

| 171 |

"num_key_value_heads": 5,

|

| 172 |

"num_mamba": 1,

|

| 173 |

"num_memory_tokens": 128,

|

| 174 |

+

"orig_max_position_embeddings": null,

|

| 175 |

"output_router_logits": false,

|

| 176 |

"pad_token_id": 0,

|

| 177 |

"rms_norm_eps": 1e-06,

|

| 178 |

"rope": true,

|

| 179 |

"rope_theta": 10000.0,

|

| 180 |

+

"rope_type": null,

|

| 181 |

"router_aux_loss_coef": 0.001,

|

| 182 |

+

"seq_length": 1024,

|

| 183 |

"sliding_window": 1024,

|

| 184 |

"tie_word_embeddings": true,

|

| 185 |

+

"torch_dtype": "float32",

|

| 186 |

"transformers_version": "4.44.0",

|

| 187 |

"use_cache": false,

|

| 188 |

"use_mamba_kernels": true,

|

generation_config.json

CHANGED

|

@@ -4,5 +4,6 @@

|

|

| 4 |

"eos_token_id": 2,

|

| 5 |

"pad_token_id": 0,

|

| 6 |

"transformers_version": "4.44.0",

|

| 7 |

-

"use_cache": false

|

|

|

|

| 8 |

}

|

|

|

|

| 4 |

"eos_token_id": 2,

|

| 5 |

"pad_token_id": 0,

|

| 6 |

"transformers_version": "4.44.0",

|

| 7 |

+

"use_cache": false,

|

| 8 |

+

"chat_template": "{{'<extra_id_0>System'}}{% for message in messages %}{% if message['role'] == 'system' %}{{'\n' + message['content'].strip()}}{% if tools or contexts %}{{'\n'}}{% endif %}{% endif %}{% endfor %}{% if tools %}{% for tool in tools %}{{ '\n<tool> ' + tool|tojson + ' </tool>' }}{% endfor %}{% endif %}{% if contexts %}{% if tools %}{{'\n'}}{% endif %}{% for context in contexts %}{{ '\n<context> ' + context.strip() + ' </context>' }}{% endfor %}{% endif %}{{'\n\n'}}{% for message in messages %}{% if message['role'] == 'user' %}{{ '<extra_id_1>User\n' + message['content'].strip() + '\n' }}{% elif message['role'] == 'assistant' %}{{ '<extra_id_1>Assistant\n' + message['content'].strip() + '\n' }}{% elif message['role'] == 'tool' %}{{ '<extra_id_1>Tool\n' + message['content'].strip() + '\n' }}{% endif %}{% endfor %}{%- if add_generation_prompt %}{{'<extra_id_1>Assistant\n'}}{%- endif %}"

|

| 9 |

}

|

images/instruct_performance.png

CHANGED

|

|

images/performance1.png

ADDED

|

images/performance2.png

ADDED

|

instruct_performance.png

DELETED

|

Binary file (97.9 kB)

|

|

|

tokenizer.model → model-00001-of-00002.safetensors

RENAMED

|

@@ -1,3 +1,3 @@

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

-

oid sha256:

|

| 3 |

-

size

|

|

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:7f01b19a43514af19def4c812a1d453dfd66f5c1b0be9674090a5bf37b699fc1

|

| 3 |

+

size 4988876320

|

model.safetensors → model-00002-of-00002.safetensors

RENAMED

|

@@ -1,3 +1,3 @@

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

-

oid sha256:

|

| 3 |

-

size

|

|

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:b11f9bec9246d8dc80612bb4e9d20f58b5744ca90ffae8944fffa0658789fde8

|

| 3 |

+

size 1102383712

|

model.safetensors.index.json

ADDED

|

@@ -0,0 +1,618 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"metadata": {

|

| 3 |

+

"total_size": 6091191296

|

| 4 |

+

},

|

| 5 |

+

"weight_map": {

|

| 6 |

+

"model.embed_tokens.weight": "model-00001-of-00002.safetensors",

|

| 7 |

+

"model.final_layernorm.weight": "model-00002-of-00002.safetensors",

|

| 8 |

+

"model.layers.0.input_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 9 |

+

"model.layers.0.mamba.A_log.0": "model-00001-of-00002.safetensors",

|

| 10 |

+

"model.layers.0.mamba.B_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 11 |

+

"model.layers.0.mamba.C_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 12 |

+

"model.layers.0.mamba.D.0": "model-00001-of-00002.safetensors",

|

| 13 |

+

"model.layers.0.mamba.conv1d.bias": "model-00001-of-00002.safetensors",

|

| 14 |

+

"model.layers.0.mamba.conv1d.weight": "model-00001-of-00002.safetensors",

|

| 15 |

+

"model.layers.0.mamba.dt_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 16 |

+

"model.layers.0.mamba.dt_proj.0.bias": "model-00001-of-00002.safetensors",

|

| 17 |

+

"model.layers.0.mamba.dt_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 18 |

+

"model.layers.0.mamba.in_proj.weight": "model-00001-of-00002.safetensors",

|

| 19 |

+

"model.layers.0.mamba.out_proj.weight": "model-00001-of-00002.safetensors",

|

| 20 |

+

"model.layers.0.mamba.pre_avg_layernorm1.weight": "model-00001-of-00002.safetensors",

|

| 21 |

+

"model.layers.0.mamba.pre_avg_layernorm2.weight": "model-00001-of-00002.safetensors",

|

| 22 |

+

"model.layers.0.mamba.x_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 23 |

+

"model.layers.0.moe.experts.0.down_proj.weight": "model-00001-of-00002.safetensors",

|

| 24 |

+

"model.layers.0.moe.experts.0.gate_proj.weight": "model-00001-of-00002.safetensors",

|

| 25 |

+

"model.layers.0.moe.experts.0.up_proj.weight": "model-00001-of-00002.safetensors",

|

| 26 |

+

"model.layers.0.pre_moe_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 27 |

+

"model.layers.1.input_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 28 |

+

"model.layers.1.mamba.A_log.0": "model-00001-of-00002.safetensors",

|

| 29 |

+

"model.layers.1.mamba.B_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 30 |

+

"model.layers.1.mamba.C_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 31 |

+

"model.layers.1.mamba.D.0": "model-00001-of-00002.safetensors",

|

| 32 |

+

"model.layers.1.mamba.conv1d.bias": "model-00001-of-00002.safetensors",

|

| 33 |

+

"model.layers.1.mamba.conv1d.weight": "model-00001-of-00002.safetensors",

|

| 34 |

+

"model.layers.1.mamba.dt_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 35 |

+

"model.layers.1.mamba.dt_proj.0.bias": "model-00001-of-00002.safetensors",

|

| 36 |

+

"model.layers.1.mamba.dt_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 37 |

+

"model.layers.1.mamba.in_proj.weight": "model-00001-of-00002.safetensors",

|

| 38 |

+

"model.layers.1.mamba.out_proj.weight": "model-00001-of-00002.safetensors",

|

| 39 |

+

"model.layers.1.mamba.pre_avg_layernorm1.weight": "model-00001-of-00002.safetensors",

|

| 40 |

+

"model.layers.1.mamba.pre_avg_layernorm2.weight": "model-00001-of-00002.safetensors",

|

| 41 |

+

"model.layers.1.mamba.x_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 42 |

+

"model.layers.1.moe.experts.0.down_proj.weight": "model-00001-of-00002.safetensors",

|

| 43 |

+

"model.layers.1.moe.experts.0.gate_proj.weight": "model-00001-of-00002.safetensors",

|

| 44 |

+

"model.layers.1.moe.experts.0.up_proj.weight": "model-00001-of-00002.safetensors",

|

| 45 |

+

"model.layers.1.pre_moe_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 46 |

+

"model.layers.10.input_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 47 |

+

"model.layers.10.mamba.A_log.0": "model-00001-of-00002.safetensors",

|

| 48 |

+

"model.layers.10.mamba.B_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 49 |

+

"model.layers.10.mamba.C_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 50 |

+

"model.layers.10.mamba.D.0": "model-00001-of-00002.safetensors",

|

| 51 |

+

"model.layers.10.mamba.conv1d.bias": "model-00001-of-00002.safetensors",

|

| 52 |

+

"model.layers.10.mamba.conv1d.weight": "model-00001-of-00002.safetensors",

|

| 53 |

+

"model.layers.10.mamba.dt_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 54 |

+

"model.layers.10.mamba.dt_proj.0.bias": "model-00001-of-00002.safetensors",

|

| 55 |

+

"model.layers.10.mamba.dt_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 56 |

+

"model.layers.10.mamba.in_proj.weight": "model-00001-of-00002.safetensors",

|

| 57 |

+

"model.layers.10.mamba.out_proj.weight": "model-00001-of-00002.safetensors",

|

| 58 |

+

"model.layers.10.mamba.pre_avg_layernorm1.weight": "model-00001-of-00002.safetensors",

|

| 59 |

+

"model.layers.10.mamba.pre_avg_layernorm2.weight": "model-00001-of-00002.safetensors",

|

| 60 |

+

"model.layers.10.mamba.x_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 61 |

+

"model.layers.10.moe.experts.0.down_proj.weight": "model-00001-of-00002.safetensors",

|

| 62 |

+

"model.layers.10.moe.experts.0.gate_proj.weight": "model-00001-of-00002.safetensors",

|

| 63 |

+

"model.layers.10.moe.experts.0.up_proj.weight": "model-00001-of-00002.safetensors",

|

| 64 |

+

"model.layers.10.pre_moe_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 65 |

+

"model.layers.11.input_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 66 |

+

"model.layers.11.mamba.A_log.0": "model-00001-of-00002.safetensors",

|

| 67 |

+

"model.layers.11.mamba.B_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 68 |

+

"model.layers.11.mamba.C_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 69 |

+

"model.layers.11.mamba.D.0": "model-00001-of-00002.safetensors",

|

| 70 |

+

"model.layers.11.mamba.conv1d.bias": "model-00001-of-00002.safetensors",

|

| 71 |

+

"model.layers.11.mamba.conv1d.weight": "model-00001-of-00002.safetensors",

|

| 72 |

+

"model.layers.11.mamba.dt_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 73 |

+

"model.layers.11.mamba.dt_proj.0.bias": "model-00001-of-00002.safetensors",

|

| 74 |

+

"model.layers.11.mamba.dt_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 75 |

+

"model.layers.11.mamba.in_proj.weight": "model-00001-of-00002.safetensors",

|

| 76 |

+

"model.layers.11.mamba.out_proj.weight": "model-00001-of-00002.safetensors",

|

| 77 |

+

"model.layers.11.mamba.pre_avg_layernorm1.weight": "model-00001-of-00002.safetensors",

|

| 78 |

+

"model.layers.11.mamba.pre_avg_layernorm2.weight": "model-00001-of-00002.safetensors",

|

| 79 |

+

"model.layers.11.mamba.x_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 80 |

+

"model.layers.11.moe.experts.0.down_proj.weight": "model-00001-of-00002.safetensors",

|

| 81 |

+

"model.layers.11.moe.experts.0.gate_proj.weight": "model-00001-of-00002.safetensors",

|

| 82 |

+

"model.layers.11.moe.experts.0.up_proj.weight": "model-00001-of-00002.safetensors",

|

| 83 |

+

"model.layers.11.pre_moe_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 84 |

+

"model.layers.12.input_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 85 |

+

"model.layers.12.mamba.A_log.0": "model-00001-of-00002.safetensors",

|

| 86 |

+

"model.layers.12.mamba.B_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 87 |

+

"model.layers.12.mamba.C_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 88 |

+

"model.layers.12.mamba.D.0": "model-00001-of-00002.safetensors",

|

| 89 |

+

"model.layers.12.mamba.conv1d.bias": "model-00001-of-00002.safetensors",

|

| 90 |

+

"model.layers.12.mamba.conv1d.weight": "model-00001-of-00002.safetensors",

|

| 91 |

+

"model.layers.12.mamba.dt_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 92 |

+

"model.layers.12.mamba.dt_proj.0.bias": "model-00001-of-00002.safetensors",

|

| 93 |

+

"model.layers.12.mamba.dt_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 94 |

+

"model.layers.12.mamba.in_proj.weight": "model-00001-of-00002.safetensors",

|

| 95 |

+

"model.layers.12.mamba.out_proj.weight": "model-00001-of-00002.safetensors",

|

| 96 |

+

"model.layers.12.mamba.pre_avg_layernorm1.weight": "model-00001-of-00002.safetensors",

|

| 97 |

+

"model.layers.12.mamba.pre_avg_layernorm2.weight": "model-00001-of-00002.safetensors",

|

| 98 |

+

"model.layers.12.mamba.x_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 99 |

+

"model.layers.12.moe.experts.0.down_proj.weight": "model-00001-of-00002.safetensors",

|

| 100 |

+

"model.layers.12.moe.experts.0.gate_proj.weight": "model-00001-of-00002.safetensors",

|

| 101 |

+

"model.layers.12.moe.experts.0.up_proj.weight": "model-00001-of-00002.safetensors",

|

| 102 |

+

"model.layers.12.pre_moe_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 103 |

+

"model.layers.13.input_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 104 |

+

"model.layers.13.mamba.A_log.0": "model-00001-of-00002.safetensors",

|

| 105 |

+

"model.layers.13.mamba.B_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 106 |

+

"model.layers.13.mamba.C_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 107 |

+

"model.layers.13.mamba.D.0": "model-00001-of-00002.safetensors",

|

| 108 |

+

"model.layers.13.mamba.conv1d.bias": "model-00001-of-00002.safetensors",

|

| 109 |

+

"model.layers.13.mamba.conv1d.weight": "model-00001-of-00002.safetensors",

|

| 110 |

+

"model.layers.13.mamba.dt_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 111 |

+

"model.layers.13.mamba.dt_proj.0.bias": "model-00001-of-00002.safetensors",

|

| 112 |

+

"model.layers.13.mamba.dt_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 113 |

+

"model.layers.13.mamba.in_proj.weight": "model-00001-of-00002.safetensors",

|

| 114 |

+

"model.layers.13.mamba.out_proj.weight": "model-00001-of-00002.safetensors",

|

| 115 |

+

"model.layers.13.mamba.pre_avg_layernorm1.weight": "model-00001-of-00002.safetensors",

|

| 116 |

+

"model.layers.13.mamba.pre_avg_layernorm2.weight": "model-00001-of-00002.safetensors",

|

| 117 |

+

"model.layers.13.mamba.x_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 118 |

+

"model.layers.13.moe.experts.0.down_proj.weight": "model-00001-of-00002.safetensors",

|

| 119 |

+

"model.layers.13.moe.experts.0.gate_proj.weight": "model-00001-of-00002.safetensors",

|

| 120 |

+

"model.layers.13.moe.experts.0.up_proj.weight": "model-00001-of-00002.safetensors",

|

| 121 |

+

"model.layers.13.pre_moe_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 122 |

+

"model.layers.14.input_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 123 |

+

"model.layers.14.mamba.A_log.0": "model-00001-of-00002.safetensors",

|

| 124 |

+

"model.layers.14.mamba.B_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 125 |

+

"model.layers.14.mamba.C_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 126 |

+

"model.layers.14.mamba.D.0": "model-00001-of-00002.safetensors",

|

| 127 |

+

"model.layers.14.mamba.conv1d.bias": "model-00001-of-00002.safetensors",

|

| 128 |

+

"model.layers.14.mamba.conv1d.weight": "model-00001-of-00002.safetensors",

|

| 129 |

+

"model.layers.14.mamba.dt_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 130 |

+

"model.layers.14.mamba.dt_proj.0.bias": "model-00001-of-00002.safetensors",

|

| 131 |

+

"model.layers.14.mamba.dt_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 132 |

+

"model.layers.14.mamba.in_proj.weight": "model-00001-of-00002.safetensors",

|

| 133 |

+

"model.layers.14.mamba.out_proj.weight": "model-00001-of-00002.safetensors",

|

| 134 |

+

"model.layers.14.mamba.pre_avg_layernorm1.weight": "model-00001-of-00002.safetensors",

|

| 135 |

+

"model.layers.14.mamba.pre_avg_layernorm2.weight": "model-00001-of-00002.safetensors",

|

| 136 |

+

"model.layers.14.mamba.x_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 137 |

+

"model.layers.14.moe.experts.0.down_proj.weight": "model-00001-of-00002.safetensors",

|

| 138 |

+

"model.layers.14.moe.experts.0.gate_proj.weight": "model-00001-of-00002.safetensors",

|

| 139 |

+

"model.layers.14.moe.experts.0.up_proj.weight": "model-00001-of-00002.safetensors",

|

| 140 |

+

"model.layers.14.pre_moe_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 141 |

+

"model.layers.15.input_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 142 |

+

"model.layers.15.mamba.A_log.0": "model-00001-of-00002.safetensors",

|

| 143 |

+

"model.layers.15.mamba.B_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 144 |

+

"model.layers.15.mamba.C_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 145 |

+

"model.layers.15.mamba.D.0": "model-00001-of-00002.safetensors",

|

| 146 |

+

"model.layers.15.mamba.conv1d.bias": "model-00001-of-00002.safetensors",

|

| 147 |

+

"model.layers.15.mamba.conv1d.weight": "model-00001-of-00002.safetensors",

|

| 148 |

+

"model.layers.15.mamba.dt_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 149 |

+

"model.layers.15.mamba.dt_proj.0.bias": "model-00001-of-00002.safetensors",

|

| 150 |

+

"model.layers.15.mamba.dt_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 151 |

+

"model.layers.15.mamba.in_proj.weight": "model-00001-of-00002.safetensors",

|

| 152 |

+

"model.layers.15.mamba.out_proj.weight": "model-00001-of-00002.safetensors",

|

| 153 |

+

"model.layers.15.mamba.pre_avg_layernorm1.weight": "model-00001-of-00002.safetensors",

|

| 154 |

+

"model.layers.15.mamba.pre_avg_layernorm2.weight": "model-00001-of-00002.safetensors",

|

| 155 |

+

"model.layers.15.mamba.x_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 156 |

+

"model.layers.15.moe.experts.0.down_proj.weight": "model-00001-of-00002.safetensors",

|

| 157 |

+

"model.layers.15.moe.experts.0.gate_proj.weight": "model-00001-of-00002.safetensors",

|

| 158 |

+

"model.layers.15.moe.experts.0.up_proj.weight": "model-00001-of-00002.safetensors",

|

| 159 |

+

"model.layers.15.pre_moe_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 160 |

+

"model.layers.16.input_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 161 |

+

"model.layers.16.mamba.A_log.0": "model-00001-of-00002.safetensors",

|

| 162 |

+

"model.layers.16.mamba.B_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 163 |

+

"model.layers.16.mamba.C_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 164 |

+

"model.layers.16.mamba.D.0": "model-00001-of-00002.safetensors",

|

| 165 |

+

"model.layers.16.mamba.conv1d.bias": "model-00001-of-00002.safetensors",

|

| 166 |

+

"model.layers.16.mamba.conv1d.weight": "model-00001-of-00002.safetensors",

|

| 167 |

+

"model.layers.16.mamba.dt_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 168 |

+

"model.layers.16.mamba.dt_proj.0.bias": "model-00001-of-00002.safetensors",

|

| 169 |

+

"model.layers.16.mamba.dt_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 170 |

+

"model.layers.16.mamba.in_proj.weight": "model-00001-of-00002.safetensors",

|

| 171 |

+

"model.layers.16.mamba.out_proj.weight": "model-00001-of-00002.safetensors",

|

| 172 |

+

"model.layers.16.mamba.pre_avg_layernorm1.weight": "model-00001-of-00002.safetensors",

|

| 173 |

+

"model.layers.16.mamba.pre_avg_layernorm2.weight": "model-00001-of-00002.safetensors",

|

| 174 |

+

"model.layers.16.mamba.x_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 175 |

+

"model.layers.16.moe.experts.0.down_proj.weight": "model-00001-of-00002.safetensors",

|

| 176 |

+

"model.layers.16.moe.experts.0.gate_proj.weight": "model-00001-of-00002.safetensors",

|

| 177 |

+

"model.layers.16.moe.experts.0.up_proj.weight": "model-00001-of-00002.safetensors",

|

| 178 |

+

"model.layers.16.pre_moe_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 179 |

+

"model.layers.17.input_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 180 |

+

"model.layers.17.mamba.A_log.0": "model-00001-of-00002.safetensors",

|

| 181 |

+

"model.layers.17.mamba.B_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 182 |

+

"model.layers.17.mamba.C_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 183 |

+

"model.layers.17.mamba.D.0": "model-00001-of-00002.safetensors",

|

| 184 |

+

"model.layers.17.mamba.conv1d.bias": "model-00001-of-00002.safetensors",

|

| 185 |

+

"model.layers.17.mamba.conv1d.weight": "model-00001-of-00002.safetensors",

|

| 186 |

+

"model.layers.17.mamba.dt_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 187 |

+

"model.layers.17.mamba.dt_proj.0.bias": "model-00001-of-00002.safetensors",

|

| 188 |

+

"model.layers.17.mamba.dt_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 189 |

+

"model.layers.17.mamba.in_proj.weight": "model-00001-of-00002.safetensors",

|

| 190 |

+

"model.layers.17.mamba.out_proj.weight": "model-00001-of-00002.safetensors",

|

| 191 |

+

"model.layers.17.mamba.pre_avg_layernorm1.weight": "model-00001-of-00002.safetensors",

|

| 192 |

+

"model.layers.17.mamba.pre_avg_layernorm2.weight": "model-00001-of-00002.safetensors",

|

| 193 |

+

"model.layers.17.mamba.x_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 194 |

+

"model.layers.17.moe.experts.0.down_proj.weight": "model-00001-of-00002.safetensors",

|

| 195 |

+

"model.layers.17.moe.experts.0.gate_proj.weight": "model-00001-of-00002.safetensors",

|

| 196 |

+

"model.layers.17.moe.experts.0.up_proj.weight": "model-00001-of-00002.safetensors",

|

| 197 |

+

"model.layers.17.pre_moe_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 198 |

+

"model.layers.18.input_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 199 |

+

"model.layers.18.mamba.A_log.0": "model-00001-of-00002.safetensors",

|

| 200 |

+

"model.layers.18.mamba.B_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 201 |

+

"model.layers.18.mamba.C_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 202 |

+

"model.layers.18.mamba.D.0": "model-00001-of-00002.safetensors",

|

| 203 |

+

"model.layers.18.mamba.conv1d.bias": "model-00001-of-00002.safetensors",

|

| 204 |

+

"model.layers.18.mamba.conv1d.weight": "model-00001-of-00002.safetensors",

|

| 205 |

+

"model.layers.18.mamba.dt_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 206 |

+

"model.layers.18.mamba.dt_proj.0.bias": "model-00001-of-00002.safetensors",

|

| 207 |

+

"model.layers.18.mamba.dt_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 208 |

+

"model.layers.18.mamba.in_proj.weight": "model-00001-of-00002.safetensors",

|

| 209 |

+

"model.layers.18.mamba.out_proj.weight": "model-00001-of-00002.safetensors",

|

| 210 |

+

"model.layers.18.mamba.pre_avg_layernorm1.weight": "model-00001-of-00002.safetensors",

|

| 211 |

+

"model.layers.18.mamba.pre_avg_layernorm2.weight": "model-00001-of-00002.safetensors",

|

| 212 |

+

"model.layers.18.mamba.x_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 213 |

+

"model.layers.18.moe.experts.0.down_proj.weight": "model-00001-of-00002.safetensors",

|

| 214 |

+

"model.layers.18.moe.experts.0.gate_proj.weight": "model-00001-of-00002.safetensors",

|

| 215 |

+

"model.layers.18.moe.experts.0.up_proj.weight": "model-00001-of-00002.safetensors",

|

| 216 |

+

"model.layers.18.pre_moe_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 217 |

+

"model.layers.19.input_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 218 |

+

"model.layers.19.mamba.A_log.0": "model-00001-of-00002.safetensors",

|

| 219 |

+

"model.layers.19.mamba.B_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 220 |

+

"model.layers.19.mamba.C_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 221 |

+

"model.layers.19.mamba.D.0": "model-00001-of-00002.safetensors",

|

| 222 |

+

"model.layers.19.mamba.conv1d.bias": "model-00001-of-00002.safetensors",

|

| 223 |

+

"model.layers.19.mamba.conv1d.weight": "model-00001-of-00002.safetensors",

|

| 224 |

+

"model.layers.19.mamba.dt_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 225 |

+

"model.layers.19.mamba.dt_proj.0.bias": "model-00001-of-00002.safetensors",

|

| 226 |

+

"model.layers.19.mamba.dt_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 227 |

+

"model.layers.19.mamba.in_proj.weight": "model-00001-of-00002.safetensors",

|

| 228 |

+

"model.layers.19.mamba.out_proj.weight": "model-00001-of-00002.safetensors",

|

| 229 |

+

"model.layers.19.mamba.pre_avg_layernorm1.weight": "model-00001-of-00002.safetensors",

|

| 230 |

+

"model.layers.19.mamba.pre_avg_layernorm2.weight": "model-00001-of-00002.safetensors",

|

| 231 |

+

"model.layers.19.mamba.x_proj.0.weight": "model-00001-of-00002.safetensors",

|

| 232 |

+

"model.layers.19.moe.experts.0.down_proj.weight": "model-00001-of-00002.safetensors",

|

| 233 |

+

"model.layers.19.moe.experts.0.gate_proj.weight": "model-00001-of-00002.safetensors",

|

| 234 |

+

"model.layers.19.moe.experts.0.up_proj.weight": "model-00001-of-00002.safetensors",

|

| 235 |

+

"model.layers.19.pre_moe_layernorm.weight": "model-00001-of-00002.safetensors",

|

| 236 |

+