| full_name | url | description | readme | stars | forks |
|---|---|---|---|---|---|

Ctrlmonster/r3f-effekseer | https://github.com/Ctrlmonster/r3f-effekseer | null | # Effekseer for React-Three-Fiber 🎆💥

This library aims to provide React bindings for the **WebGL + WASM** runtime

of [**Effekseer**](https://effekseer.github.io/en/). Effekseer is a mature **Particle Effect Creation Tool**,

which supports major game engines, is used in many commercial games, and includes its

own free-to-use editor, which you can use to create your own effects!

---------

> TODO: Section on how to install

---------

## Adding Effects to your Scene: `<Effekt />`

Effects are loaded from `.efk` files, the effect

format of Effekseer. You can export these yourself from the

Effekseer Editor, or download some from the collection of

[sample effects](https://effekseer.github.io/en/contribute.html).

```tsx

function MyScene() {

// get ref to EffectInstance for imperative control of effect

const effectRef = useRef<EffectInstance>(null!);

return (

// effects can be added anywhere inside parent component

<Effekseer>

<mesh onClick={() => effectRef.current?.play()}>

<sphereGeometry/>

<meshStandardMaterial/>

{/*Suspense is required for async loading of effect*/}

<Suspense>

<Effekt ref={effectRef}

name={"Laser1"}

src={"../assets/Laser1.efk"}

playOnMount // start playing as soon as the effect is ready

position={[0, 1.5, 0]} // transforms are relative to parent mesh

/>

</Suspense>

</mesh>

</Effekseer>

)

}

```

-------------

## Controlling Effects imperatively via `EffectInstance`

The `<Effekt />` component forwards a ref to an `EffectInstance`. This class gives you a persistent handle

to a particular instance of this effect. You can have as many instances

of one effect as you like. The `EffectInstance` provides you with an

imperative api that lets you set a variety of settings supported

by Effekseer, as well as control playback of the effect.

Some example methods:

```js

const effect = new EffectInstance(name, path); // or get via <Effekt ref={effectRef}>

effect.setPaused(true); // pause / unpause this effect

effect.stop(); // stop the effect from running

effect.sendTrigger(index); // send trigger to effect (effekseer feature)

await effect.play(); // start a new run of this effect (returns a promise for completion)

```

## Effect Settings

All settings applied to an `EffectInstance` are **persistent** and will be applied to the current

run of this effect, as well as all future calls of `effect.play()`.

```js

effect.setSpeed(0.5); // set playback speed

effect.setPosition(x, y, z); // set transforms relative to parent in scene tree

effect.setColor(255, 0, 255, 255); // set rgba color

effect.setVisible(false) // hide effect

```

To **drop a setting**, call `effect.dropSetting(name)`. This is the full

list of **available settings**:

```ts

type EffectInstanceSetting = "paused"

| "position"

| "rotation"

| "scale"

| "speed"

| "randomSeed"

| "visible"

| "matrix"

| "dynamicInput"

| "targetPosition"

| "color"

```
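The persistence rule described in this section (a setting stays in effect for every later run until it is dropped) can be pictured with a small stand-alone model. This is only a sketch: `PersistentSettings`, `applyToNewRun` and the reduced `SettingName` union are hypothetical names made up for illustration and are not part of r3f-effekseer.

```ts
// Hypothetical stand-alone model; NOT the library's actual implementation.
type SettingName = "speed" | "visible" | "paused";
type SettingValue = number | boolean;
type SettingSnapshot = Partial<Record<SettingName, SettingValue>>;

class PersistentSettings {
  private readonly stored: SettingSnapshot = {};

  // Remember a setting so that it is applied to every later run.
  set(name: SettingName, value: SettingValue): void {
    this.stored[name] = value;
  }

  // Forget a setting; later runs no longer receive it.
  drop(name: SettingName): void {
    delete this.stored[name];
  }

  // Copy of the settings that a new run would start with.
  applyToNewRun(): SettingSnapshot {
    return { ...this.stored };
  }
}

const persistentSettings = new PersistentSettings();
persistentSettings.set("speed", 0.5);
persistentSettings.set("visible", false);
const firstRunSettings = persistentSettings.applyToNewRun();

persistentSettings.drop("visible");
const secondRunSettings = persistentSettings.applyToNewRun();
```

In this model `firstRunSettings` carries both `speed` and `visible`, while `secondRunSettings` only carries `speed`, which mirrors what dropping a setting is described to do.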

You can also set settings via **props** on the `<Effekt/>` component.

This is the full list of available props:

```ts

type EffectProps = {

// required props for initialization / loading

name: string,

src: string,

// -----------------------------------------

// effect settings

position?: [x: number, y: number, z: number],

rotation?: [x: number, y: number, z: number],

scale?: [x: number, y: number, z: number],

speed?: number,

randomSeed?: number,

visible?: boolean,

dynamicInput?: (number | undefined)[],

targetPosition?: [x: number, y: number, z: number],

color?: [r: number, g: number, b: number, alpha: number],

paused?: boolean,

// -----------------------------------------

// r3f specifics

playOnMount?: boolean,

dispose?: null, // set to null to prevent unloading of effect on dismount

debug?: boolean,

// -----------------------------------------

// loading callbacks

onload?: (() => void) | undefined,

onerror?: ((reason: string, path: string) => void) | undefined,

redirect?: ((path: string) => string) | undefined,

}

```

-------------

## Spawning multiple Instances of the same effect

Any effect that has been loaded can be spawned multiple times, either via the `EffectInstance` class

or via the `<Effekt />` component. Simply re-use the same name and path, and you will get a new instance each time.

```tsx

const instance1 = new EffectInstance("Laser1", "../assets/Laser1.efk");

// or via <Effekt /> component

<Effekt name={"Laser1"} src={"../assets/Laser1.efk"} speed={0.1} />

<Effekt name={"Laser1"} src={"../assets/Laser1.efk"} position={[0, 2, 0]} />

```
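One way to picture the relationship between a loaded effect and its instances is a cache keyed by name that hands out a fresh instance on every request. The sketch below is a stand-alone model under that assumption; `spawnInstance`, `LoadedEffectModel` and `InstanceModel` are hypothetical names and do not come from the library, and the real loading logic may differ.

```ts
// Hypothetical model: one shared loaded effect per name, many instances.
interface LoadedEffectModel {
  name: string;
  path: string;
}

interface InstanceModel {
  id: number;
  effect: LoadedEffectModel;
}

const loadedEffectModels: { [name: string]: LoadedEffectModel } = {};
let effectLoadCount = 0;
let nextInstanceId = 0;

function spawnInstance(name: string, path: string): InstanceModel {
  // Load the effect only the first time this name is requested.
  if (!loadedEffectModels[name]) {
    loadedEffectModels[name] = { name, path };
    effectLoadCount += 1;
  }
  // Every call returns a new, independent instance of the shared effect.
  nextInstanceId += 1;
  return { id: nextInstanceId, effect: loadedEffectModels[name] };
}

const laserA = spawnInstance("Laser1", "../assets/Laser1.efk");
const laserB = spawnInstance("Laser1", "../assets/Laser1.efk");
```

Here `laserA` and `laserB` are distinct objects that share the same underlying effect entry, and `effectLoadCount` stays at 1.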

## The Parent Component: `<Effekseer>`

The `<Effekseer>` parent component provides its children with the React context to spawn effects.

You can access all loaded effects, as well as the manager singleton via the context.

```ts

const {effects, manager} = useContext(EffekseerReactContext);

```

The `<Effekseer>` component can be initialized with a set of native Effekseer settings,

a custom camera, as well as a prop to take over rendering:

```ts

type EffekseerSettings = {

instanceMaxCount?: number // default is 4000

squareMaxCount?: number, // default is 10000

enableExtensionsByDefault?: boolean,

enablePremultipliedAlpha?: boolean,

enableTimerQuery?: boolean,

onTimerQueryReport?: (averageDrawTime: number) => void,

timerQueryReportIntervalCount?: number,

}

```

```tsx

<Effekseer settings={effekseerSettings} camera={DefaultCamera} ejectRenderer={false} />

```

--------

## Loading & Rendering Effects: `effekseerManager`

The parent component also forwards a ref to the `effekseerManager` **singleton**.

This object holds all loaded effects, handles the initialization of the wasm runtime,

loading/unloading of effects and offers a limited imperative API to play effects,

next to the `EffectInstance` class.

```js

const effectHandle = effekseerManager.playEffect(name); // play an effect

effectHandle.setSpeed(0.5); // fleeting effect handle, becomes invalid once effect has finished.

// view all loaded effects

console.log(effekseerManager.effects)

```

### Taking over the renderer:

If you decide to eject the default effekseer renderer, you can render yourself like this (it's

what `<Effekseer>` does internally):

```js

useFrame((state, delta) => {

state.gl.render(state.scene, state.camera); // WebGLRenderer.render needs a scene and a camera

effekseerManager.update(delta);

}, 1);

```

**Note**: Setting `ejectRenderer` to true will also be required if

you plan on rendering effekseer effects as a postprocessing effect.

-----------------

## Preloading Runtime & Effects

You can start preloading via the manager. Preloading the runtime means it will already

be available when `<Effekseer>` mounts and preloading effects means they will already

be available when a `<Effekt>` component using this effect mounts.<br/>

```js

effekseerManager.preload(); // Start initializing the wasm runtime before <Effekseer> mounts

```

**Note**: Effects can't actually start preloading before the `<Effekseer>` component mounts.

This is because they rely on the `EffekseerContext` to be created, which can't be instantiated

before WebGLRenderer, Camera and Scene exist. This is still useful to preload Effects

that don't get mounted with your initial render. Also preloading any effect will

automatically preload the runtime, meaning you don't have to call `preload`, if you're

doing `preloadEffect`.

```js

effekseerManager.preloadEffect(name, path); // will preload runtime automatically

```

---------------

## Disable automatic Effect disposal:

By default, an effect will be disposed when the last `<Effekt>` using it unmounts. This means

the next time an `<Effekt>` component using that effect mounts, it will have to be loaded again.

You can disable this behaviour via setting the **dispose** prop to null. This way effects

never get disposed automatically.

```jsx

// Laser1.efk will not be unloaded

<Effekt name={"Laser"} src={"../assets/Laser1.efk"} dispose={null} />

```

You can unload the effect yourself via the `effekseerManager`. <br/>

**Note**: Since effects are stored by name, make sure to give each effect a **unique name**.

```js

effekseerManager.disposeEffect("Laser");

```
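The disposal rule in this section (unload when the last user unmounts, unless disposal was opted out of) behaves much like reference counting. The following is a minimal sketch of that idea only; `EffectRegistryModel` and its methods are hypothetical and are not the library's API, with the `keep` flag standing in for `dispose={null}`.

```ts
// Hypothetical model of the disposal rule; names are illustrative only.
class EffectRegistryModel {
  private readonly users: { [name: string]: number } = {};
  private readonly loaded: { [name: string]: boolean } = {};

  // Called when a component using the effect `name` mounts.
  mount(name: string): void {
    this.users[name] = (this.users[name] || 0) + 1;
    this.loaded[name] = true;
  }

  // Called on unmount; `keep = true` models passing dispose={null}.
  unmount(name: string, keep: boolean = false): void {
    this.users[name] = Math.max(0, (this.users[name] || 0) - 1);
    if (this.users[name] === 0 && !keep) {
      this.disposeEffect(name);
    }
  }

  // Manual unload, comparable in spirit to the manager call shown above.
  disposeEffect(name: string): void {
    delete this.loaded[name];
  }

  isLoaded(name: string): boolean {
    return this.loaded[name] === true;
  }
}

const registryModel = new EffectRegistryModel();
registryModel.mount("Laser");
registryModel.mount("Laser");
registryModel.unmount("Laser");
const loadedWithOneUser = registryModel.isLoaded("Laser"); // true
registryModel.unmount("Laser");
const loadedWithNoUsers = registryModel.isLoaded("Laser"); // false

registryModel.mount("Kept");
registryModel.unmount("Kept", true);
const keptAfterUnmount = registryModel.isLoaded("Kept"); // true
registryModel.disposeEffect("Kept");
const keptAfterManualDispose = registryModel.isLoaded("Kept"); // false
```

Because the model keys everything by name, two different effects registered under the same name would share one counter, which is why unique names matter.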

---------------

## Native API: `EffekseerContext`:

The `EffekseerManager` holds a reference to the `EffekseerContext` which

is a class provided by Effekseer itself. If you are looking for **direct

access** to the **native API**, this is the place to look at. It includes methods like:

```js

effekseerManager.context.setProjectionMatrix();

effekseerManager.context.setProjectionOrthographic();

effekseerManager.context.loadEffectPackage();

...

```

-------------------

## Known issues / Gotchas:

* There needs to be a background color assigned to the scene, or else

black parts of the particle image are not rendered transparently.

## TODOs:

* Check if all Effekseer Settings are being used in the effekseer.js

file, in the same way that they were used in effekseer.src.js

* Check if baseDir in manager needs to be settable

## Next Steps / How to Contribute:

* The Effekseer render pass needs to be adapted to be compatible

with the pmndrs PostProcessing lib (see Resources below) - check the

`EffekseerRenderPass.tsx` for a wip.

* Check what kind of additional methods to add to the Manager

* Check if HMR experience can be improved

--------------

## References

### Vanilla Three.js Demo

I've included the Effekseer vanilla three demo for reference inside

the reference folder.

Just run `python -m SimpleHTTPServer` or

`python3 -m http.server` inside `references/html-demo/src` to view

the original demo in browser.

### Effekseer Resources

* website: https://effekseer.github.io/en/

* effekseer webgl: https://github.com/effekseer/EffekseerForWebGL

* effekseer post processing pass: https://github.com/effekseer/EffekseerForWebGL/blob/master/tests/post_processing_threejs.html

| 30 | 2 |

matthunz/hoot | https://github.com/matthunz/hoot | Opinionated package manager for haskell (WIP) | # Hoot

Opinionated Haskell package builder (based on cabal)

* WIP: Only `hoot add` package resolution works so far

### Create a new project

```sh

hoot new hello

cd hello

hoot run

```

### Add dependencies

```sh

hoot add QuickCheck

# Added QuickCheck v2.14.3

```

### Package manifest

Package manifests are stored in `Hoot.toml`

```toml

[package]

name = "example"

[dependencies]

quickcheck = "v2.14.3"

```

| 16 | 0 |

7h3h4ckv157/100-Days-of-Hacking | https://github.com/7h3h4ckv157/100-Days-of-Hacking | 100-Days-of-Hacking | # 100-Days-of-Hacking

Here, you will find a collection of my daily tweets documenting my journey through the exciting world of hacking.

I have compiled a comprehensive archive of my Twitter posts, providing a detailed account of my progress, challenges, and discoveries throughout my 100-day hacking challenge.

Each tweet link serves as a snapshot of my thoughts, insights, and the resources I found valuable during my journey. Join me on this exhilarating journey through my "100 Days of Hacking" and let's explore the fascinating realm of cybersecurity together!

Happy Hacking!!

<img src="https://github.com/7h3h4ckv157/100-Days-of-Hacking/blob/main/Hack.jpg">

## Day 1-10: ~# Hacking & Bug-Bounty Writeups

- Day 1: https://twitter.com/7h3h4ckv157/status/1658327548865155073

- Day 2: https://twitter.com/7h3h4ckv157/status/1658887862392360960

- Day 3: https://twitter.com/7h3h4ckv157/status/1659256754612637697

- Day 4: https://twitter.com/7h3h4ckv157/status/1659617271860568064

- Day 5: https://twitter.com/7h3h4ckv157/status/1659984958801444864

- Day 6: https://twitter.com/7h3h4ckv157/status/1660332905762279424

- Day 7: https://twitter.com/7h3h4ckv157/status/1660698267301322752

- Day 8: https://twitter.com/7h3h4ckv157/status/1661035179312566273

- Day 9: https://twitter.com/7h3h4ckv157/status/1661408595798261763

- Day 10: https://twitter.com/7h3h4ckv157/status/1661786942126850048

## Day 11: ~# CORS (Cross-Origin Resource Sharing)

- https://twitter.com/7h3h4ckv157/status/1662153470076665857

## Day 12: ~# Server Side Request Forgery (SSRF)

- https://twitter.com/7h3h4ckv157/status/1662504293902204929

## Day 13: ~# Access control vulnerabilities

- https://twitter.com/7h3h4ckv157/status/1662879474487275520

## Day 14: ~# SQL Injection (SQLi)

- https://twitter.com/7h3h4ckv157/status/1663242325437616129

## Day 15: ~# Server Side Template Injection (SSTI)

- https://twitter.com/7h3h4ckv157/status/1663588780572565504

## Day 16: ~# Cross Site Scripting

- https://twitter.com/7h3h4ckv157/status/1663962637250736133

## Day 17: ~# Cross Site Request Forgery (CSRF)

- https://twitter.com/7h3h4ckv157/status/1664328757891698688

## Day 18: ~# Insecure Direct Object Reference (IDOR)

- https://twitter.com/7h3h4ckv157/status/1664695437821988877

## Day 19: ~# Local File Inclusion (LFI) & Directory traversal

- https://twitter.com/7h3h4ckv157/status/1665041463032561664

## Day 20: ~# XML external entity (XXE) injection

- https://twitter.com/7h3h4ckv157/status/1665387480596586497

## Day 21: ~# Complete Bug Bounty Cheat Sheet

- https://twitter.com/7h3h4ckv157/status/1665728971483549696

## Day 22: ~# Reverse Engineering

- https://twitter.com/7h3h4ckv157/status/1666140238031507456

## Day 23: ~# Collection of InfoSec Dorks 🧑💻 🪄

- https://twitter.com/7h3h4ckv157/status/1666422979859996672

## Day 24: ~# G-Mail Hacking!

- https://twitter.com/7h3h4ckv157/status/1666878092491763717

## Day 25: ~# Beginner Guide: "How to start Hacking!"

- https://twitter.com/7h3h4ckv157/status/1667231672142823424

## Day 26: ~# Beginners to intermediate Guide: "Reverse Engineering Resources"

- https://twitter.com/7h3h4ckv157/status/1667594434551382016

## Day 27: ~# Car Hacking: The Ultimate Guide!

- https://twitter.com/7h3h4ckv157/status/1667946768074674177

## Day 28: ~# Introduction to Game Hacking 🪄

- https://twitter.com/7h3h4ckv157/status/1668316281471385605

## Day 29: ~# A dive into IoT Hacking

- https://twitter.com/7h3h4ckv157/status/1668658147119210503

## Day 30: ~# Browser Exploitation 🔥

- https://twitter.com/7h3h4ckv157/status/1669046797199900673

## Day 31: ~# WebHacking (BugBounty) Cheatsheet & Red-Team Resources

- https://twitter.com/7h3h4ckv157/status/1669393182856200192

- https://twitter.com/7h3h4ckv157/status/1669756590583611394

## Day 32: ~# Android Hacking

- https://twitter.com/7h3h4ckv157/status/1670104669266534400

## Day 33: ~# iOS Hacking

- https://twitter.com/7h3h4ckv157/status/1670474636935729153

## Day 34: ~# Satellite Hacking 🚀💻🔥

- https://twitter.com/7h3h4ckv157/status/1670851905969602561

## Day 35: ~# Web3 Hacking 🔥

- https://twitter.com/7h3h4ckv157/status/1671192773121445888

## Day 36: ~# Cloud Hacking 🔥

- https://twitter.com/7h3h4ckv157/status/1671549362110087168

## Day 37: ~# Malware Analysis 🔥

- https://twitter.com/7h3h4ckv157/status/1671891442439180292

## Day 38: ~# Active Directory Hacking

- https://twitter.com/7h3h4ckv157/status/1672262640712966144

## Day 39: ~# Threat Intelligence 🔥

- https://twitter.com/7h3h4ckv157/status/1672498502059069440

## Day 40: ~# Exploit Development 🔥

- https://twitter.com/7h3h4ckv157/status/1672991167699636224

## Day 41: ~# Hacking-Labs

- https://twitter.com/7h3h4ckv157/status/1673388922808995841

## Day 42: ~# Purple Team 🧵

- https://twitter.com/7h3h4ckv157/status/1673743184013455361

## Day 43: ~# API Hacking!

- https://twitter.com/7h3h4ckv157/status/1674117140947894273

## Day 44: ~# GraphQL-Hacking 🔥

- https://twitter.com/7h3h4ckv157/status/1674477244314710016

## Day 45: ~# Free Resource to CISSP

- https://twitter.com/7h3h4ckv157/status/1674839984279527429

## Day 46: ~# Privilege Escalation (Win-Linux)🔥

- https://twitter.com/7h3h4ckv157/status/1675204396584681472

## Day 47: ~# Network Penetration Testing

- https://twitter.com/7h3h4ckv157/status/1675564922615496704

## Day 48: ~# FREE Cyber-Security Certifications/Training 🔥

- https://twitter.com/7h3h4ckv157/status/1675929889218891777

## Day 49: ~# Special Day for me

- https://twitter.com/7h3h4ckv157/status/1676282384516550656

## Day 50: ~# OT Penetration Testing 🔥

- https://twitter.com/7h3h4ckv157/status/1676617659885191169

## Day 51: ~# OSINT++ 🔥

- https://twitter.com/7h3h4ckv157/status/1676996186023272448

## Day 52: ~# Source Code Analysis ++

- https://twitter.com/7h3h4ckv157/status/1677380692920127490

## Day 53: ~# Some Top-Notch Bounty Reports 💰

- https://twitter.com/7h3h4ckv157/status/1677736435603087360

## Day 54: ~# Check out these Twitter profiles sharing valuable resources and posting about hacking contents!

- https://twitter.com/7h3h4ckv157/status/1678068115152973827

## Day 55: ~# Google Cloud Penetration Testing

- https://twitter.com/7h3h4ckv157/status/1678454897648324608

## Day 56: ~# Top YouTube Channels to Learn Hacking!

- https://twitter.com/7h3h4ckv157/status/1678825354730012678

## Day 57: ~# Preparation for CompTIA PenTest+ Certification

- https://twitter.com/7h3h4ckv157/status/1679169556638760960

## Day 58: ~# Hacking AI 🔥

- https://twitter.com/7h3h4ckv157/status/1679548700832731136

## Day 59: ~# ATM Pentesting Collections 🔥

- https://twitter.com/7h3h4ckv157/status/1679898673327779841

## Day 60: ~# Hacking CI/CD 🔥

- https://twitter.com/7h3h4ckv157/status/1680274755474317312

## Day 61: ~# Checklist for Red-Teaming 🔥

- https://twitter.com/7h3h4ckv157/status/1680642509154979841

## Day 62: ~# 🛡️ Blue-Teaming ++

- https://twitter.com/7h3h4ckv157/status/1681004385483259904

## Day 63: ~# Some respectful Hacking Certifications

- https://twitter.com/7h3h4ckv157/status/1681370353296363520

## Day 64: ~# Hacking-Tools 🔥📢

- https://twitter.com/7h3h4ckv157/status/1681712528223776768

## Day 65: ~# Best BugBounty Writeups (@Meta & @GoogleVRP)

- https://twitter.com/7h3h4ckv157/status/1682092456987459584

## Day 66: ~# Social Engineering 💯

- https://twitter.com/7h3h4ckv157/status/1682451096411975680

## Day 67: ~#recon

- https://twitter.com/7h3h4ckv157/status/1682817216386048000

## Day 68: ~# Wifi Attacks

- https://twitter.com/7h3h4ckv157/status/1683172354858549248

## Day 69: ~# Docker Hacking 🔥📢

- https://twitter.com/7h3h4ckv157/status/1683525691814596610

## Day 70: ~# Bank Hacking

- https://twitter.com/7h3h4ckv157/status/1683901864499376128

## Day 71: ~# Top Bug Bounty Platform to earn 💰

- https://twitter.com/7h3h4ckv157/status/1684267059092533248

## Day 72: ~# Hardware Hacking!

- https://twitter.com/7h3h4ckv157/status/1684630230273777669

## Day 73: ~# Google Dorking for Hacking! 🔥🔥

- https://twitter.com/7h3h4ckv157/status/1684964541002813440

## Day 74: ~# Web application firewall (WAF) bypass 💯

- https://twitter.com/7h3h4ckv157/status/1685348183990501376

## Day 75: ~# How to Crack an Entry Level Job in Cybersecurity🔒

- https://twitter.com/7h3h4ckv157/status/1685702822699180032

## Day 76: ~# How Hackers hack into victims Account?

- https://twitter.com/7h3h4ckv157/status/1686070460168261633

## Day 77: ~# Security Operation Center (SOC) Tools

- https://twitter.com/7h3h4ckv157/status/1686438785826037761

## Day 78: ~# Best Hackers Search Engines ❤️🔥 📢

- https://twitter.com/7h3h4ckv157/status/1686801039406850048

## Day 79: ~# Capture The Flag (CTF) - Improve Hacking skills 💯

- https://twitter.com/7h3h4ckv157/status/1687164605880274944

## Day 80: ~# FREE Cybersecurity AI projects

- https://twitter.com/7h3h4ckv157/status/1687527890978811904

| 57 | 6 |

ubavic/bas-celik | https://github.com/ubavic/bas-celik | A program for reading ID cards issued by the government of Serbia | # Baš Čelik

**Baš Čelik** is a reader for electronic ID cards and health insurance cards. The program is designed as a replacement for official applications such as *Čelik*. Unfortunately, the official applications run only on Windows, while Baš Čelik works on three operating systems (Windows/Linux/OSX).

The application should support reading all ID cards, but so far it has not been tested on old cards (issued before August 2014) or on the newest ones (issued after February 2023). I am grateful in advance for any feedback.

## Usage

Connect the reader to your computer and start the application. Insert the card into the reader. The application will read the information from the card and display it. You can then save a PDF by pressing the bottom-right button.

The generated PDF document looks as close as possible to the document produced by the official applications.

### Running on Linux

The application requires the `ccid` and `opensc`/`pcscd` packages to be installed. After installing these packages, you also need to start the `pcscd` service:

```

sudo systemctl start pcscd

sudo systemctl enable pcscd

```

### Running from the command line

The application accepts the following options:

+ `-verbose`: while the application is running, error details are printed to the command line

+ `-pdf PATH`: the graphical interface is not started, and the contents of the document are saved directly to a PDF at the `PATH` location.

+ `-help`: prints information about the options

## Download

Executables of the latest version of the program can be downloaded from the [Releases](https://github.com/ubavic/bas-celik/releases) page.

An [AUR package](https://aur.archlinux.org/packages/bas-celik) is also available for Arch users.

## Compilation

You need the `go` compiler. On Linux you also need to install `libpcsclite-dev` and the [packages for Fyne](https://developer.fyne.io/started/#prerequisites) (possibly `pkg-config` as well).

After downloading the repository, it is enough to run

```

go build main.go

```

### Cross-compilation

With the help of [fyne-cross](https://github.com/fyne-io/fyne-cross), you can compile the program for all three operating systems from a single operating system. This tool requires Docker on your operating system.

## Planned upgrades

+ Enabling document signing with the ID card

## Known issues

+ On Windows, in some cases the application will not read the card if the card was inserted into the reader after the program was started. In that case, restarting the program is enough.

+ The data on the health insurance card is encoded with an encoding unknown (to me). The program successfully decodes most characters, but not all. Because of this, errors may occur when the data is displayed.

None of these issues affects the "security" of your document. Baš Čelik only reads data from the card.

## License

The program and source code are published under the [*MIT* license](LICENSE).

The `free-sans-regular` font is published under the [*SIL Open Font* license](assets/LICENSE).

| 51 | 3 |

YiVal/YiVal | https://github.com/YiVal/YiVal | YiVal is a dynamic AI experimentation framework, offering a blend of manual and automated tools for data input, parameter variations, and evaluation. With the upcoming YiVal Agent, it promises to autonomously streamline the AI development process, catering to both hands-on developers and automation enthusiasts. | # YiVal


<details open="open">

<summary>Table of Contents</summary>

- [About](#about)

</details>

---

## About

YiVal is an adaptable AI development framework, designed to provide a tailored experimentation experience. Whether you're a hands-on developer or leaning into automation, YiVal is equipped for both:

- **Data Input**: Choose between manual data input or let the framework handle auto-generation.

- **Variations**: Manually set parameter and prompt variations or utilize the automated capabilities for optimal settings.

- **Evaluation**: Engage with manual evaluators or leverage the built-in automated evaluators for efficient results.

On the horizon is the YiVal Agent, an ambitious addition aimed at autonomously driving the entire experimentation process. With its blend of manual and automated features, YiVal stands as a comprehensive solution for AI experimentation, ensuring flexibility and efficiency every step of the way.

<details>

<summary>Screenshots</summary>

<br>

### Best Parameter Combination

### Data Analysis

### Test Cases Side by Side

</details>

## Roadmap

**Qian** (The Creative, Heaven) 🌤️ (乾):

- [x] Setup the framework for wrappers that can be used directly

in the production code.

- [x] Set up the BaseWrapper

- [x] Set up the StringWrapper

- [x] Setup the config framework

- [x] Setup the experiment main function

- [x] Setup the evaluator framework to do evaluations

- [x] One auto-evaluator

- [x] Ground truth matching

- [ ] Human evaluator

- [x] Interactive evaluator

- [x] Reader framework that is able to process different data

- [ ] One reader from csv

- [x] Output parser - Capture detailed information

- [ ] Documents

- [ ] Git setup

- [ ] Contribution guide

- [x] End2End Examples

- [ ] Release

| 489 | 77 |

microsoft/azurechatgpt | https://github.com/microsoft/azurechatgpt | 🤖 Azure ChatGPT: Private & secure ChatGPT for internal enterprise use 💼 | # Unleash the Power of Azure Open AI

ChatGPT has grown explosively in popularity as we all know now. Business users across the globe often tap into the public service to work more productively or act as a creative assistant.

However, ChatGPT risks exposing confidential intellectual property. One option is to block corporate access to ChatGPT, but people always find workarounds. This also limits the powerful capabilities of ChatGPT and reduces employee productivity and their work experience.

Azure ChatGPT is our enterprise option. This is the exact same service but offered as your private ChatGPT.

### Benefits are:

**1. Private:** Built-in guarantees around the privacy of your data and fully isolated from those operated by OpenAI.

**2. Controlled:** Network traffic can be fully isolated to your network and other enterprise grade security controls are built in.

**3. Value:** Deliver added business value with your own internal data sources (plug and play) or use plug-ins to integrate with your internal services (e.g., ServiceNow, etc).

We've built a Solution Accelerator to empower your workforce with Azure ChatGPT.

# Getting Started

1. [Introduction](/docs/1-introduction.md)

1. [Provision Azure Resources](/docs/2-provision-azure-resources.md)

1. [Run Azure ChatGPT from your local machine](/docs/3-run-locally.md)

1. [Deploy Azure ChatGPT to Azure](/docs/4-deployto-azure.md)

1. [Add identity provider](/docs/5-add-Identity.md)

1. [Chatting with your file](/docs/6-chat-over-file.md)

1. [Environment variables](/docs/7-environment-variables.md)

# Contributing

This project welcomes contributions and suggestions. Most contributions require you to agree to a

Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us

the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide

a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions

provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/).

For more information see the [Code of Conduct FAQ](https://opensource.microsoft.com/codeofconduct/faq/) or

contact [opencode@microsoft.com](mailto:opencode@microsoft.com) with any additional questions or comments.

# Trademarks

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft

trademarks or logos is subject to and must follow

[Microsoft's Trademark & Brand Guidelines](https://www.microsoft.com/en-us/legal/intellectualproperty/trademarks/usage/general).

Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship.

Any use of third-party trademarks or logos are subject to those third-party's policies.

| 3,101 | 459 |

kuutsav/llm-toys | https://github.com/kuutsav/llm-toys | Small(7B and below), production-ready finetuned LLMs for a diverse set of useful tasks. | # llm-toys


Small (7B and below), production-ready finetuned LLMs for a diverse set of useful tasks.

Supported tasks: Paraphrasing, Changing the tone of a passage, Summary and Topic generation from a dialogue,

~~Retrieval augmented QA (WIP)~~.

We finetune LoRAs on quantized 3B and 7B models. The 3B model is finetuned on specific tasks, while the 7B model is

finetuned on all the tasks.

The goal is to be able to finetune and use all these models on very modest consumer-grade hardware.

## Installation

```bash

pip install llm-toys

```

> Might not work without a CUDA enabled GPU

>

> If you encounter "The installed version of bitsandbytes was compiled without GPU support" with bitsandbytes

> then look here https://github.com/TimDettmers/bitsandbytes/issues/112

>

> or try

>

> cp <path_to_your_venv>/lib/python3.10/site-packages/bitsandbytes/libbitsandbytes_cpu.so <path_to_your_venv>/lib/python3.10/site-packages/bitsandbytes/libbitsandbytes_cuda117.so

>

> Note that we are using the transformers and peft packages from the source directory,

> not the installed package. 4bit bitsandbytes quantization was only working with the

> main branch of transformers and peft. Once transformers version 4.31.0 and peft version 0.4.0 are

> published to pypi we will use the published version.

## Available Models

| Model | Size | Tasks | Colab |

| ----- | ---- | ----- | ----- |

| [llm-toys/RedPajama-INCITE-Base-3B-v1-paraphrase-tone](https://huggingface.co/llm-toys/RedPajama-INCITE-Base-3B-v1-paraphrase-tone) | 3B | Paraphrasing, Tone change | [Notebook](https://colab.research.google.com/drive/1MSl8IDLjs3rgEv8cPHbJLR8GHh2ucT3_) |

| [llm-toys/RedPajama-INCITE-Base-3B-v1-dialogue-summary-topic](https://huggingface.co/llm-toys/RedPajama-INCITE-Base-3B-v1-dialogue-summary-topic) | 3B | Dialogue Summary and Topic generation | [Notebook](https://colab.research.google.com/drive/1MSl8IDLjs3rgEv8cPHbJLR8GHh2ucT3_) |

| [llm-toys/falcon-7b-paraphrase-tone-dialogue-summary-topic](https://huggingface.co/llm-toys/falcon-7b-paraphrase-tone-dialogue-summary-topic) | 7B | Paraphrasing, Tone change, Dialogue Summary and Topic generation | [Notebook](https://colab.research.google.com/drive/1hhANNzQkxhrPIIrxtvf0WT_Ste8KrFjh#scrollTo=d6-OJJq_q5Qr) |

## Usage

### Task specific 3B models

#### Paraphrasing

```python

from llm_toys.tasks import Paraphraser

paraphraser = Paraphraser()

paraphraser.paraphrase("Hey, can yuo hepl me cancel my last order?")

# "Could you kindly assist me in canceling my previous order?"

```

#### Tone change

```python

paraphraser.paraphrase("Hey, can yuo hepl me cancel my last order?", tone="casual")

# "Hey, could you help me cancel my order?"

paraphraser.paraphrase("Hey, can yuo hepl me cancel my last order?", tone="professional")

# "I would appreciate guidance on canceling my previous order."

paraphraser.paraphrase("Hey, can yuo hepl me cancel my last order?", tone="witty")

# "Hey, I need your help with my last order. Can you wave your magic wand and make it disappear?"

```

#### Dialogue Summary and Topic generation

```python

from llm_toys.tasks import SummaryAndTopicGenerator

summary_topic_generator = SummaryAndTopicGenerator()

summary_topic_generator.generate_summary_and_topic(

"""

#Person1#: I'm so excited for the premiere of the latest Studio Ghibli movie!

#Person2#: What's got you so hyped?

#Person1#: Studio Ghibli movies are pure magic! The animation, storytelling, everything is incredible.

#Person2#: Which movie is it?

#Person1#: It's called "Whisper of the Wind." It's about a girl on a magical journey to save her village.

#Person2#: Sounds amazing! I'm in for the premiere.

#Person1#: Great! We're in for a visual masterpiece and a heartfelt story.

#Person2#: Can't wait to be transported to their world.

#Person1#: It'll be an unforgettable experience, for sure!

""".strip()

)

# {"summary": "#Person1# is excited for the premiere of the latest Studio Ghibli movie.

# #Person1# thinks the animation, storytelling, and heartfelt story will be unforgettable.

# #Person2# is also excited for the premiere.",

# "topic": "Studio ghibli movie"}

```
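The example above passes the dialogue as one string, with each turn on its own line and prefixed by `#PersonN#:`. If your conversations are stored as structured data, a small helper can build that layout. This is a minimal sketch: `format_dialogue` is a hypothetical name for illustration and is not part of `llm_toys`.

```python
def format_dialogue(turns):
    """Join (speaker_number, utterance) pairs into '#PersonN#: text' lines.

    Hypothetical helper for illustration; it is not part of llm_toys.
    """
    lines = [f"#Person{speaker}#: {text.strip()}" for speaker, text in turns]
    return "\n".join(lines)


dialogue = format_dialogue([
    (1, "I'm so excited for the premiere!"),
    (2, "What's got you so hyped?"),
])
print(dialogue)
```

The resulting string can then be passed to `generate_summary_and_topic` in the same way as the literal string above.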

### General 7B model

```python

from llm_toys.tasks import GeneralTaskAssitant

from llm_toys.config import TaskType

gta = GeneralTaskAssitant()

gta.complete(TaskType.PARAPHRASE_TONE, "Hey, can yuo hepl me cancel my last order?")

# "Could you assist me in canceling my previous order?"

gta.complete(TaskType.PARAPHRASE_TONE, "Hey, can yuo hepl me cancel my last order?", tone="casual")

# "Hey, can you help me cancel my last order?"

gta.complete(TaskType.PARAPHRASE_TONE, "Hey, can yuo hepl me cancel my last order?", tone="professional")

# "I would appreciate if you could assist me in canceling my previous order."

gta.complete(TaskType.PARAPHRASE_TONE, "Hey, can yuo hepl me cancel my last order?", tone="witty")

# "Oops! Looks like I got a little carried away with my shopping spree. Can you help me cancel my last order?"

chat = """

#Person1#: I'm so excited for the premiere of the latest Studio Ghibli movie!

#Person2#: What's got you so hyped?

#Person1#: Studio Ghibli movies are pure magic! The animation, storytelling, everything is incredible.

#Person2#: Which movie is it?

#Person1#: It's called "Whisper of the Wind." It's about a girl on a magical journey to save her village.

#Person2#: Sounds amazing! I'm in for the premiere.

#Person1#: Great! We're in for a visual masterpiece and a heartfelt story.

#Person2#: Can't wait to be transported to their world.

#Person1#: It'll be an unforgettable experience, for sure!

""".strip()

gta.complete(TaskType.DIALOGUE_SUMMARY_TOPIC, chat)

# {"summary": "#Person1# tells #Person2# about the upcoming Studio Ghibli movie.

# #Person1# thinks it's magical and #Person2#'s excited to watch it.",

# "topic": "Movie premiere"}

```

## Training

### Data

- [Paraphrasing and Tone change](data/paraphrase_tone.json): Contains passages and their paraphrased versions, as well as the same passages in different tones (casual, professional and witty). Used to train models to rephrase a passage and change its tone. The data was generated using gpt-35-turbo. A small sample of training passages was also taken from the Quora questions and squad_2 datasets.

- [Dialogue Summary and Topic generation](data/dialogue_summary_topic.json): Contains dialogues with their summary and topic. The training data is ~1k records from the training split of the [Dialogsum dataset](https://github.com/cylnlp/dialogsum). It also contains ~20 samples from the dev split. Data points with longer summaries and topics were given priority in the sampling. Note that some (~30) topics were edited manually in the final training data, as the originally labeled topic was just a single word and not descriptive enough.

### Sample training script

To see all the available options:

```bash

python llm_toys/train.py --help

```

To train a paraphrasing and tone change model:

```bash

python llm_toys/train.py \

--task_type paraphrase_tone \

--model_name meta-llama/Llama-2-7b \

--max_length 128 \

--batch_size 8 \

--gradient_accumulation_steps 1 \

--learning_rate 1e-4 \

--num_train_epochs 3 \

--eval_ratio 0.05

```

## Evaluation

### Paraphrasing and Tone change

WIP

### Dialogue Summary and Topic generation

Evaluation is done on 500 records from the [Dialogsum test](https://github.com/cylnlp/dialogsum/tree/main/DialogSum_Data)

split.

```python

# llm-toys/RedPajama-INCITE-Base-3B-v1-dialogue-summary-topic

{"rouge1": 0.453, "rouge2": 0.197, "rougeL": 0.365, "topic_similarity": 0.888}

# llm-toys/falcon-7b-paraphrase-tone-dialogue-summary-topic

{'rouge1': 0.448, 'rouge2': 0.195, 'rougeL': 0.359, 'topic_similarity': 0.886}

```
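For readers unfamiliar with the metric, the `rouge1` figure measures unigram overlap between a generated summary and its reference. The sketch below shows the idea only. It assumes lowercase whitespace tokenization, which is not necessarily what the evaluation above used, so its numbers will not match a standard ROUGE package exactly.

```python
from collections import Counter


def rouge1_f1(reference, candidate):
    """Unigram-overlap F1 between a reference and a candidate summary.

    Assumption: tokens are lowercase whitespace-separated words.
    """
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    # Clipped overlap: each word counts at most as often as it appears in both.
    overlap = sum((ref_counts & cand_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


print(rouge1_f1("the cat sat on the mat", "the cat"))
```

`rouge2` applies the same calculation to bigrams, and `rougeL` uses the longest common subsequence instead of n-gram counts.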

## Roadmap

- [ ] Add tests.

- [ ] Ability to switch the LoRAs (for task-wise models) without re-initializing the backbone model and tokenizer.

- [ ] Retrieval augmented QA.

- [ ] Explore the generalizability of 3B model across more tasks.

- [ ] Explore even smaller models.

- [ ] Evaluation strategy for tasks where we don't have a test/eval dataset handy.

- [ ] Data collection strategy and finetuning a model for OpenAI-like "function calling".

| 71 | 3 |

Abdulhaseebimran/CodeHelp_MERN_YT | https://github.com/Abdulhaseebimran/CodeHelp_MERN_YT | In this repository, I am working on my assignments, projects, and class tasks to practice and improve my web development skills. | # CodeHelp_MERN_YT

In this repository, I am working on my assignments, projects, and class tasks to practice and improve my web development skills.

| 10 | 0 |

ClaudiaRojasSoto/my-bookstore | https://github.com/ClaudiaRojasSoto/my-bookstore | Discover a user-friendly website built with React.js and Redux, designed to store and manage your beloved book collection. Effortlessly add and remove books with a seamless experience. Dive into a world of literary wonders! | <a name="readme-top"></a>

<div align="center">

<br/>

<h1><b>my-bookstore</b></h1>

</div>

# 📗 Table of Contents

- [📗 Table of Contents](#-table-of-contents)

- [📖 my-bookstore ](#-my-bookstore-)

- [🛠 Built With ](#-built-with-)

- [Tech Stack ](#tech-stack-)

- [Key Features ](#key-features-)

- [🚀 Live Demo ](#-live-demo-)

- [💻 Getting Started ](#-getting-started-)

- [Project Structure](#project-structure)

- [Setup](#setup)

- [Install](#install)

- [Usage](#usage)

- [Run tests](#run-tests)

- [👥 Authors ](#-authors-)

- [🔭 Future Features ](#-future-features-)

- [🤝 Contributing ](#-contributing-)

- [⭐️ Show your support ](#️-show-your-support-)

- [🙏 Acknowledgments ](#-acknowledgments-)

- [📝 License ](#-license-)

# 📖 my-bookstore <a name="about-project"></a>

> This Bookstore Website is a user-friendly platform built with React.js and Redux, aimed at helping book enthusiasts store and manage their favorite books. Whether you are an avid reader or a collector, this website provides a seamless experience for organizing and accessing your beloved book collection.

## 🛠 Built With <a name="built-with"></a>

### Tech Stack <a name="tech-stack"></a>

<details>

<summary>React</summary>

<ul>

<li>This project uses <a href="https://react.dev/">React</a></li>

</ul>

</details>

<details>

<summary>HTML</summary>

<ul>

<li>This project uses <a href="https://github.com/microverseinc/curriculum-html-css/blob/main/html5.md">HTML.</a></li>

</ul>

</details>

<details>

<summary>CSS</summary>

<ul>

<li><a href="https://github.com/microverseinc/curriculum-html-css/blob/main/html5.md">CSS</a> is used to style the whole page.</li>

</ul>

</details>

<details>

<summary>Linters</summary>

<ul>

<li><a href="https://github.com/microverseinc/linters-config">Linters</a> are tools that help us check for and fix errors in the code</li>

This project uses the following linters:

<ul>

<li>CSS</li>

<li>JavaScript</li>

</ul>

</ul>

</details>

### Key Features <a name="key-features"></a>

- **React configuration**

- **HTML Generation**

- **Code Quality**

- **Modular Structure**

- **Development Server**

- **JavaScript Functionality**

- **Gitflow**

- **API integration**

- **CSS Styling**

<p align="right">(<a href="#readme-top">back to top</a>)</p>

## 🚀 Live Demo <a name="live-demo"></a>

> You can see a Demo [here](https://bookstore-flq3.onrender.com/).

<p align="right">(<a href="#readme-top">back to top</a>)</p>

## 💻 Getting Started <a name="getting-started"></a>

> To get a local copy up and running, follow these steps.

> This project requires Node.js and npm installed on your machine.

- Node.js

- npm

> - Clone this repository to your local machine using:

> `git clone https://github.com/ClaudiaRojasSoto/my-bookstore.git`

> - Navigate to the project folder:

> `cd my-bookstore`

> - Install the project dependencies:

> `npm install`

> To start the development server, run the following command:

> `npm start`

### Project Structure

> The project follows the following folder and file structure:

- /src: Contains the source files of the application.

- /src/index.js: Main entry point of the JavaScript application.

- /src/App.js: Top-level component of the application.

- /src/components: Directory for React components.

- /public: Contains the public files and assets of the application.

- /public/index.html: Base HTML file of the application.

- /build: Contains the generated production files.

### Setup

> Clone this repository to your desired folder: https://github.com/ClaudiaRojasSoto/my-bookstore.git

### Install

> Install the project dependencies with `npm install`. Stylelint and ESLint are also needed for linting.

### Usage

> To run the project, execute `npm start` and open the app in a web browser.

### Run tests

> To try the project out manually, run `npm start`; you only need a web browser to check it.

## 👥 Authors <a name="authors"></a>

👤 **Claudia Rojas**

- GitHub: [@ClaudiaRojasSoto](https://github.com/ClaudiaRojasSoto)

- LinkedIn: [LinkedIn](https://www.linkedin.com/in/claudia-soto-260504208/)

<p align="right">(<a href="#readme-top">back to top</a>)</p>

## 🔭 Future Features <a name="future-features"></a>

- **Testing**

- **Deployment**

<p align="right">(<a href="#readme-top">back to top</a>)</p>

## 🤝 Contributing <a name="contributing"></a>

> Contributions, issues, and feature requests are welcome!

> Feel free to check the [issues page](https://github.com/ClaudiaRojasSoto/my-bookstore/issues).

<p align="right">(<a href="#readme-top">back to top</a>)</p>

## ⭐️ Show your support <a name="support"></a>

> If you like this project, show your support by following this account.

<p align="right">(<a href="#readme-top">back to top</a>)</p>

<!-- ACKNOWLEDGEMENTS -->

## 🙏 Acknowledgments <a name="acknowledgements"></a>

> - Microverse for providing the opportunity to learn Git and GitHub in a collaborative environment.

> - GitHub Docs for providing a wealth of information on Git and GitHub.

<p align="right">(<a href="#readme-top">back to top</a>)</p>

<!-- LICENSE -->

## 📝 License <a name="license"></a>

> This project is [MIT](MIT.md).

<p align="right">(<a href="#readme-top">back to top</a>)</p>

| 11 | 0 |

Jager-yoo/Spiderversify | https://github.com/Jager-yoo/Spiderversify | 🕸️ Brings a Spider-Verse like glitching effect to your SwiftUI views! | # 🕸️ Spiderversify your SwiftUI views!

Inspired by the distinctive visual style of Sony Pictures' animated "Spider-Man: Spider-Verse" series,

<br> `Spiderversify` brings a Spider-Verse like glitching effect to your SwiftUI views.

### ✨ More charming glitching effects are planned for release. Stay tuned!

<br>

<p align="leading">

<img src="https://github.com/Jager-yoo/Spiderversify/assets/71127966/2999354c-a30f-42ef-979f-83977819dbed" width="250"/>

<img src="https://github.com/Jager-yoo/Spiderversify/assets/71127966/f37a331a-1894-4853-a732-ca8a2e6cf107" width="250"/>

<img src="https://github.com/Jager-yoo/Spiderversify/assets/71127966/e006dc74-96d0-4a44-9f38-10d9b4887141" width="250"/>

</p>

The Spiderversify library requires iOS 15.0, macOS 12.0, watchOS 8.0, or tvOS 15.0, or later.

<br> Enjoy bringing a bit of the Spider-Verse into your apps!

<br>

## - How to use Spiderversify

To apply `Spiderversify` to your SwiftUI views, you simply add the `.spiderversify` view modifier.

<br> Here is an example:

<br>

```swift

import SwiftUI

import Spiderversify

struct ContentView: View {

@State private var glitching = false

var body: some View {

Text("Spiderversify")

.spiderversify($glitching, duration: 2, glitchInterval: 0.12) // ⬅️ 🕸️

.font(.title)

.onTapGesture {

glitching = true

}

}

}

```

<br>

## - Parameter Details

- `on`: A `Binding<Bool>` that controls whether the glitch effect is active.

- `duration`: The duration of the glitch effect animation.

- `glitchInterval`: The interval at which the glitch effect changes. (default value: 0.12 sec)

Please note that both `duration` and `glitchInterval` are specified in seconds.

<br>

## - Installation

Spiderversify supports [Swift Package Manager](https://www.swift.org/package-manager/).

- Navigate to `File` menu at the top of Xcode -> Select `Add Packages...`.

- Enter `https://github.com/Jager-yoo/Spiderversify.git` in the Package URL field to install it.

<br>

## - License

This library is released under the MIT license. See [LICENSE](https://github.com/Jager-yoo/Spiderversify/blob/main/LICENSE) for details.

| 12 | 0 |

yarspirin/LottieSwiftUI | https://github.com/yarspirin/LottieSwiftUI | null | # LottieSwiftUI

A Swift package that provides a SwiftUI interface to the popular [Lottie](https://airbnb.design/lottie/) animation library. The LottieSwiftUI package allows you to easily add and control Lottie animations in your SwiftUI project. It offers a clean and easy-to-use API with customizable options like animation speed and loop mode.

<img src="https://raw.githubusercontent.com/mountain-viewer/LottieSwiftUI/master/Resources/sample.gif" height="300">

## Features

- Swift/SwiftUI native integration.

- Customize animation speed.

- Choose loop mode: play once, loop, or auto reverse.

- Control of animation playback (play, pause, stop).

- Clean, organized, and thoroughly documented code.

- Efficient and performance-optimized design.

## Installation

This package uses Swift Package Manager, which is integrated with Xcode. Here's how you can add **LottieSwiftUI** to your project:

1. In Xcode, select "File" > "Add Packages..." (on older Xcode versions: "File" > "Swift Packages" > "Add Package Dependency")

2. Enter the URL for this repository (https://github.com/yarspirin/LottieSwiftUI)

## Usage

Here's an example of how you can use `LottieView` in your SwiftUI code:

```swift

import SwiftUI

import LottieSwiftUI

struct ContentView: View {

@State private var playbackState: LottieView.PlaybackState = .playing

var body: some View {

LottieView(

name: "london_animation", // Replace with your Lottie animation name

animationSpeed: 1.0,

loopMode: .loop,

playbackState: $playbackState

)

}

}

```

<img src="https://raw.githubusercontent.com/mountain-viewer/LottieSwiftUI/master/Resources/sample.gif" height="300">

Properties:

- `name`: The name of the Lottie animation file (without the file extension). This file should be added to your project's assets.

- `animationSpeed`: The speed of the animation. It should be a CGFloat value, where 1.0 represents the normal speed. Defaults to 1.0.

- `loopMode`: The loop mode for the animation. Defaults to `LottieLoopMode.playOnce`. Other options include `.loop` and `.autoReverse`.

- `playbackState`: The playback state of the animation. It should be a `Binding<PlaybackState>`, allowing the state to be shared between multiple views. This allows for the control of the animation (play, pause, stop) from the parent view.

### Controlling Animation Playback

To control the animation playback, pass a `Binding<PlaybackState>` to `LottieView`. This lets you control the animation's state (play, pause, stop) from its parent view or any other part of your app. For example, you could bind it to a SwiftUI `@State` property, and then modify that state when a button is pressed to control the animation.

## Requirements

- iOS 13.0+

- Xcode 14.0+

- Swift 5.7+

## Contributing

Contributions are welcome! If you have a bug to report, a feature to request, or have the desire to contribute in another way, feel free to file an issue.

## License

LottieSwiftUI is available under the MIT license. See the LICENSE file for more info.

| 85 | 49 |

charlesnathansmith/whatlicense | https://github.com/charlesnathansmith/whatlicense | WinLicense key extraction via Intel PIN | # whatlicense

Full tool chain to extract WinLicense secrets from a protected program and then launch it bypassing all verification steps, utilizing an Intel PIN tool and license file builder.

For a full technical breakdown of everything these tools are doing under the hood, see [tech_details.pdf](tech_details.pdf)

I have no qualms about releasing this because you still need the launcher for the final run, so this can't be used to make redistributable cracked binaries. This is helpful for older programs where the manufacturer is no longer around to ask for a license file from, as the verification mechanism seems to have remained unchanged for at least a decade. It's also an academic curiosity, as the protection scheme is extraordinarily convoluted, involving multiple layers of decryption that are buried in virtualization.

I've tried to test this on programs protected with as many different versions of WL as possible, using the default virtualization engine and license scheme (i.e. programs that use *regkey.dat* files, not the *SmartLicense* or registry key schemes), as these are the defaults you are going to run into the vast majority of the time. It's difficult to find older versions of their protector software, so if you're running into problems, or find demos of some of their older versions, or can refer me to more products that employ it commercially, let me know so I can generalize this as much as possible.

Neither I nor this project are in any way endorsed by or affiliated with Oreans WinLicense or Intel PIN. No source code was ever seen and all tools were built solely through reverse engineering, so there is no copyrighted content contained anywhere in it.

The license file building tool **wl-lic** includes [libtommath](https://github.com/libtom/libtommath), which was declared restriction-free (Unlicense) at the time of publication, and I place no further restrictions on your ability to re-use, redistribute, modify, etc. any part of this project. I don't make any warranties about any of it though, so I wouldn't drop any of it into anything mission-critical without thorough testing.

# building

Add the **whatlicense** root directory to your Intel PIN tools directory (eg. C:\pin\source\tools\whatlicense), open **whatlicense.sln** with Visual Studio and choose the build environment that corresponds to the version of Pin you are using ("Pin 3.26" for versions up to 3.26 or "Pin 3.27+" for versions 3.27 and newer.)

Only x86 is currently supported.

A **/bin** directory will be created that contains the built executables.

If you run into issues with it, make sure you are building it from within your PIN tools directory, and if you really have trouble, **wl-lic** is just a *C++ Console* project, and you can build **wl-extract** by just gutting a copy of **MyPinTool** that comes with PIN and replacing its code with that of **wl-extract**.

# usage

The overall process is to first build a dummy license file, which is internally consistent (correct layout and checksums), but is built with arbitrary keys that aren't valid for the protected program. This is accomplished by running **wl-lic**:

```

wl-lic -d regkey.dat -r regkey.rsa

```

This will produce *regkey.dat*, which is our dummy license file, and *regkey.rsa*, which contains information about the associated RSA public keys needed to decrypt and verify it.

Next **wl-extract** is launched via PIN, supplying the files we just generated and the path to the protected program. You can use **-o** to specify the log file, and if you already know the hardware ID (**HWID**) that WL generates for you, you can use the **-s** option to avoid searching for it in error messages, which is a lot faster since it can kill the program as soon as it extracts everything else. Your **HWID** will look similar to *0123-4567-89AB-CDEF-FEDC-BA98-7654-3210*. It will usually be given to you during nag messages while trying to run the program without a license, or wherever you normally go to try to register it, since the developer would need it to build a license for you.

```

C:\pin\pin.exe -t wl-extract.dll -d regkey.dat -r regkey.rsa -o logfile -s -- C:\[path]\protected.exe

```

This will launch the protected program and start working through the verification steps, bypassing them and extracting and calculating the correct values. You can monitor the logfile during this process for reasonably verbose progress updates. When it is finished, it should generate a **main_hash** string near the end of the log. It will be a long alpha-numeric string starting with *aaaa...*

We can now build a new license using your extracted **main_hash** and **HWID**, which will produce a license file built with all of the correct keys except for the RSA keys, which there is currently no way to overcome:

```

wl-lic -h HWID -m main_hash -d regkey2.dat -r regkey2.rsa

```

Finally, this license file can be used to launch the original program using the **-l** (lowercase L) option:

```

C:\pin\pin.exe -t wl-extract.dll -d regkey2.dat -r regkey2.rsa -o logfile -l -- C:\[path]\protected.exe

```

And it should open right up at that point. The launcher bypasses RSA, but skips the rest of the previous extraction steps then lets the program run normally from that point forward. Since the license is otherwise built using all of the correct keys now, verification should pass and the program should launch.

# known issues

I haven't figured out how to fully detach the launcher after the RSA bypass just yet. PIN_Detach() launches an external process that connects as a debugger in order to fully extricate the PIN framework and trips the anti-debug protections. It's not as simple as just implementing ScyllaHide's techniques, because the PEB needs to be fixed after PIN's external process attaches, after we lose instrumentation ability. We'll have to write some permanent patches that can catch and deal with it.

All instrumentation is removed after the RSA bypass, which lets the program run reasonably fast, but it's still running in JIT mode instead of natively, which isn't ideal.

A better solution would be to permanently patch the RSA public keys in the executable, so the launcher isn't required at all, but that'll require an equally monumental undertaking to this one, with the need to understand all of the unpacking and integrity check routines.

Pin tools usually do not trip WinLicense anti-debug alarms, but it seems to be a bit hit and miss after broader testing. I am currently working to add **ScyllaHide** and **Themidie** injection which should mitigate this issue during extraction, though more extensive work is likely necessary to make debugger attachment to the running process possible.

While the entire build process has been generalized as much as possible, there are slight differences between programs protected with different versions and even between programs protected by commercial and demo versions. There is no official list of programs protected with it and older versions of even the demo protection software are difficult to track down, so it is difficult to thoroughly test this and it may not work on all protected programs. If it's not working on something, refer me to it so I can see what's going on.

Some of the hash and key values that the license files implement never seemed to be verified at all during testing, and valid licenses could be built with completely arbitrary values for them even against commercially available products. They may have put them in and then just never bothered to implement verifying them. There's a haphazardness to the license format that would lead me to not at all be surprised by that, but it's disconcerting since there could be versions out there that do verify these and I have no way to know how they work yet.

As before, don't pirate things. You're going to have to use the launcher every time you run it and then it'll have to run in JIT mode the whole time. It should get you in and give you the chance to properly evaluate the full version of something, but if you want the best experience, you're going to have to pay for it.

| 55 | 4 |

ugjka/blast | https://github.com/ugjka/blast | blast your linux audio to DLNA receivers | # BLAST

## Stream your Linux audio to DLNA receivers

You need `pactl`, `parec` and `lame` executables/dependencies on your system to run Blast.

If you have all that then you can launch `blast` and it looks like this when you run it:

```

[user@user blast]$ ./blast

----------

DLNA receivers

0: Kitchen

1: Phone

2: Bedroom

3: Livingroom TV

----------

Select the DLNA device:

[1]

----------

Audio sources

0: alsa_output.pci-0000_00_1b.0.analog-stereo.monitor

1: alsa_input.pci-0000_00_1b.0.analog-stereo

2: bluez_output.D8_AA_59_95_96_B7.1.monitor

3: blast.monitor

----------

Select the audio source:

[2]

----------

Your LAN ip addresses

0: 192.168.1.14

1: 192.168.122.1

2: 2a04:ec00:b9ab:555:3c50:e6e8:8ea:211f

3: 2a04:ec00:b9ab:555:806d:800b:1138:8b1b

4: fe80::f4c2:c827:a865:35e5

----------

Select the lan IP address for the stream:

[0]

----------

2023/07/08 23:53:07 starting the stream on port 9000 (configure your firewall if necessary)

2023/07/10 23:53:07 stream URI: http://192.168.1.14:9000/stream

2023/07/08 23:53:07 setting av1transport URI and playing

```

## Building

You need the `go` and `go-tools` toolchain, as well as `git`,

then execute:

```

git clone https://github.com/ugjka/blast

cd blast

go build

```

now you can run blast with:

```

[user@user blast]$ ./blast

```

## Bins

Prebuilt Linux binaries are available on the releases [page](https://github.com/ugjka/blast/releases)

## Why not use pulseaudio-dlna?

This is for pipewire-pulse users.

## Caveats

* You need to allow port 9000 from LAN for the DLNA receiver to be able to access the HTTP stream

* blast monitor sink may not be visible in the pulse control applet unless you enable virtual streams

## License

```

MIT+NoAI License

Copyright (c) 2023 ugjka <[email protected]>

Permission is hereby granted, free of charge, to any person obtaining a copy

of this software and associated documentation files (the "Software"), to deal

in the Software without restriction, including without limitation the rights

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

copies of the Software, and to permit persons to whom the Software is

furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all

copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

SOFTWARE.

This code may not be used to train artificial intelligence computer models

or retrieved by artificial intelligence software or hardware.

``` | 13 | 0 |

Nainish-Rai/strings-web | https://github.com/Nainish-Rai/strings-web | null |

# String - Threads Web Frontend

String is a modern and innovative open-source frontend for Threads, built with Next.js, Tailwind CSS, and the latest web development technologies.

[Live Preview](https://strings-web.vercel.app)

## Screenshots

## Installation

1. Clone the repository:

```bash

git clone https://github.com/Nainish-Rai/strings-web.git

```

2. Navigate to the project directory:

```bash

cd strings-web

```

3. Install the dependencies:

```bash

npm install

```

4. Start the development server:

```bash

npm run dev

```

5. Open your browser and visit `http://localhost:3000` to access String.

## Contributing

Contributions are welcome! If you'd like to contribute to String, please follow these steps:

1. Fork the repository.

2. Create a new branch: `git checkout -b feature/your-feature-name`.

3. Make your changes and commit them: `git commit -m 'Add your feature'`.

4. Push to the branch: `git push origin feature/your-feature-name`.

5. Submit a pull request.

## License

This project is licensed under the [MIT License](LICENSE).

## Acknowledgements

- [Next.js](https://nextjs.org/)

- [Tailwind CSS](https://tailwindcss.com/)

- [React](https://reactjs.org/)


## Contact

If you have any questions or feedback, feel free to reach out:

- Email: [email protected]

- LinkedIn: [Nainish-rai](https://www.linkedin.com/in/nainish-rai/)

- Twitter: [@nain1sh](https://twitter.com/nain1sh)

| 10 | 2 |

verytinydever/zichainTestTask | https://github.com/verytinydever/zichainTestTask | null | # zichainTestTask

## Running the app

```bash

# install packages

$ npm i

# run ESLint

$ npm run eslint

# run API tests

$ npm run apitests

# run application

$ npm start

# send a POST request to http://0.0.0.0:3000/link/add with usersLink in the request body

# to add a long link and get a short one back

# then open http://0.0.0.0:3000/* (the returned short link) to be redirected to the long link

```

## Examples

```bash

ed@ed-Extensa-2540:~$ curl -v -X POST http://0.0.0.0:3000/link/add/ -d 'usersLink=https://google.com'

Note: Unnecessary use of -X or --request, POST is already inferred.

* Trying 0.0.0.0...

* TCP_NODELAY set

* Connected to 0.0.0.0 (127.0.0.1) port 3000 (#0)

> POST /link/add/ HTTP/1.1

> Host: 0.0.0.0:3000

> User-Agent: curl/7.58.0

> Accept: */*

> Content-Length: 28

> Content-Type: application/x-www-form-urlencoded

>

* upload completely sent off: 28 out of 28 bytes

< HTTP/1.1 200 OK

< X-Powered-By: Express

< Content-Type: application/json; charset=utf-8

< Content-Length: 40

< ETag: W/"28-X/qKmoA514kT4gTtb2bCEuaQWxI"

< Date: Fri, 23 Aug 2019 08:19:53 GMT

< Connection: keep-alive

<

* Connection #0 to host 0.0.0.0 left intact

{"link":"http://0.0.0.0:3000/z6Ak11T1u"}

ed@ed-Extensa-2540:~$ curl -v -X GET http://0.0.0.0:3000/z6Ak11T1u

Note: Unnecessary use of -X or --request, GET is already inferred.

* Trying 0.0.0.0...

* TCP_NODELAY set

* Connected to 0.0.0.0 (127.0.0.1) port 3000 (#0)

> GET /z6Ak11T1u HTTP/1.1

> Host: 0.0.0.0:3000

> User-Agent: curl/7.58.0

> Accept: */*

>

< HTTP/1.1 302 Found

< X-Powered-By: Express

< Location: https://google.com

< Vary: Accept

< Content-Type: text/plain; charset=utf-8

< Content-Length: 40

< Date: Fri, 23 Aug 2019 08:20:33 GMT

< Connection: keep-alive

<

* Connection #0 to host 0.0.0.0 left intact

Found. Redirecting to https://google.com

```
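The same shortening request can be issued from a script instead of `curl`. The following is a minimal sketch using only the Python standard library. The endpoint path, the `usersLink` form field and the `link` key in the JSON response are taken from the transcript above, while the function names `build_add_request` and `shorten` are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

BASE_URL = "http://0.0.0.0:3000"  # address used in the examples above


def build_add_request(long_link, base_url=BASE_URL):
    """Build the form-encoded POST request that registers a long link."""
    body = urlencode({"usersLink": long_link}).encode("ascii")
    return Request(f"{base_url}/link/add/", data=body, method="POST")


def shorten(long_link, base_url=BASE_URL):
    """Send the request and return the short link from the JSON response.

    Requires the application to be running (npm start).
    """
    with urlopen(build_add_request(long_link, base_url)) as response:
        return json.load(response)["link"]
```

With the server running, `shorten("https://google.com")` should return a short link of the same form as in the response above. Opening that link then triggers the `302` redirect to the original URL.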

| 14 | 0 |

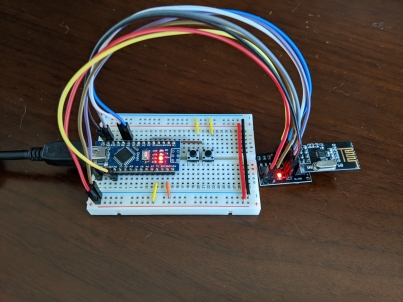

decrazyo/unifying | https://github.com/decrazyo/unifying | FOSS re-implementation of the Logitech Unifying protocol |

# Logitech Unifying Protocol Implementation

This project is an attempt to re-implement the proprietary Logitech Unifying protocol as a free and open C library.

The library is intended to be Arduino compatible while remaining compiler and hardware agnostic.

The goal of this project is to enable people to create custom keyboards and mice that are compatible with Logitech Unifying receivers.

## Example

The provided Arduino example is dependent on the RF24 library.

https://github.com/nRF24/RF24

## TODO

- [ ] Add proper HID++ response payloads

- [ ] Add more examples

- [ ] General code cleanup

## Done

- [x] Timing-critical packet transmission

- [x] Pairing with a receiver

- [x] HID++ error response payloads

- [x] Encrypted keystroke payloads

- [x] Add documentation

- [x] Add mouse payloads

- [x] Add multimedia payloads

- [x] Add wake up payloads

## See also

[Hacking Logitech Unifying DC612 talk](https://www.youtube.com/watch?v=10lE96BBOF8)

[nRF24 pseudo-promiscuous mode](http://travisgoodspeed.blogspot.com/2011/02/promiscuity-is-nrf24l01s-duty.html)

[KeySweeper](https://github.com/samyk/keysweeper)

[MouseJack](https://github.com/BastilleResearch/mousejack)

[KeyJack](https://github.com/BastilleResearch/keyjack)

[KeySniffer](https://github.com/BastilleResearch/keysniffer)

[Of Mice And Keyboards](https://www.icaria.de/posts/2016/11/of-mice-and-keyboards/)

[Logitech HID++ Specification](https://drive.google.com/folderview?id=0BxbRzx7vEV7eWmgwazJ3NUFfQ28)

[Official Logitech Firmware](https://github.com/Logitech/fw_updates)

| 10 | 0 |

taikoxyz/grants | https://github.com/taikoxyz/grants | Community grants program | # Taiko Grants Program

View the full grants program description on our mirror blog here: https://taiko.mirror.xyz/G7dmuoR42S4D55vT8bs_lAxPZP63kAgRu2IfqkJdf6U.

We are super excited to announce the launch of Taiko’s first community grants program! 🌍🏗️

The aim of this program is to discover and support innovative community members who build up and build on the Taiko ecosystem, with the financial incentives and developer resources needed to bring their visions to life. Despite still being in our testnet phase, we think there is immense value in supporting ambitious builders from Day 0 and allowing them to participate in the network’s potential success. Even more importantly, we see this as a great opportunity to learn more about effective grant frameworks and outcomes, so that we can iterate and improve our future grants programs together with you.

In the post below, we will cover some of the most relevant points of the program, and also provide you with some guidance on the areas that we are particularly excited about.

As with everything we do, we hugely value the feedback of our community, so please do let us know your thoughts (or any questions) in our Discord or community forum.

## Grant Program Format

All awarded grants will be in the form of future Taiko tokens, with up to 0.2% of the total token supply allocated to just the inaugural program 👀. These tokens will originate from the treasury, which will eventually be owned and managed by the Taiko DAO. Until the DAO is established, the team and/or foundation will oversee the treasury. While the total allocation of tokens to future grant programs is still to be determined, we expect it to be significantly higher than what is available in this round.

### Distribution and Vesting

Any awarded grants will remain valid for up to 6 months and will come with milestones (at 2-month intervals) which determine the vesting schedule of the grant tokens. Should a project exceed the 6-month timeframe, it forfeits any remaining grant funds, and the remaining tokens will be reallocated to other grant initiatives. However, projects that applied for or received any grants may still apply and qualify for future Taiko grant opportunities. In fact, we actively encourage past grantees to continue to apply to future programs, i.e., there is no downside to applying as early as possible.

All vested tokens will also observe a 6-month lock-up period, which commences at the Token Generation Event (TGE) or upon milestone fulfillment — whichever comes later. In addition, we will ask our grantees to demonstrate their deliverables primarily in open-source code and to deploy usable prototypes on Taiko’s testnets.
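The lock-up rule above comes down to a small date calculation: the 6-month period starts at the later of the TGE and the milestone date. The sketch below is only an illustration of that arithmetic. The function names and all dates are hypothetical (no TGE date is given in this document), and month arithmetic is assumed to clamp to the last day of shorter months.

```python
import calendar
from datetime import date

LOCKUP_MONTHS = 6  # lock-up length stated in the program description


def add_months(d: date, months: int) -> date:
    """Shift a date forward by whole calendar months, clamping the day
    to the last valid day of the target month (assumption for this sketch)."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def unlock_date(tge: date, milestone_met: date) -> date:
    """The lock-up starts at TGE or milestone fulfillment, whichever is later."""
    start = max(tge, milestone_met)
    return add_months(start, LOCKUP_MONTHS)


if __name__ == "__main__":
    # Hypothetical dates, for illustration only.
    print(unlock_date(tge=date(2024, 1, 15), milestone_met=date(2023, 10, 1)))
    print(unlock_date(tge=date(2023, 6, 1), milestone_met=date(2023, 8, 31)))
```

In the first call the milestone is met before the TGE, so the lock-up runs from the TGE. In the second the milestone comes later, so the lock-up runs from the milestone date.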

### Project Guidelines

Our project guidelines advocate for transparency and cooperative growth. As such, we require that:

- Unless explicitly stated otherwise, all smart contracts and frontend code be open-sourced from the project's inception.

- Projects involving dApps must choose Taiko as one of their very first platforms, but they can also deploy and integrate on other L1/L2/L3s. That said, we do look favorably upon Taiko-tailored projects, i.e., those that truly take advantage of Taiko’s strengths and design decisions, such as Ethereum-equivalence and permissionless proposing and proving.

- Taiko Labs or its associated entities receive the right to invest up to $250K USD in any project’s subsequent fundraising round, up to one year after grant acceptance (if applicable).

- Grantees can apply for grants from other projects, provided all other requirements are met.

- Grantees and Taiko must establish a formal legal agreement detailing the expectations and responsibilities of both parties. Before execution of the agreement, discretion for changes in the program rests exclusively with Taiko.

- If you are awarded a grant, you may not disclose this (incl. any details such as the number of vested or received tokens) until we have done so.

Note that eligible projects can be in the early ideation stage, active development stage, or in mature operational stage, looking to make meaningful contributions to Taiko.

### Application Process

Applications should be submitted by filling in the template issue in this GitHub repo.

Please be as detailed as possible when filling out the form and focus on areas such as the background of core team members, technical design and integration with Taiko, and any product roadmaps (if available).

The application window is open from now until August 31st, 2023. The team will continuously review all applications and contact shortlisted projects to ask further questions, set up interviews, or approve their submission. We may reach out to or approve applicants on a rolling basis, and will reach final decisions no later than two weeks after the submission deadline. We will also announce the winning projects via our official public channels.

Please note that while our aim is to streamline the process and minimize stringent requirements, we reserve the right to adjust the criteria or delay decisions should the application volume become overwhelming.

## Categories

While Taiko is a general-purpose ZK rollup and we welcome all kinds of applications, there are a few areas that get us particularly excited and giddy 💗. To help get our builders thinking about creative solutions, we have therefore shared them below.

**1. Zero-Knowledge Proofs (ZKP)**

Why we’re excited: well, it’s really no secret that we love ZK, and hence we are very keen to promote any advancements in this space!

Our current focus lies in the following areas:

- Circuit Optimizations: we are seeking projects that strive to reduce proving times and hardware requirements. This focus area aims to curtail costs and latency, leading to more cost-effective and efficient ZK-EVMs.

- Prover Optimizations: the goal is to explore optimization opportunities within a variety of hardware systems, including CPU, GPU/FPGA/ASIC, and memory. Enhancements in this area have the potential to significantly boost the performance of provers within ZK-EVM systems.

- Next-Generation Proving Systems: currently, the proving system utilizes turbo plonk, but we are eager to explore new proving systems, like Nova. Any project that aids in the transition to these novel systems can help alleviate bottlenecks present in the existing ZK-EVM.

- Circuit Writing Tools Improvements: we are looking for projects that simplify and improve circuit writing. This will directly contribute to the efficiency and readability of the code, thereby enhancing the success of ZK-EVMs.

- Testing Improvements: given the complexity of a ZK-EVM, rigorous testing is necessary to ensure its robust functionality. Therefore, projects that broaden testing capabilities and improve the overall quality assurance process are highly encouraged.

- ZK-Specific Applications: we are interested in how ZK technology can be applied to enhance functionality in areas like bridges, privacy, and identity. Projects that push the boundaries of these ZK-specific applications could foster unprecedented innovation in the field.

**2. Proposer Optimization**

Why we're excited: because Taiko is a permissionless based rollup, any address can build and propose an L2 block directly on L1, without going through an off-chain (Ethereum) proposer selection or block selection process. This means that multiple proposers may be building blocks in parallel, resulting in duplicated effort and wasted gas. One way of solving this is by hooking into Ethereum’s proposer-builder separation (PBS). However, this is only one possible solution, and there are many other aspects that require a well-thought-out approach, like the impact of variable L1 gas costs and prover cost, and L2 transaction price prediction. We look forward to seeing innovative approaches that seek to solve or mitigate this issue.

**3. Alternative Proposer-Prover Tokenomics**

Why we're excited: the intricacies and challenges of L2 tokenomics design have proven to be more engaging and complex than we initially anticipated. We've experimented with two designs across our three testnets. These iterations featured auto-adjustments of fees and rewards per gas or per block. Additionally, we attempted a third design based on batch auctions but subsequently decided not to pursue it. Currently, we are testing a fourth design premised on token staking.

While we recognize that no perfect L2 tokenomics solution exists, we are eager to explore innovative designs brought forth by community developers. We remain open to incorporating these promising concepts into our code, continuously improving and evolving.

We have laid out our design objectives and a set of metrics to evaluate L2 tokenomics for Taiko in this document.

**4. Proof Markets**

Why we're excited: in the latest update to Taiko's tokenomics, provers have the ability to stake Taiko tokens and designate an expected rewardPerGas for proving, thereby securing an exclusive chance to prove a block. Given that such tokenomics are executed via smart contracts on L1, only the top 32 provers are supported.