---
license: mit
language:
- en
metrics:
- accuracy
pipeline_tag: text-generation
---

MathCoder: Seamless Code Integration in LLMs for Enhanced Mathematical Reasoning

Paper: https://arxiv.org/pdf/2310.03731.pdf

Repo: https://github.com/mathllm/MathCoder

Introduction

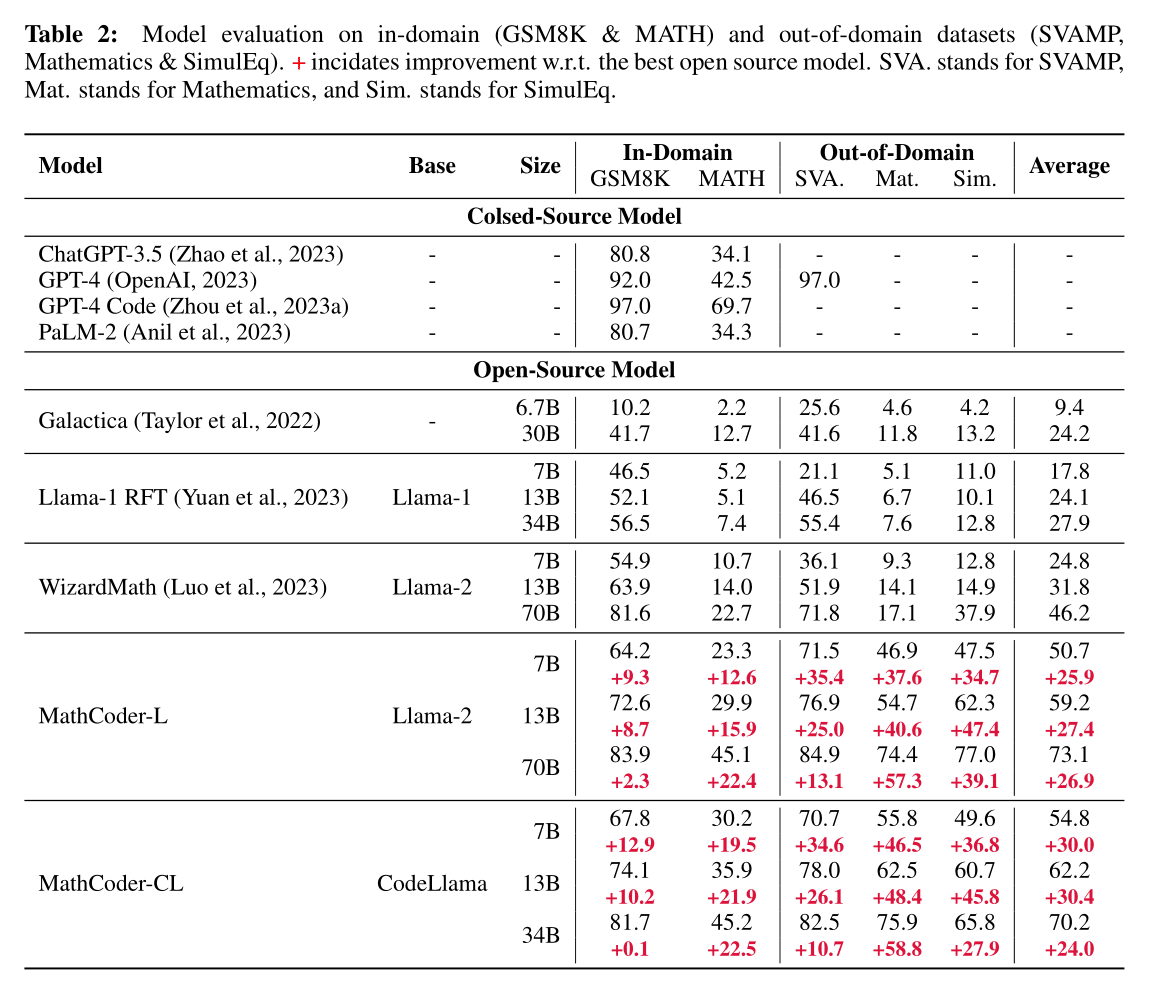

We introduce MathCoder, a series of open-source large language models (LLMs) specifically tailored for general math problem-solving.

| Base Model: Llama-2 | Base Model: Code Llama |
|---|---|
| MathCoder-L-7B | MathCoder-CL-7B |
| MathCoder-L-13B | MathCoder-CL-34B |

Training Data

The models are trained on the MathCodeInstruct dataset.

Training Procedure

The models are fine-tuned on the MathCodeInstruct dataset, using the original Llama-2 and Code Llama models as base models. See our paper and repo for more details.

Evaluation

Usage

You can use the models through Hugging Face's Transformers library. Use the `pipeline` function to create a text-generation pipeline with the model of your choice, then feed in a math problem to get the solution. Check our GitHub repo for details.
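The pipeline workflow described above might look roughly like the following. This is a minimal sketch, not the official usage script: the Hub identifier in `MODEL_ID` is an assumption (check the repo for the exact model names), `load_generator` and `solve` are hypothetical helper names, and the plain-text prompt may differ from the prompt format the repo uses for inference.

```python
# Assumed Hub identifier; verify the exact name in the MathCoder repo.
MODEL_ID = "MathLLM/MathCoder-L-7B"


def load_generator(model_id: str = MODEL_ID):
    """Create a text-generation pipeline (downloads the weights on first use)."""
    # Imported lazily so the helpers below can be used without loading the library.
    from transformers import pipeline

    return pipeline("text-generation", model=model_id)


def solve(problem: str, generator, max_new_tokens: int = 512) -> str:
    """Feed a math problem to the pipeline and return the generated text."""
    outputs = generator(
        problem,
        max_new_tokens=max_new_tokens,  # cap on the length of the generated solution
        do_sample=False,                # greedy decoding for reproducible output
    )
    # A text-generation pipeline returns a list of dicts, one per generated sequence.
    return outputs[0]["generated_text"]
```

To try it, call `solve("What is the sum of the first 10 positive integers?", load_generator())`. Passing the generator in as an argument means the model is loaded once and can be reused across many problems.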

Citation

Please cite the paper if you use our data, model or code.

@misc{wang2023mathcoder,
      title={MathCoder: Seamless Code Integration in LLMs for Enhanced Mathematical Reasoning},
      author={Ke Wang and Houxing Ren and Aojun Zhou and Zimu Lu and Sichun Luo and Weikang Shi and Renrui Zhang and Linqi Song and Mingjie Zhan and Hongsheng Li},
      year={2023},
      eprint={2310.03731},
      archivePrefix={arXiv},
      primaryClass={cs.CL}
}