Backbone Finetuned YOLO Models for Oriented Object Detection

Overview

This repository contains finetuned YOLO models using a specific backbone for two sizes: Nano and Small. These models were trained and evaluated on the DIOR dataset, showcasing their performance in satellite object detection tasks. The finetuning process emphasized optimizing both training time and detection metrics.

These YOLO models were finetuned to perform oriented bounding box (OBB) detection.
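For readers new to oriented boxes, here is a minimal sketch of how a rotated box can be turned into its four corner points. It assumes the box is described by its center, width, height, and a rotation angle in radians. The function name `obb_to_corners` and the sample values are illustrative, and the exact angle convention used by these models is not stated in this README.

```python
import math


def obb_to_corners(cx, cy, w, h, angle_rad):
    """Return the four corners of a rotated box as (x, y) tuples.

    Assumed convention: (cx, cy) is the box center, w and h are the side
    lengths, and angle_rad rotates the box around its center.
    """
    cos_a, sin_a = math.cos(angle_rad), math.sin(angle_rad)
    # Corner offsets of the unrotated box, relative to its center
    offsets = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    # Rotate each offset by the angle, then translate it to the center
    return [
        (cx + dx * cos_a - dy * sin_a, cy + dx * sin_a + dy * cos_a)
        for dx, dy in offsets
    ]


# Illustrative values only: a 40x20 box centered at (100, 50), rotated by 30 degrees
for x, y in obb_to_corners(100.0, 50.0, 40.0, 20.0, math.radians(30)):
    print(f"({x:.1f}, {y:.1f})")
```

With an angle of zero the function returns an ordinary axis-aligned box, which is a quick way to sanity-check the convention against your own data.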

Usage

The models can be used for oriented object detection tasks and are compatible with the Ultralytics YOLO framework. Instructions for loading and running them are given in the "How to Use the Models" section.

Dataset

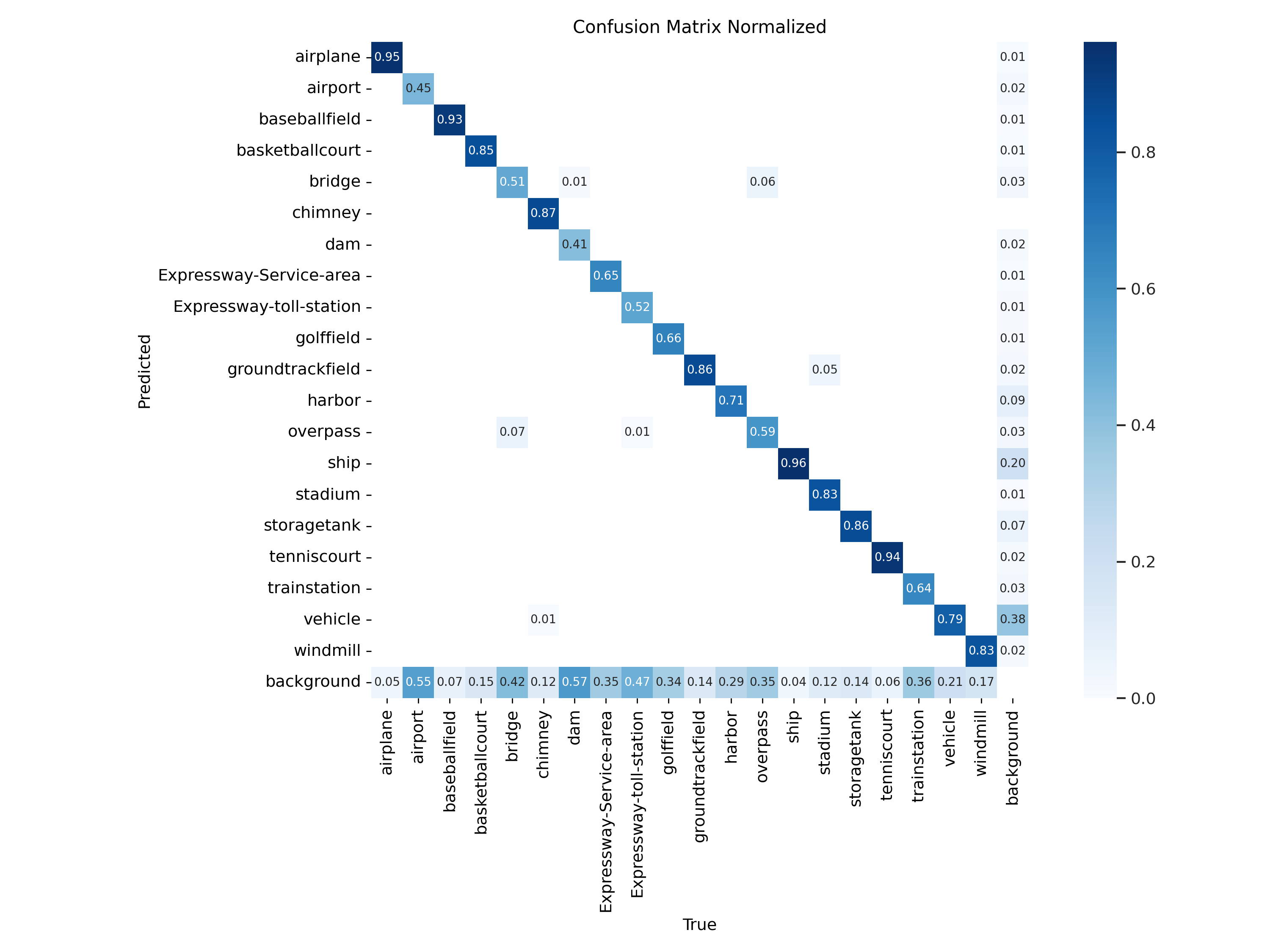

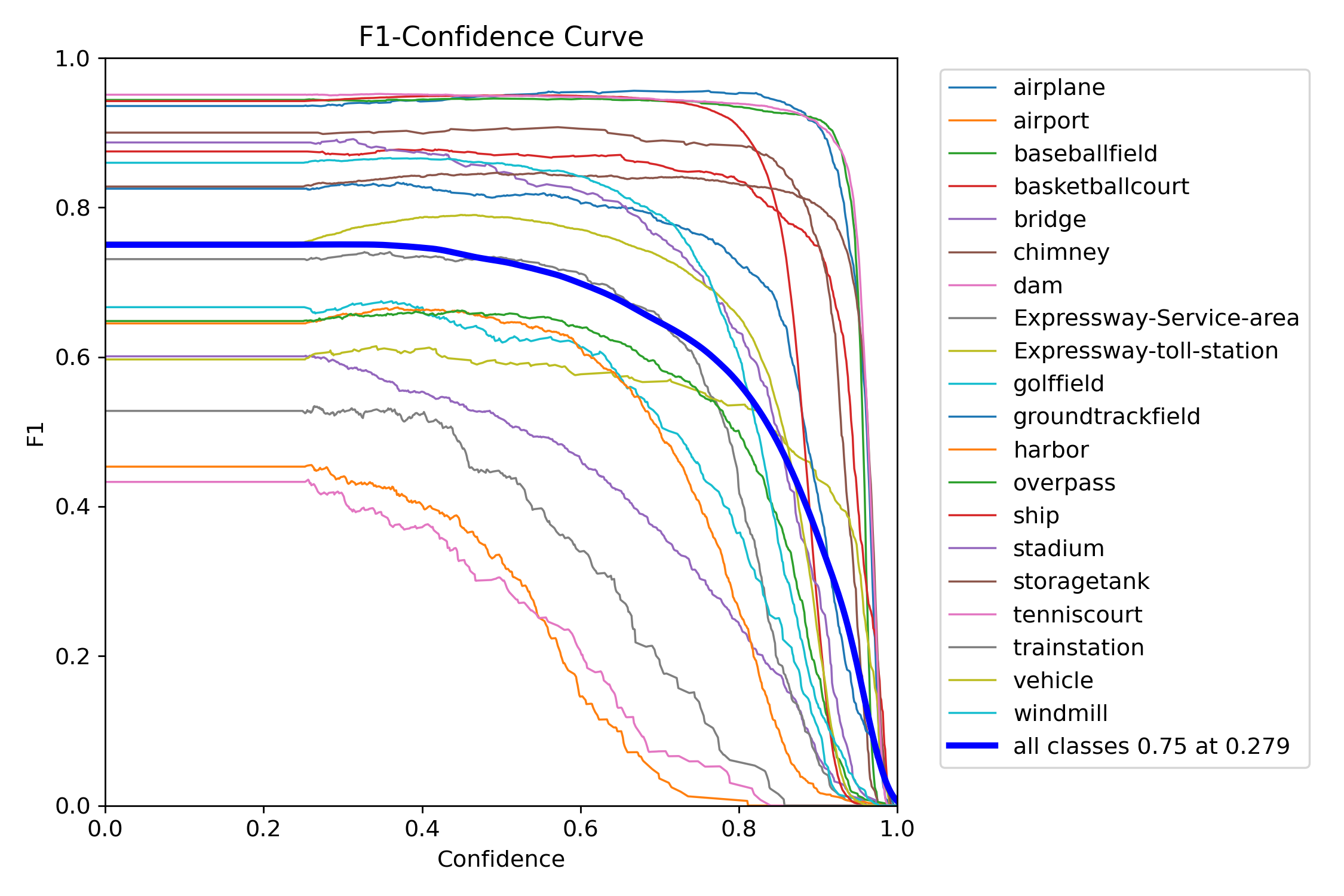

The models were trained on the DIOR Dataset, which is tailored for detecting elements in satellite images. Evaluation metrics include mAP50 and mAP50-95 for a comprehensive assessment of detection accuracy.
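As a reminder of how the two metrics relate: mAP50 is the mean average precision at a single IoU threshold of 0.50, while mAP50-95 averages the mean AP over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below only illustrates that averaging; the helper names are made up for this example, and the per-threshold AP values would come from an evaluation run, not from this README.

```python
def iou_thresholds(start=0.50, stop=0.95, step=0.05):
    """Return the IoU thresholds used by mAP50-95."""
    count = round((stop - start) / step) + 1
    return [round(start + i * step, 2) for i in range(count)]


def map_50_95(ap_per_threshold):
    """Average the mean AP values measured at each IoU threshold.

    ap_per_threshold must hold one value per threshold, ordered from
    IoU 0.50 to IoU 0.95. Its first entry is the mAP50.
    """
    if len(ap_per_threshold) != len(iou_thresholds()):
        raise ValueError("expected one AP value per IoU threshold")
    return sum(ap_per_threshold) / len(ap_per_threshold)


print(iou_thresholds())
# [0.5, 0.55, 0.6, 0.65, 0.7, 0.75, 0.8, 0.85, 0.9, 0.95]
```

Because the stricter thresholds demand tighter localization, mAP50-95 is always at most as high as mAP50 for the same model in practice.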

Model Performance and Comparison Table

| Model | Training Time (s) | mAP50 | mAP50-95 |
|---|---|---|---|
| Backbone Nano | 7861.18 | 0.7552 | 0.5905 |
| Backbone Small | 7719.13 | 0.7824 | 0.6219 |

Key Observations

- Backbone Nano: Reaches an mAP50 of 0.7552 and an mAP50-95 of 0.5905 after 7861.18 s of training (about 2.2 hours).

- Backbone Small: Outperforms the Nano model on both mAP50 (0.7824 vs. 0.7552) and mAP50-95 (0.6219 vs. 0.5905), while taking about 142 s less to train.
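The size of these differences can be checked with a few lines of arithmetic. The snippet below copies the figures from the comparison table; the dictionary layout and the `relative_change` helper are illustrative choices made for this sketch.

```python
# Figures copied from the comparison table above
table = {
    "Backbone Nano": {"train_s": 7861.18, "map50": 0.7552, "map50_95": 0.5905},
    "Backbone Small": {"train_s": 7719.13, "map50": 0.7824, "map50_95": 0.6219},
}


def relative_change(new, old):
    """Return the change from old to new as a percentage of old."""
    return (new - old) / old * 100.0


nano, small = table["Backbone Nano"], table["Backbone Small"]
for key in ("train_s", "map50", "map50_95"):
    print(f"{key}: {relative_change(small[key], nano[key]):+.2f}%")
# train_s: -1.81%
# map50: +3.60%
# map50_95: +5.32%
```

In other words, the Small model gains a few percent on both metrics, relative to Nano, at essentially the same training cost.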

Examples

How to Use the Models

- Clone the repository.

- Install the ultralytics library (pip install ultralytics).

- Load the model size of your choice.

from ultralytics import YOLO

# Load a finetuned YOLO model

model = YOLO('path-to-model.pt')

# Perform inference (returns a list with one Results object per image)

results = model('path-to-image.jpg')

results[0].show()
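Beyond displaying the image, the predictions can also be read programmatically. The following is a minimal sketch, assuming the finetuned weights load as an Ultralytics OBB model so that each result exposes an `obb` attribute with `cls`, `conf`, and `xyxyxyxy` (four corner points per box). The helper names `describe_detection` and `print_detections` are hypothetical, and the paths are placeholders.

```python
def describe_detection(class_name, confidence, corners):
    """Format one oriented detection as a single line of text."""
    points = ", ".join(f"({x:.0f}, {y:.0f})" for x, y in corners)
    return f"{class_name} {confidence:.2f}: {points}"


def print_detections(weights_path, image_path):
    """Run inference on one image and print every oriented box found."""
    # Imported here so the formatting helper works without ultralytics installed
    from ultralytics import YOLO

    model = YOLO(weights_path)
    result = model(image_path)[0]  # one Results object per input image

    obb = result.obb  # None when the model is not an OBB model
    if obb is None:
        print("No oriented boxes available for this model.")
        return

    # cls: class ids, conf: confidences, xyxyxyxy: four (x, y) corners per box
    for cls_id, conf, corners in zip(
        obb.cls.tolist(), obb.conf.tolist(), obb.xyxyxyxy.tolist()
    ):
        print(describe_detection(result.names[int(cls_id)], conf, corners))
```

Calling `print_detections('path-to-model.pt', 'path-to-image.jpg')` would then list one line per detected object.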

To use the models in MATLAB:

- Clone the repository.

- Clone the MATLAB YOLOv8 repository.

- Run convert_to_onnx.py to convert the model to ONNX.

- Load the model in MATLAB as shown in 3_YOLO_matlab.mlx.
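The contents of convert_to_onnx.py are not reproduced here. As a rough idea of what an ONNX conversion step can look like, the sketch below uses the Ultralytics `export` method. The function name `export_to_onnx` and the suffix check are assumptions made for this example, and the repository's own script may differ.

```python
from pathlib import Path


def export_to_onnx(weights_path):
    """Export PyTorch YOLO weights to ONNX and return the exported file path."""
    weights = Path(weights_path)
    if weights.suffix != ".pt":
        raise ValueError(f"expected a .pt weights file, got: {weights.name}")

    # Imported here so the input check above works without ultralytics installed
    from ultralytics import YOLO

    model = YOLO(str(weights))
    # export() writes the ONNX file and returns its path
    return model.export(format="onnx")
```

A call such as `export_to_onnx('path-to-model.pt')` would produce the ONNX file to load on the MATLAB side.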

Model: pauhidalgoo/yolov8-DIOR (base model: Ultralytics/YOLOv8)