Model Card for ru-rope-t5-small-instruct

A small Russian T5 model with Rotary Position Embedding (RoPE), after instruction tuning.

Model Details

The model was pre-trained on a Russian corpus mixed with English, using the Mixture-of-Denoisers (MoD) objective from UL2 on sequences of length 1024. Because T5's relative position bias is replaced with rotary encoding, the model can be trained with Flash Attention 2.
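The rotary-encoding point above can be illustrated with a small sketch. T5's relative position bias is an extra matrix added to the attention scores, and Flash Attention 2 kernels do not accept an arbitrary additive bias of that kind. RoPE instead rotates the query and key vectors before the dot product, so the score between positions m and n depends only on the offset m - n and nothing has to be added to the attention matrix. The code below is a pure-Python illustration using the common interleaved-pair convention and base 10000; the helper names (`rope_rotate`, `attention_score`) are hypothetical, and the exact RoPE variant used in this model is not stated in the card.

```python
import math
from typing import List


def rope_rotate(x: List[float], pos: int, base: float = 10000.0) -> List[float]:
    """Apply rotary position embedding to one query/key vector at position `pos`.

    Consecutive pairs (x[2j], x[2j+1]) are rotated by the angle pos * base**(-2j/d).
    Interleaved pairs are an assumption; some implementations split the vector in halves.
    """
    d = len(x)
    if d % 2 != 0:
        raise ValueError("RoPE needs an even head dimension")
    out: List[float] = []
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)  # i == 2j, so this equals base**(-2j/d)
        c, s = math.cos(theta), math.sin(theta)
        a, b = x[i], x[i + 1]
        out.extend([a * c - b * s, a * s + b * c])  # 2D rotation of the pair
    return out


def attention_score(q: List[float], k: List[float], q_pos: int, k_pos: int) -> float:
    """Unscaled dot-product score between a rotated query and a rotated key."""
    rq = rope_rotate(q, q_pos)
    rk = rope_rotate(k, k_pos)
    return sum(a * b for a, b in zip(rq, rk))


if __name__ == "__main__":
    q = [0.3, -1.2, 0.5, 2.0]
    k = [1.1, 0.4, -0.7, 0.9]
    # The same offset (3) at different absolute positions yields the same score.
    print(attention_score(q, k, 5, 2), attention_score(q, k, 10, 7))
```

Since positions enter only through the rotation of q and k, an attention kernel can consume the rotated tensors directly, with no bias term.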

- Model type: RoPE T5

- Language(s) (NLP): Russian, English

Uses

Fine-tuning for downstream tasks.

Bias, Risks, and Limitations

Despite instruction tuning, the model is not recommended for zero-shot use because of its small size.

Training Details

Training Data

A corpus of Russian texts from Vikhr, filtered by perplexity under FRED-T5-1.7B. The instruction data is a translated English instruction set.
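Perplexity filtering of this kind can be sketched as follows: score each text with a reference language model, compute perplexity as the exponential of the mean per-token negative log-likelihood, and keep texts below a threshold. The card does not state the threshold or whether very low-perplexity texts were also dropped, so the single upper cutoff here is an assumption. `score_fn` is a hypothetical stand-in for scoring with FRED-T5-1.7B, and the toy log-probabilities are made up for illustration.

```python
import math
from typing import Callable, Iterable, List, Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Perplexity = exp(mean negative log-likelihood), using natural-log probabilities."""
    if len(token_logprobs) == 0:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)


def filter_by_perplexity(
    texts: Iterable[str],
    score_fn: Callable[[str], Sequence[float]],
    max_ppl: float,
) -> List[str]:
    """Keep texts whose perplexity under the scoring model is <= max_ppl.

    `score_fn` is hypothetical: it must return per-token natural-log
    probabilities of a text under the reference model.
    """
    return [t for t in texts if perplexity(score_fn(t)) <= max_ppl]


if __name__ == "__main__":
    # Toy scorer with made-up log-probabilities, for illustration only.
    toy_scores = {
        "clean sentence": [math.log(0.5)] * 4,    # perplexity 2.0
        "noisy ##$% text": [math.log(0.01)] * 4,  # perplexity 100.0
    }
    print(filter_by_perplexity(toy_scores, toy_scores.__getitem__, max_ppl=50.0))
```

With a real scorer, the threshold would typically be picked by inspecting the perplexity distribution of the corpus rather than fixed in advance.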

Training Procedure

AdamWScale was used instead of Adafactor to keep training stable and avoid loss explosions.
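The card does not define AdamWScale. A minimal sketch follows, assuming it is the variant popularized by the nanoT5 project: a standard bias-corrected AdamW step whose step size is additionally multiplied by the RMS of the parameter tensor (floored at 1e-3), borrowing Adafactor's relative step scaling while keeping Adam's full second-moment estimate. This is a pure-Python illustration for one flat parameter tensor; the function and class names and the default hyperparameters are hypothetical, not this model's training configuration.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple


def rms(values: List[float]) -> float:
    """Root mean square of a parameter tensor (flattened)."""
    return math.sqrt(sum(v * v for v in values) / len(values))


@dataclass
class AdamWScaleState:
    step: int = 0
    exp_avg: List[float] = field(default_factory=list)     # first moment
    exp_avg_sq: List[float] = field(default_factory=list)  # second moment


def adamw_scale_step(
    params: List[float],
    grads: List[float],
    state: AdamWScaleState,
    lr: float = 1e-3,
    betas: Tuple[float, float] = (0.9, 0.999),
    eps: float = 1e-8,
    weight_decay: float = 0.0,
) -> List[float]:
    """Return updated parameters after one (assumed) AdamWScale step."""
    if not state.exp_avg:
        state.exp_avg = [0.0] * len(params)
        state.exp_avg_sq = [0.0] * len(params)
    state.step += 1
    b1, b2 = betas
    for i, g in enumerate(grads):
        state.exp_avg[i] = b1 * state.exp_avg[i] + (1 - b1) * g
        state.exp_avg_sq[i] = b2 * state.exp_avg_sq[i] + (1 - b2) * g * g
    # Standard Adam bias correction folded into the step size.
    step_size = lr * math.sqrt(1 - b2 ** state.step) / (1 - b1 ** state.step)
    # Adafactor-style scaling: tensors with larger RMS take proportionally larger steps.
    step_size *= max(1e-3, rms(params))
    new_params = [
        p - step_size * m / (math.sqrt(v) + eps)
        for p, m, v in zip(params, state.exp_avg, state.exp_avg_sq)
    ]
    # Decoupled weight decay, applied to the updated parameters.
    if weight_decay > 0.0:
        new_params = [p - lr * weight_decay * p for p in new_params]
    return new_params


if __name__ == "__main__":
    state = AdamWScaleState()
    print(adamw_scale_step([1.0, -2.0, 3.0], [0.1, 0.1, -0.1], state))
```

On the first step each parameter moves by roughly lr * RMS(params) in the direction opposite its gradient sign, which is the relative-scaling behaviour this variant borrows from Adafactor.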

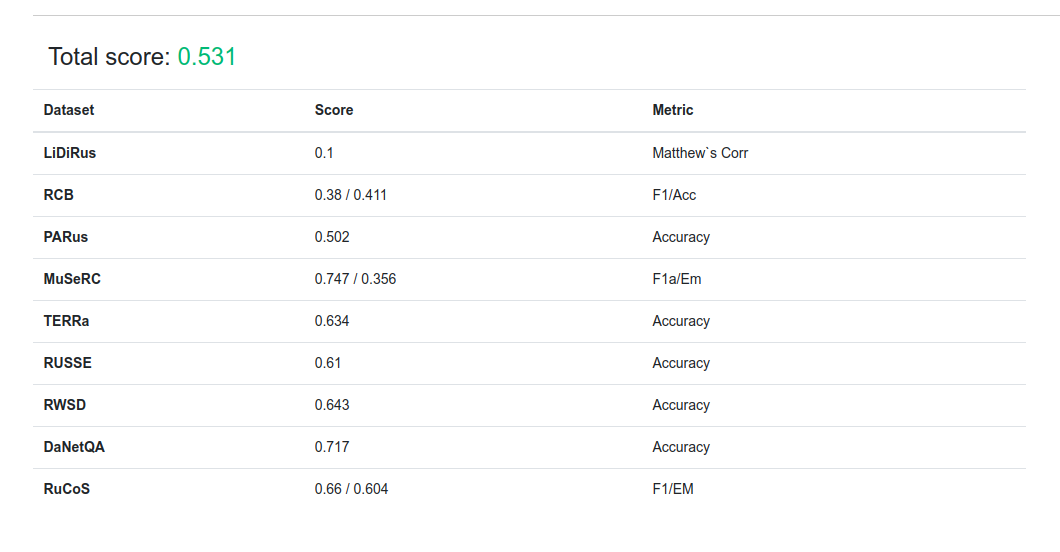

Metrics

Model Card Contact
