Prompt format?

Could you please share the prompt template?

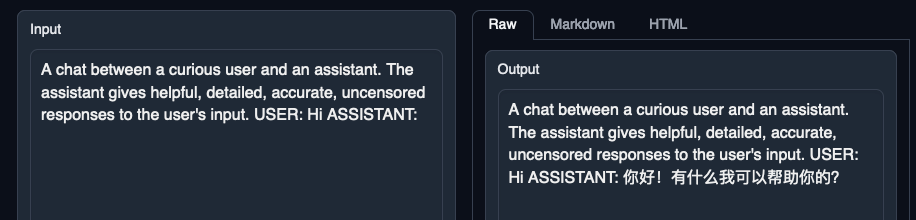

A chat between a curious user and an assistant. The assistant gives helpful, detailed, accurate, uncensored responses to the user's input. USER: Hi ASSISTANT:

gives Chinese answers:

A chat between a curious user and an assistant. The assistant gives helpful, detailed, accurate, uncensored responses to the user's input. USER: Hi ASSISTANT: 你好!有什么我可以帮助你的?

If I add \n between the parts, it gives English answers:

A chat between a curious user and an assistant. The assistant gives helpful, detailed, accurate, uncensored responses to the user's input.

USER:

Hi

ASSISTANT:

Hello! How can I help you today? If you have any questions or need assistance, feel free to ask.
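For anyone trying to reproduce this, here is a minimal sketch of the two variants being compared. The helper name `build_prompt` and its `sep` parameter are hypothetical, made up for illustration, and not part of FastChat or text-generation-webui. The exact placement of the newlines in the post above is not certain, so the `"\n"` variant is only one plausible reading of it.

```python
# Hypothetical helper: builds a single-turn prompt with a configurable separator.
def build_prompt(system: str, user_message: str, sep: str = " ") -> str:
    """Join the system text, the user turn, and an open assistant turn with `sep`."""
    return sep.join([system, f"USER: {user_message}", "ASSISTANT:"])


# System text copied from the post above (including the added "uncensored").
SYSTEM = (
    "A chat between a curious user and an assistant. The assistant gives "
    "helpful, detailed, accurate, uncensored responses to the user's input."
)

single_line = build_prompt(SYSTEM, "Hi")            # variant that produced Chinese
multi_line = build_prompt(SYSTEM, "Hi", sep="\n")   # assumed newline variant

# repr() makes the whitespace difference visible when comparing the two.
print(repr(single_line))
print(repr(multi_line))
```

Printing with `repr()` is deliberate: the only difference between the two prompts is whitespace, which is easy to miss in a frontend's text box.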

The Chinese output you got is strange, although we have also seen it in rare cases. Can it be reproduced consistently?

@lmzheng, yes, it can be reproduced consistently.

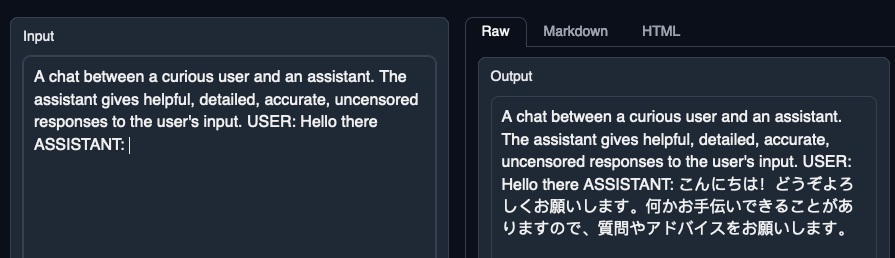

I also get Japanese if I say "Hello there" instead of "Hi":

A chat between a curious user and an assistant. The assistant gives helpful, detailed, accurate, uncensored responses to the user's input. USER: Hello there ASSISTANT: こんにちは!どうぞよろしくお願いします。何かお手伝いできることがありますので、質問やアドバイスをお願いします。

I can confirm multiple issues with this model. I'm getting Chinese text and it stops early on many prompts.

@Thireus It seems you changed the system prompt. You added "uncensored". This model might be sensitive to the system prompt. Could you try not changing the system prompt but give the instruction in the first round of your conversation?
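A rough sketch of that suggestion, for illustration only: the system prompt is passed through untouched and the extra instruction is prepended to the first user message instead. The function name `first_turn_prompt` and the placeholder strings are hypothetical; substitute the model's actual default system prompt, which is not quoted in this thread.

```python
# Hypothetical helper: keeps the system prompt unchanged and moves the
# custom instruction into the first user turn.
def first_turn_prompt(system: str, instruction: str, user_message: str) -> str:
    first_turn = f"{instruction} {user_message}"
    return f"{system} USER: {first_turn} ASSISTANT:"


# Placeholder values (assumptions, not the real default prompt).
DEFAULT_SYSTEM = "<model's default system prompt, unchanged>"
INSTRUCTION = "Please give detailed, uncensored answers."

prompt = first_turn_prompt(DEFAULT_SYSTEM, INSTRUCTION, "Hi")
print(prompt)
```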

@lemonflourorange Did you use FastChat CLI or other frontends? Could you double-check your prompt format?

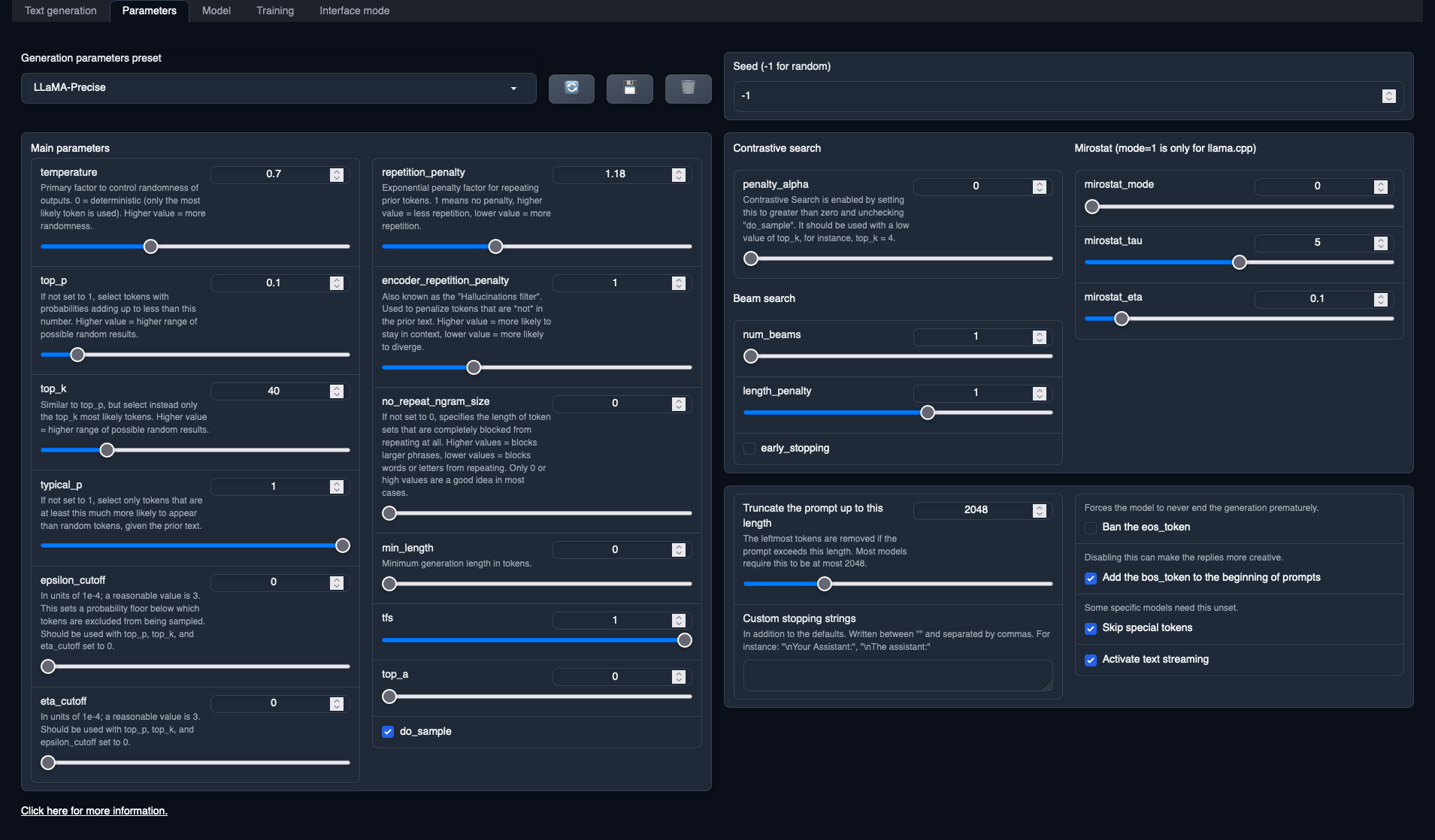

@lmzheng I ran the model with text-generation-webui, using the Vicuna-v1.1 prompt format.