license: cc-by-nc-4.0

Overview

This is a 13b-parameter LLaMA model, fine-tuned on completely synthetic training data created by https://github.com/jondurbin/airoboros

I don't recommend using this model! The outputs aren't particularly great, and it may contain "harmful" data due to the jailbreak prompt used when generating the training data.

Please see one of the updated airoboros models for a much better experience.

Eval (gpt4 judging)

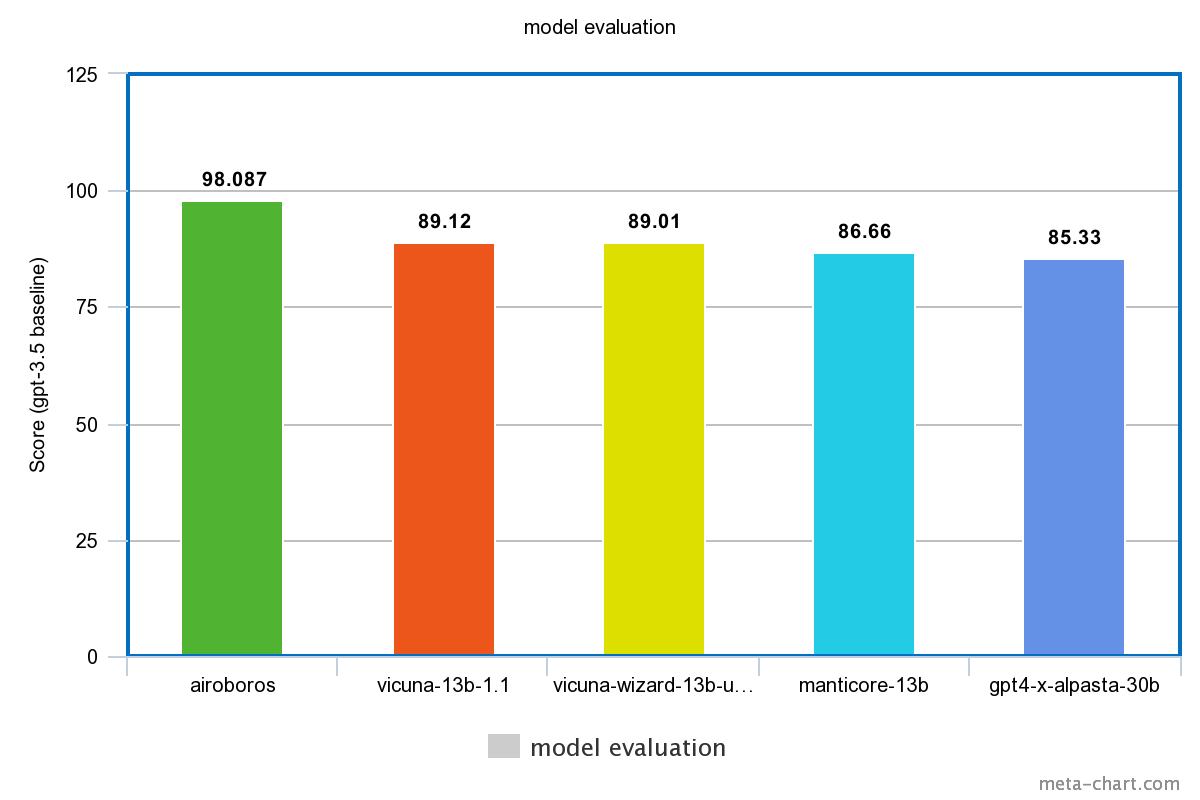

| model | raw score | gpt-3.5 adjusted score |
|---|---|---|
| airoboros-13b | 17947 | 98.087 |
| gpt35 | 18297 | 100.0 |
| gpt4-x-alpasta-30b | 15612 | 85.33 |
| manticore-13b | 15856 | 86.66 |
| vicuna-13b-1.1 | 16306 | 89.12 |
| wizard-vicuna-13b-uncensored | 16287 | 89.01 |
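The adjusted column appears to be each raw score expressed as a percentage of the gpt35 raw score. That relationship is inferred from the numbers in the table rather than stated anywhere in this card, so treat the following as a sketch under that assumption. The function name `adjusted_scores` is hypothetical.

```python
# Raw scores copied from the table above.
raw_scores = {
    "airoboros-13b": 17947,
    "gpt35": 18297,
    "gpt4-x-alpasta-30b": 15612,
    "manticore-13b": 15856,
    "vicuna-13b-1.1": 16306,
    "wizard-vicuna-13b-uncensored": 16287,
}


def adjusted_scores(raw, baseline="gpt35"):
    """Express each raw score as a percentage of the baseline's raw score.

    Assumption: this is how the 'gpt-3.5 adjusted score' column was derived.
    """
    base = raw[baseline]
    return {name: 100.0 * score / base for name, score in raw.items()}


adjusted = adjusted_scores(raw_scores)
for name, value in adjusted.items():
    print(f"{name}: {value:.2f}")
```

Under this assumption the computed values agree with the table to within rounding.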

individual question scores, with shareGPT links (200 prompts generated by gpt-4)

wv-13b-u is Wizard-Vicuna-13b-Uncensored

| airoboros-13b | gpt35 | gpt4-x-alpasta-30b | manticore-13b | vicuna-13b-1.1 | wv-13b-u | link |
|---|---|---|---|---|---|---|
| 80 | 95 | 70 | 90 | 85 | 60 | eval |
| 20 | 95 | 40 | 30 | 90 | 80 | eval |
| 100 | 100 | 100 | 95 | 95 | 100 | eval |
| 90 | 100 | 85 | 60 | 95 | 100 | eval |
| 95 | 90 | 80 | 85 | 95 | 75 | eval |
| 100 | 95 | 90 | 95 | 98 | 92 | eval |
| 50 | 100 | 80 | 95 | 60 | 55 | eval |
| 70 | 90 | 80 | 60 | 85 | 40 | eval |
| 100 | 95 | 50 | 85 | 40 | 60 | eval |
| 85 | 60 | 55 | 65 | 50 | 70 | eval |
| 95 | 100 | 85 | 90 | 60 | 75 | eval |
| 100 | 95 | 70 | 80 | 50 | 85 | eval |
| 100 | 95 | 80 | 70 | 60 | 90 | eval |
| 95 | 100 | 70 | 85 | 90 | 90 | eval |
| 80 | 95 | 90 | 60 | 30 | 85 | eval |
| 60 | 95 | 0 | 75 | 50 | 40 | eval |
| 100 | 95 | 90 | 98 | 95 | 95 | eval |
| 60 | 85 | 40 | 50 | 20 | 0 | eval |
| 100 | 90 | 85 | 95 | 95 | 80 | eval |
| 100 | 95 | 100 | 95 | 90 | 95 | eval |
| 95 | 90 | 96 | 80 | 92 | 88 | eval |
| 95 | 92 | 90 | 93 | 89 | 91 | eval |
| 95 | 93 | 90 | 94 | 96 | 92 | eval |
| 95 | 90 | 93 | 88 | 92 | 85 | eval |
| 95 | 90 | 85 | 96 | 88 | 92 | eval |
| 95 | 95 | 90 | 93 | 92 | 91 | eval |
| 95 | 98 | 80 | 97 | 99 | 96 | eval |
| 95 | 93 | 90 | 87 | 92 | 89 | eval |
| 90 | 85 | 95 | 80 | 92 | 75 | eval |
| 90 | 85 | 95 | 93 | 80 | 92 | eval |
| 95 | 92 | 90 | 91 | 93 | 89 | eval |
| 100 | 95 | 90 | 85 | 80 | 95 | eval |
| 95 | 97 | 93 | 92 | 96 | 94 | eval |
| 95 | 93 | 94 | 90 | 88 | 92 | eval |
| 90 | 95 | 98 | 85 | 96 | 92 | eval |
| 90 | 88 | 85 | 80 | 82 | 84 | eval |
| 90 | 95 | 85 | 87 | 92 | 88 | eval |
| 95 | 97 | 96 | 90 | 93 | 92 | eval |
| 95 | 93 | 92 | 90 | 89 | 91 | eval |
| 90 | 95 | 93 | 92 | 94 | 91 | eval |
| 90 | 85 | 95 | 80 | 88 | 75 | eval |
| 85 | 90 | 95 | 88 | 92 | 80 | eval |
| 90 | 95 | 92 | 85 | 80 | 87 | eval |
| 85 | 90 | 95 | 80 | 88 | 75 | eval |
| 85 | 80 | 75 | 90 | 70 | 82 | eval |
| 90 | 85 | 95 | 92 | 93 | 80 | eval |
| 90 | 95 | 75 | 85 | 80 | 70 | eval |
| 85 | 90 | 80 | 88 | 82 | 83 | eval |
| 85 | 90 | 95 | 92 | 88 | 80 | eval |
| 85 | 90 | 80 | 75 | 95 | 88 | eval |
| 85 | 90 | 80 | 88 | 84 | 92 | eval |
| 80 | 90 | 75 | 85 | 70 | 95 | eval |
| 90 | 88 | 85 | 80 | 92 | 83 | eval |
| 85 | 75 | 90 | 80 | 78 | 88 | eval |
| 85 | 90 | 80 | 82 | 75 | 88 | eval |
| 90 | 85 | 40 | 95 | 80 | 88 | eval |
| 85 | 95 | 90 | 75 | 88 | 80 | eval |
| 85 | 95 | 90 | 92 | 89 | 88 | eval |
| 80 | 85 | 75 | 60 | 90 | 70 | eval |
| 85 | 90 | 87 | 80 | 88 | 75 | eval |
| 85 | 80 | 75 | 50 | 90 | 80 | eval |
| 95 | 80 | 90 | 85 | 75 | 82 | eval |
| 85 | 90 | 80 | 70 | 95 | 88 | eval |
| 90 | 95 | 70 | 85 | 80 | 75 | eval |
| 90 | 85 | 70 | 75 | 80 | 60 | eval |
| 95 | 90 | 70 | 50 | 85 | 80 | eval |
| 80 | 85 | 40 | 60 | 90 | 95 | eval |
| 75 | 60 | 80 | 55 | 70 | 85 | eval |
| 90 | 85 | 60 | 50 | 80 | 95 | eval |
| 45 | 85 | 60 | 20 | 65 | 75 | eval |
| 85 | 90 | 30 | 60 | 80 | 70 | eval |
| 90 | 95 | 80 | 40 | 85 | 70 | eval |
| 85 | 90 | 70 | 75 | 80 | 95 | eval |
| 90 | 70 | 50 | 20 | 60 | 40 | eval |
| 90 | 95 | 75 | 60 | 85 | 80 | eval |
| 85 | 80 | 60 | 70 | 65 | 75 | eval |
| 90 | 85 | 80 | 75 | 82 | 70 | eval |
| 90 | 95 | 80 | 70 | 85 | 75 | eval |
| 85 | 75 | 30 | 80 | 90 | 70 | eval |
| 85 | 90 | 50 | 70 | 80 | 60 | eval |
| 100 | 95 | 98 | 99 | 97 | 96 | eval |
| 95 | 90 | 92 | 93 | 91 | 89 | eval |
| 95 | 92 | 90 | 85 | 88 | 91 | eval |
| 100 | 95 | 98 | 97 | 96 | 99 | eval |
| 100 | 100 | 100 | 90 | 100 | 95 | eval |
| 100 | 95 | 98 | 97 | 94 | 99 | eval |
| 95 | 90 | 92 | 93 | 94 | 91 | eval |
| 100 | 95 | 98 | 90 | 96 | 95 | eval |
| 95 | 96 | 92 | 90 | 89 | 93 | eval |
| 100 | 95 | 93 | 90 | 92 | 88 | eval |
| 100 | 100 | 98 | 97 | 99 | 100 | eval |
| 95 | 90 | 92 | 85 | 93 | 94 | eval |
| 95 | 93 | 90 | 92 | 96 | 91 | eval |
| 95 | 96 | 92 | 90 | 93 | 91 | eval |
| 95 | 90 | 92 | 93 | 91 | 89 | eval |
| 100 | 98 | 95 | 97 | 96 | 99 | eval |
| 90 | 95 | 85 | 88 | 92 | 87 | eval |
| 95 | 93 | 90 | 92 | 89 | 88 | eval |
| 100 | 95 | 97 | 90 | 96 | 94 | eval |
| 95 | 93 | 90 | 92 | 94 | 91 | eval |
| 95 | 92 | 90 | 93 | 94 | 88 | eval |
| 95 | 92 | 60 | 97 | 90 | 96 | eval |
| 95 | 90 | 92 | 93 | 91 | 89 | eval |
| 95 | 90 | 97 | 92 | 91 | 93 | eval |
| 90 | 95 | 93 | 85 | 92 | 91 | eval |
| 95 | 90 | 40 | 92 | 93 | 85 | eval |
| 100 | 100 | 95 | 90 | 95 | 90 | eval |
| 90 | 95 | 96 | 98 | 93 | 92 | eval |
| 90 | 95 | 92 | 89 | 93 | 94 | eval |
| 100 | 95 | 100 | 98 | 96 | 99 | eval |
| 100 | 100 | 95 | 90 | 100 | 90 | eval |
| 90 | 85 | 88 | 92 | 87 | 91 | eval |
| 95 | 97 | 90 | 92 | 93 | 94 | eval |
| 90 | 95 | 85 | 88 | 92 | 89 | eval |
| 95 | 93 | 90 | 92 | 94 | 91 | eval |
| 90 | 95 | 85 | 80 | 88 | 82 | eval |
| 95 | 90 | 60 | 85 | 93 | 70 | eval |
| 95 | 92 | 94 | 93 | 96 | 90 | eval |
| 95 | 90 | 85 | 93 | 87 | 92 | eval |
| 95 | 96 | 93 | 90 | 97 | 92 | eval |
| 100 | 0 | 0 | 100 | 0 | 0 | eval |
| 60 | 100 | 0 | 80 | 0 | 0 | eval |
| 0 | 100 | 60 | 0 | 0 | 90 | eval |
| 100 | 100 | 0 | 100 | 100 | 100 | eval |
| 100 | 100 | 100 | 100 | 95 | 100 | eval |
| 100 | 100 | 100 | 50 | 90 | 100 | eval |
| 100 | 100 | 100 | 100 | 95 | 90 | eval |
| 100 | 100 | 100 | 95 | 0 | 100 | eval |
| 50 | 95 | 20 | 10 | 30 | 85 | eval |
| 100 | 100 | 60 | 20 | 30 | 40 | eval |
| 100 | 0 | 0 | 0 | 0 | 100 | eval |
| 0 | 100 | 60 | 0 | 0 | 80 | eval |
| 50 | 100 | 20 | 90 | 0 | 10 | eval |
| 100 | 100 | 100 | 100 | 100 | 100 | eval |
| 100 | 100 | 100 | 100 | 100 | 100 | eval |
| 40 | 100 | 95 | 0 | 100 | 40 | eval |
| 100 | 100 | 100 | 100 | 80 | 100 | eval |
| 100 | 100 | 100 | 0 | 90 | 40 | eval |
| 0 | 100 | 100 | 50 | 70 | 20 | eval |
| 100 | 100 | 50 | 90 | 0 | 95 | eval |
| 100 | 95 | 90 | 85 | 98 | 80 | eval |
| 95 | 98 | 90 | 92 | 96 | 89 | eval |
| 90 | 95 | 75 | 85 | 80 | 82 | eval |
| 95 | 98 | 50 | 92 | 96 | 94 | eval |
| 95 | 90 | 0 | 93 | 92 | 94 | eval |
| 95 | 90 | 85 | 92 | 80 | 88 | eval |
| 95 | 93 | 75 | 85 | 90 | 92 | eval |
| 90 | 95 | 88 | 85 | 92 | 89 | eval |
| 100 | 100 | 100 | 95 | 97 | 98 | eval |
| 85 | 40 | 30 | 95 | 90 | 88 | eval |
| 90 | 95 | 92 | 85 | 88 | 93 | eval |
| 95 | 96 | 92 | 90 | 89 | 93 | eval |
| 90 | 95 | 85 | 80 | 92 | 88 | eval |
| 95 | 98 | 65 | 90 | 85 | 93 | eval |
| 95 | 92 | 96 | 97 | 90 | 89 | eval |
| 95 | 90 | 92 | 91 | 89 | 93 | eval |
| 95 | 90 | 80 | 75 | 95 | 90 | eval |
| 92 | 40 | 30 | 95 | 90 | 93 | eval |
| 90 | 92 | 85 | 88 | 89 | 87 | eval |
| 95 | 80 | 90 | 92 | 91 | 88 | eval |
| 95 | 93 | 92 | 90 | 91 | 94 | eval |
| 100 | 98 | 95 | 90 | 92 | 96 | eval |
| 95 | 92 | 80 | 85 | 90 | 93 | eval |
| 95 | 98 | 90 | 88 | 97 | 96 | eval |
| 90 | 95 | 85 | 88 | 86 | 92 | eval |
| 100 | 100 | 100 | 100 | 100 | 100 | eval |
| 90 | 95 | 85 | 96 | 92 | 88 | eval |
| 100 | 98 | 95 | 99 | 97 | 96 | eval |
| 95 | 92 | 70 | 90 | 93 | 89 | eval |
| 95 | 90 | 88 | 92 | 94 | 93 | eval |
| 95 | 90 | 93 | 92 | 85 | 94 | eval |
| 95 | 93 | 90 | 87 | 92 | 91 | eval |
| 95 | 93 | 90 | 96 | 92 | 91 | eval |
| 95 | 97 | 85 | 96 | 98 | 90 | eval |
| 95 | 92 | 90 | 85 | 93 | 94 | eval |
| 95 | 96 | 92 | 90 | 97 | 93 | eval |
| 95 | 93 | 96 | 94 | 90 | 92 | eval |
| 95 | 94 | 93 | 92 | 90 | 89 | eval |
| 90 | 85 | 95 | 80 | 87 | 75 | eval |
| 95 | 94 | 92 | 93 | 90 | 96 | eval |
| 95 | 100 | 90 | 95 | 95 | 95 | eval |
| 100 | 95 | 85 | 100 | 0 | 90 | eval |
| 100 | 95 | 90 | 95 | 100 | 95 | eval |
| 95 | 90 | 60 | 95 | 85 | 80 | eval |
| 100 | 95 | 90 | 98 | 97 | 99 | eval |
| 95 | 90 | 85 | 95 | 80 | 92 | eval |
| 100 | 95 | 100 | 98 | 100 | 90 | eval |
| 100 | 95 | 80 | 85 | 90 | 85 | eval |
| 100 | 90 | 95 | 85 | 95 | 100 | eval |
| 95 | 90 | 85 | 80 | 88 | 92 | eval |
| 100 | 100 | 0 | 0 | 100 | 0 | eval |
| 100 | 100 | 100 | 50 | 100 | 75 | eval |
| 100 | 100 | 0 | 0 | 100 | 0 | eval |
| 0 | 100 | 0 | 0 | 0 | 0 | eval |
| 100 | 100 | 50 | 0 | 0 | 0 | eval |
| 100 | 100 | 100 | 100 | 100 | 95 | eval |
| 100 | 100 | 50 | 0 | 0 | 0 | eval |
| 100 | 100 | 0 | 0 | 100 | 0 | eval |
| 90 | 85 | 80 | 95 | 70 | 75 | eval |
| 100 | 100 | 0 | 0 | 0 | 0 | eval |

Training data

This was an experiment to see if a "jailbreak" prompt could be used to generate a broader range of data that would otherwise have been filtered by OpenAI's alignment efforts.

The jailbreak did indeed work with a high success rate: the OpenAI models covered a broader range of topics and refused fewer questions/instructions on sensitive topics.

Prompt format

The prompt should be 1:1 compatible with the FastChat/vicuna format, e.g.:

With a system prompt:

A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions. USER: [prompt] ASSISTANT:

Or without a system prompt:

USER: [prompt] ASSISTANT:
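A minimal sketch of assembling either prompt variant in Python. The helper name `build_prompt` is hypothetical, and joining the segments with single spaces is an assumption based on how the templates are printed above; check the FastChat conversation templates if exact whitespace matters for your setup.

```python
# System prompt text copied from the template above.
SYSTEM_PROMPT = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's questions."
)


def build_prompt(user_message, system_prompt=SYSTEM_PROMPT):
    """Return a single-turn prompt in the vicuna-style format shown above.

    Pass system_prompt=None (or "") to get the variant without a system prompt.
    """
    turn = f"USER: {user_message} ASSISTANT:"
    if system_prompt:
        return f"{system_prompt} {turn}"
    return turn


print(build_prompt("What is the capital of France?"))
print(build_prompt("What is the capital of France?", system_prompt=None))
```

The returned string ends with `ASSISTANT:`, so the model's completion is the assistant's reply.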

Usage and License Notices

The model and dataset are intended and licensed for research use only. I've used the 'cc-by-nc-4.0' license, but really it is subject to a custom/special license because:

- the base model is LLaMA, which has its own special research license

- the dataset(s) were generated with OpenAI (gpt-4 and/or gpt-3.5-turbo), whose terms include a clause saying the data can't be used to create models that compete with OpenAI

So, to reiterate: this model (and datasets) cannot be used commercially.

Open LLM Leaderboard Evaluation Results

Detailed results can be found here

| Metric | Value |
|---|---|
| Avg. | 48.94 |
| ARC (25-shot) | 58.28 |
| HellaSwag (10-shot) | 81.05 |
| MMLU (5-shot) | 50.03 |
| TruthfulQA (0-shot) | 51.57 |
| Winogrande (5-shot) | 76.24 |
| GSM8K (5-shot) | 6.97 |
| DROP (3-shot) | 18.42 |
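The "Avg." row is consistent with an unweighted mean of the seven benchmark scores. That reading is an inference from the numbers, not something this card states, so the snippet below is only a quick arithmetic check under that assumption.

```python
# Benchmark scores copied from the table above.
benchmark_scores = {
    "ARC (25-shot)": 58.28,
    "HellaSwag (10-shot)": 81.05,
    "MMLU (5-shot)": 50.03,
    "TruthfulQA (0-shot)": 51.57,
    "Winogrande (5-shot)": 76.24,
    "GSM8K (5-shot)": 6.97,
    "DROP (3-shot)": 18.42,
}

# Assumption: "Avg." is the plain (unweighted) mean of all seven scores.
average = sum(benchmark_scores.values()) / len(benchmark_scores)
print(f"Avg.: {average:.2f}")  # prints "Avg.: 48.94"
```

The low GSM8K and DROP scores pull the mean well below the other five benchmarks.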