Model Card for guguwon/hw-llama-2-7B-nsmc

Model Details

- A model fine-tuned from meta-llama/Llama-2-7b-chat-hf to classify text as positive or negative

Model Description

Developed by: 황규원 (student ID 20211421), Sungshin Women's University

Finetuned from model: meta-llama/Llama-2-7b-chat-hf

Uses

- The goal is to fine-tune a model on the NSMC dataset to classify text as positive or negative

- A sentence is labeled '1' if its sentiment is positive and '0' otherwise
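The labeling rule above can be sketched as a prompt builder plus a parser that maps generated text back to '1' or '0'. The card does not give the prompt actually used for fine-tuning, so `PROMPT_TEMPLATE`, `build_prompt`, and `parse_label` are hypothetical names and wording chosen only for illustration.

```python
from typing import Optional

# Hypothetical prompt wording; the prompt used for fine-tuning is not given in this card.
PROMPT_TEMPLATE = (
    "Classify the sentiment of the following movie review. "
    "Answer 1 if it is positive, otherwise answer 0.\n"
    "Review: {review}\n"
    "Answer:"
)


def build_prompt(review: str) -> str:
    """Insert one review into the (assumed) prompt template."""
    return PROMPT_TEMPLATE.format(review=review.strip())


def parse_label(generated: str) -> Optional[int]:
    """Return the first '0' or '1' found in the generated text, or None if neither appears."""
    for ch in generated:
        if ch in "01":
            return int(ch)
    return None
```

Returning `None` for unparseable output keeps malformed generations visible, so they can be counted separately instead of being silently treated as negative.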

Training Data

- The first 3,000 samples of the NSMC train split were used for training
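A minimal sketch of selecting the leading samples of a split with the Hugging Face `datasets` slicing syntax. The hub identifier `e9t/nsmc` and the helper names are assumptions, not taken from this card; depending on the installed `datasets` version, loading may need a different identifier or extra arguments.

```python
def split_spec(split: str, n: int) -> str:
    """Build a `datasets` split string selecting the first n rows, e.g. 'train[:3000]'."""
    if n <= 0:
        raise ValueError("n must be positive")
    return f"{split}[:{n}]"


def load_nsmc_subset(split: str, n: int, dataset_id: str = "e9t/nsmc"):
    """Load the first n rows of an NSMC split.

    dataset_id is an assumed hub identifier; adjust it to wherever NSMC is hosted.
    """
    # Imported lazily so split_spec can be used without the library installed.
    from datasets import load_dataset

    return load_dataset(dataset_id, split=split_spec(split, n))
```

Under these assumptions, `load_nsmc_subset("train", 3000)` would give the training subset, and `load_nsmc_subset("test", 1000)` the subset described under Testing Data.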

Training Procedure

- Sequence length: 512

- Training steps: 1,600
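How many passes over the 3,000 training samples 1,600 steps amount to depends on the effective batch size, which this card does not state. The sketch below does the arithmetic; the batch-size values in the usage lines are hypothetical.

```python
def epochs_covered(
    max_steps: int,
    per_device_batch_size: int,
    grad_accum_steps: int,
    n_samples: int,
    n_devices: int = 1,
) -> float:
    """Approximate number of epochs covered by max_steps optimizer steps."""
    if n_samples <= 0:
        raise ValueError("n_samples must be positive")
    # Samples consumed per optimizer step.
    effective_batch = per_device_batch_size * grad_accum_steps * n_devices
    return max_steps * effective_batch / n_samples


# Hypothetical batch sizes; the real value is not given in this card.
for batch_size in (1, 2, 4):
    print(batch_size, round(epochs_covered(1600, batch_size, 1, 3000), 2))
```

With a batch size of 1, 1,600 steps would cover only about half of the 3,000 samples, so the batch size matters when interpreting the step count.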

Testing Data, Factors & Metrics

Testing Data

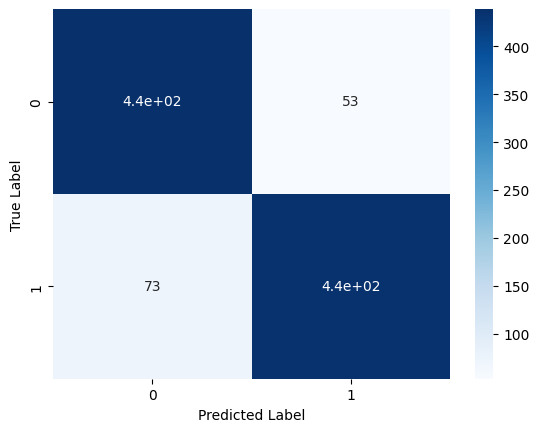

- The first 1,000 samples of the NSMC test split were used for evaluation

Metrics

| Llama2 accuracy | Midm accuracy | Precision | Recall |
|---|---|---|---|
| 0.874 | 0.9 | 0.891 | 0.856 |

- The fine-tuned Midm model reached higher accuracy (0.9) than the fine-tuned Llama 2 model (0.874)
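For reference, accuracy, precision, and recall for the binary labels can be computed as in the sketch below, treating '1' (positive) as the positive class. This is a generic implementation, not the evaluation script used for the numbers above, and `binary_metrics` is a hypothetical name.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, and recall with label 1 as the positive class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    total = len(pairs)
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        # Of all predicted positives, the fraction that were truly positive.
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        # Of all true positives, the fraction the model recovered.
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Toy example, not data from this card.
print(binary_metrics([1, 1, 0, 0, 1], [1, 0, 0, 1, 1]))
```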

Results

Summary

- To improve accuracy, 3,000 training samples were used instead of 2,000

- The number of training steps was also increased in the hope of reaching a lower loss

Training procedure

The following bitsandbytes quantization config was used during training:

- quant_method: bitsandbytes

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: False

- bnb_4bit_compute_dtype: bfloat16
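The settings listed above correspond to a `BitsAndBytesConfig` from `transformers`. The sketch below rebuilds that config and shows one way the adapter might be loaded on top of the 4-bit base model with `peft`. It assumes that `guguwon/hw-llama-2-7B-nsmc` is the adapter repository and that access to the base model has been granted; the function names are hypothetical.

```python
# Values copied from the quantization config listed above.
QUANT_KWARGS = {
    "load_in_8bit": False,
    "load_in_4bit": True,
    "llm_int8_threshold": 6.0,
    "llm_int8_skip_modules": None,
    "llm_int8_enable_fp32_cpu_offload": False,
    "llm_int8_has_fp16_weight": False,
    "bnb_4bit_quant_type": "nf4",
    "bnb_4bit_use_double_quant": False,
}

BASE_MODEL_ID = "meta-llama/Llama-2-7b-chat-hf"
ADAPTER_ID = "guguwon/hw-llama-2-7B-nsmc"  # assumption: the adapter lives in this repo


def build_quant_config():
    """Recreate the 4-bit NF4 config with bfloat16 compute."""
    # Heavy imports are kept inside the function so the constants can be inspected cheaply.
    import torch
    from transformers import BitsAndBytesConfig

    return BitsAndBytesConfig(bnb_4bit_compute_dtype=torch.bfloat16, **QUANT_KWARGS)


def load_model_with_adapter():
    """Load the quantized base model and attach the fine-tuned adapter (needs a GPU)."""
    from peft import PeftModel
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(BASE_MODEL_ID)
    base = AutoModelForCausalLM.from_pretrained(
        BASE_MODEL_ID,
        quantization_config=build_quant_config(),
        device_map="auto",
    )
    model = PeftModel.from_pretrained(base, ADAPTER_ID)
    model.eval()
    return tokenizer, model
```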

Framework versions

- PEFT 0.7.0


Model tree for guguwon/hw-llama-2-7B-nsmc

Base model

meta-llama/Llama-2-7b-chat-hf