| id | status | inserted_at | updated_at | _server_id | title | authors | filename | content | content_class.responses | content_class.responses.users | content_class.responses.status | content_class.suggestion | content_class.suggestion.agent | content_class.suggestion.score |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

e5d70009-4e7c-4c3b-bb5a-9fe338b229d1 | completed | 2025-01-16T03:08:53.170791 | 2025-01-19T18:58:44.899654 | d6c31fa9-7f3a-42f2-80a2-1a7ae7f2fccc | BERT 101 - State Of The Art NLP Model Explained | britneymuller | bert-101.md | # BERT 101 🤗 State Of The Art NLP Model Explained

<script async defer src="https://unpkg.com/medium-zoom-element@0/dist/medium-zoom-element.min.js"></script>

## What is BERT?

BERT, short for Bidirectional Encoder Representations from Transformers, is a Machine Learning (ML) model for natural language processing. It was developed in 2018 by researchers at Google AI Language and serves as a Swiss Army knife solution to 11+ of the most common language tasks, such as sentiment analysis and named entity recognition.

Language has historically been difficult for computers to ‘understand’. Sure, computers can collect, store, and read text inputs, but they lack basic language _context_.

So, along came Natural Language Processing (NLP): the field of artificial intelligence aiming for computers to read, analyze, interpret and derive meaning from text and spoken words. This practice combines linguistics, statistics, and Machine Learning to assist computers in ‘understanding’ human language.

Individual NLP tasks have traditionally been solved by individual models created for each specific task. That is, until— BERT!

BERT revolutionized the NLP space by solving 11+ of the most common NLP tasks (and doing so better than previous models), making it the jack of all NLP trades.

In this guide, you'll learn what BERT is, why it’s different, and how to get started using BERT:

1. [What is BERT used for?](#1-what-is-bert-used-for)

2. [How does BERT work?](#2-how-does-bert-work)

3. [BERT model size & architecture](#3-bert-model-size--architecture)

4. [BERT’s performance on common language tasks](#4-berts-performance-on-common-language-tasks)

5. [Environmental impact of deep learning](#5-enviornmental-impact-of-deep-learning)

6. [The open source power of BERT](#6-the-open-source-power-of-bert)

7. [How to get started using BERT](#7-how-to-get-started-using-bert)

8. [BERT FAQs](#8-bert-faqs)

9. [Conclusion](#9-conclusion)

Let's get started! 🚀

## 1. What is BERT used for?

BERT can be used on a wide variety of language tasks:

- Can determine how positive or negative a movie’s reviews are. [(Sentiment Analysis)](https://huggingface.co./blog/sentiment-analysis-python)

- Helps chatbots answer your questions. [(Question answering)](https://huggingface.co./tasks/question-answering)

- Predicts your text when writing an email (Gmail). [(Text prediction)](https://huggingface.co./tasks/fill-mask)

- Can write an article about any topic with just a few sentence inputs. [(Text generation)](https://huggingface.co./tasks/text-generation)

- Can quickly summarize long legal contracts. [(Summarization)](https://huggingface.co./tasks/summarization)

- Can differentiate words that have multiple meanings (like ‘bank’) based on the surrounding text. (Polysemy resolution)

**There are many more language/NLP tasks + more detail behind each of these.**

***Fun Fact:*** You interact with NLP (and likely BERT) almost every single day!

NLP is behind Google Translate, voice assistants (Alexa, Siri, etc.), chatbots, Google searches, voice-operated GPS, and more. | [

[

"llm",

"transformers",

"research",

"tutorial"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"llm",

"transformers",

"tutorial",

"research"

] | null | null |

7f8a87d1-04d0-417d-beed-386eab5df016 | completed | 2025-01-16T03:08:53.170803 | 2025-01-19T19:06:05.430221 | e1d155de-8231-4454-964e-26b90ac52015 | StackLLaMA: A hands-on guide to train LLaMA with RLHF | edbeeching, kashif, ybelkada, lewtun, lvwerra, nazneen, natolambert | stackllama.md | Models such as [ChatGPT](https://openai.com/blog/chatgpt), [GPT-4](https://openai.com/research/gpt-4), and [Claude](https://www.anthropic.com/index/introducing-claude) are powerful language models that have been fine-tuned using a method called Reinforcement Learning from Human Feedback (RLHF) to be better aligned with how we expect them to behave and would like to use them.

In this blog post, we show all the steps involved in training a [LLaMA model](https://ai.facebook.com/blog/large-language-model-llama-meta-ai) to answer questions on [Stack Exchange](https://stackexchange.com) with RLHF through a combination of:

- Supervised Fine-tuning (SFT)

- Reward / preference modeling (RM)

- Reinforcement Learning from Human Feedback (RLHF)

*From InstructGPT paper: Ouyang, Long, et al. "Training language models to follow instructions with human feedback." arXiv preprint arXiv:2203.02155 (2022).*

By combining these approaches, we are releasing the StackLLaMA model. This model is available on the [🤗 Hub](https://huggingface.co./trl-lib/llama-se-rl-peft) (see [Meta's LLaMA release](https://ai.facebook.com/blog/large-language-model-llama-meta-ai/) for the original LLaMA model) and [the entire training pipeline](https://huggingface.co./docs/trl/index) is available as part of the Hugging Face TRL library. To give you a taste of what the model can do, try out the demo below!

<script

type="module"

src="https://gradio.s3-us-west-2.amazonaws.com/3.23.0/gradio.js"></script>

<gradio-app theme_mode="light" src="https://trl-lib-stack-llama.hf.space"></gradio-app>

## The LLaMA model

When doing RLHF, it is important to start with a capable model: the RLHF step is only a fine-tuning step to align the model with how we want to interact with it and how we expect it to respond. Therefore, we choose to use the recently introduced and performant [LLaMA models](https://arxiv.org/abs/2302.13971). The LLaMA models are the latest large language models developed by Meta AI. They come in sizes ranging from 7B to 65B parameters and were trained on between 1T and 1.4T tokens, making them very capable. We use the 7B model as the base for all the following steps!

To access the model, use the [form](https://docs.google.com/forms/d/e/1FAIpQLSfqNECQnMkycAp2jP4Z9TFX0cGR4uf7b_fBxjY_OjhJILlKGA/viewform) from Meta AI.

## Stack Exchange dataset

Gathering human feedback is a complex and expensive endeavor. In order to bootstrap the process for this example while still building a useful model, we make use of the [StackExchange dataset](https://huggingface.co./datasets/HuggingFaceH4/stack-exchange-preferences). The dataset includes questions and their corresponding answers from the StackExchange platform (including StackOverflow for code and many other topics). It is attractive for this use case because the answers come together with the number of upvotes and a label for the accepted answer.

We follow the approach described in [Askell et al. 2021](https://arxiv.org/abs/2112.00861) and assign each answer a score:

`score = log2 (1 + upvotes) rounded to the nearest integer, plus 1 if the questioner accepted the answer (we assign a score of −1 if the number of upvotes is negative).`
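As a minimal sketch, the scoring rule above can be written as a small function. The function name and signature are our own for illustration, and we assume the accepted-answer bonus is not applied to answers with negative upvotes, which the formula leaves open.

```python
import math


def answer_score(upvotes: int, accepted: bool = False) -> int:
    """Score an answer following the rule quoted above (hypothetical helper)."""
    if upvotes < 0:
        # negative upvotes are mapped to a fixed score of -1
        # (assumption: no accepted-answer bonus in this case)
        return -1
    score = round(math.log2(1 + upvotes))
    if accepted:
        score += 1
    return score


print(answer_score(7))                  # log2(8) = 3
print(answer_score(7, accepted=True))   # 3 + 1 for the accepted answer
print(answer_score(-3))                 # negative upvotes
```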

For the reward model, we will always need two answers per question to compare, as we’ll see later. Some questions have dozens of answers, leading to many possible pairs. We sample at most ten answer pairs per question to limit the number of data points per question. Finally, we cleaned up formatting by converting HTML to Markdown to make the model’s outputs more readable. You can find the dataset as well as the processing notebook [here](https://huggingface.co./datasets/lvwerra/stack-exchange-paired).
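The pair sampling described above could look roughly like the sketch below. It is not the processing notebook's code: the helper name, the `(text, score)` input format, and the choice to skip pairs with equal scores are all assumptions made for illustration.

```python
import itertools
import random


def sample_answer_pairs(answers, max_pairs=10, seed=0):
    """Build (preferred, rejected) text pairs from a list of (text, score) tuples.

    Hypothetical helper: pairs with equal scores are skipped because they
    carry no preference signal (an assumption, not taken from the post).
    """
    rng = random.Random(seed)
    pairs = []
    for a, b in itertools.combinations(answers, 2):
        if a[1] == b[1]:
            continue
        preferred, rejected = (a, b) if a[1] > b[1] else (b, a)
        pairs.append((preferred[0], rejected[0]))
    # cap the number of pairs contributed by a single question
    if len(pairs) > max_pairs:
        pairs = rng.sample(pairs, max_pairs)
    return pairs


answers = [(f"answer {i}", i) for i in range(6)]  # 6 answers -> 15 possible pairs
pairs = sample_answer_pairs(answers)
print(len(pairs))
```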

## Efficient training strategies

Even training the smallest LLaMA model requires an enormous amount of memory. Some quick math: in bf16, every parameter uses 2 bytes (in fp32 4 bytes) in addition to 8 bytes used, e.g., in the Adam optimizer (see the [performance docs](https://huggingface.co./docs/transformers/perf_train_gpu_one#optimizer) in Transformers for more info). So a 7B parameter model would use `(2+8)*7B=70GB` just to fit in memory and would likely need more when you compute intermediate values such as attention scores. So you couldn’t train the model even on a single 80GB A100 like that. You can use some tricks, like more efficient optimizers or half-precision training, to squeeze a bit more into memory, but you’ll run out sooner or later.
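The quick math above can be reproduced with a few lines of Python. This is only a back-of-the-envelope sketch: the function name is ours, and it counts weights and optimizer state only, ignoring activations and other intermediate values.

```python
def training_memory_gb(n_params_billion, weight_bytes=2, optimizer_bytes=8):
    """Rough memory estimate in GB: weights plus optimizer state only.

    One billion parameters at one byte each is about 1 GB, so the
    estimate is simply (bytes per parameter) * (billions of parameters).
    """
    return n_params_billion * (weight_bytes + optimizer_bytes)


print(training_memory_gb(7))                  # bf16 weights + Adam state
print(training_memory_gb(7, weight_bytes=4))  # fp32 weights + Adam state
```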

Another option is to use Parameter-Efficient Fine-Tuning (PEFT) techniques, such as the [`peft`](https://github.com/huggingface/peft) library, which can perform Low-Rank Adaptation (LoRA) on a model loaded in 8-bit.

*Low-Rank Adaptation of linear layers: extra parameters (in orange) are added next to the frozen layer (in blue), and the resulting encoded hidden states are added together with the hidden states of the frozen layer.*

Loading the model in 8-bit reduces the memory footprint drastically since you only need one byte per parameter for the weights (e.g. 7B LLaMA is 7GB in memory). Instead of training the original weights directly, LoRA adds small adapter layers on top of some specific layers (usually the attention layers); thus, the number of trainable parameters is drastically reduced.

In this scenario, a rule of thumb is to allocate ~1.2-1.4GB per billion parameters (depending on the batch size and sequence length) to fit the entire fine-tuning setup. As detailed in the `peft` blog post linked below, this enables fine-tuning larger models (up to 50-60B scale models on an NVIDIA A100 80GB) at low cost.

These techniques have enabled fine-tuning large models on consumer devices and Google Colab. Notable demos are fine-tuning `facebook/opt-6.7b` (13GB in `float16`), and `openai/whisper-large` on Google Colab (15GB GPU RAM). To learn more about using `peft`, refer to our [GitHub repo](https://github.com/huggingface/peft) or the [previous blog post](https://huggingface.co./blog/trl-peft) on training 20B parameter models on consumer hardware.
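To get a feel for how much LoRA shrinks the number of trainable parameters, here is a small sketch for a single linear layer. The 4096 x 4096 layer size is used only as an illustrative example, and the helper name is ours; a rank-`r` adapter adds two matrices of shapes `r x d_in` and `d_out x r` next to the frozen weight.

```python
def lora_trainable_params(d_in, d_out, r):
    """Parameters added by a rank-r LoRA adapter on a d_in -> d_out linear layer."""
    # A has shape (r, d_in) and B has shape (d_out, r)
    return r * (d_in + d_out)


full_params = 4096 * 4096  # frozen weight matrix of the illustrative layer
lora_params = lora_trainable_params(4096, 4096, r=16)
print(lora_params, full_params, lora_params / full_params)
```

For this layer the adapter trains less than 1% of the parameters of the frozen weight matrix.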

Now we can fit very large models into a single GPU, but the training might still be very slow. The simplest strategy in this scenario is data parallelism: we replicate the same training setup into separate GPUs and pass different batches to each GPU. With this, you can parallelize the forward/backward passes of the model and scale with the number of GPUs.

We use either the `transformers.Trainer` or `accelerate`, which both support data parallelism without any code changes, by simply passing arguments when calling the scripts with `torchrun` or `accelerate launch`. The following runs a training script with 8 GPUs on a single machine with `accelerate` and `torchrun`, respectively.

```bash

accelerate launch --multi_gpu --num_machines 1 --num_processes 8 my_accelerate_script.py

torchrun --nnodes 1 --nproc_per_node 8 my_torch_script.py

```

## Supervised fine-tuning

Before we start training reward models and tuning our model with RL, it helps if the model is already good in the domain we are interested in. In our case, we want it to answer questions, while for other use cases, we might want it to follow instructions, in which case instruction tuning is a great idea. The easiest way to achieve this is by continuing to train the language model with the language modeling objective on texts from the domain or task. The [StackExchange dataset](https://huggingface.co./datasets/HuggingFaceH4/stack-exchange-preferences) is enormous (over 10 million instructions), so we can easily train the language model on a subset of it.

There is nothing special about fine-tuning the model before doing RLHF - it’s just the causal language modeling objective from pretraining that we apply here. To use the data efficiently, we use a technique called packing: instead of having one text per sample in the batch and then padding to either the longest text or the maximal context of the model, we concatenate a lot of texts with an EOS token in between and cut chunks of the context size to fill the batch without any padding.

With this approach the training is much more efficient, as each token that is passed through the model is also trained on, in contrast to padding tokens, which are usually masked from the loss. If you don't have much data and are more concerned about occasionally cutting off some tokens that overflow the context, you can also use a classical data loader.
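To make packing concrete, here is a minimal pure-Python sketch of the idea. It is not the library's implementation: the function name is hypothetical, it works on already tokenized texts, and it simply drops the incomplete chunk at the end.

```python
def pack_sequences(tokenized_texts, eos_token_id, seq_length):
    """Concatenate token lists with an EOS token in between and cut fixed-size chunks."""
    buffer = []
    for token_ids in tokenized_texts:
        buffer.extend(token_ids)
        buffer.append(eos_token_id)
    # keep only full chunks so that no padding is needed
    n_chunks = len(buffer) // seq_length
    return [buffer[i * seq_length:(i + 1) * seq_length] for i in range(n_chunks)]


texts = [[1, 2, 3], [4, 5], [6, 7, 8, 9]]
chunks = pack_sequences(texts, eos_token_id=0, seq_length=4)
print(chunks)  # [[1, 2, 3, 0], [4, 5, 0, 6], [7, 8, 9, 0]]
```

Note how the second chunk mixes the end of one text with the start of the next: every position holds a real token, at the cost of occasionally splitting a text across chunks.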

The packing is handled by the `ConstantLengthDataset` and we can then use the `Trainer` after loading the model with `peft`. First, we load the model in int8, prepare it for training, and then add the LoRA adapters.

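```python
from accelerate import Accelerator
from peft import LoraConfig, get_peft_model, prepare_model_for_int8_training
from transformers import AutoModelForCausalLM

# load the model in 8-bit on this process's GPU
# (`args` holds the script's parsed command-line arguments)
model = AutoModelForCausalLM.from_pretrained(
    args.model_path,
    load_in_8bit=True,
    device_map={"": Accelerator().local_process_index},
)
# prepare the quantized model for training
# (renamed `prepare_model_for_kbit_training` in later `peft` releases)
model = prepare_model_for_int8_training(model)

# add the LoRA adapters to the model
lora_config = LoraConfig(
    r=16,
    lora_alpha=32,
    lora_dropout=0.05,
    bias="none",
    task_type="CAUSAL_LM",
)
model = get_peft_model(model, lora_config)
```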

We train the model for a few thousand steps with the causal language modeling objective and save the model. Since we will tune the model again with different objectives, we merge the adapter weights with the original model weights.

**Disclaimer:** due to LLaMA's license, we release only the adapter weights for this and the model checkpoints in the following sections. You can apply for access to the base model's weights by filling out Meta AI's [form](https://docs.google.com/forms/d/e/1FAIpQLSfqNECQnMkycAp2jP4Z9TFX0cGR4uf7b_fBxjY_OjhJILlKGA/viewform) and then converting them to the 🤗 Transformers format by running this [script](https://github.com/huggingface/transformers/blob/main/src/transformers/models/llama/convert_llama_weights_to_hf.py). Note that you'll also need to install 🤗 Transformers from source until `v4.28` is released.

Now that we have fine-tuned the model for the task, we are ready to train a reward model.

## Reward modeling and human preferences

In principle, we could fine-tune the model using RLHF directly with the human annotations. However, this would require us to send some samples to humans for rating after each optimization iteration. This is expensive and slow due to the number of training samples needed for convergence and the inherent latency of human reading and annotator speed.

A trick that works well instead of direct feedback is training a reward model on human annotations collected before the RL loop. The goal of the reward model is to imitate how a human would rate a text. There are several possible strategies to build a reward model: the most straightforward way would be to predict the annotation (e.g. a rating score or a binary value for “good”/“bad”). In practice, what works better is to predict the ranking of two examples, where the reward model is presented with two candidates \\( (y_k, y_j) \\) for a given prompt \\( x \\) and has to predict which one would be rated higher by a human annotator.

This can be translated into the following loss function:

\\( \operatorname{loss}(\theta)=- E_{\left(x, y_j, y_k\right) \sim D}\left[\log \left(\sigma\left(r_\theta\left(x, y_j\right)-r_\theta\left(x, y_k\right)\right)\right)\right] \\)

where \\( r \\) is the model’s score and \\( y_j \\) is the preferred candidate.
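As a quick numeric illustration of this loss for a single pair, here is a small pure-Python sketch (the function name is ours). It uses the identity \\( -\log \sigma(d) = \log(1 + e^{-d}) \\), evaluated in a numerically stable way.

```python
import math


def pairwise_loss(reward_preferred, reward_rejected):
    """-log(sigmoid(r_j - r_k)) for one (preferred, rejected) pair."""
    diff = reward_preferred - reward_rejected
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    # same quantity rewritten to avoid overflow for very negative differences
    return -diff + math.log1p(math.exp(diff))


print(pairwise_loss(2.0, 0.0))  # small loss: preferred answer scored higher
print(pairwise_loss(0.0, 0.0))  # log(2): the model cannot tell the answers apart
print(pairwise_loss(0.0, 2.0))  # large loss: the ranking is inverted
```

The loss shrinks as the reward model scores the preferred answer increasingly higher than the rejected one, and grows roughly linearly when the ranking is inverted.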

With the StackExchange dataset, we can infer which of the two answers was preferred by the users based on the score. With that information and the loss defined above, we can then modify the `transformers.Trainer` by adding a custom loss function.

```python
from torch import nn
from transformers import Trainer


class RewardTrainer(Trainer):
    def compute_loss(self, model, inputs, return_outputs=False):
        # score the preferred (j) and the rejected (k) answer with the same model
        rewards_j = model(input_ids=inputs["input_ids_j"], attention_mask=inputs["attention_mask_j"])[0]
        rewards_k = model(input_ids=inputs["input_ids_k"], attention_mask=inputs["attention_mask_k"])[0]
        # pairwise ranking loss: -log(sigmoid(r_j - r_k)), averaged over the batch
        loss = -nn.functional.logsigmoid(rewards_j - rewards_k).mean()
        if return_outputs:
            return loss, {"rewards_j": rewards_j, "rewards_k": rewards_k}
        return loss
```

We utilize a subset of 100,000 candidate pairs and evaluate on a held-out set of 50,000. With a modest training batch size of 4, we train the LLaMA model using the LoRA `peft` adapter for a single epoch using the Adam optimizer with BF16 precision. Our LoRA configuration is:

```python
from peft import LoraConfig, TaskType

peft_config = LoraConfig(
    task_type=TaskType.SEQ_CLS,  # the reward model is a sequence classifier
    inference_mode=False,
    r=8,
    lora_alpha=32,
    lora_dropout=0.1,
)
```

The training is logged via [Weights & Biases](https://wandb.ai/krasul/huggingface/runs/wmd8rvq6?workspace=user-krasul) and took a few hours on 8 A100 GPUs using the 🤗 research cluster. The model achieves a final **accuracy of 67%**. Although this sounds like a low score, the task is also very hard, even for human annotators.
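For intuition, a pairwise accuracy like the one reported above can be computed as the fraction of pairs in which the preferred answer receives the higher reward. The sketch below assumes this definition; the helper name and the toy numbers are ours and are not taken from the training run.

```python
def pairwise_accuracy(rewards_preferred, rewards_rejected):
    """Fraction of pairs where the preferred answer gets the higher reward."""
    correct = sum(r_j > r_k for r_j, r_k in zip(rewards_preferred, rewards_rejected))
    return correct / len(rewards_preferred)


# toy rewards for three pairs: the second pair is ranked incorrectly
accuracy = pairwise_accuracy([1.0, 0.2, -0.5], [0.5, 0.4, -1.0])
print(accuracy)
```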

As detailed in the next section, the resulting adapter can be merged into the frozen model and saved for further downstream use.

## Reinforcement Learning from Human Feedback

With the fine-tuned language model and the reward model at hand, we are now ready to run the RL loop. It follows roughly three steps:

1. Generate responses from prompts

2. Rate the responses with the reward model

3. Run a reinforcement learning policy-optimization step with the ratings

The Query and Response prompts are templated as follows before being tokenized and passed to the model:

```bash

Question: <Query>

Answer: <Response>

```

The same template was used for SFT, RM and RLHF stages.
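In code, applying the template could look like the sketch below. The helper name is hypothetical, and the blank line between the question and the answer is an assumption about the exact separator.

```python
def format_prompt(query: str, response: str = "") -> str:
    """Fill the Question/Answer template; leave `response` empty for generation."""
    # assumption: question and answer are separated by a blank line
    return f"Question: {query}\n\nAnswer: {response}"


print(format_prompt("How do I reverse a list in Python?"))
```

During generation only the part up to `Answer: ` is passed to the model as the prompt, while for SFT and reward modeling the response is filled in as well.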

A common issue with training the language model with RL is that the model can learn to exploit the reward model by generating complete gibberish, which causes the reward model to assign high rewards. To balance this, we add a penalty to the reward: we keep a reference of the model that we don’t train and compare the new model’s generation to the reference one by computing the KL-divergence:

\\( \operatorname{R}(x, y)=\operatorname{r}(x, y)- \beta \operatorname{KL}(x, y) \\)

where \\( r \\) is the reward from the reward model and \\( \operatorname{KL}(x,y) \\) is the KL-divergence between the current policy and the reference model.
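The penalized reward can be sketched as follows. This is a simplification for illustration, not `trl`'s implementation: it estimates the KL term as the summed difference between the log-probabilities that the policy and the reference model assign to the generated tokens, and the function name and the value of `beta` are hypothetical.

```python
def penalized_reward(reward, policy_logprobs, ref_logprobs, beta=0.2):
    """R(x, y) = r(x, y) - beta * KL(x, y), with a sampled log-ratio KL estimate."""
    kl_estimate = sum(p - q for p, q in zip(policy_logprobs, ref_logprobs))
    return reward - beta * kl_estimate


# the policy assigns higher probability to its own tokens than the reference does,
# so the KL estimate is positive and the reward is reduced
print(penalized_reward(1.0, [-1.0, -2.0], [-1.5, -2.5], beta=0.2))
```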

Once more, we utilize `peft` for memory-efficient training, which offers an extra advantage in the RLHF context. Here, the reference model and policy share the same base, the SFT model, which we load in 8-bit and freeze during training. We exclusively optimize the policy's LoRA weights using PPO while sharing the base model's weights.

```python
import torch
from tqdm import tqdm

# `ppo_trainer`, `tokenizer`, `sentiment_pipe` and the kwargs are set up earlier in the script
for epoch, batch in tqdm(enumerate(ppo_trainer.dataloader)):
    question_tensors = batch["input_ids"]

    # sample from the policy and generate responses
    response_tensors = ppo_trainer.generate(
        question_tensors,
        return_prompt=False,
        length_sampler=output_length_sampler,
        **generation_kwargs,
    )
    batch["response"] = tokenizer.batch_decode(response_tensors, skip_special_tokens=True)

    # Compute the reward score (the reward model is called through `sentiment_pipe`)
    texts = [q + r for q, r in zip(batch["query"], batch["response"])]
    pipe_outputs = sentiment_pipe(texts, **sent_kwargs)
    rewards = [torch.tensor(output[0]["score"] - script_args.reward_baseline) for output in pipe_outputs]

    # Run PPO step
    stats = ppo_trainer.step(question_tensors, response_tensors, rewards)
    # Log stats to WandB
    ppo_trainer.log_stats(stats, batch, rewards)
```

We train for 20 hours on 3x8 A100-80GB GPUs, using the 🤗 research cluster, but you can also get decent results much quicker (e.g. after ~20h on 8 A100 GPUs). All the training statistics of the training run are available on [Weights & Biases](https://wandb.ai/lvwerra/trl/runs/ie2h4q8p).

*Per batch reward at each step during training. The model’s performance plateaus after around 1000 steps.*

So what can the model do after training? Let's have a look!

Although we shouldn't trust its advice on LLaMA matters just yet, the answer looks coherent and even provides a Google link. Let's have a look at some of the training challenges next.

## Challenges, instabilities and workarounds

Training LLMs with RL is not always plain sailing. The model we demo today is the result of many experiments, failed runs and hyper-parameter sweeps. Even then, the model is far from perfect. Here we will share a few of the observations and headaches we encountered on the way to making this example.

### Higher reward means better performance, right?

*Wow this run must be great, look at that sweet, sweet, reward!*

In general in RL, you want to achieve the highest reward. In RLHF we use a reward model, which is imperfect, and given the chance, the PPO algorithm will exploit these imperfections. This can manifest itself as sudden increases in reward; however, when we look at the text generations from the policy, they mostly contain repetitions of the string ```, as the reward model found that Stack Exchange answers containing blocks of code usually rank higher than ones without them. Fortunately, this issue was observed fairly rarely, and in general the KL penalty should counteract such exploits.

### KL is always a positive value, isn’t it?

As we previously mentioned, a KL penalty term is used to push the model’s outputs to remain close to those of the base policy. In general, KL divergence measures the distance between two distributions and is always a non-negative quantity. However, in `trl` we use an estimate of the KL which in expectation is equal to the real KL divergence.

\\( KL_{pen}(x,y) = \log \left(\pi_\phi^{\mathrm{RL}}(y \mid x) / \pi^{\mathrm{SFT}}(y \mid x)\right) \\)

Clearly, when a token is sampled from the policy which has a lower probability than the SFT model, this will lead to a negative KL penalty, but on average it will be positive; otherwise you wouldn't be properly sampling from the policy. However, some generation strategies can force some tokens to be generated, or some tokens can be suppressed. For example, when generating in batches, finished sequences are padded, and when setting a minimum length, the EOS token is suppressed. The model can assign very high or low probabilities to those tokens, which leads to negative KL. As the PPO algorithm optimizes for reward, it will chase after these negative penalties, leading to instabilities.

One needs to be careful when generating the responses, and we suggest always using a simple sampling strategy first before resorting to more sophisticated generation methods.
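A toy example makes this concrete. The two three-token distributions below are made up for illustration: the per-token estimate \\( \log(\pi^{\mathrm{RL}} / \pi^{\mathrm{SFT}}) \\) is negative for one token, yet its expectation under the policy (the true KL divergence) is positive. If the generation strategy forces that one token instead of sampling it, the average penalty turns negative.

```python
import math

# hypothetical next-token distributions over a three-token vocabulary
policy = {"a": 0.7, "b": 0.2, "c": 0.1}
reference = {"a": 0.5, "b": 0.4, "c": 0.1}

# per-token KL estimate: log ratio of policy and reference probabilities
per_token = {tok: math.log(policy[tok] / reference[tok]) for tok in policy}

# expectation under the policy: this is the true KL divergence
expected_kl = sum(policy[tok] * per_token[tok] for tok in policy)

# if generation always forces token "b", the average estimate is just per_token["b"]
forced_kl = per_token["b"]

print(per_token)
print(expected_kl)  # positive
print(forced_kl)    # negative
```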

### Ongoing issues

There are still a number of issues that we need to better understand and resolve. For example, there are occasionally spikes in the loss, which can lead to further instabilities.

As we identify and resolve these issues, we will upstream the changes to `trl`, to ensure the community can benefit.

## Conclusion

In this post, we went through the entire training cycle for RLHF, starting with preparing a dataset with human annotations, adapting the language model to the domain, training a reward model, and finally training a model with RL.

By using `peft`, anyone can run our example on a single GPU! If training is too slow, you can use data parallelism with no code changes and scale training by adding more GPUs.

For a real use case, this is just the first step! Once you have a trained model, you must evaluate it and compare it against other models to see how good it is. This can be done by ranking generations of different model versions, similar to how we built the reward dataset.

Once you add the evaluation step, the fun begins: you can start iterating on your dataset and model training setup to see if there are ways to improve the model. You could add other datasets to the mix or apply better filters to the existing one. On the other hand, you could try different model sizes and architectures for the reward model or train for longer.

We are actively improving TRL to make all steps involved in RLHF more accessible and are excited to see the things people build with it! Check out the [issues on GitHub](https://github.com/lvwerra/trl/issues) if you're interested in contributing.

## Citation

```bibtex

@misc {beeching2023stackllama,

author = { Edward Beeching and

Younes Belkada and

Kashif Rasul and

Lewis Tunstall and

Leandro von Werra and

Nazneen Rajani and

Nathan Lambert

},

title = { StackLLaMA: An RL Fine-tuned LLaMA Model for Stack Exchange Question and Answering },

year = 2023,

url = { https://huggingface.co./blog/stackllama },

doi = { 10.57967/hf/0513 },

publisher = { Hugging Face Blog }

}

```

## Acknowledgements

We thank Philipp Schmid for sharing his wonderful [demo](https://huggingface.co./spaces/philschmid/igel-playground) of streaming text generation upon which our demo was based. We also thank Omar Sanseviero and Louis Castricato for giving valuable and detailed feedback on the draft of the blog post. | [

[

"llm",

"implementation",

"tutorial",

"fine_tuning"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"llm",

"fine_tuning",

"tutorial",

"implementation"

] | null | null |

c88a19d7-c645-40d5-a297-9264ec53d213 | completed | 2025-01-16T03:08:53.170809 | 2025-01-16T03:12:40.426634 | e4403500-5442-4c60-b687-c753e9ee196f | Introducing SafeCoder | jeffboudier, philschmid | safecoder.md | Today we are excited to announce SafeCoder - a code assistant solution built for the enterprise.

The goal of SafeCoder is to unlock software development productivity for the enterprise, with a fully compliant and self-hosted pair programmer. In marketing speak: “your own on-prem GitHub copilot”.

Before we dive deeper, here’s what you need to know:

- SafeCoder is not a model, but a complete end-to-end commercial solution

- SafeCoder is built with security and privacy as core principles - code never leaves the VPC during training or inference

- SafeCoder is designed for self-hosting by the customer on their own infrastructure

- SafeCoder is designed for customers to own their own Code Large Language Model

## Why SafeCoder?

Code assistant solutions built upon LLMs, such as GitHub Copilot, are delivering strong [productivity boosts](https://github.blog/2022-09-07-research-quantifying-github-copilots-impact-on-developer-productivity-and-happiness/). For the enterprise, the ability to tune Code LLMs on the company code base to create proprietary Code LLMs improves reliability and relevance of completions to create another level of productivity boost. For instance, Google's internal LLM code assistant reports a completion [acceptance rate of 25-34%](https://ai.googleblog.com/2022/07/ml-enhanced-code-completion-improves.html) by being trained on an internal code base.

However, relying on closed-source Code LLMs to create internal code assistants exposes companies to compliance and security issues. First during training, as fine-tuning a closed-source Code LLM on an internal codebase requires exposing this codebase to a third party. And then during inference, as fine-tuned Code LLMs are likely to “leak” code from their training dataset during inference. To meet compliance requirements, enterprises need to deploy fine-tuned Code LLMs within their own infrastructure - which is not possible with closed source LLMs.

With SafeCoder, Hugging Face will help customers build their own Code LLMs, fine-tuned on their proprietary codebase, using state of the art open models and libraries, without sharing their code with Hugging Face or any other third party. With SafeCoder, Hugging Face delivers a containerized, hardware-accelerated Code LLM inference solution, to be deployed by the customer directly within the customer's secure infrastructure, without code inputs and completions leaving their secure IT environment.

## From StarCoder to SafeCoder

At the core of the SafeCoder solution is the [StarCoder](https://huggingface.co./bigcode/starcoder) family of Code LLMs, created by the [BigCode](https://huggingface.co./bigcode) project, a collaboration between Hugging Face, ServiceNow and the open source community.

The StarCoder models offer unique characteristics ideally suited to enterprise self-hosted solutions:

- State of the art code completion results - see benchmarks in the [paper](https://huggingface.co./papers/2305.06161) and [multilingual code evaluation leaderboard](https://huggingface.co./spaces/bigcode/multilingual-code-evals)

- Designed for inference performance: a 15B-parameter model with code optimizations, Multi-Query Attention for reduced memory footprint, and Flash Attention to scale to 8,192 tokens context.

- Trained on [the Stack](https://huggingface.co./datasets/bigcode/the-stack), an ethically sourced, open source code dataset containing only commercially permissible licensed code, with a developer opt-out mechanism from the get-go, refined through intensive PII removal and deduplication efforts.

Note: While StarCoder is the inspiration and model powering the initial version of SafeCoder, an important benefit of building an LLM solution upon open source models is that it can adapt to the latest and greatest open source models available. In the future, SafeCoder may offer other similarly commercially permissible open source models built upon ethically sourced and transparent datasets as the base LLM available for fine-tuning.

## Privacy and Security as a Core Principle

For any company, the internal codebase is some of its most important and valuable intellectual property. A core principle of SafeCoder is that the customer's internal codebase will never be accessible to any third party (including Hugging Face) during training or inference.

In the initial setup phase of SafeCoder, the Hugging Face team provides containers, scripts and examples to work hand in hand with the customer to select, extract, prepare, deduplicate and deidentify internal codebase data into a training dataset to be used in a Hugging Face provided training container configured to the hardware infrastructure available to the customer.

In the deployment phase of SafeCoder, the customer deploys containers provided by Hugging Face on their own infrastructure to expose internal private endpoints within their VPC. These containers are configured to the exact hardware configuration available to the customer, including NVIDIA GPUs, AMD Instinct GPUs, Intel Xeon CPUs, AWS Inferentia2 or Habana Gaudi accelerators.

## Compliance as a Core Principle

As the regulation framework around machine learning models and datasets is still being written across the world, global companies need to make sure the solutions they use minimize legal risks.

Data sources, data governance, and management of copyrighted data are just a few of the most important compliance areas to consider. BigScience, the older cousin and inspiration for BigCode, addressed these areas in working groups before they were broadly recognized by the draft EU AI Act, and as a result was [graded as most compliant among Foundational Model Providers in a Stanford CRFM study](https://crfm.stanford.edu/2023/06/15/eu-ai-act.html).

BigCode expanded upon this work by implementing novel techniques for the code domain and building The Stack with compliance as a core principle, such as commercially permissible license filtering, consent mechanisms (developers can [easily find out if their code is present and request to be opted out](https://huggingface.co./spaces/bigcode/in-the-stack) of the dataset), and extensive documentation and tools to inspect the [source data](https://huggingface.co./datasets/bigcode/the-stack-metadata), and dataset improvements (such as [deduplication](https://huggingface.co./blog/dedup) and [PII removal](https://huggingface.co./bigcode/starpii)).

All these efforts translate into legal risk minimization for users of the StarCoder models and customers of SafeCoder. For SafeCoder users, they also translate into compliance features: when software developers get code completions, these suggestions are checked against The Stack, so users know whether the suggested code matches existing code in the source dataset and what its license is. Customers can specify which licenses are preferred and surface those preferences to their users.

## How does it work?

SafeCoder is a complete commercial solution, including service, software and support.

### Training your own SafeCoder model

StarCoder was trained on more than 80 programming languages and offers state-of-the-art performance on [multiple benchmarks](https://huggingface.co./spaces/bigcode/multilingual-code-evals). To offer better code suggestions specifically for a SafeCoder customer, we start the engagement with an optional training phase, where the Hugging Face team works directly with the customer team to guide them through the steps to prepare and build a training code dataset, and to create their own code generation model through fine-tuning, without ever exposing their codebase to third parties or the internet.

The end result is a model that is adapted to the code languages, standards and practices of the customer. Through this process, SafeCoder customers learn the process and build a pipeline for creating and updating their own models, ensuring no vendor lock-in, and keeping control of their AI capabilities.

### Deploying SafeCoder

During the setup phase, SafeCoder customers and Hugging Face design and provision the optimal infrastructure to support the required concurrency to offer a great developer experience. Hugging Face then builds SafeCoder inference containers that are hardware-accelerated and optimized for throughput, to be deployed by the customer on their own infrastructure.

SafeCoder inference supports various hardware to give customers a wide range of options: NVIDIA Ampere GPUs, AMD Instinct GPUs, Habana Gaudi2, AWS Inferentia 2, Intel Xeon Sapphire Rapids CPUs and more.

### Using SafeCoder

Once SafeCoder is deployed and its endpoints are live within the customer VPC, developers can install compatible SafeCoder IDE plugins to get code suggestions as they work. Today, SafeCoder supports popular IDEs, including [VSCode](https://marketplace.visualstudio.com/items?itemName=HuggingFace.huggingface-vscode) and IntelliJ, with more plugins coming from our partners.

## How can I get SafeCoder?

Today, we are announcing SafeCoder in collaboration with VMware at the VMware Explore conference and making SafeCoder available to VMware enterprise customers. Working with VMware helps ensure the deployment of SafeCoder on customers’ VMware Cloud infrastructure is successful – whichever cloud, on-premises or hybrid infrastructure scenario is preferred by the customer. In addition to utilizing SafeCoder, VMware has published a [reference architecture](https://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/docs/vmware-baseline-reference-architecture-for-generative-ai.pdf) with code samples to enable the fastest possible time-to-value when deploying and operating SafeCoder on VMware infrastructure. VMware’s Private AI Reference Architecture makes it easy for organizations to quickly leverage popular open source projects such as ray and kubeflow to deploy AI services adjacent to their private datasets, while working with Hugging Face to ensure that organizations maintain the flexibility to take advantage of the latest and greatest in open-source models. This is all without tradeoffs in total cost of ownership or performance.

“Our collaboration with Hugging Face around SafeCoder fully aligns to VMware’s goal of enabling customer choice of solutions while maintaining privacy and control of their business data. In fact, we have been running SafeCoder internally for months and have seen excellent results. Best of all, our collaboration with Hugging Face is just getting started, and I’m excited to take our solution to our hundreds of thousands of customers worldwide,” says Chris Wolf, Vice President of VMware AI Labs. Learn more about private AI and VMware’s differentiation in this emerging space [here](https://octo.vmware.com/vmware-private-ai-foundation/). | [

[

"llm",

"mlops",

"security",

"deployment"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"llm",

"mlops",

"security",

"deployment"

] | null | null |

e2169c0b-64d3-413e-8e51-3024a8717f66 | completed | 2025-01-16T03:08:53.170813 | 2025-01-19T18:49:04.030751 | 2be8c7af-3113-44ee-9f03-c01041147421 | What's new in Diffusers? 🎨 | osanseviero | diffusers-2nd-month.md | A month and a half ago we released `diffusers`, a library that provides a modular toolbox for diffusion models across modalities. A couple of weeks later, we released support for Stable Diffusion, a high quality text-to-image model, with a free demo for anyone to try out. Apart from burning lots of GPUs, in the last three weeks the team has decided to add one or two new features to the library that we hope the community enjoys! This blog post gives a high-level overview of the new features in `diffusers` version 0.3! Remember to give a ⭐ to the [GitHub repository](https://github.com/huggingface/diffusers).

- [Image to Image pipelines](#image-to-image-pipeline)

- [Textual Inversion](#textual-inversion)

- [Inpainting](#experimental-inpainting-pipeline)

- [Optimizations for Smaller GPUs](#optimizations-for-smaller-gpus)

- [Run on Mac](#diffusers-in-mac-os)

- [ONNX Exporter](#experimental-onnx-exporter-and-pipeline)

- [New docs](#new-docs)

- [Community](#community)

- [Generate videos with SD latent space](#stable-diffusion-videos)

- [Model Explainability](#diffusers-interpret)

- [Japanese Stable Diffusion](#japanese-stable-diffusion)

- [High quality fine-tuned model](#waifu-diffusion)

- [Cross Attention Control with Stable Diffusion](#cross-attention-control)

- [Reusable seeds](#reusable-seeds)

## Image to Image pipeline

One of the most requested features was to have image to image generation. This pipeline allows you to input an image and a prompt, and it will generate an image based on that!

Let's see some code based on the official Colab [notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/image_2_image_using_diffusers.ipynb).

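```python
import torch
from diffusers import StableDiffusionImg2ImgPipeline

pipe = StableDiffusionImg2ImgPipeline.from_pretrained(
    "CompVis/stable-diffusion-v1-4",
    revision="fp16",
    torch_dtype=torch.float16,
    use_auth_token=True
).to("cuda")  # the fp16 weights are meant to run on a GPU

# Download an initial image
# ...

# `preprocess` and `init_img` are defined in the Colab notebook linked above
init_image = preprocess(init_img)

# Seeded generator so that the result is reproducible
generator = torch.Generator(device="cuda").manual_seed(1024)

prompt = "A fantasy landscape, trending on artstation"
images = pipe(prompt=prompt, init_image=init_image, strength=0.75, guidance_scale=7.5, generator=generator).images
```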

Don't have time for code? No worries, we also created a [Space demo](https://huggingface.co./spaces/huggingface/diffuse-the-rest) where you can try it out directly

## Textual Inversion

Textual Inversion lets you personalize a Stable Diffusion model on your own images with just 3-5 samples. With this tool, you can train a model on a concept, and then share the concept with the rest of the community!

In just a couple of days, the community shared over 200 concepts! Check them out!

* [Organization](https://huggingface.co./sd-concepts-library) with the concepts.

* [Navigator Colab](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_diffusion_textual_inversion_library_navigator.ipynb): Browse visually and use over 150 concepts created by the community.

* [Training Colab](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_textual_inversion_training.ipynb): Teach Stable Diffusion a new concept and share it with the rest of the community.

* [Inference Colab](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_conceptualizer_inference.ipynb): Run Stable Diffusion with the learned concepts.

## Experimental inpainting pipeline

Inpainting lets you provide an image, select an area in it (or provide a mask), and use Stable Diffusion to replace the masked area. Here is an example:

<figure class="image table text-center m-0 w-full">

<img src="https://huggingface.co./datasets/huggingface/documentation-images/resolve/main/blog/diffusers-2nd-month/inpainting.png" alt="Example inpaint of owl being generated from an initial image and a prompt"/>

</figure>

You can try out a minimal Colab [notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/in_painting_with_stable_diffusion_using_diffusers.ipynb) or check out the code below. A demo is coming soon!

```python
import torch
from diffusers import StableDiffusionInpaintPipeline

device = "cuda"

pipe = StableDiffusionInpaintPipeline.from_pretrained(
    "CompVis/stable-diffusion-v1-4",
    revision="fp16",
    torch_dtype=torch.float16,
    use_auth_token=True
).to(device)

# `init_image` and `mask_image` are images prepared beforehand (see the notebook)
images = pipe(
    prompt=["a cat sitting on a bench"] * 3,
    init_image=init_image,
    mask_image=mask_image,
    strength=0.75,
    guidance_scale=7.5,
    generator=None
).images
```

Please note this is experimental, so there is room for improvement.

## Optimizations for smaller GPUs

After some improvements, the diffusion models can take much less VRAM. 🔥 For example, Stable Diffusion only takes 3.2GB! This yields the exact same results at the cost of about 10% of the speed. Here is how to use these optimizations:

```python
import torch
from diffusers import StableDiffusionPipeline

pipe = StableDiffusionPipeline.from_pretrained(
    "CompVis/stable-diffusion-v1-4",
    revision="fp16",
    torch_dtype=torch.float16,
    use_auth_token=True
)
pipe = pipe.to("cuda")

# Compute attention in slices to reduce peak memory usage
pipe.enable_attention_slicing()
```

This is super exciting, as it lowers the barrier to using these models even further!
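To make the idea more concrete, here is a minimal pure-Python sketch of why slicing attention does not change the output: each attention head is computed independently, so the heads can be processed one slice at a time instead of all together, and only the current slice's score matrices need to be held in memory. This illustrates the general technique under that assumption; it is not the `diffusers` implementation, and `attend`, `attention_all_heads` and `attention_sliced` are hypothetical names.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attend(q, k, v):
    """Scaled dot-product attention for a single head (lists of vectors)."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        scores = softmax([scale * sum(a * b for a, b in zip(qi, kj)) for kj in k])
        out.append([sum(s * vj[d] for s, vj in zip(scores, v)) for d in range(len(v[0]))])
    return out


def attention_all_heads(heads):
    """Process every head in one go."""
    return [attend(q, k, v) for q, k, v in heads]


def attention_sliced(heads, slice_size=1):
    """Process the heads slice by slice; the math per head is unchanged."""
    outputs = []
    for start in range(0, len(heads), slice_size):
        chunk = heads[start:start + slice_size]
        outputs.extend(attend(q, k, v) for q, k, v in chunk)
    return outputs


# Two tiny, made-up heads: (queries, keys, values)
heads = [
    ([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]], [[1.0, 2.0], [3.0, 4.0]]),
    ([[0.5, 0.5], [1.0, -1.0]], [[0.0, 1.0], [1.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]),
]
print(attention_all_heads(heads) == attention_sliced(heads, slice_size=1))
```

The trade-off is the one described above: slices are processed sequentially, so peak memory drops while total time goes up somewhat.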

## Diffusers in Mac OS

🍎 That's right! Another widely requested feature was just released! Read the full instructions in the [official docs](https://huggingface.co./docs/diffusers/optimization/mps) (including performance comparisons, specs, and more).

Using the PyTorch `mps` device, people with M1/M2 hardware can run inference with Stable Diffusion. 🤯 This requires minimal setup for users. Try it out!

```python

from diffusers import StableDiffusionPipeline

pipe = StableDiffusionPipeline.from_pretrained("CompVis/stable-diffusion-v1-4", use_auth_token=True)

pipe = pipe.to("mps")

prompt = "a photo of an astronaut riding a horse on mars"

image = pipe(prompt).images[0]

```

## Experimental ONNX exporter and pipeline

The new experimental pipeline allows users to run Stable Diffusion on any hardware that supports ONNX. Here is an example of how to use it (note that the `onnx` revision is being used):

```python

from diffusers import StableDiffusionOnnxPipeline

pipe = StableDiffusionOnnxPipeline.from_pretrained(

"CompVis/stable-diffusion-v1-4",

revision="onnx",

provider="CPUExecutionProvider",

use_auth_token=True,

)

prompt = "a photo of an astronaut riding a horse on mars"

image = pipe(prompt).images[0]

```

Alternatively, you can also convert your SD checkpoints to ONNX directly with the exporter script.

```

python scripts/convert_stable_diffusion_checkpoint_to_onnx.py --model_path="CompVis/stable-diffusion-v1-4" --output_path="./stable_diffusion_onnx"

```

## New docs

All of the previous features are very cool. As maintainers of open-source libraries, we know about the importance of high quality documentation to make it as easy as possible for anyone to try out the library.

💅 Because of this, we did a Docs sprint and we're very excited to do a first release of our [documentation](https://huggingface.co./docs/diffusers/v0.3.0/en/index). This is a first version, so there are many things we plan to add (and contributions are always welcome!).

Some highlights of the docs:

* Techniques for [optimization](https://huggingface.co./docs/diffusers/optimization/fp16)

* The [training overview](https://huggingface.co./docs/diffusers/training/overview)

* A [contributing guide](https://huggingface.co./docs/diffusers/conceptual/contribution)

* In-depth API docs for [schedulers](https://huggingface.co./docs/diffusers/api/schedulers)

* In-depth API docs for [pipelines](https://huggingface.co./docs/diffusers/api/pipelines/overview)

## Community

And while we were doing all of the above, the community did not stay idle! Here are some highlights (by no means exhaustive) of what has been done out there:

### Stable Diffusion Videos

Create 🔥 videos with Stable Diffusion by exploring the latent space and morphing between text prompts. You can:

* Dream different versions of the same prompt

* Morph between different prompts

The [Stable Diffusion Videos](https://github.com/nateraw/stable-diffusion-videos) tool is pip-installable, comes with a Colab notebook and a Gradio notebook, and is super easy to use!

Here is an example:

```python

from stable_diffusion_videos import walk

video_path = walk(['a cat', 'a dog'], [42, 1337], num_steps=3, make_video=True)

```
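Morphing between prompts comes down to interpolating between two points in latent space and generating a frame at each intermediate point. The sketch below shows spherical linear interpolation (slerp), a common choice for interpolating Gaussian noise vectors. Whether the tool uses exactly this scheme is an assumption, and `slerp` here is a hypothetical helper written for illustration, not part of its API.

```python
import math


def slerp(t, v0, v1):
    """Spherical linear interpolation between two vectors, with t in [0, 1]."""
    dot = sum(a * b for a, b in zip(v0, v1))
    norm0 = math.sqrt(sum(a * a for a in v0))
    norm1 = math.sqrt(sum(b * b for b in v1))
    cos_omega = max(-1.0, min(1.0, dot / (norm0 * norm1)))
    omega = math.acos(cos_omega)
    if omega < 1e-6:
        # Nearly parallel vectors: fall back to plain linear interpolation
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    sin_omega = math.sin(omega)
    w0 = math.sin((1 - t) * omega) / sin_omega
    w1 = math.sin(t * omega) / sin_omega
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]


# Three intermediate "frames" between two made-up 2D latents
start, end = [1.0, 0.0], [0.0, 1.0]
for step in range(1, 4):
    print(slerp(step / 4, start, end))
```

Unlike plain linear interpolation, slerp keeps the norm of the intermediate vectors constant when both endpoints have the same norm, which is useful when a model expects noise with a particular magnitude.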

### Diffusers Interpret

[Diffusers interpret](https://github.com/JoaoLages/diffusers-interpret) is an explainability tool built on top of `diffusers`. It has cool features such as:

* See all the images in the diffusion process

* Analyze how each token in the prompt influences the generation

* Analyze within specified bounding boxes if you want to understand a part of the image

(Image from the tool repository)

```python
from diffusers_interpret import StableDiffusionPipelineExplainer

# pass pipeline to the explainer class
# (`pipe` is a StableDiffusionPipeline loaded as in the examples above)
explainer = StableDiffusionPipelineExplainer(pipe)

# generate an image with `explainer`
prompt = "Corgi with the Eiffel Tower"
output = explainer(
    prompt,
    num_inference_steps=15
)

output.normalized_token_attributions  # (token, attribution_percentage)
# [('corgi', 40),
#  ('with', 5),
#  ('the', 5),
#  ('eiffel', 25),
#  ('tower', 25)]
```

### Japanese Stable Diffusion

The name says it all! The goal of JSD was to train a model that also captures information about Japanese culture, identity and unique expressions. It was trained with 100 million images with Japanese captions. You can read more about how the model was trained in the [model card](https://huggingface.co./rinna/japanese-stable-diffusion).

### Waifu Diffusion

[Waifu Diffusion](https://huggingface.co./hakurei/waifu-diffusion) is a fine-tuned SD model for high-quality anime image generation.

<figure class="image table text-center m-0 w-full">

<img src="https://huggingface.co./datasets/huggingface/documentation-images/resolve/main/blog/diffusers-2nd-month/waifu.png" alt="Images of high quality anime"/>

</figure>

(Image from the tool repository)

### Cross Attention Control

[Cross Attention Control](https://github.com/bloc97/CrossAttentionControl) allows fine control of the prompts by modifying the attention maps of the diffusion models. Some cool things you can do:

* Replace a target in the prompt (e.g. replace cat by dog)

* Reduce or increase the importance of words in the prompt (e.g. if you want less attention to be given to "rocks")

* Easily inject styles

And much more! Check out the repo.

### Reusable Seeds

One of the most impressive early demos of Stable Diffusion was the reuse of seeds to tweak images. The idea is to take the seed of an image of interest and use it to generate a new image with a different prompt. This yields some cool results! Check out the [Colab](https://colab.research.google.com/github/pcuenca/diffusers-examples/blob/main/notebooks/stable-diffusion-seeds.ipynb).
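The reason this works is that a seed fully determines the initial random noise the generation starts from. The sketch below illustrates this with Python's standard `random` module as a stand-in for the pipeline's random generator; `initial_noise` is a hypothetical helper, not a `diffusers` function.

```python
import random


def initial_noise(seed, size=4):
    """Draw `size` Gaussian samples from a generator seeded with `seed`."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


first = initial_noise(42)
second = initial_noise(42)   # same seed: identical starting noise
other = initial_noise(43)    # different seed: different starting noise

print(first == second)
print(first == other)
```

With the starting noise held fixed, changing only the prompt tends to preserve the overall composition of the image while changing its content.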

## Thanks for reading!

I hope you enjoyed reading this! Remember to give a star to our [GitHub Repository](https://github.com/huggingface/diffusers) and join the [Hugging Face Discord Server](https://hf.co/join/discord), where we have a category of channels just for diffusion models. The latest news about the library is shared there!

Feel free to open issues with feature requests and bug reports! Everything that has been achieved couldn't have been done without such an amazing community. | [

[

"computer_vision",

"optimization",

"tools",

"image_generation"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"computer_vision",

"image_generation",

"tools",

"optimization"

] | null | null |

32908790-7e76-4203-ab96-4142f7d282dd | completed | 2025-01-16T03:08:53.170818 | 2025-01-19T18:52:31.757045 | 31a1dd64-f9d3-4521-82bd-ca3c4800f829 | Optimum-NVIDIA Unlocking blazingly fast LLM inference in just 1 line of code | laikh-nvidia, mfuntowicz | optimum-nvidia.md | Large Language Models (LLMs) have revolutionized natural language processing and are increasingly deployed to solve complex problems at scale. Achieving optimal performance with these models is notoriously challenging due to their unique and intense computational demands. Optimized performance of LLMs is incredibly valuable for end users looking for a snappy and responsive experience, as well as for scaled deployments where improved throughput translates to dollars saved.

That's where the [Optimum-NVIDIA](https://github.com/huggingface/optimum-nvidia) inference library comes in. Available on Hugging Face, Optimum-NVIDIA dramatically accelerates LLM inference on the NVIDIA platform through an extremely simple API.

By changing **just a single line of code**, you can unlock up to **28x faster inference and 1,200 tokens/second** on the NVIDIA platform.

Optimum-NVIDIA is the first Hugging Face inference library to benefit from the new `float8` format supported on the NVIDIA Ada Lovelace and Hopper architectures.

FP8, in addition to the advanced compilation capabilities of [NVIDIA TensorRT-LLM](https://developer.nvidia.com/blog/nvidia-tensorrt-llm-supercharges-large-language-model-inference-on-nvidia-h100-gpus/) software, dramatically accelerates LLM inference.

### How to Run

You can start running LLaMA with blazingly fast inference speeds in just 3 lines of code with a pipeline from Optimum-NVIDIA.

If you already set up a pipeline from Hugging Face’s transformers library to run LLaMA, you just need to modify a single line of code to unlock peak performance!

```diff

- from transformers.pipelines import pipeline

+ from optimum.nvidia.pipelines import pipeline

# everything else is the same as in transformers!

pipe = pipeline('text-generation', 'meta-llama/Llama-2-7b-chat-hf', use_fp8=True)

pipe("Describe a real-world application of AI in sustainable energy.")

```

You can also enable FP8 quantization with a single flag, which allows you to run a bigger model on a single GPU at faster speeds and without sacrificing accuracy.

The flag shown in this example uses a predefined calibration strategy by default, though you can provide your own calibration dataset and customized tokenization to tailor the quantization to your use case.

The pipeline interface is great for getting up and running quickly, but power users who want fine-grained control over setting sampling parameters can use the Model API.

```diff

- from transformers import AutoModelForCausalLM

+ from optimum.nvidia import AutoModelForCausalLM

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("meta-llama/Llama-2-13b-chat-hf", padding_side="left")

model = AutoModelForCausalLM.from_pretrained(

"meta-llama/Llama-2-13b-chat-hf",

+ use_fp8=True,

)

model_inputs = tokenizer(

["How is autonomous vehicle technology transforming the future of transportation and urban planning?"],

return_tensors="pt"

).to("cuda")

generated_ids, generated_length = model.generate(

**model_inputs,

top_k=40,

top_p=0.7,

repetition_penalty=10,

)

tokenizer.batch_decode(generated_ids[0], skip_special_tokens=True)

```

For more details, check out our [documentation](https://github.com/huggingface/optimum-nvidia).

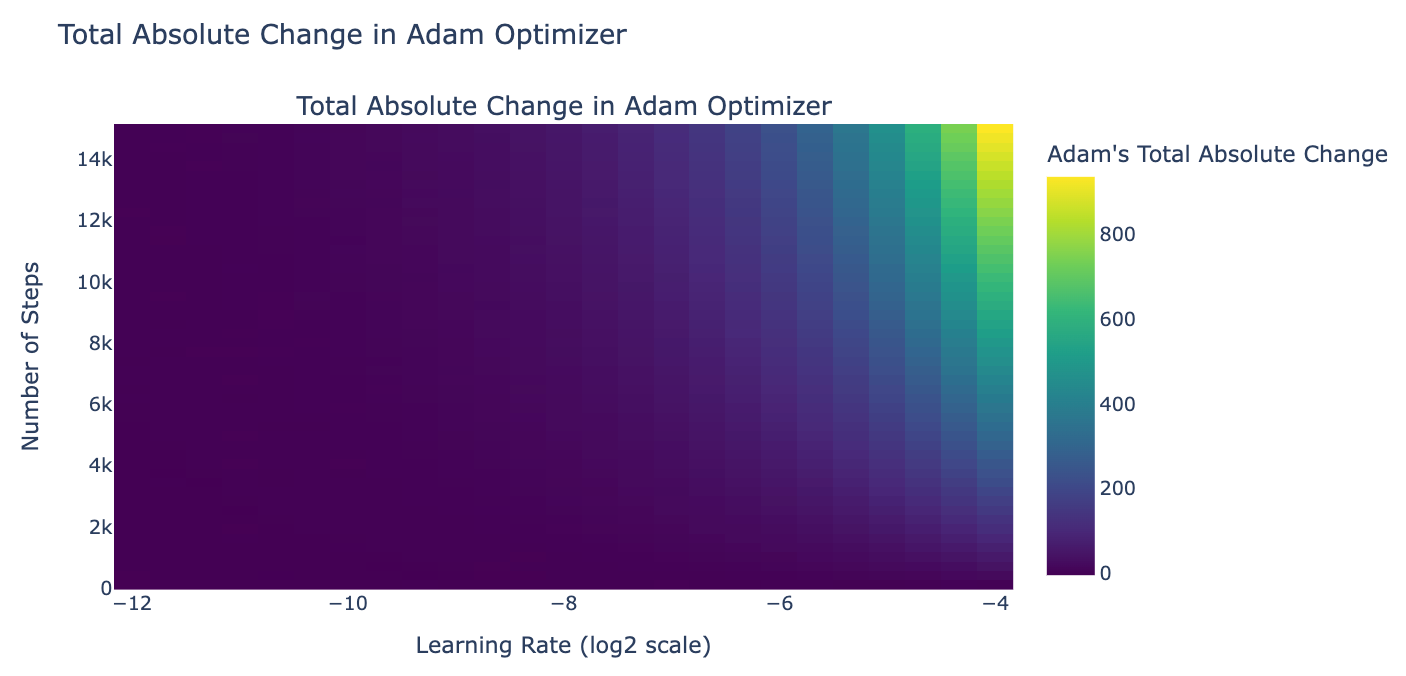

### Performance Evaluation

When evaluating the performance of an LLM, we consider two metrics: First Token Latency and Throughput.

First Token Latency (also known as Time to First Token or prefill latency) measures how long you wait from the time you enter your prompt to the time you begin receiving your output, so this metric can tell you how responsive the model will feel.

Optimum-NVIDIA delivers up to 3.3x faster First Token Latency compared to stock transformers:

<br>

<figure class="image">

<img alt="" src="assets/optimum_nvidia/first_token_latency.svg" />

<figcaption>Figure 1. Time it takes to generate the first token (ms)</figcaption>

</figure>

<br>

Throughput, on the other hand, measures how fast the model can generate tokens and is particularly relevant when you want to batch generations together.

While there are a few ways to calculate throughput, we adopted a standard method: dividing the total sequence length, including both input and output tokens summed over all batches, by the end-to-end latency.
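As a small worked example, throughput in tokens per second is the total number of input and output tokens across all sequences divided by the end-to-end latency in seconds. The function name and the numbers below are hypothetical and only illustrate the arithmetic; they are not benchmark results.

```python
def throughput_tokens_per_second(sequences, latency_seconds):
    """Compute throughput from (input_tokens, output_tokens) pairs.

    `sequences` holds one pair per generated sequence, across all batches.
    """
    total_tokens = sum(n_in + n_out for n_in, n_out in sequences)
    return total_tokens / latency_seconds


# Made-up workload: three sequences processed in 2 seconds end to end
sequences = [(128, 256), (128, 256), (64, 192)]
print(throughput_tokens_per_second(sequences, latency_seconds=2.0))  # 512.0
```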

Optimum-NVIDIA delivers up to 28x better throughput compared to stock transformers:

<br>

<figure class="image">

<img alt="" src="assets/optimum_nvidia/throughput.svg" />

<figcaption>Figure 2. Throughput (token / second)</figcaption>

</figure>

<br>

Initial evaluations of the [recently announced NVIDIA H200 Tensor Core GPU](https://www.nvidia.com/en-us/data-center/h200/) show up to an additional 2x boost in throughput for LLaMA models compared to an NVIDIA H100 Tensor Core GPU.

As H200 GPUs become more readily available, we will share performance data for Optimum-NVIDIA running on them.

### Next steps

Optimum-NVIDIA currently provides peak performance for the LLaMAForCausalLM architecture + task, so any [LLaMA-based model](https://huggingface.co./models?other=llama,llama2), including fine-tuned versions, should work with Optimum-NVIDIA out of the box today.

We are actively expanding support to include other text generation model architectures and tasks, all from within Hugging Face.

We continue to push the boundaries of performance and plan to incorporate cutting-edge optimization techniques like In-Flight Batching to improve throughput when streaming prompts and INT4 quantization to run even bigger models on a single GPU.

Give it a try: we are releasing the [Optimum-NVIDIA repository](https://github.com/huggingface/optimum-nvidia) with instructions on how to get started. Please share your feedback with us! 🤗 | [

[

"llm",

"mlops",

"optimization",

"tools"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"llm",

"optimization",

"mlops",

"tools"

] | null | null |

bf512e00-b35b-4d18-8ae8-71e366df4051 | completed | 2025-01-16T03:08:53.170823 | 2025-01-19T19:05:36.952927 | b4f91c67-4010-4b28-a9de-bff13fecd4ef | How to Install and Use the Hugging Face Unity API | dylanebert | unity-api.md | <!-- {authors} -->

The [Hugging Face Unity API](https://github.com/huggingface/unity-api) is an easy-to-use integration of the [Hugging Face Inference API](https://huggingface.co./inference-api), allowing developers to access and use Hugging Face AI models in their Unity projects. In this blog post, we'll walk through the steps to install and use the Hugging Face Unity API.

## Installation

1. Open your Unity project

2. Go to `Window` -> `Package Manager`

3. Click `+` and select `Add Package from git URL`

4. Enter `https://github.com/huggingface/unity-api.git`

5. Once installed, the Unity API wizard should pop up. If not, go to `Window` -> `Hugging Face API Wizard`

<figure class="image text-center">

<img src="https://huggingface.co./datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/packagemanager.gif">

</figure>

6. Enter your API key. Your API key can be created in your [Hugging Face account settings](https://huggingface.co./settings/tokens).

7. Test the API key by clicking `Test API key` in the API Wizard.

8. Optionally, change the model endpoints to change which model to use. The model endpoint for any model that supports the Inference API can be found by going to the model on the Hugging Face website, clicking `Deploy` -> `Inference API`, and copying the URL from the `API_URL` field.

9. Configure advanced settings if desired. For up-to-date information, visit the project repository at `https://github.com/huggingface/unity-api`

10. To see examples of how to use the API, click `Install Examples`. You can now close the API Wizard.

<figure class="image text-center">

<img src="https://huggingface.co./datasets/huggingface/documentation-images/resolve/main/blog/124_ml-for-games/apiwizard.png">

</figure>

Now that the API is set up, you can make calls from your scripts to the API. Let's look at an example of performing a Sentence Similarity task:

```csharp
using HuggingFace.API;

/* other code */

// Make a call to the API
void Query() {
    string inputText = "I'm on my way to the forest.";
    string[] candidates = {
        "The player is going to the city",
        "The player is going to the wilderness",
        "The player is wandering aimlessly"
    };
    HuggingFaceAPI.SentenceSimilarity(inputText, OnSuccess, OnError, candidates);
}

// If successful, handle the result
void OnSuccess(float[] result) {
    foreach(float value in result) {
        Debug.Log(value);
    }
}

// Otherwise, handle the error
void OnError(string error) {
    Debug.LogError(error);
}

/* other code */
```

## Supported Tasks and Custom Models

The Hugging Face Unity API also currently supports the following tasks:

- [Conversation](https://huggingface.co./tasks/conversational)

- [Text Generation](https://huggingface.co./tasks/text-generation)

- [Text to Image](https://huggingface.co./tasks/text-to-image)

- [Text Classification](https://huggingface.co./tasks/text-classification)

- [Question Answering](https://huggingface.co./tasks/question-answering)

- [Translation](https://huggingface.co./tasks/translation)

- [Summarization](https://huggingface.co./tasks/summarization)

- [Speech Recognition](https://huggingface.co./tasks/automatic-speech-recognition)

Use the corresponding methods provided by the `HuggingFaceAPI` class to perform these tasks.

To use your own custom model hosted on Hugging Face, change the model endpoint in the API Wizard.

## Usage Tips

1. Keep in mind that the API makes calls asynchronously, and returns a response or error via callbacks.

2. Address slow response times or performance issues by changing model endpoints to lower resource models.

## Conclusion

The Hugging Face Unity API offers a simple way to integrate AI models into your Unity projects. We hope you found this tutorial helpful. If you have any questions or would like to get more involved in using Hugging Face for Games, join the [Hugging Face Discord](https://hf.co/join/discord)! | [

[

"implementation",

"tutorial",

"tools",

"integration"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"implementation",

"tutorial",

"integration",

"tools"

] | null | null |

e8f22810-d80b-4c54-9359-2cf62d14bcd2 | completed | 2025-01-16T03:08:53.170828 | 2025-01-16T03:21:38.002539 | a031b75d-c965-4e76-8449-593a47a1e0a3 | Fine-Tuning Gemma Models in Hugging Face | svaibhav, alanwaketan, ybelkada, ArthurZ | gemma-peft.md | We recently announced that [Gemma](https://huggingface.co./blog/gemma), the open weights language model from Google DeepMind, is available for the broader open-source community via Hugging Face. It’s available in 2 billion and 7 billion parameter sizes with pretrained and instruction-tuned flavors. It’s available on Hugging Face, supported in TGI, and easily accessible for deployment and fine-tuning in the Vertex Model Garden and Google Kubernetes Engine.

<div class="flex items-center justify-center">

<img src="/blog/assets/gemma-peft/Gemma-peft.png" alt="Gemma Deploy">

</div>

The Gemma family of models also happens to be well suited for prototyping and experimentation using the free GPU resource available via Colab. In this post we will briefly review how you can do [Parameter Efficient FineTuning (PEFT)](https://huggingface.co./blog/peft) for Gemma models, using the Hugging Face Transformers and PEFT libraries on GPUs and Cloud TPUs for anyone who wants to fine-tune Gemma models on their own dataset.

## Why PEFT?

The default (full weight) training for language models, even for modest sizes, tends to be memory and compute-intensive. On one hand, it can be prohibitive for users relying on openly available compute platforms for learning and experimentation, such as Colab or Kaggle. On the other hand, and even for enterprise users, the cost of adapting these models for different domains is an important metric to optimize. PEFT, or parameter-efficient fine tuning, is a popular technique to accomplish this at low cost.

## PyTorch on GPU and TPU

Gemma models in Hugging Face `transformers` are optimized for both PyTorch and PyTorch/XLA. This enables both TPU and GPU users to access and experiment with Gemma models as needed. Together with the Gemma release, we have also improved the [FSDP](https://engineering.fb.com/2021/07/15/open-source/fsdp/) experience for PyTorch/XLA in Hugging Face. This [FSDP via SPMD](https://github.com/pytorch/xla/issues/6379) integration also allows other Hugging Face models to take advantage of TPU acceleration via PyTorch/XLA. In this post, we will focus on PEFT, and more specifically on Low-Rank Adaptation (LoRA), for Gemma models. For a more comprehensive overview of parameter-efficient fine-tuning techniques, we encourage readers to review [Scaling Down to Scale Up, from Lialin et al.](https://arxiv.org/pdf/2303.15647.pdf) and [this excellent post](https://pytorch.org/blog/finetune-llms/) by Belkada et al.

## Low-Rank Adaptation for Large Language Models

Low-Rank Adaptation (LoRA) is one of the parameter-efficient fine-tuning techniques for large language models (LLMs). It fine-tunes only a small fraction of the total number of model parameters by freezing the original model and training only adapter layers that are decomposed into low-rank matrices. The [PEFT library](https://github.com/huggingface/peft) provides an easy abstraction that allows users to select the model layers where adapter weights should be applied.

```python

from peft import LoraConfig

lora_config = LoraConfig(

r=8,

target_modules=["q_proj", "o_proj", "k_proj", "v_proj", "gate_proj", "up_proj", "down_proj"],

task_type="CAUSAL_LM",

)

```

In this snippet, we refer to all `nn.Linear` layers as the target layers to be adapted.
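As a rough worked example of why this is cheap: for a frozen weight matrix of shape `d_out x d_in`, LoRA trains two small matrices of shapes `d_out x r` and `r x d_in`, that is `r * (d_in + d_out)` parameters instead of `d_out * d_in`. The layer size below is a made-up value chosen for illustration, not Gemma's actual dimension, and the helper names are hypothetical.

```python
def full_params(d_out, d_in):
    """Number of weights in a dense d_out x d_in linear layer (bias ignored)."""
    return d_out * d_in


def lora_trainable_params(d_out, d_in, r):
    """Trainable weights of a rank-r LoRA adapter for the same layer."""
    return r * (d_in + d_out)


d = 2048  # hypothetical hidden size
r = 8     # same rank as in the LoraConfig above

full = full_params(d, d)
lora = lora_trainable_params(d, d, r)
print(full, lora, lora / full)  # 4194304 32768 0.0078125
```

In this example the adapter trains less than 1% of the weights of the layer it adapts, and the saving grows with the layer size for a fixed rank.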

In the following example, we will leverage [QLoRA](https://huggingface.co./blog/4bit-transformers-bitsandbytes), from [Dettmers et al.](https://arxiv.org/abs/2305.14314), in order to quantize the base model in 4-bit precision for a more memory efficient fine-tuning protocol. The model can be loaded with QLoRA by first installing the `bitsandbytes` library on your environment, and then passing a `BitsAndBytesConfig` object to `from_pretrained` when loading the model.

## Before we begin

In order to access Gemma model artifacts, users are required to accept [the consent form](https://huggingface.co./google/gemma-7b-it).

Now let’s get started with the implementation.

## Learning to quote

Assuming that you have submitted the consent form, you can access the model artifacts from the [Hugging Face Hub](https://huggingface.co./collections/google/gemma-release-65d5efbccdbb8c4202ec078b).

We start by downloading the model and the tokenizer. We also include a `BitsAndBytesConfig` for weight only quantization.

```python
import os

import torch
from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig

model_id = "google/gemma-2b"

# Weight-only 4-bit quantization (NF4), computing in bfloat16
bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch.bfloat16
)

# HF_TOKEN must be set in the environment to access the gated model
tokenizer = AutoTokenizer.from_pretrained(model_id, token=os.environ['HF_TOKEN'])
model = AutoModelForCausalLM.from_pretrained(model_id, quantization_config=bnb_config, device_map={"":0}, token=os.environ['HF_TOKEN'])
```

Now we test the model before starting the finetuning, using a famous quote:

```python

text = "Quote: Imagination is more"

device = "cuda:0"

inputs = tokenizer(text, return_tensors="pt").to(device)

outputs = model.generate(**inputs, max_new_tokens=20)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))

```

The model does a reasonable completion with some extra tokens:

```

Quote: Imagination is more important than knowledge. Knowledge is limited. Imagination encircles the world.

-Albert Einstein

I

```

But this is not quite the format we want for the answer. Let’s see if we can use fine-tuning to teach the model to generate the answer in the following format.

```

Quote: Imagination is more important than knowledge. Knowledge is limited. Imagination encircles the world.

Author: Albert Einstein

```

To begin with, let's select an English quotes dataset [Abirate/english_quotes](https://huggingface.co./datasets/Abirate/english_quotes).

```python

from datasets import load_dataset

data = load_dataset("Abirate/english_quotes")

data = data.map(lambda samples: tokenizer(samples["quote"]), batched=True)

```

Now let’s finetune this model using the LoRA config stated above:

```python

import transformers
from trl import SFTTrainer


def formatting_func(example):
    # Build one training string in the target "Quote / Author" format.
    text = f"Quote: {example['quote'][0]}\nAuthor: {example['author'][0]}<eos>"
    return [text]


trainer = SFTTrainer(
    model=model,
    train_dataset=data["train"],
    args=transformers.TrainingArguments(
        per_device_train_batch_size=1,
        gradient_accumulation_steps=4,
        warmup_steps=2,
        max_steps=10,
        learning_rate=2e-4,
        fp16=True,
        logging_steps=1,
        output_dir="outputs",
        optim="paged_adamw_8bit",
    ),
    peft_config=lora_config,
    formatting_func=formatting_func,
)

trainer.train()

```
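To see what the training text looks like, here is a small standalone sketch of the formatting step. `format_quotes` is a hypothetical variant that formats every row of a batch rather than only the first; whether the trainer passes single examples or batches depends on the TRL version in use, so treat the input shape here as an assumption.

```python
def format_quotes(batch: dict) -> list:
    """Turn {'quote': [...], 'author': [...]} into 'Quote / Author' strings."""
    return [
        f"Quote: {quote}\nAuthor: {author}<eos>"
        for quote, author in zip(batch["quote"], batch["author"])
    ]


# A hand-written batch in the same column layout as the quotes dataset.
batch = {
    "quote": ["Imagination is more important than knowledge."],
    "author": ["Albert Einstein"],
}
print(format_quotes(batch)[0])
```

Each string ends with the `<eos>` marker so the model learns to stop after the author line.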

Finally, we are ready to test the model once more, with nearly the same prompt we used earlier:

```python

text = "Quote: Imagination is"

device = "cuda:0"

inputs = tokenizer(text, return_tensors="pt").to(device)

outputs = model.generate(**inputs, max_new_tokens=20)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))

```

This time we get the response in the format we like:

```

Quote: Imagination is more important than knowledge. Knowledge is limited. Imagination encircles the world.

Author: Albert Einstein

```

## Accelerate with FSDP via SPMD on TPU

As mentioned earlier, Hugging Face `transformers` now supports PyTorch/XLA’s latest FSDP implementation, which can greatly speed up fine-tuning. To enable it, add an FSDP config to the `transformers.Trainer`:

```python

from transformers import DataCollatorForLanguageModeling, Trainer, TrainingArguments

# Set up the FSDP config. To enable FSDP via SPMD, set xla_fsdp_v2 to True.
fsdp_config = {
    "fsdp_transformer_layer_cls_to_wrap": ["GemmaDecoderLayer"],
    "xla": True,
    "xla_fsdp_v2": True,
    "xla_fsdp_grad_ckpt": True,
}

# Finally, set up the trainer and train the model.
trainer = Trainer(
    model=model,
    train_dataset=data["train"],  # Pass the train split, not the whole DatasetDict.
    args=TrainingArguments(
        per_device_train_batch_size=64,  # This is actually the global batch size for SPMD.
        num_train_epochs=100,
        max_steps=-1,
        output_dir="./output",
        optim="adafactor",
        logging_steps=1,
        dataloader_drop_last=True,  # Required for SPMD.
        fsdp="full_shard",
        fsdp_config=fsdp_config,
    ),
    data_collator=DataCollatorForLanguageModeling(tokenizer, mlm=False),
)

trainer.train()

```

## Next Steps

We walked through this simple example adapted from the source notebook to illustrate the LoRA finetuning method applied to Gemma models. The full colab for GPU can be found [here](https://huggingface.co./google/gemma-7b/blob/main/examples/notebook_sft_peft.ipynb), and the full script for TPU can be found [here](https://huggingface.co./google/gemma-7b/blob/main/examples/example_fsdp.py). We are excited about the endless possibilities for research and learning thanks to this recent addition to our open source ecosystem. We encourage users to also visit the [Gemma documentation](https://huggingface.co./docs/transformers/v4.38.0/en/model_doc/gemma), as well as our [launch blog](https://huggingface.co./blog/gemma) for more examples to train, finetune and deploy Gemma models. | [

[

"llm",

"transformers",

"mlops",

"fine_tuning"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"llm",

"fine_tuning",

"transformers",

"mlops"

] | null | null |

8f58f47c-2afd-4173-8311-53a13f471ecc | completed | 2025-01-16T03:08:53.170833 | 2025-01-19T18:52:45.901121 | 6c75d858-bb98-423a-80d0-864007076fe9 | Faster assisted generation support for Intel Gaudi | haimbarad, neharaste, joeychou | assisted-generation-support-gaudi.md | As model sizes grow, Generative AI implementations require significant inference resources. This not only increases the cost per generation, but also increases the power consumption used to serve such requests.

Inference optimizations for text generation are essential for reducing latency, infrastructure costs, and power consumption. This can lead to an improved user experience and increased efficiency in text generation tasks.

Assisted decoding is a popular method for speeding up text generation. We adapted and optimized it for Intel Gaudi, which delivers performance similar to Nvidia H100 GPUs, as shown in [a previous post](https://huggingface.co./blog/bridgetower), while its price is in the same ballpark as Nvidia A100 80GB GPUs. This work is now part of Optimum Habana, which extends various Hugging Face libraries like Transformers and Diffusers so that your AI workflows are fully optimized for Intel Gaudi processors.

## Speculative Sampling - Assisted Decoding

Speculative sampling is a technique used to speed up text generation. A smaller draft model generates K tokens, which are then evaluated by the target model. If a draft token is rejected, the target model is used to generate the next token instead, and the process repeats. With speculative sampling, we can improve the speed of text generation while achieving sampling quality similar to autoregressive sampling. The technique allows us to specify a draft model when generating text, and it has been shown to provide speedups of about 2x for large transformer-based models. Overall, it can accelerate text generation and improve performance on Intel Gaudi processors.

However, the draft model and the target model have different sizes, and each is represented in its own KV cache, so the challenge is to take advantage of separate optimization strategies simultaneously. For this article, we assume a quantized model and leverage KV caching together with Speculative Sampling.

Note that the authors [2] prove that the target distribution is recovered when performing speculative sampling, which guarantees the same sampling quality as autoregressive sampling on the target itself. Therefore, the situations where speculative sampling is not worthwhile are those where the draft model is not sufficiently smaller than the target model, or where the acceptance rate of the draft model is not high enough to benefit from its smaller size.
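The accept/reject rule behind this guarantee can be sketched in a few lines. This is a toy illustration, not the Optimum Habana implementation: it assumes fixed, context-independent distributions `p` (target) and `q` (draft) over a tiny vocabulary, whereas real models recompute both at every position. The function names are hypothetical.

```python
import random


def residual(p, q):
    """Normalized max(0, p - q): the distribution sampled after a rejection."""
    diff = [max(0.0, pi - qi) for pi, qi in zip(p, q)]
    total = sum(diff)
    return [d / total for d in diff]


def speculative_step(p, q, k, rng):
    """Draft k tokens from q, verify them against p, return the emitted tokens."""
    vocab = range(len(p))
    out = []
    for _ in range(k):
        x = rng.choices(vocab, weights=q)[0]
        # Accept the draft token with probability min(1, p(x) / q(x)).
        if rng.random() < min(1.0, p[x] / q[x]):
            out.append(x)
        else:
            # On rejection, resample from the residual and end this step.
            out.append(rng.choices(vocab, weights=residual(p, q))[0])
            return out
    # Every draft token was accepted: emit one extra token from the target.
    out.append(rng.choices(vocab, weights=p)[0])
    return out


rng = random.Random(0)
target = [0.5, 0.3, 0.2]
draft = [0.4, 0.4, 0.2]
print(speculative_step(target, draft, k=4, rng=rng))
```

Each step emits between 1 and K + 1 tokens. The closer the draft distribution is to the target, the more often the full draft is accepted.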

There is a technique similar to Speculative Sampling, known as Assisted Generation. This was developed independently around the same time [3]. The author integrated this method into Hugging Face Transformers, and the *.generate()* call now has an optional *assistant_model* parameter to enable this method.

## Usage & Experiments

The usage of Assisted Generation is straightforward. An example is provided [here](https://github.com/huggingface/optimum-habana/tree/main/examples/text-generation#run-speculative-sampling-on-gaudi).

As would be expected, the parameter `--assistant_model` is used to specify the draft model. The acceptance rate of the draft model is partly dependent on the input text. Typically, we have seen speed-ups of about 2x for large transformer-based models.
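To get a feel for how the acceptance rate drives the benefit, here is a simplified sketch. It assumes each draft token is accepted independently with the same probability `alpha`, which real text does not satisfy exactly, and it counts tokens emitted per target-model pass rather than wall-clock speedup. The function name is hypothetical.

```python
def expected_tokens_per_step(alpha: float, k: int) -> float:
    """Expected tokens emitted per step: 1 + alpha + alpha**2 + ... + alpha**k.

    The i-th draft token is only reached if all earlier ones were accepted,
    and every step emits one token from the target side regardless.
    """
    return sum(alpha**i for i in range(k + 1))


for alpha in (0.5, 0.8, 0.95):
    print(f"alpha={alpha}: {expected_tokens_per_step(alpha, k=4):.2f} tokens per step")
```

The actual speedup is lower than this count, because the time spent running the draft model also has to be paid for.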

## Conclusion

Assisted generation is now supported on Gaudi and is easy to use, giving a simple way to accelerate text generation on Intel Gaudi processors. The method is based on Speculative Sampling, which has been shown to be effective in improving performance on large transformer-based models.

# References

[1] N. Shazeer, “Fast Transformer Decoding: One Write-Head is All You Need,” Nov. 2019. arXiv:1911.02150.

[2] C. Chen, S. Borgeaud, G. Irving, J.B. Lespiau, L. Sifre, and J. Jumper, “Accelerating Large Language Model Decoding with Speculative Sampling,” Feb. 2023. arXiv:2302.01318.

[3] J. Gante, “Assisted Generation: a new direction toward low-latency text generation,” May 2023, https://huggingface.co./blog/assisted-generation. | [

[

"llm",

"optimization",

"text_generation",

"efficient_computing"

]

] | [

"2629e041-8c70-4026-8651-8bb91fd9749a"

] | [

"submitted"

] | [

"llm",

"optimization",

"text_generation",

"efficient_computing"

] | null | null |

77ebe7b9-9753-4fc7-bb9c-8760c2e8be90 | completed | 2025-01-16T03:08:53.170837 | 2025-01-19T18:55:10.651333 | 974f622b-b4cf-4a2f-aa6e-0aacd992d017 | Stable Diffusion with 🧨 Diffusers | valhalla, pcuenq, natolambert, patrickvonplaten | stable_diffusion.md | <a target="_blank" href="https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_diffusion.ipynb">

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/>

</a>

**Stable Diffusion** 🎨

*...using 🧨 Diffusers*

Stable Diffusion is a text-to-image latent diffusion model created by the researchers and engineers from [CompVis](https://github.com/CompVis), [Stability AI](https://stability.ai/) and [LAION](https://laion.ai/).

It is trained on 512x512 images from a subset of the [LAION-5B](https://laion.ai/blog/laion-5b/) database.

*LAION-5B* is the largest, freely accessible multi-modal dataset that currently exists.

In this post, we want to show how to use Stable Diffusion with the [🧨 Diffusers library](https://github.com/huggingface/diffusers), explain how the model works and finally dive a bit deeper into how `diffusers` allows one to customize the image generation pipeline.

**Note**: It is highly recommended to have a basic understanding of how diffusion models work. If diffusion models are completely new to you, we recommend reading one of the following blog posts:

- [The Annotated Diffusion Model](https://huggingface.co./blog/annotated-diffusion)

- [Getting started with 🧨 Diffusers](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/diffusers_intro.ipynb)

Now, let's get started by generating some images 🎨.

## Running Stable Diffusion

### License

Before using the model, you need to accept the model [license](https://huggingface.co./spaces/CompVis/stable-diffusion-license) in order to download and use the weights. **Note: the license does not need to be explicitly accepted through the UI anymore**.