url | repository_url | labels_url | comments_url | events_url | html_url | id | node_id | number | title | user | labels | state | locked | assignee | assignees | comments | created_at | updated_at | closed_at | author_association | active_lock_reason | body | reactions | timeline_url | state_reason | draft | pull_request |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/transformers/issues/509 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/509/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/509/comments | https://api.github.com/repos/huggingface/transformers/issues/509/events | https://github.com/huggingface/transformers/issues/509 | 435,407,887 | MDU6SXNzdWU0MzU0MDc4ODc= | 509 | How to read a checkpoint and continue training? | {

"login": "a-maci",

"id": 23125439,

"node_id": "MDQ6VXNlcjIzMTI1NDM5",

"avatar_url": "https://avatars.githubusercontent.com/u/23125439?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/a-maci",

"html_url": "https://github.com/a-maci",

"followers_url": "https://api.github.com/users/a-maci/followers",

"following_url": "https://api.github.com/users/a-maci/following{/other_user}",

"gists_url": "https://api.github.com/users/a-maci/gists{/gist_id}",

"starred_url": "https://api.github.com/users/a-maci/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/a-maci/subscriptions",

"organizations_url": "https://api.github.com/users/a-maci/orgs",

"repos_url": "https://api.github.com/users/a-maci/repos",

"events_url": "https://api.github.com/users/a-maci/events{/privacy}",

"received_events_url": "https://api.github.com/users/a-maci/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1108649070,

"node_id": "MDU6TGFiZWwxMTA4NjQ5MDcw",

"url": "https://api.github.com/repos/huggingface/transformers/labels/Need%20more%20information",

"name": "Need more information",

"color": "d876e3",

"default": false,

"description": "Further information is requested"

},

{

"id": 1314768611,

"node_id": "MDU6TGFiZWwxMzE0NzY4NjEx",

"url": "https://api.github.com/repos/huggingface/transformers/labels/wontfix",

"name": "wontfix",

"color": "ffffff",

"default": true,

"description": null

}

] | closed | false | null | [] | [

"Hi, what fine-tuning script and model are you referring to?",

"I would like to know how to restart / continue runs as well.\r\nI would like to fine tune on half data first, checkpoint it. Then restart and continue on the other half of the data.\r\n\r\nLike the `main` function in this finetuning script:\r\nhttps://github.com/huggingface/pytorch-pretrained-BERT/blob/3fc63f126ddf883ba9659f13ec046c3639db7b7e/examples/lm_finetuning/simple_lm_finetuning.py",

"@thomwolf Hi. I was experimenting with run_squad.py on colab. I was able to train and checkpoint the model after every 50 steps. However, for some reason, the notebook crashed and did not resume training. Is there a way to load that checkpoint and resume training from that point onwards? ",

"I am fine-tuning using run_glue.py on bert. Have a checkpoint that I would like to continue from since my run crashed. Also, what happens to the tensorboard event file? For example, if my checkpoint is at iteration 250 (and my checkpoint crashed at 290), will the Tensorboard event file be appended correctly???",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n",

"I think the solution is to change the model name to the checkpoint directory. When using the `run_glue.py` example script I changed the parameter from `--model_name_or_path bert-base-uncased` to `--model_name_or_path ../my-output-dir/checkpoint-1600`",
"> I think the solution is to change the model name to the checkpoint directory. When using the `run_glue.py` example script I changed the parameter from `--model_name_or_path bert-base-uncased` to `--model_name_or_path ../my-output-dir/checkpoint-1600`\r\n\r\nHi, this works, but may I know what you did with the OUTPUT-DIR? Did you keep the same one while \"overwriting\", or start a new one? Thanks!",
"> I think the solution is to change the model name to the checkpoint directory. When using the `run_glue.py` example script I changed the parameter from `--model_name_or_path bert-base-uncased` to `--model_name_or_path ../my-output-dir/checkpoint-1600`\r\n\r\nHi, I tried this. The following error message shows: \"We assumed '/cluster/home/xiazhi/finetune_results_republican/checkpoint-1500' was a path, a model identifier, or url to a directory containing vocabulary files named ['vocab.json', 'merges.txt'] but couldn't find such vocabulary files at this path or url.\" But vocab.json and merges.txt are only generated after all epochs are done.\r\n",

"@anniezhi I have the same problem. This makes training very difficult; anyone have any ideas re: how to save the tokenizer whenever the checkpoints are saved?",

"@anniezhi I figured it out - if loading from a checkpoint, use the additional argument --tokenizer_name and provide the name of your tokenizer. Here's my helper bash script for reference :\r\n\r\n```\r\n#!/bin/bash\r\nconda activate transformers\r\n\r\ncd \"${HOME}/Desktop\"\r\nrm -rf \"./${1}\"\r\n\r\nTRAIN_FILE=\"/media/b/F:/debiased_archive_200.h5\"\r\n\r\n#Matt login key\r\nwandb login MY_API_KEY\r\n\r\npython bao-ai/training_flows/run_language_custom_modeling.py \\\r\n --output_dir=\"./${1}\" \\\r\n --tokenizer_name=gpt2 \\\r\n --model_name_or_path=\"${2}\" \\\r\n --block_size \"${3}\" \\\r\n --per_device_train_batch_size \"${4}\" \\\r\n --do_train \\\r\n --train_data_file=$TRAIN_FILE\\\r\n```",

"If you're using the latest release (v3.1.0), the tokenizer should be saved as well, so there's no need to use the `--tokenizer_name` anymore.\r\n\r\nFor any version <3.1.0, @apteryxlabs's solution is the way to go!",

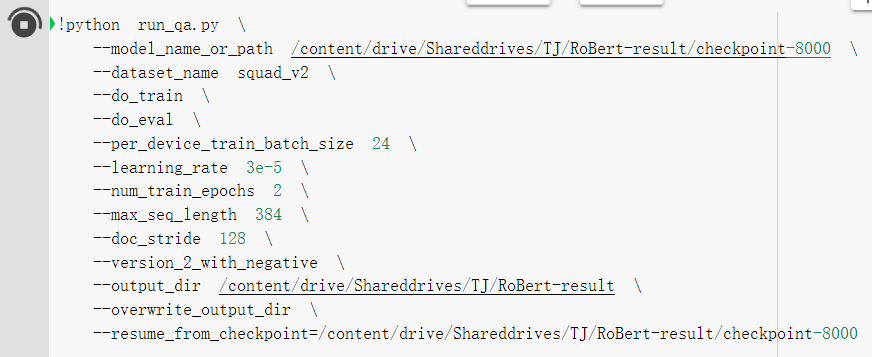

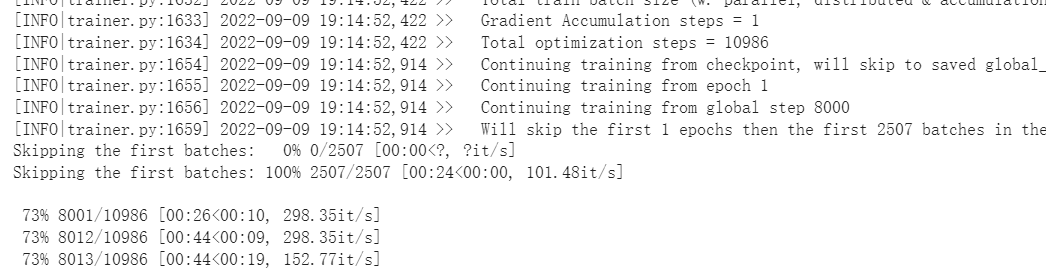

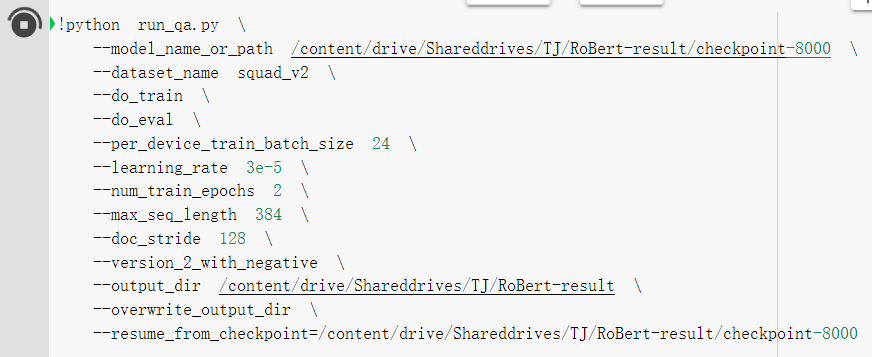

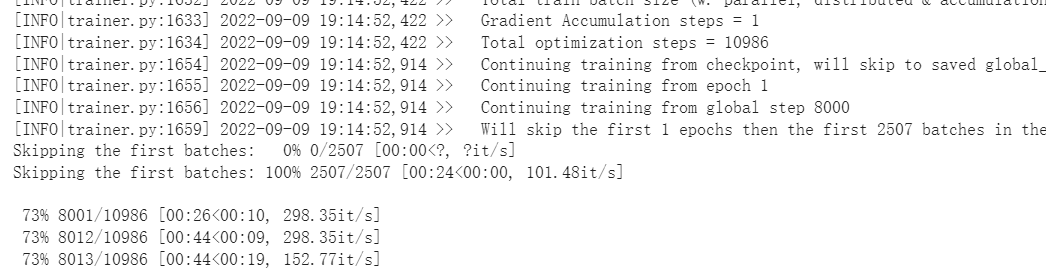

"> \r\n\r\nBrowse parameters, resume_from_checkpoint=./\r\n\r\nNow, the code runs from checkpoint\r\n\r\n",

"> \r\n\r\nBrowse parameters, resume_from_checkpoint=./\r\n\r\nNow, the code runs from checkpoint\r\n\r\n"

] | 1,555 | 1,662 | 1,571 | NONE | null | I wanted to experiment with longer training schedules. How do I restart a run from its fine-tuned checkpoint? | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/509/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/509/timeline | completed | null | null |
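
The thread above settles on pointing `--model_name_or_path` at a `checkpoint-<step>` directory. A minimal sketch of how the most recent such directory could be located is given below. It assumes checkpoints are saved as `checkpoint-<step>` subdirectories of the output directory, as in the `checkpoint-1600` example; `latest_checkpoint` is a hypothetical helper, not part of the library.

```python
import re
from pathlib import Path
from typing import Optional

# Matches directory names such as "checkpoint-1600" (assumed naming scheme).
_CHECKPOINT_RE = re.compile(r"^checkpoint-(\d+)$")


def latest_checkpoint(output_dir: str) -> Optional[str]:
    """Return the checkpoint-<step> subdirectory with the highest step, or None."""
    root = Path(output_dir)
    if not root.is_dir():
        return None
    best_step, best_path = -1, None
    for child in root.iterdir():
        match = _CHECKPOINT_RE.match(child.name)
        if child.is_dir() and match:
            step = int(match.group(1))
            if step > best_step:
                best_step, best_path = step, child
    return str(best_path) if best_path is not None else None
```

The returned path can then be passed as `--model_name_or_path`. As noted in the thread, on versions before v3.1.0 the tokenizer is not saved with intermediate checkpoints, so `--tokenizer_name` has to be passed as well.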

https://api.github.com/repos/huggingface/transformers/issues/508 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/508/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/508/comments | https://api.github.com/repos/huggingface/transformers/issues/508/events | https://github.com/huggingface/transformers/pull/508 | 435,037,149 | MDExOlB1bGxSZXF1ZXN0MjcxODk2MzYx | 508 | Fix python syntax in examples/run_gpt2.py | {

"login": "SivilTaram",

"id": 10275209,

"node_id": "MDQ6VXNlcjEwMjc1MjA5",

"avatar_url": "https://avatars.githubusercontent.com/u/10275209?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/SivilTaram",

"html_url": "https://github.com/SivilTaram",

"followers_url": "https://api.github.com/users/SivilTaram/followers",

"following_url": "https://api.github.com/users/SivilTaram/following{/other_user}",

"gists_url": "https://api.github.com/users/SivilTaram/gists{/gist_id}",

"starred_url": "https://api.github.com/users/SivilTaram/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/SivilTaram/subscriptions",

"organizations_url": "https://api.github.com/users/SivilTaram/orgs",

"repos_url": "https://api.github.com/users/SivilTaram/repos",

"events_url": "https://api.github.com/users/SivilTaram/events{/privacy}",

"received_events_url": "https://api.github.com/users/SivilTaram/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Thanks for the PR. This is fixed now."

] | 1,555 | 1,556 | 1,556 | CONTRIBUTOR | null | As the title says, the code from line 115 to 131 is never reached because `if args.unconditional` is not indented far enough. | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/508/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/508/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/508",

"html_url": "https://github.com/huggingface/transformers/pull/508",

"diff_url": "https://github.com/huggingface/transformers/pull/508.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/508.patch",

"merged_at": null

} |

https://api.github.com/repos/huggingface/transformers/issues/507 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/507/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/507/comments | https://api.github.com/repos/huggingface/transformers/issues/507/events | https://github.com/huggingface/transformers/issues/507 | 434,994,568 | MDU6SXNzdWU0MzQ5OTQ1Njg= | 507 | GPT-2 FineTuning on Cloze/ ROC | {

"login": "rohuns",

"id": 17604744,

"node_id": "MDQ6VXNlcjE3NjA0NzQ0",

"avatar_url": "https://avatars.githubusercontent.com/u/17604744?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/rohuns",

"html_url": "https://github.com/rohuns",

"followers_url": "https://api.github.com/users/rohuns/followers",

"following_url": "https://api.github.com/users/rohuns/following{/other_user}",

"gists_url": "https://api.github.com/users/rohuns/gists{/gist_id}",

"starred_url": "https://api.github.com/users/rohuns/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/rohuns/subscriptions",

"organizations_url": "https://api.github.com/users/rohuns/orgs",

"repos_url": "https://api.github.com/users/rohuns/repos",

"events_url": "https://api.github.com/users/rohuns/events{/privacy}",

"received_events_url": "https://api.github.com/users/rohuns/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1260952223,

"node_id": "MDU6TGFiZWwxMjYwOTUyMjIz",

"url": "https://api.github.com/repos/huggingface/transformers/labels/Discussion",

"name": "Discussion",

"color": "22870e",

"default": false,

"description": "Discussion on a topic (keep it focused or open a new issue though)"

}

] | closed | false | null | [] | [

"Hi rohuns, I was wondering what padding value have you used for the lm_labels, since the -1 specified in the docs doesn't work for me on GPT2LMHead model. See #577. ",

"> Hi rohuns, I was wondering what padding value have you used for the lm_labels, since the -1 specified in the docs doesn't work for me on GPT2LMHead model. See #577.\r\n\r\nI had just used -1, can take a look at your stack trace and respond on that chat",

"Also to close this issue it appears others also achieved similar performance on the MC task, more details on the thread issue #468 ",

"> > Hi rohuns, I was wondering what padding value have you used for the lm_labels, since the -1 specified in the docs doesn't work for me on GPT2LMHead model. See #577.\r\n> \r\n> I had just used -1, can take a look at your stack trace and respond on that chat\r\n\r\nYes, please do have a look. Here is a toy example with a hand-coded dataset to prove that the -1 throws an error. It looks like it's a library issue.\r\n[gpt2_simplified.py.zip](https://github.com/huggingface/pytorch-pretrained-BERT/files/3144185/gpt2_simplified.py.zip)\r\n\r\nRegards,\r\nAdrian\r\n"

] | 1,555 | 1,556 | 1,556 | NONE | null | Hi, wrote some code to finetune GPT2 on rocstories using the DoubleHeads model mirroring the GPT1 code. However, I'm only getting performance of 68% on the eval. Was wondering if anyone else had tried it and seen this drop in performance. Thanks | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/507/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/507/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/506 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/506/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/506/comments | https://api.github.com/repos/huggingface/transformers/issues/506/events | https://github.com/huggingface/transformers/pull/506 | 434,515,106 | MDExOlB1bGxSZXF1ZXN0MjcxNDkyNjg0 | 506 | Hubconf | {

"login": "ailzhang",

"id": 5248122,

"node_id": "MDQ6VXNlcjUyNDgxMjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/5248122?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ailzhang",

"html_url": "https://github.com/ailzhang",

"followers_url": "https://api.github.com/users/ailzhang/followers",

"following_url": "https://api.github.com/users/ailzhang/following{/other_user}",

"gists_url": "https://api.github.com/users/ailzhang/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ailzhang/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ailzhang/subscriptions",

"organizations_url": "https://api.github.com/users/ailzhang/orgs",

"repos_url": "https://api.github.com/users/ailzhang/repos",

"events_url": "https://api.github.com/users/ailzhang/events{/privacy}",

"received_events_url": "https://api.github.com/users/ailzhang/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Hi @ailzhang,\r\nThis is great! I went through it and it looks good to me.\r\n\r\nI guess we should update the `from_pretrained` method of the other models as well (like [here](https://github.com/huggingface/pytorch-pretrained-BERT/blob/19666dcb3bee3e379f1458e295869957aac8590c/pytorch_pretrained_bert/modeling_openai.py#L420))\r\n\r\nDo you want to have a look at the other models (GPT, GPT-2 and Transformer-XL) and add them to the `hubconf.py` as well ?",
"Hi @thomwolf, thanks for the quick reply! Yes, we would definitely like to add the GPT and Transformer-XL models. \r\n\r\nI can add them in this PR myself. Alternatively, it would be super helpful to us if someone from your team tried implementing a few models using the `torch.hub` interfaces and let us know about any bugs/issues from a repo owner's perspective :D. Let me know which way you prefer, thanks! \r\n\r\nAnother question is about the cache dir: PyTorch has moved to comply with the XDG specification for caching dirs (https://github.com/pytorch/pytorch/issues/14693). The detailed logic can be found here: https://pytorch.org/docs/master/hub.html#where-are-my-downloaded-models-saved (I will fix the doc formatting soon :P). Are you interested in moving to the same place? Happy to help with that as well. \r\n",

"@thomwolf Any update on this? ;) Thanks!",
"Hi @ailzhang, sorry for the delay, here are some answers to your questions:\r\n\r\n- `torch.hub`: I can give it a try but the present week is fully packed. I'll see if I can free some time next week. If you want to see it reach `master` faster, I'm also fine with you adding the other models.\r\n\r\nOne question I have here is that the pretrained models cannot really be used without the associated tokenizers. How is this supposed to work with `torch.hub`? Can you give me an example of usage (like the one in the readme for instance)?\r\n\r\n- update to `cache dir`: XDG specification seems nice indeed. If you want to give it a try it would be a lot cleaner than the present caching setting I guess.\r\n\r\nRelated note: we (Sebastian Ruder, Matthew Peters, Swabha Swayamdipta and I) are preparing a [tutorial on Transfer Learning in NLP to be held at NAACL](https://naacl2019.org/program/tutorials). We'll show various frameworks in action. I will see if we can include a `torch.hub` example.",

"@thomwolf \r\nNote that there's a tokenizer in hub already. Typically we'd prefer hub only contains models, but in this case we also includes tokenizer as it's a required part. \r\nThere's an example in docstring of BertTokenizer. Is this good enough? \r\n```\r\n >>> sentence = 'Hello, World!'\r\n >>> tokenizer = torch.hub.load('ailzhang/pytorch-pretrained-BERT:hubconf', 'bertTokenizer', 'bert-base-cased', do_basic_tokenize=False, force_reload=False)\r\n >>> toks = tokenizer.tokenize(sentence)\r\n ['Hello', '##,', 'World', '##!']\r\n >>> ids = tokenizer.convert_tokens_to_ids(toks)\r\n [8667, 28136, 1291, 28125]\r\n```\r\nMaybe we can merge this PR first if it looks good? \r\n",

"Oh indeed, I missed the tokenizer.\r\nOk let's go with this PR!"

] | 1,555 | 1,556 | 1,556 | NONE | null | fixes #504

Also add hubconf for bert related tokenizer & models.

There are a few GPT and transformer models as well, but I would like to send this out for review first.

There is also the possibility of unifying the cache dir with the PyTorch one. | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/506/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/506/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/506",

"html_url": "https://github.com/huggingface/transformers/pull/506",

"diff_url": "https://github.com/huggingface/transformers/pull/506.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/506.patch",

"merged_at": 1556132361000

} |

https://api.github.com/repos/huggingface/transformers/issues/505 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/505/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/505/comments | https://api.github.com/repos/huggingface/transformers/issues/505/events | https://github.com/huggingface/transformers/issues/505 | 434,489,113 | MDU6SXNzdWU0MzQ0ODkxMTM= | 505 | Generating text with Transformer XL | {

"login": "shashwath94",

"id": 7631779,

"node_id": "MDQ6VXNlcjc2MzE3Nzk=",

"avatar_url": "https://avatars.githubusercontent.com/u/7631779?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/shashwath94",

"html_url": "https://github.com/shashwath94",

"followers_url": "https://api.github.com/users/shashwath94/followers",

"following_url": "https://api.github.com/users/shashwath94/following{/other_user}",

"gists_url": "https://api.github.com/users/shashwath94/gists{/gist_id}",

"starred_url": "https://api.github.com/users/shashwath94/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/shashwath94/subscriptions",

"organizations_url": "https://api.github.com/users/shashwath94/orgs",

"repos_url": "https://api.github.com/users/shashwath94/repos",

"events_url": "https://api.github.com/users/shashwath94/events{/privacy}",

"received_events_url": "https://api.github.com/users/shashwath94/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Here's an example of text generation, picks second most likely word at each step\r\n\r\n```\r\ntokenizer = TransfoXLTokenizer.from_pretrained('transfo-xl-wt103')\r\nmodel = TransfoXLLMHeadModel.from_pretrained('transfo-xl-wt103')\r\nline = \"Cars were invented in\"\r\nline_tokenized = tokenizer.tokenize(line)\r\nline_indexed = tokenizer.convert_tokens_to_ids(line_tokenized)\r\ntokens_tensor = torch.tensor([line_indexed])\r\ntokens_tensor = tokens_tensor.to(device)\r\n\r\nmax_predictions = 50\r\nmems = None\r\nfor i in range(max_predictions):\r\n predictions, mems = model(tokens_tensor, mems=mems)\r\n predicted_index = torch.topk(predictions[0, -1, :],5)[1][1].item()\r\n predicted_token = tokenizer.convert_ids_to_tokens([predicted_index])[0]\r\n print(predicted_token)\r\n predicted_index = torch.tensor([[predicted_index]]).to(device)\r\n tokens_tensor = torch.cat((tokens_tensor, predicted_index), dim=1)\r\n```\r\nShould produce\r\n\r\n```\r\nBritain\r\nand\r\nAmerica\r\n,\r\nbut\r\nthe\r\nfirst\r\ntwo\r\ncars\r\nhad\r\nto\r\nhave\r\nbeen\r\na\r\n\"\r\nTurbo\r\n```",

"Yeah figured it out. Thanks nevertheless @yaroslavvb !",

"@yaroslavvb I think, there is a bug in the code, you shared \r\n`predicted_index = torch.topk(predictions[0, -1, :],5)[1][1].item()`why is it not `predicted_index = torch.topk(predictions[0, -1, :],5)[1][0].item()` or probably its not a bug \r\n",

"@yaroslavvb Why in the text generation with Transformer-XL there is a loop over the number of predictions requested, like max_predictions? \r\n\r\nGiven a fixed input like line = \"Cars were invented in\", which is 21 characters or 4 words (depending if trained for character output or word output), say, why one cannot generate say the next 21 characters or 4 words directly from the T-XL output all at once? Then generate another set of 21 characters or 4 words again in the next iteration? \r\n\r\nI thought one advantage of the T-XL vs the vanilla Transformer was this ability to predict a whole next sequence without having to loop by adding character by character or word by word at the input? \r\n\r\nIsn't the T-XL trained by computing the loss over the whole input and whole target (label) without looping?\r\nThus why would it be different during text generation? To provide a more accurate context along the prediction by adding the previous prediction one by one?",

"@shashwath94 Could you please post your fix, so that we can learn by example? Thanks. ",
"@gussmith you could do it this way, but empirically the results are very bad. The model is trained to maximize the probability of \"next token prediction\". What looks like a loss over the whole sequence is actually a parallelization trick to compute many \"next token prediction\" losses in a single pass."

] | 1,555 | 1,595 | 1,555 | CONTRIBUTOR | null | Hi everyone,

I am trying to generate text with the pre-trained Transformer-XL model in the same way as with the GPT-2 model. I suspect I introduced a bug in the `sample_sequence` function when I adapted it to the Transformer-XL architecture: the generated text is completely random, both in general and with respect to the context.

The core sampling loop looks very similar to the gpt-2 one:

```
with torch.no_grad():
    for i in trange(length):
        logits, past = model(prev, mems=past)
        logits = logits[:, -1, :] / temperature
        logits = top_k_logits(logits, k=top_k)
        log_probs = F.softmax(logits, dim=-1)
        if sample:
            prev = torch.multinomial(log_probs, num_samples=1)
        else:
            _, prev = torch.topk(log_probs, k=1, dim=-1)
        output = torch.cat((output, prev), dim=1)
```

What is the bug that I'm missing?

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/505/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/505/timeline | completed | null | null |
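
One comment in the thread above asks why the example indexes the top-k result with `[1][1]` rather than `[1][0]`. The difference is only which rank is picked: position 0 is the most likely token (greedy decoding), position 1 the second most likely, which matches the example's stated intent. The sketch below illustrates this in plain Python without any model; `top_k_indices` and the toy scores are hypothetical and stand in for the model's output at the last position.

```python
from typing import List, Sequence


def top_k_indices(scores: Sequence[float], k: int) -> List[int]:
    """Return the indices of the k largest scores, best first."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return order[:k]


# Toy scores over a 5-token vocabulary (illustrative values only).
scores = [0.1, 2.5, 0.3, 1.7, -0.4]
ranked = top_k_indices(scores, k=3)

greedy_choice = ranked[0]  # most likely token id
second_choice = ranked[1]  # second most likely token id, as picked in the example
```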

https://api.github.com/repos/huggingface/transformers/issues/504 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/504/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/504/comments | https://api.github.com/repos/huggingface/transformers/issues/504/events | https://github.com/huggingface/transformers/issues/504 | 434,469,616 | MDU6SXNzdWU0MzQ0Njk2MTY= | 504 | Init BertForTokenClassification from from_pretrained | {

"login": "ailzhang",

"id": 5248122,

"node_id": "MDQ6VXNlcjUyNDgxMjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/5248122?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ailzhang",

"html_url": "https://github.com/ailzhang",

"followers_url": "https://api.github.com/users/ailzhang/followers",

"following_url": "https://api.github.com/users/ailzhang/following{/other_user}",

"gists_url": "https://api.github.com/users/ailzhang/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ailzhang/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ailzhang/subscriptions",

"organizations_url": "https://api.github.com/users/ailzhang/orgs",

"repos_url": "https://api.github.com/users/ailzhang/repos",

"events_url": "https://api.github.com/users/ailzhang/events{/privacy}",

"received_events_url": "https://api.github.com/users/ailzhang/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"actually this is related to my current work, I will send a fix along with my PR."

] | 1,555 | 1,556 | 1,556 | NONE | null | ```

model = BertForTokenClassification.from_pretrained('bert-base-uncased', 2)

```

will complain about missing positional arg for `num_labels`.

The root cause is here the function signature should actually be

https://github.com/huggingface/pytorch-pretrained-BERT/blob/19666dcb3bee3e379f1458e295869957aac8590c/pytorch_pretrained_bert/modeling.py#L522

```

def from_pretrained(cls, pretrained_model_name_or_path, *inputs, state_dict=None, cache_dir=None, from_tf=False, **kwargs):

```

But note that the signature above is only supported in py3, not py2. See a similar workaround here: https://github.com/pytorch/pytorch/pull/19247/files#diff-bdb85c31edc2daaad6cdb68c0d19bafbR300 | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/504/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/504/timeline | completed | null | null |
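
The issue above notes that keyword-only arguments after `*inputs` are Python 3 syntax. One common way to get the same behaviour in code that must also parse on Python 2 is to accept `**kwargs` and pop the loader options before forwarding the rest. The sketch below shows that pattern under stated assumptions: `ToyModel` is a hypothetical stand-in, not the library's class, and nothing is actually loaded.

```python
class ToyModel:
    """Hypothetical stand-in for a model class; not the library's implementation."""

    def __init__(self, name, num_labels=2):
        self.name = name
        self.num_labels = num_labels

    @classmethod
    def from_pretrained(cls, name, *inputs, **kwargs):
        # Pop the loader options so they are not forwarded to __init__.
        # Unlike keyword-only arguments, this form also parses on Python 2.
        state_dict = kwargs.pop("state_dict", None)
        cache_dir = kwargs.pop("cache_dir", None)
        from_tf = kwargs.pop("from_tf", False)
        # A real loader would use state_dict, cache_dir and from_tf here.
        return cls(name, *inputs, **kwargs)


# The positional 2 now reaches __init__ as num_labels.
model = ToyModel.from_pretrained("bert-base-uncased", 2, cache_dir="/tmp/cache")
```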

https://api.github.com/repos/huggingface/transformers/issues/503 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/503/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/503/comments | https://api.github.com/repos/huggingface/transformers/issues/503/events | https://github.com/huggingface/transformers/pull/503 | 434,376,103 | MDExOlB1bGxSZXF1ZXN0MjcxMzgyMTEz | 503 | Fix possible risks of bpe on special tokens | {

"login": "SivilTaram",

"id": 10275209,

"node_id": "MDQ6VXNlcjEwMjc1MjA5",

"avatar_url": "https://avatars.githubusercontent.com/u/10275209?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/SivilTaram",

"html_url": "https://github.com/SivilTaram",

"followers_url": "https://api.github.com/users/SivilTaram/followers",

"following_url": "https://api.github.com/users/SivilTaram/following{/other_user}",

"gists_url": "https://api.github.com/users/SivilTaram/gists{/gist_id}",

"starred_url": "https://api.github.com/users/SivilTaram/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/SivilTaram/subscriptions",

"organizations_url": "https://api.github.com/users/SivilTaram/orgs",

"repos_url": "https://api.github.com/users/SivilTaram/repos",

"events_url": "https://api.github.com/users/SivilTaram/events{/privacy}",

"received_events_url": "https://api.github.com/users/SivilTaram/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1314768611,

"node_id": "MDU6TGFiZWwxMzE0NzY4NjEx",

"url": "https://api.github.com/repos/huggingface/transformers/labels/wontfix",

"name": "wontfix",

"color": "ffffff",

"default": true,

"description": null

}

] | closed | false | null | [] | [

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

] | 1,555 | 1,560 | 1,560 | CONTRIBUTOR | null | Hi developers !

When I use the OpenAI tokenizer, I find it hard to handle the `special tokens` correctly (my library version is v0.6.1), even though I have already defined them and told the tokenizer to NEVER SPLIT them. This is because all tokens, including the special ones, are processed by BPE. So I added one line to skip BPE for special tokens.

There are still some problems when `spacy` is used as the tokenizer. I will try to add the special tokens to the `spacy` vocabulary and open another pull request. Thanks for the code review :) | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/503/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/503/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/503",

"html_url": "https://github.com/huggingface/transformers/pull/503",

"diff_url": "https://github.com/huggingface/transformers/pull/503.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/503.patch",

"merged_at": null

} |

https://api.github.com/repos/huggingface/transformers/issues/502 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/502/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/502/comments | https://api.github.com/repos/huggingface/transformers/issues/502/events | https://github.com/huggingface/transformers/issues/502 | 434,217,681 | MDU6SXNzdWU0MzQyMTc2ODE= | 502 | How to obtain attention values for each layer | {

"login": "serenaklm",

"id": 34397223,

"node_id": "MDQ6VXNlcjM0Mzk3MjIz",

"avatar_url": "https://avatars.githubusercontent.com/u/34397223?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/serenaklm",

"html_url": "https://github.com/serenaklm",

"followers_url": "https://api.github.com/users/serenaklm/followers",

"following_url": "https://api.github.com/users/serenaklm/following{/other_user}",

"gists_url": "https://api.github.com/users/serenaklm/gists{/gist_id}",

"starred_url": "https://api.github.com/users/serenaklm/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/serenaklm/subscriptions",

"organizations_url": "https://api.github.com/users/serenaklm/orgs",

"repos_url": "https://api.github.com/users/serenaklm/repos",

"events_url": "https://api.github.com/users/serenaklm/events{/privacy}",

"received_events_url": "https://api.github.com/users/serenaklm/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1260952223,

"node_id": "MDU6TGFiZWwxMjYwOTUyMjIz",

"url": "https://api.github.com/repos/huggingface/transformers/labels/Discussion",

"name": "Discussion",

"color": "22870e",

"default": false,

"description": "Discussion on a topic (keep it focused or open a new issue though)"

},

{

"id": 1314768611,

"node_id": "MDU6TGFiZWwxMzE0NzY4NjEx",

"url": "https://api.github.com/repos/huggingface/transformers/labels/wontfix",

"name": "wontfix",

"color": "ffffff",

"default": true,

"description": null

}

] | closed | false | null | [] | [

"Not really.\r\nYou should build a new sub-class of `BertPreTrainedModel` which is identical to `BertModel` but returns the self-attention values in addition to the hidden states.\r\n",

"I see. Thank you! ",

"Hi, \r\n\r\nJust to add on. If this is what I would be doing, would it be advisable to fine-tune the weights for the pretrained model? \r\n\r\nRegards",

"Probably.\r\nIt depends on your final use case.",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n"

] | 1,555 | 1,561 | 1,561 | NONE | null | Hi all,

Please correct me if I am wrong.

From my understanding, the encoded values for each layer (12 of them for the base model) are returned when we run our inputs through the pre-trained model.

However, I would like to examine the self-attention values for each layer. Is there a way to extract them?

Regards | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/502/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/502/timeline | completed | null | null |
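A minimal sketch of what "sending back self-attention values in addition to the hidden states" amounts to, written in plain Python rather than against `BertModel`. The function name `attention_with_weights` and the list-based inputs are illustrative assumptions, not part of the library; a real sub-class would apply the same idea inside each attention layer.

```python
import math


def attention_with_weights(queries, keys, values):
    """Single-head scaled dot-product attention over plain Python lists.

    Returns (outputs, weights) so the caller can inspect the attention
    weights and not only the resulting hidden states.
    """
    dim = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Scaled dot-product score of this query against every key.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        # Numerically stable softmax over the scores.
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        row = [e / total for e in exps]
        weights.append(row)
        # Weighted sum of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(row, values)) for j in range(len(values[0]))]
        )
    return outputs, weights
```

Each row of `weights` sums to 1 and tells how much one position attends to every other position, which is the quantity the question asks about.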

https://api.github.com/repos/huggingface/transformers/issues/501 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/501/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/501/comments | https://api.github.com/repos/huggingface/transformers/issues/501/events | https://github.com/huggingface/transformers/issues/501 | 434,200,823 | MDU6SXNzdWU0MzQyMDA4MjM= | 501 | Test a fine-tuned BERT-QA model | {

"login": "wasiahmad",

"id": 17520413,

"node_id": "MDQ6VXNlcjE3NTIwNDEz",

"avatar_url": "https://avatars.githubusercontent.com/u/17520413?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/wasiahmad",

"html_url": "https://github.com/wasiahmad",

"followers_url": "https://api.github.com/users/wasiahmad/followers",

"following_url": "https://api.github.com/users/wasiahmad/following{/other_user}",

"gists_url": "https://api.github.com/users/wasiahmad/gists{/gist_id}",

"starred_url": "https://api.github.com/users/wasiahmad/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/wasiahmad/subscriptions",

"organizations_url": "https://api.github.com/users/wasiahmad/orgs",

"repos_url": "https://api.github.com/users/wasiahmad/repos",

"events_url": "https://api.github.com/users/wasiahmad/events{/privacy}",

"received_events_url": "https://api.github.com/users/wasiahmad/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1108649053,

"node_id": "MDU6TGFiZWwxMTA4NjQ5MDUz",

"url": "https://api.github.com/repos/huggingface/transformers/labels/Help%20wanted",

"name": "Help wanted",

"color": "008672",

"default": false,

"description": "Extra attention is needed, help appreciated"

}

] | closed | false | null | [] | [

"I noticed the following snippet in the code (which I have edited to solve my problem).\r\n\r\n    if args.do_train and (args.local_rank == -1 or torch.distributed.get_rank() == 0):\r\n        # Save a trained model, configuration and tokenizer\r\n        model_to_save = model.module if hasattr(model, 'module') else model  # Only save the model itself\r\n\r\n        # If we save using the predefined names, we can load using `from_pretrained`\r\n        output_model_file = os.path.join(args.output_dir, WEIGHTS_NAME)\r\n        output_config_file = os.path.join(args.output_dir, CONFIG_NAME)\r\n\r\n        torch.save(model_to_save.state_dict(), output_model_file)\r\n        model_to_save.config.to_json_file(output_config_file)\r\n        tokenizer.save_vocabulary(args.output_dir)\r\n\r\n        # Load a trained model and vocabulary that you have fine-tuned\r\n        model = BertForQuestionAnswering.from_pretrained(args.output_dir)\r\n        tokenizer = BertTokenizer.from_pretrained(args.output_dir, do_lower_case=args.do_lower_case)\r\n    else:\r\n        model = BertForQuestionAnswering.from_pretrained(args.bert_model)\r\n\r\n\r\nSo, if we want to load the fine-tuned model only for prediction, we need to load it from `args.output_dir`. But the current code loads from `args.bert_model` when we use `run_squad.py` only for prediction.",

"@wasiahmad is the tokenizer not needed at prediction time?\r\n\r\nThanks\r\nMahesh",

"I need help understanding how to train the model on SQuAD plus my own dataset and, once it is trained, how to use it for actual prediction.\r\n\r\nmodel : BERT Question Answering\r\n",

"@Swathygsb \r\nhttps://github.com/kamalkraj/BERT-SQuAD\r\ninference on bert-squad model",

"> @Swathygsb\r\n> https://github.com/kamalkraj/BERT-SQuAD\r\n> inference on bert-squad model\r\n\r\nThanks for sharing. Is there also a TensorFlow implementation of inference on the bert-squad model?\r\nThanks~"

] | 1,555 | 1,569 | 1,555 | NONE | null | I have fine-tuned a BERT-QA model on SQuAD and it produced a `pytorch_model.bin` file. Now, I want to load this fine-tuned model and evaluate on SQuAD. How can I do that? I am using the `run_squad.py` script. | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/501/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/501/timeline | completed | null | null |
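One way to express the fix described in the first comment, as a sketch only: pick `args.output_dir` when it already holds a saved checkpoint and fall back to `args.bert_model` otherwise. The helper `pick_model_source` is hypothetical, and the two file names are assumptions (`pytorch_model.bin` is the file mentioned in the question; `config.json` is assumed to be what `CONFIG_NAME` resolves to).

```python
import os

# Assumed file names: `pytorch_model.bin` is mentioned in the question,
# `config.json` is an assumption about the value of CONFIG_NAME.
WEIGHTS_NAME = "pytorch_model.bin"
CONFIG_NAME = "config.json"


def pick_model_source(output_dir, bert_model):
    """Return output_dir if it holds a saved checkpoint, else bert_model."""
    has_weights = os.path.isfile(os.path.join(output_dir, WEIGHTS_NAME))
    has_config = os.path.isfile(os.path.join(output_dir, CONFIG_NAME))
    return output_dir if (has_weights and has_config) else bert_model
```

In a prediction-only run, the result could then be passed to `BertForQuestionAnswering.from_pretrained(...)` in place of `args.bert_model`.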

https://api.github.com/repos/huggingface/transformers/issues/500 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/500/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/500/comments | https://api.github.com/repos/huggingface/transformers/issues/500/events | https://github.com/huggingface/transformers/pull/500 | 434,196,137 | MDExOlB1bGxSZXF1ZXN0MjcxMjM3NTYw | 500 | Updating network handling | {

"login": "thomwolf",

"id": 7353373,

"node_id": "MDQ6VXNlcjczNTMzNzM=",

"avatar_url": "https://avatars.githubusercontent.com/u/7353373?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/thomwolf",

"html_url": "https://github.com/thomwolf",

"followers_url": "https://api.github.com/users/thomwolf/followers",

"following_url": "https://api.github.com/users/thomwolf/following{/other_user}",

"gists_url": "https://api.github.com/users/thomwolf/gists{/gist_id}",

"starred_url": "https://api.github.com/users/thomwolf/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/thomwolf/subscriptions",

"organizations_url": "https://api.github.com/users/thomwolf/orgs",

"repos_url": "https://api.github.com/users/thomwolf/repos",

"events_url": "https://api.github.com/users/thomwolf/events{/privacy}",

"received_events_url": "https://api.github.com/users/thomwolf/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [] | 1,555 | 1,566 | 1,555 | MEMBER | null | This PR adds:

- a bunch of tests for the models and tokenizers stored on S3 with `--runslow` (download and load one model/tokenizer for each type of model BERT, GPT, GPT-2, Transformer-XL)

- relaxed network connection checking (falls back on the last downloaded model in the cache when we can't get the latest ETag from S3) | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/500/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/500/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/500",

"html_url": "https://github.com/huggingface/transformers/pull/500",

"diff_url": "https://github.com/huggingface/transformers/pull/500.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/500.patch",

"merged_at": 1555507335000

} |

https://api.github.com/repos/huggingface/transformers/issues/499 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/499/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/499/comments | https://api.github.com/repos/huggingface/transformers/issues/499/events | https://github.com/huggingface/transformers/issues/499 | 434,182,603 | MDU6SXNzdWU0MzQxODI2MDM= | 499 | error when do python3 run_squad.py | {

"login": "directv00",

"id": 37170991,

"node_id": "MDQ6VXNlcjM3MTcwOTkx",

"avatar_url": "https://avatars.githubusercontent.com/u/37170991?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/directv00",

"html_url": "https://github.com/directv00",

"followers_url": "https://api.github.com/users/directv00/followers",

"following_url": "https://api.github.com/users/directv00/following{/other_user}",

"gists_url": "https://api.github.com/users/directv00/gists{/gist_id}",

"starred_url": "https://api.github.com/users/directv00/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/directv00/subscriptions",

"organizations_url": "https://api.github.com/users/directv00/orgs",

"repos_url": "https://api.github.com/users/directv00/repos",

"events_url": "https://api.github.com/users/directv00/events{/privacy}",

"received_events_url": "https://api.github.com/users/directv00/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1108649070,

"node_id": "MDU6TGFiZWwxMTA4NjQ5MDcw",

"url": "https://api.github.com/repos/huggingface/transformers/labels/Need%20more%20information",

"name": "Need more information",

"color": "d876e3",

"default": false,

"description": "Further information is requested"

}

] | closed | false | null | [] | [

"Did you install pytorch-pretrained-bert as indicated in the README?\r\n`pip install pytorch_pretrained_bert`\r\n\r\nYou don't have to convert the checkpoints yourself, they are already converted.\r\n\r\nTry reading the installation and usage sections of the README.",

"Of course I installed it.\r\nMore precisely, the error message is slightly different.\r\n```bash\r\nTraceback (most recent call last):\r\n File \"run_squad.py\", line 37, in <module>\r\n from pytorch_pretrained_bert.file_utils import PYTORCH_PRETRAINED_BERT_CACHE, WEIGHTS_NAME, CONFIG_NAME\r\nImportError: cannot import name WEIGHTS_NAME\r\n```",

"Hmm you are right, the examples are compatible with `master` only now that we have a new token serialization. I guess we'll have to do a new release (0.6.2) today so everybody is on the same page.\r\nLet me do that.",

"Actually, we'll wait for the merge of #506.\r\n\r\nIn the meantime you can install from source and it should work.",

"Oh, it worked immediately once I installed from source. Thanks.",

"Ok great.\r\n\r\nJust a side note on writing messages in github: you should add triple-quotes like this: \\``` before and after the command line, errors and code you are pasting. This way it's easier to read.\r\n\r\nEx:\r\n\\```\r\npip install -e .\r\n\\```\r\n\r\nwill display like:\r\n```\r\npip install -e .\r\n```",

"Good point(triple quotes).\r\nI didn't know what to do, but now I have it all.\r\nThanks.",

"> Actually, we'll wait for the merge of #506.\r\n> \r\n> In the meantime you can install from source and it should work.\r\n\r\nhow to \"install from source\"?",

"@YanZhangADS \r\n\r\nYou can install from source with this command below\r\n```\r\ngit clone https://github.com/huggingface/pytorch-pretrained-BERT.git\r\ncd pytorch-pretrained-BERT\r\npython setup.py install\r\n```",

"Same problem with \"ImportError: cannot import name WEIGHTS_NAME\". However, after building **0.6.1** from source, I get: \r\n```\r\nfrom pytorch_pretrained_bert.optimization import BertAdam, warmup_linear\r\n ImportError: cannot import name 'warmup_linear'\r\n```\r\nI don't need the warmup, so I removed the import, but letting you guys know that this is an import error as well. Thanks!",

"Thanks for that @dumitrescustefan, we're working on it in #518.\r\nI'm closing this issue for now as we start to deviate from the original discussion.",

"I just built from source. I'm still getting the same error as in original issue.",

"The version 0.4.0 doesn't give this issue.\r\npip install pytorch_pretrained_bert==0.4.0"

] | 1,555 | 1,580 | 1,556 | NONE | null | Hello,

I am new to pytorch-pretrained-BERT.

After successfully converting the TensorFlow init checkpoint to a PyTorch bin file,

I got an error when running run_squad.

I'm guessing I should have set up some configuration beforehand. Could anyone help?

See below.

```bash

File "run_squad.py", line 37, in <module>

from pytorch_pretrained_bert.file_utils import PYTORCH_PRETRAINED_BERT_CACHE, WEIGHTS_NAME, CONFIG_NAME

ImportError: No module named pytorch_pretrained_bert.file_utils

```

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/499/reactions",

"total_count": 4,

"+1": 4,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/499/timeline | completed | null | null |

https://api.github.com/repos/huggingface/transformers/issues/498 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/498/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/498/comments | https://api.github.com/repos/huggingface/transformers/issues/498/events | https://github.com/huggingface/transformers/pull/498 | 434,153,484 | MDExOlB1bGxSZXF1ZXN0MjcxMjAzODg4 | 498 | Gpt2 tokenization | {

"login": "thomwolf",

"id": 7353373,

"node_id": "MDQ6VXNlcjczNTMzNzM=",

"avatar_url": "https://avatars.githubusercontent.com/u/7353373?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/thomwolf",

"html_url": "https://github.com/thomwolf",

"followers_url": "https://api.github.com/users/thomwolf/followers",

"following_url": "https://api.github.com/users/thomwolf/following{/other_user}",

"gists_url": "https://api.github.com/users/thomwolf/gists{/gist_id}",

"starred_url": "https://api.github.com/users/thomwolf/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/thomwolf/subscriptions",

"organizations_url": "https://api.github.com/users/thomwolf/orgs",

"repos_url": "https://api.github.com/users/thomwolf/repos",

"events_url": "https://api.github.com/users/thomwolf/events{/privacy}",

"received_events_url": "https://api.github.com/users/thomwolf/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [] | 1,555 | 1,566 | 1,555 | MEMBER | null | Complete #489 by:

- adding tests on GPT-2 Tokenizer (at last)

- fixing GPT-2 tokenization to work on python 2 as well

- adding `special_tokens` handling logic in GPT-2 tokenizer

- fixing GPT and GPT-2 serialization logic to save special tokens | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/498/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/498/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/498",

"html_url": "https://github.com/huggingface/transformers/pull/498",

"diff_url": "https://github.com/huggingface/transformers/pull/498.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/498.patch",

"merged_at": 1555492002000

} |

https://api.github.com/repos/huggingface/transformers/issues/497 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/497/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/497/comments | https://api.github.com/repos/huggingface/transformers/issues/497/events | https://github.com/huggingface/transformers/issues/497 | 434,028,654 | MDU6SXNzdWU0MzQwMjg2NTQ= | 497 | UnboundLocalError: local variable 'special_tokens_file' referenced before assignment | {

"login": "yaroslavvb",

"id": 23068,

"node_id": "MDQ6VXNlcjIzMDY4",

"avatar_url": "https://avatars.githubusercontent.com/u/23068?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/yaroslavvb",

"html_url": "https://github.com/yaroslavvb",

"followers_url": "https://api.github.com/users/yaroslavvb/followers",

"following_url": "https://api.github.com/users/yaroslavvb/following{/other_user}",

"gists_url": "https://api.github.com/users/yaroslavvb/gists{/gist_id}",

"starred_url": "https://api.github.com/users/yaroslavvb/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/yaroslavvb/subscriptions",

"organizations_url": "https://api.github.com/users/yaroslavvb/orgs",

"repos_url": "https://api.github.com/users/yaroslavvb/repos",

"events_url": "https://api.github.com/users/yaroslavvb/events{/privacy}",

"received_events_url": "https://api.github.com/users/yaroslavvb/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Yes, this should be fixed by #498."

] | 1,555 | 1,555 | 1,555 | CONTRIBUTOR | null | Happens during this

```enc = GPT2Tokenizer.from_pretrained('gpt2')```

```

File "example_lambada_prediction_difference.py", line 23, in <module>

enc = GPT2Tokenizer.from_pretrained(model_name)

File "/bflm/pytorch-pretrained-BERT/pytorch_pretrained_bert/tokenization_gpt2.py", line 134, in from_pretrained

if special_tokens_file and 'special_tokens' not in kwargs:

UnboundLocalError: local variable 'special_tokens_file' referenced before assignment

```

Looking at the offending file, it looks like there's a code path on which `special_tokens_file` is never initialized

https://github.com/huggingface/pytorch-pretrained-BERT/blob/3d78e226e68a5c5d0ef612132b601024c3534e38/pytorch_pretrained_bert/tokenization_gpt2.py#L134 | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/497/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/497/timeline | completed | null | null |
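The error pattern reported here can be reproduced in a few lines. This is only an illustration of the general bug class, not the actual code of `tokenization_gpt2.py`; the function names and the `special_tokens.txt` file name are hypothetical.

```python
def lookup_buggy(name, known):
    if name not in known:
        special_tokens_file = name + "/special_tokens.txt"
    # When `name` is in `known`, the variable above was never assigned,
    # so the next line raises UnboundLocalError.
    return special_tokens_file


def lookup_fixed(name, known):
    special_tokens_file = None  # initialised on every code path
    if name not in known:
        special_tokens_file = name + "/special_tokens.txt"
    return special_tokens_file
```

Initialising the variable before the branch is the usual remedy: callers can then test it for `None` instead of hitting the exception.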

https://api.github.com/repos/huggingface/transformers/issues/496 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/496/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/496/comments | https://api.github.com/repos/huggingface/transformers/issues/496/events | https://github.com/huggingface/transformers/pull/496 | 434,011,487 | MDExOlB1bGxSZXF1ZXN0MjcxMDk1Mjgw | 496 | [run_gpt2.py] temperature should be a float, not int | {

"login": "8enmann",

"id": 1021104,

"node_id": "MDQ6VXNlcjEwMjExMDQ=",

"avatar_url": "https://avatars.githubusercontent.com/u/1021104?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/8enmann",

"html_url": "https://github.com/8enmann",

"followers_url": "https://api.github.com/users/8enmann/followers",

"following_url": "https://api.github.com/users/8enmann/following{/other_user}",

"gists_url": "https://api.github.com/users/8enmann/gists{/gist_id}",

"starred_url": "https://api.github.com/users/8enmann/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/8enmann/subscriptions",

"organizations_url": "https://api.github.com/users/8enmann/orgs",

"repos_url": "https://api.github.com/users/8enmann/repos",

"events_url": "https://api.github.com/users/8enmann/events{/privacy}",

"received_events_url": "https://api.github.com/users/8enmann/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Indeed, thanks @8enmann!"

] | 1,555 | 1,555 | 1,555 | CONTRIBUTOR | null | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/496/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/496/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/496",

"html_url": "https://github.com/huggingface/transformers/pull/496",

"diff_url": "https://github.com/huggingface/transformers/pull/496.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/496.patch",

"merged_at": 1555492134000

} |

|
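A small sketch of why the argument type in the PR title matters, assuming the script reads the temperature through `argparse`. The `--temperature` flag name and the `build_parser` helper are assumptions made for illustration.

```python
import argparse


def build_parser(temperature_type):
    """Build a parser whose --temperature flag uses the given type."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--temperature", type=temperature_type, default=1.0)
    return parser


# With type=float a fractional temperature parses as expected.
args = build_parser(float).parse_args(["--temperature", "0.7"])
```

With `type=int`, the same command line is rejected by `argparse` (it exits with a usage error), so fractional temperatures could not be passed at all.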

https://api.github.com/repos/huggingface/transformers/issues/495 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/495/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/495/comments | https://api.github.com/repos/huggingface/transformers/issues/495/events | https://github.com/huggingface/transformers/pull/495 | 433,929,690 | MDExOlB1bGxSZXF1ZXN0MjcxMDI5MDQx | 495 | Fix gradient overflow issue during attention mask | {

"login": "SudoSharma",

"id": 18308855,

"node_id": "MDQ6VXNlcjE4MzA4ODU1",

"avatar_url": "https://avatars.githubusercontent.com/u/18308855?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/SudoSharma",

"html_url": "https://github.com/SudoSharma",

"followers_url": "https://api.github.com/users/SudoSharma/followers",

"following_url": "https://api.github.com/users/SudoSharma/following{/other_user}",

"gists_url": "https://api.github.com/users/SudoSharma/gists{/gist_id}",

"starred_url": "https://api.github.com/users/SudoSharma/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/SudoSharma/subscriptions",

"organizations_url": "https://api.github.com/users/SudoSharma/orgs",

"repos_url": "https://api.github.com/users/SudoSharma/repos",

"events_url": "https://api.github.com/users/SudoSharma/events{/privacy}",

"received_events_url": "https://api.github.com/users/SudoSharma/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Ok, great, thanks @SudoSharma!",

"While the outputs are the same between 1e10 and 1e4, I shouldn't expect the outputs between fp32 and fp16 to be the same, should I? I get different outputs between the two when doing unconditional/conditional generation with top_k=40 but even with top_k=1. Usually they're the same for a while and then deviate. This is with Apex installed, so using FusedLayerNorm.\r\n\r\nIf I turn on Apex's AMP with `from apex import amp; amp.init()` then they still deviate but after a longer time (I think it makes the attention nn.Softmax use fp32). Have to remove the `model.half()` call when using AMP.\r\n\r\nPerhaps it's not realistic to have the outputs be the same when fp16 errors in the \"past\" tensors are compounding as the sequence gets longer? But it is surprising to see them differ for top_k=1 (deterministic) since only the largest logit affects the output there.\r\n\r\nP.S. For my site it's been enormously helpful to have this PyTorch implementation. @thomwolf Thank you!",

"Hi @AdamDanielKing,\r\nCongratulations on your demo!\r\nAre you using the updated API for apex Amp? (https://nvidia.github.io/apex/amp.html)\r\nAlso, should we discuss this in a new issue? At first, I thought this was related to this PR but I understand it's not, right?",

"@thomwolf You're probably right that a new issue is best. I've created one at #602.\r\n\r\nThanks for pointing out I was using the old Apex API. Switching to the new one unfortunately didn't fix the issue though."

] | 1,555 | 1,557 | 1,555 | CONTRIBUTOR | null | This fix is in reference to issue #382. GPT2 can now be trained in mixed precision, which I've confirmed with testing. I also tested unconditional generation on multiple seeds before and after changing 1e10 to 1e4 and there was no difference. Please let me know if there is anything else I can do to make this pull request better. Thanks for all your work! | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/495/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/495/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/495",

"html_url": "https://github.com/huggingface/transformers/pull/495",

"diff_url": "https://github.com/huggingface/transformers/pull/495.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/495.patch",

"merged_at": 1555492237000

} |
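The change from 1e10 to 1e4 is presumably needed because 1e10 cannot be represented in half precision, whose largest finite value is 65504. A sketch using only the standard library's `struct` module (format `e` is IEEE 754 half precision); the helper name `fits_in_fp16` is illustrative. Note that a tensor library would typically produce `inf` rather than raise an exception.

```python
import struct

FP16_MAX = 65504.0  # largest finite half-precision value


def fits_in_fp16(value):
    """True if `value` can be packed as an IEEE 754 half-precision float."""
    try:
        struct.pack("<e", value)
        return True
    except OverflowError:
        return False
```

Here `fits_in_fp16(1e4)` is true while `fits_in_fp16(1e10)` is false, which matches the direction of the fix.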

https://api.github.com/repos/huggingface/transformers/issues/494 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/494/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/494/comments | https://api.github.com/repos/huggingface/transformers/issues/494/events | https://github.com/huggingface/transformers/pull/494 | 433,917,699 | MDExOlB1bGxSZXF1ZXN0MjcxMDE5NTA3 | 494 | Fix indentation for unconditional generation | {

"login": "SudoSharma",

"id": 18308855,

"node_id": "MDQ6VXNlcjE4MzA4ODU1",

"avatar_url": "https://avatars.githubusercontent.com/u/18308855?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/SudoSharma",

"html_url": "https://github.com/SudoSharma",

"followers_url": "https://api.github.com/users/SudoSharma/followers",

"following_url": "https://api.github.com/users/SudoSharma/following{/other_user}",

"gists_url": "https://api.github.com/users/SudoSharma/gists{/gist_id}",

"starred_url": "https://api.github.com/users/SudoSharma/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/SudoSharma/subscriptions",

"organizations_url": "https://api.github.com/users/SudoSharma/orgs",

"repos_url": "https://api.github.com/users/SudoSharma/repos",

"events_url": "https://api.github.com/users/SudoSharma/events{/privacy}",

"received_events_url": "https://api.github.com/users/SudoSharma/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Thanks!"

] | 1,555 | 1,555 | 1,555 | CONTRIBUTOR | null | Hey guys, there was an issue with the example file for generating unconditional samples. I just fixed the indentation. Let me know if there is anything else I need to do! Thanks for the great work on this repo. | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/494/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/494/timeline | null | false | {

"url": "https://api.github.com/repos/huggingface/transformers/pulls/494",

"html_url": "https://github.com/huggingface/transformers/pull/494",

"diff_url": "https://github.com/huggingface/transformers/pull/494.diff",

"patch_url": "https://github.com/huggingface/transformers/pull/494.patch",

"merged_at": 1555492296000

} |

https://api.github.com/repos/huggingface/transformers/issues/493 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/493/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/493/comments | https://api.github.com/repos/huggingface/transformers/issues/493/events | https://github.com/huggingface/transformers/issues/493 | 433,778,597 | MDU6SXNzdWU0MzM3Nzg1OTc= | 493 | how to use extracted features in extract_features.py? | {

"login": "heslowen",

"id": 22348625,

"node_id": "MDQ6VXNlcjIyMzQ4NjI1",

"avatar_url": "https://avatars.githubusercontent.com/u/22348625?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/heslowen",

"html_url": "https://github.com/heslowen",

"followers_url": "https://api.github.com/users/heslowen/followers",

"following_url": "https://api.github.com/users/heslowen/following{/other_user}",

"gists_url": "https://api.github.com/users/heslowen/gists{/gist_id}",

"starred_url": "https://api.github.com/users/heslowen/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/heslowen/subscriptions",

"organizations_url": "https://api.github.com/users/heslowen/orgs",

"repos_url": "https://api.github.com/users/heslowen/repos",

"events_url": "https://api.github.com/users/heslowen/events{/privacy}",

"received_events_url": "https://api.github.com/users/heslowen/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1260952223,

"node_id": "MDU6TGFiZWwxMjYwOTUyMjIz",

"url": "https://api.github.com/repos/huggingface/transformers/labels/Discussion",

"name": "Discussion",

"color": "22870e",

"default": false,

"description": "Discussion on a topic (keep it focused or open a new issue though)"

},

{

"id": 1314768611,

"node_id": "MDU6TGFiZWwxMzE0NzY4NjEx",

"url": "https://api.github.com/repos/huggingface/transformers/labels/wontfix",

"name": "wontfix",

"color": "ffffff",

"default": true,

"description": null

}

] | closed | false | null | [] | [

"Without fine-tuning, BERT features are usually less useful than plain GloVe or word2vec indeed.\r\nThey start to be interesting when you fine-tune a classifier on top of BERT.\r\n\r\nSee the recent study by Matthew Peters, Sebastian Ruder, Noah A. Smith ([To Tune or Not to Tune? Adapting Pretrained Representations to Diverse Tasks](https://arxiv.org/abs/1903.05987)) for some practical tips on that.",

"thank you so much~",

"@heslowen could you please share the code for extracting features in order to use them for learning a classifier? Thanks.",

"@joistick11 you can find a demo in extract_features.py",

"Could you please help me?\r\nI was using bert-as-service (https://github.com/hanxiao/bert-as-service) and there is a model method `encode`, which accepts a list and returns a list of the same size, each element containing a sentence embedding. All the elements are of the same size. \r\n1. When I use extract_features.py, it returns an embedding for each recognized symbol in the sentence from the specified layers. I mean, instead of a sentence embedding it returns symbol embeddings. How should I use it, for instance, to train an SVM? I am using `bert-base-multilingual-cased`\r\n2. Which layer output should I use? Is it the one with index `-1`?\r\n\r\nThank you very much!",

"@joistick11 you want to embed a sentence to a vector?\r\n`all_encoder_layers, pooled_output = model(input_ids, token_type_ids=None, attention_mask=input_mask)` pooled_output may help you.\r\nI have no idea about using these features to train an SVM although I know the theory about SVM.\r\nFor the second question, please refer to thomwolf's answer.\r\nI used the top 4 encoders_layers, but I did not get a better result than using Glove ",

"@heslowen Hello, would you please help me? For a sequence like [cls I have a dog.sep], when I input this to Bert and get the last hidden layer of sequence out, let’s say the output is “vector”, is the vector[0] embedding of cls, vector[1] embedding of I, etc. vector[-1] embedding of sep?",

"@heslowen How did you extract features after training a classifier on top of BERT? I've been trying to do the same, but I'm unable to do so. \r\nDo I first follow run_classifier.py, and then extract the features from tf.Estimator?",

"@rvoak I use PyTorch. I did it as in the demo in extract_features.py. It is easy to do: you just need to load a tokenizer and a BERT model, tokenize your sentences, and then run the model to get the encoded_layers",

"@RomanShen yes you're right\r\n",

"@heslowen Thanks for your reply!",

"This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.\n",

"@heslowen Sorry about my English. I am working on a sentence embedding task. I fine-tuned on my corpus with this library and got config.json, vocab.txt and model.bin files, but in BERT's original documentation, features are extracted by loading from a TensorFlow ckpt checkpoint. According to your answer, I must write the feature extraction for PyTorch myself, is that right? Please help me",

"@hungph-dev-ict Do you mind opening a new issue with your problem? I'll try and help you out.",

"@LysandreJik Thank you for your help, I will find solution for my problem, it's use last hidden layer in bert mechanism, but if you have a better solution, can you help me ?\r\nSo i have more concerns about with my corpus, with this library code, use tokenizer from pretrained BERT model, so I want use only BasicTokenizer. Can you help me ? ",

"How long should the extract_features.py take to complete?\r\n\r\nwhen using 'bert-large-uncased' it takes seconds however it writes a blank file.\r\nwhen using 'bert-base-uncased' its been running for over 30 mins.\r\n\r\nany advice?\r\n\r\nthe code I used:\r\n\r\n!python extract_features.py \\\r\n --input_file data/src_train.txt \\\r\n --output_file data/output1.jsonl \\\r\n --bert_model bert-base-uncased \\\r\n --layers -1\r\n",

"You can look at what the BertForSequenceClassification model [https://github.com/huggingface/transformers/blob/3ba5470eb85464df62f324bea88e20da234c423f/pytorch_pretrained_bert/modeling.py#L867 ](url) does in it’s forward 139.\r\nThe pooled_output obtained from self.bert would seem to be the features you are looking for."

] | 1,555 | 1,576 | 1,562 | NONE | null | I extract features as in the example in extract_features.py. But when I used these features (the last encoded_layers) as word embeddings in a text classification task, I got a worse result than with 300D GloVe (all other parameters being the same). I also used these features to compute the cosine similarity between the words in sentences, and found that all values were around 0.6. So can these features be used like GloVe or word2vec embeddings? What exactly are these features? | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/493/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/493/timeline | completed | null | null |
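
The thread above asks how to turn per-token BERT vectors into a single sentence vector (for example, as SVM input) and mentions comparing vectors by cosine similarity. Besides the `pooled_output` suggested in the comments, one common option is to average the token vectors while skipping padding. The following is a minimal sketch of that idea in plain Python; the function names `mean_pool` and `cosine_similarity` and the toy vectors are assumptions made for illustration and are not part of the library or of extract_features.py.

```python
import math


def mean_pool(token_vectors, attention_mask):
    """Average the vectors of non-padding tokens into one sentence vector."""
    # Keep only the vectors whose mask entry is non-zero (real tokens).
    kept = [vec for vec, keep in zip(token_vectors, attention_mask) if keep]
    if not kept:
        raise ValueError("attention_mask selects no tokens")
    dim = len(kept[0])
    return [sum(vec[i] for vec in kept) / len(kept) for i in range(dim)]


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy stand-ins for one sentence's last-layer token vectors (hypothetical values).
token_vectors = [[1.0, 0.0], [0.0, 1.0], [9.0, 9.0]]
attention_mask = [1, 1, 0]  # the third position is padding and is ignored
sentence_vector = mean_pool(token_vectors, attention_mask)
```

With real model output, `token_vectors` would be one sentence's rows of the chosen encoder layer and `attention_mask` the matching input mask; the resulting fixed-size vector can then be passed to any classifier that expects one feature vector per example.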

https://api.github.com/repos/huggingface/transformers/issues/492 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/492/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/492/comments | https://api.github.com/repos/huggingface/transformers/issues/492/events | https://github.com/huggingface/transformers/issues/492 | 433,597,604 | MDU6SXNzdWU0MzM1OTc2MDQ= | 492 | no_decay = ['bias', 'LayerNorm.bias', 'LayerNorm.weight'] | {

"login": "RayXu14",

"id": 22774575,

"node_id": "MDQ6VXNlcjIyNzc0NTc1",

"avatar_url": "https://avatars.githubusercontent.com/u/22774575?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/RayXu14",

"html_url": "https://github.com/RayXu14",

"followers_url": "https://api.github.com/users/RayXu14/followers",

"following_url": "https://api.github.com/users/RayXu14/following{/other_user}",

"gists_url": "https://api.github.com/users/RayXu14/gists{/gist_id}",

"starred_url": "https://api.github.com/users/RayXu14/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/RayXu14/subscriptions",

"organizations_url": "https://api.github.com/users/RayXu14/orgs",

"repos_url": "https://api.github.com/users/RayXu14/repos",

"events_url": "https://api.github.com/users/RayXu14/events{/privacy}",

"received_events_url": "https://api.github.com/users/RayXu14/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | [

"Yes. We are reproducing the behavior of the original optimizer, see [here](https://github.com/google-research/bert/blob/master/optimization.py#L65).",

"thanks~",

"but why?",

"I have the same question, but did this prove to be better? Or is it just to speed up calculations?"

] | 1,555 | 1,613 | 1,555 | NONE | null | What does this mean? Why do these three kinds of parameters get no weight decay? | {

"url": "https://api.github.com/repos/huggingface/transformers/issues/492/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/492/timeline | completed | null | null |
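
To make the `no_decay` list from the issue above concrete, here is a minimal sketch of how such a list is typically used: parameters whose names contain one of the markers go into a group with zero weight decay, and all others into a group with a non-zero value. The helper name `group_parameters`, the toy parameter names, and the `0.01` decay value are assumptions for illustration; plain strings stand in for real parameter tensors so the sketch runs without any dependencies.

```python
def group_parameters(named_params, no_decay, weight_decay=0.01):
    """Split (name, param) pairs into a decayed and a non-decayed group."""
    decayed, exempt = [], []
    for name, param in named_params:
        # A parameter is exempt if any marker is a substring of its name.
        if any(marker in name for marker in no_decay):
            exempt.append(param)
        else:
            decayed.append(param)
    return [
        {"params": decayed, "weight_decay": weight_decay},
        {"params": exempt, "weight_decay": 0.0},
    ]


no_decay = ["bias", "LayerNorm.bias", "LayerNorm.weight"]

# Hypothetical parameter names; strings stand in for the tensors themselves.
named_params = [
    ("encoder.layer.0.attention.self.query.weight", "W_query"),
    ("encoder.layer.0.attention.self.query.bias", "b_query"),
    ("encoder.layer.0.output.LayerNorm.weight", "ln_weight"),
    ("encoder.layer.0.output.LayerNorm.bias", "ln_bias"),
]

groups = group_parameters(named_params, no_decay)
```

A list of dictionaries with a `"params"` key plus per-group options is the shape PyTorch optimizers accept for per-parameter settings, so with real tensors the result of such a helper could be passed to an optimizer directly.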

https://api.github.com/repos/huggingface/transformers/issues/491 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/491/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/491/comments | https://api.github.com/repos/huggingface/transformers/issues/491/events | https://github.com/huggingface/transformers/issues/491 | 433,550,221 | MDU6SXNzdWU0MzM1NTAyMjE= | 491 | pretrained GPT-2 checkpoint gets only 31% accuracy on Lambada | {

"login": "yaroslavvb",

"id": 23068,

"node_id": "MDQ6VXNlcjIzMDY4",

"avatar_url": "https://avatars.githubusercontent.com/u/23068?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/yaroslavvb",

"html_url": "https://github.com/yaroslavvb",

"followers_url": "https://api.github.com/users/yaroslavvb/followers",

"following_url": "https://api.github.com/users/yaroslavvb/following{/other_user}",

"gists_url": "https://api.github.com/users/yaroslavvb/gists{/gist_id}",

"starred_url": "https://api.github.com/users/yaroslavvb/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/yaroslavvb/subscriptions",

"organizations_url": "https://api.github.com/users/yaroslavvb/orgs",

"repos_url": "https://api.github.com/users/yaroslavvb/repos",

"events_url": "https://api.github.com/users/yaroslavvb/events{/privacy}",

"received_events_url": "https://api.github.com/users/yaroslavvb/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1260952223,

"node_id": "MDU6TGFiZWwxMjYwOTUyMjIz",

"url": "https://api.github.com/repos/huggingface/transformers/labels/Discussion",

"name": "Discussion",

"color": "22870e",

"default": false,

"description": "Discussion on a topic (keep it focused or open a new issue though)"

}

] | closed | false | null | [] | [

"Accuracy goes to 31% if I use [stop-word filter](https://github.com/cybertronai/bflm/blob/51908bdd15477a0cedfbd010d489f8d355443b6a/eval_lambada_slow.py#L62), still seems lower than expected ([predictions](https://s3.amazonaws.com/yaroslavvb2/data/lambada_predictions_stopword_filter.txt))\r\n",

"Hi, I doubt it's a problem with the model. Usually the culprit is too find in the pre-processing logic.\r\n\r\nYour dataset seems to be pre-processed but Radford, Wu et al. says they are using a version without preprocessing (end of section 3.3). GPT-2 is likely sensitive to tokenization issues and the like.\r\n\r\nIf you want to check the model it-self, you could try comparing with the predictions of the Tensorflow version on a few lambada completions?",

"Applying [detokenization](https://github.com/cybertronai/bflm/blob/d58a6860451ee2afa3688aff13d104ad74001ebe/eval_lambada_slow.py#L77) raises accuracy to 33.11%\r\n\r\nI spot checked a few errors against TF implementation and they give the same errors, so it seems likely the difference is due to eval protocol, rather than the checkpoint",

"IMHO \"without pre-processing\" means taking the original dataset without modification, which is what I also did here.\r\n\r\nHowever in the original dataset, everything is tokenized. IE \"haven't\" was turned into \"have n't\"\r\nEither way, undoing this tokenization only has a improvement of 2%, so there must be some deeper underlying difference in the way OpenAI did their evaluation.\r\n",

"Indeed. It's not very clear to me what they mean exactly by \"stop-word filter\". It seems like the kind of heuristic that can have a very large impact on the performances.\r\n\r\nMaybe a better filtering is key. I would probably go with a sort of beam-search to compute the probability of having a punctuation/end-of-sentence token after the predicted word and use that to filter the results.",

"I spoke with Alec and turns out for evaluation they got used the \"raw\" lambada corpus which was obtained by finding original sentences in book corpus that matched the tokenized versions in the lambada release. So to to reproduce the numbers we need the \"raw\" corpus https://github.com/openai/gpt-2/issues/131",

"I'm now able to get within 1% of their reported accuracy on GPT2-small. The two missing modifications were:\r\n1. Evaluate on OpenAI's version of lambada which adds extra formatting\r\n2. Evaluate by counting number of times the last BPE token is predicted incorrectly instead of last word, details are in https://github.com/openai/gpt-2/issues/131#issuecomment-497136199"

] | 1,555 | 1,559 | 1,559 | CONTRIBUTOR | null | For some reason I only see 26% accuracy when evaluating on Lambada for GPT-2 checkpoint instead of expected 45.99%

Here's a file of [predictions](https://s3.amazonaws.com/yaroslavvb2/data/lambada_predictions.txt) with sets of 3 lines of the form:

ground truth

predicted last_word

is_counted_as_error

Generated by this [script](https://github.com/cybertronai/bflm/blob/master/eval_lambada_slow.py)

Could this be caused by the way GPT-2 checkpoint was imported into HuggingFace?

| {

"url": "https://api.github.com/repos/huggingface/transformers/issues/491/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/transformers/issues/491/timeline | completed | null | null |
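
The last comment in the thread above distinguishes scoring the last BPE token from scoring the whole last word. The sketch below illustrates how the two protocols can give different accuracies on the same predictions. It is only an illustration of that distinction, not the evaluation script linked in the issue; the function names and the token ids are hypothetical.

```python
def last_token_accuracy(examples):
    """Fraction of examples whose final BPE token is predicted correctly."""
    correct = sum(1 for pred, gold in examples if pred[-1] == gold[-1])
    return correct / len(examples)


def last_word_accuracy(examples):
    """Fraction of examples where every BPE token of the last word matches."""
    correct = sum(1 for pred, gold in examples if pred == gold)
    return correct / len(examples)


# Each example pairs the predicted and the gold BPE ids of the final word.
# The ids are made up for illustration.
examples = [
    ([11, 42], [11, 42]),  # whole word correct
    ([7, 42], [11, 42]),   # only the last BPE token correct
    ([5], [6]),            # wrong under both protocols
]

token_acc = last_token_accuracy(examples)
word_acc = last_word_accuracy(examples)
```

On this toy data the token-level score is higher than the word-level score, because a multi-token word counts as correct at the token level as soon as its final piece matches.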

https://api.github.com/repos/huggingface/transformers/issues/490 | https://api.github.com/repos/huggingface/transformers | https://api.github.com/repos/huggingface/transformers/issues/490/labels{/name} | https://api.github.com/repos/huggingface/transformers/issues/490/comments | https://api.github.com/repos/huggingface/transformers/issues/490/events | https://github.com/huggingface/transformers/pull/490 | 433,306,374 | MDExOlB1bGxSZXF1ZXN0MjcwNTM1OTk1 | 490 | Clean up GPT and GPT-2 losses computation | {

"login": "thomwolf",

"id": 7353373,

"node_id": "MDQ6VXNlcjczNTMzNzM=",

"avatar_url": "https://avatars.githubusercontent.com/u/7353373?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/thomwolf",

"html_url": "https://github.com/thomwolf",

"followers_url": "https://api.github.com/users/thomwolf/followers",

"following_url": "https://api.github.com/users/thomwolf/following{/other_user}",

"gists_url": "https://api.github.com/users/thomwolf/gists{/gist_id}",

"starred_url": "https://api.github.com/users/thomwolf/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/thomwolf/subscriptions",

"organizations_url": "https://api.github.com/users/thomwolf/orgs",

"repos_url": "https://api.github.com/users/thomwolf/repos",

"events_url": "https://api.github.com/users/thomwolf/events{/privacy}",