Model Card for Taiwan-tinyllama-v1.1-base

This is a continually pretrained version of TinyLlama-v1.1 tailored for Traditional Chinese. The continued-pretraining dataset contains over 10B tokens. With bfloat16 weights, inference requires only about 3GB of VRAM.
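
The VRAM figure can be sanity-checked with a rough estimate. The sketch below assumes the base TinyLlama architecture (about 1.1B parameters, 22 layers, 4 key/value heads of dimension 64); these values are not stated in this card and may differ for this checkpoint, for example if the vocabulary was extended. It covers only the weights and the key/value cache, so whatever remains of the reported figure would presumably come from activations, the CUDA context, and allocator overhead.

```python
# Rough bfloat16 inference-memory estimate.
# All architecture numbers below are assumptions taken from the base
# TinyLlama 1.1B configuration, not from this model card.
BYTES_PER_BF16 = 2
NUM_PARAMS = 1_100_000_000   # assumed parameter count
NUM_LAYERS = 22              # assumed
NUM_KV_HEADS = 4             # assumed (grouped-query attention)
HEAD_DIM = 64                # assumed
CONTEXT_LENGTH = 2048        # max length used elsewhere in this card


def weight_bytes(num_params: int = NUM_PARAMS) -> int:
    """Memory taken by the weights alone in bfloat16."""
    return num_params * BYTES_PER_BF16


def kv_cache_bytes(seq_len: int = CONTEXT_LENGTH) -> int:
    """Memory taken by the key/value cache for one sequence."""
    # One key and one value vector per layer, per KV head, per token.
    per_token = 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * BYTES_PER_BF16
    return per_token * seq_len


if __name__ == "__main__":
    gib = 1024 ** 3
    print(f"weights:  {weight_bytes() / gib:.2f} GiB")
    print(f"KV cache: {kv_cache_bytes() / gib:.3f} GiB at {CONTEXT_LENGTH} tokens")
```

Under these assumptions the weights dominate, and the cache for a single 2048-token sequence is comparatively small.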

Usage

This is a causal language model, not a chat model. It is not designed to generate human-like conversational responses; it generates text that continues the text it is given.

    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer, TextStreamer

    def generate_response(prompt):
        '''
        Simple test for the model: stream a sampled continuation of `prompt`.
        '''
        # tokenize the input
        tokenized_input = tokenizer(prompt, return_tensors='pt').to(device)
        print(tokenized_input['input_ids'])
        # generate the response; the streamer prints tokens as they are produced
        _ = model.generate(
            input_ids=tokenized_input['input_ids'],
            attention_mask=tokenized_input['attention_mask'],
            pad_token_id=tokenizer.pad_token_id,
            do_sample=True,
            repetition_penalty=1.0,
            max_length=2048,
            streamer=streamer,
        )

    if __name__ == '__main__':
        device = 'cuda' if torch.cuda.is_available() else 'cpu'
        # flash_attention_2 requires the flash-attn package and a supported CUDA GPU
        model = AutoModelForCausalLM.from_pretrained(
            "benchang1110/Taiwan-tinyllama-v1.1-base",
            attn_implementation="flash_attention_2",
            device_map=device,
            torch_dtype=torch.bfloat16,
        )
        tokenizer = AutoTokenizer.from_pretrained("benchang1110/Taiwan-tinyllama-v1.1-base", use_fast=True)
        streamer = TextStreamer(tokenizer)
        while True:
            text = input("input a simple prompt:")
            generate_response(text)
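
Because this is a base model, prompts tend to work better when written as the beginning of a text to be continued rather than as an instruction. One common way to do that is a few-shot completion prompt. The sketch below is illustrative only: `build_completion_prompt` and the `問題`/`答案` labels are hypothetical choices, not part of this model's API or its training format.

```python
def build_completion_prompt(examples, query, q_label="問題", a_label="答案"):
    """Format (question, answer) pairs plus a new question as text to continue.

    Hypothetical helper: the labels and layout are illustrative choices.
    """
    blocks = [f"{q_label}:{q}\n{a_label}:{a}" for q, a in examples]
    # Leave the final answer empty so the model continues from this point.
    blocks.append(f"{q_label}:{query}\n{a_label}:")
    return "\n\n".join(blocks)


if __name__ == "__main__":
    prompt = build_completion_prompt(
        examples=[("台灣最高的山是哪一座?", "玉山")],
        query="台灣最長的河流是哪一條?",
    )
    print(prompt)
```

The resulting string can be passed to `generate_response` in place of a bare question.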

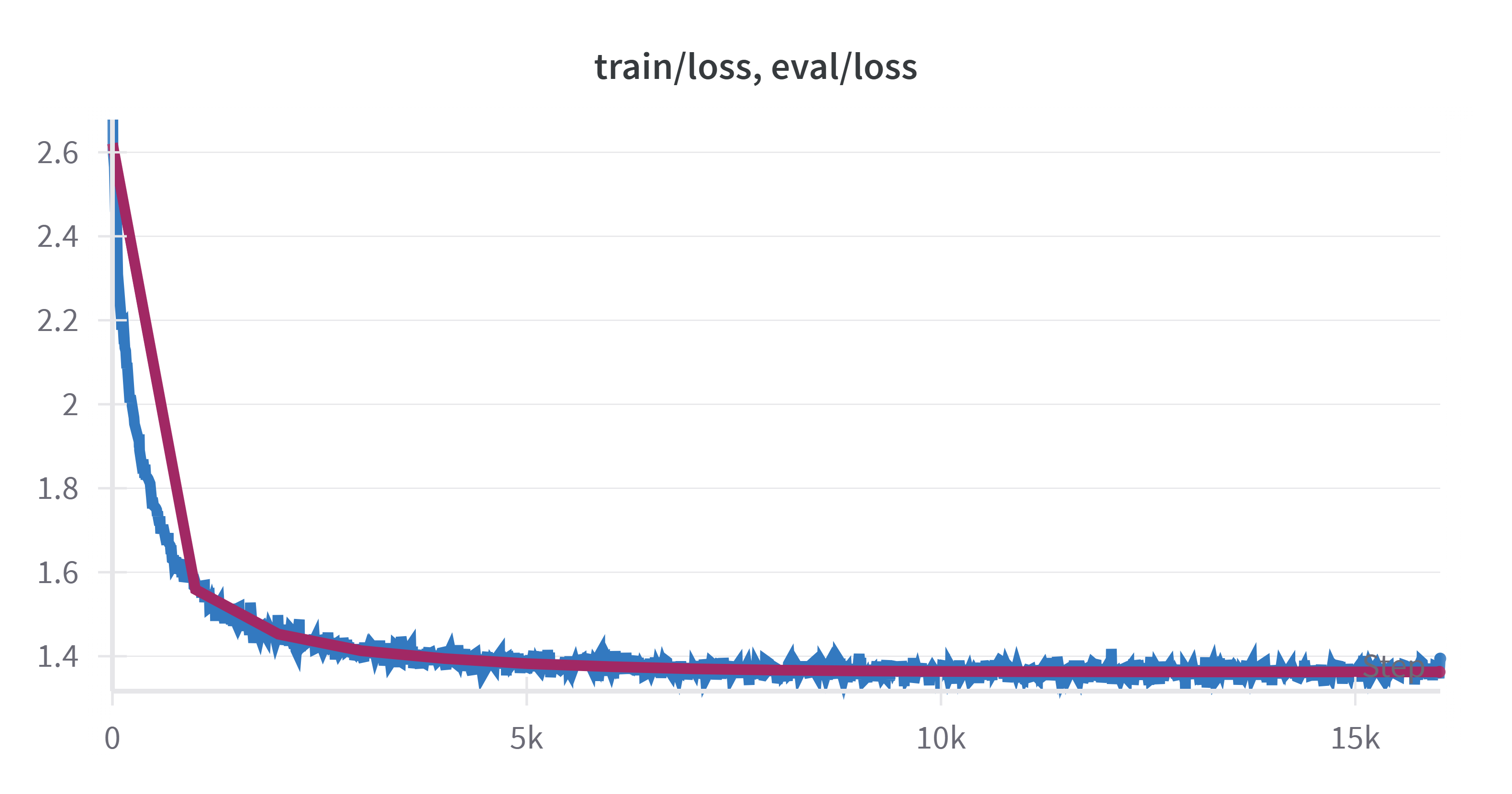

Training Procedure

The following training hyperparameters are used:

| Data Size (tokens) | Global Batch Size | Learning Rate | Epochs | Max Length | Weight Decay |
|---|---|---|---|---|---|
| 10B | 32 | 5e-5 | 1 | 2048 | 1e-4 |

Compute Infrastructure

1x A100 (80GB). Training took approximately 200 GPU hours.
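
The hyperparameters and the compute figure together imply a rough step count and throughput. The sketch below assumes the global batch size counts sequences, that every sequence is packed to the full 2048-token maximum length, and that the dataset is exactly 10B tokens (the card says "over 10B"). The results are therefore order-of-magnitude estimates, not reported numbers.

```python
import math

DATA_TOKENS = 10_000_000_000   # "over 10B" in the card; treated as exactly 10B here
GLOBAL_BATCH_SIZE = 32         # assumed to count sequences per optimizer step
MAX_LENGTH = 2048              # tokens per sequence (assumes full packing)
GPU_HOURS = 200                # approximate, on a single A100


def tokens_per_step() -> int:
    """Tokens consumed by one optimizer step."""
    return GLOBAL_BATCH_SIZE * MAX_LENGTH


def optimizer_steps() -> int:
    """Steps needed for one epoch over the data."""
    return math.ceil(DATA_TOKENS / tokens_per_step())


def tokens_per_second() -> float:
    """Average training throughput implied by the GPU-hour figure."""
    return DATA_TOKENS / (GPU_HOURS * 3600)


if __name__ == "__main__":
    print(f"tokens per step: {tokens_per_step()}")
    print(f"optimizer steps: {optimizer_steps()}")
    print(f"tokens per second: {tokens_per_second():.0f}")
```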
