---
license: apache-2.0
datasets:
- totally-not-an-llm/EverythingLM-data-V3
language:
- en
tags:
- openllama
- 3b
---

Trained for 3 epochs on the EverythingLM data.

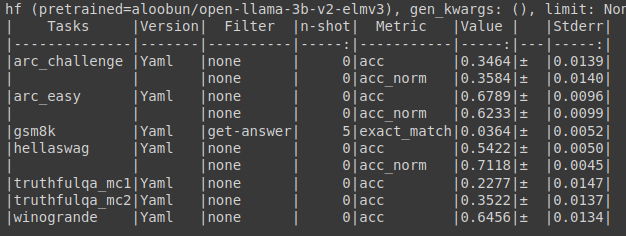

Eval results:


I usually tweak models smaller than 3B and mix LoRAs, but now I'm trying my hand at fine-tuning a 3B model. Let's see how it goes.