|

--- |

|

license: creativeml-openrail-m |

|

tags: |

|

- text-to-image |

|

- stable-diffusion |

|

- anime |

|

- aiart |

|

--- |

|

|

|

**This model is trained on 6(+1?) characters from ONIMAI: I'm Now Your Sister! (お兄ちゃんはおしまい!)** |

|

|

|

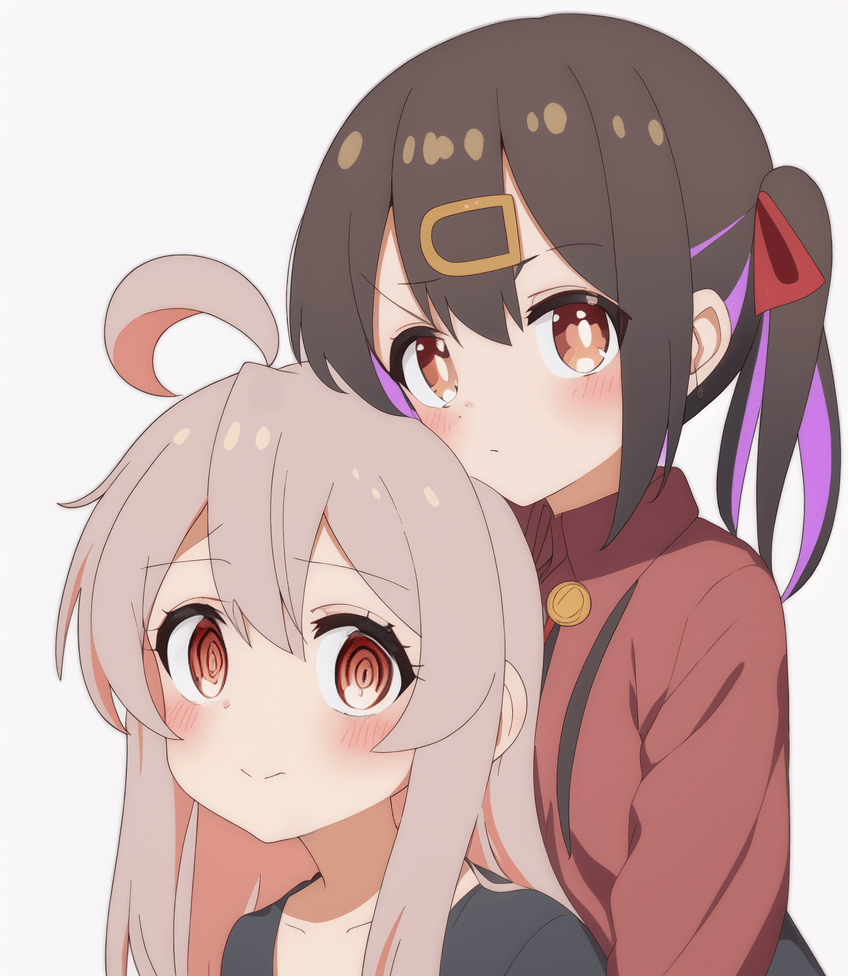

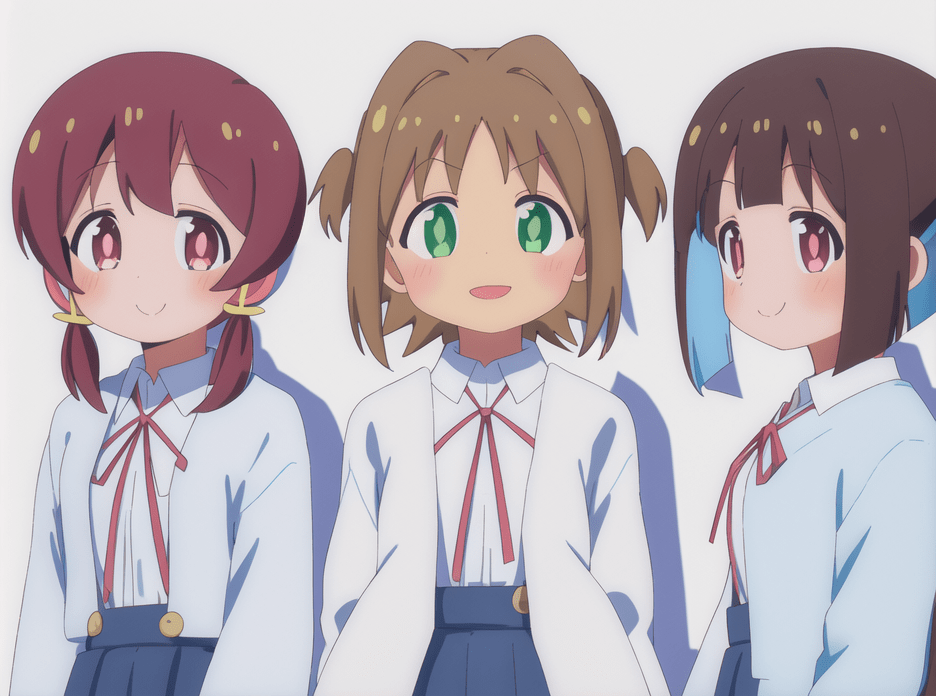

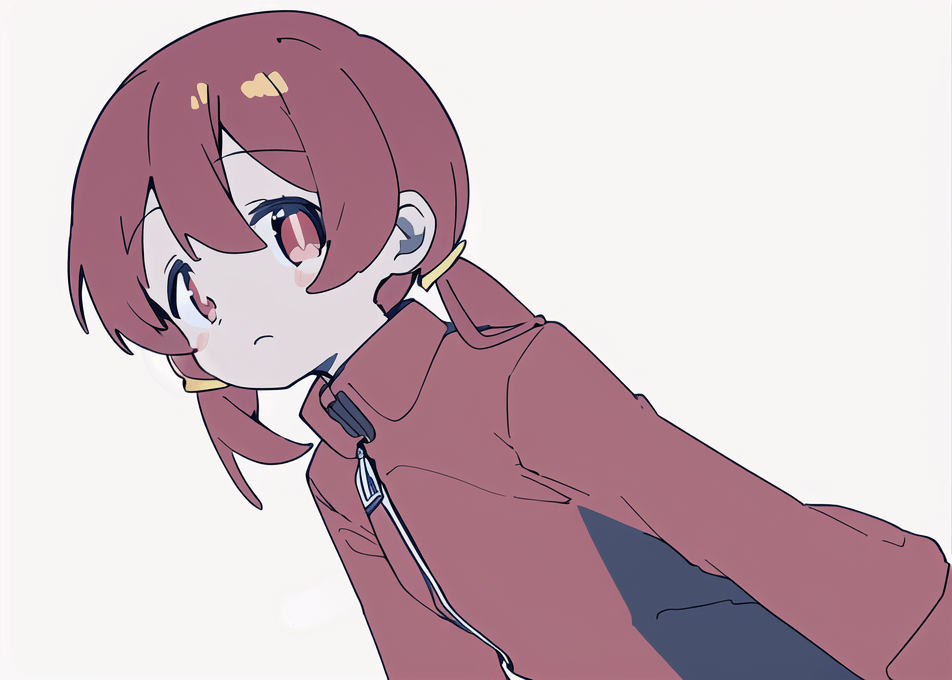

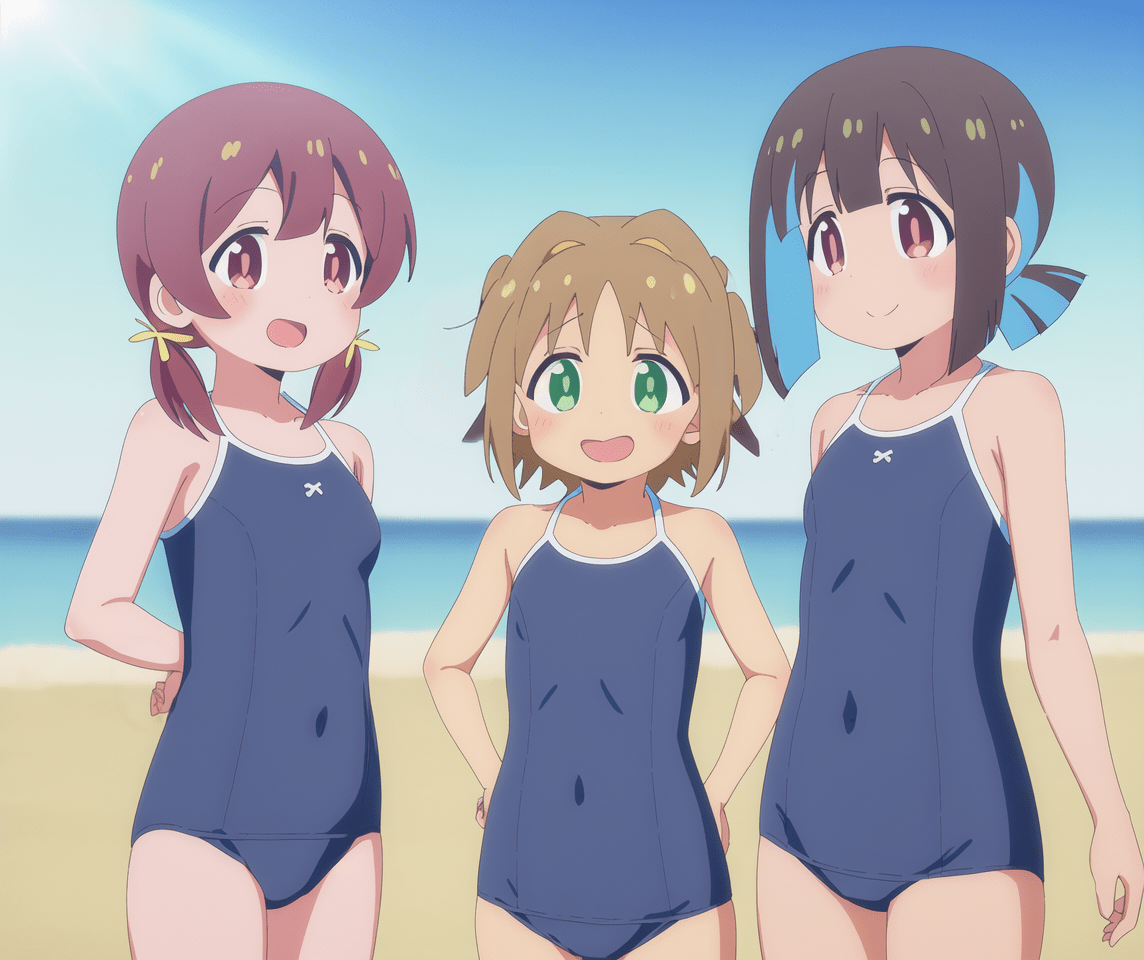

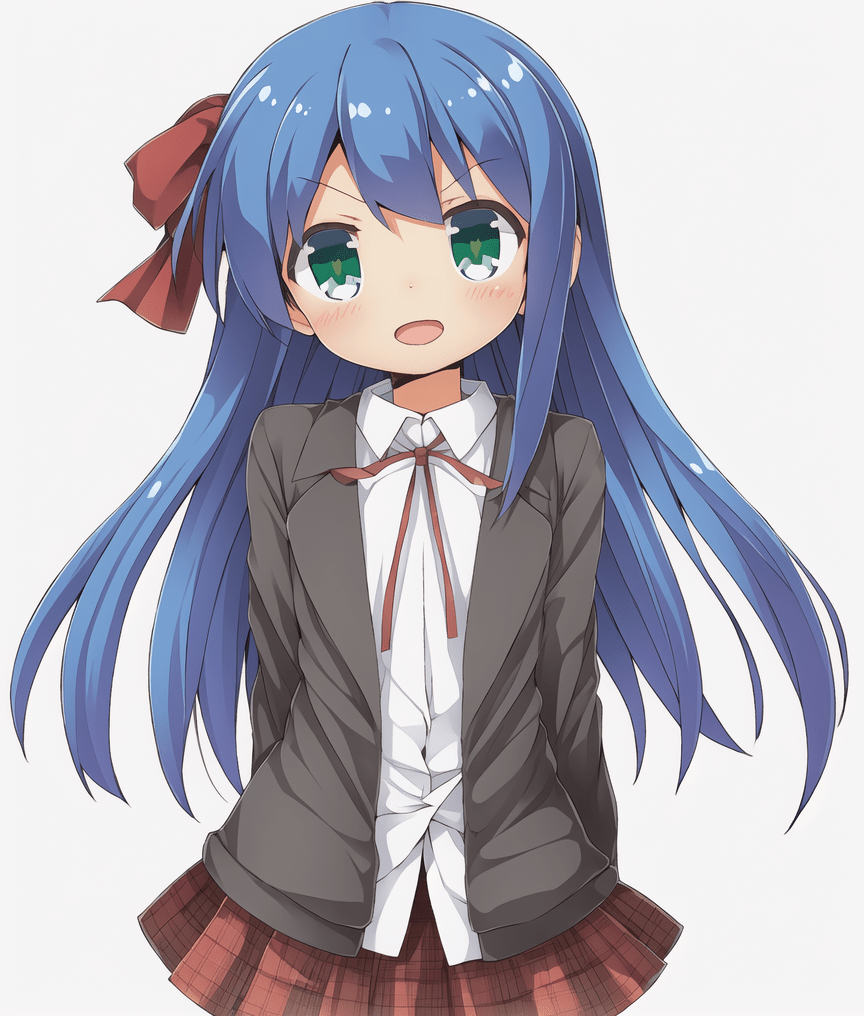

### Example Generations |

|

|

|

|

|

|

|

|

|

### Update -- 2023.02.19 |

|

|

|

Two lora checkpoints trained with the same dataset are added to the `loras` subfolder. The first one seems to work already. |

|

The characters are learned but unfortunately not the styles nor the outfits. |

|

It is trained based on ACertainty and works fine on Orange and Anything, but not so well on models that are trained further such as MyneFactoryBase or my own models. |

|

I still cannot figure out when Loras get transferred correctly. |

|

|

|

The lora has dimension 32, alpha 1, and is trained with learning rate 1e-4. |

|

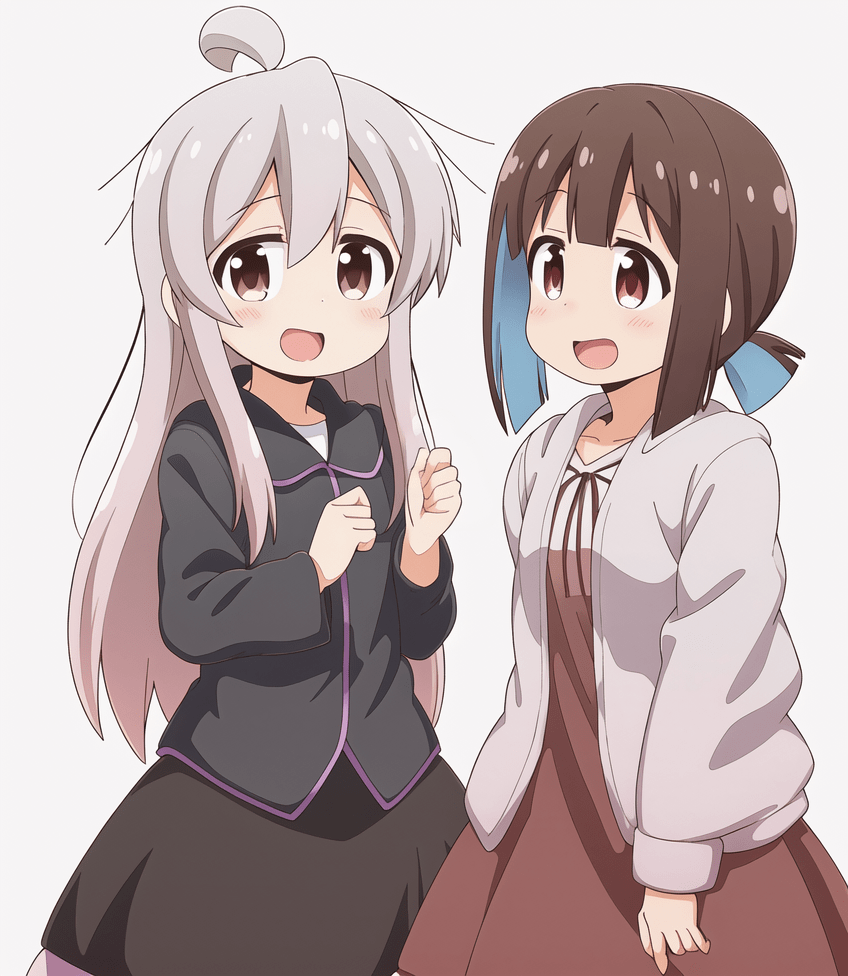

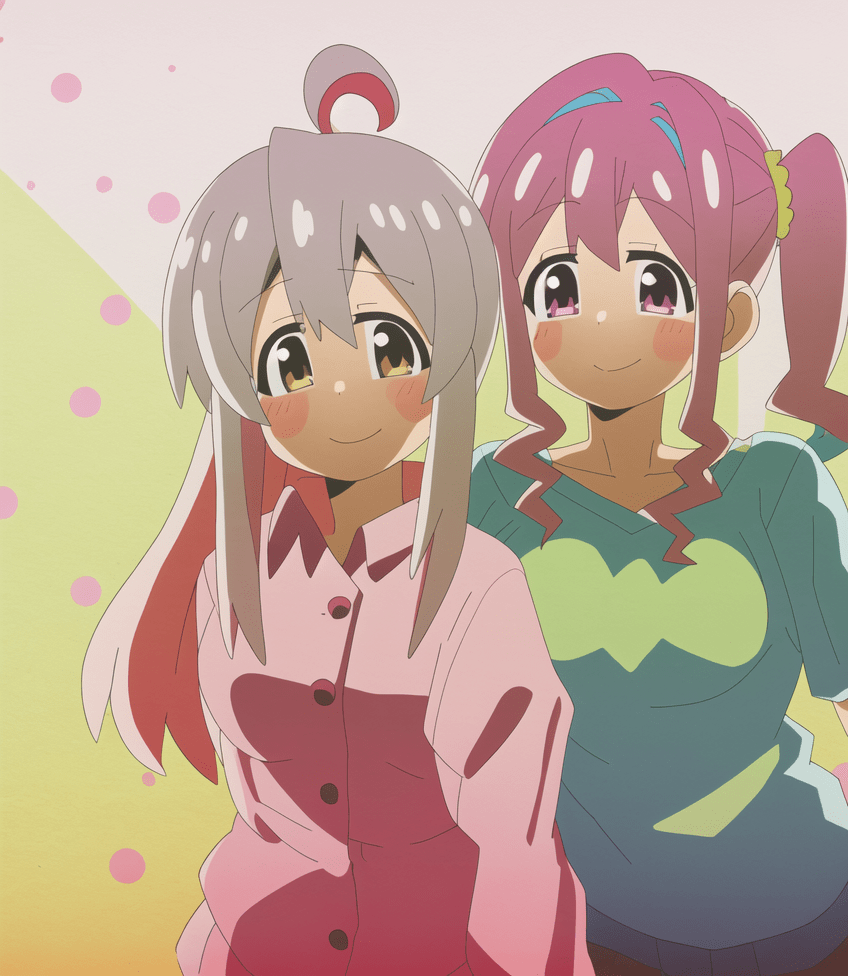

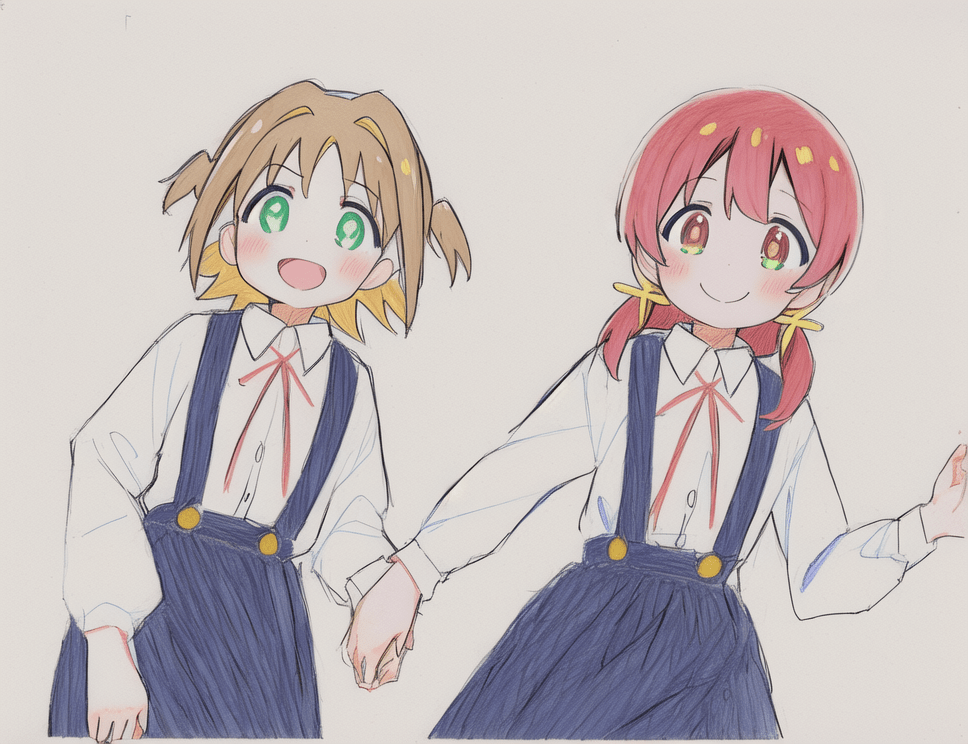

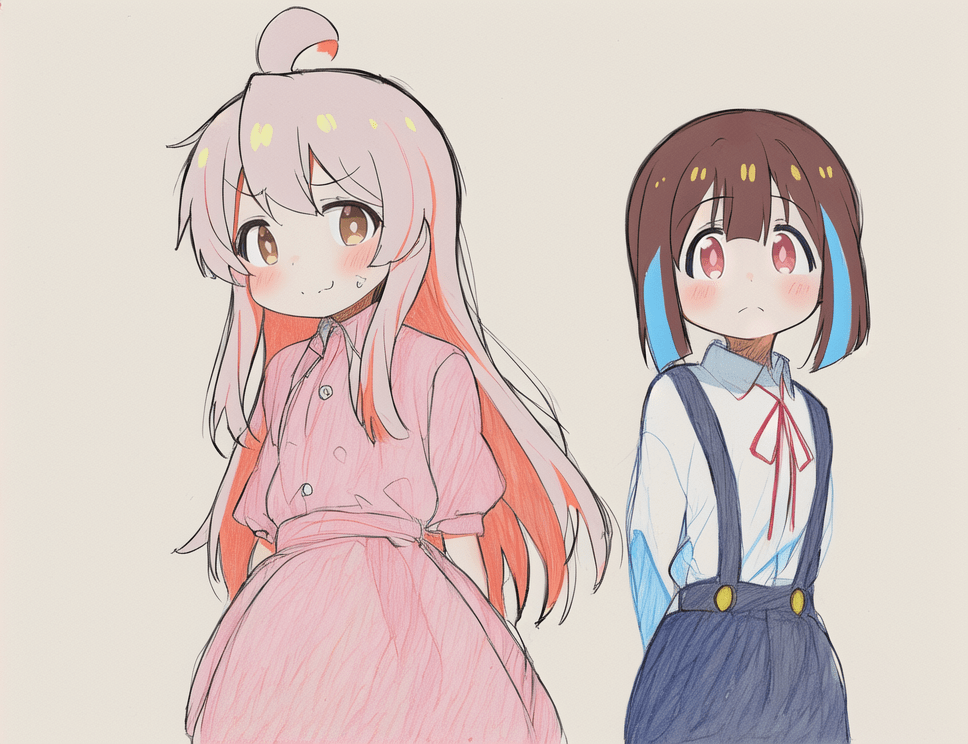

Here are some example generations. |

|

|

|

|

|

|

|

|

|

|

|

P.S. I would still suggest using the full model if you want more complex scenes, or more fidelity to the styles and outfits. |

|

You can always alter the style by merging models. |

|

|

|

### Usage |

|

|

|

The model is shared in both diffuser and safetensors formats. |

|

As for the trigger words, the six characters can be prompted with |

|

`OyamaMahiro`, `OyamaMihari`, `HozukiKaede`, `HozukiMomiji`, `OkaAsahi`, and `MurosakiMiyo`. |

|

`TenkawaNayuta` is tagged but she appears in fewer than 10 images so don't expect any good result. |

|

There are also three different styles trained into the model: `aniscreen`, `edstyle`, and `megazine` (yes, typo). |

|

As usual you can get multiple-character imagee but starting from 4 it is difficult. |

|

By the way, the model is trained at clip skip 1. |

|

|

|

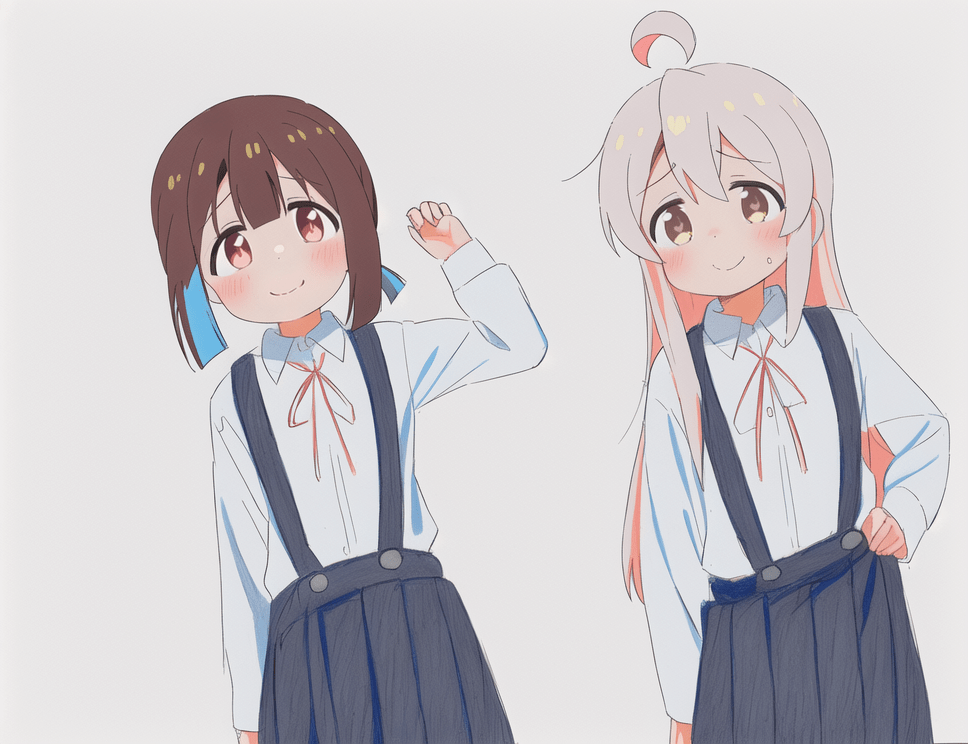

In the following images are shown the generations of different checkpoints. |

|

The default one is that of step 22828, but all the checkpoints starting from step 9969 can be found in the `checkpoints` directory. |

|

They are all sufficiently good at the six characters but later ones are better at `megazine` and `edstyle` (at the risk of overfitting, I don't really know). |

|

|

|

|

|

|

|

|

|

|

|

### More Generations |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

### Dataset Description |

|

|

|

The dataset is prepared via the workflow detailed here: https://github.com/cyber-meow/anime_screenshot_pipeline |

|

|

|

It contains 21412 images with the following composition |

|

|

|

- 2133 onimai images separated in four types |

|

- 1496 anime screenshots from the first six episodes (for style `aniscreen`) |

|

- 70 screenshots of the ending of the anime (for style `edstyle`, not counted in the 1496 above) |

|

- 528 fan arts (or probably some official arts) |

|

- 39 scans of the covers of the mangas (for style `megazine`, don't ask me why I choose this name, it is bad but it turns out to work) |

|

- 19279 regularization images which intend to be as various as possible while being in anime style (i.e. no photorealistic image is used) |

|

|

|

Note that the model is trained with a specific weighting scheme to balance between different concepts so that every image does not weight equally. |

|

After applying the per-image repeat we get around 145K images per epoch. |

|

|

|

### Training |

|

|

|

Training is done with [EveryDream2](https://github.com/victorchall/EveryDream2trainer) trainer with [ACertainty](https://huggingface.co./JosephusCheung/ACertainty) as base model. |

|

The following configuration is used |

|

|

|

- resolution 512 |

|

- cosine learning rate scheduler, lr 2.5e-6 |

|

- batch size 8 |

|

- conditional dropout 0.08 |

|

- change beta scheduler from `scaler_linear` to `linear` in `config.json` of the scheduler of the model |

|

- clip skip 1 |

|

|

|

I trained for two epochs wheareas the default release model was trained for 22828 steps as mentioned above. |