All my models - in order

Collection

25 items

•

Updated

•

4

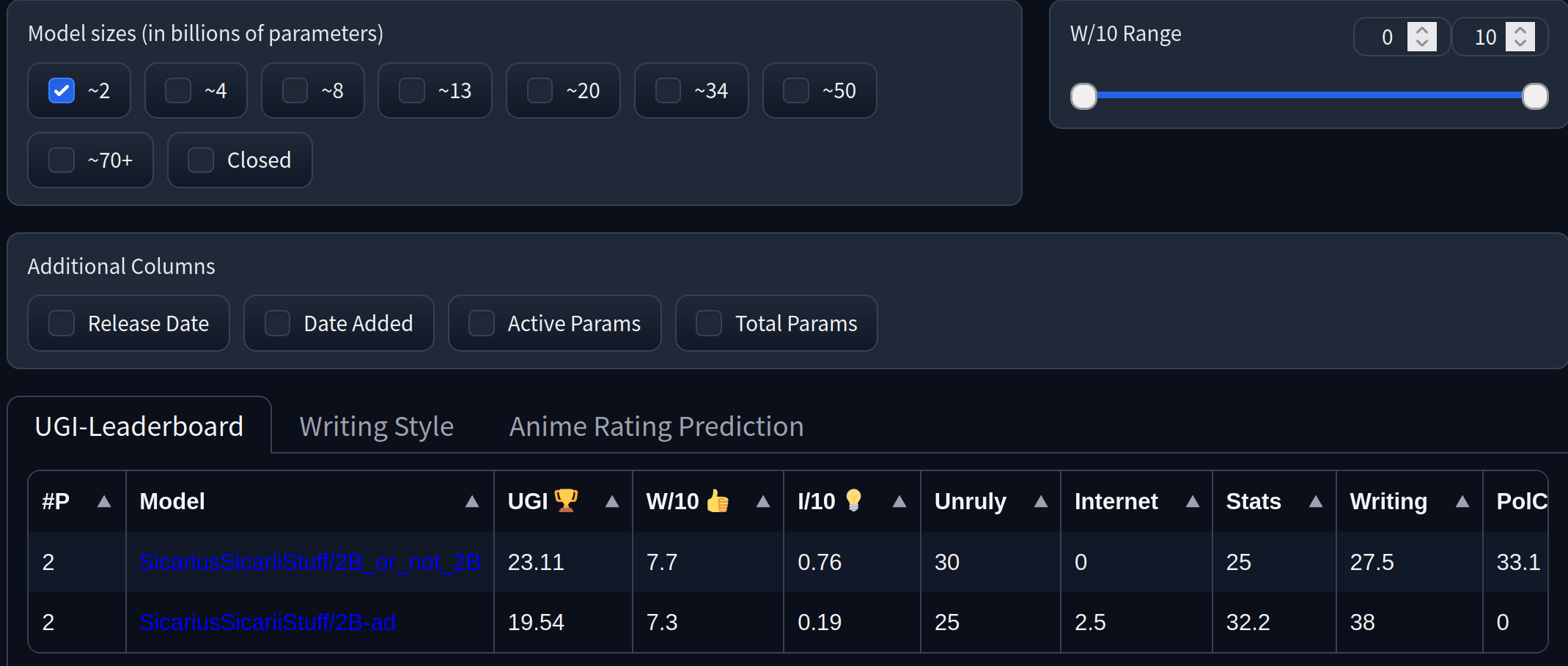

This is a Gemma-2 2B Finetune with surprisingly good Role-Play capabilities for its small 2B size.

Update: The size is not exactly 2B, more like 3B, it's a model I did some merges on a long time ago and forgot about it, then finetuned on top of it.

Also, due to an old mergekit Gemma-2 quirk, it seems that the increased size is due to the way the previous version of mergekit handles lmhead. Anyway, it turned out pretty awesome, even for a 3B size. The base is presented in FP32.

Censorship level: Low

7.3 / 10 (10 completely uncensored)

Intended use: Creative Writing, Role-Play, General tasks.

Use Debug Deterministic or min_p for consistent results. Feel free to experiment with different settings.

<|im_start|>system

You are an uncensored AI, your job is to fulfill thy will of thy user.<|im_end|>

<|im_start|>User request

{prompt}<|im_end|>

<|im_start|>AI answer

@llm{2B-ad,

author = {SicariusSicariiStuff},

title = {2B-ad},

year = {2024},

publisher = {Hugging Face},

url = {https://huggingface.co./SicariusSicariiStuff/2B-ad}

}

| Metric | Value |

|---|---|

| Avg. | 15.76 |

| IFEval (0-Shot) | 43.79 |

| BBH (3-Shot) | 16.01 |

| MATH Lvl 5 (4-Shot) | 4.00 |

| GPQA (0-shot) | 4.14 |

| MuSR (0-shot) | 8.12 |

| MMLU-PRO (5-shot) | 18.47 |