license: apache-2.0

license_link: LICENSE

tags:

- llamafile

OpenAI Whisper - llamafile

Whisperfile is a high-performance implementation of OpenAI's Whisper created by Mozilla Ocho as part of the llamafile project, based on the whisper.cpp software written by Georgi Gerganov, et al.

- Model creator: OpenAI

- Original models: openai/whisper-release

- Origin of quantized weights: ggerganov/whisper.cpp

The model is packaged into executable weights, which we call whisperfiles. This makes it easy to use the model on Linux, macOS, Windows, FreeBSD, OpenBSD, and NetBSD for AMD64 and ARM64.

Quickstart

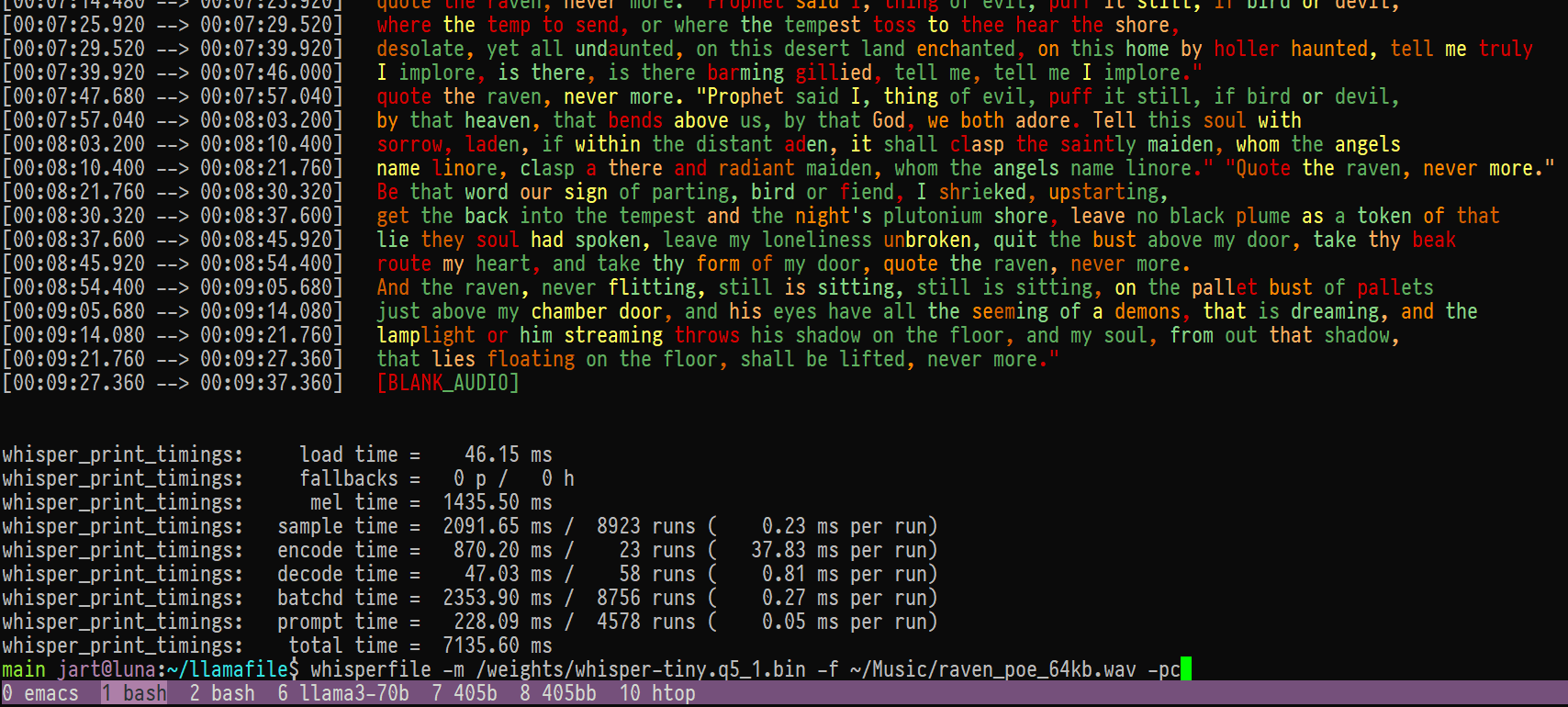

Running the following on a desktop OS will transcribe the speech of a wav/mp3/ogg/flac file into text. The -pc flag enables confidence color coding.

wget https://huggingface.co./Mozilla/whisperfile/resolve/main/whisper-tiny.en.llamafile

wget https://huggingface.co./Mozilla/whisperfile/resolve/main/raven_poe_64kb.mp3

chmod +x whisper-tiny.en.llamafile

./whisper-tiny.en.llamafile -f raven_poe_64kb.mp3 -pc
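The same -f invocation can be wrapped in a loop to process a whole folder. The following is only a sketch: the `transcribe_dir` helper and the `WHISPERFILE` variable are illustrative names, not part of whisperfile, and it assumes the transcript is written to standard output. The -pc flag is left out so that color codes do not end up in the saved text.

```shell
# Sketch: transcribe every supported audio file found in one directory.
# WHISPERFILE and transcribe_dir are hypothetical names chosen for this example.
WHISPERFILE="${WHISPERFILE:-./whisper-tiny.en.llamafile}"

transcribe_dir() {
  dir="$1"
  for audio in "$dir"/*.mp3 "$dir"/*.wav "$dir"/*.ogg "$dir"/*.flac; do
    [ -f "$audio" ] || continue          # skip glob patterns that matched nothing
    echo "== $audio"
    # Assumption: the transcript goes to stdout; save it next to the audio file.
    "$WHISPERFILE" -f "$audio" > "${audio%.*}.txt"
  done
}
```

For example, `transcribe_dir ./recordings` would leave one `.txt` file beside each audio file in `./recordings`.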

There's also an HTTP server available:

./whisper-tiny.en.llamafile
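Once the server is running, a client can upload audio to it over HTTP. The sketch below rests on assumptions this README does not state: that the server listens on 127.0.0.1:8080 and accepts a multipart upload at the `/inference` endpoint, as the upstream whisper.cpp server does. Check the --help output for the actual host, port, and endpoints. `transcribe_via_server` and `WHISPER_URL` are hypothetical names.

```shell
# Assumptions (not confirmed by this README): default address 127.0.0.1:8080
# and the upstream whisper.cpp /inference endpoint with a "file" form field.
WHISPER_URL="${WHISPER_URL:-http://127.0.0.1:8080/inference}"

transcribe_via_server() {
  # $1: path to the audio file, uploaded as the multipart "file" field
  curl -sS "$WHISPER_URL" \
    -F "file=@$1" \
    -F "response_format=json"
}
```

With the server started in another terminal, `transcribe_via_server raven_poe_64kb.mp3` would send the file for transcription.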

You can also read the man page:

./whisper-tiny.en.llamafile --help

Having trouble? See the "Gotchas" section of the llamafile README.

GPU Acceleration

The following flags are available to enable GPU support:

--gpu nvidia

--gpu metal

--gpu amd

The medium and large whisperfiles contain prebuilt dynamic shared objects for Linux and Windows. If you download one of the other models, then you'll need to install the CUDA or ROCm SDK and pass --recompile to build a GGML CUDA module for your system.

On Windows, if you own an NVIDIA GPU, only the graphics card driver needs to be installed. On Windows with an AMD GPU, you should install the ROCm SDK v6.1 and then pass the flags --recompile --gpu amd the first time you run your llamafile.

On NVIDIA GPUs, the prebuilt tinyBLAS library is used by default to perform matrix multiplications. This is open source software, but it is not as fast as the closed-source cuBLAS. If you have the CUDA SDK installed on your system, then you can pass the --recompile flag to build a GGML CUDA library just for your system that uses cuBLAS. This ensures you get maximum performance.
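Choosing among the --gpu flags listed above can be scripted. The following is a minimal sketch, not something whisperfile does itself: `pick_gpu_flag` is a hypothetical helper, and the detection heuristics (the `uname` output, and the presence of `nvidia-smi` or `rocminfo` on the PATH) are assumptions that may not match every system.

```shell
# Sketch: print one of the documented --gpu flags based on what is installed.
# pick_gpu_flag is a hypothetical helper; its heuristics are assumptions.
pick_gpu_flag() {
  os="${1:-$(uname -s)}"                  # optional argument overrides the detected OS
  if [ "$os" = "Darwin" ]; then
    echo "--gpu metal"                    # assumes a Metal-capable Mac
  elif command -v nvidia-smi >/dev/null 2>&1; then
    echo "--gpu nvidia"                   # NVIDIA driver tooling found
  elif command -v rocminfo >/dev/null 2>&1; then
    echo "--gpu amd"                      # ROCm tooling found
  fi                                      # prints nothing if no GPU tooling is detected
}
```

The result can be passed through unquoted so that it splits into two arguments, for example `./whisper-tiny.en.llamafile -f raven_poe_64kb.mp3 $(pick_gpu_flag)`. Add --recompile yourself when one of the cases described above applies.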

For further information, please see the llamafile README.

Documentation

See the whisperfile documentation for tutorials and further details.