Anime Illust Diffusion XL 0.8


Anime Illustration Diffusion XL, or AIDXL, is a model dedicated to generating stylized anime illustrations. It has over 800 built-in illustration styles (a number that grows with each update), each activated by a specific trigger word (see Trigger Words List).

Advantages:

Flexible composition rather than traditional AI posing.

Skillful details rather than messy chaos.

Knows anime characters better.

I User Guide

Basic Usage

- CFG scale: 5-11

- Resolution: total area (width x height) of around 1024x1024, no smaller than 256x256, with both width and height being multiples of 32.

- Sampling method: Euler A (20+ steps) or DPM++ 2M Karras (~35 steps)

- No refiner model is needed. Use the model's own VAE or sdxl-vae.
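A small helper can pick a resolution that satisfies these constraints for any aspect ratio. This is a minimal sketch, assuming you want the area as close to 1024x1024 as possible; the function name `sdxl_resolution` is hypothetical and not part of any tool.

```python
import math


def sdxl_resolution(aspect_ratio, target_area=1024 * 1024, multiple=32, min_side=256):
    """Pick (width, height) near `aspect_ratio` whose area is close to
    `target_area`, with both sides rounded to multiples of `multiple`."""
    if aspect_ratio <= 0:
        raise ValueError("aspect_ratio must be positive")
    # Real-valued sides that give exactly target_area at this aspect ratio.
    ideal_width = math.sqrt(target_area * aspect_ratio)
    ideal_height = ideal_width / aspect_ratio
    # Snap each side to the nearest multiple of 32, never going below min_side.
    width = max(min_side, round(ideal_width / multiple) * multiple)
    height = max(min_side, round(ideal_height / multiple) * multiple)
    return width, height


print(sdxl_resolution(1.0))    # (1024, 1024)
print(sdxl_resolution(2 / 3))  # (832, 1248), a portrait shape
```

Rounding both sides independently keeps the aspect ratio approximately right while guaranteeing the multiple-of-32 constraint; the area may drift by a few percent from the target.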

❓ Question: Why not use the standard SDXL resolution?

💡 Answer: Because the bucketing algorithm used in training does not adhere to a fixed set of buckets. Although this does not conform to positional encoding, we have not observed any adverse effects.

Prompting Strategies

Text-to-image diffusion models are notoriously sensitive to prompts, and AIDXL is no exception. Even a misspelling, or replacing spaces with underscores, can change the generated results.

AIDXL works best with prompts written as tags separated by a comma and a space (", "). Although the model also accepts natural-language descriptions, or a mix of tags and natural language, the tag-by-tag format is more stable and easier to use.

When describing a specific ACG concept, such as a character, style, or scene, we recommend users choose tags from the Danbooru tags and replace underscores in the Danbooru tags with spaces to ensure the model accurately understands your needs. For example, bishop_(chess) should be written as bishop (chess), and in inference tools like AUTOMATIC1111 WebUI that use parentheses to weight prompt, all parentheses within the tags should be escaped, i.e., bishop \(chess\).
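The conversion described above (underscores to spaces, then escaping parentheses for WebUIs that use them as weighting syntax) can be sketched as follows. The helper names are hypothetical.

```python
def danbooru_to_prompt(tag, escape_parens=True):
    """Convert a Danbooru tag such as 'bishop_(chess)' into prompt form."""
    text = tag.replace("_", " ")
    if escape_parens:
        # Tools like AUTOMATIC1111 WebUI read bare parentheses as emphasis,
        # so escape them to keep them as literal characters of the tag.
        text = text.replace("(", r"\(").replace(")", r"\)")
    return text


def build_prompt(tags, escape_parens=True):
    """Join converted tags with comma + space, the recommended separator."""
    return ", ".join(danbooru_to_prompt(t, escape_parens) for t in tags)


print(danbooru_to_prompt("bishop_(chess)"))         # bishop \(chess\)
print(build_prompt(["1girl", "bishop_(chess)"]))  # 1girl, bishop \(chess\)
```

One caveat of this naive replacement: Danbooru emoticon tags such as ^_^ contain underscores that belong to the tag itself, so you may want to exempt them.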

Tag Ordering

Most diffusion models, including AWA Diffusion, better understand logically ordered tags. While tag ordering is not mandatory, it can help the model understand your needs. Generally, the earlier a tag appears, the greater its impact on the generated image.

Here is an example of tag ordering. It puts art style tags first, because style matters most to the image, and then adds the other tags in order of importance. Aesthetic and quality tags are placed at the end to further emphasize the aesthetics of the image.

art style (by xxx) -> character (1 frieren (sousou no frieren)) -> race (elf) -> composition (cowboy shot) -> painting style (impasto) -> theme (fantasy theme) -> main environment (in the forest, at day) -> background (gradient background) -> action (sitting on ground) -> expression (expressionless) -> main characteristics (white hair) -> other characteristics (twintails, green eyes, parted lips) -> clothing (wearing a white dress) -> clothing accessories (frills) -> other items (holding a magic wand) -> secondary environment (grass, sunshine) -> aesthetics (beautiful color, detailed) -> quality (best quality) -> secondary description (birds, cloud, butterfly)
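The category order in the example above can be encoded as a list and used to assemble prompts consistently. This is a minimal sketch; `TAG_ORDER` and `ordered_prompt` are hypothetical names, and the categories are copied from the example rather than from any official schema.

```python
# Category order copied from the example above (first = most influential).
TAG_ORDER = [
    "art style", "character", "race", "composition", "painting style",
    "theme", "main environment", "background", "action", "expression",
    "main characteristics", "other characteristics", "clothing",
    "clothing accessories", "other items", "secondary environment",
    "aesthetics", "quality", "secondary description",
]


def ordered_prompt(tags_by_category):
    """Emit tags category by category in TAG_ORDER; missing categories are skipped."""
    unknown = set(tags_by_category) - set(TAG_ORDER)
    if unknown:
        raise KeyError(f"unknown categories: {sorted(unknown)}")
    parts = []
    for category in TAG_ORDER:
        parts.extend(tags_by_category.get(category, []))
    return ", ".join(parts)


print(ordered_prompt({
    "quality": ["best quality"],
    "art style": ["by xxx"],
    "race": ["elf"],
}))  # by xxx, elf, best quality
```

To weaken an overly strong concept, as suggested below, you can move its category later in `TAG_ORDER`.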

Tag order is not set in stone, and writing prompts flexibly can yield better results. For example, if a concept (such as a style) is too strong and detracts from the aesthetic appeal of the image, move it to a later position to reduce its impact.

Special Usage

Art Styles

Add trigger words to stylize the image. Suitable trigger words greatly improve quality.

Tips for Style Tags

- Intensity Adjustment: You can adjust the intensity of a style by altering the order or weighting of style tags in your prompt. Frontloading a style tag enhances its effect, while placing it later reduces its effect.
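Both knobs (position and weight) can be expressed with the (tag:weight) emphasis syntax used by AUTOMATIC1111 WebUI. This sketch only builds prompt strings; `weighted` and `place_style` are hypothetical helpers, and the weights shown are illustrative, not recommended values.

```python
def weighted(tag, weight=1.0):
    """Format a tag with (tag:weight) emphasis; weight 1.0 means no emphasis."""
    if weight == 1.0:
        return tag
    return f"({tag}:{weight:g})"


def place_style(style, other_tags, front=True, weight=1.0):
    """Put a style tag at the front (stronger) or the end (weaker) of the prompt."""
    styled = weighted(style, weight)
    tags = [styled, *other_tags] if front else [*other_tags, styled]
    return ", ".join(tags)


print(place_style("by xxx", ["1girl", "solo"]))                          # by xxx, 1girl, solo
print(place_style("by xxx", ["1girl", "solo"], front=False, weight=0.8))  # 1girl, solo, (by xxx:0.8)
```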

❓ Question: Why include the prefix by in artistic style tags?

💡 Answer: To clearly inform the model that you want to generate a specific artistic style rather than something else, we recommend including the prefix by in artistic style tags. This differentiates by xxx from xxx, especially when xxx itself carries other meanings, such as dino which could represent either a dinosaur or an artist's identifier.

Similarly, when triggering characters, add a 1 as a prefix to the character trigger word.

❓ Question: How can I reproduce the model cover? Why can't I reproduce the same picture as the cover using the same generation parameters?

💡 Answer: Because the generation parameters shown on the cover are NOT its text-to-image parameters but its image-to-image (upscaling) parameters. The base image was mostly generated with the Euler Ancestral sampler rather than a DPM sampler.

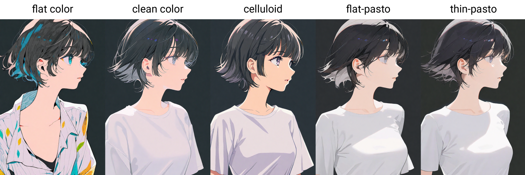

Painting Styles

Starting with version 0.7, AIDXL groups similar styles together and introduces painting style trigger words, each representing a common category of anime illustration style. Note that these trigger words do not necessarily match the artistic meaning of their names; they are special trigger words that have been redefined.

Painting style trigger words: flat color, clean color, celluloid, flat-pasto, thin-pasto, pseudo-impasto, impasto, realistic, photorealistic, cel shading, 3d

- flat color: Flat colors, using lines to describe light and shadow

- clean color: Style between flat color and flat-pasto. Simple and tidy coloring.

- celluloid: Anime coloring

- flat-pasto: Nearly flat color, using gradient color to describe lighting and shadow

- thin-pasto: Thin contour, using gradient and paint thickness to describe light, shadow and layers

- pseudo-impasto: Uses gradients and paint thickness to describe light, shadow and layers

- impasto: Uses paint thickness to describe light, shadow and gradation

- realistic

- photorealistic: Redefined as a style closer to the real world

- cel shading: Anime 3D modeling style

- 3d

Characters

Starting with version 0.7, AIDXL has enhanced character training. Some character trigger words already work as well as a dedicated LoRA, and they cleanly separate the character concept from the character's own clothing.

The character trigger format is {character} ({copyright}). For example, to trigger Lucy, the heroine of the anime "Cyberpunk: Edgerunners", use lucy \(cyberpunk\); to trigger Gan Yu from the game "Genshin Impact", use ganyu \(genshin impact\). Here, "lucy" and "ganyu" are the character names, "(cyberpunk)" and "(genshin impact)" are the characters' origins, and the parentheses are escaped with backslashes (\) so that they are not interpreted as weighting syntax. For some characters, the copyright part is not necessary.

Starting with v0.8, there is also an easier triggering method: a {girl/boy} named {character} from {copyright} series.
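Both triggering forms are simple string templates. The sketch below fills them in; the function names are hypothetical, and escaping is optional because it only matters in tools that treat parentheses as weighting syntax.

```python
from typing import Optional


def character_trigger(name: str, source: Optional[str] = None, escape: bool = True) -> str:
    """Build the '{character} ({copyright})' form, escaping parentheses if asked."""
    if not source:
        # For some characters the copyright part is not necessary.
        return name
    if escape:
        return f"{name} \\({source}\\)"
    return f"{name} ({source})"


def character_sentence(name: str, source: str, gender: str = "girl") -> str:
    """Build the v0.8 natural-language form."""
    return f"a {gender} named {name} from {source} series"


print(character_trigger("lucy", "cyberpunk"))   # lucy \(cyberpunk\)
print(character_sentence("lucy", "cyberpunk"))  # a girl named lucy from cyberpunk series
```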

For the list of character trigger words, refer to selected_tags.csv in SmilingWolf/wd-v1-4-convnext-tagger-v2 (huggingface.co). Some additional trigger words not listed there may also work.

Some characters require an extra triggering step: if a single character trigger word cannot fully reproduce the character, add the character's main characteristics to the prompt.

AIDXL supports dressing characters up. Character trigger words usually do not carry the character's own clothing, so to give a character an outfit, add the clothing tags to the prompt. For example, silver evening gown, plunging neckline gives the dress of St. Louis (Luxurious Wheels) from the game Azur Lane. Likewise, you can combine any character's clothing tags with other characters.

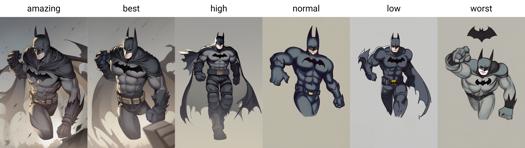

Quality Tags

Quality and aesthetic tags are explicitly trained. Appending them to the end of the prompt affects the quality of the generated image.

Starting with version 0.7, AIDXL is officially trained with quality tags. Quality is divided into six levels, from best to worst: amazing quality, best quality, high quality, normal quality, low quality and worst quality.

It's recommended to add extra weight to quality tags, e.g. (amazing quality:1.5).

Aesthetic Tags

Since version 0.7, aesthetic tags have been introduced to describe the special aesthetic characteristics of images.

Style Merging

You can merge several styles into your own custom style. 'Merging' simply means using multiple style trigger words at once. For example: chun-li, amazing quality, (by yoneyama mai:0.9), (by chi4:0.8), by ask, by modare, (by ikky:0.9).

Some tips:

Control the weight and order of the styles to adjust the style.

Append rather than prepend to your prompt.
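The merged-style example above follows a regular pattern: a "by " prefix per artist plus an optional weight. This sketch rebuilds it; `merge_styles` is a hypothetical helper, and the weights are the ones from the example, not tuned values.

```python
def merge_styles(artists, weights=None):
    """Turn artist names into 'by xxx' style tags, adding (tag:w) when w != 1."""
    weights = weights or {}
    parts = []
    for artist in artists:
        tag = f"by {artist}"
        w = weights.get(artist, 1.0)
        parts.append(tag if w == 1.0 else f"({tag}:{w:g})")
    return ", ".join(parts)


styles = merge_styles(
    ["yoneyama mai", "chi4", "ask", "modare", "ikky"],
    {"yoneyama mai": 0.9, "chi4": 0.8, "ikky": 0.9},
)
# Append the merged styles after the main content, per the tips above.
prompt = ", ".join(["chun-li", "amazing quality", styles])
print(prompt)
```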

Training Strategy & Parameters

AIDXLv0.1

Starting from SDXL 1.0 as the base model, about 22k labeled images were used to train for about 100 epochs with a cosine scheduler (learning rate 5e-6, number of cycles = 1), yielding model A. Training with a learning rate of 2e-7 and otherwise identical parameters yielded model B. AIDXLv0.1 was obtained by merging models A and B.
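The merge method and ratio for models A and B are not stated. A common way to merge two checkpoints of the same architecture is a weighted average of their parameters; the sketch below assumes that approach with a hypothetical ratio `alpha`. It works on plain floats or on torch tensors stored in a state dict.

```python
def merge_weights(model_a, model_b, alpha=0.5):
    """Linearly interpolate two state dicts: alpha * A + (1 - alpha) * B."""
    if model_a.keys() != model_b.keys():
        raise ValueError("both models must have the same parameter names")
    return {
        name: alpha * model_a[name] + (1 - alpha) * model_b[name]
        for name in model_a
    }


a = {"unet.w": 1.0, "unet.b": 0.0}
b = {"unet.w": 3.0, "unet.b": 2.0}
print(merge_weights(a, b))        # {'unet.w': 2.0, 'unet.b': 1.0}
print(merge_weights(a, b, 0.25))  # {'unet.w': 2.5, 'unet.b': 1.5}
```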

AIDXLv0.51

Training Strategy

Training resumes from AIDXLv0.5 and consists of three runs performed one after another:

1. Long caption training: Use the whole dataset, with some images captioned manually. Train both the U-Net and the text encoder with the AdamW8bit optimizer, a high learning rate (around 1.5e-6) and a cosine scheduler. Stop training when the learning rate decays below a threshold (around 5e-7).

2. Short caption training: Restart training from the output of step 1 with the same parameters and strategy, but on a dataset with shorter captions.

3. Refining step: Prepare a subset of the step 1 dataset containing manually picked, high-quality images. Restart training from the output of step 2 with a low learning rate (around 7.5e-7) and a cosine scheduler with restarts (5 to 10 cycles). Train until the results are aesthetically good.
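The two schedules named above can be sketched as follows, assuming no warmup. The restart variant mirrors the "hard restarts" cosine schedule found in Hugging Face libraries; the helper names and the 1000-step run length are hypothetical.

```python
import math


def cosine_lr(step, total_steps, base_lr, min_lr=0.0):
    """Single-cycle cosine decay from base_lr to min_lr."""
    progress = min(step / total_steps, 1.0)
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))


def cosine_with_restarts_lr(step, total_steps, base_lr, num_cycles):
    """Cosine decay that jumps back to base_lr at the start of each cycle."""
    progress = step / total_steps
    if progress >= 1.0:
        return 0.0
    cycle_progress = (progress * num_cycles) % 1.0
    return 0.5 * base_lr * (1 + math.cos(math.pi * cycle_progress))


def first_step_below(threshold, total_steps, base_lr):
    """Step 1's stopping rule: first step whose cosine LR drops below threshold."""
    for step in range(total_steps + 1):
        if cosine_lr(step, total_steps, base_lr) < threshold:
            return step
    return None


# Long caption training: 1.5e-6 decays below 5e-7 about 61% into the run.
print(first_step_below(5e-7, 1000, 1.5e-6))  # 609
```

Stopping at the threshold rather than at the end of the schedule means the long-caption run never reaches the lowest learning rates, leaving room for the later runs.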

Fixed Training Parameters

No extra noise like noise offset.

Min-SNR gamma = 5: speeds up training.

Full bf16 precision.

AdamW8bit optimizer: a balance between efficiency and performance.
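Min-SNR gamma is a loss-weighting strategy: with noise (epsilon) prediction, each timestep's loss is scaled by min(SNR_t, gamma) / SNR_t, where SNR_t = alpha_bar_t / (1 - alpha_bar_t). This caps the influence of easy, low-noise timesteps. The sketch below assumes epsilon prediction, which SDXL base uses; the function names are hypothetical.

```python
def snr(alpha_bar):
    """Signal-to-noise ratio of a timestep given its cumulative alpha_bar."""
    return alpha_bar / (1.0 - alpha_bar)


def min_snr_weight(alpha_bar, gamma=5.0):
    """Min-SNR-gamma loss weight for epsilon prediction: min(SNR, gamma) / SNR."""
    s = snr(alpha_bar)
    return min(s, gamma) / s


# Noisy timestep (SNR <= gamma): weight stays 1.
print(min_snr_weight(0.5))   # 1.0
# Nearly clean timestep (SNR = 99): weight is reduced to about 5/99.
print(min_snr_weight(0.99))
```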

Dataset

Resolution: a total area (height x width) of 1024x1024, using a modified version of the official SDXL bucketing strategy.

Captioning: Captioned with the WD14-Swinv2 tagger at a 0.35 threshold.

Close-up cropping: Crop images into several close-ups. It's very useful when the training images are large or rare.

Trigger words: Keep the first tag of each image's caption as its trigger word.
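The captioning and trigger-word rules above can be sketched as a small post-processing step. This assumes the tagger yields a tag-to-probability mapping, and it models "keep the first tag" as the trigger word staying first even when the remaining tags are shuffled for augmentation (similar in spirit to `keep_tokens` in kohya-ss sd-scripts). Whether AIDXL's pipeline worked exactly like this is an assumption; all names are hypothetical.

```python
import random
from typing import Dict, Optional


def caption_from_scores(scores: Dict[str, float], trigger: Optional[str] = None,
                        threshold: float = 0.35) -> str:
    """Keep tagger tags scoring >= threshold and put the trigger word first."""
    # Most confident tags first; ties broken alphabetically for determinism.
    kept = sorted((tag for tag, p in scores.items() if p >= threshold),
                  key=lambda tag: (-scores[tag], tag))
    tags = [tag.replace("_", " ") for tag in kept]
    if trigger:
        tags = [trigger] + [tag for tag in tags if tag != trigger]
    return ", ".join(tags)


def shuffle_caption(caption: str, keep_tokens: int = 1, seed: Optional[int] = None) -> str:
    """Shuffle tag order for augmentation while keeping the first tags fixed."""
    tags = caption.split(", ")
    head, tail = tags[:keep_tokens], tags[keep_tokens:]
    random.Random(seed).shuffle(tail)
    return ", ".join(head + tail)


caption = caption_from_scores(
    {"1girl": 0.98, "solo": 0.9, "long_hair": 0.5, "hat": 0.2}, trigger="by xxx")
print(caption)                           # by xxx, 1girl, solo, long hair
print(shuffle_caption(caption, seed=0))  # trigger word stays first
```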

AIDXLv0.6

Training Strategy

Training resumes from AIDXLv0.52 with an adaptive repeating strategy: for each captioned image in the dataset, its number of training repeats is adjusted according to the following rules:

Rule 1: The higher the image's quality, the more its number of repeats;

Rule 2: If the image belongs to a style class:

- If the class is not yet fitted or underfitted, then manually increase the number of repeats of the class, or automatically boost its number of repeats such that the total number of repeats of the data in the class reaches a certain preset value, which is around 100.

- If the class is already fitted or overfitted, manually decrease its number of repeats by forcing it to 1, and drop images of low quality.

Rule 3: Cap each image's final number of repeats at a threshold of around 10.
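The three rules above can be sketched as one function. Several details are assumptions, not the authors' implementation: the integer quality score, the 1 + quality base repeat count, the proportional boost for underfitted classes, and the fit labels, which in practice would come from manual inspection. All names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class TrainImage:
    name: str
    quality: int                 # hypothetical score: 0 = low ... 3 = best
    style: Optional[str] = None  # style class, if any


def adaptive_repeats(images: List[TrainImage], fit_state: Dict[str, str],
                     class_target: int = 100, max_repeats: int = 10) -> Dict[str, int]:
    """Return repeats per image; images that end up with 0 repeats are dropped."""
    # Rule 1: higher quality -> more repeats.
    repeats = {img.name: 1 + img.quality for img in images}

    # Rule 2: adjust each style class according to how well it is fitted.
    classes: Dict[str, List[TrainImage]] = {}
    for img in images:
        if img.style is not None:
            classes.setdefault(img.style, []).append(img)
    for style, members in classes.items():
        state = fit_state.get(style)
        if state == "underfitted":
            # Boost proportionally so the class total reaches class_target.
            total = sum(repeats[m.name] for m in members)
            if total < class_target:
                scale = class_target / total
                for m in members:
                    repeats[m.name] = math.ceil(repeats[m.name] * scale)
        elif state in ("fitted", "overfitted"):
            # Force one repeat; drop low-quality images entirely.
            for m in members:
                repeats[m.name] = 1 if m.quality > 0 else 0

    # Rule 3: cap the final number of repeats.
    return {name: min(r, max_repeats) for name, r in repeats.items() if r > 0}
```

Because Rule 3 is applied last, a small underfitted class may not actually reach the preset total; the rules as stated do not say how that conflict is resolved.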

This strategy has the following advantages:

It protects the model's original knowledge from being overwritten by new training, following the same idea as regularization images;

It makes the impact of training data more controllable;

It balances the training between different classes by motivating those not yet fitted classes and preventing overfitting to those already fitted classes;

It significantly saves computing resources and makes it much easier to add new styles to the model.

Fixed Training Parameters

Same as AIDXLv0.51.

Dataset

The dataset of AIDXLv0.6 is based on AIDXLv0.51. Furthermore, the following optimization strategies are applied:

Caption semantic sorting: Sort caption tags by semantic order, e.g. "gun, 1boy, holding, short hair" -> "1boy, short hair, holding, gun".

Caption deduplicating: Remove duplicate tags, keeping the one that retains the most information. Duplicate tags are tags with similar meanings, such as "long hair" and "very long hair".

Extra tags: Manually add additional tags to all images, e.g. "high quality", "impasto" etc. This can be quickly done with some tools.
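Semantic sorting and deduplication can be sketched as below. The category ranks are a hypothetical stand-in for a real tag-to-category table, and the deduplication rule (drop a tag whose words appear inside a longer tag) is a crude heuristic assumed for illustration: it handles "long hair" vs "very long hair" but would also wrongly drop "hair" next to an unrelated tag like "hair ornament".

```python
# Hypothetical rank per tag: subject < hair < action < object.
CATEGORY_RANK = {
    "1boy": 0, "1girl": 0,
    "short hair": 1, "long hair": 1, "very long hair": 1,
    "holding": 2,
    "gun": 3,
}


def sort_tags(tags, rank=CATEGORY_RANK):
    """Stable sort by category rank; unknown tags go last in original order."""
    return sorted(tags, key=lambda t: rank.get(t, float("inf")))


def dedupe_tags(tags):
    """Drop exact repeats, then tags contained word-wise in a longer tag."""
    tags = list(dict.fromkeys(tags))
    return [t for t in tags
            if not any(t != other and f" {t} " in f" {other} " for other in tags)]


print(", ".join(sort_tags(["gun", "1boy", "holding", "short hair"])))
# 1boy, short hair, holding, gun
print(dedupe_tags(["1girl", "long hair", "very long hair", "hat"]))
# ['1girl', 'very long hair', 'hat']
```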

Special thanks

Computing power sponsorship: Thanks to @NieTa community (捏Ta (nieta.art)) for providing computing power support;

Data support: Thanks to @KirinTea_Aki (Civitai) and @Chenkin (Civitai) for providing a large amount of data;

There would be no version 0.7 and 0.8 without them.

AIDXL vs AID

AIDXL is trained on the same training set as AIDv2.10 but outperforms it. AIDXL is smarter and can do many things that SD1.5-based models cannot. It is also very good at distinguishing between concepts, learning image details, and handling compositions that are difficult or even impossible for SD1.5 and AID. Overall, it has great potential. I'll keep updating AIDXL.

Sponsorship

If you like our work, you are welcome to sponsor us through Ko-fi(https://ko-fi.com/eugeai) to support our research and development. Thank you for your support~


Model tree for Eugeoter/anime_illust_diffusion_xl

Base model

stabilityai/stable-diffusion-xl-base-1.0