---
license: mit
datasets:
- uoft-cs/cifar10
- uoft-cs/cifar100
- ILSVRC/imagenet-1k
tags:
- Adversarial Robustness
---

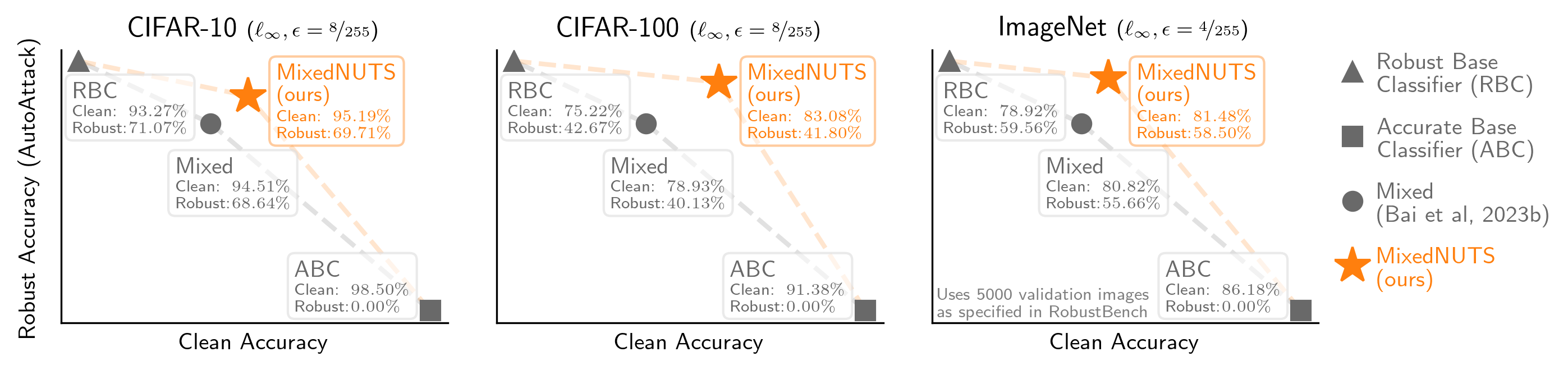

MixedNUTS: Training-Free Accuracy-Robustness Balance via Nonlinearly Mixed Classifiers

This is the official model repository of the paper "MixedNUTS: Training-Free Accuracy-Robustness Balance via Nonlinearly Mixed Classifiers" by Yatong Bai, Mo Zhou, Vishal M. Patel, and Somayeh Sojoudi, published in Transactions on Machine Learning Research.

TL;DR: MixedNUTS balances clean data classification accuracy and adversarial robustness without additional training via a mixed classifier with nonlinear base model logit transformations.
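To make the idea of mixing classifiers after a nonlinear logit transformation concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the paper's exact formulation: the particular transformation (standardize, shift, clamp, power, scale), the choice to transform only the robust model's logits, and the names `transform_logits`, `mixed_probs`, `scale`, `power`, `shift`, and `alpha` are all hypothetical. The GitHub repository contains the actual implementation.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def transform_logits(logits, scale=5.0, power=2.0, shift=0.0):
    """Illustrative nonlinear logit transformation (an assumption, not the paper's exact form).

    Standardizes the logits, shifts them, clamps negative values to zero,
    raises the result to a power, and rescales.
    """
    n = len(logits)
    mean = sum(logits) / n
    var = sum((z - mean) ** 2 for z in logits) / n
    std = math.sqrt(var) + 1e-8  # small constant avoids division by zero
    normalized = [(z - mean) / std + shift for z in logits]
    return [scale * max(z, 0.0) ** power for z in normalized]


def mixed_probs(std_logits, rob_logits, alpha=0.5, **transform_kwargs):
    """Convex combination of the two base classifiers' class probabilities.

    No training is involved: only the outputs of the two base models are combined.
    """
    p_std = softmax(std_logits)
    p_rob = softmax(transform_logits(rob_logits, **transform_kwargs))
    return [(1.0 - alpha) * a + alpha * b for a, b in zip(p_std, p_rob)]


# Made-up logits for a 3-class problem, purely for illustration.
std_logits = [4.0, 1.0, 0.5]   # output of a hypothetical standard (accurate) model
rob_logits = [0.9, 1.1, 0.2]   # output of a hypothetical robust model
probs = mixed_probs(std_logits, rob_logits, alpha=0.5)
prediction = max(range(len(probs)), key=probs.__getitem__)
```

In this sketch, `alpha` controls how much weight the robust model receives, and the transformation sharpens the robust model's otherwise low-margin logits so that they are not drowned out by the more confident standard model.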

Model Checkpoints

MixedNUTS is a training-free method that has no additional neural network components other than its base classifiers.

All robust base classifiers used in the main results of our paper are available on RobustBench and can be downloaded automatically via the RobustBench API.

Here, we provide the download links to the standard base classifiers used in the main results.

For code and detailed usage, please refer to our GitHub repository.

Citing our work (BibTeX)

@article{MixedNUTS,
  title={MixedNUTS: Training-Free Accuracy-Robustness Balance via Nonlinearly Mixed Classifiers},
  author={Bai, Yatong and Zhou, Mo and Patel, Vishal M. and Sojoudi, Somayeh},
  journal={Transactions on Machine Learning Research},
  year={2024}
}