chart for task summary

- app.py +74 -30

- distinguish_high_low_label.ipynb +127 -25

- plot.png +0 -0

- result.txt +1 -1

app.py

CHANGED

|

@@ -9,6 +9,7 @@ import matplotlib.pyplot as plt

|

|

| 9 |

from sklearn.metrics import roc_curve, auc

|

| 10 |

import pandas as pd

|

| 11 |

from sklearn.metrics import roc_auc_score

|

|

|

|

| 12 |

# Define the function to process the input file and model selection

|

| 13 |

|

| 14 |

def process_file(model_name,inc_slider,progress=Progress(track_tqdm=True)):

|

|

@@ -69,7 +70,7 @@ def process_file(model_name,inc_slider,progress=Progress(track_tqdm=True)):

|

|

| 69 |

indices = test_info[test_info[0].isin(random_schools)].index.tolist()

|

| 70 |

high_indices = test_info[(test_info[0].isin(high_sample))].index.tolist()

|

| 71 |

low_indices = test_info[(test_info[0].isin(low_sample))].index.tolist()

|

| 72 |

-

|

| 73 |

# Load the test file and select rows based on indices

|

| 74 |

test = pd.read_csv(test_location, sep=',', header=None, engine='python')

|

| 75 |

selected_rows_df2 = test.loc[indices]

|

|

@@ -80,7 +81,8 @@ def process_file(model_name,inc_slider,progress=Progress(track_tqdm=True)):

|

|

| 80 |

graduation_groups = [

|

| 81 |

'high' if idx in high_indices else 'low' for idx in selected_rows_df2.index

|

| 82 |

]

|

| 83 |

-

|

|

|

|

| 84 |

|

| 85 |

with open("roc_data2.pkl", 'rb') as file:

|

| 86 |

data = pickle.load(file)

|

|

@@ -88,7 +90,7 @@ def process_file(model_name,inc_slider,progress=Progress(track_tqdm=True)):

|

|

| 88 |

p_label=data[1]

|

| 89 |

# Step 1: Align graduation_group, t_label, and p_label

|

| 90 |

aligned_labels = list(zip(graduation_groups, t_label, p_label))

|

| 91 |

-

|

| 92 |

# Step 2: Separate the labels for high and low groups

|

| 93 |

high_t_labels = [t for grad, t, p in aligned_labels if grad == 'high']

|

| 94 |

low_t_labels = [t for grad, t, p in aligned_labels if grad == 'low']

|

|

@@ -96,8 +98,18 @@ def process_file(model_name,inc_slider,progress=Progress(track_tqdm=True)):

|

|

| 96 |

high_p_labels = [p for grad, t, p in aligned_labels if grad == 'high']

|

| 97 |

low_p_labels = [p for grad, t, p in aligned_labels if grad == 'low']

|

| 98 |

|

| 99 |

high_roc_auc = roc_auc_score(high_t_labels, high_p_labels) if len(set(high_t_labels)) > 1 else None

|

| 100 |

low_roc_auc = roc_auc_score(low_t_labels, low_p_labels) if len(set(low_t_labels)) > 1 else None

|

| 101 |

# For demonstration purposes, we'll just return the content with the selected model name

|

| 102 |

|

| 103 |

# print(checkpoint)

|

|

@@ -155,8 +167,8 @@ def process_file(model_name,inc_slider,progress=Progress(track_tqdm=True)):

|

|

| 155 |

|

| 156 |

# Initialize counters

|

| 157 |

task_counts = {

|

| 158 |

-

|

| 159 |

-

|

| 160 |

}

|

| 161 |

|

| 162 |

# Analyze rows

|

|

@@ -175,6 +187,8 @@ def process_file(model_name,inc_slider,progress=Progress(track_tqdm=True)):

|

|

| 175 |

task_counts[1]["only_opt2"] += 1

|

| 176 |

elif opt1_done and opt2_done:

|

| 177 |

task_counts[1]["both"] += 1

|

|

|

|

|

|

|

| 178 |

elif ideal_task == 1:

|

| 179 |

if opt1_done and not opt2_done:

|

| 180 |

task_counts[2]["only_opt1"] += 1

|

|

@@ -182,32 +196,52 @@ def process_file(model_name,inc_slider,progress=Progress(track_tqdm=True)):

|

|

| 182 |

task_counts[2]["only_opt2"] += 1

|

| 183 |

elif opt1_done and opt2_done:

|

| 184 |

task_counts[2]["both"] += 1

|

|

|

|

|

|

|

| 185 |

|

| 186 |

# Create a string output for results

|

| 187 |

-

output_summary = "Task Analysis Summary:\n"

|

| 188 |

-

output_summary += "-----------------------\n"

|

| 189 |

|

| 190 |

-

for ideal_task, counts in task_counts.items():

|

| 191 |

-

|

| 192 |

-

|

| 193 |

-

|

| 194 |

-

|

| 195 |

|

| 196 |

# print(output_summary)

|

| 197 |

|

| 198 |

progress(0.2, desc="analysis done!! Executing models")

|

| 199 |

print("finetuned task: ",finetune_task)

|

| 200 |

-

subprocess.run([

|

| 201 |

-

|

| 202 |

-

|

| 203 |

-

|

| 204 |

-

|

| 205 |

-

|

| 206 |

-

|

| 207 |

-

|

| 208 |

-

|

| 209 |

-

|

| 210 |

-

])

|

| 211 |

progress(0.6,desc="Model execution completed")

|

| 212 |

result = {}

|

| 213 |

with open("result.txt", 'r') as file:

|

|

@@ -225,10 +259,14 @@ def process_file(model_name,inc_slider,progress=Progress(track_tqdm=True)):

|

|

| 225 |

fpr, tpr, _ = pickle.load(f)

|

| 226 |

# print(fpr,tpr)

|

| 227 |

roc_auc = auc(fpr, tpr)

|

| 228 |

-

|

| 229 |

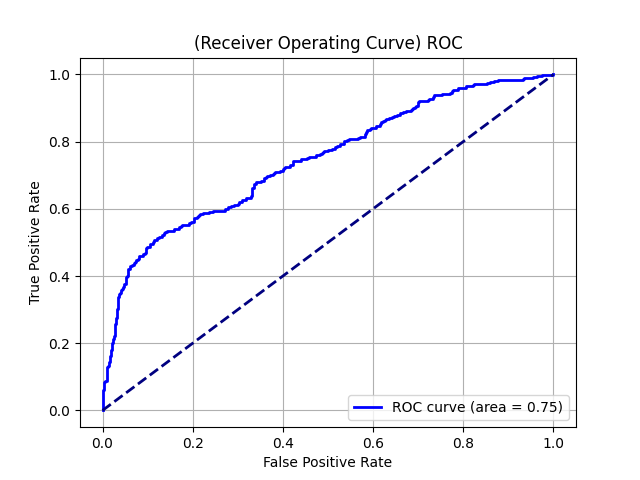

ax.plot(fpr, tpr, color='blue', lw=2, label=f'ROC curve (area = {roc_auc:.2f})')

|

| 230 |

ax.plot([0, 1], [0, 1], color='navy', lw=2, linestyle='--')

|

| 231 |

-

ax.set(xlabel='False Positive Rate', ylabel='True Positive Rate', title=f'

|

| 232 |

ax.legend(loc="lower right")

|

| 233 |

ax.grid()

|

| 234 |

|

|

@@ -247,7 +285,6 @@ def process_file(model_name,inc_slider,progress=Progress(track_tqdm=True)):

|

|

| 247 |

text_output = f"""

|

| 248 |

Model: {model_name}\n

|

| 249 |

-----------------\n

|

| 250 |

-

|

| 251 |

Time Taken: {result['time_taken_from_start']:.2f} seconds\n

|

| 252 |

Total Schools in test: {len(unique_schools):.4f}\n

|

| 253 |

Total number of instances having Schools with HGR : {len(high_sample):.4f}\n

|

|

@@ -255,9 +292,12 @@ def process_file(model_name,inc_slider,progress=Progress(track_tqdm=True)):

|

|

| 255 |

|

| 256 |

ROC score of HGR: {high_roc_auc}\n

|

| 257 |

ROC score of LGR: {low_roc_auc}\n

|

| 258 |

-----------------\n

|

| 259 |

"""

|

| 260 |

-

return text_output,

|

| 261 |

|

| 262 |

# List of models for the dropdown menu

|

| 263 |

|

|

@@ -507,12 +547,16 @@ tbody.svelte-18wv37q>tr.svelte-18wv37q:nth-child(odd) {

|

|

| 507 |

gr.Markdown("<p class='description'>Dashboard</p>")

|

| 508 |

with gr.Row():

|

| 509 |

output_text = gr.Textbox(label="")

|

| 510 |

-

output_image = gr.Image(label="ROC")

|

| 511 |

-

|

| 512 |

|

| 513 |

btn = gr.Button("Submit")

|

| 514 |

|

| 515 |

-

btn.click(fn=process_file, inputs=[model_dropdown,increment_slider], outputs=[output_text,

|

| 516 |

|

| 517 |

|

| 518 |

# Launch the app

|

|

|

|

| 9 |

from sklearn.metrics import roc_curve, auc

|

| 10 |

import pandas as pd

|

| 11 |

from sklearn.metrics import roc_auc_score

|

| 12 |

+

from matplotlib.figure import Figure

|

| 13 |

# Define the function to process the input file and model selection

|

| 14 |

|

| 15 |

def process_file(model_name,inc_slider,progress=Progress(track_tqdm=True)):

|

|

|

|

| 70 |

indices = test_info[test_info[0].isin(random_schools)].index.tolist()

|

| 71 |

high_indices = test_info[(test_info[0].isin(high_sample))].index.tolist()

|

| 72 |

low_indices = test_info[(test_info[0].isin(low_sample))].index.tolist()

|

| 73 |

+

|

| 74 |

# Load the test file and select rows based on indices

|

| 75 |

test = pd.read_csv(test_location, sep=',', header=None, engine='python')

|

| 76 |

selected_rows_df2 = test.loc[indices]

|

|

|

|

| 81 |

graduation_groups = [

|

| 82 |

'high' if idx in high_indices else 'low' for idx in selected_rows_df2.index

|

| 83 |

]

|

| 84 |

+

# Group data by opt_task1 and opt_task2 based on test_info[6]

|

| 85 |

+

opt_task_groups = ['opt_task1' if test_info.loc[idx, 6] == 0 else 'opt_task2' for idx in selected_rows_df2.index]

|

| 86 |

|

| 87 |

with open("roc_data2.pkl", 'rb') as file:

|

| 88 |

data = pickle.load(file)

|

|

|

|

| 90 |

p_label=data[1]

|

| 91 |

# Step 1: Align graduation_group, t_label, and p_label

|

| 92 |

aligned_labels = list(zip(graduation_groups, t_label, p_label))

|

| 93 |

+

opt_task_aligned = list(zip(opt_task_groups, t_label, p_label))

|

| 94 |

# Step 2: Separate the labels for high and low groups

|

| 95 |

high_t_labels = [t for grad, t, p in aligned_labels if grad == 'high']

|

| 96 |

low_t_labels = [t for grad, t, p in aligned_labels if grad == 'low']

|

|

|

|

| 98 |

high_p_labels = [p for grad, t, p in aligned_labels if grad == 'high']

|

| 99 |

low_p_labels = [p for grad, t, p in aligned_labels if grad == 'low']

|

| 100 |

|

| 101 |

+

opt_task1_t_labels = [t for task, t, p in opt_task_aligned if task == 'opt_task1']

|

| 102 |

+

opt_task1_p_labels = [p for task, t, p in opt_task_aligned if task == 'opt_task1']

|

| 103 |

+

|

| 104 |

+

opt_task2_t_labels = [t for task, t, p in opt_task_aligned if task == 'opt_task2']

|

| 105 |

+

opt_task2_p_labels = [p for task, t, p in opt_task_aligned if task == 'opt_task2']

|

| 106 |

+

|

| 107 |

high_roc_auc = roc_auc_score(high_t_labels, high_p_labels) if len(set(high_t_labels)) > 1 else None

|

| 108 |

low_roc_auc = roc_auc_score(low_t_labels, low_p_labels) if len(set(low_t_labels)) > 1 else None

|

| 109 |

+

|

| 110 |

+

opt_task1_roc_auc = roc_auc_score(opt_task1_t_labels, opt_task1_p_labels) if len(set(opt_task1_t_labels)) > 1 else None

|

| 111 |

+

opt_task2_roc_auc = roc_auc_score(opt_task2_t_labels, opt_task2_p_labels) if len(set(opt_task2_t_labels)) > 1 else None

|

| 112 |

+

|

| 113 |

# For demonstration purposes, we'll just return the content with the selected model name

|

| 114 |

|

| 115 |

# print(checkpoint)

|

|

|

|

| 167 |

|

| 168 |

# Initialize counters

|

| 169 |

task_counts = {

|

| 170 |

+

1: {"only_opt1": 0, "only_opt2": 0, "both": 0,"none":0},

|

| 171 |

+

2: {"only_opt1": 0, "only_opt2": 0, "both": 0,"none":0}

|

| 172 |

}

|

| 173 |

|

| 174 |

# Analyze rows

|

|

|

|

| 187 |

task_counts[1]["only_opt2"] += 1

|

| 188 |

elif opt1_done and opt2_done:

|

| 189 |

task_counts[1]["both"] += 1

|

| 190 |

+

else:

|

| 191 |

+

task_counts[1]["none"] +=1

|

| 192 |

elif ideal_task == 1:

|

| 193 |

if opt1_done and not opt2_done:

|

| 194 |

task_counts[2]["only_opt1"] += 1

|

|

|

|

| 196 |

task_counts[2]["only_opt2"] += 1

|

| 197 |

elif opt1_done and opt2_done:

|

| 198 |

task_counts[2]["both"] += 1

|

| 199 |

+

else:

|

| 200 |

+

task_counts[2]["none"] +=1

|

| 201 |

|

| 202 |

# Create a string output for results

|

| 203 |

+

# output_summary = "Task Analysis Summary:\n"

|

| 204 |

+

# output_summary += "-----------------------\n"

|

| 205 |

|

| 206 |

+

# for ideal_task, counts in task_counts.items():

|

| 207 |

+

# output_summary += f"Ideal Task = OptionalTask_{ideal_task}:\n"

|

| 208 |

+

# output_summary += f" Only OptionalTask_1 done: {counts['only_opt1']}\n"

|

| 209 |

+

# output_summary += f" Only OptionalTask_2 done: {counts['only_opt2']}\n"

|

| 210 |

+

# output_summary += f" Both done: {counts['both']}\n"

|

| 211 |

+

|

| 212 |

+

# Generate pie chart for Task 1

|

| 213 |

+

task1_labels = list(task_counts[1].keys())

|

| 214 |

+

task1_values = list(task_counts[1].values())

|

| 215 |

+

|

| 216 |

+

fig_task1 = Figure()

|

| 217 |

+

ax1 = fig_task1.add_subplot(1, 1, 1)

|

| 218 |

+

ax1.pie(task1_values, labels=task1_labels, autopct='%1.1f%%', startangle=90)

|

| 219 |

+

ax1.set_title('Ideal Task 1 Distribution')

|

| 220 |

+

|

| 221 |

+

# Generate pie chart for Task 2

|

| 222 |

+

task2_labels = list(task_counts[2].keys())

|

| 223 |

+

task2_values = list(task_counts[2].values())

|

| 224 |

+

|

| 225 |

+

fig_task2 = Figure()

|

| 226 |

+

ax2 = fig_task2.add_subplot(1, 1, 1)

|

| 227 |

+

ax2.pie(task2_values, labels=task2_labels, autopct='%1.1f%%', startangle=90)

|

| 228 |

+

ax2.set_title('Ideal Task 2 Distribution')

|

| 229 |

|

| 230 |

# print(output_summary)

|

| 231 |

|

| 232 |

progress(0.2, desc="analysis done!! Executing models")

|

| 233 |

print("finetuned task: ",finetune_task)

|

| 234 |

+

# subprocess.run([

|

| 235 |

+

# "python", "new_test_saved_finetuned_model.py",

|

| 236 |

+

# "-workspace_name", "ratio_proportion_change3_2223/sch_largest_100-coded",

|

| 237 |

+

# "-finetune_task", finetune_task,

|

| 238 |

+

# "-test_dataset_path","../../../../selected_rows.txt",

|

| 239 |

+

# # "-test_label_path","../../../../train_label.txt",

|

| 240 |

+

# "-finetuned_bert_classifier_checkpoint",

|

| 241 |

+

# "ratio_proportion_change3_2223/sch_largest_100-coded/output/highGRschool10/bert_fine_tuned.model.ep42",

|

| 242 |

+

# "-e",str(1),

|

| 243 |

+

# "-b",str(1000)

|

| 244 |

+

# ])

|

| 245 |

progress(0.6,desc="Model execution completed")

|

| 246 |

result = {}

|

| 247 |

with open("result.txt", 'r') as file:

|

|

|

|

| 259 |

fpr, tpr, _ = pickle.load(f)

|

| 260 |

# print(fpr,tpr)

|

| 261 |

roc_auc = auc(fpr, tpr)

|

| 262 |

+

|

| 263 |

+

|

| 264 |

+

# Create a matplotlib figure

|

| 265 |

+

fig = Figure()

|

| 266 |

+

ax = fig.add_subplot(1, 1, 1)

|

| 267 |

ax.plot(fpr, tpr, color='blue', lw=2, label=f'ROC curve (area = {roc_auc:.2f})')

|

| 268 |

ax.plot([0, 1], [0, 1], color='navy', lw=2, linestyle='--')

|

| 269 |

+

ax.set(xlabel='False Positive Rate', ylabel='True Positive Rate', title=f'Receiver Operating Curve (ROC)')

|

| 270 |

ax.legend(loc="lower right")

|

| 271 |

ax.grid()

|

| 272 |

|

|

|

|

| 285 |

text_output = f"""

|

| 286 |

Model: {model_name}\n

|

| 287 |

-----------------\n

|

|

|

|

| 288 |

Time Taken: {result['time_taken_from_start']:.2f} seconds\n

|

| 289 |

Total Schools in test: {len(unique_schools):.4f}\n

|

| 290 |

Total number of instances having Schools with HGR : {len(high_sample):.4f}\n

|

|

|

|

| 292 |

|

| 293 |

ROC score of HGR: {high_roc_auc}\n

|

| 294 |

ROC score of LGR: {low_roc_auc}\n

|

| 295 |

+

|

| 296 |

+

ROC score of opt1: {opt_task1_roc_auc}\n

|

| 297 |

+

ROC score of opt2: {opt_task2_roc_auc}\n

|

| 298 |

-----------------\n

|

| 299 |

"""

|

| 300 |

+

return text_output,fig,fig_task1,fig_task2

|

| 301 |

|

| 302 |

# List of models for the dropdown menu

|

| 303 |

|

|

|

|

| 547 |

gr.Markdown("<p class='description'>Dashboard</p>")

|

| 548 |

with gr.Row():

|

| 549 |

output_text = gr.Textbox(label="")

|

| 550 |

+

# output_image = gr.Image(label="ROC")

|

| 551 |

+

plot_output = gr.Plot(label="roc")

|

| 552 |

+

with gr.Row():

|

| 553 |

+

opt1_pie = gr.Plot(label="opt1")

|

| 554 |

+

opt2_pie = gr.Plot(label="opt2")

|

| 555 |

+

# output_summary = gr.Textbox(label="Summary")

|

| 556 |

|

| 557 |

btn = gr.Button("Submit")

|

| 558 |

|

| 559 |

+

btn.click(fn=process_file, inputs=[model_dropdown,increment_slider], outputs=[output_text,plot_output,opt1_pie,opt2_pie])

|

| 560 |

|

| 561 |

|

| 562 |

# Launch the app

|
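
Note on the per-group scoring added in app.py: the change zips a group name with `t_label` and `p_label`, filters by group, and calls `roc_auc_score` only when a group contains more than one distinct true label. The sketch below illustrates that split-and-guard pattern in plain Python under assumptions. `split_by_group`, `can_score_auc`, and the sample values are hypothetical and not part of the commit, and the actual AUC call is left out so the snippet has no third-party dependency.

```python
from collections import defaultdict


def split_by_group(groups, true_labels, pred_scores):
    """Bucket true labels and predicted scores by group name (hypothetical helper)."""
    buckets = defaultdict(lambda: ([], []))
    for group, t, p in zip(groups, true_labels, pred_scores):
        buckets[group][0].append(t)  # true labels for this group
        buckets[group][1].append(p)  # predicted scores for this group
    return dict(buckets)


def can_score_auc(true_labels):
    """ROC-AUC is only defined when both classes appear in the true labels."""
    return len(set(true_labels)) > 1


# Illustrative data only; the real values come from test_info and roc_data2.pkl.
groups = ["opt_task1", "opt_task2", "opt_task1", "opt_task2", "opt_task1"]
t_label = [1, 0, 0, 0, 1]
p_label = [0.9, 0.2, 0.4, 0.6, 0.7]

buckets = split_by_group(groups, t_label, p_label)
scorable = {name: can_score_auc(t) for name, (t, _) in buckets.items()}
print(scorable)
```

A single pass over the zipped rows avoids repeating one list comprehension per group and per label type, which may matter if more groupings are added later. A group that fails the guard would report `None`, as in the commit.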

distinguish_high_low_label.ipynb

CHANGED

|

@@ -2,18 +2,19 @@

|

|

| 2 |

"cells": [

|

| 3 |

{

|

| 4 |

"cell_type": "code",

|

| 5 |

-

"execution_count":

|

| 6 |

"id": "960bac80-51c7-4e9f-ad2d-84cd6c710f98",

|

| 7 |

"metadata": {},

|

| 8 |

"outputs": [],

|

| 9 |

"source": [

|

| 10 |

"import pickle\n",

|

| 11 |

-

"import pandas as pd"

|

|

|

|

| 12 |

]

|

| 13 |

},

|

| 14 |

{

|

| 15 |

"cell_type": "code",

|

| 16 |

-

"execution_count":

|

| 17 |

"id": "a34f21d0-0854-4a54-8f93-67718b2f969e",

|

| 18 |

"metadata": {},

|

| 19 |

"outputs": [],

|

|

@@ -31,7 +32,7 @@

|

|

| 31 |

},

|

| 32 |

{

|

| 33 |

"cell_type": "code",

|

| 34 |

-

"execution_count":

|

| 35 |

"id": "f9febed4-ce50-4e30-96ea-4b538ce2f9a1",

|

| 36 |

"metadata": {},

|

| 37 |

"outputs": [],

|

|

@@ -70,7 +71,7 @@

|

|

| 70 |

},

|

| 71 |

{

|

| 72 |

"cell_type": "code",

|

| 73 |

-

"execution_count":

|

| 74 |

"id": "fdfdf4b6-2752-4a21-9880-869af69f20cf",

|

| 75 |

"metadata": {},

|

| 76 |

"outputs": [],

|

|

@@ -81,7 +82,7 @@

|

|

| 81 |

},

|

| 82 |

{

|

| 83 |

"cell_type": "code",

|

| 84 |

-

"execution_count":

|

| 85 |

"id": "a79a4598-5702-4cc8-9f07-8e18fdda648b",

|

| 86 |

"metadata": {},

|

| 87 |

"outputs": [

|

|

@@ -91,7 +92,7 @@

|

|

| 91 |

"997"

|

| 92 |

]

|

| 93 |

},

|

| 94 |

-

"execution_count":

|

| 95 |

"metadata": {},

|

| 96 |

"output_type": "execute_result"

|

| 97 |

}

|

|

@@ -102,7 +103,7 @@

|

|

| 102 |

},

|

| 103 |

{

|

| 104 |

"cell_type": "code",

|

| 105 |

-

"execution_count":

|

| 106 |

"id": "4707f3e6-2f44-46d8-ad8c-b6c244f693af",

|

| 107 |

"metadata": {},

|

| 108 |

"outputs": [

|

|

@@ -197,7 +198,7 @@

|

|

| 197 |

"[997 rows x 1 columns]"

|

| 198 |

]

|

| 199 |

},

|

| 200 |

-

"execution_count":

|

| 201 |

"metadata": {},

|

| 202 |

"output_type": "execute_result"

|

| 203 |

}

|

|

@@ -211,20 +212,54 @@

|

|

| 211 |

},

|

| 212 |

{

|

| 213 |

"cell_type": "code",

|

| 214 |

-

"execution_count":

|

| 215 |

"id": "1d0c3d49-061f-486b-9c19-cf20945f3207",

|

| 216 |

"metadata": {},

|

| 217 |

-

"outputs": [

|

| 218 |

"source": [

|

| 219 |

"graduation_groups = [\n",

|

| 220 |

" 'high' if idx in high_indices else 'low' for idx in selected_rows_df2.index\n",

|

| 221 |

"]\n",

|

| 222 |

-

"# graduation_groups"

|

| 223 |

]

|

| 224 |

},

|

| 225 |

{

|

| 226 |

"cell_type": "code",

|

| 227 |

-

"execution_count":

|

| 228 |

"id": "ad0ce4a1-27fa-4867-8061-4054dbb340df",

|

| 229 |

"metadata": {},

|

| 230 |

"outputs": [],

|

|

@@ -235,21 +270,51 @@

|

|

| 235 |

},

|

| 236 |

{

|

| 237 |

"cell_type": "code",

|

| 238 |

-

"execution_count":

|

| 239 |

"id": "a4f4a2b9-3134-42ac-871b-4e117098cd0e",

|

| 240 |

"metadata": {},

|

| 241 |

"outputs": [],

|

| 242 |

"source": [

|

| 243 |

"# Step 1: Align graduation_group, t_label, and p_label\n",

|

| 244 |

"aligned_labels = list(zip(graduation_groups, t_label, p_label))\n",

|

| 245 |

-

"\n",

|

| 246 |

"# Step 2: Separate the labels for high and low groups\n",

|

| 247 |

"high_t_labels = [t for grad, t, p in aligned_labels if grad == 'high']\n",

|

| 248 |

"low_t_labels = [t for grad, t, p in aligned_labels if grad == 'low']\n",

|

| 249 |

"\n",

|

| 250 |

"high_p_labels = [p for grad, t, p in aligned_labels if grad == 'high']\n",

|

| 251 |

"low_p_labels = [p for grad, t, p in aligned_labels if grad == 'low']\n",

|

| 252 |

-

"\n"

|

| 253 |

]

|

| 254 |

},

|

| 255 |

{

|

|

@@ -275,17 +340,15 @@

|

|

| 275 |

},

|

| 276 |

{

|

| 277 |

"cell_type": "code",

|

| 278 |

-

"execution_count":

|

| 279 |

"id": "c11050db-2636-4c50-9cd4-b9943e5cee83",

|

| 280 |

"metadata": {},

|

| 281 |

"outputs": [],

|

| 282 |

-

"source": [

|

| 283 |

-

"from sklearn.metrics import precision_score, recall_score, f1_score, confusion_matrix, roc_curve, roc_auc_score"

|

| 284 |

-

]

|

| 285 |

},

|

| 286 |

{

|

| 287 |

"cell_type": "code",

|

| 288 |

-

"execution_count":

|

| 289 |

"id": "e1309e93-7063-4f48-bbc7-11a0d449c34e",

|

| 290 |

"metadata": {},

|

| 291 |

"outputs": [

|

|

@@ -308,7 +371,7 @@

|

|

| 308 |

},

|

| 309 |

{

|

| 310 |

"cell_type": "code",

|

| 311 |

-

"execution_count":

|

| 312 |

"id": "a99e7812-817d-4f9f-b6fa-1a58aa3a34dc",

|

| 313 |

"metadata": {},

|

| 314 |

"outputs": [

|

|

@@ -322,10 +385,12 @@

|

|

| 322 |

" Only OptionalTask_1 done: 22501\n",

|

| 323 |

" Only OptionalTask_2 done: 20014\n",

|

| 324 |

" Both done: 24854\n",

|

|

|

|

| 325 |

"Ideal Task = OptionalTask_2:\n",

|

| 326 |

" Only OptionalTask_1 done: 12588\n",

|

| 327 |

" Only OptionalTask_2 done: 18942\n",

|

| 328 |

" Both done: 15147\n",

|

|

|

|

| 329 |

"\n"

|

| 330 |

]

|

| 331 |

}

|

|

@@ -377,8 +442,8 @@

|

|

| 377 |

"\n",

|

| 378 |

"# Initialize counters\n",

|

| 379 |

"task_counts = {\n",

|

| 380 |

-

" 1: {\"only_opt1\": 0, \"only_opt2\": 0, \"both\": 0},\n",

|

| 381 |

-

" 2: {\"only_opt1\": 0, \"only_opt2\": 0, \"both\": 0}\n",

|

| 382 |

"}\n",

|

| 383 |

"\n",

|

| 384 |

"# Analyze rows\n",

|

|

@@ -397,6 +462,8 @@

|

|

| 397 |

" task_counts[1][\"only_opt2\"] += 1\n",

|

| 398 |

" elif opt1_done and opt2_done:\n",

|

| 399 |

" task_counts[1][\"both\"] += 1\n",

|

|

|

|

|

|

|

| 400 |

" elif ideal_task == 1:\n",

|

| 401 |

" if opt1_done and not opt2_done:\n",

|

| 402 |

" task_counts[2][\"only_opt1\"] += 1\n",

|

|

@@ -404,6 +471,8 @@

|

|

| 404 |

" task_counts[2][\"only_opt2\"] += 1\n",

|

| 405 |

" elif opt1_done and opt2_done:\n",

|

| 406 |

" task_counts[2][\"both\"] += 1\n",

|

|

|

|

|

|

|

| 407 |

"\n",

|

| 408 |

"# Create a string output for results\n",

|

| 409 |

"output_summary = \"Task Analysis Summary:\\n\"\n",

|

|

@@ -414,14 +483,47 @@

|

|

| 414 |

" output_summary += f\" Only OptionalTask_1 done: {counts['only_opt1']}\\n\"\n",

|

| 415 |

" output_summary += f\" Only OptionalTask_2 done: {counts['only_opt2']}\\n\"\n",

|

| 416 |

" output_summary += f\" Both done: {counts['both']}\\n\"\n",

|

|

|

|

| 417 |

"\n",

|

| 418 |

"print(output_summary)\n"

|

| 419 |

]

|

| 420 |

},

|

| 421 |

{

|

| 422 |

"cell_type": "code",

|

| 423 |

"execution_count": null,

|

| 424 |

-

"id": "

|

| 425 |

"metadata": {},

|

| 426 |

"outputs": [],

|

| 427 |

"source": []

|

|

|

|

| 2 |

"cells": [

|

| 3 |

{

|

| 4 |

"cell_type": "code",

|

| 5 |

+

"execution_count": 27,

|

| 6 |

"id": "960bac80-51c7-4e9f-ad2d-84cd6c710f98",

|

| 7 |

"metadata": {},

|

| 8 |

"outputs": [],

|

| 9 |

"source": [

|

| 10 |

"import pickle\n",

|

| 11 |

+

"import pandas as pd\n",

|

| 12 |

+

"from sklearn.metrics import precision_score, recall_score, f1_score, confusion_matrix, roc_curve, roc_auc_score,auc"

|

| 13 |

]

|

| 14 |

},

|

| 15 |

{

|

| 16 |

"cell_type": "code",

|

| 17 |

+

"execution_count": 3,

|

| 18 |

"id": "a34f21d0-0854-4a54-8f93-67718b2f969e",

|

| 19 |

"metadata": {},

|

| 20 |

"outputs": [],

|

|

|

|

| 32 |

},

|

| 33 |

{

|

| 34 |

"cell_type": "code",

|

| 35 |

+

"execution_count": 4,

|

| 36 |

"id": "f9febed4-ce50-4e30-96ea-4b538ce2f9a1",

|

| 37 |

"metadata": {},

|

| 38 |

"outputs": [],

|

|

|

|

| 71 |

},

|

| 72 |

{

|

| 73 |

"cell_type": "code",

|

| 74 |

+

"execution_count": 5,

|

| 75 |

"id": "fdfdf4b6-2752-4a21-9880-869af69f20cf",

|

| 76 |

"metadata": {},

|

| 77 |

"outputs": [],

|

|

|

|

| 82 |

},

|

| 83 |

{

|

| 84 |

"cell_type": "code",

|

| 85 |

+

"execution_count": 6,

|

| 86 |

"id": "a79a4598-5702-4cc8-9f07-8e18fdda648b",

|

| 87 |

"metadata": {},

|

| 88 |

"outputs": [

|

|

|

|

| 92 |

"997"

|

| 93 |

]

|

| 94 |

},

|

| 95 |

+

"execution_count": 6,

|

| 96 |

"metadata": {},

|

| 97 |

"output_type": "execute_result"

|

| 98 |

}

|

|

|

|

| 103 |

},

|

| 104 |

{

|

| 105 |

"cell_type": "code",

|

| 106 |

+

"execution_count": 7,

|

| 107 |

"id": "4707f3e6-2f44-46d8-ad8c-b6c244f693af",

|

| 108 |

"metadata": {},

|

| 109 |

"outputs": [

|

|

|

|

| 198 |

"[997 rows x 1 columns]"

|

| 199 |

]

|

| 200 |

},

|

| 201 |

+

"execution_count": 7,

|

| 202 |

"metadata": {},

|

| 203 |

"output_type": "execute_result"

|

| 204 |

}

|

|

|

|

| 212 |

},

|

| 213 |

{

|

| 214 |

"cell_type": "code",

|

| 215 |

+

"execution_count": 8,

|

| 216 |

"id": "1d0c3d49-061f-486b-9c19-cf20945f3207",

|

| 217 |

"metadata": {},

|

| 218 |

+

"outputs": [

|

| 219 |

+

{

|

| 220 |

+

"data": {

|

| 221 |

+

"text/plain": [

|

| 222 |

+

"997"

|

| 223 |

+

]

|

| 224 |

+

},

|

| 225 |

+

"execution_count": 8,

|

| 226 |

+

"metadata": {},

|

| 227 |

+

"output_type": "execute_result"

|

| 228 |

+

}

|

| 229 |

+

],

|

| 230 |

"source": [

|

| 231 |

"graduation_groups = [\n",

|

| 232 |

" 'high' if idx in high_indices else 'low' for idx in selected_rows_df2.index\n",

|

| 233 |

"]\n",

|

| 234 |

+

"# graduation_groups\n",

|

| 235 |

+

"len(graduation_groups)"

|

| 236 |

+

]

|

| 237 |

+

},

|

| 238 |

+

{

|

| 239 |

+

"cell_type": "code",

|

| 240 |

+

"execution_count": 9,

|

| 241 |

+

"id": "d2508a0f-e5ca-432e-b99b-481ea4536d4d",

|

| 242 |

+

"metadata": {},

|

| 243 |

+

"outputs": [

|

| 244 |

+

{

|

| 245 |

+

"data": {

|

| 246 |

+

"text/plain": [

|

| 247 |

+

"997"

|

| 248 |

+

]

|

| 249 |

+

},

|

| 250 |

+

"execution_count": 9,

|

| 251 |

+

"metadata": {},

|

| 252 |

+

"output_type": "execute_result"

|

| 253 |

+

}

|

| 254 |

+

],

|

| 255 |

+

"source": [

|

| 256 |

+

"opt_task_groups = ['opt_task1' if test_info.loc[idx, 6] == 0 else 'opt_task2' for idx in selected_rows_df2.index]\n",

|

| 257 |

+

"len(opt_task_groups)"

|

| 258 |

]

|

| 259 |

},

|

| 260 |

{

|

| 261 |

"cell_type": "code",

|

| 262 |

+

"execution_count": 10,

|

| 263 |

"id": "ad0ce4a1-27fa-4867-8061-4054dbb340df",

|

| 264 |

"metadata": {},

|

| 265 |

"outputs": [],

|

|

|

|

| 270 |

},

|

| 271 |

{

|

| 272 |

"cell_type": "code",

|

| 273 |

+

"execution_count": 12,

|

| 274 |

"id": "a4f4a2b9-3134-42ac-871b-4e117098cd0e",

|

| 275 |

"metadata": {},

|

| 276 |

"outputs": [],

|

| 277 |

"source": [

|

| 278 |

"# Step 1: Align graduation_group, t_label, and p_label\n",

|

| 279 |

"aligned_labels = list(zip(graduation_groups, t_label, p_label))\n",

|

| 280 |

+

"opt_task_aligned = list(zip(opt_task_groups, t_label, p_label))\n",

|

| 281 |

"# Step 2: Separate the labels for high and low groups\n",

|

| 282 |

"high_t_labels = [t for grad, t, p in aligned_labels if grad == 'high']\n",

|

| 283 |

"low_t_labels = [t for grad, t, p in aligned_labels if grad == 'low']\n",

|

| 284 |

"\n",

|

| 285 |

"high_p_labels = [p for grad, t, p in aligned_labels if grad == 'high']\n",

|

| 286 |

"low_p_labels = [p for grad, t, p in aligned_labels if grad == 'low']\n",

|

| 287 |

+

"\n",

|

| 288 |

+

"\n",

|

| 289 |

+

"opt_task1_t_labels = [t for task, t, p in opt_task_aligned if task == 'opt_task1']\n",

|

| 290 |

+

"opt_task1_p_labels = [p for task, t, p in opt_task_aligned if task == 'opt_task1']\n",

|

| 291 |

+

"\n",

|

| 292 |

+

"opt_task2_t_labels = [t for task, t, p in opt_task_aligned if task == 'opt_task2']\n",

|

| 293 |

+

"opt_task2_p_labels = [p for task, t, p in opt_task_aligned if task == 'opt_task2']\n"

|

| 294 |

+

]

|

| 295 |

+

},

|

| 296 |

+

{

|

| 297 |

+

"cell_type": "code",

|

| 298 |

+

"execution_count": 15,

|

| 299 |

+

"id": "74cda932-ce98-4ad5-9c29-a54bdc4ee086",

|

| 300 |

+

"metadata": {},

|

| 301 |

+

"outputs": [

|

| 302 |

+

{

|

| 303 |

+

"name": "stdout",

|

| 304 |

+

"output_type": "stream",

|

| 305 |

+

"text": [

|

| 306 |

+

"opt_task1 ROC-AUC: 0.7592686234399062\n",

|

| 307 |

+

"opt_task2 ROC-AUC: 0.7268598353289777\n"

|

| 308 |

+

]

|

| 309 |

+

}

|

| 310 |

+

],

|

| 311 |

+

"source": [

|

| 312 |

+

"\n",

|

| 313 |

+

"opt_task1_roc_auc = roc_auc_score(opt_task1_t_labels, opt_task1_p_labels) if len(set(opt_task1_t_labels)) > 1 else None\n",

|

| 314 |

+

"opt_task2_roc_auc = roc_auc_score(opt_task2_t_labels, opt_task2_p_labels) if len(set(opt_task2_t_labels)) > 1 else None\n",

|

| 315 |

+

"\n",

|

| 316 |

+

"print(f\"opt_task1 ROC-AUC: {opt_task1_roc_auc}\")\n",

|

| 317 |

+

"print(f\"opt_task2 ROC-AUC: {opt_task2_roc_auc}\")"

|

| 318 |

]

|

| 319 |

},

|

| 320 |

{

|

|

|

|

| 340 |

},

|

| 341 |

{

|

| 342 |

"cell_type": "code",

|

| 343 |

+

"execution_count": 13,

|

| 344 |

"id": "c11050db-2636-4c50-9cd4-b9943e5cee83",

|

| 345 |

"metadata": {},

|

| 346 |

"outputs": [],

|

| 347 |

+

"source": []

|

|

|

|

|

|

|

| 348 |

},

|

| 349 |

{

|

| 350 |

"cell_type": "code",

|

| 351 |

+

"execution_count": 16,

|

| 352 |

"id": "e1309e93-7063-4f48-bbc7-11a0d449c34e",

|

| 353 |

"metadata": {},

|

| 354 |

"outputs": [

|

|

|

|

| 371 |

},

|

| 372 |

{

|

| 373 |

"cell_type": "code",

|

| 374 |

+

"execution_count": 21,

|

| 375 |

"id": "a99e7812-817d-4f9f-b6fa-1a58aa3a34dc",

|

| 376 |

"metadata": {},

|

| 377 |

"outputs": [

|

|

|

|

| 385 |

" Only OptionalTask_1 done: 22501\n",

|

| 386 |

" Only OptionalTask_2 done: 20014\n",

|

| 387 |

" Both done: 24854\n",

|

| 388 |

+

" None done: 38\n",

|

| 389 |

"Ideal Task = OptionalTask_2:\n",

|

| 390 |

" Only OptionalTask_1 done: 12588\n",

|

| 391 |

" Only OptionalTask_2 done: 18942\n",

|

| 392 |

" Both done: 15147\n",

|

| 393 |

+

" None done: 78\n",

|

| 394 |

"\n"

|

| 395 |

]

|

| 396 |

}

|

|

|

|

| 442 |

"\n",

|

| 443 |

"# Initialize counters\n",

|

| 444 |

"task_counts = {\n",

|

| 445 |

+

" 1: {\"only_opt1\": 0, \"only_opt2\": 0, \"both\": 0,\"none\":0},\n",

|

| 446 |

+

" 2: {\"only_opt1\": 0, \"only_opt2\": 0, \"both\": 0,\"none\":0}\n",

|

| 447 |

"}\n",

|

| 448 |

"\n",

|

| 449 |

"# Analyze rows\n",

|

|

|

|

| 462 |

" task_counts[1][\"only_opt2\"] += 1\n",

|

| 463 |

" elif opt1_done and opt2_done:\n",

|

| 464 |

" task_counts[1][\"both\"] += 1\n",

|

| 465 |

+

" else:\n",

|

| 466 |

+

" task_counts[1][\"none\"] +=1\n",

|

| 467 |

" elif ideal_task == 1:\n",

|

| 468 |

" if opt1_done and not opt2_done:\n",

|

| 469 |

" task_counts[2][\"only_opt1\"] += 1\n",

|

|

|

|

| 471 |

" task_counts[2][\"only_opt2\"] += 1\n",

|

| 472 |

" elif opt1_done and opt2_done:\n",

|

| 473 |

" task_counts[2][\"both\"] += 1\n",

|

| 474 |

+

" else:\n",

|

| 475 |

+

" task_counts[2][\"none\"] +=1\n",

|

| 476 |

"\n",

|

| 477 |

"# Create a string output for results\n",

|

| 478 |

"output_summary = \"Task Analysis Summary:\\n\"\n",

|

|

|

|

| 483 |

" output_summary += f\" Only OptionalTask_1 done: {counts['only_opt1']}\\n\"\n",

|

| 484 |

" output_summary += f\" Only OptionalTask_2 done: {counts['only_opt2']}\\n\"\n",

|

| 485 |

" output_summary += f\" Both done: {counts['both']}\\n\"\n",

|

| 486 |

+

" output_summary += f\" None done: {counts['none']}\\n\"\n",

|

| 487 |

"\n",

|

| 488 |

"print(output_summary)\n"

|

| 489 |

]

|

| 490 |

},

|

| 491 |

+

{

|

| 492 |

+

"cell_type": "code",

|

| 493 |

+

"execution_count": 23,

|

| 494 |

+

"id": "3630406c-859a-43ab-a569-67d577cc9bf6",

|

| 495 |

+

"metadata": {},

|

| 496 |

+

"outputs": [],

|

| 497 |

+

"source": [

|

| 498 |

+

"import gradio as gr\n",

|

| 499 |

+

"from matplotlib.figure import Figure"

|

| 500 |

+

]

|

| 501 |

+

},

|

| 502 |

+

{

|

| 503 |

+

"cell_type": "code",

|

| 504 |

+

"execution_count": 28,

|

| 505 |

+

"id": "99833638-882d-4c75-bcc3-031e39cfb5a7",

|

| 506 |

+

"metadata": {},

|

| 507 |

+

"outputs": [],

|

| 508 |

+

"source": [

|

| 509 |

+

"with open(\"roc_data.pkl\", \"rb\") as f:\n",

|

| 510 |

+

" fpr, tpr, _ = pickle.load(f)\n",

|

| 511 |

+

"roc_auc = auc(fpr, tpr)\n",

|

| 512 |

+

"\n",

|

| 513 |

+

"# Create a matplotlib figure\n",

|

| 514 |

+

"fig = Figure()\n",

|

| 515 |

+

"ax = fig.add_subplot(1, 1, 1)\n",

|

| 516 |

+

"ax.plot(fpr, tpr, color='blue', lw=2, label=f'ROC curve (area = {roc_auc:.2f})')\n",

|

| 517 |

+

"ax.plot([0, 1], [0, 1], color='navy', lw=2, linestyle='--')\n",

|

| 518 |

+

"ax.set(xlabel='False Positive Rate', ylabel='True Positive Rate', title=f'Receiver Operating Curve (ROC)')\n",

|

| 519 |

+

"ax.legend(loc=\"lower right\")\n",

|

| 520 |

+

"ax.grid()"

|

| 521 |

+

]

|

| 522 |

+

},

|

| 523 |

{

|

| 524 |

"cell_type": "code",

|

| 525 |

"execution_count": null,

|

| 526 |

+

"id": "6eb3dece-5b33-4223-af9a-6b999bb2305b",

|

| 527 |

"metadata": {},

|

| 528 |

"outputs": [],

|

| 529 |

"source": []

|
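
Note on the task tally: this commit adds a `"none"` bucket so that rows where neither optional task was done are counted instead of silently dropped. The following is a minimal sketch of that tally, assuming each row reduces to a hypothetical `(ideal_task, opt1_done, opt2_done)` tuple and that `ideal_task` values 0 and 1 map to keys 1 and 2. The diff shows the `ideal_task == 1` branch writing to `task_counts[2]`; the 0 case is inferred.

```python
def classify(opt1_done, opt2_done):
    """Return the bucket name for one row's optional-task flags."""
    if opt1_done and not opt2_done:
        return "only_opt1"
    if opt2_done and not opt1_done:
        return "only_opt2"
    if opt1_done and opt2_done:
        return "both"
    return "none"  # neither optional task was done


def tally(rows):
    """Count rows per ideal task (keys 1 and 2) and per bucket."""
    counts = {
        key: {"only_opt1": 0, "only_opt2": 0, "both": 0, "none": 0}
        for key in (1, 2)
    }
    for ideal_task, opt1_done, opt2_done in rows:
        counts[ideal_task + 1][classify(opt1_done, opt2_done)] += 1
    return counts


# Illustrative rows only: (ideal_task, opt1_done, opt2_done)
rows = [
    (0, True, False),
    (0, True, True),
    (0, False, False),
    (1, False, True),
    (1, False, False),
]
task_counts = tally(rows)
print(task_counts)
```

With the `"none"` bucket present, the four counts for each ideal task sum to the number of rows for that task, so the pie charts built from `task_counts[1]` and `task_counts[2]` cover every row.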

plot.png

CHANGED

|

|

result.txt

CHANGED

|

@@ -3,5 +3,5 @@ total_acc: 69.00702106318957

|

|

| 3 |

precisions: 0.7236623191454734

|

| 4 |

recalls: 0.6900702106318957

|

| 5 |

f1_scores: 0.6802420656474512

|

| 6 |

-

time_taken_from_start:

|

| 7 |

auc_score: 0.7457100293916334

|

|

|

|

| 3 |

precisions: 0.7236623191454734

|

| 4 |

recalls: 0.6900702106318957

|

| 5 |

f1_scores: 0.6802420656474512

|

| 6 |

+

time_taken_from_start: 25.420082330703735

|

| 7 |

auc_score: 0.7457100293916334

|
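
Note on result.txt: app.py opens this file and later formats `result['time_taken_from_start']` with `:.2f`, but the parsing lines are outside the hunks shown here. The sketch below is one way `key: value` lines like those above could be read, offered as an assumption and not as the app's actual parser. `parse_result_lines` is a hypothetical name, and the blank value in the sample is only there to exercise the missing-value path.

```python
def parse_result_lines(lines):
    """Parse 'key: value' lines into a dict of floats (None when not numeric)."""
    result = {}
    for line in lines:
        key, sep, value = line.partition(":")
        if not sep:
            continue  # skip lines without a colon
        try:
            result[key.strip()] = float(value.strip())
        except ValueError:
            result[key.strip()] = None  # blank or non-numeric value
    return result


# Sample lines in the same shape as result.txt; the blank value is illustrative.
sample = [
    "precisions: 0.7236623191454734",
    "time_taken_from_start:",
    "auc_score: 0.7457100293916334",
]
result = parse_result_lines(sample)
print(result)
```

Formatting a `None` entry with `:.2f` raises a `TypeError`, so a caller would need to check for `None` before building the text output.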