Spaces:

Running on CPU Upgrade

rahulvenkk committed commit 6dfcb0f (parent: 0ba04fa)

app.py updated

This view is limited to 50 files because it contains too many changes.

- .gitattributes +4 -0

- .gitignore +3 -0

- README.md +47 -14

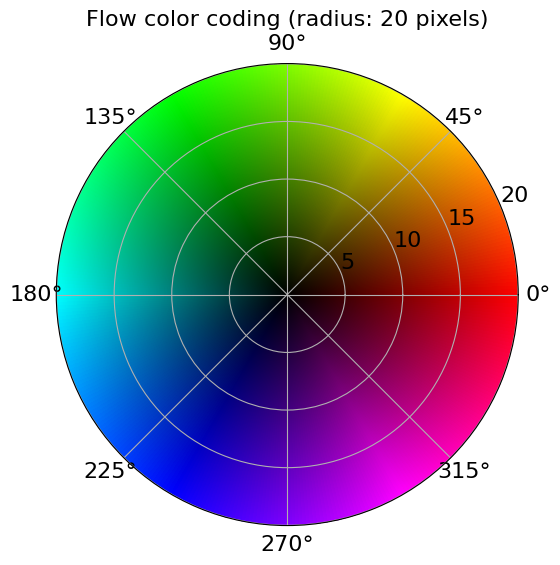

- assets/color_wheel.png +0 -0

- assets/cwm_teaser.gif +3 -0

- assets/desk_1.jpg +3 -0

- assets/flow_test_videos/libby.mp4 +3 -0

- assets/flow_test_videos/weight_lifter.mp4 +3 -0

- cwm/__init__.py +0 -0

- cwm/data/__init__.py +0 -0

- cwm/data/dataset.py +453 -0

- cwm/data/dataset_utils.py +73 -0

- cwm/data/masking_generator.py +86 -0

- cwm/data/transforms.py +206 -0

- cwm/data/video_file_lists/kinetics_400_train_list.txt +3 -0

- cwm/data/video_file_lists/kinetics_400_train_list_sing.txt +3 -0

- cwm/engine_for_pretraining.py +92 -0

- cwm/eval/Action_recognition/__init__.py +0 -0

- cwm/eval/Flow/__init__.py +0 -0

- cwm/eval/Flow/create_spring_submission_parallel.sh +36 -0

- cwm/eval/Flow/create_spring_submission_unified.py +111 -0

- cwm/eval/Flow/flow_extraction_classes.py +122 -0

- cwm/eval/Flow/flow_utils.py +569 -0

- cwm/eval/Flow/flow_utils_legacy.py +152 -0

- cwm/eval/Flow/generator.py +579 -0

- cwm/eval/Flow/losses.py +60 -0

- cwm/eval/Flow/masking_flow.py +375 -0

- cwm/eval/Flow/vis_utils.py +150 -0

- cwm/eval/IntPhys/__init__.py +0 -0

- cwm/eval/Physion/__init__.py +0 -0

- cwm/eval/Physion/feature_extractor.py +317 -0

- cwm/eval/Physion/flow_utils.py +279 -0

- cwm/eval/Physion/run_eval.sh +17 -0

- cwm/eval/Physion/run_eval_kfflow.sh +18 -0

- cwm/eval/Physion/run_eval_mp4s.sh +19 -0

- cwm/eval/Physion/run_eval_mp4s_keyp.sh +17 -0

- cwm/eval/Physion/run_eval_mp4s_keyp_flow.sh +17 -0

- cwm/eval/Segmentation/__init__.py +0 -0

- cwm/eval/Segmentation/archive/__init__.py +0 -0

- cwm/eval/Segmentation/archive/common/__init__.py +0 -0

- cwm/eval/Segmentation/archive/common/coco_loader_lsj.py +222 -0

- cwm/eval/Segmentation/archive/common/convert_cwm_checkpoint_detectron_format.py +19 -0

- cwm/eval/Segmentation/archive/common/convert_cwm_checkpoint_detectron_format_v2.py +54 -0

- cwm/eval/Segmentation/archive/common/convert_dino_checkpoint_detectron_format.py +26 -0

- cwm/eval/Segmentation/archive/competition.py +673 -0

- cwm/eval/Segmentation/archive/configs/__init__.py +0 -0

- cwm/eval/Segmentation/archive/configs/mask_rcnn_cwm_vitdet_b_100ep.py +56 -0

- cwm/eval/Segmentation/archive/configs/mask_rcnn_vitdet_b_100ep.py +59 -0

- cwm/eval/Segmentation/archive/configs/mask_rcnn_vitdet_b_100ep_dino.py +56 -0

- cwm/eval/Segmentation/archive/configs/mask_rcnn_vitdet_b_100ep_v2.py +59 -0

.gitattributes

CHANGED

```diff
@@ -33,3 +33,7 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+*.jpg filter=lfs diff=lfs merge=lfs -text
+*.txt filter=lfs diff=lfs merge=lfs -text
+*.gif filter=lfs diff=lfs merge=lfs -text
+*.mp4 filter=lfs diff=lfs merge=lfs -text
```
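This commit adds four patterns, and `*.txt` is the least obvious of them: it moves the Kinetics-400 file lists into LFS as well. Those lists show `+3 -0` in the file list, which is the size of an LFS pointer. As a rough check, the sketch below approximates gitattributes matching with Python's `fnmatch` applied to the base name. That approximation holds for slash-free patterns like these, because Git matches such a pattern against the file name at any depth. `tracked_by_new_patterns` is a hypothetical helper, not part of the repository, and it ignores lines 1 to 32 of `.gitattributes`, which this view does not show.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# The four patterns this commit appends to .gitattributes
NEW_LFS_PATTERNS = ["*.jpg", "*.txt", "*.gif", "*.mp4"]


def tracked_by_new_patterns(path):
    """Rough check: a slash-free pattern is matched against the base name only."""
    name = PurePosixPath(path).name
    return any(fnmatchcase(name, pattern) for pattern in NEW_LFS_PATTERNS)


for path in ["assets/cwm_teaser.gif",
             "assets/desk_1.jpg",
             "assets/flow_test_videos/libby.mp4",
             "cwm/data/video_file_lists/kinetics_400_train_list.txt",
             "assets/color_wheel.png",
             "cwm/data/dataset.py"]:
    print(path, tracked_by_new_patterns(path))
```

The PNG and the Python source are not matched by the new patterns.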

.gitignore

ADDED

```diff
@@ -0,0 +1,3 @@
+.idea
+
+.pth
```

README.md

CHANGED

```diff
@@ -1,14 +1,47 @@
-
-
-
-
-
-
-
-
-
-
-
-
-
-
+<div align="center">
+<h2>Understanding Physical Dynamics with Counterfactual World Modeling</h2>
+
+[**Rahul Venkatesh***](https://rahulvenkk.github.io/)<sup>1</sup> · [**Honglin Chen***](https://web.stanford.edu/~honglinc/)<sup>1*</sup> · [**Kevin Feigelis***](https://neuroscience.stanford.edu/people/kevin-t-feigelis)<sup>1</sup> · [**Daniel M. Bear**](https://twitter.com/recursus?lang=en)<sup>1</sup> · [**Khaled Jedoui**](https://web.stanford.edu/~thekej/)<sup>1</sup> · [**Klemen Kotar**](https://klemenkotar.github.io/)<sup>1</sup> · [**Felix Binder**](https://ac.felixbinder.net/)<sup>2</sup> · [**Wanhee Lee**](https://www.linkedin.com/in/wanhee-lee-31102820b/)<sup>1</sup> · [**Sherry Liu**](https://neuroailab.github.io/cwm-physics/)<sup>1</sup> · [**Kevin A. Smith**](https://www.mit.edu/~k2smith/)<sup>3</sup> · [**Judith E. Fan**](https://cogtoolslab.github.io/)<sup>1</sup> · [**Daniel L. K. Yamins**](https://stanford.edu/~yamins/)<sup>1</sup>
+
+(* equal contribution)
+
+<sup>1</sup>Stanford    <sup>2</sup>UCSD    <sup>3</sup>MIT
+
+
+
+
+<a href="https://arxiv.org/abs/2312.06721"><img src='https://img.shields.io/badge/arXiv-CWM-red' alt='Paper PDF'></a>
+<a href='https://neuroailab.github.io/cwm-physics/'><img src='https://img.shields.io/badge/Project_Page-CWM-green' alt='Project Page'></a>
+<a href='https://neuroailab.github.io/cwm-physics/'><img src='https://img.shields.io/badge/%F0%9F%A4%97%20Hugging%20Face-Spaces-blue'></a>
+<a href='https://neuroailab.github.io/cwm-physics/'><img src='https://img.shields.io/badge/%F0%9F%A4%97%20Hugging%20Face-Colab-yellow'></a>
+</div>
+
+This work presents the Counterfactual World Modeling (CWM) framework. CWM is capable of counterfactual prediction and extraction of vision structures useful for understanding physical dynamics.
+
+
+
+## 📣 News
+
+- 2024-06-01: Released [project page](https://neuroailab.github.io) and [code](https://github.com/rahulvenkk/cwm_release.git)
+
+## 🔨 Installation
+
+```
+git clone https://github.com/rahulvenkk/cwm_release.git
+pip install -e .
+```
+
+## ✨ Usage
+To download and use a pre-trained model, run the following:
+```
+from cwm.model.model_factory import model_factory
+model = model_factory.load_model('vitbase_8x8patch_3frames_1tube')
+```
+This will automatically initialize the appropriate model class and download the specified weights to your `$CACHE` directory.
+
+## 🔄 Pre-training
+To train the model, run the following script:
+
+```
+./scripts/pretrain/3frame_patch8x8_mr0.90_gpu.sh
+```
```

assets/color_wheel.png

ADDED

assets/cwm_teaser.gif

ADDED (stored with Git LFS)

assets/desk_1.jpg

ADDED (stored with Git LFS)

assets/flow_test_videos/libby.mp4

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:1d1887bc796d1883e8a63405398125476257572c0c8d7f1862bf309e422b4828
+size 671950
```

assets/flow_test_videos/weight_lifter.mp4

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:525988b314936079f904236c614e2f987ba64fd5c69f4f12dd5b6b9076311854
+size 1176790
```
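Both MP4 entries are Git LFS pointer files rather than video data. Each pointer has three `key value` lines: the spec `version`, the SHA-256 `oid` of the real object, and its `size` in bytes (671950 and 1176790 here). The sketch below produces and reads back such a pointer. It assumes the v1 layout shown above, with `version` first and the remaining keys in alphabetical order. Both helper names are hypothetical.

```python
import hashlib

LFS_SPEC = "https://git-lfs.github.com/spec/v1"


def make_lfs_pointer(data: bytes) -> str:
    """Build a v1 pointer for `data` (hypothetical helper)."""
    oid = hashlib.sha256(data).hexdigest()
    # version first, then oid and size, one "key value" pair per line
    return f"version {LFS_SPEC}\noid sha256:{oid}\nsize {len(data)}\n"


def parse_lfs_pointer(text: str) -> dict:
    """Split a pointer back into its key/value fields (hypothetical helper)."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    return fields


pointer = make_lfs_pointer(b"hello")
print(parse_lfs_pointer(pointer)["size"])  # 5
```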

cwm/__init__.py

ADDED

File without changes

cwm/data/__init__.py

ADDED

File without changes

cwm/data/dataset.py

ADDED

@@ -0,0 +1,453 @@

```python
import os
import decord
import numpy as np
import torch
from PIL import Image
from torch.utils.data import Dataset
from torchvision import transforms


class VideoMAE(torch.utils.data.Dataset):
    """Load your own video classification dataset.
    Parameters
    ----------
    root : str, required.
        Path to the root folder storing the dataset.
    setting : str, required.
        A text file describing the dataset, one video sample per line.
        Each line holds (1) the video path and (2) the integer video label, separated by a space;
        the last token is the label, so the path may contain spaces.
    train : bool, default True.
        Whether to load the training or validation set.
    test_mode : bool, default False.
        Whether to perform evaluation on the test set.
        Usually a three-crop or ten-crop evaluation strategy is involved.
    name_pattern : str, default 'img_%05d.jpg'.
        The naming pattern of the decoded video frames.
        For example, img_00012.jpg.
    video_ext : str, default 'mp4'.
        If video_loader is set to True, please specify the video format accordingly.
    is_color : bool, default True.
        Whether the loaded image is color or grayscale.
    modality : str, default 'rgb'.
        Input modality; only RGB video frames are supported for now.
        Will add support for rgb difference image and optical flow image later.
    num_segments : int, default 1.
        Number of segments to evenly divide the video into clips.
        A useful technique to obtain global video-level information.
        Limin Wang et al., Temporal Segment Networks: Towards Good Practices for Deep Action Recognition, ECCV 2016.
    num_crop : int, default 1.
        Number of crops for each image.
        Common choices are three crops and ten crops during evaluation.
    new_length : int, default 1.
        The length of input video clip. Default is a single image, but it can be multiple video frames.
        For example, new_length=16 means we will extract a video clip of 16 consecutive frames.
    new_step : int, default 1.
        Temporal sampling rate. For example, new_step=1 means we will extract a video clip of consecutive frames.
        new_step=2 means we will extract a video clip of every other frame.
    temporal_jitter : bool, default False.
        Whether to temporally jitter if new_step > 1.
    video_loader : bool, default False.
        Whether to use video loader to load data.
    use_decord : bool, default False.
        Whether to use Decord video loader to load data. Otherwise use mmcv video loader.
    transform : function, default None.
        A function that takes data and label and transforms them.
    data_aug : str, default 'v1'.
        Different types of data augmentation. Supports v1, v2, v3 and v4.
    lazy_init : bool, default False.
        If set to True, build a dataset instance without loading any dataset.
    """

    def __init__(self,
                 root,
                 setting,
                 train=True,
                 test_mode=False,
                 name_pattern='img_%05d.jpg',
                 video_ext='mp4',
                 is_color=True,
                 modality='rgb',
                 num_segments=1,
                 num_crop=1,
                 new_length=1,
                 new_step=1,
                 randomize_interframes=False,
                 transform=None,
                 temporal_jitter=False,
                 video_loader=False,
                 use_decord=False,
                 lazy_init=False,
                 is_video_dataset=True):

        super(VideoMAE, self).__init__()
        self.root = root
        self.setting = setting
        self.train = train
        self.test_mode = test_mode
        self.is_color = is_color
        self.modality = modality
        self.num_segments = num_segments
        self.num_crop = num_crop
        self.new_length = new_length

        self.randomize_interframes = randomize_interframes
        self._new_step = new_step  # If randomize_interframes is True, then this is the max, otherwise it's just the skip
        # self._skip_length = self.new_length * self.new_step  # If randomize_interframes is True, then this isn't used, otherwise it's used as calculated
        self.temporal_jitter = temporal_jitter
        self.name_pattern = name_pattern
        self.video_loader = video_loader
        self.video_ext = video_ext
        self.use_decord = use_decord
        self.transform = transform
        self.lazy_init = lazy_init

        if (not self.lazy_init) and is_video_dataset:
            self.clips = self._make_dataset(root, setting)
            if len(self.clips) == 0:
                raise (RuntimeError("Found 0 video clips in subfolders of: " + root + "\n"
                                    "Check your data directory (opt.data-dir)."))

    def __getitem__(self, index):

        directory, target = self.clips[index]

        if self.video_loader:
            if '.' in directory.split('/')[-1]:
                # data in the "setting" file already have extension, e.g., demo.mp4
                video_name = directory
            else:
                # data in the "setting" file do not have extension, e.g., demo
                # So we need to provide extension (i.e., .mp4) to complete the file name.
                video_name = '{}.{}'.format(directory, self.video_ext)

            try:
                decord_vr = decord.VideoReader(video_name, num_threads=1)
            except:
                # return video_name
                return (self.__getitem__(index + 1))
            duration = len(decord_vr)

        segment_indices, skip_offsets, new_step, skip_length = self._sample_train_indices(duration)

        images = self._video_TSN_decord_batch_loader(directory, decord_vr, duration, segment_indices, skip_offsets,
                                                     new_step, skip_length)

        process_data, mask = self.transform((images, None))  # T*C,H,W
        process_data = process_data.view((self.new_length, 3) + process_data.size()[-2:]).transpose(0, 1)  # T*C,H,W -> T,C,H,W -> C,T,H,W

        return (process_data, mask)

    def __len__(self):
        return len(self.clips)

    def _make_dataset(self, directory, setting):
        if not os.path.exists(setting):
            raise (RuntimeError("Setting file %s doesn't exist. Check opt.train-list and opt.val-list. " % (setting)))
        clips = []
        with open(setting) as split_f:
            data = split_f.readlines()
            for line in data:
                line_info = line.split(' ')
                # line format: video_path video_label (the path may contain spaces)
                if len(line_info) < 2:
                    raise (RuntimeError('Video input format is not correct, missing one or more element. %s' % line))
                elif len(line_info) > 2:
                    line_info = (' '.join(line_info[:-1]), line_info[-1])  # filename has spaces
                clip_path = os.path.join(line_info[0])
                target = int(line_info[1])
                item = (clip_path, target)
                clips.append(item)
        # import torch_xla.core.xla_model as xm
        # print = xm.master_print
        # print("Dataset created. Number of clips: ", len(clips))
        return clips
```
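`_make_dataset` splits each line of the setting file on single spaces. It treats the last token as the integer label and everything before it as the path. The standalone sketch below mirrors that parsing. `parse_setting_line` is a hypothetical name, and the sample lines are made up for illustration.

```python
def parse_setting_line(line):
    """Standalone mirror of the per-line parsing in VideoMAE._make_dataset."""
    line_info = line.split(' ')
    if len(line_info) < 2:
        raise RuntimeError('Video input format is not correct, missing one or more element. %s' % line)
    elif len(line_info) > 2:
        # everything before the last token is the path, so paths may contain spaces
        line_info = (' '.join(line_info[:-1]), line_info[-1])
    # int() tolerates the trailing newline left by readlines()
    return line_info[0], int(line_info[1])


print(parse_setting_line("videos/abc.mp4 3\n"))      # ('videos/abc.mp4', 3)
print(parse_setting_line("videos/my clip.mp4 7\n"))  # ('videos/my clip.mp4', 7)
print(parse_setting_line("videos/abc.mp4 300 5\n"))  # ('videos/abc.mp4 300', 5)
```

The last call shows why the two-column format matters. A `path length label` line would not raise an error; it would silently fold the length into the path.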

```python
    def _sample_train_indices(self, num_frames):
        if self.randomize_interframes is False:
            new_step = self._new_step
        else:
            new_step = np.random.randint(1, self._new_step + 1)

        skip_length = self.new_length * new_step

        average_duration = (num_frames - skip_length + 1) // self.num_segments
        if average_duration > 0:
            offsets = np.multiply(list(range(self.num_segments)),
                                  average_duration)
            offsets = offsets + np.random.randint(average_duration,
                                                  size=self.num_segments)
        elif num_frames > max(self.num_segments, skip_length):
            offsets = np.sort(np.random.randint(
                num_frames - skip_length + 1,
                size=self.num_segments))
        else:
            offsets = np.zeros((self.num_segments,))

        if self.temporal_jitter:
            skip_offsets = np.random.randint(
                new_step, size=skip_length // new_step)
        else:
            skip_offsets = np.zeros(
                skip_length // new_step, dtype=int)
        return offsets + 1, skip_offsets, new_step, skip_length

    def _video_TSN_decord_batch_loader(self, directory, video_reader, duration, indices, skip_offsets, new_step,
                                       skip_length):
        sampled_list = []
        frame_id_list = []
        for seg_ind in indices:
            offset = int(seg_ind)
            for i, _ in enumerate(range(0, skip_length, new_step)):
                if offset + skip_offsets[i] <= duration:
                    frame_id = offset + skip_offsets[i] - 1
                else:
                    frame_id = offset - 1
                frame_id_list.append(frame_id)
                if offset + new_step < duration:
                    offset += new_step
        try:
            video_data = video_reader.get_batch(frame_id_list).asnumpy()
            sampled_list = [Image.fromarray(video_data[vid, :, :, :]).convert('RGB') for vid, _ in
                            enumerate(frame_id_list)]
        except:
            raise RuntimeError(
                'Error occurred in reading frames {} from video {} of duration {}.'.format(frame_id_list, directory,
                                                                                          duration))
        return sampled_list
```
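`_sample_train_indices` and the loader loop follow the Temporal Segment Networks recipe:

- split the usable range into `num_segments` equal chunks;
- pick one random start per chunk;
- read `new_length` frames spaced `new_step` apart from each start.

The sketch below implements that pipeline with only the standard library and without temporal jitter. `sample_tsn_frame_ids` is a hypothetical name. It uses `random` in place of `np.random` but keeps the same ranges.

```python
import random


def sample_tsn_frame_ids(num_frames, num_segments, new_length, new_step, rng=random):
    """Stdlib sketch of _sample_train_indices plus the frame-id loop in
    _video_TSN_decord_batch_loader, with temporal_jitter=False."""
    skip_length = new_length * new_step  # frames spanned by one clip
    average_duration = (num_frames - skip_length + 1) // num_segments
    if average_duration > 0:
        # one random start inside each of num_segments equal chunks
        offsets = [i * average_duration + rng.randrange(average_duration)
                   for i in range(num_segments)]
    elif num_frames > max(num_segments, skip_length):
        # chunks too small: draw sorted starts from the whole valid range
        offsets = sorted(rng.randrange(num_frames - skip_length + 1)
                         for _ in range(num_segments))
    else:
        # video shorter than one clip: start every segment at frame 0
        offsets = [0] * num_segments

    frame_ids = []
    for seg_ind in offsets:
        offset = seg_ind + 1  # the original returns 1-based offsets
        for _ in range(0, skip_length, new_step):
            frame_ids.append(offset - 1)  # 0-based index for get_batch
            if offset + new_step < num_frames:
                offset += new_step  # stop advancing near the end of the video
    return frame_ids


print(sample_tsn_frame_ids(100, 1, 3, 2, random.Random(0)))  # three ids, 2 frames apart
print(sample_tsn_frame_ids(3, 1, 3, 2))                      # [0, 0, 0]
```

The `+ 1` in the returned offsets and the `- 1` in the loader cancel out: segment offsets stay 1-based as in the original, while decord's `get_batch` receives 0-based indices. When the video is shorter than one clip, the offsets collapse to zero and the loop stops advancing, so the clip repeats frames instead of reading past the end.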

```python
class ContextAndTargetVideoDataset(VideoMAE):
    """
    A video dataset whose provided videos consist of (1) a "context" sequence of length Tc
    and (2) a "target" sequence of length Tt.

    These two sequences have the same frame rate (specifiable in real units) but are
    separated by a specified gap (which may vary between examples).

    The main use case is for training models to predict ahead by some variable amount,
    given the context.
    """

    standard_fps = [12, 24, 30, 48, 60, 100]

    def __init__(self,
                 root,
                 setting,
                 train=True,
                 test_mode=False,
                 transform=None,
                 step_units='ms',
                 new_step=150,
                 start_frame=0,
                 context_length=2,
                 target_length=1,
                 channels_first=True,
                 generate_masks=True,
                 mask_generator=None,
                 context_target_gap=[400, 600],
                 normalize_timestamps=True,
                 default_fps=30,
                 min_fps=0.1,
                 seed=0,
                 *args,
                 **kwargs):
        super(ContextAndTargetVideoDataset, self).__init__(
            root=root,
            setting=setting,
            train=train,
            test_mode=test_mode,
            transform=transform,
            new_length=context_length,
            use_decord=True,
            lazy_init=False,
            video_loader=True,
            *args, **kwargs)

        # breakpoint()

        self.context_length = self.new_length
        self.target_length = target_length

        ## convert from fps and step size to frames
        self._fps = None
        self._min_fps = min_fps
        self._default_fps = default_fps
        self._step_units = step_units
        self.new_step = new_step

        ## sampling for train and test
        self._start_frame = start_frame
        self.gap = context_target_gap
        self.seed = seed
        self.rng = np.random.RandomState(seed=seed)

        # breakpoint()

        ## output formatting
        self._channels_first = channels_first
        self._normalize_timestamps = normalize_timestamps
        self._generate_masks = generate_masks
        self.mask_generator = mask_generator

    def _get_frames_per_t(self, t):
        if self._step_units == 'frames' or (self._step_units is None):
            return int(t)

        assert self._fps is not None
        t_per_frame = 1 / self._fps
        if self._step_units in ['ms', 'milliseconds']:
            t_per_frame *= 1000.0

        return max(int(np.round(t / t_per_frame)), 1)

    @property
    def new_step(self):
        if self._fps is None:
            return None
        else:
            return self._get_frames_per_t(self._new_step)

    @new_step.setter
    def new_step(self, v):
        self._new_step = v

    @property
    def gap(self):
        if self._fps is None:
            return [1, 2]
        else:
            gap = [self._get_frames_per_t(self._gap[0]),
                   self._get_frames_per_t(self._gap[1])]
            gap[1] = max(gap[1], gap[0] + 1)
            return gap

    @gap.setter
    def gap(self, v):
        if v is None:
            v = self._new_step
        if not isinstance(v, (list, tuple)):
            v = [v, v]
        self._gap = v
```
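With the default `step_units='ms'`, `new_step` and `context_target_gap` are stored in milliseconds. The `new_step` and `gap` properties convert them to frame counts through `_get_frames_per_t`, and `gap` also forces the upper bound above the lower one. The sketch below copies that arithmetic as two standalone functions; their names are hypothetical. One caveat, reading the code as written: with `step_units='frames'`, `_fps` stays `None`, so `new_step` returns `None` and `gap` returns `[1, 2]` whatever the arguments. That path therefore appears unusable, and the sketch covers only the time-based case.

```python
def frames_per_t(t, fps, step_units="ms"):
    """Standalone copy of the arithmetic in _get_frames_per_t."""
    if step_units == "frames" or step_units is None:
        return int(t)
    t_per_frame = 1 / fps  # seconds per frame
    if step_units in ["ms", "milliseconds"]:
        t_per_frame *= 1000.0  # milliseconds per frame
    # built-in round() rounds halves to even, like np.round; never below 1 frame
    return max(int(round(t / t_per_frame)), 1)


def gap_in_frames(gap_t, fps, step_units="ms"):
    """Mirror of the `gap` property: convert both bounds, keep hi > lo."""
    lo = frames_per_t(gap_t[0], fps, step_units)
    hi = frames_per_t(gap_t[1], fps, step_units)
    return [lo, max(hi, lo + 1)]


print(gap_in_frames([400, 600], 30))  # [12, 18]
print(gap_in_frames([400, 600], 24))  # [10, 14]
print(gap_in_frames([10, 10], 30))    # [1, 2]
```

During training, `_get_step_and_gap` draws the gap with `RandomState.randint(lo, hi)`, whose upper bound is exclusive. At 30 fps, `[12, 18]` therefore yields gaps of 12 to 17 frames, and evaluation uses `(12 + 18) // 2 = 15`.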

```python
    def _get_video_name(self, directory):
        if ''.join(['.', self.video_ext]) in directory.split('/')[-1]:
            # data in the "setting" file has extension, e.g. demo.mp4
            video_name = directory
        else:
            # data doesn't have an extension
            video_name = '{}.{}'.format(directory, self.video_ext)
        return video_name

    def _set_fps(self, reader):
        """Snap fps to a standard frame rate."""
        if self._step_units == 'frames' or self._step_units is None:
            self._fps = None
        else:
            self._fps = None
            fps = reader.get_avg_fps()
            for st in self.standard_fps:
                if (int(np.floor(fps)) == st) or (int(np.ceil(fps)) == st):
                    self._fps = st
            if self._fps is None:
                self._fps = int(np.round(fps))

            if self._fps < self._min_fps:
                self._fps = self._default_fps
```
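`_set_fps` snaps the reader's average frame rate to an entry in `standard_fps` when that entry equals the rate's floor or ceiling. Otherwise it rounds the rate to the nearest integer, and a result below `min_fps` falls back to `default_fps`. The sketch below is a standalone copy of that logic for time-based step units. `snap_fps` is a hypothetical name, and it uses `math` in place of NumPy.

```python
import math

STANDARD_FPS = [12, 24, 30, 48, 60, 100]  # same list as ContextAndTargetVideoDataset.standard_fps


def snap_fps(avg_fps, min_fps=0.1, default_fps=30):
    """Standalone copy of _set_fps for time-based step units."""
    fps = None
    for st in STANDARD_FPS:
        # a standard rate is chosen if it equals floor(avg_fps) or ceil(avg_fps)
        if math.floor(avg_fps) == st or math.ceil(avg_fps) == st:
            fps = st
    if fps is None:
        fps = int(round(avg_fps))  # otherwise fall back to the nearest integer
    if fps < min_fps:
        fps = default_fps  # e.g. the reader reported 0 fps
    return fps


for reported in (29.97, 23.976, 59.94, 25.0, 0.0):
    print(reported, "->", snap_fps(reported))
```

NTSC-style rates such as 29.97 and 23.976 snap to 30 and 24, while 25 fps is kept as 25 because it is not in the list. The fallback rounds to an integer, so any reported rate below 0.5 fps becomes 0 and is replaced by `default_fps`.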

```python
    def _get_step_and_gap(self):
        step = self.new_step
        if self.randomize_interframes and self.train:
            step = self.rng.randint(1, step + 1)

        if self.train:
            gap = self.rng.randint(*self.gap)
        else:
            gap = sum(self.gap) // 2
        return (step, gap)

    def _sample_frames(self):
        step, gap = self._get_step_and_gap()

        ## compute total length of sample
        ## e.g. if context_length = 2, step = 1, gap = 10, target_length = 2:
        ## total_length = 2 * 1 + 10 + (2 - 1) * 1 = 13
        ## so len(video) must be >= 13
        self._total_length = self.context_length * step + gap + (self.target_length - 1) * step
        if self._total_length > (self._num_frames - self._start_frame):
            if self.train:
                return None
            else:
                raise ValueError(
                    "movie of length %d starting at fr=%d is too long for video of %d frames" % \
                    (self._total_length, self._start_frame, self._num_frames))

        ## sample the frames randomly (if training) or from the start frame (if test)
        if self.train:
            self.start_frame_now = self.rng.randint(
                min(self._start_frame, self._num_frames - self._total_length),
                self._num_frames - self._total_length + 1)
        else:
            self.start_frame_now = min(self._start_frame, self._num_frames - self._total_length)

        frames = [self.start_frame_now + i * step for i in range(self.context_length)]
        frames += [frames[-1] + gap + i * step for i in range(self.target_length)]

        # breakpoint()

        return frames
```
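`_sample_frames` proceeds in three steps:

- lay out `context_length` frames spaced `step` apart;
- jump `gap` frames;
- lay out `target_length` more frames spaced `step` apart.

`_total_length` is the span of frames this layout needs. The sketch below mirrors that arithmetic. `sample_context_target_frames` is a hypothetical name. Python's `random.randint` is inclusive on both ends, so it stands in for `RandomState.randint`, whose upper bound is exclusive.

```python
import random


def sample_context_target_frames(num_frames, context_length, target_length, step, gap,
                                 start_frame=0, train=True, rng=random):
    """Standalone sketch of ContextAndTargetVideoDataset._sample_frames."""
    total_length = context_length * step + gap + (target_length - 1) * step
    if total_length > num_frames - start_frame:
        if train:
            return None  # the dataset then retries with the next index
        raise ValueError("movie of length %d starting at fr=%d is too long for video of %d frames"
                         % (total_length, start_frame, num_frames))
    latest_start = num_frames - total_length
    if train:
        # inclusive randint matches RandomState.randint(low, latest_start + 1)
        start = rng.randint(min(start_frame, latest_start), latest_start)
    else:
        start = min(start_frame, latest_start)
    frames = [start + i * step for i in range(context_length)]
    frames += [frames[-1] + gap + i * step for i in range(target_length)]
    return frames


# The worked example from the code comment, on a 13-frame video in evaluation mode
print(sample_context_target_frames(13, 2, 2, step=1, gap=10, train=False))  # [0, 1, 11, 12]
```

The last frame index is `start + total_length - step`, so every sampled index stays inside the video whenever the length check passes.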

| 401 |

+

def _decode_frame_images(self, reader, frames):

|

| 402 |

+

try:

|

| 403 |

+

video_data = reader.get_batch(frames).asnumpy()

|

| 404 |

+

video_data = [Image.fromarray(video_data[t, :, :, :]).convert('RGB')

|

| 405 |

+

for t, _ in enumerate(frames)]

|

| 406 |

+

except:

|

| 407 |

+

raise RuntimeError(

|

| 408 |

+

"Error occurred in reading frames {} from video {} of duration {}".format(

|

| 409 |

+

frames, self.index, self._num_frames))

|

| 410 |

+

return video_data

|

| 411 |

+

|

| 412 |

+

def __getitem__(self, index):

|

| 413 |

+

|

| 414 |

+

self.index = index

|

| 415 |

+

self.directory, target = self.clips[index]

|

| 416 |

+

|

| 417 |

+

self.video_name = self._get_video_name(self.directory)

|

| 418 |

+

|

| 419 |

+

## build decord loader

|

| 420 |

+

try:

|

| 421 |

+

decord_vr = decord.VideoReader(self.video_name, num_threads=1)

|

| 422 |

+

self._set_fps(decord_vr)

|

| 423 |

+

except:

|

| 424 |

+

# return self.video_name

|

| 425 |

+

return (self.__getitem__(index + 1))

|

| 426 |

+

|

| 427 |

+

## sample the video

|

| 428 |

+

self._num_frames = len(decord_vr)

|

| 429 |

+

self.frames = self._sample_frames()

|

| 430 |

+

if self.frames is None:

|

| 431 |

+

print("no movie of length %d for video idx=%d" % (self._total_length, self.index))

|

| 432 |

+

return self.__getitem__(index + 1)

|

| 433 |

+

|

| 434 |

+

## decode to PIL.Image

|

| 435 |

+

image_list = self._decode_frame_images(decord_vr, self.frames)

|

| 436 |

+

|

| 437 |

+

## postproc to torch.Tensor and mask generation

|

| 438 |

+

if self.transform is None:

|

| 439 |

+

image_tensor = torch.stack([transforms.ToTensor()(img) for img in image_list], 0)

|

| 440 |

+

else:

|

| 441 |

+

image_tensor = self.transform((image_list, None))

|

| 442 |

+

|

| 443 |

+

image_tensor = image_tensor.view(self.context_length + self.target_length, 3, *image_tensor.shape[-2:])

|

| 444 |

+

|

| 445 |

+

## VMAE expects [B,C,T,H,W] rather than [B,T,C,H,W]

|

| 446 |

+

if self._channels_first:

|

| 447 |

+

image_tensor = image_tensor.transpose(0, 1)

|

| 448 |

+

|

| 449 |

+

if self._generate_masks and self.mask_generator is not None:

|

| 450 |

+

mask = self.mask_generator()

|

| 451 |

+

return image_tensor, mask.bool()

|

| 452 |

+

else:

|

| 453 |

+

return image_tensor
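The tail of `_sample_frames` above lays out `context_length` frames spaced `step` apart, then `target_length` frames starting `gap` frames after the last context frame. The standalone sketch below reproduces that layout. The helper name `sample_frame_indices` is hypothetical, and the formula for `total_length` (and returning `None` when the video is too short) is an assumption, since `self._total_length` is computed outside this excerpt.

```python
def sample_frame_indices(num_frames, start_frame, context_length, target_length, step, gap):
    """Hypothetical helper mirroring the context/target frame layout of _sample_frames.

    Assumption: total_length is the inclusive span from the first context frame
    to the last target frame; None signals a video that is too short.
    """
    total_length = (context_length - 1) * step + gap + (target_length - 1) * step + 1
    if total_length > num_frames:
        return None
    # Clamp the start so the whole context + target window fits in the video.
    start = min(start_frame, num_frames - total_length)
    frames = [start + i * step for i in range(context_length)]
    frames += [frames[-1] + gap + i * step for i in range(target_length)]
    return frames


print(sample_frame_indices(100, 0, 2, 1, 4, 8))   # context at 0 and 4, target at 12
print(sample_frame_indices(100, 95, 2, 1, 4, 8))  # start clamped so the last index stays < 100
```

Clamping the start rather than rejecting late start frames keeps every long-enough video usable, at the cost of biasing samples toward the end of the clip.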

cwm/data/dataset_utils.py

ADDED

@@ -0,0 +1,73 @@

import torch
from torchvision import transforms
from cwm.data.transforms import *
from cwm.data.dataset import ContextAndTargetVideoDataset
from timm.data.constants import IMAGENET_DEFAULT_MEAN, IMAGENET_DEFAULT_STD
from cwm.data.masking_generator import RotatedTableMaskingGenerator

class DataAugmentationForVideoMAE(object):
    def __init__(self, augmentation_type, input_size, augmentation_scales):

        transform_list = []

        self.scale = GroupScale(input_size)
        transform_list.append(self.scale)

        if augmentation_type == 'multiscale':
            self.train_augmentation = GroupMultiScaleCrop(input_size, list(augmentation_scales))
        elif augmentation_type == 'center':
            self.train_augmentation = GroupCenterCrop(input_size)
        else:
            raise ValueError("Unknown augmentation_type: {}".format(augmentation_type))

        transform_list.extend([self.train_augmentation, Stack(roll=False), ToTorchFormatTensor(div=True)])

        # Normalize input images
        normalize = GroupNormalize(IMAGENET_DEFAULT_MEAN, IMAGENET_DEFAULT_STD)
        transform_list.append(normalize)

        self.transform = transforms.Compose(transform_list)

    def __call__(self, images):
        process_data, _ = self.transform(images)
        return process_data

    def __repr__(self):
        repr = "(DataAugmentationForVideoMAE,\n"
        repr += " transform = %s,\n" % str(self.transform)
        repr += ")"
        return repr


def build_pretraining_dataset(args):

    dataset_list = []
    data_transform = DataAugmentationForVideoMAE(args.augmentation_type, args.input_size, args.augmentation_scales)

    mask_generator = RotatedTableMaskingGenerator(
        input_size=args.mask_input_size,
        mask_ratio=args.mask_ratio,
        tube_length=args.tubelet_size,
        batch_size=args.batch_size,
        mask_type=args.mask_type
    )

    for data_path in [args.data_path] if args.data_path_list is None else args.data_path_list:
        dataset = ContextAndTargetVideoDataset(
            root=None,
            setting=data_path,
            video_ext='mp4',
            is_color=True,
            modality='rgb',
            context_length=args.context_frames,
            target_length=args.target_frames,
            step_units=args.temporal_units,
            new_step=args.sampling_rate,
            context_target_gap=args.context_target_gap,
            transform=data_transform,
            randomize_interframes=False,
            channels_first=True,
            temporal_jitter=False,
            train=True,
            mask_generator=mask_generator,
        )
        dataset_list.append(dataset)
    dataset = torch.utils.data.ConcatDataset(dataset_list)
    return dataset
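`DataAugmentationForVideoMAE` normalizes after `Stack` and `ToTorchFormatTensor`, so `GroupNormalize` sees all frames stacked along the channel axis and repeats the per-channel statistics once per frame; `__getitem__` then reshapes to `(T, 3, H, W)` with `.view(...)`. The NumPy sketch below illustrates that channel-stacked normalization. It assumes `Stack(roll=False)` concatenates RGB frames along the last axis (that class is defined later in `transforms.py`, outside this excerpt); `normalize_stacked` is a hypothetical name, and the constants are the standard ImageNet values that timm exposes as `IMAGENET_DEFAULT_MEAN`/`IMAGENET_DEFAULT_STD`.

```python
import numpy as np

# Standard ImageNet statistics (same values as timm's IMAGENET_DEFAULT_MEAN / _STD).
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def normalize_stacked(frames):
    """Hypothetical sketch: frames is a list of T arrays shaped (H, W, 3) in [0, 1].

    Stacks frames channel-wise into (3*T, H, W), normalizes with the mean/std
    tuples repeated T times (as GroupNormalize does), then reshapes to (T, 3, H, W).
    """
    stacked = np.concatenate(frames, axis=2).transpose(2, 0, 1)  # (3*T, H, W)
    t = stacked.shape[0] // len(IMAGENET_MEAN)
    mean = np.array(IMAGENET_MEAN * t)[:, None, None]  # tuple repetition, like rep_mean
    std = np.array(IMAGENET_STD * t)[:, None, None]
    normalized = (stacked - mean) / std
    return normalized.reshape(t, 3, *normalized.shape[1:])


frames = [np.zeros((2, 2, 3)), np.ones((2, 2, 3))]
out = normalize_stacked(frames)
print(out.shape)  # (2, 3, 2, 2): frame order is preserved by the reshape
```

Repeating the tuples (`mean * t`) works because the channel axis is frame-major: channels `0..2` belong to frame 0, `3..5` to frame 1, and so on.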

cwm/data/masking_generator.py

ADDED

@@ -0,0 +1,86 @@

import numpy as np
import torch

def get_tubes(masks_per_frame, tube_length):
    rp = torch.randperm(len(masks_per_frame))
    masks_per_frame = masks_per_frame[rp]

    tubes = [masks_per_frame]
    for x in range(tube_length - 1):
        masks_per_frame = masks_per_frame.clone()
        rp = torch.randperm(len(masks_per_frame))
        masks_per_frame = masks_per_frame[rp]
        tubes.append(masks_per_frame)

    tubes = torch.vstack(tubes)

    return tubes

class RotatedTableMaskingGenerator:
    def __init__(self,
                 input_size,
                 mask_ratio,
                 tube_length,
                 batch_size,
                 mask_type='rotated_table',
                 seed=None,
                 randomize_num_visible=False):

        self.batch_size = batch_size

        self.mask_ratio = mask_ratio
        self.tube_length = tube_length

        self.frames, self.height, self.width = input_size
        self.num_patches_per_frame = self.height * self.width
        self.total_patches = self.frames * self.num_patches_per_frame

        self.seed = seed
        self.randomize_num_visible = randomize_num_visible

        self.mask_type = mask_type

    def __repr__(self):
        repr_str = "Inverted Table Mask: total patches {}, tube length {}, randomize num visible? {}, seed {}".format(
            self.total_patches, self.tube_length, self.randomize_num_visible, self.seed
        )
        return repr_str

    def __call__(self, m=None):

        if self.mask_type == 'rotated_table_magvit':
            self.mask_ratio = np.random.uniform(low=0.0, high=1)
            self.mask_ratio = np.cos(self.mask_ratio * np.pi / 2)
        elif self.mask_type == 'rotated_table_maskvit':
            self.mask_ratio = np.random.uniform(low=0.5, high=1)

        all_masks = []
        for b in range(self.batch_size):

            self.num_masks_per_frame = max(0, int(self.mask_ratio * self.num_patches_per_frame))
            self.total_masks = self.tube_length * self.num_masks_per_frame

            num_masks = self.num_masks_per_frame

            if self.randomize_num_visible:
                # An assert on a non-empty string always passes, and self.rng is never set,
                # so fail explicitly until this path is implemented.
                raise NotImplementedError("randomize_num_visible is not implemented")

            if self.mask_ratio == 0:
                mask_per_frame = torch.hstack([
                    torch.zeros(self.num_patches_per_frame - num_masks),
                ])
            else:
                mask_per_frame = torch.hstack([
                    torch.zeros(self.num_patches_per_frame - num_masks),
                    torch.ones(num_masks),
                ])

            tubes = get_tubes(mask_per_frame, self.tube_length)
            top = torch.zeros(self.height * self.width).to(tubes.dtype)

            top = torch.tile(top, (self.frames - self.tube_length, 1))
            mask = torch.cat([top, tubes])
            mask = mask.flatten()
            all_masks.append(mask)
        return torch.stack(all_masks)
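`RotatedTableMaskingGenerator.__call__` leaves the first `frames - tube_length` frames fully visible and masks the same number of patches in each of the last `tube_length` frames, with positions reshuffled per frame by `get_tubes`. The NumPy sketch below mirrors that logic for a fixed `mask_ratio`, plus the cosine ratio draw used by the `'rotated_table_magvit'` mask type. The function names are hypothetical; drawing an independent permutation per frame is distributionally equivalent to `get_tubes` re-permuting the previous frame, and returning booleans matches the `.bool()` cast applied in `__getitem__`.

```python
import numpy as np


def rotated_table_mask(frames, height, width, tube_length, mask_ratio, batch_size, rng=None):
    """Hypothetical NumPy sketch of the rotated-table mask; 1/True means masked.

    Returns a (batch_size, frames * height * width) boolean array.
    """
    rng = np.random.default_rng() if rng is None else rng
    patches = height * width
    num_masks = max(0, int(mask_ratio * patches))
    base = np.concatenate([np.zeros(patches - num_masks), np.ones(num_masks)])
    masks = []
    for _ in range(batch_size):
        # Same mask count in every target frame, shuffled independently per frame.
        tubes = np.stack([rng.permutation(base) for _ in range(tube_length)])
        top = np.zeros((frames - tube_length, patches))  # fully visible context frames
        masks.append(np.concatenate([top, tubes]).reshape(-1))
    return np.stack(masks).astype(bool)


def magvit_mask_ratio(rng):
    """Cosine schedule for 'rotated_table_magvit': cos(u * pi / 2) with u ~ U(0, 1)."""
    return float(np.cos(rng.uniform(0.0, 1.0) * np.pi / 2))


rng = np.random.default_rng(0)
mask = rotated_table_mask(frames=4, height=2, width=3, tube_length=2,
                          mask_ratio=0.5, batch_size=3, rng=rng)
print(mask.shape)  # (3, 24)
```

Keeping the per-frame mask count fixed while shuffling positions means every target frame hides the same fraction of patches, but no patch location is guaranteed to stay hidden across the whole tube.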

cwm/data/transforms.py

ADDED

@@ -0,0 +1,206 @@

import torch
import torchvision.transforms.functional as F
import warnings
import random
import numpy as np
import torchvision
from PIL import Image, ImageOps
import numbers


class GroupRandomCrop(object):
    def __init__(self, size):
        if isinstance(size, numbers.Number):
            self.size = (int(size), int(size))
        else:
            self.size = size

    def __call__(self, img_tuple):
        img_group, label = img_tuple

        w, h = img_group[0].size
        th, tw = self.size

        out_images = list()

        x1 = random.randint(0, w - tw)
        y1 = random.randint(0, h - th)

        for img in img_group:
            assert(img.size[0] == w and img.size[1] == h)
            if w == tw and h == th:
                out_images.append(img)
            else:
                out_images.append(img.crop((x1, y1, x1 + tw, y1 + th)))

        return (out_images, label)


class GroupCenterCrop(object):
    def __init__(self, size):
        self.worker = torchvision.transforms.CenterCrop(size)

    def __call__(self, img_tuple):
        img_group, label = img_tuple
        return ([self.worker(img) for img in img_group], label)


class GroupNormalize(object):
    def __init__(self, mean, std):
        self.mean = mean
        self.std = std

    def __call__(self, tensor_tuple):
        tensor, label = tensor_tuple
        rep_mean = self.mean * (tensor.size()[0]//len(self.mean))
        rep_std = self.std * (tensor.size()[0]//len(self.std))

        # TODO: make efficient
        for t, m, s in zip(tensor, rep_mean, rep_std):
            t.sub_(m).div_(s)

        return (tensor,label)


class GroupGrayScale(object):
    def __init__(self, size):
        self.worker = torchvision.transforms.Grayscale(size)

    def __call__(self, img_tuple):
        img_group, label = img_tuple
        return ([self.worker(img) for img in img_group], label)


class GroupScale(object):
    """ Rescales the input PIL.Image to the given 'size'.
    'size' will be the size of the smaller edge.
    For example, if height > width, then image will be
    rescaled to (size * height / width, size)
    size: size of the smaller edge
    interpolation: Default: PIL.Image.BILINEAR
    """

    def __init__(self, size, interpolation=Image.BILINEAR):
        self.worker = torchvision.transforms.Resize(size, interpolation)

    def __call__(self, img_tuple):
        img_group, label = img_tuple
        return ([self.worker(img) for img in img_group], label)


class GroupMultiScaleCrop(object):

    def __init__(self, input_size, scales=None, max_distort=1, fix_crop=True, more_fix_crop=True):