Spaces:

Sleeping

Sleeping

Add files for midterm project

Browse files- =0.2.0 +29 -0

- =0.3 +0 -0

- BuildingAChainlitApp.md +312 -0

- Dockerfile +11 -0

- LICENSE +21 -0

- README.md +118 -11

- __pycache__/app.cpython-311.pyc +0 -0

- app.py +112 -0

- chainlit.md +3 -0

- classes/__pycache__/app_state.cpython-311.pyc +0 -0

- classes/app_state.py +81 -0

- images/docchain_img.png +0 -0

- old_app.py +145 -0

- public/custom_styles.css +129 -0

- rag_chain.ipynb +757 -0

- requirements.txt +13 -0

- utilities/__init__.py +0 -0

- utilities/__pycache__/__init__.cpython-311.pyc +0 -0

- utilities/__pycache__/debugger.cpython-311.pyc +0 -0

- utilities/__pycache__/rag_utilities.cpython-311.pyc +0 -0

- utilities/debugger.py +3 -0

- utilities/get_documents.py +33 -0

- utilities/pipeline.py +27 -0

- utilities/rag_utilities.py +109 -0

- utilities/text_utils.py +103 -0

- utilities/vector_database.py +105 -0

- utilities_2/__init__.py +0 -0

- utilities_2/openai_utils/__init__.py +0 -0

- utilities_2/openai_utils/chatmodel.py +45 -0

- utilities_2/openai_utils/embedding.py +60 -0

- utilities_2/openai_utils/prompts.py +78 -0

- utilities_2/text_utils.py +75 -0

- utilities_2/vectordatabase.py +82 -0

=0.2.0

ADDED

|

@@ -0,0 +1,29 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Requirement already satisfied: langchain_core in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (0.3.1)

|

| 2 |

+

Requirement already satisfied: langchain_openai in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (0.2.0)

|

| 3 |

+

Requirement already satisfied: PyYAML>=5.3 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from langchain_core) (6.0)

|

| 4 |

+

Requirement already satisfied: jsonpatch<2.0,>=1.33 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from langchain_core) (1.33)

|

| 5 |

+

Requirement already satisfied: langsmith<0.2.0,>=0.1.117 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from langchain_core) (0.1.122)

|

| 6 |

+

Requirement already satisfied: packaging<25,>=23.2 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from langchain_core) (23.2)

|

| 7 |

+

Requirement already satisfied: pydantic<3.0.0,>=2.5.2 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from langchain_core) (2.8.2)

|

| 8 |

+

Requirement already satisfied: tenacity!=8.4.0,<9.0.0,>=8.1.0 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from langchain_core) (8.2.2)

|

| 9 |

+

Requirement already satisfied: typing-extensions>=4.7 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from langchain_core) (4.12.2)

|

| 10 |

+

Requirement already satisfied: openai<2.0.0,>=1.40.0 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from langchain_openai) (1.46.0)

|

| 11 |

+

Requirement already satisfied: tiktoken<1,>=0.7 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from langchain_openai) (0.7.0)

|

| 12 |

+

Requirement already satisfied: jsonpointer>=1.9 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from jsonpatch<2.0,>=1.33->langchain_core) (2.1)

|

| 13 |

+

Requirement already satisfied: httpx<1,>=0.23.0 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from langsmith<0.2.0,>=0.1.117->langchain_core) (0.24.1)

|

| 14 |

+

Requirement already satisfied: orjson<4.0.0,>=3.9.14 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from langsmith<0.2.0,>=0.1.117->langchain_core) (3.10.7)

|

| 15 |

+

Requirement already satisfied: requests<3,>=2 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from langsmith<0.2.0,>=0.1.117->langchain_core) (2.31.0)

|

| 16 |

+

Requirement already satisfied: anyio<5,>=3.5.0 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from openai<2.0.0,>=1.40.0->langchain_openai) (3.5.0)

|

| 17 |

+

Requirement already satisfied: distro<2,>=1.7.0 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from openai<2.0.0,>=1.40.0->langchain_openai) (1.9.0)

|

| 18 |

+

Requirement already satisfied: jiter<1,>=0.4.0 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from openai<2.0.0,>=1.40.0->langchain_openai) (0.5.0)

|

| 19 |

+

Requirement already satisfied: sniffio in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from openai<2.0.0,>=1.40.0->langchain_openai) (1.2.0)

|

| 20 |

+

Requirement already satisfied: tqdm>4 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from openai<2.0.0,>=1.40.0->langchain_openai) (4.65.0)

|

| 21 |

+

Requirement already satisfied: annotated-types>=0.4.0 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from pydantic<3.0.0,>=2.5.2->langchain_core) (0.7.0)

|

| 22 |

+

Requirement already satisfied: pydantic-core==2.20.1 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from pydantic<3.0.0,>=2.5.2->langchain_core) (2.20.1)

|

| 23 |

+

Requirement already satisfied: regex>=2022.1.18 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from tiktoken<1,>=0.7->langchain_openai) (2022.7.9)

|

| 24 |

+

Requirement already satisfied: idna>=2.8 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from anyio<5,>=3.5.0->openai<2.0.0,>=1.40.0->langchain_openai) (3.4)

|

| 25 |

+

Requirement already satisfied: certifi in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from httpx<1,>=0.23.0->langsmith<0.2.0,>=0.1.117->langchain_core) (2023.7.22)

|

| 26 |

+

Requirement already satisfied: httpcore<0.18.0,>=0.15.0 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from httpx<1,>=0.23.0->langsmith<0.2.0,>=0.1.117->langchain_core) (0.17.3)

|

| 27 |

+

Requirement already satisfied: charset-normalizer<4,>=2 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from requests<3,>=2->langsmith<0.2.0,>=0.1.117->langchain_core) (2.0.4)

|

| 28 |

+

Requirement already satisfied: urllib3<3,>=1.21.1 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from requests<3,>=2->langsmith<0.2.0,>=0.1.117->langchain_core) (1.26.16)

|

| 29 |

+

Requirement already satisfied: h11<0.15,>=0.13 in /home/rchrdgwr/anaconda3/lib/python3.11/site-packages (from httpcore<0.18.0,>=0.15.0->httpx<1,>=0.23.0->langsmith<0.2.0,>=0.1.117->langchain_core) (0.14.0)

|

=0.3

ADDED

|

File without changes

|

BuildingAChainlitApp.md

ADDED

|

@@ -0,0 +1,312 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Building a Chainlit App

|

| 2 |

+

|

| 3 |

+

What if we want to take our Week 1 Day 2 assignment - [Pythonic RAG](https://github.com/AI-Maker-Space/AIE4/tree/main/Week%201/Day%202) - and bring it out of the notebook?

|

| 4 |

+

|

| 5 |

+

Well - we'll cover exactly that here!

|

| 6 |

+

|

| 7 |

+

## Anatomy of a Chainlit Application

|

| 8 |

+

|

| 9 |

+

[Chainlit](https://docs.chainlit.io/get-started/overview) is a Python package similar to Streamlit that lets users write a backend and a front end in a single (or multiple) Python file(s). It is mainly used for prototyping LLM-based Chat Style Applications - though it is used in production in some settings with 1,000,000s of MAUs (Monthly Active Users).

|

| 10 |

+

|

| 11 |

+

The primary method of customizing and interacting with the Chainlit UI is through a few critical [decorators](https://blog.hubspot.com/website/decorators-in-python).

|

| 12 |

+

|

| 13 |

+

> NOTE: Simply put, the decorators (in Chainlit) are just ways we can "plug-in" to the functionality in Chainlit.

|

| 14 |

+

|

| 15 |

+

We'll be concerning ourselves with three main scopes:

|

| 16 |

+

|

| 17 |

+

1. On application start - when we start the Chainlit application with a command like `chainlit run app.py`

|

| 18 |

+

2. On chat start - when a chat session starts (a user opens the web browser to the address hosting the application)

|

| 19 |

+

3. On message - when the users sends a message through the input text box in the Chainlit UI

|

| 20 |

+

|

| 21 |

+

Let's dig into each scope and see what we're doing!

|

| 22 |

+

|

| 23 |

+

## On Application Start:

|

| 24 |

+

|

| 25 |

+

The first thing you'll notice is that we have the traditional "wall of imports" this is to ensure we have everything we need to run our application.

|

| 26 |

+

|

| 27 |

+

```python

|

| 28 |

+

import os

|

| 29 |

+

from typing import List

|

| 30 |

+

from chainlit.types import AskFileResponse

|

| 31 |

+

from utilities_2.text_utils import CharacterTextSplitter, TextFileLoader

|

| 32 |

+

from utilities_2.openai_utils.prompts import (

|

| 33 |

+

UserRolePrompt,

|

| 34 |

+

SystemRolePrompt,

|

| 35 |

+

AssistantRolePrompt,

|

| 36 |

+

)

|

| 37 |

+

from utilities_2.openai_utils.embedding import EmbeddingModel

|

| 38 |

+

from utilities_2.vectordatabase import VectorDatabase

|

| 39 |

+

from utilities_2.openai_utils.chatmodel import ChatOpenAI

|

| 40 |

+

import chainlit as cl

|

| 41 |

+

```

|

| 42 |

+

|

| 43 |

+

Next up, we have some prompt templates. As all sessions will use the same prompt templates without modification, and we don't need these templates to be specific per template - we can set them up here - at the application scope.

|

| 44 |

+

|

| 45 |

+

```python

|

| 46 |

+

system_template = """\

|

| 47 |

+

Use the following context to answer a users question. If you cannot find the answer in the context, say you don't know the answer."""

|

| 48 |

+

system_role_prompt = SystemRolePrompt(system_template)

|

| 49 |

+

|

| 50 |

+

user_prompt_template = """\

|

| 51 |

+

Context:

|

| 52 |

+

{context}

|

| 53 |

+

|

| 54 |

+

Question:

|

| 55 |

+

{question}

|

| 56 |

+

"""

|

| 57 |

+

user_role_prompt = UserRolePrompt(user_prompt_template)

|

| 58 |

+

```

|

| 59 |

+

|

| 60 |

+

> NOTE: You'll notice that these are the exact same prompt templates we used from the Pythonic RAG Notebook in Week 1 Day 2!

|

| 61 |

+

|

| 62 |

+

Following that - we can create the Python Class definition for our RAG pipeline - or *chain*, as we'll refer to it in the rest of this walkthrough.

|

| 63 |

+

|

| 64 |

+

Let's look at the definition first:

|

| 65 |

+

|

| 66 |

+

```python

|

| 67 |

+

class RetrievalAugmentedQAPipeline:

|

| 68 |

+

def __init__(self, llm: ChatOpenAI(), vector_db_retriever: VectorDatabase) -> None:

|

| 69 |

+

self.llm = llm

|

| 70 |

+

self.vector_db_retriever = vector_db_retriever

|

| 71 |

+

|

| 72 |

+

async def arun_pipeline(self, user_query: str):

|

| 73 |

+

### RETRIEVAL

|

| 74 |

+

context_list = self.vector_db_retriever.search_by_text(user_query, k=4)

|

| 75 |

+

|

| 76 |

+

context_prompt = ""

|

| 77 |

+

for context in context_list:

|

| 78 |

+

context_prompt += context[0] + "\n"

|

| 79 |

+

|

| 80 |

+

### AUGMENTED

|

| 81 |

+

formatted_system_prompt = system_role_prompt.create_message()

|

| 82 |

+

|

| 83 |

+

formatted_user_prompt = user_role_prompt.create_message(question=user_query, context=context_prompt)

|

| 84 |

+

|

| 85 |

+

|

| 86 |

+

### GENERATION

|

| 87 |

+

async def generate_response():

|

| 88 |

+

async for chunk in self.llm.astream([formatted_system_prompt, formatted_user_prompt]):

|

| 89 |

+

yield chunk

|

| 90 |

+

|

| 91 |

+

return {"response": generate_response(), "context": context_list}

|

| 92 |

+

```

|

| 93 |

+

|

| 94 |

+

Notice a few things:

|

| 95 |

+

|

| 96 |

+

1. We have modified this `RetrievalAugmentedQAPipeline` from the initial notebook to support streaming.

|

| 97 |

+

2. In essence, our pipeline is *chaining* a few events together:

|

| 98 |

+

1. We take our user query, and chain it into our Vector Database to collect related chunks

|

| 99 |

+

2. We take those contexts and our user's questions and chain them into the prompt templates

|

| 100 |

+

3. We take that prompt template and chain it into our LLM call

|

| 101 |

+

4. We chain the response of the LLM call to the user

|

| 102 |

+

3. We are using a lot of `async` again!

|

| 103 |

+

|

| 104 |

+

Now, we're going to create a helper function for processing uploaded text files.

|

| 105 |

+

|

| 106 |

+

First, we'll instantiate a shared `CharacterTextSplitter`.

|

| 107 |

+

|

| 108 |

+

```python

|

| 109 |

+

text_splitter = CharacterTextSplitter()

|

| 110 |

+

```

|

| 111 |

+

|

| 112 |

+

Now we can define our helper.

|

| 113 |

+

|

| 114 |

+

```python

|

| 115 |

+

def process_text_file(file: AskFileResponse):

|

| 116 |

+

import tempfile

|

| 117 |

+

|

| 118 |

+

with tempfile.NamedTemporaryFile(mode="w", delete=False, suffix=".txt") as temp_file:

|

| 119 |

+

temp_file_path = temp_file.name

|

| 120 |

+

|

| 121 |

+

with open(temp_file_path, "wb") as f:

|

| 122 |

+

f.write(file.content)

|

| 123 |

+

|

| 124 |

+

text_loader = TextFileLoader(temp_file_path)

|

| 125 |

+

documents = text_loader.load_documents()

|

| 126 |

+

texts = text_splitter.split_text(documents)

|

| 127 |

+

return texts

|

| 128 |

+

```

|

| 129 |

+

|

| 130 |

+

Simply put, this downloads the file as a temp file, we load it in with `TextFileLoader` and then split it with our `TextSplitter`, and returns that list of strings!

|

| 131 |

+

|

| 132 |

+

<div style="border: 2px solid white; padding: 10px; border-radius: 5px; background-color: black; padding: 10px;">

|

| 133 |

+

QUESTION #1:

|

| 134 |

+

|

| 135 |

+

Why do we want to support streaming? What about streaming is important, or useful?

|

| 136 |

+

|

| 137 |

+

### ANSWER #1:

|

| 138 |

+

|

| 139 |

+

Streaming is the continuous transmission of the data from the model to the UI. Instead of waiting and batching up the response into a single

|

| 140 |

+

large message, the response is sent in pieces (streams) as it is created.

|

| 141 |

+

|

| 142 |

+

The advantages of streaming:

|

| 143 |

+

- quicker initial response - the user sees the first part of the answer sooner

|

| 144 |

+

- it is easier to identify the results are incorrect and terminate the request

|

| 145 |

+

- it is a more natural mode of communication for humans

|

| 146 |

+

- better handling of large data, not requiring complex caching

|

| 147 |

+

- essential for real time processing

|

| 148 |

+

- humans can only read so fast so its an advantage to get some of the data earlier

|

| 149 |

+

|

| 150 |

+

</div>

|

| 151 |

+

|

| 152 |

+

## On Chat Start:

|

| 153 |

+

|

| 154 |

+

The next scope is where "the magic happens". On Chat Start is when a user begins a chat session. This will happen whenever a user opens a new chat window, or refreshes an existing chat window.

|

| 155 |

+

|

| 156 |

+

You'll see that our code is set-up to immediately show the user a chat box requesting them to upload a file.

|

| 157 |

+

|

| 158 |

+

```python

|

| 159 |

+

while files == None:

|

| 160 |

+

files = await cl.AskFileMessage(

|

| 161 |

+

content="Please upload a Text File file to begin!",

|

| 162 |

+

accept=["text/plain"],

|

| 163 |

+

max_size_mb=2,

|

| 164 |

+

timeout=180,

|

| 165 |

+

).send()

|

| 166 |

+

```

|

| 167 |

+

|

| 168 |

+

Once we've obtained the text file - we'll use our processing helper function to process our text!

|

| 169 |

+

|

| 170 |

+

After we have processed our text file - we'll need to create a `VectorDatabase` and populate it with our processed chunks and their related embeddings!

|

| 171 |

+

|

| 172 |

+

```python

|

| 173 |

+

vector_db = VectorDatabase()

|

| 174 |

+

vector_db = await vector_db.abuild_from_list(texts)

|

| 175 |

+

```

|

| 176 |

+

|

| 177 |

+

Once we have that piece completed - we can create the chain we'll be using to respond to user queries!

|

| 178 |

+

|

| 179 |

+

```python

|

| 180 |

+

retrieval_augmented_qa_pipeline = RetrievalAugmentedQAPipeline(

|

| 181 |

+

vector_db_retriever=vector_db,

|

| 182 |

+

llm=chat_openai

|

| 183 |

+

)

|

| 184 |

+

```

|

| 185 |

+

|

| 186 |

+

Now, we'll save that into our user session!

|

| 187 |

+

|

| 188 |

+

> NOTE: Chainlit has some great documentation about [User Session](https://docs.chainlit.io/concepts/user-session).

|

| 189 |

+

|

| 190 |

+

<div style="border: 2px solid white; padding: 10px; border-radius: 5px; background-color: black; padding: 10px;">

|

| 191 |

+

|

| 192 |

+

### QUESTION #2:

|

| 193 |

+

|

| 194 |

+

Why are we using User Session here? What about Python makes us need to use this? Why not just store everything in a global variable?

|

| 195 |

+

|

| 196 |

+

### ANSWER #2:

|

| 197 |

+

The application hopefully will be run by many people, at the same time. If the data was stored in a global variable

|

| 198 |

+

this would be accessed by everyone using the application. So everytime someone started a new session, the information

|

| 199 |

+

would be overwritten, meaning everyone would basically get the same results. Unless only one person used the system

|

| 200 |

+

at a time.

|

| 201 |

+

|

| 202 |

+

So the goal is to keep each users session information separate from all the other users. The ChainLit User session

|

| 203 |

+

provides the capability of storing each users data separately.

|

| 204 |

+

</div>

|

| 205 |

+

|

| 206 |

+

## On Message

|

| 207 |

+

|

| 208 |

+

First, we load our chain from the user session:

|

| 209 |

+

|

| 210 |

+

```python

|

| 211 |

+

chain = cl.user_session.get("chain")

|

| 212 |

+

```

|

| 213 |

+

|

| 214 |

+

Then, we run the chain on the content of the message - and stream it to the front end - that's it!

|

| 215 |

+

|

| 216 |

+

```python

|

| 217 |

+

msg = cl.Message(content="")

|

| 218 |

+

result = await chain.arun_pipeline(message.content)

|

| 219 |

+

|

| 220 |

+

async for stream_resp in result["response"]:

|

| 221 |

+

await msg.stream_token(stream_resp)

|

| 222 |

+

```

|

| 223 |

+

|

| 224 |

+

## 🎉

|

| 225 |

+

|

| 226 |

+

With that - you've created a Chainlit application that moves our Pythonic RAG notebook to a Chainlit application!

|

| 227 |

+

|

| 228 |

+

## 🚧 CHALLENGE MODE 🚧

|

| 229 |

+

|

| 230 |

+

For an extra challenge - modify the behaviour of your applciation by integrating changes you made to your Pythonic RAG notebook (using new retrieval methods, etc.)

|

| 231 |

+

|

| 232 |

+

If you're still looking for a challenge, or didn't make any modifications to your Pythonic RAG notebook:

|

| 233 |

+

|

| 234 |

+

1) Allow users to upload PDFs (this will require you to build a PDF parser as well)

|

| 235 |

+

2) Modify the VectorStore to leverage [Qdrant](https://python-client.qdrant.tech/)

|

| 236 |

+

|

| 237 |

+

> NOTE: The motivation for these challenges is simple - the beginning of the course is extremely information dense, and people come from all kinds of different technical backgrounds. In order to ensure that all learners are able to engage with the content confidently and comfortably, we want to focus on the basic units of technical competency required. This leads to a situation where some learners, who came in with more robust technical skills, find the introductory material to be too simple - and these open-ended challenges help us do this!

|

| 238 |

+

|

| 239 |

+

## Support pdf documents

|

| 240 |

+

|

| 241 |

+

Code was modified to support pdf documents in the following areas:

|

| 242 |

+

|

| 243 |

+

1) Change to the request for documents in on_chat_start:

|

| 244 |

+

|

| 245 |

+

- changed the message to ask for .txt or .pdf file

|

| 246 |

+

- changed the acceptable file formats so that the pdf documents are included in the select pop up

|

| 247 |

+

|

| 248 |

+

```python

|

| 249 |

+

while not files:

|

| 250 |

+

files = await cl.AskFileMessage(

|

| 251 |

+

content="Please upload a .txt or .pdf file to begin processing!",

|

| 252 |

+

accept=["text/plain", "application/pdf"],

|

| 253 |

+

max_size_mb=2,

|

| 254 |

+

timeout=180,

|

| 255 |

+

).send()

|

| 256 |

+

```

|

| 257 |

+

|

| 258 |

+

2) change process_text_file() function to handle .pdf files

|

| 259 |

+

|

| 260 |

+

- refactor the code to do all file handling in utilities.text_utils

|

| 261 |

+

- app calls process_file, optionally passing in the text splitter function

|

| 262 |

+

- default text splitter function is CharacterTextSplitter

|

| 263 |

+

```python

|

| 264 |

+

texts = process_file(file)

|

| 265 |

+

```

|

| 266 |

+

- load_file() function does the following

|

| 267 |

+

- read the uploaded document into a temporary file

|

| 268 |

+

- identify the file extension

|

| 269 |

+

- process a .txt file as before resulting in the texts list

|

| 270 |

+

- if the file is .pdf use the PyMuPDF library to read each page and extract the text and add it to texts list

|

| 271 |

+

- use the passed in text splitter function to split the documents

|

| 272 |

+

|

| 273 |

+

```python

|

| 274 |

+

def load_file(self, file, text_splitter=CharacterTextSplitter()):

|

| 275 |

+

file_extension = os.path.splitext(file.name)[1].lower()

|

| 276 |

+

with tempfile.NamedTemporaryFile(mode="wb", delete=False, suffix=file_extension) as temp_file:

|

| 277 |

+

self.temp_file_path = temp_file.name

|

| 278 |

+

temp_file.write(file.content)

|

| 279 |

+

|

| 280 |

+

if os.path.isfile(self.temp_file_path):

|

| 281 |

+

if self.temp_file_path.endswith(".txt"):

|

| 282 |

+

self.load_text_file()

|

| 283 |

+

elif self.temp_file_path.endswith(".pdf"):

|

| 284 |

+

self.load_pdf_file()

|

| 285 |

+

else:

|

| 286 |

+

raise ValueError(

|

| 287 |

+

f"Unsupported file type: {self.temp_file_path}"

|

| 288 |

+

)

|

| 289 |

+

return text_splitter.split_text(self.documents)

|

| 290 |

+

else:

|

| 291 |

+

raise ValueError(

|

| 292 |

+

"Not a file"

|

| 293 |

+

)

|

| 294 |

+

|

| 295 |

+

def load_text_file(self):

|

| 296 |

+

with open(self.temp_file_path, "r", encoding=self.encoding) as f:

|

| 297 |

+

self.documents.append(f.read())

|

| 298 |

+

|

| 299 |

+

def load_pdf_file(self):

|

| 300 |

+

|

| 301 |

+

pdf_document = fitz.open(self.temp_file_path)

|

| 302 |

+

for page_num in range(len(pdf_document)):

|

| 303 |

+

page = pdf_document.load_page(page_num)

|

| 304 |

+

text = page.get_text()

|

| 305 |

+

self.documents.append(text)

|

| 306 |

+

```

|

| 307 |

+

|

| 308 |

+

3) Test the handling of .pdf and .txt files

|

| 309 |

+

|

| 310 |

+

Several different .pdf and .txt files were successfully uploaded and processed by the app

|

| 311 |

+

|

| 312 |

+

|

Dockerfile

ADDED

|

@@ -0,0 +1,11 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

FROM python:3.11.9

|

| 2 |

+

RUN useradd -m -u 1000 user

|

| 3 |

+

USER user

|

| 4 |

+

ENV HOME=/home/user \

|

| 5 |

+

PATH=/home/user/.local/bin:$PATH

|

| 6 |

+

WORKDIR $HOME/app

|

| 7 |

+

COPY --chown=user . $HOME/app

|

| 8 |

+

COPY ./requirements.txt ~/app/requirements.txt

|

| 9 |

+

RUN pip install -r requirements.txt

|

| 10 |

+

COPY . .

|

| 11 |

+

CMD ["chainlit", "run", "app.py", "--port", "7860"]

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2024 Richard Gower

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

README.md

CHANGED

|

@@ -1,11 +1,118 @@

|

|

| 1 |

-

---

|

| 2 |

-

title:

|

| 3 |

-

emoji:

|

| 4 |

-

colorFrom:

|

| 5 |

-

colorTo:

|

| 6 |

-

sdk: docker

|

| 7 |

-

pinned: false

|

| 8 |

-

license:

|

| 9 |

-

---

|

| 10 |

-

|

| 11 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: DeployPythonicRAG

|

| 3 |

+

emoji: 📉

|

| 4 |

+

colorFrom: blue

|

| 5 |

+

colorTo: purple

|

| 6 |

+

sdk: docker

|

| 7 |

+

pinned: false

|

| 8 |

+

license: apache-2.0

|

| 9 |

+

---

|

| 10 |

+

|

| 11 |

+

# Deploying Pythonic Chat With Your Text File Application

|

| 12 |

+

|

| 13 |

+

In today's breakout rooms, we will be following the processed that you saw during the challenge - for reference, the instructions for that are available [here](https://github.com/AI-Maker-Space/Beyond-ChatGPT/tree/main).

|

| 14 |

+

|

| 15 |

+

Today, we will repeat the same process - but powered by our Pythonic RAG implementation we created last week.

|

| 16 |

+

|

| 17 |

+

You'll notice a few differences in the `app.py` logic - as well as a few changes to the `utilities_2` package to get things working smoothly with Chainlit.

|

| 18 |

+

|

| 19 |

+

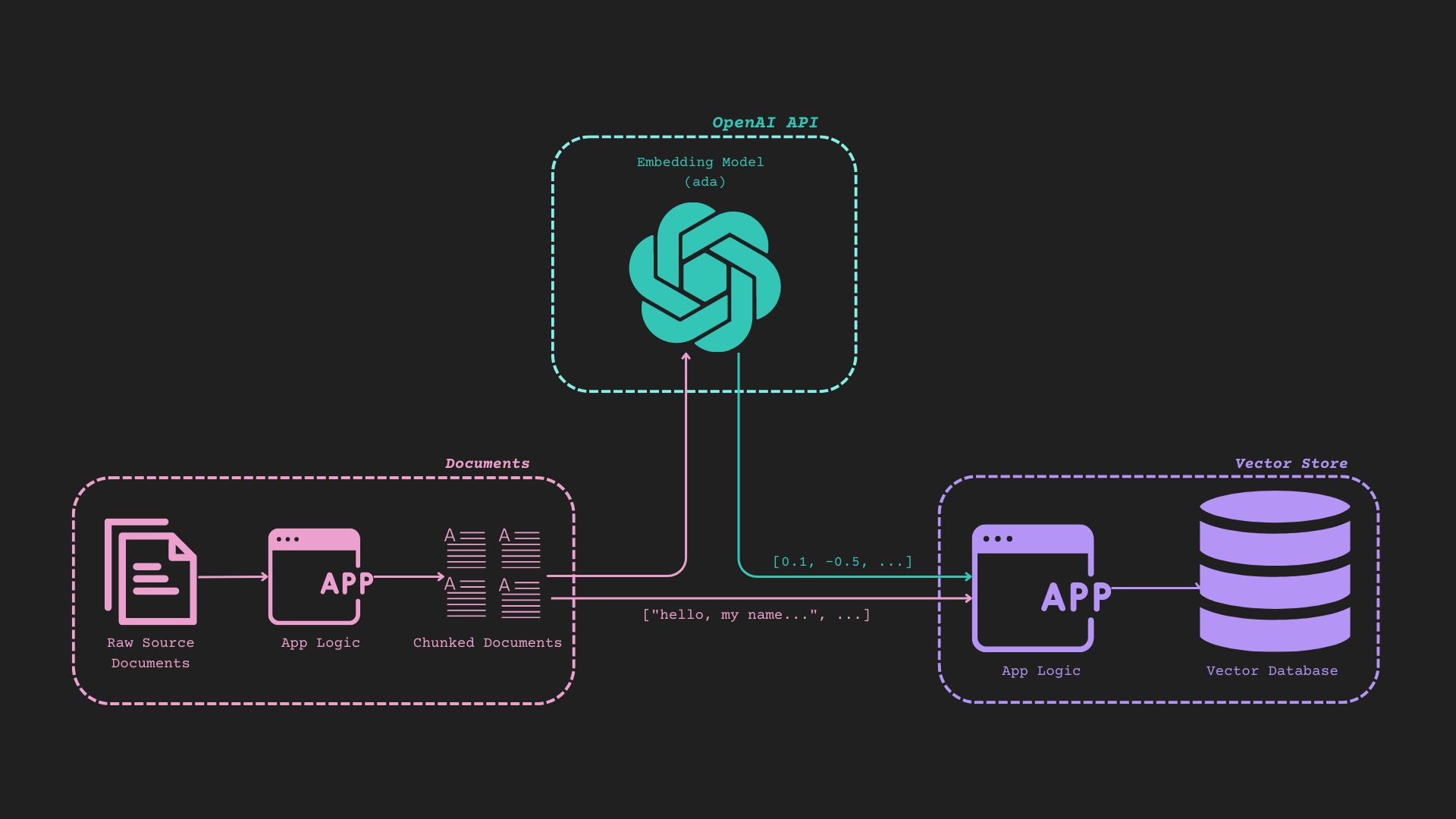

## Reference Diagram (It's Busy, but it works)

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

## Deploying the Application to Hugging Face Space

|

| 24 |

+

|

| 25 |

+

Due to the way the repository is created - it should be straightforward to deploy this to a Hugging Face Space!

|

| 26 |

+

|

| 27 |

+

> NOTE: If you wish to go through the local deployments using `chainlit run app.py` and Docker - please feel free to do so!

|

| 28 |

+

|

| 29 |

+

<details>

|

| 30 |

+

<summary>Creating a Hugging Face Space</summary>

|

| 31 |

+

|

| 32 |

+

1. Navigate to the `Spaces` tab.

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

2. Click on `Create new Space`

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

3. Create the Space by providing values in the form. Make sure you've selected "Docker" as your Space SDK.

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

</details>

|

| 45 |

+

|

| 46 |

+

<details>

|

| 47 |

+

<summary>Adding this Repository to the Newly Created Space</summary>

|

| 48 |

+

|

| 49 |

+

1. Collect the SSH address from the newly created Space.

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

> NOTE: The address is the component that starts with `[email protected]:spaces/`.

|

| 54 |

+

|

| 55 |

+

2. Use the command:

|

| 56 |

+

|

| 57 |

+

```bash

|

| 58 |

+

git remote add hf HF_SPACE_SSH_ADDRESS_HERE

|

| 59 |

+

```

|

| 60 |

+

|

| 61 |

+

3. Use the command:

|

| 62 |

+

|

| 63 |

+

```bash

|

| 64 |

+

git pull hf main --no-rebase --allow-unrelated-histories -X ours

|

| 65 |

+

```

|

| 66 |

+

|

| 67 |

+

4. Use the command:

|

| 68 |

+

|

| 69 |

+

```bash

|

| 70 |

+

git add .

|

| 71 |

+

```

|

| 72 |

+

|

| 73 |

+

5. Use the command:

|

| 74 |

+

|

| 75 |

+

```bash

|

| 76 |

+

git commit -m "Deploying Pythonic RAG"

|

| 77 |

+

```

|

| 78 |

+

|

| 79 |

+

6. Use the command:

|

| 80 |

+

|

| 81 |

+

```bash

|

| 82 |

+

git push hf main

|

| 83 |

+

```

|

| 84 |

+

|

| 85 |

+

7. The Space should automatically build as soon as the push is completed!

|

| 86 |

+

|

| 87 |

+

> NOTE: The build will fail before you complete the following steps!

|

| 88 |

+

|

| 89 |

+

</details>

|

| 90 |

+

|

| 91 |

+

<details>

|

| 92 |

+

<summary>Adding OpenAI Secrets to the Space</summary>

|

| 93 |

+

|

| 94 |

+

1. Navigate to your Space settings.

|

| 95 |

+

|

| 96 |

+

|

| 97 |

+

|

| 98 |

+

2. Navigate to `Variables and secrets` on the Settings page and click `New secret`:

|

| 99 |

+

|

| 100 |

+

|

| 101 |

+

|

| 102 |

+

3. In the `Name` field - input `OPENAI_API_KEY` in the `Value (private)` field, put your OpenAI API Key.

|

| 103 |

+

|

| 104 |

+

|

| 105 |

+

|

| 106 |

+

4. The Space will begin rebuilding!

|

| 107 |

+

|

| 108 |

+

</details>

|

| 109 |

+

|

| 110 |

+

## 🎉

|

| 111 |

+

|

| 112 |

+

You just deployed Pythonic RAG!

|

| 113 |

+

|

| 114 |

+

Try uploading a text file and asking some questions!

|

| 115 |

+

|

| 116 |

+

## 🚧CHALLENGE MODE 🚧

|

| 117 |

+

|

| 118 |

+

For more of a challenge, please reference [Building a Chainlit App](./BuildingAChainlitApp.md)!

|

__pycache__/app.cpython-311.pyc

ADDED

|

Binary file (4.66 kB). View file

|

|

|

app.py

ADDED

|

@@ -0,0 +1,112 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

from dotenv import load_dotenv

|

| 3 |

+

import chainlit as cl

|

| 4 |

+

from langchain_openai import ChatOpenAI

|

| 5 |

+

from langchain.prompts import PromptTemplate

|

| 6 |

+

from utilities.rag_utilities import create_vector_store

|

| 7 |

+

from langchain_core.prompts import ChatPromptTemplate

|

| 8 |

+

from operator import itemgetter

|

| 9 |

+

from langchain.schema.output_parser import StrOutputParser

|

| 10 |

+

from langchain.schema.runnable import RunnablePassthrough

|

| 11 |

+

from classes.app_state import AppState

|

| 12 |

+

|

| 13 |

+

document_urls = [

|

| 14 |

+

"https://www.whitehouse.gov/wp-content/uploads/2022/10/Blueprint-for-an-AI-Bill-of-Rights.pdf",

|

| 15 |

+

"https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.600-1.pdf",

|

| 16 |

+

]

|

| 17 |

+

|

| 18 |

+

# Load environment variables from .env file

|

| 19 |

+

load_dotenv()

|

| 20 |

+

|

| 21 |

+

# Get the OpenAI API key from environment variables

|

| 22 |

+

openai_api_key = os.getenv("OPENAI_API_KEY")

|

| 23 |

+

|

| 24 |

+

# Setup our state

|

| 25 |

+

state = AppState()

|

| 26 |

+

state.set_document_urls(document_urls)

|

| 27 |

+

state.set_llm_model("gpt-3.5-turbo")

|

| 28 |

+

state.set_embedding_model("text-embedding-3-small")

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

# Initialize the OpenAI LLM using LangChain

|

| 32 |

+

llm = ChatOpenAI(model=state.llm_model, openai_api_key=openai_api_key)

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

qdrant_retriever = create_vector_store(state)

|

| 38 |

+

|

| 39 |

+

system_template = """

|

| 40 |

+

You are an expert at explaining technical documents to people.

|

| 41 |

+

You are provided context below to answer the question.

|

| 42 |

+

Only use the information provided below.

|

| 43 |

+

If they do not ask a question, have a conversation with them and ask them if they have any questions

|

| 44 |

+

If you cannot answer the question with the content below say 'I don't have enough information, sorry'

|

| 45 |

+

The two documents are 'Blueprint for an AI Bill of Rights' and 'Artificial Intelligence Risk Management Framework: Generative Artificial Intelligence Profile'

|

| 46 |

+

"""

|

| 47 |

+

human_template = """

|

| 48 |

+

===

|

| 49 |

+

question:

|

| 50 |

+

{question}

|

| 51 |

+

|

| 52 |

+

===

|

| 53 |

+

context:

|

| 54 |

+

{context}

|

| 55 |

+

===

|

| 56 |

+

"""

|

| 57 |

+

chat_prompt = ChatPromptTemplate.from_messages([

|

| 58 |

+

("system", system_template),

|

| 59 |

+

("human", human_template)

|

| 60 |

+

])

|

| 61 |

+

# create the chain

|

| 62 |

+

openai_chat_model = ChatOpenAI(model="gpt-4o")

|

| 63 |

+

|

| 64 |

+

|

| 65 |

+

|

| 66 |

+

retrieval_augmented_qa_chain = (

|

| 67 |

+

{"context": itemgetter("question") | qdrant_retriever, "question": itemgetter("question")}

|

| 68 |

+

| RunnablePassthrough.assign(context=itemgetter("context"))

|

| 69 |

+

|

| 70 |

+

|

| 71 |

+

| {"response": chat_prompt | openai_chat_model, "context": itemgetter("context")}

|

| 72 |

+

)

|

| 73 |

+

|

| 74 |

+

opening_content = """

|

| 75 |

+

Welcome! I can answer your questions on AI based on the following 2 documents:

|

| 76 |

+

- Blueprint for an AI Bill of Rights

|

| 77 |

+

- Artificial Intelligence Risk Management Framework: Generative Artificial Intelligence Profile

|

| 78 |

+

|

| 79 |

+

What questions do you have for me?

|

| 80 |

+

"""

|

| 81 |

+

|

| 82 |

+

@cl.on_chat_start

|

| 83 |

+

async def on_chat_start():

|

| 84 |

+

|

| 85 |

+

await cl.Message(content=opening_content).send()

|

| 86 |

+

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

@cl.on_message

|

| 90 |

+

async def main(message):

|

| 91 |

+

|

| 92 |

+

# formatted_prompt = prompt.format(question=message.content)

|

| 93 |

+

|

| 94 |

+

# Call the LLM with the formatted prompt

|

| 95 |

+

# response = llm.invoke(formatted_prompt)

|

| 96 |

+

#

|

| 97 |

+

response = retrieval_augmented_qa_chain.invoke({"question" : message.content })

|

| 98 |

+

answer_content = response["response"].content

|

| 99 |

+

msg = cl.Message(content="")

|

| 100 |

+

# print(response["response"].content)

|

| 101 |

+

# print(f"Number of found context: {len(response['context'])}")

|

| 102 |

+

for i in range(0, len(answer_content), 50): # Adjust chunk size (e.g., 50 characters)

|

| 103 |

+

chunk = answer_content[i:i+50]

|

| 104 |

+

await msg.stream_token(chunk)

|

| 105 |

+

|

| 106 |

+

# Send the response back to the user

|

| 107 |

+

await msg.send()

|

| 108 |

+

|

| 109 |

+

context_documents = response["context"]

|

| 110 |

+

num_contexts = len(context_documents)

|

| 111 |

+

context_msg = f"Number of found context: {num_contexts}"

|

| 112 |

+

await cl.Message(content=context_msg).send()

|

chainlit.md

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Welcome to Chat with Your Text File

|

| 2 |

+

|

| 3 |

+

With this application, you can chat with an uploaded text file that is smaller than 2MB!

|

classes/__pycache__/app_state.cpython-311.pyc

ADDED

|

Binary file (4.6 kB). View file

|

|

|

classes/app_state.py

ADDED

|

@@ -0,0 +1,81 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

class AppState:

|

| 2 |

+

def __init__(self):

|

| 3 |

+

self.debug = False

|

| 4 |

+

self.llm_model = "gpt-3.5-turbo"

|

| 5 |

+

self.embedding_model = "text-embedding-3-small"

|

| 6 |

+

self.chunk_size = 1000

|

| 7 |

+

self.chunk_overlap = 100

|

| 8 |

+

self.document_urls = []

|

| 9 |

+

self.download_folder = "data/"

|

| 10 |

+

self.loaded_documents = []

|

| 11 |

+

self.single_text_documents = []

|

| 12 |

+

self.metadata = []

|

| 13 |

+

self.titles = []

|

| 14 |

+

self.documents = []

|

| 15 |

+

self.combined_document_objects = []

|

| 16 |

+

self.retriever = None

|

| 17 |

+

|

| 18 |

+

self.system_template = "You are a helpful assistant"

|

| 19 |

+

#

|

| 20 |

+

self.user_input = None

|

| 21 |

+

self.retrieved_documents = []

|

| 22 |

+

self.chat_history = []

|

| 23 |

+

self.current_question = None

|

| 24 |

+

|

| 25 |

+

def set_document_urls(self, document_urls):

|

| 26 |

+

self.document_urls = document_urls

|

| 27 |

+

|

| 28 |

+

def set_llm_model(self, llm_model):

|

| 29 |

+

self.llm_model = llm_model

|

| 30 |

+

|

| 31 |

+

def set_embedding_model(self, embedding_model):

|

| 32 |

+

self.embedding_model = embedding_model

|

| 33 |

+

|

| 34 |

+

def set_chunk_size(self, chunk_size):

|

| 35 |

+

self.chunk_size = chunk_size

|

| 36 |

+

|

| 37 |

+

def set_chunk_overlap(self, chunk_overlap):

|

| 38 |

+

self.chunk_overlap = chunk_overlap

|

| 39 |

+

|

| 40 |

+

def set_system_template(self, system_template):

|

| 41 |

+

self.system_template = system_template

|

| 42 |

+

|

| 43 |

+

def add_loaded_document(self, loaded_document):

|

| 44 |

+

self.loaded_documents.append(loaded_document)

|

| 45 |

+

|

| 46 |

+

def add_single_text_documents(self, single_text_document):

|

| 47 |

+

self.single_text_documents.append(single_text_document)

|

| 48 |

+

def add_metadata(self, metadata):

|

| 49 |

+

self.metadata = metadata

|

| 50 |

+

|

| 51 |

+

def add_title(self, title):

|

| 52 |

+

self.titles.append(title)

|

| 53 |

+

def add_document(self, document):

|

| 54 |

+

self.documents.append(document)

|

| 55 |

+

def add_combined_document_objects(self, combined_document_objects):

|

| 56 |

+

self.combined_document_objects = combined_document_objects

|

| 57 |

+

def set_retriever(self, retriever):

|

| 58 |

+

self.retriever = retriever

|

| 59 |

+

#

|

| 60 |

+

# Method to update the user input

|

| 61 |

+

def set_user_input(self, input_text):

|

| 62 |

+

self.user_input = input_text

|

| 63 |

+

|

| 64 |

+

# Method to add a retrieved document

|

| 65 |

+

# def add_document(self, document):

|

| 66 |

+

# print("adding document")

|

| 67 |

+

# print(self)

|

| 68 |

+

# self.retrieved_documents.append(document)

|

| 69 |

+

|

| 70 |

+

# Method to update chat history

|

| 71 |

+

def update_chat_history(self, message):

|

| 72 |

+

self.chat_history.append(message)

|

| 73 |

+

|

| 74 |

+

# Method to get the current state

|

| 75 |

+

def get_state(self):

|

| 76 |

+

return {

|

| 77 |

+

"user_input": self.user_input,

|

| 78 |

+

"retrieved_documents": self.retrieved_documents,

|

| 79 |

+

"chat_history": self.chat_history,

|

| 80 |

+

"current_question": self.current_question

|

| 81 |

+

}

|

images/docchain_img.png

ADDED

|

old_app.py

ADDED

|

@@ -0,0 +1,145 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

from chainlit.types import AskFileResponse

|

| 3 |

+

|

| 4 |

+

from utilities_2.openai_utils.prompts import (

|

| 5 |

+

UserRolePrompt,

|

| 6 |

+

SystemRolePrompt,

|

| 7 |

+

AssistantRolePrompt,

|

| 8 |

+

)

|

| 9 |

+

from utilities_2.openai_utils.embedding import EmbeddingModel

|

| 10 |

+

from utilities_2.vectordatabase import VectorDatabase

|

| 11 |

+

from utilities_2.openai_utils.chatmodel import ChatOpenAI

|

| 12 |

+

import chainlit as cl

|

| 13 |

+

from utilities.text_utils import FileLoader

|

| 14 |

+

from utilities.pipeline import RetrievalAugmentedQAPipeline

|

| 15 |

+

# from utilities.vector_database import QdrantDatabase

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

def process_file(file, use_rct):

|

| 19 |

+

fileLoader = FileLoader()

|

| 20 |

+

return fileLoader.load_file(file, use_rct)

|

| 21 |

+

|

| 22 |

+

system_template = """\

|

| 23 |

+

Use the following context to answer a users question.

|

| 24 |

+

If you cannot find the answer in the context, say you don't know the answer.

|

| 25 |

+

The context contains the text from a document. Refer to it as the document not the context.

|

| 26 |

+

"""

|

| 27 |

+

system_role_prompt = SystemRolePrompt(system_template)

|

| 28 |

+

|

| 29 |

+

user_prompt_template = """\

|

| 30 |

+

Context:

|

| 31 |

+

{context}

|

| 32 |

+

|

| 33 |

+

Question:

|

| 34 |

+

{question}

|

| 35 |

+

"""

|

| 36 |

+

user_role_prompt = UserRolePrompt(user_prompt_template)

|

| 37 |

+

|

| 38 |

+

@cl.on_chat_start

|

| 39 |

+

async def on_chat_start():

|

| 40 |

+

# get user inputs

|

| 41 |

+

res = await cl.AskActionMessage(

|

| 42 |

+

content="Do you want to use Qdrant?",

|

| 43 |

+

actions=[

|

| 44 |

+

cl.Action(name="yes", value="yes", label="✅ Yes"),

|

| 45 |

+

cl.Action(name="no", value="no", label="❌ No"),

|

| 46 |

+

],

|

| 47 |

+

).send()

|

| 48 |

+

use_qdrant = False

|

| 49 |

+

use_qdrant_type = "Local"

|

| 50 |

+

if res and res.get("value") == "yes":

|

| 51 |

+

use_qdrant = True

|

| 52 |

+

local_res = await cl.AskActionMessage(

|

| 53 |

+

content="Do you want to use local or cloud?",

|

| 54 |

+

actions=[

|

| 55 |

+

cl.Action(name="Local", value="Local", label="✅ Local"),

|

| 56 |

+

cl.Action(name="Cloud", value="Cloud", label="❌ Cloud"),

|

| 57 |

+

],

|

| 58 |

+

).send()

|

| 59 |

+

if local_res and local_res.get("value") == "Cloud":

|

| 60 |

+

use_qdrant_type = "Cloud"

|

| 61 |

+

use_rct = False

|

| 62 |

+

res = await cl.AskActionMessage(

|

| 63 |

+

content="Do you want to use RecursiveCharacterTextSplitter?",

|

| 64 |

+

actions=[

|

| 65 |

+