Spaces:

Running

Running

IC4T

commited on

Commit

·

cfd3735

1

Parent(s):

ab98e06

update

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- app.py +1 -1

- langchain/CITATION.cff +8 -0

- langchain/Dockerfile +48 -0

- langchain/LICENSE +21 -0

- langchain/Makefile +70 -0

- langchain/README.md +93 -0

- langchain/docs/Makefile +21 -0

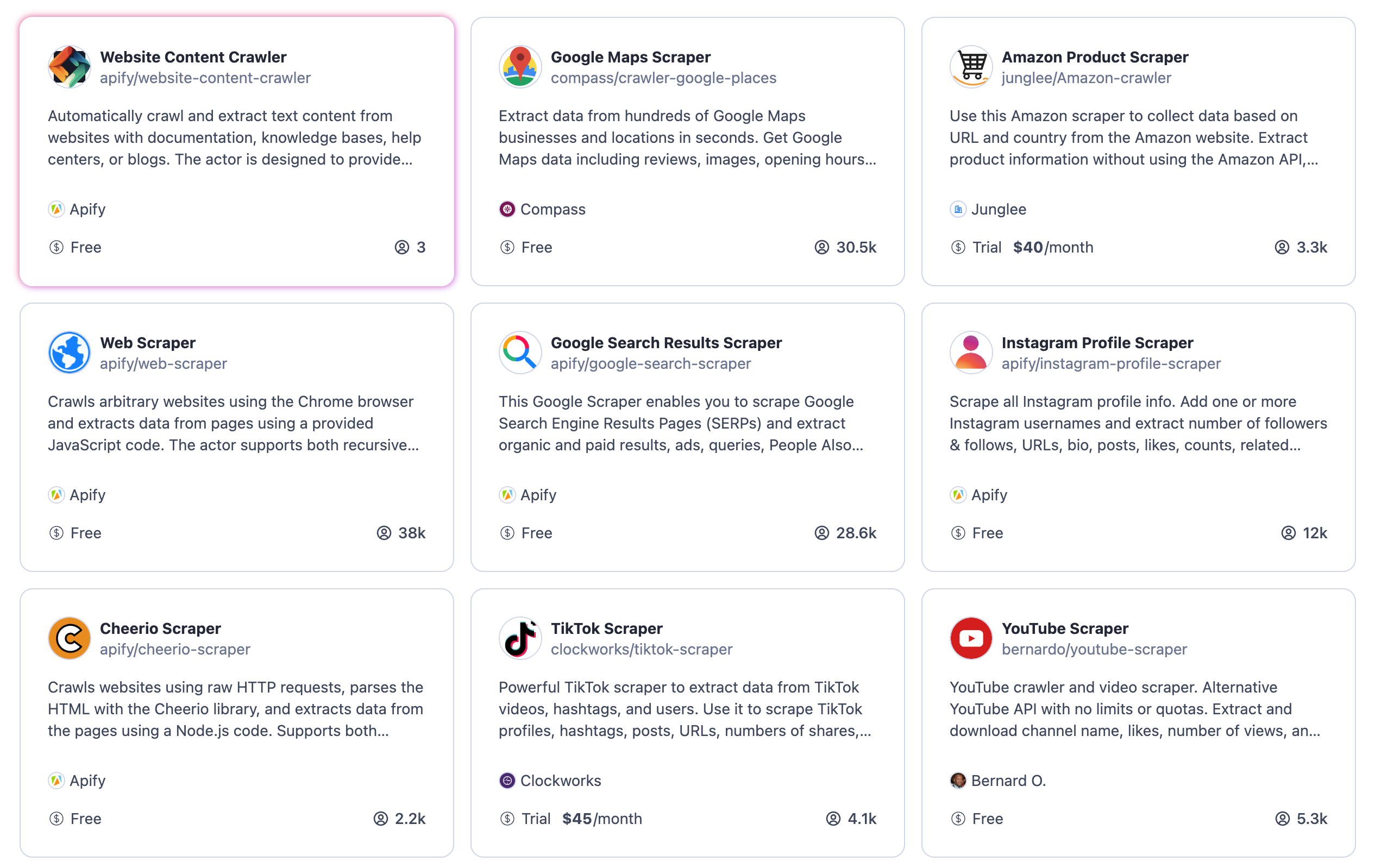

- langchain/docs/_static/ApifyActors.png +0 -0

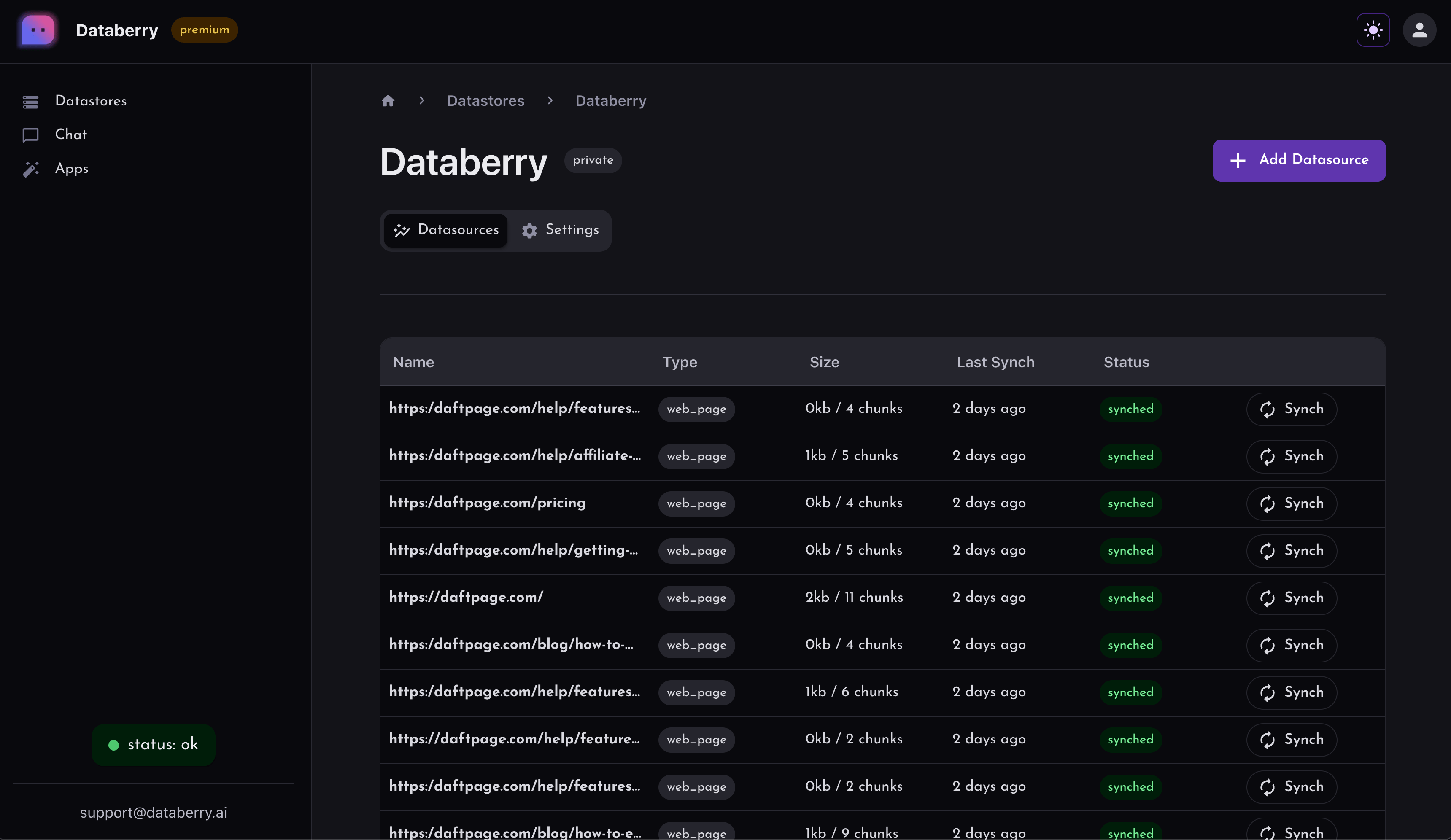

- langchain/docs/_static/DataberryDashboard.png +0 -0

- langchain/docs/_static/HeliconeDashboard.png +0 -0

- langchain/docs/_static/HeliconeKeys.png +0 -0

- langchain/docs/_static/css/custom.css +17 -0

- langchain/docs/_static/js/mendablesearch.js +58 -0

- langchain/docs/conf.py +112 -0

- langchain/docs/deployments.md +62 -0

- langchain/docs/ecosystem.rst +29 -0

- langchain/docs/ecosystem/ai21.md +16 -0

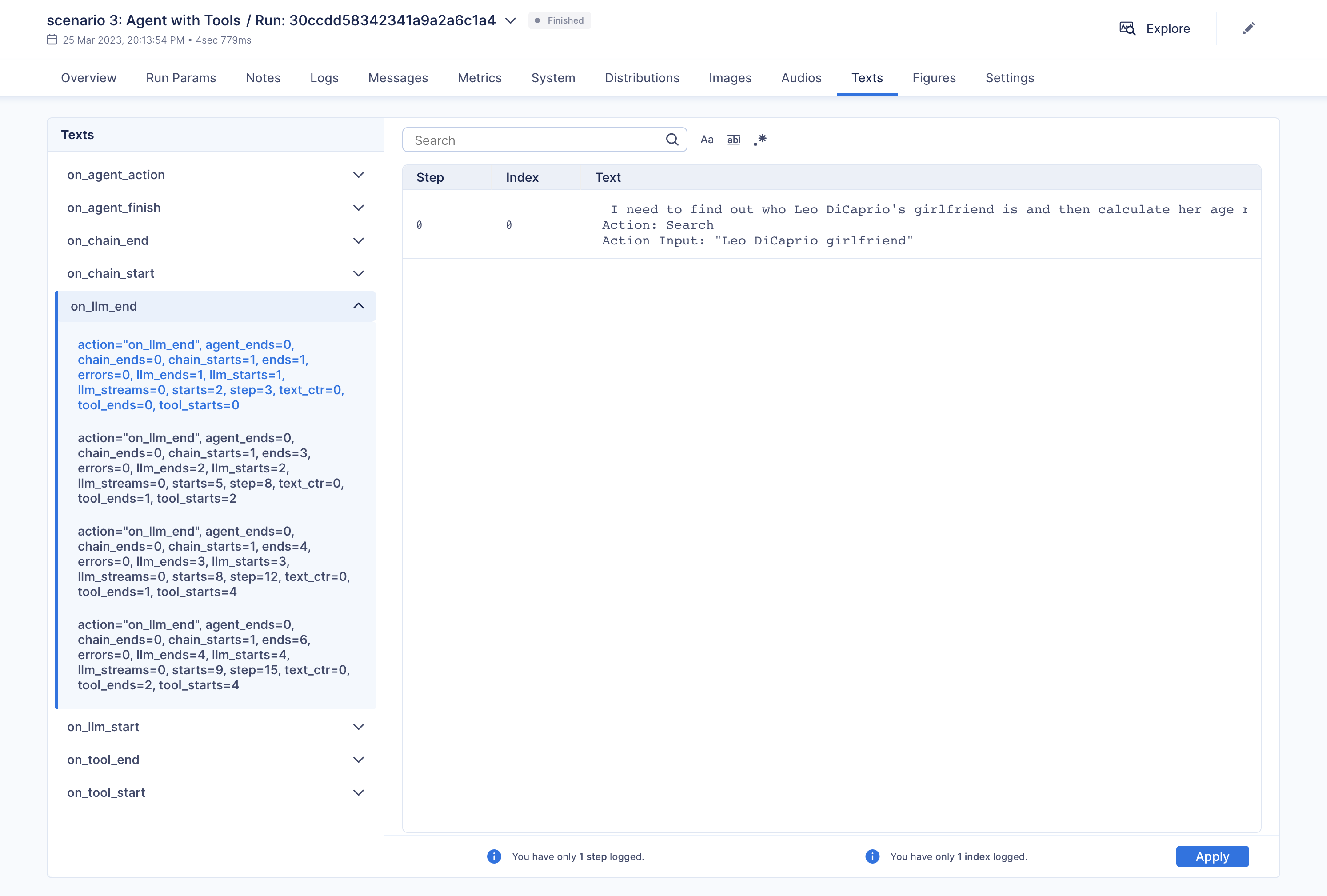

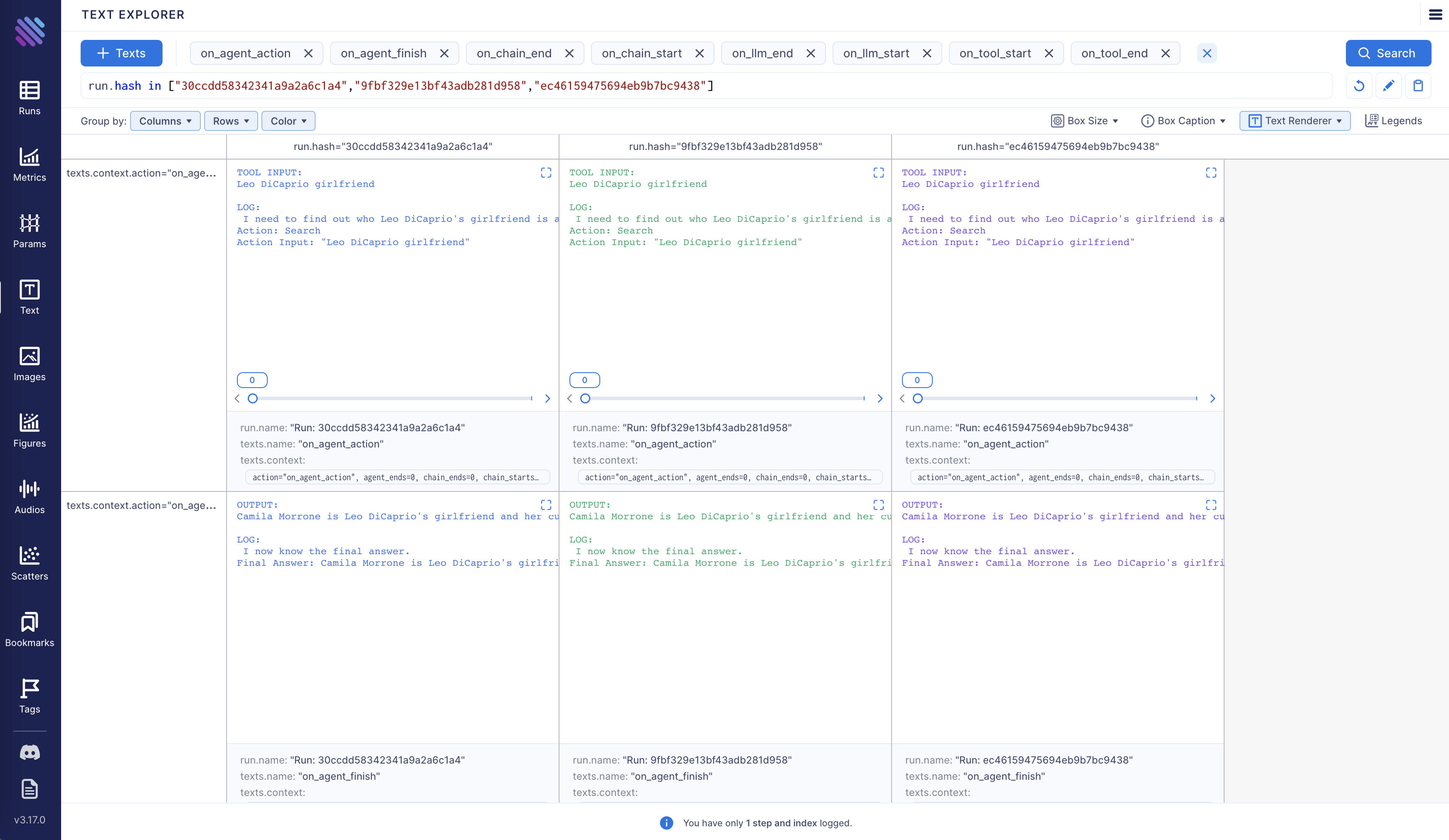

- langchain/docs/ecosystem/aim_tracking.ipynb +291 -0

- langchain/docs/ecosystem/analyticdb.md +15 -0

- langchain/docs/ecosystem/anyscale.md +17 -0

- langchain/docs/ecosystem/apify.md +46 -0

- langchain/docs/ecosystem/atlas.md +27 -0

- langchain/docs/ecosystem/bananadev.md +79 -0

- langchain/docs/ecosystem/cerebriumai.md +17 -0

- langchain/docs/ecosystem/chroma.md +20 -0

- langchain/docs/ecosystem/clearml_tracking.ipynb +587 -0

- langchain/docs/ecosystem/cohere.md +25 -0

- langchain/docs/ecosystem/comet_tracking.ipynb +347 -0

- langchain/docs/ecosystem/databerry.md +25 -0

- langchain/docs/ecosystem/deepinfra.md +17 -0

- langchain/docs/ecosystem/deeplake.md +30 -0

- langchain/docs/ecosystem/forefrontai.md +16 -0

- langchain/docs/ecosystem/google_search.md +32 -0

- langchain/docs/ecosystem/google_serper.md +73 -0

- langchain/docs/ecosystem/gooseai.md +23 -0

- langchain/docs/ecosystem/gpt4all.md +48 -0

- langchain/docs/ecosystem/graphsignal.md +44 -0

- langchain/docs/ecosystem/hazy_research.md +19 -0

- langchain/docs/ecosystem/helicone.md +53 -0

- langchain/docs/ecosystem/huggingface.md +69 -0

- langchain/docs/ecosystem/jina.md +18 -0

- langchain/docs/ecosystem/lancedb.md +23 -0

- langchain/docs/ecosystem/llamacpp.md +26 -0

- langchain/docs/ecosystem/metal.md +26 -0

- langchain/docs/ecosystem/milvus.md +20 -0

- langchain/docs/ecosystem/mlflow_tracking.ipynb +172 -0

- langchain/docs/ecosystem/modal.md +66 -0

- langchain/docs/ecosystem/myscale.md +65 -0

- langchain/docs/ecosystem/nlpcloud.md +17 -0

- langchain/docs/ecosystem/openai.md +55 -0

app.py

CHANGED

|

@@ -2,7 +2,7 @@

|

|

| 2 |

# All credit goes to `vnk8071` as I mentioned in the video.

|

| 3 |

# As this code was still in the pull request while I was creating the video, did some modifications so that it works for me locally.

|

| 4 |

import os

|

| 5 |

-

os.system('pip install -e ./langchain')

|

| 6 |

import gradio as gr

|

| 7 |

from dotenv import load_dotenv

|

| 8 |

from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler

|

|

|

|

| 2 |

# All credit goes to `vnk8071` as I mentioned in the video.

|

| 3 |

# As this code was still in the pull request while I was creating the video, did some modifications so that it works for me locally.

|

| 4 |

import os

|

| 5 |

+

#os.system('pip install -e ./langchain')

|

| 6 |

import gradio as gr

|

| 7 |

from dotenv import load_dotenv

|

| 8 |

from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler

|

langchain/CITATION.cff

ADDED

|

@@ -0,0 +1,8 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

cff-version: 1.2.0

|

| 2 |

+

message: "If you use this software, please cite it as below."

|

| 3 |

+

authors:

|

| 4 |

+

- family-names: "Chase"

|

| 5 |

+

given-names: "Harrison"

|

| 6 |

+

title: "LangChain"

|

| 7 |

+

date-released: 2022-10-17

|

| 8 |

+

url: "https://github.com/hwchase17/langchain"

|

langchain/Dockerfile

ADDED

|

@@ -0,0 +1,48 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# This is a Dockerfile for running unit tests

|

| 2 |

+

|

| 3 |

+

ARG POETRY_HOME=/opt/poetry

|

| 4 |

+

|

| 5 |

+

# Use the Python base image

|

| 6 |

+

FROM python:3.11.2-bullseye AS builder

|

| 7 |

+

|

| 8 |

+

# Define the version of Poetry to install (default is 1.4.2)

|

| 9 |

+

ARG POETRY_VERSION=1.4.2

|

| 10 |

+

|

| 11 |

+

# Define the directory to install Poetry to (default is /opt/poetry)

|

| 12 |

+

ARG POETRY_HOME

|

| 13 |

+

|

| 14 |

+

# Create a Python virtual environment for Poetry and install it

|

| 15 |

+

RUN python3 -m venv ${POETRY_HOME} && \

|

| 16 |

+

$POETRY_HOME/bin/pip install --upgrade pip && \

|

| 17 |

+

$POETRY_HOME/bin/pip install poetry==${POETRY_VERSION}

|

| 18 |

+

|

| 19 |

+

# Test if Poetry is installed in the expected path

|

| 20 |

+

RUN echo "Poetry version:" && $POETRY_HOME/bin/poetry --version

|

| 21 |

+

|

| 22 |

+

# Set the working directory for the app

|

| 23 |

+

WORKDIR /app

|

| 24 |

+

|

| 25 |

+

# Use a multi-stage build to install dependencies

|

| 26 |

+

FROM builder AS dependencies

|

| 27 |

+

|

| 28 |

+

ARG POETRY_HOME

|

| 29 |

+

|

| 30 |

+

# Copy only the dependency files for installation

|

| 31 |

+

COPY pyproject.toml poetry.lock poetry.toml ./

|

| 32 |

+

|

| 33 |

+

# Install the Poetry dependencies (this layer will be cached as long as the dependencies don't change)

|

| 34 |

+

RUN $POETRY_HOME/bin/poetry install --no-interaction --no-ansi --with test

|

| 35 |

+

|

| 36 |

+

# Use a multi-stage build to run tests

|

| 37 |

+

FROM dependencies AS tests

|

| 38 |

+

|

| 39 |

+

# Copy the rest of the app source code (this layer will be invalidated and rebuilt whenever the source code changes)

|

| 40 |

+

COPY . .

|

| 41 |

+

|

| 42 |

+

RUN /opt/poetry/bin/poetry install --no-interaction --no-ansi --with test

|

| 43 |

+

|

| 44 |

+

# Set the entrypoint to run tests using Poetry

|

| 45 |

+

ENTRYPOINT ["/opt/poetry/bin/poetry", "run", "pytest"]

|

| 46 |

+

|

| 47 |

+

# Set the default command to run all unit tests

|

| 48 |

+

CMD ["tests/unit_tests"]

|

langchain/LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

The MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) Harrison Chase

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in

|

| 13 |

+

all copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN

|

| 21 |

+

THE SOFTWARE.

|

langchain/Makefile

ADDED

|

@@ -0,0 +1,70 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

.PHONY: all clean format lint test tests test_watch integration_tests docker_tests help extended_tests

|

| 2 |

+

|

| 3 |

+

all: help

|

| 4 |

+

|

| 5 |

+

coverage:

|

| 6 |

+

poetry run pytest --cov \

|

| 7 |

+

--cov-config=.coveragerc \

|

| 8 |

+

--cov-report xml \

|

| 9 |

+

--cov-report term-missing:skip-covered

|

| 10 |

+

|

| 11 |

+

clean: docs_clean

|

| 12 |

+

|

| 13 |

+

docs_build:

|

| 14 |

+

cd docs && poetry run make html

|

| 15 |

+

|

| 16 |

+

docs_clean:

|

| 17 |

+

cd docs && poetry run make clean

|

| 18 |

+

|

| 19 |

+

docs_linkcheck:

|

| 20 |

+

poetry run linkchecker docs/_build/html/index.html

|

| 21 |

+

|

| 22 |

+

format:

|

| 23 |

+

poetry run black .

|

| 24 |

+

poetry run ruff --select I --fix .

|

| 25 |

+

|

| 26 |

+

PYTHON_FILES=.

|

| 27 |

+

lint: PYTHON_FILES=.

|

| 28 |

+

lint_diff: PYTHON_FILES=$(shell git diff --name-only --diff-filter=d master | grep -E '\.py$$')

|

| 29 |

+

|

| 30 |

+

lint lint_diff:

|

| 31 |

+

poetry run mypy $(PYTHON_FILES)

|

| 32 |

+

poetry run black $(PYTHON_FILES) --check

|

| 33 |

+

poetry run ruff .

|

| 34 |

+

|

| 35 |

+

TEST_FILE ?= tests/unit_tests/

|

| 36 |

+

|

| 37 |

+

test:

|

| 38 |

+

poetry run pytest $(TEST_FILE)

|

| 39 |

+

|

| 40 |

+

tests:

|

| 41 |

+

poetry run pytest $(TEST_FILE)

|

| 42 |

+

|

| 43 |

+

extended_tests:

|

| 44 |

+

poetry run pytest --only-extended tests/unit_tests

|

| 45 |

+

|

| 46 |

+

test_watch:

|

| 47 |

+

poetry run ptw --now . -- tests/unit_tests

|

| 48 |

+

|

| 49 |

+

integration_tests:

|

| 50 |

+

poetry run pytest tests/integration_tests

|

| 51 |

+

|

| 52 |

+

docker_tests:

|

| 53 |

+

docker build -t my-langchain-image:test .

|

| 54 |

+

docker run --rm my-langchain-image:test

|

| 55 |

+

|

| 56 |

+

help:

|

| 57 |

+

@echo '----'

|

| 58 |

+

@echo 'coverage - run unit tests and generate coverage report'

|

| 59 |

+

@echo 'docs_build - build the documentation'

|

| 60 |

+

@echo 'docs_clean - clean the documentation build artifacts'

|

| 61 |

+

@echo 'docs_linkcheck - run linkchecker on the documentation'

|

| 62 |

+

@echo 'format - run code formatters'

|

| 63 |

+

@echo 'lint - run linters'

|

| 64 |

+

@echo 'test - run unit tests'

|

| 65 |

+

@echo 'test - run unit tests'

|

| 66 |

+

@echo 'test TEST_FILE=<test_file> - run all tests in file'

|

| 67 |

+

@echo 'extended_tests - run only extended unit tests'

|

| 68 |

+

@echo 'test_watch - run unit tests in watch mode'

|

| 69 |

+

@echo 'integration_tests - run integration tests'

|

| 70 |

+

@echo 'docker_tests - run unit tests in docker'

|

langchain/README.md

ADDED

|

@@ -0,0 +1,93 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# 🦜️🔗 LangChain

|

| 2 |

+

|

| 3 |

+

⚡ Building applications with LLMs through composability ⚡

|

| 4 |

+

|

| 5 |

+

[](https://github.com/hwchase17/langchain/actions/workflows/lint.yml)

|

| 6 |

+

[](https://github.com/hwchase17/langchain/actions/workflows/test.yml)

|

| 7 |

+

[](https://github.com/hwchase17/langchain/actions/workflows/linkcheck.yml)

|

| 8 |

+

[](https://pepy.tech/project/langchain)

|

| 9 |

+

[](https://opensource.org/licenses/MIT)

|

| 10 |

+

[](https://twitter.com/langchainai)

|

| 11 |

+

[](https://discord.gg/6adMQxSpJS)

|

| 12 |

+

[](https://vscode.dev/redirect?url=vscode://ms-vscode-remote.remote-containers/cloneInVolume?url=https://github.com/hwchase17/langchain)

|

| 13 |

+

[](https://codespaces.new/hwchase17/langchain)

|

| 14 |

+

[](https://star-history.com/#hwchase17/langchain)

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

Looking for the JS/TS version? Check out [LangChain.js](https://github.com/hwchase17/langchainjs).

|

| 18 |

+

|

| 19 |

+

**Production Support:** As you move your LangChains into production, we'd love to offer more comprehensive support.

|

| 20 |

+

Please fill out [this form](https://forms.gle/57d8AmXBYp8PP8tZA) and we'll set up a dedicated support Slack channel.

|

| 21 |

+

|

| 22 |

+

## Quick Install

|

| 23 |

+

|

| 24 |

+

`pip install langchain`

|

| 25 |

+

or

|

| 26 |

+

`conda install langchain -c conda-forge`

|

| 27 |

+

|

| 28 |

+

## 🤔 What is this?

|

| 29 |

+

|

| 30 |

+

Large language models (LLMs) are emerging as a transformative technology, enabling developers to build applications that they previously could not. However, using these LLMs in isolation is often insufficient for creating a truly powerful app - the real power comes when you can combine them with other sources of computation or knowledge.

|

| 31 |

+

|

| 32 |

+

This library aims to assist in the development of those types of applications. Common examples of these applications include:

|

| 33 |

+

|

| 34 |

+

**❓ Question Answering over specific documents**

|

| 35 |

+

|

| 36 |

+

- [Documentation](https://langchain.readthedocs.io/en/latest/use_cases/question_answering.html)

|

| 37 |

+

- End-to-end Example: [Question Answering over Notion Database](https://github.com/hwchase17/notion-qa)

|

| 38 |

+

|

| 39 |

+

**💬 Chatbots**

|

| 40 |

+

|

| 41 |

+

- [Documentation](https://langchain.readthedocs.io/en/latest/use_cases/chatbots.html)

|

| 42 |

+

- End-to-end Example: [Chat-LangChain](https://github.com/hwchase17/chat-langchain)

|

| 43 |

+

|

| 44 |

+

**🤖 Agents**

|

| 45 |

+

|

| 46 |

+

- [Documentation](https://langchain.readthedocs.io/en/latest/modules/agents.html)

|

| 47 |

+

- End-to-end Example: [GPT+WolframAlpha](https://huggingface.co/spaces/JavaFXpert/Chat-GPT-LangChain)

|

| 48 |

+

|

| 49 |

+

## 📖 Documentation

|

| 50 |

+

|

| 51 |

+

Please see [here](https://langchain.readthedocs.io/en/latest/?) for full documentation on:

|

| 52 |

+

|

| 53 |

+

- Getting started (installation, setting up the environment, simple examples)

|

| 54 |

+

- How-To examples (demos, integrations, helper functions)

|

| 55 |

+

- Reference (full API docs)

|

| 56 |

+

- Resources (high-level explanation of core concepts)

|

| 57 |

+

|

| 58 |

+

## 🚀 What can this help with?

|

| 59 |

+

|

| 60 |

+

There are six main areas that LangChain is designed to help with.

|

| 61 |

+

These are, in increasing order of complexity:

|

| 62 |

+

|

| 63 |

+

**📃 LLMs and Prompts:**

|

| 64 |

+

|

| 65 |

+

This includes prompt management, prompt optimization, a generic interface for all LLMs, and common utilities for working with LLMs.

|

| 66 |

+

|

| 67 |

+

**🔗 Chains:**

|

| 68 |

+

|

| 69 |

+

Chains go beyond a single LLM call and involve sequences of calls (whether to an LLM or a different utility). LangChain provides a standard interface for chains, lots of integrations with other tools, and end-to-end chains for common applications.

|

| 70 |

+

|

| 71 |

+

**📚 Data Augmented Generation:**

|

| 72 |

+

|

| 73 |

+

Data Augmented Generation involves specific types of chains that first interact with an external data source to fetch data for use in the generation step. Examples include summarization of long pieces of text and question/answering over specific data sources.

|

| 74 |

+

|

| 75 |

+

**🤖 Agents:**

|

| 76 |

+

|

| 77 |

+

Agents involve an LLM making decisions about which Actions to take, taking that Action, seeing an Observation, and repeating that until done. LangChain provides a standard interface for agents, a selection of agents to choose from, and examples of end-to-end agents.

|

| 78 |

+

|

| 79 |

+

**🧠 Memory:**

|

| 80 |

+

|

| 81 |

+

Memory refers to persisting state between calls of a chain/agent. LangChain provides a standard interface for memory, a collection of memory implementations, and examples of chains/agents that use memory.

|

| 82 |

+

|

| 83 |

+

**🧐 Evaluation:**

|

| 84 |

+

|

| 85 |

+

[BETA] Generative models are notoriously hard to evaluate with traditional metrics. One new way of evaluating them is using language models themselves to do the evaluation. LangChain provides some prompts/chains for assisting in this.

|

| 86 |

+

|

| 87 |

+

For more information on these concepts, please see our [full documentation](https://langchain.readthedocs.io/en/latest/).

|

| 88 |

+

|

| 89 |

+

## 💁 Contributing

|

| 90 |

+

|

| 91 |

+

As an open-source project in a rapidly developing field, we are extremely open to contributions, whether it be in the form of a new feature, improved infrastructure, or better documentation.

|

| 92 |

+

|

| 93 |

+

For detailed information on how to contribute, see [here](.github/CONTRIBUTING.md).

|

langchain/docs/Makefile

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Minimal makefile for Sphinx documentation

|

| 2 |

+

#

|

| 3 |

+

|

| 4 |

+

# You can set these variables from the command line, and also

|

| 5 |

+

# from the environment for the first two.

|

| 6 |

+

SPHINXOPTS ?=

|

| 7 |

+

SPHINXBUILD ?= sphinx-build

|

| 8 |

+

SPHINXAUTOBUILD ?= sphinx-autobuild

|

| 9 |

+

SOURCEDIR = .

|

| 10 |

+

BUILDDIR = _build

|

| 11 |

+

|

| 12 |

+

# Put it first so that "make" without argument is like "make help".

|

| 13 |

+

help:

|

| 14 |

+

@$(SPHINXBUILD) -M help "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

|

| 15 |

+

|

| 16 |

+

.PHONY: help Makefile

|

| 17 |

+

|

| 18 |

+

# Catch-all target: route all unknown targets to Sphinx using the new

|

| 19 |

+

# "make mode" option. $(O) is meant as a shortcut for $(SPHINXOPTS).

|

| 20 |

+

%: Makefile

|

| 21 |

+

@$(SPHINXBUILD) -M $@ "$(SOURCEDIR)" "$(BUILDDIR)" $(SPHINXOPTS) $(O)

|

langchain/docs/_static/ApifyActors.png

ADDED

|

langchain/docs/_static/DataberryDashboard.png

ADDED

|

langchain/docs/_static/HeliconeDashboard.png

ADDED

|

|

langchain/docs/_static/HeliconeKeys.png

ADDED

|

|

langchain/docs/_static/css/custom.css

ADDED

|

@@ -0,0 +1,17 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

pre {

|

| 2 |

+

white-space: break-spaces;

|

| 3 |

+

}

|

| 4 |

+

|

| 5 |

+

@media (min-width: 1200px) {

|

| 6 |

+

.container,

|

| 7 |

+

.container-lg,

|

| 8 |

+

.container-md,

|

| 9 |

+

.container-sm,

|

| 10 |

+

.container-xl {

|

| 11 |

+

max-width: 2560px !important;

|

| 12 |

+

}

|

| 13 |

+

}

|

| 14 |

+

|

| 15 |

+

#my-component-root *, #headlessui-portal-root * {

|

| 16 |

+

z-index: 1000000000000;

|

| 17 |

+

}

|

langchain/docs/_static/js/mendablesearch.js

ADDED

|

@@ -0,0 +1,58 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

document.addEventListener('DOMContentLoaded', () => {

|

| 2 |

+

// Load the external dependencies

|

| 3 |

+

function loadScript(src, onLoadCallback) {

|

| 4 |

+

const script = document.createElement('script');

|

| 5 |

+

script.src = src;

|

| 6 |

+

script.onload = onLoadCallback;

|

| 7 |

+

document.head.appendChild(script);

|

| 8 |

+

}

|

| 9 |

+

|

| 10 |

+

function createRootElement() {

|

| 11 |

+

const rootElement = document.createElement('div');

|

| 12 |

+

rootElement.id = 'my-component-root';

|

| 13 |

+

document.body.appendChild(rootElement);

|

| 14 |

+

return rootElement;

|

| 15 |

+

}

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

function initializeMendable() {

|

| 20 |

+

const rootElement = createRootElement();

|

| 21 |

+

const { MendableFloatingButton } = Mendable;

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

const iconSpan1 = React.createElement('span', {

|

| 25 |

+

}, '🦜');

|

| 26 |

+

|

| 27 |

+

const iconSpan2 = React.createElement('span', {

|

| 28 |

+

}, '🔗');

|

| 29 |

+

|

| 30 |

+

const icon = React.createElement('p', {

|

| 31 |

+

style: { color: '#ffffff', fontSize: '22px',width: '48px', height: '48px', margin: '0px', padding: '0px', display: 'flex', alignItems: 'center', justifyContent: 'center', textAlign: 'center' },

|

| 32 |

+

}, [iconSpan1, iconSpan2]);

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

const mendableFloatingButton = React.createElement(

|

| 38 |

+

MendableFloatingButton,

|

| 39 |

+

{

|

| 40 |

+

style: { darkMode: false, accentColor: '#010810' },

|

| 41 |

+

floatingButtonStyle: { color: '#ffffff', backgroundColor: '#010810' },

|

| 42 |

+

anon_key: '82842b36-3ea6-49b2-9fb8-52cfc4bde6bf', // Mendable Search Public ANON key, ok to be public

|

| 43 |

+

messageSettings: {

|

| 44 |

+

openSourcesInNewTab: false,

|

| 45 |

+

},

|

| 46 |

+

icon: icon,

|

| 47 |

+

}

|

| 48 |

+

);

|

| 49 |

+

|

| 50 |

+

ReactDOM.render(mendableFloatingButton, rootElement);

|

| 51 |

+

}

|

| 52 |

+

|

| 53 |

+

loadScript('https://unpkg.com/react@17/umd/react.production.min.js', () => {

|

| 54 |

+

loadScript('https://unpkg.com/react-dom@17/umd/react-dom.production.min.js', () => {

|

| 55 |

+

loadScript('https://unpkg.com/@mendable/[email protected]/dist/umd/mendable.min.js', initializeMendable);

|

| 56 |

+

});

|

| 57 |

+

});

|

| 58 |

+

});

|

langchain/docs/conf.py

ADDED

|

@@ -0,0 +1,112 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""Configuration file for the Sphinx documentation builder."""

|

| 2 |

+

# Configuration file for the Sphinx documentation builder.

|

| 3 |

+

#

|

| 4 |

+

# This file only contains a selection of the most common options. For a full

|

| 5 |

+

# list see the documentation:

|

| 6 |

+

# https://www.sphinx-doc.org/en/master/usage/configuration.html

|

| 7 |

+

|

| 8 |

+

# -- Path setup --------------------------------------------------------------

|

| 9 |

+

|

| 10 |

+

# If extensions (or modules to document with autodoc) are in another directory,

|

| 11 |

+

# add these directories to sys.path here. If the directory is relative to the

|

| 12 |

+

# documentation root, use os.path.abspath to make it absolute, like shown here.

|

| 13 |

+

#

|

| 14 |

+

# import os

|

| 15 |

+

# import sys

|

| 16 |

+

# sys.path.insert(0, os.path.abspath('.'))

|

| 17 |

+

|

| 18 |

+

import toml

|

| 19 |

+

|

| 20 |

+

with open("../pyproject.toml") as f:

|

| 21 |

+

data = toml.load(f)

|

| 22 |

+

|

| 23 |

+

# -- Project information -----------------------------------------------------

|

| 24 |

+

|

| 25 |

+

project = "🦜🔗 LangChain"

|

| 26 |

+

copyright = "2023, Harrison Chase"

|

| 27 |

+

author = "Harrison Chase"

|

| 28 |

+

|

| 29 |

+

version = data["tool"]["poetry"]["version"]

|

| 30 |

+

release = version

|

| 31 |

+

|

| 32 |

+

html_title = project + " " + version

|

| 33 |

+

html_last_updated_fmt = "%b %d, %Y"

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

# -- General configuration ---------------------------------------------------

|

| 37 |

+

|

| 38 |

+

# Add any Sphinx extension module names here, as strings. They can be

|

| 39 |

+

# extensions coming with Sphinx (named 'sphinx.ext.*') or your custom

|

| 40 |

+

# ones.

|

| 41 |

+

extensions = [

|

| 42 |

+

"sphinx.ext.autodoc",

|

| 43 |

+

"sphinx.ext.autodoc.typehints",

|

| 44 |

+

"sphinx.ext.autosummary",

|

| 45 |

+

"sphinx.ext.napoleon",

|

| 46 |

+

"sphinx.ext.viewcode",

|

| 47 |

+

"sphinxcontrib.autodoc_pydantic",

|

| 48 |

+

"myst_nb",

|

| 49 |

+

"sphinx_copybutton",

|

| 50 |

+

"sphinx_panels",

|

| 51 |

+

"IPython.sphinxext.ipython_console_highlighting",

|

| 52 |

+

]

|

| 53 |

+

source_suffix = [".ipynb", ".html", ".md", ".rst"]

|

| 54 |

+

|

| 55 |

+

autodoc_pydantic_model_show_json = False

|

| 56 |

+

autodoc_pydantic_field_list_validators = False

|

| 57 |

+

autodoc_pydantic_config_members = False

|

| 58 |

+

autodoc_pydantic_model_show_config_summary = False

|

| 59 |

+

autodoc_pydantic_model_show_validator_members = False

|

| 60 |

+

autodoc_pydantic_model_show_field_summary = False

|

| 61 |

+

autodoc_pydantic_model_members = False

|

| 62 |

+

autodoc_pydantic_model_undoc_members = False

|

| 63 |

+

# autodoc_typehints = "signature"

|

| 64 |

+

# autodoc_typehints = "description"

|

| 65 |

+

|

| 66 |

+

# Add any paths that contain templates here, relative to this directory.

|

| 67 |

+

templates_path = ["_templates"]

|

| 68 |

+

|

| 69 |

+

# List of patterns, relative to source directory, that match files and

|

| 70 |

+

# directories to ignore when looking for source files.

|

| 71 |

+

# This pattern also affects html_static_path and html_extra_path.

|

| 72 |

+

exclude_patterns = ["_build", "Thumbs.db", ".DS_Store"]

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

# -- Options for HTML output -------------------------------------------------

|

| 76 |

+

|

| 77 |

+

# The theme to use for HTML and HTML Help pages. See the documentation for

|

| 78 |

+

# a list of builtin themes.

|

| 79 |

+

#

|

| 80 |

+

html_theme = "sphinx_book_theme"

|

| 81 |

+

|

| 82 |

+

html_theme_options = {

|

| 83 |

+

"path_to_docs": "docs",

|

| 84 |

+

"repository_url": "https://github.com/hwchase17/langchain",

|

| 85 |

+

"use_repository_button": True,

|

| 86 |

+

}

|

| 87 |

+

|

| 88 |

+

html_context = {

|

| 89 |

+

"display_github": True, # Integrate GitHub

|

| 90 |

+

"github_user": "hwchase17", # Username

|

| 91 |

+

"github_repo": "langchain", # Repo name

|

| 92 |

+

"github_version": "master", # Version

|

| 93 |

+

"conf_py_path": "/docs/", # Path in the checkout to the docs root

|

| 94 |

+

}

|

| 95 |

+

|

| 96 |

+

# Add any paths that contain custom static files (such as style sheets) here,

|

| 97 |

+

# relative to this directory. They are copied after the builtin static files,

|

| 98 |

+

# so a file named "default.css" will overwrite the builtin "default.css".

|

| 99 |

+

html_static_path = ["_static"]

|

| 100 |

+

|

| 101 |

+

# These paths are either relative to html_static_path

|

| 102 |

+

# or fully qualified paths (eg. https://...)

|

| 103 |

+

html_css_files = [

|

| 104 |

+

"css/custom.css",

|

| 105 |

+

]

|

| 106 |

+

|

| 107 |

+

html_js_files = [

|

| 108 |

+

"js/mendablesearch.js",

|

| 109 |

+

]

|

| 110 |

+

|

| 111 |

+

nb_execution_mode = "off"

|

| 112 |

+

myst_enable_extensions = ["colon_fence"]

|

langchain/docs/deployments.md

ADDED

|

@@ -0,0 +1,62 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Deployments

|

| 2 |

+

|

| 3 |

+

So, you've created a really cool chain - now what? How do you deploy it and make it easily shareable with the world?

|

| 4 |

+

|

| 5 |

+

This section covers several options for that. Note that these options are meant for quick deployment of prototypes and demos, not for production systems. If you need help with the deployment of a production system, please contact us directly.

|

| 6 |

+

|

| 7 |

+

What follows is a list of template GitHub repositories designed to be easily forked and modified to use your chain. This list is far from exhaustive, and we are EXTREMELY open to contributions here.

|

| 8 |

+

|

| 9 |

+

## [Streamlit](https://github.com/hwchase17/langchain-streamlit-template)

|

| 10 |

+

|

| 11 |

+

This repo serves as a template for how to deploy a LangChain with Streamlit.

|

| 12 |

+

It implements a chatbot interface.

|

| 13 |

+

It also contains instructions for how to deploy this app on the Streamlit platform.

|

| 14 |

+

|

| 15 |

+

## [Gradio (on Hugging Face)](https://github.com/hwchase17/langchain-gradio-template)

|

| 16 |

+

|

| 17 |

+

This repo serves as a template for how deploy a LangChain with Gradio.

|

| 18 |

+

It implements a chatbot interface, with a "Bring-Your-Own-Token" approach (nice for not wracking up big bills).

|

| 19 |

+

It also contains instructions for how to deploy this app on the Hugging Face platform.

|

| 20 |

+

This is heavily influenced by James Weaver's [excellent examples](https://huggingface.co/JavaFXpert).

|

| 21 |

+

|

| 22 |

+

## [Beam](https://github.com/slai-labs/get-beam/tree/main/examples/langchain-question-answering)

|

| 23 |

+

|

| 24 |

+

This repo serves as a template for how deploy a LangChain with [Beam](https://beam.cloud).

|

| 25 |

+

|

| 26 |

+

It implements a Question Answering app and contains instructions for deploying the app as a serverless REST API.

|

| 27 |

+

|

| 28 |

+

## [Vercel](https://github.com/homanp/vercel-langchain)

|

| 29 |

+

|

| 30 |

+

A minimal example on how to run LangChain on Vercel using Flask.

|

| 31 |

+

|

| 32 |

+

## [Kinsta](https://github.com/kinsta/hello-world-langchain)

|

| 33 |

+

|

| 34 |

+

A minimal example on how to deploy LangChain to [Kinsta](https://kinsta.com) using Flask.

|

| 35 |

+

|

| 36 |

+

## [Fly.io](https://github.com/fly-apps/hello-fly-langchain)

|

| 37 |

+

|

| 38 |

+

A minimal example of how to deploy LangChain to [Fly.io](https://fly.io/) using Flask.

|

| 39 |

+

|

| 40 |

+

## [Digitalocean App Platform](https://github.com/homanp/digitalocean-langchain)

|

| 41 |

+

|

| 42 |

+

A minimal example on how to deploy LangChain to DigitalOcean App Platform.

|

| 43 |

+

|

| 44 |

+

## [Google Cloud Run](https://github.com/homanp/gcp-langchain)

|

| 45 |

+

|

| 46 |

+

A minimal example on how to deploy LangChain to Google Cloud Run.

|

| 47 |

+

|

| 48 |

+

## [SteamShip](https://github.com/steamship-core/steamship-langchain/)

|

| 49 |

+

|

| 50 |

+

This repository contains LangChain adapters for Steamship, enabling LangChain developers to rapidly deploy their apps on Steamship. This includes: production-ready endpoints, horizontal scaling across dependencies, persistent storage of app state, multi-tenancy support, etc.

|

| 51 |

+

|

| 52 |

+

## [Langchain-serve](https://github.com/jina-ai/langchain-serve)

|

| 53 |

+

|

| 54 |

+

This repository allows users to serve local chains and agents as RESTful, gRPC, or WebSocket APIs, thanks to [Jina](https://docs.jina.ai/). Deploy your chains & agents with ease and enjoy independent scaling, serverless and autoscaling APIs, as well as a Streamlit playground on Jina AI Cloud.

|

| 55 |

+

|

| 56 |

+

## [BentoML](https://github.com/ssheng/BentoChain)

|

| 57 |

+

|

| 58 |

+

This repository provides an example of how to deploy a LangChain application with [BentoML](https://github.com/bentoml/BentoML). BentoML is a framework that enables the containerization of machine learning applications as standard OCI images. BentoML also allows for the automatic generation of OpenAPI and gRPC endpoints. With BentoML, you can integrate models from all popular ML frameworks and deploy them as microservices running on the most optimal hardware and scaling independently.

|

| 59 |

+

|

| 60 |

+

## [Databutton](https://databutton.com/home?new-data-app=true)

|

| 61 |

+

|

| 62 |

+

These templates serve as examples of how to build, deploy, and share LangChain applications using Databutton. You can create user interfaces with Streamlit, automate tasks by scheduling Python code, and store files and data in the built-in store. Examples include a Chatbot interface with conversational memory, a Personal search engine, and a starter template for LangChain apps. Deploying and sharing is just one click away.

|

langchain/docs/ecosystem.rst

ADDED

|

@@ -0,0 +1,29 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

LangChain Ecosystem

|

| 2 |

+

===================

|

| 3 |

+

|

| 4 |

+

Guides for how other companies/products can be used with LangChain

|

| 5 |

+

|

| 6 |

+

Groups

|

| 7 |

+

----------

|

| 8 |

+

|

| 9 |

+

LangChain provides integration with many LLMs and systems:

|

| 10 |

+

|

| 11 |

+

- `LLM Providers <./modules/models/llms/integrations.html>`_

|

| 12 |

+

- `Chat Model Providers <./modules/models/chat/integrations.html>`_

|

| 13 |

+

- `Text Embedding Model Providers <./modules/models/text_embedding.html>`_

|

| 14 |

+

- `Document Loader Integrations <./modules/indexes/document_loaders.html>`_

|

| 15 |

+

- `Text Splitter Integrations <./modules/indexes/text_splitters.html>`_

|

| 16 |

+

- `Vectorstore Providers <./modules/indexes/vectorstores.html>`_

|

| 17 |

+

- `Retriever Providers <./modules/indexes/retrievers.html>`_

|

| 18 |

+

- `Tool Providers <./modules/agents/tools.html>`_

|

| 19 |

+

- `Toolkit Integrations <./modules/agents/toolkits.html>`_

|

| 20 |

+

|

| 21 |

+

Companies / Products

|

| 22 |

+

----------

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

.. toctree::

|

| 26 |

+

:maxdepth: 1

|

| 27 |

+

:glob:

|

| 28 |

+

|

| 29 |

+

ecosystem/*

|

langchain/docs/ecosystem/ai21.md

ADDED

|

@@ -0,0 +1,16 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# AI21 Labs

|

| 2 |

+

|

| 3 |

+

This page covers how to use the AI21 ecosystem within LangChain.

|

| 4 |

+

It is broken into two parts: installation and setup, and then references to specific AI21 wrappers.

|

| 5 |

+

|

| 6 |

+

## Installation and Setup

|

| 7 |

+

- Get an AI21 api key and set it as an environment variable (`AI21_API_KEY`)

|

| 8 |

+

|

| 9 |

+

## Wrappers

|

| 10 |

+

|

| 11 |

+

### LLM

|

| 12 |

+

|

| 13 |

+

There exists an AI21 LLM wrapper, which you can access with

|

| 14 |

+

```python

|

| 15 |

+

from langchain.llms import AI21

|

| 16 |

+

```

|

langchain/docs/ecosystem/aim_tracking.ipynb

ADDED

|

@@ -0,0 +1,291 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"metadata": {},

|

| 6 |

+

"source": [

|

| 7 |

+

"# Aim\n",

|

| 8 |

+

"\n",

|

| 9 |

+

"Aim makes it super easy to visualize and debug LangChain executions. Aim tracks inputs and outputs of LLMs and tools, as well as actions of agents. \n",

|

| 10 |

+

"\n",

|

| 11 |

+

"With Aim, you can easily debug and examine an individual execution:\n",

|

| 12 |

+

"\n",

|

| 13 |

+

"\n",

|

| 14 |

+

"\n",

|

| 15 |

+

"Additionally, you have the option to compare multiple executions side by side:\n",

|

| 16 |

+

"\n",

|

| 17 |

+

"\n",

|

| 18 |

+

"\n",

|

| 19 |

+

"Aim is fully open source, [learn more](https://github.com/aimhubio/aim) about Aim on GitHub.\n",

|

| 20 |

+

"\n",

|

| 21 |

+

"Let's move forward and see how to enable and configure Aim callback."

|

| 22 |

+

]

|

| 23 |

+

},

|

| 24 |

+

{

|

| 25 |

+

"cell_type": "markdown",

|

| 26 |

+

"metadata": {},

|

| 27 |

+

"source": [

|

| 28 |

+

"<h3>Tracking LangChain Executions with Aim</h3>"

|

| 29 |

+

]

|

| 30 |

+

},

|

| 31 |

+

{

|

| 32 |

+

"cell_type": "markdown",

|

| 33 |

+

"metadata": {},

|

| 34 |

+

"source": [

|

| 35 |

+

"In this notebook we will explore three usage scenarios. To start off, we will install the necessary packages and import certain modules. Subsequently, we will configure two environment variables that can be established either within the Python script or through the terminal."

|

| 36 |

+

]

|

| 37 |

+

},

|

| 38 |

+

{

|

| 39 |

+

"cell_type": "code",

|

| 40 |

+

"execution_count": null,

|

| 41 |

+

"metadata": {

|

| 42 |

+

"id": "mf88kuCJhbVu"

|

| 43 |

+

},

|

| 44 |

+

"outputs": [],

|

| 45 |

+

"source": [

|

| 46 |

+

"!pip install aim\n",

|

| 47 |

+

"!pip install langchain\n",

|

| 48 |

+

"!pip install openai\n",

|

| 49 |

+

"!pip install google-search-results"

|

| 50 |

+

]

|

| 51 |

+

},

|

| 52 |

+

{

|

| 53 |

+

"cell_type": "code",

|

| 54 |

+

"execution_count": null,

|

| 55 |

+

"metadata": {

|

| 56 |

+

"id": "g4eTuajwfl6L"

|

| 57 |

+

},

|

| 58 |

+

"outputs": [],

|

| 59 |

+

"source": [

|

| 60 |

+

"import os\n",

|

| 61 |

+

"from datetime import datetime\n",

|

| 62 |

+

"\n",

|

| 63 |

+

"from langchain.llms import OpenAI\n",

|

| 64 |

+

"from langchain.callbacks import AimCallbackHandler, StdOutCallbackHandler"

|

| 65 |

+

]

|

| 66 |

+

},

|

| 67 |

+

{

|

| 68 |

+

"cell_type": "markdown",

|

| 69 |

+

"metadata": {},

|

| 70 |

+

"source": [

|

| 71 |

+

"Our examples use a GPT model as the LLM, and OpenAI offers an API for this purpose. You can obtain the key from the following link: https://platform.openai.com/account/api-keys .\n",

|

| 72 |

+

"\n",

|

| 73 |

+

"We will use the SerpApi to retrieve search results from Google. To acquire the SerpApi key, please go to https://serpapi.com/manage-api-key ."

|

| 74 |

+

]

|

| 75 |

+

},

|

| 76 |

+

{

|

| 77 |

+

"cell_type": "code",

|

| 78 |

+

"execution_count": null,

|

| 79 |

+

"metadata": {

|

| 80 |

+

"id": "T1bSmKd6V2If"

|

| 81 |

+

},

|

| 82 |

+

"outputs": [],

|

| 83 |

+

"source": [

|

| 84 |

+

"os.environ[\"OPENAI_API_KEY\"] = \"...\"\n",

|

| 85 |

+

"os.environ[\"SERPAPI_API_KEY\"] = \"...\""

|

| 86 |

+

]

|

| 87 |

+

},

|

| 88 |

+

{

|

| 89 |

+

"cell_type": "markdown",

|

| 90 |

+

"metadata": {

|

| 91 |

+

"id": "QenUYuBZjIzc"

|

| 92 |

+

},

|

| 93 |

+

"source": [

|

| 94 |

+

"The event methods of `AimCallbackHandler` accept the LangChain module or agent as input and log at least the prompts and generated results, as well as the serialized version of the LangChain module, to the designated Aim run."

|

| 95 |

+

]

|

| 96 |

+

},

|

| 97 |

+

{

|

| 98 |

+

"cell_type": "code",

|

| 99 |

+

"execution_count": null,

|

| 100 |

+

"metadata": {

|

| 101 |

+

"id": "KAz8weWuUeXF"

|

| 102 |

+

},

|

| 103 |

+

"outputs": [],

|

| 104 |

+

"source": [

|

| 105 |

+

"session_group = datetime.now().strftime(\"%m.%d.%Y_%H.%M.%S\")\n",

|

| 106 |

+

"aim_callback = AimCallbackHandler(\n",

|

| 107 |

+

" repo=\".\",\n",

|

| 108 |

+

" experiment_name=\"scenario 1: OpenAI LLM\",\n",

|

| 109 |

+

")\n",

|

| 110 |

+

"\n",

|

| 111 |

+

"callbacks = [StdOutCallbackHandler(), aim_callback]\n",

|

| 112 |

+

"llm = OpenAI(temperature=0, callbacks=callbacks)"

|

| 113 |

+

]

|

| 114 |

+

},

|

| 115 |

+

{

|

| 116 |

+

"cell_type": "markdown",

|

| 117 |

+

"metadata": {

|

| 118 |

+

"id": "b8WfByB4fl6N"

|

| 119 |

+

},

|

| 120 |

+

"source": [

|

| 121 |

+

"The `flush_tracker` function is used to record LangChain assets on Aim. By default, the session is reset rather than being terminated outright."

|

| 122 |

+

]

|

| 123 |

+

},

|

| 124 |

+

{

|

| 125 |

+

"cell_type": "markdown",

|

| 126 |

+

"metadata": {},

|

| 127 |

+

"source": [

|

| 128 |

+

"<h3>Scenario 1</h3> In the first scenario, we will use OpenAI LLM."

|

| 129 |

+

]

|

| 130 |

+

},

|

| 131 |

+

{

|

| 132 |

+

"cell_type": "code",

|

| 133 |

+

"execution_count": null,

|

| 134 |

+

"metadata": {

|

| 135 |

+

"id": "o_VmneyIUyx8"

|

| 136 |

+

},

|

| 137 |

+

"outputs": [],

|

| 138 |

+

"source": [

|

| 139 |

+

"# scenario 1 - LLM\n",

|

| 140 |

+

"llm_result = llm.generate([\"Tell me a joke\", \"Tell me a poem\"] * 3)\n",

|

| 141 |

+

"aim_callback.flush_tracker(\n",

|

| 142 |

+

" langchain_asset=llm,\n",

|

| 143 |

+

" experiment_name=\"scenario 2: Chain with multiple SubChains on multiple generations\",\n",

|

| 144 |

+

")\n"

|

| 145 |

+

]

|

| 146 |

+

},

|

| 147 |

+

{

|

| 148 |

+

"cell_type": "markdown",

|

| 149 |

+

"metadata": {},

|

| 150 |

+

"source": [

|

| 151 |

+

"<h3>Scenario 2</h3> Scenario two involves chaining with multiple SubChains across multiple generations."

|

| 152 |

+

]

|

| 153 |

+

},

|

| 154 |

+

{

|

| 155 |

+

"cell_type": "code",

|

| 156 |

+

"execution_count": null,

|

| 157 |

+

"metadata": {

|

| 158 |

+

"id": "trxslyb1U28Y"

|

| 159 |

+

},

|

| 160 |

+

"outputs": [],

|

| 161 |

+

"source": [

|

| 162 |

+

"from langchain.prompts import PromptTemplate\n",

|

| 163 |

+

"from langchain.chains import LLMChain"

|

| 164 |

+

]

|

| 165 |

+

},

|

| 166 |

+

{

|

| 167 |

+

"cell_type": "code",

|

| 168 |

+

"execution_count": null,

|

| 169 |

+

"metadata": {

|

| 170 |

+

"id": "uauQk10SUzF6"

|

| 171 |

+

},

|

| 172 |

+

"outputs": [],

|

| 173 |

+

"source": [

|

| 174 |

+

"# scenario 2 - Chain\n",

|

| 175 |

+

"template = \"\"\"You are a playwright. Given the title of play, it is your job to write a synopsis for that title.\n",

|

| 176 |

+

"Title: {title}\n",

|

| 177 |

+

"Playwright: This is a synopsis for the above play:\"\"\"\n",

|

| 178 |

+