Upload folder using huggingface_hub

This view is limited to 50 files because it contains too many changes.

- .DS_Store +0 -0

- .gitattributes +1 -0

- .github/workflows/update_space.yml +28 -0

- .ipynb_checkpoints/CGI_Classification_by_Fourier_Embeddings-checkpoint.ipynb +725 -0

- .jupyter/desktop-workspaces/default-37a8.jupyterlab-workspace +1 -0

- Archive.zip +3 -0

- CGI/.DS_Store +0 -0

- CGI/a082.jpg +0 -0

- CGI/abraao-segundo-conan-face-3k.jpg +0 -0

- CGI/adam-fisher-afisher-ahsoka-01.jpg +0 -0

- CGI/adam-fisher-afisher-asajjventres-final01.jpg +0 -0

- CGI/adam-fisher-afisher-mib-zb-02.jpg +0 -0

- CGI/adam-fisher-afisher-priestess01.jpg +0 -0

- CGI/adam-o-donnell-portrait-mainlight.jpg +0 -0

- CGI/afhnts-s-show07.jpg +0 -0

- CGI/afhnts-s-show08.jpg +0 -0

- CGI/alessandro-mastronardi-dwarfiewhite-topaz.jpg +0 -0

- CGI/alessandro-mastronardi-popup-01.jpg +0 -0

- CGI/alex-coman-slug-beach-combined.jpg +0 -0

- CGI/alex-lucas-sun-worm-002.jpg +0 -0

- CGI/alex-lucas-sun-worm-008.jpg +0 -0

- CGI/alex-pi-final-01.jpg +0 -0

- CGI/alex-pi-hangar-robots-alex-pi.jpg +0 -0

- CGI/alex-pi-ruins-ancient-civilization-final-01.jpg +0 -0

- CGI/alex-pi-temple-on-the-planet-582-73-final.jpg +0 -0

- CGI/alex-savelev-samurai-alex-saveliev-front.jpg +0 -0

- CGI/alexandre-corbini-goth-princess-03.jpg +0 -0

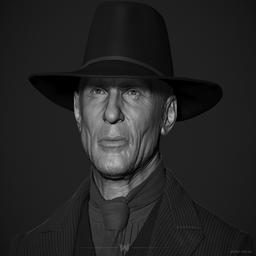

- CGI/andor-kollar-andorkollar-malehead1.jpg +0 -0

- CGI/andrea-bertaccini-01-lookdev-006.jpg +0 -0

- CGI/andrea-bertaccini-lorane-21-post.jpg +0 -0

- CGI/andrew-ariza-main-5.jpg +0 -0

- CGI/andrew-averkin-train-01.jpg +0 -0

- CGI/anthony-catillaz-artico-luminos-design-a-black-spider-man-looking-over-the-rainy-fd96ccx-e05f-460e-abcc-4a1462881264.jpg +0 -0

- CGI/antoine-collignon-1.jpg +0 -0

- CGI/antoine-collignon-final-piece.jpg +0 -0

- CGI/antoine-di-lorenzo-imperfectmechacell01.jpg +0 -0

- CGI/antoine-verney-carron-elephantasian03f01.jpg +0 -0

- CGI/aobo-li-light04.jpg +0 -0

- CGI/april-ed6705a8f03679c5e8012dc7d2cd02e4.jpg +0 -0

- CGI/arthur-yuan-rl-bachi-statue.jpg +0 -0

- CGI/arthur-yuan-rl-concept-environment-ukigumo-mountain-town.jpg +0 -0

- CGI/artur-tarnowski-1-girl-beauty-1920compr.jpg +0 -0

- CGI/artur-tarnowski-girl-prev-131-post-jpg.jpg +0 -0

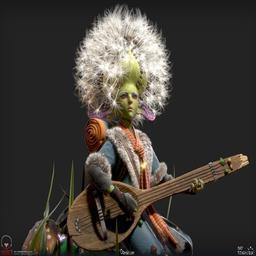

- CGI/baj-singh-dande-rend01.jpg +0 -0

- CGI/baolong-zhang-goblin-7.jpg +0 -0

- CGI/baolong-zhang-render37b-small2.jpg +0 -0

- CGI/baolong-zhang-sirus-closeup02.jpg +0 -0

- CGI/baolong-zhang-w-113.jpg +0 -0

- CGI/ben-erdt-gren-rnd-l.jpg +0 -0

- CGI/bora-kim-1.jpg +0 -0

.DS_Store

ADDED

Binary file (14.3 kB).

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text


| 36 |

+

embedding_modelv2.keras filter=lfs diff=lfs merge=lfs -text

|

.github/workflows/update_space.yml

ADDED

|

@@ -0,0 +1,28 @@


| 1 |

+

name: Run Python script

|

| 2 |

+

|

| 3 |

+

on:

|

| 4 |

+

push:

|

| 5 |

+

branches:

|

| 6 |

+

- main

|

| 7 |

+

|

| 8 |

+

jobs:

|

| 9 |

+

build:

|

| 10 |

+

runs-on: ubuntu-latest

|

| 11 |

+

|

| 12 |

+

steps:

|

| 13 |

+

- name: Checkout

|

| 14 |

+

uses: actions/checkout@v2

|

| 15 |

+

|

| 16 |

+

- name: Set up Python

|

| 17 |

+

uses: actions/setup-python@v2

|

| 18 |

+

with:

|

| 19 |

+

python-version: '3.9'

|

| 20 |

+

|

| 21 |

+

- name: Install Gradio

|

| 22 |

+

run: python -m pip install gradio

|

| 23 |

+

|

| 24 |

+

- name: Log in to Hugging Face

|

| 25 |

+

run: python -c 'import huggingface_hub; huggingface_hub.login(token="${{ secrets.hf_token }}")'

|

| 26 |

+

|

| 27 |

+

- name: Deploy to Spaces

|

| 28 |

+

run: gradio deploy

|

.ipynb_checkpoints/CGI_Classification_by_Fourier_Embeddings-checkpoint.ipynb

ADDED

|

@@ -0,0 +1,725 @@


| 1 |

+

{

|

| 2 |

+

"nbformat": 4,

|

| 3 |

+

"nbformat_minor": 0,

|

| 4 |

+

"metadata": {

|

| 5 |

+

"colab": {

|

| 6 |

+

"private_outputs": true,

|

| 7 |

+

"provenance": [],

|

| 8 |

+

"machine_shape": "hm"

|

| 9 |

+

},

|

| 10 |

+

"kernelspec": {

|

| 11 |

+

"name": "python3",

|

| 12 |

+

"display_name": "Python 3"

|

| 13 |

+

},

|

| 14 |

+

"language_info": {

|

| 15 |

+

"name": "python"

|

| 16 |

+

}

|

| 17 |

+

},

|

| 18 |

+

"cells": [

|

| 19 |

+

{

|

| 20 |

+

"cell_type": "markdown",

|

| 21 |

+

"source": [

|

| 22 |

+

"### Data Preprocessing"

|

| 23 |

+

],

|

| 24 |

+

"metadata": {

|

| 25 |

+

"id": "-dt9JrHpxRNH"

|

| 26 |

+

}

|

| 27 |

+

},

|

| 28 |

+

{

|

| 29 |

+

"cell_type": "code",

|

| 30 |

+

"source": [

|

| 31 |

+

"import os\n",

|

| 32 |

+

"import cv2\n",

|

| 33 |

+

"import numpy as np\n",

|

| 34 |

+

"import matplotlib.pyplot as plt\n",

|

| 35 |

+

"from sklearn.manifold import TSNE\n",

|

| 36 |

+

"from sklearn.model_selection import train_test_split, cross_val_score, StratifiedKFold\n",

|

| 37 |

+

"from sklearn.metrics import accuracy_score, f1_score, confusion_matrix\n",

|

| 38 |

+

"from sklearn.neighbors import KNeighborsClassifier\n",

|

| 39 |

+

"from xgboost import XGBClassifier\n",

|

| 40 |

+

"from sklearn.decomposition import PCA\n",

|

| 41 |

+

"from sklearn.ensemble import RandomForestClassifier\n",

|

| 42 |

+

"from sklearn.decomposition import PCA\n",

|

| 43 |

+

"from scipy.spatial import distance\n",

|

| 44 |

+

"from collections import Counter\n",

|

| 45 |

+

"import seaborn as sns\n",

|

| 46 |

+

"import joblib"

|

| 47 |

+

],

|

| 48 |

+

"metadata": {

|

| 49 |

+

"id": "dHy-E-RQlDoj"

|

| 50 |

+

},

|

| 51 |

+

"execution_count": null,

|

| 52 |

+

"outputs": []

|

| 53 |

+

},

|

| 54 |

+

{

|

| 55 |

+

"cell_type": "code",

|

| 56 |

+

"source": [

|

| 57 |

+

"# Evaluate classifiers\n",

|

| 58 |

+

"def evaluate_classifier(y_true, y_pred, classifier_name):\n",

|

| 59 |

+

" acc = accuracy_score(y_true, y_pred)\n",

|

| 60 |

+

" f1 = f1_score(y_true, y_pred)\n",

|

| 61 |

+

" cm = confusion_matrix(y_true, y_pred)\n",

|

| 62 |

+

" print(f\"{classifier_name} - Accuracy: {acc:.4f}, F1 Score: {f1:.4f}\")\n",

|

| 63 |

+

" print(f\"Confusion Matrix:\\n{cm}\\n\")\n",

|

| 64 |

+

"\n",

|

| 65 |

+

" plt.figure(figsize=(8, 6))\n",

|

| 66 |

+

" sns.heatmap(cm, annot=True, fmt='d', cmap='Blues', xticklabels=['Real Photo', 'CGI'], yticklabels=['Real Photo', 'CGI'])\n",

|

| 67 |

+

" plt.title(f'Confusion Matrix for {classifier_name}')\n",

|

| 68 |

+

" plt.xlabel('Predicted Labels')\n",

|

| 69 |

+

" plt.ylabel('True Labels')\n",

|

| 70 |

+

" plt.show()"

|

| 71 |

+

],

|

| 72 |

+

"metadata": {

|

| 73 |

+

"id": "60Rkg6uR5oyS"

|

| 74 |

+

},

|

| 75 |

+

"execution_count": null,

|

| 76 |

+

"outputs": []

|

| 77 |

+

},

|
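
The `evaluate_classifier` helper above relies on scikit-learn for accuracy, F1, and the confusion matrix. As a rough illustration of what those numbers mean, the following dependency-free sketch recomputes them from plain label lists. The function names `confusion_counts` and `accuracy_and_f1` are hypothetical and do not appear in the notebook; label 1 is assumed to be the positive class (CGI), matching the notebook's labelling.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tn, fp, fn, tp) for binary labels.

    The order mirrors scikit-learn's 2x2 confusion matrix read row by row:
    rows are true labels, columns are predicted labels.
    """
    tn = fp = fn = tp = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == positive and pred == positive:
            tp += 1
        elif truth == positive:
            fn += 1
        elif pred == positive:
            fp += 1
        else:
            tn += 1
    return tn, fp, fn, tp


def accuracy_and_f1(y_true, y_pred, positive=1):
    """Accuracy and F1 for the positive class, computed from raw counts."""
    tn, fp, fn, tp = confusion_counts(y_true, y_pred, positive)
    total = tn + fp + fn + tp
    accuracy = (tp + tn) / total if total else 0.0
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return accuracy, f1
```

Because F1 ignores true negatives, it can differ noticeably from accuracy when the Real Photo and CGI classes are unbalanced, which is one reason to report both.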

| 78 |

+

{

|

| 79 |

+

"cell_type": "code",

|

| 80 |

+

"source": [

|

| 81 |

+

"import numpy as np\n",

|

| 82 |

+

"from PIL import Image\n",

|

| 83 |

+

"from scipy.fftpack import fft2\n",

|

| 84 |

+

"from tensorflow.keras.models import load_model, Model\n",

|

| 85 |

+

"\n",

|

| 86 |

+

"# Function to apply Fourier transform\n",

|

| 87 |

+

"def apply_fourier_transform(image):\n",

|

| 88 |

+

" image = np.array(image)\n",

|

| 89 |

+

" fft_image = fft2(image)\n",

|

| 90 |

+

" return np.abs(fft_image)\n",

|

| 91 |

+

"\n",

|

| 92 |

+

"# Function to preprocess image\n",

|

| 93 |

+

"def preprocess_image(image_path):\n",

|

| 94 |

+

" try:\n",

|

| 95 |

+

" image = Image.open(image_path).convert('L')\n",

|

| 96 |

+

" image = image.resize((256, 256))\n",

|

| 97 |

+

" image = apply_fourier_transform(image)\n",

|

| 98 |

+

" image = np.expand_dims(image, axis=-1) # Expand dimensions to match model input shape\n",

|

| 99 |

+

" image = np.expand_dims(image, axis=0) # Expand to add batch dimension\n",

|

| 100 |

+

" return image\n",

|

| 101 |

+

" except Exception as e:\n",

|

| 102 |

+

" print(f\"Error processing image {image_path}: {e}\")\n",

|

| 103 |

+

" return None\n",

|

| 104 |

+

"\n",

|

| 105 |

+

"# Function to load embedding model and calculate embeddings\n",

|

| 106 |

+

"def calculate_embeddings(image_path, model_path='embedding_modelv2.keras'):\n",

|

| 107 |

+

" # Load the trained model\n",

|

| 108 |

+

" model = load_model(model_path)\n",

|

| 109 |

+

"\n",

|

| 110 |

+

" # Wrap the loaded model; its output is used directly as the embedding vector\n",

|

| 111 |

+

" embedding_model = Model(inputs=model.input, outputs=model.output)\n",

|

| 112 |

+

"\n",

|

| 113 |

+

" # Preprocess the image\n",

|

| 114 |

+

" preprocessed_image = preprocess_image(image_path)\n",

|

| 115 |

+

"\n",

|

| 116 |

+

" # Calculate embeddings\n",

|

| 117 |

+

" embeddings = embedding_model.predict(preprocessed_image)\n",

|

| 118 |

+

"\n",

|

| 119 |

+

" return embeddings\n",

|

| 120 |

+

"\n",

|

| 121 |

+

"\n",

|

| 122 |

+

"def calculate_embeddings_folder(folder_path, model_path='embedding_modelv2.keras'):\n",

|

| 123 |

+

" embeddings = []\n",

|

| 124 |

+

" labels = []\n",

|

| 125 |

+

" for filename in os.listdir(folder_path):\n",

|

| 126 |

+

" if filename.endswith(\".jpg\") or filename.endswith(\".png\"):\n",

|

| 127 |

+

" image_path = os.path.join(folder_path, filename)\n",

|

| 128 |

+

" embedding = calculate_embeddings(image_path, model_path)\n",

|

| 129 |

+

" embeddings.append(embedding)\n",

|

| 130 |

+

" if \"CGI\" in folder_path:\n",

|

| 131 |

+

" labels.append(1)\n",

|

| 132 |

+

" else:\n",

|

| 133 |

+

" labels.append(0)\n",

|

| 134 |

+

" return embeddings, labels"

|

| 135 |

+

],

|

| 136 |

+

"metadata": {

|

| 137 |

+

"id": "oIsM1ilT5cQC"

|

| 138 |

+

},

|

| 139 |

+

"execution_count": null,

|

| 140 |

+

"outputs": []

|

| 141 |

+

},

|
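
`apply_fourier_transform` above takes the magnitude of a 2-D FFT of the grayscale image. To make that step concrete, here is a minimal sketch of the same unnormalized transform written as a naive double sum over a tiny list-of-lists image. It is only an illustration: `dft2_magnitude` is a hypothetical name, it runs in O(N^4) time, and the notebook itself uses `scipy.fftpack.fft2`, which is the practical choice for 256x256 inputs.

```python
import cmath


def dft2_magnitude(image):
    """Naive 2-D DFT magnitude of a small rectangular list-of-lists image.

    Uses the unnormalized forward convention exp(-2*pi*i*(u*r/R + v*c/C)).
    """
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for u in range(rows):
        for v in range(cols):
            acc = 0j
            for r in range(rows):
                for c in range(cols):
                    angle = -2 * cmath.pi * (u * r / rows + v * c / cols)
                    acc += image[r][c] * cmath.exp(1j * angle)
            # Keep only the magnitude, discarding phase, as the notebook does.
            out[u][v] = abs(acc)
    return out
```

The entry at index `[0][0]` is the sum of all pixel values (the DC term), which is typically far larger than the other coefficients. Whether the raw magnitudes should be rescaled before being fed to the model depends on how `embedding_modelv2.keras` was trained, which this diff does not show.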

| 142 |

+

{

|

| 143 |

+

"cell_type": "code",

|

| 144 |

+

"source": [

|

| 145 |

+

"embeddings = np.load('embeddings.npy')\n",

|

| 146 |

+

"labels = np.load('labels.npy')"

|

| 147 |

+

],

|

| 148 |

+

"metadata": {

|

| 149 |

+

"id": "1lzKxl_gJUEg"

|

| 150 |

+

},

|

| 151 |

+

"execution_count": null,

|

| 152 |

+

"outputs": []

|

| 153 |

+

},

|

| 154 |

+

{

|

| 155 |

+

"cell_type": "code",

|

| 156 |

+

"source": [

|

| 157 |

+

"X_train, X_test, y_train, y_test = train_test_split(embeddings, labels, test_size=0.2, random_state=42, stratify=labels)"

|

| 158 |

+

],

|

| 159 |

+

"metadata": {

|

| 160 |

+

"id": "12-KegWL3ZZh"

|

| 161 |

+

},

|

| 162 |

+

"execution_count": null,

|

| 163 |

+

"outputs": []

|

| 164 |

+

},

|

| 165 |

+

{

|

| 166 |

+

"cell_type": "code",

|

| 167 |

+

"source": [

|

| 168 |

+

"X_test.shape"

|

| 169 |

+

],

|

| 170 |

+

"metadata": {

|

| 171 |

+

"id": "8YY8_59Lmb1N"

|

| 172 |

+

},

|

| 173 |

+

"execution_count": null,

|

| 174 |

+

"outputs": []

|

| 175 |

+

},

|

| 176 |

+

{

|

| 177 |

+

"cell_type": "code",

|

| 178 |

+

"source": [

|

| 179 |

+

"xgb_clf = XGBClassifier(use_label_encoder=False, eval_metric='logloss', early_stopping_rounds=10)\n",

|

| 180 |

+

"xgb_clf.fit(X_train, y_train, eval_set=[(X_test, y_test)], verbose=False)\n",

|

| 181 |

+

"y_pred_xgb = xgb_clf.predict(X_test)\n",

|

| 182 |

+

"evaluate_classifier(y_test, y_pred_xgb, \"XGBoost Classifier\")"

|

| 183 |

+

],

|

| 184 |

+

"metadata": {

|

| 185 |

+

"id": "fSosG_aU3o67"

|

| 186 |

+

},

|

| 187 |

+

"execution_count": null,

|

| 188 |

+

"outputs": []

|

| 189 |

+

},

|
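
The XGBoost cell above passes `(X_test, y_test)` as the `eval_set` while early stopping is enabled, so the held-out test set also decides when training stops. One common alternative is to carve out a separate validation split for early stopping and keep the test split untouched. The sketch below shows such a stratified three-way split over label indices using only the standard library; `stratified_three_way_split` and its default fractions are assumptions for illustration, not part of the notebook.

```python
import random
from collections import defaultdict


def stratified_three_way_split(labels, val_frac=0.1, test_frac=0.2, seed=42):
    """Return (train_idx, val_idx, test_idx), keeping class proportions."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    train_idx, val_idx, test_idx = [], [], []
    for indices in by_class.values():
        # Shuffle within each class so every split sees the same class mix.
        rng.shuffle(indices)
        n_test = round(len(indices) * test_frac)
        n_val = round(len(indices) * val_frac)
        test_idx.extend(indices[:n_test])
        val_idx.extend(indices[n_test:n_test + n_val])
        train_idx.extend(indices[n_test + n_val:])
    return sorted(train_idx), sorted(val_idx), sorted(test_idx)
```

With index lists like these, the validation rows could be supplied as the `eval_set` and the test rows reserved for the final call to `evaluate_classifier`.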

| 190 |

+

{

|

| 191 |

+

"cell_type": "code",

|

| 192 |

+

"source": [

|

| 193 |

+

"from sklearn.neural_network import MLPClassifier as MLP\n",

|

| 194 |

+

"from sklearn.svm import SVC"

|

| 195 |

+

],

|

| 196 |

+

"metadata": {

|

| 197 |

+

"id": "YLhckFv8JYK0"

|

| 198 |

+

},

|

| 199 |

+

"execution_count": null,

|

| 200 |

+

"outputs": []

|

| 201 |

+

},

|

| 202 |

+

{

|

| 203 |

+

"cell_type": "code",

|

| 204 |

+

"source": [

|

| 205 |

+

"# Naive random classifier\n",

|

| 206 |

+

"class RandomClassifier:\n",

|

| 207 |

+

" def fit(self, X, y):\n",

|

| 208 |

+

" pass\n",

|

| 209 |

+

"\n",

|

| 210 |

+

" def predict(self, X):\n",

|

| 211 |

+

" return np.random.choice([0, 1], size=X.shape[0])\n",

|

| 212 |

+

"\n",

|

| 213 |

+

"class MeanClassifier:\n",

|

| 214 |

+

" def fit(self, X, y):\n",

|

| 215 |

+

" self.mean_0 = np.mean(X[y == 0], axis=0) if np.any(y == 0) else None\n",

|

| 216 |

+

" self.mean_1 = np.mean(X[y == 1], axis=0) if np.any(y == 1) else None\n",

|

| 217 |

+

"\n",

|

| 218 |

+

" def predict(self, X):\n",

|

| 219 |

+

" preds = []\n",

|

| 220 |

+

" for x in X:\n",

|

| 221 |

+

" dist_0 = distance.euclidean(x, self.mean_0) if self.mean_0 is not None else np.inf\n",

|

| 222 |

+

" dist_1 = distance.euclidean(x, self.mean_1) if self.mean_1 is not None else np.inf\n",

|

| 223 |

+

" preds.append(1 if dist_1 < dist_0 else 0)\n",

|

| 224 |

+

" return np.array(preds)\n",

|

| 225 |

+

"\n",

|

| 226 |

+

" def predict_proba(self, X):\n",

|

| 227 |

+

" # An implementation of probability prediction which uses a softmax function to determine the probability of each class based on the distance to the mean for each prototype\n",

|

| 228 |

+

" preds = []\n",

|

| 229 |

+

" for x in X:\n",

|

| 230 |

+

" dist_0 = distance.euclidean(x, self.mean_0) if self.mean_0 is not None else np.inf\n",

|

| 231 |

+

" dist_1 = distance.euclidean(x, self.mean_1) if self.mean_1 is not None else np.inf\n",

|

| 232 |

+

" prob_0 = np.exp(-dist_0) / (np.exp(-dist_0) + np.exp(-dist_1))\n",

|

| 233 |

+

" prob_1 = np.exp(-dist_1) / (np.exp(-dist_0) + np.exp(-dist_1))\n",

|

| 234 |

+

" preds.append([prob_0, prob_1])\n",

|

| 235 |

+

" return np.array(preds)\n",

|

| 236 |

+

"\n",

|

| 237 |

+

" def mean_distance(self, x):\n",

|

| 238 |

+

" dist_mean_0 = distance.euclidean(x, self.mean_0) if self.mean_0 is not None else np.inf\n",

|

| 239 |

+

" dist_mean_1 = distance.euclidean(x, self.mean_1) if self.mean_1 is not None else np.inf\n",

|

| 240 |

+

" return dist_mean_0, dist_mean_1\n",

|

| 241 |

+

"\n",

|

| 242 |

+

"# Initialize classifiers\n",

|

| 243 |

+

"random_clf = RandomClassifier()\n",

|

| 244 |

+

"mean_clf = MeanClassifier()\n",

|

| 245 |

+

"knn_clf = KNeighborsClassifier(n_neighbors=10)\n",

|

| 246 |

+

"rf_clf = RandomForestClassifier(max_depth=10, random_state=42)\n",

|

| 247 |

+

"mlp_clf = MLP(hidden_layer_sizes=(128,), max_iter=1000, random_state=42)\n",

|

| 248 |

+

"svc_clf = SVC()\n",

|

| 249 |

+

"\n",

|

| 250 |

+

"# Train classifiers\n",

|

| 251 |

+

"random_clf.fit(X_train, y_train)\n",

|

| 252 |

+

"mean_clf.fit(X_train, y_train)\n",

|

| 253 |

+

"knn_clf.fit(X_train, y_train)\n",

|

| 254 |

+

"#xgb_clf.fit(X_train, y_train, eval_set=[(X_test, y_test)], verbose=False)\n",

|

| 255 |

+

"rf_clf.fit(X_train, y_train)\n",

|

| 256 |

+

"mlp_clf.fit(X_train, y_train)\n",

|

| 257 |

+

"svc_clf.fit(X_train, y_train)\n",

|

| 258 |

+

"\n",

|

| 259 |

+

"# Make predictions\n",

|

| 260 |

+

"y_pred_random = random_clf.predict(X_test)\n",

|

| 261 |

+

"y_pred_mean = mean_clf.predict(X_test)\n",

|

| 262 |

+

"y_pred_knn = knn_clf.predict(X_test)\n",

|

| 263 |

+

"#y_pred_xgb = xgb_clf.predict(X_test)\n",

|

| 264 |

+

"y_pred_rf = rf_clf.predict(X_test)\n",

|

| 265 |

+

"y_pred_mlp = mlp_clf.predict(X_test)\n",

|

| 266 |

+

"y_pred_svc = svc_clf.predict(X_test)"

|

| 267 |

+

],

|

| 268 |

+

"metadata": {

|

| 269 |

+

"id": "MXsnZFDXlNrT"

|

| 270 |

+

},

|

| 271 |

+

"execution_count": null,

|

| 272 |

+

"outputs": []

|

| 273 |

+

},

|
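
`MeanClassifier.predict_proba` above turns the two prototype distances into probabilities with `exp(-dist)`. When both distances are large, both exponentials can underflow to zero and the ratio becomes undefined. A minimal sketch of a shifted, numerically stable variant follows; `distance_softmax` is a hypothetical helper, and the 0.5/0.5 fallback when both prototypes are missing is an assumption rather than behaviour taken from the notebook.

```python
import math


def distance_softmax(dist_0, dist_1):
    """Two-class softmax over negated distances, shifted for stability.

    Subtracting the smaller distance leaves the ratio unchanged but makes
    the largest exponent exactly zero, so at least one term equals 1.0.
    """
    if math.isinf(dist_0) and math.isinf(dist_1):
        # Neither class prototype exists; fall back to a uniform guess.
        return 0.5, 0.5
    shift = min(dist_0, dist_1)
    e0 = math.exp(-(dist_0 - shift))
    e1 = math.exp(-(dist_1 - shift))
    total = e0 + e1
    return e0 / total, e1 / total
```

For example, distances of 1000 and 1001 give the same probabilities as distances of 0 and 1, whereas evaluating `exp(-1000)` directly would underflow to zero.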

| 274 |

+

{

|

| 275 |

+

"cell_type": "code",

|

| 276 |

+

"source": [

|

| 277 |

+

"evaluate_classifier(y_test, y_pred_random, \"Random Classifier\")\n",

|

| 278 |

+

"evaluate_classifier(y_test, y_pred_mean, \"Mean Classifier\")\n",

|

| 279 |

+

"evaluate_classifier(y_test, y_pred_knn, \"KNN Classifier\")"

|

| 280 |

+

],

|

| 281 |

+

"metadata": {

|

| 282 |

+

"id": "sJ52bzdJmDvn"

|

| 283 |

+

},

|

| 284 |

+

"execution_count": null,

|

| 285 |

+

"outputs": []

|

| 286 |

+

},

|

| 287 |

+

{

|

| 288 |

+

"cell_type": "code",

|

| 289 |

+

"source": [

|

| 290 |

+

"evaluate_classifier(y_test, y_pred_xgb, \"XGBoost Classifier\")\n",

|

| 291 |

+

"evaluate_classifier(y_test, y_pred_rf, \"Random Forest Classifier\")\n",

|

| 292 |

+

"evaluate_classifier(y_test, y_pred_svc, \"SVC Classifier\")"

|

| 293 |

+

],

|

| 294 |

+

"metadata": {

|

| 295 |

+

"id": "DqyF_6STHW7o"

|

| 296 |

+

},

|

| 297 |

+

"execution_count": null,

|

| 298 |

+

"outputs": []

|

| 299 |

+

},

|

| 300 |

+

{

|

| 301 |

+

"cell_type": "code",

|

| 302 |

+

"source": [

|

| 303 |

+

"evaluate_classifier(y_test, y_pred_mlp, \"MLP Classifier\")"

|

| 304 |

+

],

|

| 305 |

+

"metadata": {

|

| 306 |

+

"id": "QfrAONS-DLau"

|

| 307 |

+

},

|

| 308 |

+

"execution_count": null,

|

| 309 |

+

"outputs": []

|

| 310 |

+

},

|

| 311 |

+

{

|

| 312 |

+

"cell_type": "code",

|

| 313 |

+

"source": [

|

| 314 |

+

"test_filename = \"neytiri.png\""

|

| 315 |

+

],

|

| 316 |

+

"metadata": {

|

| 317 |

+

"id": "awoV0KS8_3Bi"

|

| 318 |

+

},

|

| 319 |

+

"execution_count": null,

|

| 320 |

+

"outputs": []

|

| 321 |

+

},

|

| 322 |

+

{

|

| 323 |

+

"cell_type": "code",

|

| 324 |

+

"source": [

|

| 325 |

+

"test_embeddings = calculate_embeddings(test_filename, model_path='embedding_modelv2.keras')"

|

| 326 |

+

],

|

| 327 |

+

"metadata": {

|

| 328 |

+

"id": "ddV4s5IUAaCc"

|

| 329 |

+

},

|

| 330 |

+

"execution_count": null,

|

| 331 |

+

"outputs": []

|

| 332 |

+

},

|

| 333 |

+

{

|

| 334 |

+

"cell_type": "code",

|

| 335 |

+

"source": [

|

| 336 |

+

"def print_prob(model, image_path):\n",

|

| 337 |

+

" test_embeddings = calculate_embeddings(image_path, model_path='embedding_modelv2.keras')\n",

|

| 338 |

+

" probs = model.predict_proba(test_embeddings)\n",

|

| 339 |

+

" print(f\"Real Photo Probability: {probs[0][0]:.4f}\")\n",

|

| 340 |

+

" print(f\"CGI Probability: {probs[0][1]:.4f}\")"

|

| 341 |

+

],

|

| 342 |

+

"metadata": {

|

| 343 |

+

"id": "9yEk_X2rEH4K"

|

| 344 |

+

},

|

| 345 |

+

"execution_count": null,

|

| 346 |

+

"outputs": []

|

| 347 |

+

},

|

| 348 |

+

{

|

| 349 |

+

"cell_type": "code",

|

| 350 |

+

"source": [

|

| 351 |

+

"print_prob(mlp_clf, test_filename)"

|

| 352 |

+

],

|

| 353 |

+

"metadata": {

|

| 354 |

+

"id": "yD2JCKyJROb6"

|

| 355 |

+

},

|

| 356 |

+

"execution_count": null,

|

| 357 |

+

"outputs": []

|

| 358 |

+

},

|

| 359 |

+

{

|

| 360 |

+

"cell_type": "code",

|

| 361 |

+

"source": [

|

| 362 |

+

"print_prob(mean_clf, test_filename)"

|

| 363 |

+

],

|

| 364 |

+

"metadata": {

|

| 365 |

+

"id": "A7Nu_ABnRpT8"

|

| 366 |

+

},

|

| 367 |

+

"execution_count": null,

|

| 368 |

+

"outputs": []

|

| 369 |

+

},

|

| 370 |

+

{

|

| 371 |

+

"cell_type": "code",

|

| 372 |

+

"source": [

|

| 373 |

+

"print_prob(xgb_clf, test_filename)"

|

| 374 |

+

],

|

| 375 |

+

"metadata": {

|

| 376 |

+

"id": "AFJJuPG6Rpdz"

|

| 377 |

+

},

|

| 378 |

+

"execution_count": null,

|

| 379 |

+

"outputs": []

|

| 380 |

+

},

|

| 381 |

+

{

|

| 382 |

+

"cell_type": "code",

|

| 383 |

+

"source": [

|

| 384 |

+

"print_prob(rf_clf, test_filename)"

|

| 385 |

+

],

|

| 386 |

+

"metadata": {

|

| 387 |

+

"id": "Wil3P5JcRYNX"

|

| 388 |

+

},

|

| 389 |

+

"execution_count": null,

|

| 390 |

+

"outputs": []

|

| 391 |

+

},

|

| 392 |

+

{

|

| 393 |

+

"cell_type": "code",

|

| 394 |

+

"source": [

|

| 395 |

+

"print_prob(knn_clf, test_filename)"

|

| 396 |

+

],

|

| 397 |

+

"metadata": {

|

| 398 |

+

"id": "14O37IoKZCEW"

|

| 399 |

+

},

|

| 400 |

+

"execution_count": null,

|

| 401 |

+

"outputs": []

|

| 402 |

+

},

|

| 403 |

+

{

|

| 404 |

+

"cell_type": "code",

|

| 405 |

+

"source": [

|

| 406 |

+

"dist = np.round(mean_clf.mean_distance(test_embeddings[0]), 2)\n",

|

| 407 |

+

"print(f\"Dist to real mean {dist[0]}\")\n",

|

| 408 |

+

"print(f\"Dist to CGI mean {dist[1]}\")"

|

| 409 |

+

],

|

| 410 |

+

"metadata": {

|

| 411 |

+

"id": "gi5Vdf-bQElG"

|

| 412 |

+

},

|

| 413 |

+

"execution_count": null,

|

| 414 |

+

"outputs": []

|

| 415 |

+

},

|

| 416 |

+

{

|

| 417 |

+

"cell_type": "code",

|

| 418 |

+

"source": [

|

| 419 |

+

"def embedding_distance(image_path_1, image_path_2):\n",

|

| 420 |

+

" embedding_1 = calculate_embeddings(image_path_1)\n",

|

| 421 |

+

" embedding_2 = calculate_embeddings(image_path_2)\n",

|

| 422 |

+

" distance = np.linalg.norm(embedding_1 - embedding_2)\n",

|

| 423 |

+

" return distance"

|

| 424 |

+

],

|

| 425 |

+

"metadata": {

|

| 426 |

+

"id": "3RkM68Li8Kh0"

|

| 427 |

+

},

|

| 428 |

+

"execution_count": null,

|

| 429 |

+

"outputs": []

|

| 430 |

+

},

|

| 431 |

+

{

|

| 432 |

+

"cell_type": "markdown",

|

| 433 |

+

"source": [

|

| 434 |

+

"## Visualizing Feature Space"

|

| 435 |

+

],

|

| 436 |

+

"metadata": {

|

| 437 |

+

"id": "x5GprsHRwkEX"

|

| 438 |

+

}

|

| 439 |

+

},

|

| 440 |

+

{

|

| 441 |

+

"cell_type": "code",

|

| 442 |

+

"source": [

|

| 443 |

+

"# prompt: How can I plot embeddings on a t-SNE scatter plot and colored by the label? A label of 1 should be \"CGI\" in the legend and 0 should be \"Real Photo\"\n",

|

| 444 |

+

"\n",

|

| 445 |

+

"import matplotlib.pyplot as plt\n",

|

| 446 |

+

"# Apply t-SNE\n",

|

| 447 |

+

"tsne = TSNE(n_components=2, random_state=42)\n",

|

| 448 |

+

"embeddings_2d = tsne.fit_transform(embeddings)\n",

|

| 449 |

+

"\n",

|

| 450 |

+

"# Plot the embeddings\n",

|

| 451 |

+

"plt.figure(figsize=(10, 7))\n",

|

| 452 |

+

"sns.scatterplot(\n",

|

| 453 |

+

" x=embeddings_2d[:, 0],\n",

|

| 454 |

+

" y=embeddings_2d[:, 1],\n",

|

| 455 |

+

" hue=['CGI' if label == 1 else 'Real Photo' for label in labels], # Map labels to strings\n",

|

| 456 |

+

" palette=sns.color_palette(\"hsv\", 2),\n",

|

| 457 |

+

" legend=\"full\"\n",

|

| 458 |

+

")\n",

|

| 459 |

+

"plt.title(\"t-SNE of Image Embeddings\")\n",

|

| 460 |

+

"plt.xlabel(\"t-SNE component 1\")\n",

|

| 461 |

+

"plt.ylabel(\"t-SNE component 2\")\n",

|

| 462 |

+

"plt.show()"

|

| 463 |

+

],

|

| 464 |

+

"metadata": {

|

| 465 |

+

"id": "oDx-07WfOd-2"

|

| 466 |

+

},

|

| 467 |

+

"execution_count": null,

|

| 468 |

+

"outputs": []

|

| 469 |

+

},

|

| 470 |

+

{

|

| 471 |

+

"cell_type": "code",

|

| 472 |

+

"source": [

|

| 473 |

+

"# prompt: Can you write a function that visualizes the embeddings using t-sne with the labels but allows a parameter which is an image path and preprocesses the image and calculates the embeddings and plots this embedding as well?\n",

|

| 474 |

+

"\n",

|

| 475 |

+

"import matplotlib.pyplot as plt\n",

|

| 476 |

+

"import numpy as np\n",

|

| 477 |

+

"def visualize_embeddings_with_new_image(image_path, embeddings, labels):\n",

|

| 478 |

+

" \"\"\"\n",

|

| 479 |

+

" Visualizes embeddings using t-SNE, including a new image's embedding.\n",

|

| 480 |

+

"\n",

|

| 481 |

+

" Args:\n",

|

| 482 |

+

" image_path: Path to the new image.\n",

|

| 483 |

+

" embeddings: Existing embeddings.\n",

|

| 484 |

+

" labels: Corresponding labels for existing embeddings.\n",

|

| 485 |

+

" \"\"\"\n",

|

| 486 |

+

"\n",

|

| 487 |

+

" # Calculate embedding for the new image\n",

|

| 488 |

+

" new_embedding = calculate_embeddings(image_path, model_path='embedding_modelv2.keras')\n",

|

| 489 |

+

"\n",

|

| 490 |

+

" # Append new embedding and label to existing data\n",

|

| 491 |

+

" all_embeddings = np.concatenate((embeddings, new_embedding), axis=0)\n",

|

| 492 |

+

" all_labels = np.concatenate((labels, [2]), axis=0) # Assuming 2 is a new label for the new image\n",

|

| 493 |

+

"\n",

|

| 494 |

+

" # Apply t-SNE\n",

|

| 495 |

+

" tsne = TSNE(n_components=2, random_state=42)\n",

|

| 496 |

+

" embeddings_2d = tsne.fit_transform(all_embeddings)\n",

|

| 497 |

+

"\n",

|

| 498 |

+

" # Plot the embeddings\n",

|

| 499 |

+

" plt.figure(figsize=(10, 7))\n",

|

| 500 |

+

" sns.scatterplot(\n",

|

| 501 |

+

" x=embeddings_2d[:-1, 0], # Plot existing embeddings\n",

|

| 502 |

+

" y=embeddings_2d[:-1, 1],\n",

|

| 503 |

+

" hue=['CGI' if label == 1 else 'Real Photo' for label in all_labels[:-1]],\n",

|

| 504 |

+

" palette=sns.color_palette(\"hsv\", 2),\n",

|

| 505 |

+

" legend=\"full\"\n",

|

| 506 |

+

" )\n",

|

| 507 |

+

"\n",

|

| 508 |

+

" # Plot the new image's embedding\n",

|

| 509 |

+

" plt.scatter(\n",

|

| 510 |

+

" x=embeddings_2d[-1, 0],\n",

|

| 511 |

+

" y=embeddings_2d[-1, 1],\n",

|

| 512 |

+

" color='black',\n",

|

| 513 |

+

" marker='*',\n",

|

| 514 |

+

" s=200,\n",

|

| 515 |

+

" label='New Image'\n",

|

| 516 |

+

" )\n",

|

| 517 |

+

"\n",

|

| 518 |

+

" plt.title(\"t-SNE of Image Embeddings with New Image\")\n",

|

| 519 |

+

" plt.xlabel(\"t-SNE component 1\")\n",

|

| 520 |

+

" plt.ylabel(\"t-SNE component 2\")\n",

|

| 521 |

+

" plt.legend()\n",

|

| 522 |

+

" plt.show()\n",

|

| 523 |

+

"\n",

|

| 524 |

+

"# Example usage:\n",

|

| 525 |

+

"# visualize_embeddings_with_new_image(\"path/to/your/new/image.jpg\", embeddings, labels)\n"

|

| 526 |

+

],

|

| 527 |

+

"metadata": {

|

| 528 |

+

"id": "BKyYu-8won0l"

|

| 529 |

+

},

|

| 530 |

+

"execution_count": null,

|

| 531 |

+

"outputs": []

|

| 532 |

+

},

|

| 533 |

+

{

|

| 534 |

+

"cell_type": "code",

|

| 535 |

+

"source": [

|

| 536 |

+

"visualize_embeddings_with_new_image(\"neytiri.png\", embeddings, labels)"

|

| 537 |

+

],

|

| 538 |

+

"metadata": {

|

| 539 |

+

"id": "v6jrK3Auo-eM"

|

| 540 |

+

},

|

| 541 |

+

"execution_count": null,

|

| 542 |

+

"outputs": []

|

| 543 |

+

},

|

| 544 |

+

{

|

| 545 |

+

"cell_type": "markdown",

|

| 546 |

+

"source": [

|

| 547 |

+

"### Validation Testing"

|

| 548 |

+

],

|

| 549 |

+

"metadata": {

|

| 550 |

+

"id": "JokVT8QNCOCm"

|

| 551 |

+

}

|

| 552 |

+

},

|

| 553 |

+

{

|

| 554 |

+

"cell_type": "code",

|

| 555 |

+

"source": [

|

| 556 |

+

"!unzip Validation.zip"

|

| 557 |

+

],

|

| 558 |

+

"metadata": {

|

| 559 |

+

"id": "QzkDffzBDGce"

|

| 560 |

+

},

|

| 561 |

+

"execution_count": null,

|

| 562 |

+

"outputs": []

|

| 563 |

+

},

|

| 564 |

+

{

|

| 565 |

+

"cell_type": "code",

|

| 566 |

+

"source": [

|

| 567 |

+

"cgi_val_images, cgi_val_labels = calculate_embeddings_folder('Validation/CGI')\n",

|

| 568 |

+

"photo_val_images, photo_val_labels = calculate_embeddings_folder('Validation/Photo')\n",

|

| 569 |

+

"\n",

|

| 570 |

+

"print(f\"CGI shape {np.array(cgi_val_images).shape}\")\n",

|

| 571 |

+

"print(f\"Photo shape {np.array(photo_val_images).shape}\")"

|

| 572 |

+

],

|

| 573 |

+

"metadata": {

|

| 574 |

+

"id": "UkuPOZXKCNd5"

|

| 575 |

+

},

|

| 576 |

+

"execution_count": null,

|

| 577 |

+

"outputs": []

|

| 578 |

+

},

|

| 579 |

+

{

|

| 580 |

+

"cell_type": "code",

|

| 581 |

+

"source": [

|

| 582 |

+

"# prompt: Can you test the validation images and labels against the XGB, Mean, and KNN classifiers?\n",

|

| 583 |

+

"\n",

|

| 584 |

+

"import numpy as np\n",

|

| 585 |

+

"# Combine validation data\n",

|

| 586 |

+

"X_val = np.concatenate((cgi_val_images, photo_val_images), axis=0)\n",

|

| 587 |

+

"y_val = np.concatenate((cgi_val_labels, photo_val_labels), axis=0)\n",

|

| 588 |

+

"\n",

|

| 589 |

+

"# Reshape validation data to match model input\n",

|

| 590 |

+

"X_val = X_val.reshape(X_val.shape[0], -1)\n",

|

| 591 |

+

"\n",

|

| 592 |

+

"# Predict using classifiers\n",

|

| 593 |

+

"y_pred_xgb_val = xgb_clf.predict(X_val)\n",

|

| 594 |

+

"y_pred_mean_val = mean_clf.predict(X_val)\n",

|

| 595 |

+

"y_pred_knn_val = knn_clf.predict(X_val)\n",

|

| 596 |

+

"y_pred_svc_val = svc_clf.predict(X_val)\n",

|

| 597 |

+

"y_pred_rf_val = rf_clf.predict(X_val)\n",

|

| 598 |

+

"y_pred_mlp_val = mlp_clf.predict(X_val)\n",

|

| 599 |

+

"\n",

|

| 600 |

+

"# Evaluate classifiers on validation set\n",

|

| 601 |

+

"evaluate_classifier(y_val, y_pred_xgb_val, \"XGBoost Classifier (Validation)\")\n",

|

| 602 |

+

"evaluate_classifier(y_val, y_pred_mean_val, \"Mean Classifier (Validation)\")\n",

|

| 603 |

+

"evaluate_classifier(y_val, y_pred_knn_val, \"KNN Classifier (Validation)\")\n",

|

| 604 |

+

"evaluate_classifier(y_val, y_pred_svc_val, \"SVC Classifier (Validation)\")\n",

|

| 605 |

+

"evaluate_classifier(y_val, y_pred_rf_val, \"Random Forest Classifier (Validation)\")\n"

|

| 606 |

+

],

|

| 607 |

+

"metadata": {

|

| 608 |

+

"id": "pUE8siFEDF0h"

|

| 609 |

+

},

|

| 610 |

+

"execution_count": null,

|

| 611 |

+

"outputs": []

|

| 612 |

+

},

|

| 613 |

+

{

|

| 614 |

+

"cell_type": "markdown",

|

| 615 |

+

"source": [

|

| 616 |

+

"### Old Preprocessing"

|

| 617 |

+

],

|

| 618 |

+

"metadata": {

|

| 619 |

+

"id": "KFvqq8di5QnS"

|

| 620 |

+

}

|

| 621 |

+

},

|

| 622 |

+

{

|

| 623 |

+

"cell_type": "code",

|

| 624 |

+

"source": [

|

| 625 |

+

"# Function to load and preprocess images\n",

|

| 626 |

+

"def load_images(folder, label):\n",

|

| 627 |

+

" images = []\n",

|

| 628 |

+

" labels = []\n",

|

| 629 |

+

" for filename in os.listdir(folder):\n",

|

| 630 |

+

" if filename.lower().endswith((\".jpg\", \".jpeg\", \".png\")):\n",

|

| 631 |

+

" img = cv2.imread(os.path.join(folder, filename), cv2.IMREAD_GRAYSCALE)\n",

|

| 632 |

+

" if img is not None:\n",

|

| 633 |

+

" img = cv2.resize(img, (256, 256))\n",

|

| 634 |

+

" images.append(img)\n",

|

| 635 |

+

" labels.append(label)\n",

|

| 636 |

+

" return images, labels\n",

|

| 637 |

+

"\n",

|

| 638 |

+

"pca = PCA(n_components=128)\n",

|

| 639 |

+

"# Function to perform Fourier transform and extract features\n",

|

| 640 |

+

"def extract_features(images):\n",

|

| 641 |

+

" features = []\n",

|

| 642 |

+

" for img in images:\n",

|

| 643 |

+

" f_transform = np.fft.fft2(img)\n",

|

| 644 |

+

" f_shift = np.fft.fftshift(f_transform)\n",

|

| 645 |

+

" magnitude_spectrum = 20 * np.log(np.abs(f_shift) + 1e-8) # small epsilon avoids log(0)\n",

|

| 646 |

+

" features.append(magnitude_spectrum.flatten())\n",

|

| 647 |

+

" features = pca.fit_transform(features)\n",

|

| 648 |

+

" return np.array(features)\n",

|

| 649 |

+

"\n",

|

| 650 |

+

"# Load and preprocess images from both folders\n",

|

| 651 |

+

"cgi_images, cgi_labels = load_images('CGI', 1) # 1 for CGI\n",

|

| 652 |

+

"photo_images, photo_labels = load_images('Photo', 0) # 0 for Real Photo\n",

|

| 653 |

+

"\n",

|

| 654 |

+

"min_length = min(len(cgi_images), len(photo_images))\n",

|

| 655 |

+

"cgi_images = cgi_images[:min_length]\n",

|

| 656 |

+

"cgi_labels = cgi_labels[:min_length]\n",

|

| 657 |

+

"photo_images = photo_images[:min_length]\n",

|

| 658 |

+

"photo_labels = photo_labels[:min_length]\n",

|

| 659 |

+

"\n",

|

| 660 |

+

"# Combine datasets\n",

|

| 661 |

+

"images = cgi_images + photo_images\n",

|

| 662 |

+

"labels = cgi_labels + photo_labels\n",

|

| 663 |

+

"\n",

|

| 664 |

+

"print(f\"Number of CGI images: {len(cgi_images)}\")\n",

|

| 665 |

+

"print(f\"Number of Photo images: {len(photo_images)}\")\n",

|

| 666 |

+

"\n",

|

| 667 |

+

"# Extract features\n",

|

| 668 |

+

"features = extract_features(images)\n",

|

| 669 |

+

"\n",

|

| 670 |

+

"# Encode labels\n",

|

| 671 |

+

"labels = np.array(labels)\n",

|

| 672 |

+

"\n",

|

| 673 |

+

"# Split data into training and testing sets\n",

|

| 674 |

+

"X_train, X_test, y_train, y_test = train_test_split(features, labels, test_size=0.2, random_state=42, stratify=labels)"

|

| 675 |

+

],

|

| 676 |

+

"metadata": {

|

| 677 |

+

"id": "5-M_iFWC5SOk"

|

| 678 |

+

},

|

| 679 |

+

"execution_count": null,

|

| 680 |

+

"outputs": []

|

| 681 |

+

},

|

| 682 |

+

{

|

| 683 |

+

"cell_type": "code",

|

| 684 |

+

"source": [

|

| 685 |

+

"X_train.shape"

|

| 686 |

+

],

|

| 687 |

+

"metadata": {

|

| 688 |

+

"id": "yAqmOxpp-iin"

|

| 689 |

+

},

|

| 690 |

+

"execution_count": null,

|

| 691 |

+

"outputs": []

|

| 692 |

+

},

|

| 693 |

+

{

|

| 694 |

+

"cell_type": "code",

|

| 695 |

+

"source": [

|

| 696 |

+

"embeddings.shape"

|

| 697 |

+

],

|

| 698 |

+

"metadata": {

|

| 699 |

+

"id": "Dm1lretJBbKs"

|

| 700 |

+

},

|

| 701 |

+

"execution_count": null,

|

| 702 |

+

"outputs": []

|

| 703 |

+

},

|

| 704 |

+

{

|

| 705 |

+

"cell_type": "code",

|

| 706 |

+

"source": [

|

| 707 |

+

"X_test.shape"

|

| 708 |

+

],

|

| 709 |

+

"metadata": {

|

| 710 |

+

"id": "TlumN_GMBg_F"

|

| 711 |

+

},

|

| 712 |

+

"execution_count": null,

|

| 713 |

+

"outputs": []

|

| 714 |

+

},

|

| 715 |

+

{

|

| 716 |

+

"cell_type": "code",

|

| 717 |

+

"source": [],

|

| 718 |

+

"metadata": {

|

| 719 |

+

"id": "8Fq0dUzHtHeQ"

|

| 720 |

+

},

|

| 721 |

+

"execution_count": null,

|

| 722 |

+

"outputs": []

|

| 723 |

+

}

|

| 724 |

+

]

|

| 725 |

+

}

|

.jupyter/desktop-workspaces/default-37a8.jupyterlab-workspace

ADDED

|

@@ -0,0 +1 @@

|

| 1 |

+

{"data":{"layout-restorer:data":{"main":{"dock":{"type":"tab-area","currentIndex":1,"widgets":["notebook:CGI_Classification_by_Fourier_Embeddings.ipynb"]},"current":"notebook:CGI_Classification_by_Fourier_Embeddings.ipynb"},"down":{"size":0,"widgets":[]},"left":{"collapsed":false,"current":"filebrowser","widgets":["filebrowser","running-sessions","@jupyterlab/toc:plugin","extensionmanager.main-view"]},"right":{"collapsed":true,"widgets":["jp-property-inspector","debugger-sidebar"]},"relativeSizes":[0.26227795193312436,0.7377220480668757,0]},"notebook:CGI_Classification_by_Fourier_Embeddings.ipynb":{"data":{"path":"CGI_Classification_by_Fourier_Embeddings.ipynb","factory":"Notebook"}}},"metadata":{"id":"default"}}

|

Archive.zip

ADDED

|

@@ -0,0 +1,3 @@

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:5512bf6c7c0eca199e61a46b5727a5b65478b09262ad3e46d854df67f57e1e42

|

| 3 |

+

size 9556331

|

CGI/.DS_Store

ADDED

|

Binary file (47.1 kB).

|

CGI/a082.jpg

ADDED

|

CGI/abraao-segundo-conan-face-3k.jpg

ADDED

|

CGI/adam-fisher-afisher-ahsoka-01.jpg

ADDED

|

CGI/adam-fisher-afisher-asajjventres-final01.jpg

ADDED

|

CGI/adam-fisher-afisher-mib-zb-02.jpg

ADDED

|

CGI/adam-fisher-afisher-priestess01.jpg

ADDED

|

CGI/adam-o-donnell-portrait-mainlight.jpg

ADDED

|

CGI/afhnts-s-show07.jpg

ADDED

|

CGI/afhnts-s-show08.jpg

ADDED

|

CGI/alessandro-mastronardi-dwarfiewhite-topaz.jpg

ADDED

|

CGI/alessandro-mastronardi-popup-01.jpg

ADDED

|

CGI/alex-coman-slug-beach-combined.jpg

ADDED

|

CGI/alex-lucas-sun-worm-002.jpg

ADDED

|

CGI/alex-lucas-sun-worm-008.jpg

ADDED

|

CGI/alex-pi-final-01.jpg

ADDED

|

CGI/alex-pi-hangar-robots-alex-pi.jpg

ADDED

|

CGI/alex-pi-ruins-ancient-civilization-final-01.jpg

ADDED

|

CGI/alex-pi-temple-on-the-planet-582-73-final.jpg

ADDED

|

CGI/alex-savelev-samurai-alex-saveliev-front.jpg

ADDED

|

CGI/alexandre-corbini-goth-princess-03.jpg

ADDED

|

CGI/andor-kollar-andorkollar-malehead1.jpg

ADDED

|

CGI/andrea-bertaccini-01-lookdev-006.jpg

ADDED

|

CGI/andrea-bertaccini-lorane-21-post.jpg

ADDED

|

CGI/andrew-ariza-main-5.jpg

ADDED

|

CGI/andrew-averkin-train-01.jpg

ADDED

|

CGI/anthony-catillaz-artico-luminos-design-a-black-spider-man-looking-over-the-rainy-fd96ccx-e05f-460e-abcc-4a1462881264.jpg

ADDED

|

CGI/antoine-collignon-1.jpg

ADDED

|

CGI/antoine-collignon-final-piece.jpg

ADDED

|

CGI/antoine-di-lorenzo-imperfectmechacell01.jpg

ADDED

|

CGI/antoine-verney-carron-elephantasian03f01.jpg

ADDED

|

CGI/aobo-li-light04.jpg

ADDED

|

CGI/april-ed6705a8f03679c5e8012dc7d2cd02e4.jpg

ADDED

|

CGI/arthur-yuan-rl-bachi-statue.jpg

ADDED

|

CGI/arthur-yuan-rl-concept-environment-ukigumo-mountain-town.jpg

ADDED

|

CGI/artur-tarnowski-1-girl-beauty-1920compr.jpg

ADDED

|

CGI/artur-tarnowski-girl-prev-131-post-jpg.jpg

ADDED

|

CGI/baj-singh-dande-rend01.jpg

ADDED

|

CGI/baolong-zhang-goblin-7.jpg

ADDED

|

CGI/baolong-zhang-render37b-small2.jpg

ADDED

|

CGI/baolong-zhang-sirus-closeup02.jpg

ADDED

|

CGI/baolong-zhang-w-113.jpg

ADDED

|

CGI/ben-erdt-gren-rnd-l.jpg

ADDED

|

CGI/bora-kim-1.jpg

ADDED

|