Spaces:

Configuration error

Configuration error

Upload 5 files

Browse files- README.md +33 -10

- app.py +142 -0

- chainlit.md +24 -0

- rendering.png +0 -0

- tools.py +108 -0

README.md

CHANGED

|

@@ -1,10 +1,33 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Title: Image Generation with Chainlit

|

| 2 |

+

Tags: [image, stability, langchain]

|

| 3 |

+

|

| 4 |

+

# Image Generation with Chainlit

|

| 5 |

+

|

| 6 |

+

This folder is showing how to use Stability AI to generate images and send them to the Chainlit UI.

|

| 7 |

+

|

| 8 |

+

You will learn on how to use the [Image element](https://docs.chainlit.io/api-reference/elements/image) and integrate it with LangChain.

|

| 9 |

+

|

| 10 |

+

## Description

|

| 11 |

+

|

| 12 |

+

The provided code integrates Stability AI's image generation capabilities with Chainlit, a framework for building interactive web apps with Python. It includes functions to generate new images from text prompts and to edit existing images using prompts. The image generation is powered by the Stability AI's API, and the images are displayed using Chainlit's Image element.

|

| 13 |

+

|

| 14 |

+

## Quickstart

|

| 15 |

+

|

| 16 |

+

1. Ensure you have Chainlit installed and set up.

|

| 17 |

+

2. Place your Stability AI API key in the environment variable `STABILITY_KEY`.

|

| 18 |

+

3. Run `app.py` to start the Chainlit app.

|

| 19 |

+

4. Use the `generate_image` function to create a new image from a text prompt.

|

| 20 |

+

5. Use the `edit_image` function to edit an existing image with a new prompt.

|

| 21 |

+

|

| 22 |

+

## Functions

|

| 23 |

+

|

| 24 |

+

- `generate_image(prompt: str)`: Generates an image from a text prompt and returns the image name.

|

| 25 |

+

- `edit_image(init_image_name: str, prompt: str)`: Edits an existing image based on the provided prompt and returns the new image name.

|

| 26 |

+

|

| 27 |

+

## Tools

|

| 28 |

+

|

| 29 |

+

- `generate_image_tool`: A Chainlit tool for generating images from text prompts.

|

| 30 |

+

- `edit_image_tool`: A Chainlit tool for editing images with text prompts.

|

| 31 |

+

|

| 32 |

+

To see the tools in action and interact with the generated images, follow the instructions in the [main readme](/README.md).

|

| 33 |

+

|

app.py

ADDED

|

@@ -0,0 +1,142 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from langchain.agents import AgentExecutor, AgentType, initialize_agent

|

| 2 |

+

from langchain.agents.structured_chat.prompt import SUFFIX

|

| 3 |

+

from langchain.chat_models import ChatOpenAI

|

| 4 |

+

from langchain.memory import ConversationBufferMemory

|

| 5 |

+

from tools import edit_image_tool, generate_image_tool

|

| 6 |

+

|

| 7 |

+

import chainlit as cl

|

| 8 |

+

from chainlit.action import Action

|

| 9 |

+

from chainlit.input_widget import Select, Switch, Slider

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

@cl.action_callback("Create variation")

|

| 13 |

+

async def create_variant(action: Action):

|

| 14 |

+

agent_input = f"Create a variation of {action.value}"

|

| 15 |

+

await cl.Message(content=f"Creating a variation of `{action.value}`.").send()

|

| 16 |

+

await main(cl.Message(content=agent_input))

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

@cl.author_rename

|

| 20 |

+

def rename(orig_author):

|

| 21 |

+

mapping = {

|

| 22 |

+

"LLMChain": "Assistant",

|

| 23 |

+

}

|

| 24 |

+

return mapping.get(orig_author, orig_author)

|

| 25 |

+

|

| 26 |

+

|

| 27 |

+

@cl.cache

|

| 28 |

+

def get_memory():

|

| 29 |

+

return ConversationBufferMemory(memory_key="chat_history")

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

@cl.on_chat_start

|

| 33 |

+

async def start():

|

| 34 |

+

settings = await cl.ChatSettings(

|

| 35 |

+

[

|

| 36 |

+

Select(

|

| 37 |

+

id="Model",

|

| 38 |

+

label="OpenAI - Model",

|

| 39 |

+

values=["gpt-3.5-turbo", "gpt-3.5-turbo-16k", "gpt-4", "gpt-4-32k"],

|

| 40 |

+

initial_index=1,

|

| 41 |

+

),

|

| 42 |

+

Switch(id="Streaming", label="OpenAI - Stream Tokens", initial=True),

|

| 43 |

+

Slider(

|

| 44 |

+

id="Temperature",

|

| 45 |

+

label="OpenAI - Temperature",

|

| 46 |

+

initial=0,

|

| 47 |

+

min=0,

|

| 48 |

+

max=2,

|

| 49 |

+

step=0.1,

|

| 50 |

+

),

|

| 51 |

+

Slider(

|

| 52 |

+

id="SAI_Steps",

|

| 53 |

+

label="Stability AI - Steps",

|

| 54 |

+

initial=30,

|

| 55 |

+

min=10,

|

| 56 |

+

max=150,

|

| 57 |

+

step=1,

|

| 58 |

+

description="Amount of inference steps performed on image generation.",

|

| 59 |

+

),

|

| 60 |

+

Slider(

|

| 61 |

+

id="SAI_Cfg_Scale",

|

| 62 |

+

label="Stability AI - Cfg_Scale",

|

| 63 |

+

initial=7,

|

| 64 |

+

min=1,

|

| 65 |

+

max=35,

|

| 66 |

+

step=0.1,

|

| 67 |

+

description="Influences how strongly your generation is guided to match your prompt.",

|

| 68 |

+

),

|

| 69 |

+

Slider(

|

| 70 |

+

id="SAI_Width",

|

| 71 |

+

label="Stability AI - Image Width",

|

| 72 |

+

initial=512,

|

| 73 |

+

min=256,

|

| 74 |

+

max=2048,

|

| 75 |

+

step=64,

|

| 76 |

+

tooltip="Measured in pixels",

|

| 77 |

+

),

|

| 78 |

+

Slider(

|

| 79 |

+

id="SAI_Height",

|

| 80 |

+

label="Stability AI - Image Height",

|

| 81 |

+

initial=512,

|

| 82 |

+

min=256,

|

| 83 |

+

max=2048,

|

| 84 |

+

step=64,

|

| 85 |

+

tooltip="Measured in pixels",

|

| 86 |

+

),

|

| 87 |

+

]

|

| 88 |

+

).send()

|

| 89 |

+

await setup_agent(settings)

|

| 90 |

+

|

| 91 |

+

|

| 92 |

+

@cl.on_settings_update

|

| 93 |

+

async def setup_agent(settings):

|

| 94 |

+

print("Setup agent with following settings: ", settings)

|

| 95 |

+

|

| 96 |

+

llm = ChatOpenAI(

|

| 97 |

+

temperature=settings["Temperature"],

|

| 98 |

+

streaming=settings["Streaming"],

|

| 99 |

+

model=settings["Model"],

|

| 100 |

+

)

|

| 101 |

+

memory = get_memory()

|

| 102 |

+

_SUFFIX = "Chat history:\n{chat_history}\n\n" + SUFFIX

|

| 103 |

+

|

| 104 |

+

agent = initialize_agent(

|

| 105 |

+

llm=llm,

|

| 106 |

+

tools=[generate_image_tool, edit_image_tool],

|

| 107 |

+

agent=AgentType.STRUCTURED_CHAT_ZERO_SHOT_REACT_DESCRIPTION,

|

| 108 |

+

memory=memory,

|

| 109 |

+

agent_kwargs={

|

| 110 |

+

"suffix": _SUFFIX,

|

| 111 |

+

"input_variables": ["input", "agent_scratchpad", "chat_history"],

|

| 112 |

+

},

|

| 113 |

+

)

|

| 114 |

+

cl.user_session.set("agent", agent)

|

| 115 |

+

|

| 116 |

+

|

| 117 |

+

@cl.on_message

|

| 118 |

+

async def main(message: cl.Message):

|

| 119 |

+

agent = cl.user_session.get("agent") # type: AgentExecutor

|

| 120 |

+

cl.user_session.set("generated_image", None)

|

| 121 |

+

|

| 122 |

+

# No async implementation in the Stability AI client, fallback to sync

|

| 123 |

+

res = await cl.make_async(agent.run)(

|

| 124 |

+

input=message.content, callbacks=[cl.LangchainCallbackHandler()]

|

| 125 |

+

)

|

| 126 |

+

|

| 127 |

+

elements = []

|

| 128 |

+

actions = []

|

| 129 |

+

|

| 130 |

+

generated_image_name = cl.user_session.get("generated_image")

|

| 131 |

+

generated_image = cl.user_session.get(generated_image_name)

|

| 132 |

+

if generated_image:

|

| 133 |

+

elements = [

|

| 134 |

+

cl.Image(

|

| 135 |

+

content=generated_image,

|

| 136 |

+

name=generated_image_name,

|

| 137 |

+

display="inline",

|

| 138 |

+

)

|

| 139 |

+

]

|

| 140 |

+

actions = [cl.Action(name="Create variation", value=generated_image_name)]

|

| 141 |

+

|

| 142 |

+

await cl.Message(content=res, elements=elements, actions=actions).send()

|

chainlit.md

ADDED

|

@@ -0,0 +1,24 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# 🎨 Chainlit Image Gen demo

|

| 2 |

+

|

| 3 |

+

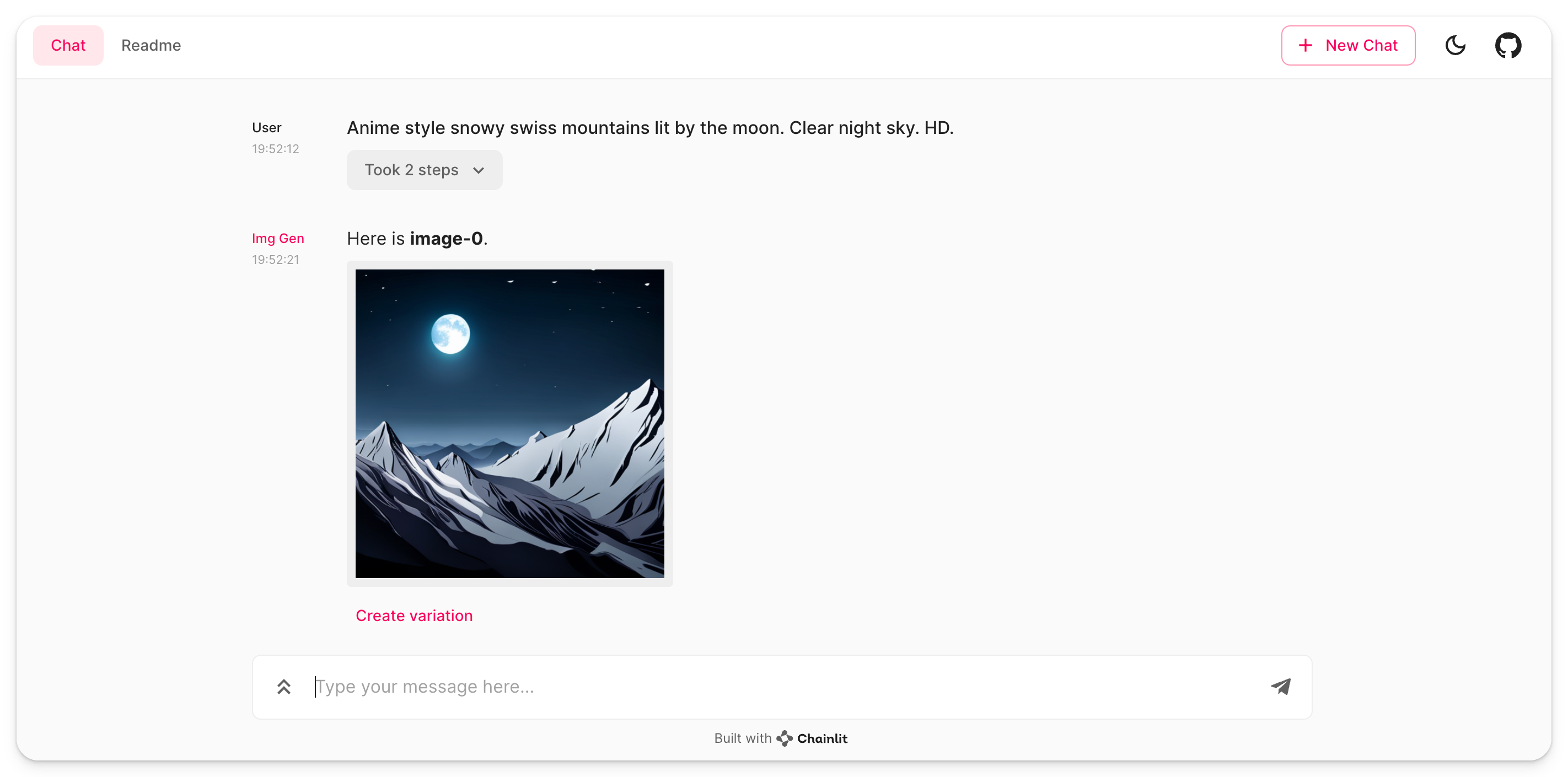

Welcome to our creative image generation demo built with [Chainlit](https://chainlit.io), [LangChain](https://python.langchain.com/en/latest/index.html), and [Stability AI](https://stability.ai/)! 🌟 This app allows you to create and edit unique images simply by chatting with it. Talk about having an artistic conversation! 🎨🗨️

|

| 4 |

+

|

| 5 |

+

This demo has also been adapted to use the new [ChatSettings](https://docs.chainlit.io/concepts/chat-settings) feature introduced in chainlit `0.6.2`. You are now able to tweak Stability AI settings to your liking!

|

| 6 |

+

|

| 7 |

+

## 🎯 Example

|

| 8 |

+

|

| 9 |

+

Try asking:

|

| 10 |

+

```

|

| 11 |

+

Anime style snowy swiss mountains lit by the moon. Clear night sky. HD.

|

| 12 |

+

```

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

You can then ask for modifications:

|

| 17 |

+

```

|

| 18 |

+

change the clear night sky with a starry sky

|

| 19 |

+

```

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

## ⚠️ Disclaimer

|

| 24 |

+

Please note that the primary goal of this demo is to showcase the ease and convenience of building LLM apps using Chainlit and other tools rather than presenting a state-of-the-art image generation application.

|

rendering.png

ADDED

|

tools.py

ADDED

|

@@ -0,0 +1,108 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import io

|

| 2 |

+

import os

|

| 3 |

+

|

| 4 |

+

import stability_sdk.interfaces.gooseai.generation.generation_pb2 as generation

|

| 5 |

+

from langchain.tools import StructuredTool, Tool

|

| 6 |

+

from PIL import Image

|

| 7 |

+

from stability_sdk import client

|

| 8 |

+

|

| 9 |

+

import chainlit as cl

|

| 10 |

+

|

| 11 |

+

os.environ["STABILITY_HOST"] = "grpc.stability.ai:443"

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

def get_image_name():

|

| 15 |

+

image_count = cl.user_session.get("image_count")

|

| 16 |

+

if image_count is None:

|

| 17 |

+

image_count = 0

|

| 18 |

+

else:

|

| 19 |

+

image_count += 1

|

| 20 |

+

|

| 21 |

+

cl.user_session.set("image_count", image_count)

|

| 22 |

+

|

| 23 |

+

return f"image-{image_count}"

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

def _generate_image(prompt: str, init_image=None):

|

| 27 |

+

# Set up our connection to the API.

|

| 28 |

+

stability_api = client.StabilityInference(

|

| 29 |

+

key=os.environ["STABILITY_KEY"], # API Key reference.

|

| 30 |

+

verbose=True, # Print debug messages.

|

| 31 |

+

engine="stable-diffusion-xl-beta-v2-2-2", # Set the engine to use for generation.

|

| 32 |

+

# Available engines: stable-diffusion-v1 stable-diffusion-v1-5 stable-diffusion-512-v2-0 stable-diffusion-768-v2-0

|

| 33 |

+

# stable-diffusion-512-v2-1 stable-diffusion-768-v2-1 stable-diffusion-xl-beta-v2-2-2 stable-inpainting-v1-0 stable-inpainting-512-v2-0

|

| 34 |

+

)

|

| 35 |

+

|

| 36 |

+

start_schedule = 0.8 if init_image else 1

|

| 37 |

+

|

| 38 |

+

cl_chat_settings = cl.user_session.get("chat_settings")

|

| 39 |

+

|

| 40 |

+

# Set up our initial generation parameters.

|

| 41 |

+

answers = stability_api.generate(

|

| 42 |

+

prompt=prompt,

|

| 43 |

+

init_image=init_image,

|

| 44 |

+

start_schedule=start_schedule,

|

| 45 |

+

seed=992446758, # If a seed is provided, the resulting generated image will be deterministic.

|

| 46 |

+

# What this means is that as long as all generation parameters remain the same, you can always recall the same image simply by generating it again.

|

| 47 |

+

# Note: This isn't quite the case for CLIP Guided generations, which we tackle in the CLIP Guidance documentation.

|

| 48 |

+

steps=int(cl_chat_settings["SAI_Steps"]), # Amount of inference steps performed on image generation. Defaults to 30.

|

| 49 |

+

cfg_scale=cl_chat_settings["SAI_Cfg_Scale"], # Influences how strongly your generation is guided to match your prompt.

|

| 50 |

+

# Setting this value higher increases the strength in which it tries to match your prompt.

|

| 51 |

+

# Defaults to 7.0 if not specified.

|

| 52 |

+

width=int(cl_chat_settings["SAI_Width"]), # Generation width, defaults to 512 if not included.

|

| 53 |

+

height=int(cl_chat_settings["SAI_Height"]), # Generation height, defaults to 512 if not included.

|

| 54 |

+

samples=1, # Number of images to generate, defaults to 1 if not included.

|

| 55 |

+

sampler=generation.SAMPLER_K_EULER # Choose which sampler we want to denoise our generation with.

|

| 56 |

+

# Defaults to k_dpmpp_2m if not specified. Clip Guidance only supports ancestral samplers.

|

| 57 |

+

# (Available Samplers: ddim, plms, k_euler, k_euler_ancestral, k_heun, k_dpm_2, k_dpm_2_ancestral, k_dpmpp_2s_ancestral, k_lms, k_dpmpp_2m, k_dpmpp_sde)

|

| 58 |

+

)

|

| 59 |

+

|

| 60 |

+

# Set up our warning to print to the console if the adult content classifier is tripped.

|

| 61 |

+

# If adult content classifier is not tripped, save generated images.

|

| 62 |

+

for resp in answers:

|

| 63 |

+

for artifact in resp.artifacts:

|

| 64 |

+

if artifact.finish_reason == generation.FILTER:

|

| 65 |

+

raise ValueError(

|

| 66 |

+

"Your request activated the API's safety filters and could not be processed."

|

| 67 |

+

"Please modify the prompt and try again."

|

| 68 |

+

)

|

| 69 |

+

if artifact.type == generation.ARTIFACT_IMAGE:

|

| 70 |

+

name = get_image_name()

|

| 71 |

+

cl.user_session.set(name, artifact.binary)

|

| 72 |

+

cl.user_session.set("generated_image", name)

|

| 73 |

+

return name

|

| 74 |

+

else:

|

| 75 |

+

raise ValueError(

|

| 76 |

+

f"Your request did not generate an image. Please modify the prompt and try again. Finish reason: {artifact.finish_reason}"

|

| 77 |

+

)

|

| 78 |

+

|

| 79 |

+

|

| 80 |

+

def generate_image(prompt: str):

|

| 81 |

+

image_name = _generate_image(prompt)

|

| 82 |

+

return f"Here is {image_name}."

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

def edit_image(init_image_name: str, prompt: str):

|

| 86 |

+

init_image_bytes = cl.user_session.get(init_image_name)

|

| 87 |

+

if init_image_bytes is None:

|

| 88 |

+

raise ValueError(f"Could not find image `{init_image_name}`.")

|

| 89 |

+

|

| 90 |

+

init_image = Image.open(io.BytesIO(init_image_bytes))

|

| 91 |

+

image_name = _generate_image(prompt, init_image)

|

| 92 |

+

|

| 93 |

+

return f"Here is {image_name} based on {init_image_name}."

|

| 94 |

+

|

| 95 |

+

|

| 96 |

+

generate_image_tool = Tool.from_function(

|

| 97 |

+

func=generate_image,

|

| 98 |

+

name="GenerateImage",

|

| 99 |

+

description="Useful to create an image from a text prompt.",

|

| 100 |

+

return_direct=True,

|

| 101 |

+

)

|

| 102 |

+

|

| 103 |

+

edit_image_tool = StructuredTool.from_function(

|

| 104 |

+

func=edit_image,

|

| 105 |

+

name="EditImage",

|

| 106 |

+

description="Useful to edit an image with a prompt. Works well with commands such as 'replace', 'add', 'change', 'remove'.",

|

| 107 |

+

return_direct=True,

|

| 108 |

+

)

|