Commit 0a88b62 · 1 Parent(s): 23ce364

Upload 93 files

This view is limited to 50 files because it contains too many changes.

- .gitattributes +37 -35

- README.md +4 -7

- app.py +284 -0

- assets/logo.png +0 -0

- assets/overview_3.png +0 -0

- assets/radar.png +0 -0

- assets/runtime.png +0 -0

- assets/teaser.png +3 -0

- demos/example_000.png +0 -0

- demos/example_001.png +0 -0

- demos/example_002.png +0 -0

- demos/example_003.png +3 -0

- demos/example_list.txt +2 -0

- infer/__init__.py +28 -0

- infer/gif_render.py +55 -0

- infer/image_to_views.py +81 -0

- infer/rembg.py +26 -0

- infer/text_to_image.py +80 -0

- infer/utils.py +77 -0

- infer/views_to_mesh.py +94 -0

- mvd/__init__.py +0 -0

- mvd/hunyuan3d_mvd_lite_pipeline.py +493 -0

- mvd/hunyuan3d_mvd_std_pipeline.py +471 -0

- mvd/utils.py +85 -0

- requirements.txt +22 -0

- scripts/image_to_3d.sh +8 -0

- scripts/image_to_3d_demo.sh +8 -0

- scripts/image_to_3d_fast.sh +6 -0

- scripts/image_to_3d_fast_demo.sh +6 -0

- scripts/text_to_3d.sh +7 -0

- scripts/text_to_3d_demo.sh +7 -0

- scripts/text_to_3d_fast.sh +6 -0

- scripts/text_to_3d_fast_demo.sh +6 -0

- svrm/.DS_Store +0 -0

- svrm/configs/2024-10-24T22-36-18-project.yaml +32 -0

- svrm/configs/svrm.yaml +32 -0

- svrm/ldm/.DS_Store +0 -0

- svrm/ldm/models/svrm.py +263 -0

- svrm/ldm/modules/attention.py +457 -0

- svrm/ldm/modules/encoders/__init__.py +0 -0

- svrm/ldm/modules/encoders/dinov2/__init__.py +0 -0

- svrm/ldm/modules/encoders/dinov2/hub/__init__.py +0 -0

- svrm/ldm/modules/encoders/dinov2/hub/backbones.py +156 -0

- svrm/ldm/modules/encoders/dinov2/hub/utils.py +39 -0

- svrm/ldm/modules/encoders/dinov2/layers/__init__.py +11 -0

- svrm/ldm/modules/encoders/dinov2/layers/attention.py +89 -0

- svrm/ldm/modules/encoders/dinov2/layers/block.py +269 -0

- svrm/ldm/modules/encoders/dinov2/layers/dino_head.py +58 -0

- svrm/ldm/modules/encoders/dinov2/layers/drop_path.py +34 -0

- svrm/ldm/modules/encoders/dinov2/layers/layer_scale.py +27 -0

.gitattributes

CHANGED

@@ -1,35 +1,37 @@
-*.7z filter=lfs diff=lfs merge=lfs -text
-*.arrow filter=lfs diff=lfs merge=lfs -text
-*.bin filter=lfs diff=lfs merge=lfs -text
-*.bz2 filter=lfs diff=lfs merge=lfs -text
-*.ckpt filter=lfs diff=lfs merge=lfs -text
-*.ftz filter=lfs diff=lfs merge=lfs -text
-*.gz filter=lfs diff=lfs merge=lfs -text
-*.h5 filter=lfs diff=lfs merge=lfs -text
-*.joblib filter=lfs diff=lfs merge=lfs -text
-*.lfs.* filter=lfs diff=lfs merge=lfs -text
-*.mlmodel filter=lfs diff=lfs merge=lfs -text
-*.model filter=lfs diff=lfs merge=lfs -text
-*.msgpack filter=lfs diff=lfs merge=lfs -text
-*.npy filter=lfs diff=lfs merge=lfs -text
-*.npz filter=lfs diff=lfs merge=lfs -text
-*.onnx filter=lfs diff=lfs merge=lfs -text
-*.ot filter=lfs diff=lfs merge=lfs -text
-*.parquet filter=lfs diff=lfs merge=lfs -text
-*.pb filter=lfs diff=lfs merge=lfs -text
-*.pickle filter=lfs diff=lfs merge=lfs -text
-*.pkl filter=lfs diff=lfs merge=lfs -text
-*.pt filter=lfs diff=lfs merge=lfs -text
-*.pth filter=lfs diff=lfs merge=lfs -text
-*.rar filter=lfs diff=lfs merge=lfs -text
-*.safetensors filter=lfs diff=lfs merge=lfs -text
-saved_model/**/* filter=lfs diff=lfs merge=lfs -text
-*.tar.* filter=lfs diff=lfs merge=lfs -text
-*.tar filter=lfs diff=lfs merge=lfs -text
-*.tflite filter=lfs diff=lfs merge=lfs -text
-*.tgz filter=lfs diff=lfs merge=lfs -text
-*.wasm filter=lfs diff=lfs merge=lfs -text
-*.xz filter=lfs diff=lfs merge=lfs -text
-*.zip filter=lfs diff=lfs merge=lfs -text
-*.zst filter=lfs diff=lfs merge=lfs -text
-*tfevents* filter=lfs diff=lfs merge=lfs -text
+*.7z filter=lfs diff=lfs merge=lfs -text
+*.arrow filter=lfs diff=lfs merge=lfs -text
+*.bin filter=lfs diff=lfs merge=lfs -text
+*.bz2 filter=lfs diff=lfs merge=lfs -text
+*.ckpt filter=lfs diff=lfs merge=lfs -text
+*.ftz filter=lfs diff=lfs merge=lfs -text
+*.gz filter=lfs diff=lfs merge=lfs -text
+*.h5 filter=lfs diff=lfs merge=lfs -text
+*.joblib filter=lfs diff=lfs merge=lfs -text
+*.lfs.* filter=lfs diff=lfs merge=lfs -text
+*.mlmodel filter=lfs diff=lfs merge=lfs -text
+*.model filter=lfs diff=lfs merge=lfs -text
+*.msgpack filter=lfs diff=lfs merge=lfs -text
+*.npy filter=lfs diff=lfs merge=lfs -text
+*.npz filter=lfs diff=lfs merge=lfs -text
+*.onnx filter=lfs diff=lfs merge=lfs -text
+*.ot filter=lfs diff=lfs merge=lfs -text
+*.parquet filter=lfs diff=lfs merge=lfs -text
+*.pb filter=lfs diff=lfs merge=lfs -text
+*.pickle filter=lfs diff=lfs merge=lfs -text
+*.pkl filter=lfs diff=lfs merge=lfs -text
+*.pt filter=lfs diff=lfs merge=lfs -text
+*.pth filter=lfs diff=lfs merge=lfs -text
+*.rar filter=lfs diff=lfs merge=lfs -text
+*.safetensors filter=lfs diff=lfs merge=lfs -text
+saved_model/**/* filter=lfs diff=lfs merge=lfs -text
+*.tar.* filter=lfs diff=lfs merge=lfs -text
+*.tar filter=lfs diff=lfs merge=lfs -text
+*.tflite filter=lfs diff=lfs merge=lfs -text
+*.tgz filter=lfs diff=lfs merge=lfs -text
+*.wasm filter=lfs diff=lfs merge=lfs -text
+*.xz filter=lfs diff=lfs merge=lfs -text
+*.zip filter=lfs diff=lfs merge=lfs -text
+*.zst filter=lfs diff=lfs merge=lfs -text
+*tfevents* filter=lfs diff=lfs merge=lfs -text
+assets/teaser.png filter=lfs diff=lfs merge=lfs -text
+demos/example_003.png filter=lfs diff=lfs merge=lfs -text

README.md

CHANGED

@@ -1,14 +1,11 @@
 ---
-title:
-emoji:
+title: Hunyuan3D-1.0
+emoji: 😻
 colorFrom: purple
 colorTo: red
 sdk: gradio
 sdk_version: 4.42.0
 app_file: app.py
 pinned: false
-
-
----
-
-Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
+short_description: Text-to-3D and Image-to-3D Generation
+---

app.py

ADDED

@@ -0,0 +1,284 @@
+import os
+import warnings
+from huggingface_hub import hf_hub_download
+import gradio as gr
+from glob import glob
+import shutil
+import torch
+import numpy as np
+from PIL import Image
+from einops import rearrange
+import argparse
+
+# Suppress warnings
+warnings.simplefilter('ignore', category=UserWarning)
+warnings.simplefilter('ignore', category=FutureWarning)
+warnings.simplefilter('ignore', category=DeprecationWarning)
+
+def download_models():
+    # Create weights directory if it doesn't exist
+    os.makedirs("weights", exist_ok=True)
+    os.makedirs("weights/hunyuanDiT", exist_ok=True)
+
+    # Download Hunyuan3D-1 model
+    try:
+        hf_hub_download(
+            repo_id="tencent/Hunyuan3D-1",
+            local_dir="./weights",
+            resume_download=True
+        )
+        print("Successfully downloaded Hunyuan3D-1 model")
+    except Exception as e:
+        print(f"Error downloading Hunyuan3D-1: {e}")
+
+    # Download HunyuanDiT model
+    try:
+        hf_hub_download(
+            repo_id="Tencent-Hunyuan/HunyuanDiT-v1.1-Diffusers-Distilled",
+            local_dir="./weights/hunyuanDiT",
+            resume_download=True
+        )
+        print("Successfully downloaded HunyuanDiT model")
+    except Exception as e:
+        print(f"Error downloading HunyuanDiT: {e}")
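Note on `download_models()`: in the public `huggingface_hub` API, `hf_hub_download` fetches a single file and takes a required `filename` argument, so the two calls in this function would likely raise a `TypeError` that the `except` branches print and then swallow. Below is a minimal sketch of a whole-repository download, assuming `snapshot_download` is what was intended. `fetch_repo` and its `downloader` parameter are hypothetical names introduced for illustration and are not part of the commit.

```python
import os
from typing import Callable, Optional


def fetch_repo(repo_id: str, local_dir: str,
               downloader: Optional[Callable[..., str]] = None) -> bool:
    """Download every file of `repo_id` into `local_dir`; return True on success."""
    if downloader is None:
        # Imported lazily so the helper can be exercised without the library.
        from huggingface_hub import snapshot_download
        downloader = snapshot_download

    os.makedirs(local_dir, exist_ok=True)
    try:
        # snapshot_download mirrors the whole repository, unlike hf_hub_download.
        downloader(repo_id=repo_id, local_dir=local_dir)
    except Exception as exc:
        print(f"Error downloading {repo_id}: {exc}")
        return False
    print(f"Successfully downloaded {repo_id}")
    return True
```

Called as `fetch_repo("tencent/Hunyuan3D-1", "./weights")`, this would correspond to the first download in the diff. Returning a boolean lets the caller stop early instead of starting the app with missing weights.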

+
+# Download models before starting the app
+download_models()
+
+# Parse arguments
+parser = argparse.ArgumentParser()
+parser.add_argument("--use_lite", default=False, action="store_true")
+parser.add_argument("--mv23d_cfg_path", default="./svrm/configs/svrm.yaml", type=str)
+parser.add_argument("--mv23d_ckt_path", default="weights/svrm/svrm.safetensors", type=str)
+parser.add_argument("--text2image_path", default="weights/hunyuanDiT", type=str)
+parser.add_argument("--save_memory", default=False, action="store_true")
+parser.add_argument("--device", default="cuda:0", type=str)
+args = parser.parse_args()
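Note on the argument parser: the `store_true` flags default to `False` and become `True` only when the flag is passed, so a Space launched with no arguments runs the standard (non-lite) pipeline on `cuda:0`. A small sketch with a reduced copy of the parser; `build_parser` is a hypothetical helper, not in the commit, and explicit argument lists are used so the example does not read `sys.argv`.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Reduced copy of the app.py parser, limited to three of its options."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--use_lite", default=False, action="store_true")
    parser.add_argument("--save_memory", default=False, action="store_true")
    parser.add_argument("--device", default="cuda:0", type=str)
    return parser


# No flags: every store_true option stays False.
defaults = build_parser().parse_args([])
# Passing the flag flips it; valued options take the next token.
lite_cpu = build_parser().parse_args(["--use_lite", "--device", "cpu"])
print(defaults.use_lite, defaults.device)
print(lite_cpu.use_lite, lite_cpu.device)
```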

+
+# Constants
+CONST_PORT = 8080
+CONST_MAX_QUEUE = 1
+CONST_SERVER = '0.0.0.0'
+
+CONST_HEADER = '''
+<h2><b>Official 🤗 Gradio Demo</b></h2>
+<h2><a href='https://github.com/tencent/Hunyuan3D-1' target='_blank'>
+<b>Hunyuan3D-1.0: A Unified Framework for Text-to-3D and Image-to-3D Generation</b></a></h2>
+'''
+
+# Helper functions
+def get_example_img_list():
+    print('Loading example img list ...')
+    return sorted(glob('./demos/example_*.png'))
+
+def get_example_txt_list():
+    print('Loading example txt list ...')
+    txt_list = []
+    for line in open('./demos/example_list.txt'):
+        txt_list.append(line.strip())
+    return txt_list
+
+example_is = get_example_img_list()
+example_ts = get_example_txt_list()
+
+# Import required workers
+from infer import seed_everything, save_gif
+from infer import Text2Image, Removebg, Image2Views, Views2Mesh, GifRenderer
+
+# Initialize workers
+worker_xbg = Removebg()
+print(f"loading {args.text2image_path}")
+worker_t2i = Text2Image(
+    pretrain=args.text2image_path,
+    device=args.device,
+    save_memory=args.save_memory
+)
+worker_i2v = Image2Views(
+    use_lite=args.use_lite,
+    device=args.device
+)
+worker_v23 = Views2Mesh(
+    args.mv23d_cfg_path,
+    args.mv23d_ckt_path,
+    use_lite=args.use_lite,
+    device=args.device
+)
+worker_gif = GifRenderer(args.device)
+
+# Pipeline stages
+def stage_0_t2i(text, image, seed, step):
+    os.makedirs('./outputs/app_output', exist_ok=True)
+    exists = set(int(_) for _ in os.listdir('./outputs/app_output') if not _.startswith("."))
+    cur_id = min(set(range(30)) - exists) if len(exists) < 30 else 0
+
+    if os.path.exists(f"./outputs/app_output/{(cur_id + 1) % 30}"):
+        shutil.rmtree(f"./outputs/app_output/{(cur_id + 1) % 30}")
+    save_folder = f'./outputs/app_output/{cur_id}'
+    os.makedirs(save_folder, exist_ok=True)
+
+    dst = save_folder + '/img.png'
+
+    if not text:
+        if image is None:
+            return dst, save_folder
+        image.save(dst)
+        return dst, save_folder
+
+    image = worker_t2i(text, seed, step)
+    image.save(dst)
+    dst = worker_xbg(image, save_folder)
+    return dst, save_folder
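Note on `stage_0_t2i`: output folders are managed as a ring of 30 numbered slots. The lowest unused number is taken, and the folder one position ahead is deleted so that a free slot always exists for the next request. A standalone sketch of that selection rule; `pick_slot` is a hypothetical helper written for illustration, whereas the commit inlines this logic and parses folder names with `int()`, which would raise on a non-numeric entry.

```python
from typing import Iterable, Tuple


def pick_slot(existing: Iterable[int], capacity: int = 30) -> Tuple[int, int]:
    """Return (slot to write into, slot to clear) for a ring of output folders."""
    used = set(existing)
    free = set(range(capacity)) - used
    # Lowest free slot, or slot 0 once every slot is taken.
    cur_id = min(free) if len(used) < capacity else 0
    # The slot after the current one is cleared so a gap exists next time.
    evict_id = (cur_id + 1) % capacity
    return cur_id, evict_id


print(pick_slot([]))          # empty output directory
print(pick_slot([0, 1, 2]))   # three earlier runs
print(pick_slot(range(29)))   # last free slot, wraps the eviction to 0
```

Because the next slot is cleared on every request, at most 29 earlier results survive at any time, and results are overwritten in roughly first-in, first-out order.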

+
+def stage_1_xbg(image, save_folder):
+    if isinstance(image, str):
+        image = Image.open(image)
+    dst = save_folder + '/img_nobg.png'
+    rgba = worker_xbg(image)
+    rgba.save(dst)
+    return dst
+
+def stage_2_i2v(image, seed, step, save_folder):
+    if isinstance(image, str):
+        image = Image.open(image)
+    gif_dst = save_folder + '/views.gif'
+    res_img, pils = worker_i2v(image, seed, step)
+    save_gif(pils, gif_dst)
+    views_img, cond_img = res_img[0], res_img[1]
+    img_array = np.asarray(views_img, dtype=np.uint8)
+    show_img = rearrange(img_array, '(n h) (m w) c -> (n m) h w c', n=3, m=2)
+    show_img = show_img[worker_i2v.order, ...]
+    show_img = rearrange(show_img, '(n m) h w c -> (n h) (m w) c', n=2, m=3)
+    show_img = Image.fromarray(show_img)
+    return views_img, cond_img, show_img
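Note on `stage_2_i2v`: the two `rearrange` calls cut the 3×2 multi-view sheet into six tiles, reorder them with `worker_i2v.order`, and paste them back as a 2×3 sheet for display. The sketch below reproduces the same index manipulation with NumPy `reshape` and `transpose` only, as an equivalent under the stated patterns rather than the commit's code. `regrid` is a hypothetical name, and the order `[0, 2, 4, 5, 3, 1]` is the one `Image2Views` uses for the standard pipeline.

```python
import numpy as np


def regrid(views: np.ndarray, order, rows_in: int = 3, cols_in: int = 2,
           rows_out: int = 2, cols_out: int = 3) -> np.ndarray:
    """Split an image grid into tiles, reorder them, and lay them out again."""
    height, width, channels = views.shape
    h, w = height // rows_in, width // cols_in
    # '(n h) (m w) c -> (n m) h w c': tiles in row-major order of the input grid.
    tiles = (views.reshape(rows_in, h, cols_in, w, channels)
                  .transpose(0, 2, 1, 3, 4)
                  .reshape(rows_in * cols_in, h, w, channels))
    tiles = tiles[list(order)]
    # '(n m) h w c -> (n h) (m w) c': paste tiles row-major into the output grid.
    return (tiles.reshape(rows_out, cols_out, h, w, channels)
                 .transpose(0, 2, 1, 3, 4)
                 .reshape(rows_out * h, cols_out * w, channels))


# A 768x512 RGB sheet (six 256x256 views) becomes a 512x768 sheet.
sheet = np.zeros((768, 512, 3), dtype=np.uint8)
print(regrid(sheet, [0, 2, 4, 5, 3, 1]).shape)
```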

+
+def stage_3_v23(views_pil, cond_pil, seed, save_folder, target_face_count=30000,
+                do_texture_mapping=True, do_render=True):
+    do_texture_mapping = do_texture_mapping or do_render
+    obj_dst = save_folder + '/mesh_with_colors.obj'
+    glb_dst = save_folder + '/mesh.glb'
+    worker_v23(
+        views_pil,
+        cond_pil,
+        seed=seed,
+        save_folder=save_folder,
+        target_face_count=target_face_count,
+        do_texture_mapping=do_texture_mapping
+    )
+    return obj_dst, glb_dst
+
+def stage_4_gif(obj_dst, save_folder, do_render_gif=True):
+    if not do_render_gif:
+        return None
+    gif_dst = save_folder + '/output.gif'
+    worker_gif(
+        save_folder + '/mesh.obj',
+        gif_dst_path=gif_dst
+    )
+    return gif_dst
+
+# Gradio Interface
+with gr.Blocks() as demo:
+    gr.Markdown(CONST_HEADER)
+
+    with gr.Row(variant="panel"):
+        with gr.Column(scale=2):
+            with gr.Tab("Text to 3D"):
+                with gr.Column():
+                    text = gr.TextArea('一只黑白相间的熊猫在白色背景上居中坐着,呈现出卡通风格和可爱氛围。',
+                                       lines=1, max_lines=10, label='Input text')
+                    with gr.Row():
+                        textgen_seed = gr.Number(value=0, label="T2I seed", precision=0)
+                        textgen_step = gr.Number(value=25, label="T2I step", precision=0)
+                        textgen_SEED = gr.Number(value=0, label="Gen seed", precision=0)
+                        textgen_STEP = gr.Number(value=50, label="Gen step", precision=0)
+                        textgen_max_faces = gr.Number(value=90000, label="max number of faces", precision=0)
+
+                    with gr.Row():
+                        textgen_do_texture_mapping = gr.Checkbox(label="texture mapping", value=False)
+                        textgen_do_render_gif = gr.Checkbox(label="Render gif", value=False)
+                        textgen_submit = gr.Button("Generate", variant="primary")
+
+                    gr.Examples(examples=example_ts, inputs=[text], label="Txt examples")
+
+            with gr.Tab("Image to 3D"):
+                with gr.Column():
+                    input_image = gr.Image(label="Input image", width=256, height=256,
+                                           type="pil", image_mode="RGBA", sources="upload")
+                    with gr.Row():
+                        imggen_SEED = gr.Number(value=0, label="Gen seed", precision=0)
+                        imggen_STEP = gr.Number(value=50, label="Gen step", precision=0)
+                        imggen_max_faces = gr.Number(value=90000, label="max number of faces", precision=0)
+
+                    with gr.Row():
+                        imggen_do_texture_mapping = gr.Checkbox(label="texture mapping", value=False)
+                        imggen_do_render_gif = gr.Checkbox(label="Render gif", value=False)
+                        imggen_submit = gr.Button("Generate", variant="primary")
+
+                    gr.Examples(examples=example_is, inputs=[input_image], label="Img examples")
+
+        with gr.Column(scale=3):
+            with gr.Tab("rembg image"):
+                rem_bg_image = gr.Image(label="No background image", width=256, height=256,
+                                        type="pil", image_mode="RGBA")
+
+            with gr.Tab("Multi views"):
+                result_image = gr.Image(label="Multi views", type="pil")
+            with gr.Tab("Obj"):
+                result_3dobj = gr.Model3D(label="Output obj")
+            with gr.Tab("Glb"):
+                result_3dglb = gr.Model3D(label="Output glb")
+            with gr.Tab("GIF"):
+                result_gif = gr.Image(label="Rendered GIF")
+
+    # States
+    none = gr.State(None)
+    save_folder = gr.State()
+    cond_image = gr.State()
+    views_image = gr.State()
+    text_image = gr.State()
+
+    # Event handlers
+    textgen_submit.click(
+        fn=stage_0_t2i,
+        inputs=[text, none, textgen_seed, textgen_step],
+        outputs=[rem_bg_image, save_folder],
+    ).success(
+        fn=stage_2_i2v,
+        inputs=[rem_bg_image, textgen_SEED, textgen_STEP, save_folder],
+        outputs=[views_image, cond_image, result_image],
+    ).success(
+        fn=stage_3_v23,
+        inputs=[views_image, cond_image, textgen_SEED, save_folder, textgen_max_faces,
+                textgen_do_texture_mapping, textgen_do_render_gif],
+        outputs=[result_3dobj, result_3dglb],
+    ).success(
+        fn=stage_4_gif,
+        inputs=[result_3dglb, save_folder, textgen_do_render_gif],
+        outputs=[result_gif],
+    )
+
+    imggen_submit.click(
+        fn=stage_0_t2i,
+        inputs=[none, input_image, textgen_seed, textgen_step],
+        outputs=[text_image, save_folder],
+    ).success(
+        fn=stage_1_xbg,
+        inputs=[text_image, save_folder],
+        outputs=[rem_bg_image],
+    ).success(
+        fn=stage_2_i2v,
+        inputs=[rem_bg_image, imggen_SEED, imggen_STEP, save_folder],
+        outputs=[views_image, cond_image, result_image],
+    ).success(
+        fn=stage_3_v23,
+        inputs=[views_image, cond_image, imggen_SEED, save_folder, imggen_max_faces,
+                imggen_do_texture_mapping, imggen_do_render_gif],
+        outputs=[result_3dobj, result_3dglb],
+    ).success(
+        fn=stage_4_gif,
+        inputs=[result_3dglb, save_folder, imggen_do_render_gif],
+        outputs=[result_gif],
+    )
+
+demo.queue(max_size=CONST_MAX_QUEUE)
+demo.launch(server_name=CONST_SERVER, server_port=CONST_PORT)
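Note on the event handlers in app.py: each button starts a chain built with `.click(...).success(...)`. In Gradio, a `.success()` listener runs only if the preceding event finished without raising, so a failure in one stage stops the later ones. The sketch below is a plain-Python analogue of that control flow, not Gradio code; `run_chain` and the toy stages are hypothetical names used only to illustrate the behaviour.

```python
from typing import Any, Callable, List, Sequence, Tuple


def run_chain(stages: Sequence[Callable[[Any], Any]],
              value: Any) -> Tuple[Any, List[str]]:
    """Run stages in order, feeding each result forward; stop at the first error."""
    completed: List[str] = []
    for stage in stages:
        try:
            value = stage(value)
        except Exception as exc:
            # Later stages are skipped, as with chained .success() listeners.
            print(f"{stage.__name__} failed: {exc}")
            break
        completed.append(stage.__name__)
    return value, completed


def to_views(x: int) -> int:
    return x + 1


def to_mesh(x: int) -> int:
    raise ValueError("mesh reconstruction failed")


def to_gif(x: int) -> int:
    return x * 2


print(run_chain([to_views, to_gif], 1))
print(run_chain([to_views, to_mesh, to_gif], 1))
```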

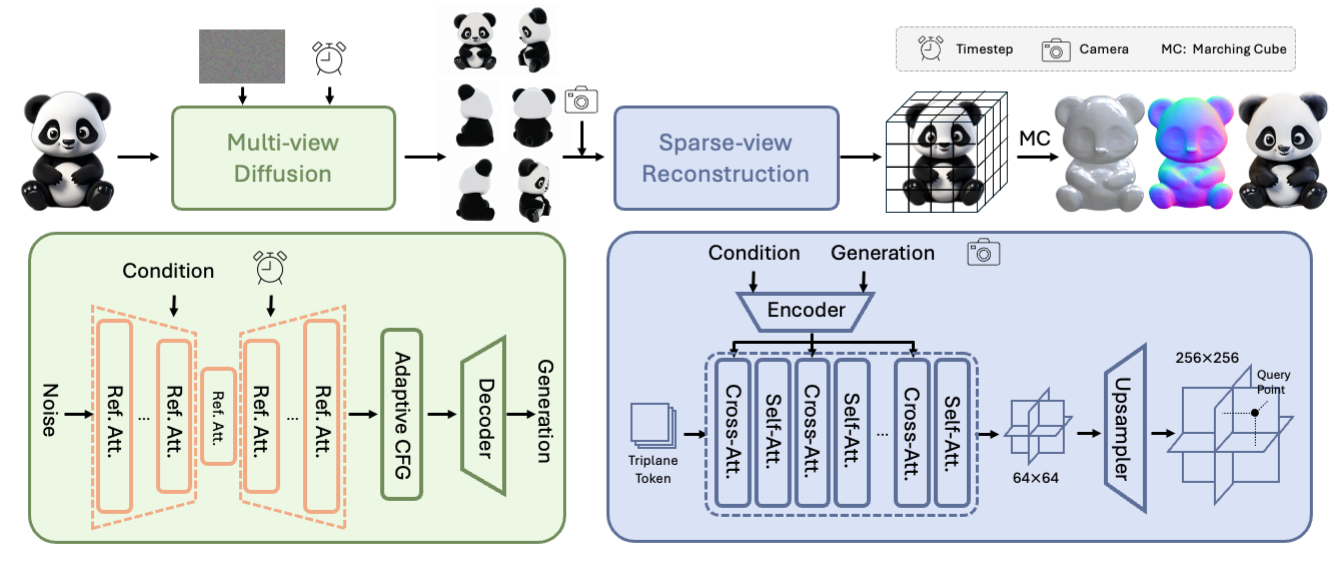

assets/logo.png

ADDED

|

assets/overview_3.png

ADDED

|

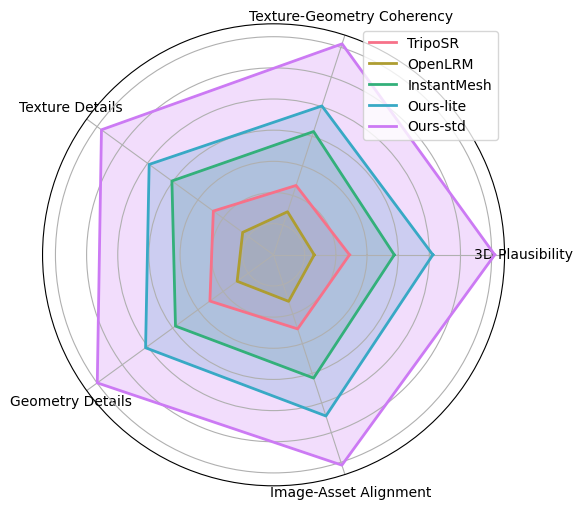

assets/radar.png

ADDED

|

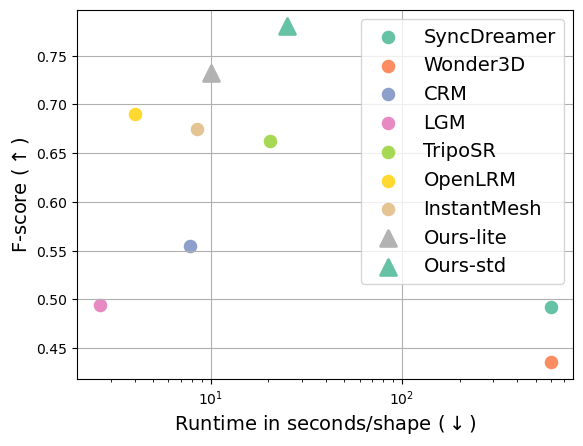

assets/runtime.png

ADDED

|

assets/teaser.png

ADDED

|

Git LFS Details

|

demos/example_000.png

ADDED

|

demos/example_001.png

ADDED

|

demos/example_002.png

ADDED

|

demos/example_003.png

ADDED

|

Git LFS Details

|

demos/example_list.txt

ADDED

@@ -0,0 +1,2 @@
+a pot of green plants grows in a red flower pot.
+a lovely rabbit eating carrots

infer/__init__.py

ADDED

@@ -0,0 +1,28 @@
+# Open Source Model Licensed under the Apache License Version 2.0 and Other Licenses of the Third-Party Components therein:
+# The below Model in this distribution may have been modified by THL A29 Limited ("Tencent Modifications"). All Tencent Modifications are Copyright (C) 2024 THL A29 Limited.
+
+# Copyright (C) 2024 THL A29 Limited, a Tencent company. All rights reserved.
+# The below software and/or models in this distribution may have been
+# modified by THL A29 Limited ("Tencent Modifications").
+# All Tencent Modifications are Copyright (C) THL A29 Limited.
+
+# Hunyuan 3D is licensed under the TENCENT HUNYUAN NON-COMMERCIAL LICENSE AGREEMENT
+# except for the third-party components listed below.
+# Hunyuan 3D does not impose any additional limitations beyond what is outlined
+# in the repsective licenses of these third-party components.
+# Users must comply with all terms and conditions of original licenses of these third-party
+# components and must ensure that the usage of the third party components adheres to
+# all relevant laws and regulations.
+
+# For avoidance of doubts, Hunyuan 3D means the large language models and
+# their software and algorithms, including trained model weights, parameters (including
+# optimizer states), machine-learning model code, inference-enabling code, training-enabling code,
+# fine-tuning enabling code and other elements of the foregoing made publicly available
+# by Tencent in accordance with TENCENT HUNYUAN COMMUNITY LICENSE AGREEMENT.
+
+from .utils import seed_everything, timing_decorator, auto_amp_inference
+from .rembg import Removebg
+from .text_to_image import Text2Image
+from .image_to_views import Image2Views, save_gif
+from .views_to_mesh import Views2Mesh
+from .gif_render import GifRenderer

infer/gif_render.py

ADDED

@@ -0,0 +1,55 @@
+# Open Source Model Licensed under the Apache License Version 2.0 and Other Licenses of the Third-Party Components therein:
+# The below Model in this distribution may have been modified by THL A29 Limited ("Tencent Modifications"). All Tencent Modifications are Copyright (C) 2024 THL A29 Limited.
+
+# Copyright (C) 2024 THL A29 Limited, a Tencent company. All rights reserved.
+# The below software and/or models in this distribution may have been
+# modified by THL A29 Limited ("Tencent Modifications").
+# All Tencent Modifications are Copyright (C) THL A29 Limited.
+
+# Hunyuan 3D is licensed under the TENCENT HUNYUAN NON-COMMERCIAL LICENSE AGREEMENT
+# except for the third-party components listed below.
+# Hunyuan 3D does not impose any additional limitations beyond what is outlined
+# in the repsective licenses of these third-party components.
+# Users must comply with all terms and conditions of original licenses of these third-party
+# components and must ensure that the usage of the third party components adheres to
+# all relevant laws and regulations.
+
+# For avoidance of doubts, Hunyuan 3D means the large language models and
+# their software and algorithms, including trained model weights, parameters (including
+# optimizer states), machine-learning model code, inference-enabling code, training-enabling code,
+# fine-tuning enabling code and other elements of the foregoing made publicly available
+# by Tencent in accordance with TENCENT HUNYUAN COMMUNITY LICENSE AGREEMENT.
+
+from svrm.ldm.vis_util import render
+from .utils import seed_everything, timing_decorator
+
+class GifRenderer():
+    '''
+    render frame(s) of mesh using pytorch3d
+    '''
+    def __init__(self, device="cuda:0"):
+        self.device = device
+
+    @timing_decorator("gif render")
+    def __call__(
+        self,
+        obj_filename,
+        elev=0,
+        azim=0,
+        resolution=512,
+        gif_dst_path='',
+        n_views=120,
+        fps=30,
+        rgb=True
+    ):
+        render(
+            obj_filename,
+            elev=elev,
+            azim=azim,
+            resolution=resolution,
+            gif_dst_path=gif_dst_path,
+            n_views=n_views,
+            fps=fps,
+            device=self.device,
+            rgb=rgb
+        )

infer/image_to_views.py

ADDED

@@ -0,0 +1,81 @@
+# Open Source Model Licensed under the Apache License Version 2.0 and Other Licenses of the Third-Party Components therein:
+# The below Model in this distribution may have been modified by THL A29 Limited ("Tencent Modifications"). All Tencent Modifications are Copyright (C) 2024 THL A29 Limited.
+
+# Copyright (C) 2024 THL A29 Limited, a Tencent company. All rights reserved.
+# The below software and/or models in this distribution may have been
+# modified by THL A29 Limited ("Tencent Modifications").
+# All Tencent Modifications are Copyright (C) THL A29 Limited.
+
+# Hunyuan 3D is licensed under the TENCENT HUNYUAN NON-COMMERCIAL LICENSE AGREEMENT
+# except for the third-party components listed below.
+# Hunyuan 3D does not impose any additional limitations beyond what is outlined
+# in the repsective licenses of these third-party components.
+# Users must comply with all terms and conditions of original licenses of these third-party
+# components and must ensure that the usage of the third party components adheres to
+# all relevant laws and regulations.
+
+# For avoidance of doubts, Hunyuan 3D means the large language models and
+# their software and algorithms, including trained model weights, parameters (including
+# optimizer states), machine-learning model code, inference-enabling code, training-enabling code,
+# fine-tuning enabling code and other elements of the foregoing made publicly available
+# by Tencent in accordance with TENCENT HUNYUAN COMMUNITY LICENSE AGREEMENT.
+
+import os
+import time
+import torch
+import random
+import numpy as np
+from PIL import Image
+from einops import rearrange
+from PIL import Image, ImageSequence
+
+from .utils import seed_everything, timing_decorator, auto_amp_inference
+from .utils import get_parameter_number, set_parameter_grad_false
+from mvd.hunyuan3d_mvd_std_pipeline import HunYuan3D_MVD_Std_Pipeline
+from mvd.hunyuan3d_mvd_lite_pipeline import Hunyuan3d_MVD_Lite_Pipeline
+
+
+def save_gif(pils, save_path, df=False):
+    # save a list of PIL.Image to gif
+    spf = 4000 / len(pils)
+    os.makedirs(os.path.dirname(save_path), exist_ok=True)
+    pils[0].save(save_path, format="GIF", save_all=True, append_images=pils[1:], duration=spf, loop=0)
+    return save_path

| 44 |

+

|

| 45 |

+

|

| 46 |

+

class Image2Views():

|

| 47 |

+

def __init__(self, device="cuda:0", use_lite=False):

|

| 48 |

+

self.device = device

|

| 49 |

+

if use_lite:

|

| 50 |

+

self.pipe = Hunyuan3d_MVD_Lite_Pipeline.from_pretrained(

|

| 51 |

+

"./weights/mvd_lite",

|

| 52 |

+

torch_dtype = torch.float16,

|

| 53 |

+

use_safetensors = True,

|

| 54 |

+

)

|

| 55 |

+

else:

|

| 56 |

+

self.pipe = HunYuan3D_MVD_Std_Pipeline.from_pretrained(

|

| 57 |

+

"./weights/mvd_std",

|

| 58 |

+

torch_dtype = torch.float16,

|

| 59 |

+

use_safetensors = True,

|

| 60 |

+

)

|

| 61 |

+

self.pipe = self.pipe.to(device)

|

| 62 |

+

self.order = [0, 1, 2, 3, 4, 5] if use_lite else [0, 2, 4, 5, 3, 1]

|

| 63 |

+

set_parameter_grad_false(self.pipe.unet)

|

| 64 |

+

print('image2views unet model', get_parameter_number(self.pipe.unet))

|

| 65 |

+

|

| 66 |

+

@torch.no_grad()

|

| 67 |

+

@timing_decorator("image to views")

|

| 68 |

+

@auto_amp_inference

|

| 69 |

+

def __call__(self, pil_img, seed=0, steps=50, guidance_scale=2.0, guidance_curve=lambda t:2.0):

|

| 70 |

+

seed_everything(seed)

|

| 71 |

+

generator = torch.Generator(device=self.device)

|

| 72 |

+

res_img = self.pipe(pil_img,

|

| 73 |

+

num_inference_steps=steps,

|

| 74 |

+

guidance_scale=guidance_scale,

|

| 75 |

+

guidance_curve=guidance_curve,

|

| 76 |

+

generat=generator).images

|

| 77 |

+

show_image = rearrange(np.asarray(res_img[0], dtype=np.uint8), '(n h) (m w) c -> (n m) h w c', n=3, m=2)

|

| 78 |

+

pils = [res_img[1]]+[Image.fromarray(show_image[idx]) for idx in self.order]

|

| 79 |

+

torch.cuda.empty_cache()

|

| 80 |

+

return res_img, pils

|

| 81 |

+

|
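The `rearrange` call in `Image2Views.__call__` cuts one tiled image (3 rows by 2 columns of views) into six separate views, which `self.order` then reorders. The index arithmetic can be sketched in plain Python as below. This is an illustration only: `split_grid` and the toy grid are hypothetical names that do not exist in the repository, and nested lists stand in for the NumPy array.

```python
def split_grid(grid, n, m):
    """Split a (n*h) x (m*w) grid of pixels into n*m tiles in row-major order.

    Mirrors the einops pattern '(n h) (m w) c -> (n m) h w c' for nested lists.
    """
    h = len(grid) // n
    w = len(grid[0]) // m
    tiles = []
    for i in range(n):          # tile row
        for j in range(m):      # tile column
            tile = [row[j * w:(j + 1) * w] for row in grid[i * h:(i + 1) * h]]
            tiles.append(tile)
    return tiles


# Toy grid: 3 x 2 tiles of 2 x 2 pixels; each pixel stores the index of its tile.
grid = [[(r // 2) * 2 + (c // 2) for c in range(4)] for r in range(6)]
tiles = split_grid(grid, n=3, m=2)

std_order = [0, 2, 4, 5, 3, 1]            # order used when use_lite is False
reordered = [tiles[idx] for idx in std_order]
print([t[0][0] for t in reordered])
```

Tile `k` of the output comes from grid row `k // 2` and grid column `k % 2`, so the standard order `[0, 2, 4, 5, 3, 1]` walks down the left column and back up the right one.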

infer/rembg.py

ADDED

@@ -0,0 +1,26 @@

+from rembg import remove, new_session
+from .utils import timing_decorator
+
+class Removebg():
+    def __init__(self, name="u2net"):
+        '''
+            name: name of the rembg model used to create the session
+        '''
+        self.session = new_session(name)
+
+    @timing_decorator("remove background")
+    def __call__(self, rgb_img, force=False):
+        '''
+        inputs:
+            rgb_img: PIL.Image, with RGB mode expected
+            force:   bool, if True an RGBA input is converted to RGB and processed again; if False it is returned as is
+        return:
+            rgba_img: PIL.Image with RGBA mode
+        '''
+        if rgb_img.mode == "RGBA":
+            if force:
+                rgb_img = rgb_img.convert("RGB")
+            else:
+                return rgb_img
+        rgba_img = remove(rgb_img, session=self.session)
+        return rgba_img
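The branch structure of `Removebg.__call__` is easy to misread, so here is a small sketch of the decision it makes for each input mode. `plan_background_removal` is a hypothetical helper written for this note. It returns a label instead of calling rembg, so it runs without any model download.

```python
def plan_background_removal(mode, force=False):
    """Return the action Removebg.__call__ takes for a given PIL image mode."""
    if mode == "RGBA":
        if force:
            # Alpha channel is discarded, then the background is removed again.
            return "convert_to_rgb_then_remove"
        # Input already has an alpha channel and is trusted as is.
        return "return_unchanged"
    # Any non-RGBA input goes straight to rembg.
    return "remove"


for mode, force in [("RGB", False), ("RGBA", False), ("RGBA", True)]:
    print(mode, force, plan_background_removal(mode, force))
```

In other words, `force` only matters for RGBA inputs; for every other mode the background is always removed.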

infer/text_to_image.py

ADDED

@@ -0,0 +1,80 @@

+# Open Source Model Licensed under the Apache License Version 2.0 and Other Licenses of the Third-Party Components therein:
+# The below Model in this distribution may have been modified by THL A29 Limited ("Tencent Modifications"). All Tencent Modifications are Copyright (C) 2024 THL A29 Limited.
+
+# Copyright (C) 2024 THL A29 Limited, a Tencent company. All rights reserved.
+# The below software and/or models in this distribution may have been
+# modified by THL A29 Limited ("Tencent Modifications").
+# All Tencent Modifications are Copyright (C) THL A29 Limited.
+
+# Hunyuan 3D is licensed under the TENCENT HUNYUAN NON-COMMERCIAL LICENSE AGREEMENT
+# except for the third-party components listed below.
+# Hunyuan 3D does not impose any additional limitations beyond what is outlined
+# in the respective licenses of these third-party components.
+# Users must comply with all terms and conditions of original licenses of these third-party
+# components and must ensure that the usage of the third party components adheres to
+# all relevant laws and regulations.
+
+# For avoidance of doubts, Hunyuan 3D means the large language models and
+# their software and algorithms, including trained model weights, parameters (including
+# optimizer states), machine-learning model code, inference-enabling code, training-enabling code,
+# fine-tuning enabling code and other elements of the foregoing made publicly available
+# by Tencent in accordance with TENCENT HUNYUAN COMMUNITY LICENSE AGREEMENT.
+
+import torch
+from .utils import seed_everything, timing_decorator, auto_amp_inference
+from .utils import get_parameter_number, set_parameter_grad_false
+from diffusers import HunyuanDiTPipeline, AutoPipelineForText2Image
+
+class Text2Image():
+    def __init__(self, pretrain="weights/hunyuanDiT", device="cuda:0", save_memory=False):
+        '''
+            save_memory: set to True when GPU memory is low; the pipeline is then moved to the GPU only for the duration of a call
+        '''
+        self.save_memory = save_memory
+        self.device = device
+        self.pipe = AutoPipelineForText2Image.from_pretrained(
+            pretrain,
+            torch_dtype = torch.float16,
+            enable_pag = True,
+            pag_applied_layers = ["blocks.(16|17|18|19)"]
+        )
+        set_parameter_grad_false(self.pipe.transformer)
+        print('text2image transformer model', get_parameter_number(self.pipe.transformer))
+        if not save_memory:
+            self.pipe = self.pipe.to(device)
+        self.neg_txt = ("文本,特写,裁剪,出框,最差质量,低质量,JPEG伪影,PGLY,重复,病态,残缺,多余的手指,变异的手,"  # text, close-up, cropped, out of frame, worst quality, low quality, JPEG artifacts, PGLY, duplicate, morbid, mutilated, extra fingers, mutated hands,
+                        "画得不好的手,画得不好的脸,变异,畸形,模糊,脱水,糟糕的解剖学,糟糕的比例,多余的肢体,克隆的脸,"  # poorly drawn hands, poorly drawn face, mutation, deformed, blurry, dehydrated, bad anatomy, bad proportions, extra limbs, cloned face,
+                        "毁容,恶心的比例,畸形的肢体,缺失的手臂,缺失的腿,额外的手臂,额外的腿,融合的手指,手指太多,长脖子")  # disfigured, gross proportions, malformed limbs, missing arms, missing legs, extra arms, extra legs, fused fingers, too many fingers, long neck
+
+    @torch.no_grad()
+    @timing_decorator('text to image')
+    @auto_amp_inference
+    def __call__(self, *args, **kwargs):
+        if self.save_memory:
+            self.pipe = self.pipe.to(self.device)
+            torch.cuda.empty_cache()
+            res = self.call(*args, **kwargs)
+            self.pipe = self.pipe.to("cpu")
+        else:
+            res = self.call(*args, **kwargs)
+        torch.cuda.empty_cache()
+        return res
+
+    def call(self, prompt, seed=0, steps=25):
+        '''
+        inputs:
+            prompt: str
+            seed: int
+            steps: int
+        return:
+            rgb: PIL.Image
+        '''
+        prompt = prompt + ",白色背景,3D风格,最佳质量"  # appends ", white background, 3D style, best quality"
+        seed_everything(seed)
+        generator = torch.Generator(device=self.device)
+        if seed is not None: generator = generator.manual_seed(int(seed))
+        rgb = self.pipe(prompt=prompt, negative_prompt=self.neg_txt, num_inference_steps=steps,
+            pag_scale=1.3, width=1024, height=1024, generator=generator, return_dict=False)[0][0]
+        torch.cuda.empty_cache()
+        return rgb
+
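The `save_memory` path in `Text2Image.__call__` moves the pipeline to the GPU for one call and back to the CPU afterwards. A minimal sketch of that offload pattern follows. `FakePipe` and `OffloadedRunner` are hypothetical stand-ins that only track a device string, so the example runs without torch or a GPU. The `try/finally` is an addition that the code in the diff does not have.

```python
class FakePipe:
    """Stand-in for a diffusers pipeline; it only tracks which device it is on."""

    def __init__(self):
        self.device = "cpu"

    def to(self, device):
        self.device = device
        return self

    def __call__(self, prompt):
        return f"{prompt} rendered on {self.device}"


class OffloadedRunner:
    def __init__(self, pipe, device="cuda:0", save_memory=True):
        self.pipe = pipe
        self.device = device
        self.save_memory = save_memory
        if not save_memory:
            # Plenty of memory: keep the pipeline resident on the device.
            self.pipe = self.pipe.to(device)

    def __call__(self, prompt):
        if not self.save_memory:
            return self.pipe(prompt)
        self.pipe = self.pipe.to(self.device)
        try:
            return self.pipe(prompt)
        finally:
            # Move the weights back even if the call raises.
            self.pipe = self.pipe.to("cpu")


runner = OffloadedRunner(FakePipe())
result = runner("a chair")
print(result)
print(runner.pipe.device)
```

The trade-off is a host-to-device transfer on every call in exchange for freeing GPU memory between calls, which can matter when several stages share one device.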

infer/utils.py

ADDED

@@ -0,0 +1,77 @@

+# Open Source Model Licensed under the Apache License Version 2.0 and Other Licenses of the Third-Party Components therein:
+# The below Model in this distribution may have been modified by THL A29 Limited ("Tencent Modifications"). All Tencent Modifications are Copyright (C) 2024 THL A29 Limited.
+
+# Copyright (C) 2024 THL A29 Limited, a Tencent company. All rights reserved.
+# The below software and/or models in this distribution may have been
+# modified by THL A29 Limited ("Tencent Modifications").
+# All Tencent Modifications are Copyright (C) THL A29 Limited.
+
+# Hunyuan 3D is licensed under the TENCENT HUNYUAN NON-COMMERCIAL LICENSE AGREEMENT
+# except for the third-party components listed below.
+# Hunyuan 3D does not impose any additional limitations beyond what is outlined
+# in the respective licenses of these third-party components.
+# Users must comply with all terms and conditions of original licenses of these third-party
+# components and must ensure that the usage of the third party components adheres to
+# all relevant laws and regulations.
+
+# For avoidance of doubts, Hunyuan 3D means the large language models and
+# their software and algorithms, including trained model weights, parameters (including
+# optimizer states), machine-learning model code, inference-enabling code, training-enabling code,
+# fine-tuning enabling code and other elements of the foregoing made publicly available
+# by Tencent in accordance with TENCENT HUNYUAN COMMUNITY LICENSE AGREEMENT.
+
+import os
+import time
+import random
+import numpy as np
+import torch
+from torch.cuda.amp import autocast, GradScaler
+from functools import wraps
+
+def seed_everything(seed):
+    '''
+        seed everything: the Python, NumPy and PyTorch random number generators
+    '''
+    random.seed(seed)
+    np.random.seed(seed)
+    torch.manual_seed(seed)
+    os.environ["PL_GLOBAL_SEED"] = str(seed)
+
+def timing_decorator(category: str):
+    '''
+        timing_decorator: record time
+    '''
+    def decorator(func):
+        func.call_count = 0
+        @wraps(func)
+        def wrapper(*args, **kwargs):
+            start_time = time.time()
+            result = func(*args, **kwargs)
+            end_time = time.time()
+            elapsed_time = end_time - start_time
+            func.call_count += 1
+            print(f"[HunYuan3D]-[{category}], cost time: {elapsed_time:.4f}s") # huiwen
+            return result
+        return wrapper
+    return decorator
+
+def auto_amp_inference(func):
+    '''
+        run the wrapped function inside torch.cuda.amp.autocast()
+        (automatic mixed precision for inference)
+    '''
+    @wraps(func)
+    def wrapper(*args, **kwargs):
+        with autocast():
+            output = func(*args, **kwargs)
+        return output
+    return wrapper
+
+def get_parameter_number(model):
+    total_num = sum(p.numel() for p in model.parameters())
+    trainable_num = sum(p.numel() for p in model.parameters() if p.requires_grad)
+    return {'Total': total_num, 'Trainable': trainable_num}
+
+def set_parameter_grad_false(model):
+    for p in model.parameters():
+        p.requires_grad = False
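For readers unfamiliar with decorator factories such as `timing_decorator`, the following standalone sketch shows the same pattern using only the standard library. It is a variant, not the repository code: it uses `time.perf_counter`, and it stores `call_count` and the hypothetical `last_elapsed` attribute on the wrapper, so they are readable from the decorated name.

```python
import time
from functools import wraps


def timing_decorator(category):
    """Return a decorator that times each call and counts invocations."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            result = func(*args, **kwargs)
            wrapper.call_count += 1
            wrapper.last_elapsed = time.perf_counter() - start
            print(f"[{category}] call {wrapper.call_count}: {wrapper.last_elapsed:.4f}s")
            return result
        # Attributes live on the wrapper, which is what callers actually hold.
        wrapper.call_count = 0
        wrapper.last_elapsed = None
        return wrapper
    return decorator


@timing_decorator("square")
def square(x):
    return x * x


values = [square(n) for n in range(3)]
print(values, square.call_count)
```

In the diff's version the counter is incremented on the inner `func`. Because `functools.wraps` copies the function's `__dict__` only once at decoration time, that running count is reachable through `decorated.__wrapped__.call_count` rather than `decorated.call_count`.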

infer/views_to_mesh.py

ADDED

@@ -0,0 +1,94 @@

+# Open Source Model Licensed under the Apache License Version 2.0 and Other Licenses of the Third-Party Components therein:
+# The below Model in this distribution may have been modified by THL A29 Limited ("Tencent Modifications"). All Tencent Modifications are Copyright (C) 2024 THL A29 Limited.
+
+# Copyright (C) 2024 THL A29 Limited, a Tencent company. All rights reserved.
+# The below software and/or models in this distribution may have been
+# modified by THL A29 Limited ("Tencent Modifications").
+# All Tencent Modifications are Copyright (C) THL A29 Limited.
+
+# Hunyuan 3D is licensed under the TENCENT HUNYUAN NON-COMMERCIAL LICENSE AGREEMENT
+# except for the third-party components listed below.
+# Hunyuan 3D does not impose any additional limitations beyond what is outlined
+# in the respective licenses of these third-party components.
+# Users must comply with all terms and conditions of original licenses of these third-party
+# components and must ensure that the usage of the third party components adheres to
+# all relevant laws and regulations.
+
+# For avoidance of doubts, Hunyuan 3D means the large language models and
+# their software and algorithms, including trained model weights, parameters (including
+# optimizer states), machine-learning model code, inference-enabling code, training-enabling code,
+# fine-tuning enabling code and other elements of the foregoing made publicly available
+# by Tencent in accordance with TENCENT HUNYUAN COMMUNITY LICENSE AGREEMENT.
+
+import os
+import time
+import torch
+import random
+import numpy as np
+from PIL import Image
+from einops import rearrange
+from PIL import Image, ImageSequence
+
+from .utils import seed_everything, timing_decorator, auto_amp_inference
+from .utils import get_parameter_number, set_parameter_grad_false
+from svrm.predictor import MV23DPredictor
+
+
+class Views2Mesh():
+    def __init__(self, mv23d_cfg_path, mv23d_ckt_path, device="cuda:0", use_lite=False):
+        '''

| 40 |

+