all my project

- commands.txt +5 -0

- requirements.txt +6 -0

- screenshots/.keep +0 -0

- screenshots/img001.jpeg +0 -0

- src/.DS_Store +0 -0

- src/app.py +142 -0

- src/assets/.DS_Store +0 -0

- src/assets/dataset/.keep +0 -0

- src/assets/ml/.keep +0 -0

- src/assets/ml/eda-report.html +0 -0

- src/assets/ml/requirements.txt +85 -0

- src/assets/tmp/.keep +0 -0

- src/problem_solving.py +139 -0

- src/tmp.txt +15 -0

- src/utils.py +0 -0

commands.txt

ADDED

@@ -0,0 +1,5 @@
+python3 -m venv env_name
+# activate
+
+# install dependencies
+pip install -r requirements.txt
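The `# activate` step in commands.txt has no command under it. As a minimal sketch, assuming the standard layout produced by Python's `venv` module, the helper below creates the environment and reports the activation command for the current platform. The function names `create_env` and `activation_command` are hypothetical and not part of this commit.

```python
import sys
import venv
from pathlib import PurePosixPath, PureWindowsPath


def create_env(env_dir: str) -> None:
    """Create a virtual environment with pip, like `python3 -m venv env_dir`."""
    venv.create(env_dir, with_pip=True)


def activation_command(env_dir: str, platform: str = sys.platform) -> str:
    """Return the shell command that activates the environment (hypothetical helper)."""
    if platform.startswith("win"):
        # Windows layout: the activate script lives under Scripts\
        return str(PureWindowsPath(env_dir) / "Scripts" / "activate")
    # POSIX layout: the activate script lives under bin/ and must be sourced
    return f"source {PurePosixPath(env_dir) / 'bin' / 'activate'}"


if __name__ == "__main__":
    print(activation_command("env_name"))
```

Once the environment is active, `pip install -r requirements.txt` installs into it rather than into the system interpreter.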

requirements.txt

ADDED

@@ -0,0 +1,6 @@
+gradio==3.23.0
+pandas==1.5.3
+pytest
+# ML DEV
+scikit-learn==1.2.2
+pandas-profiling==3.6.6
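Every entry in this requirements file is pinned with `==` except `pytest`. The sketch below shows one way to spot unpinned entries. It assumes only the simple `name==version` form used here, and `parse_requirements` is a hypothetical helper, not a pip API.

```python
from typing import Dict, Optional


def parse_requirements(text: str) -> Dict[str, Optional[str]]:
    """Map each package name to its pinned version, or None if unpinned."""
    pins: Dict[str, Optional[str]] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        name, sep, version = line.partition("==")
        pins[name.strip()] = version.strip() if sep else None
    return pins


REQUIREMENTS = """\
gradio==3.23.0
pandas==1.5.3
pytest
# ML DEV
scikit-learn==1.2.2
pandas-profiling==3.6.6
"""

unpinned = [name for name, version in parse_requirements(REQUIREMENTS).items()
            if version is None]
print(unpinned)  # ['pytest']
```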

screenshots/.keep

ADDED

File without changes

screenshots/img001.jpeg

ADDED


src/.DS_Store

ADDED

Binary file (6.15 kB).

src/app.py

ADDED

|

@@ -0,0 +1,142 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import gradio as gr

|

| 2 |

+

import pandas as pd

|

| 3 |

+

import os

|

| 4 |

+

import pickle

|

| 5 |

+

from datetime import datetime

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

# PAGE CONFIG

|

| 9 |

+

# page_icon = "💐"

|

| 10 |

+

|

| 11 |

+

# Setup variables and constants

|

| 12 |

+

# datetime.now().strftime('%d-%m-%Y _ %Hh %Mm %Ss')

|

| 13 |

+

DIRPATH = os.path.dirname(os.path.realpath(__file__))

|

| 14 |

+

tmp_df_fp = os.path.join(DIRPATH, "assets", "tmp",

|

| 15 |

+

f"history_{datetime.now().strftime('%d-%m-%Y')}.csv")

|

| 16 |

+

ml_core_fp = os.path.join(DIRPATH, "assets", "ml", "ml_components.pkl")

|

| 17 |

+

init_df = pd.DataFrame(

|

| 18 |

+

{"petal length (cm)": [], "petal width (cm)": [],

|

| 19 |

+

"sepal length (cm)": [], "sepal width (cm)": [], }

|

| 20 |

+

)

|

| 21 |

+

|

| 22 |

+

# FUNCTIONS

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

def load_ml_components(fp):

|

| 26 |

+

"Load the ml component to re-use in app"

|

| 27 |

+

with open(fp, "rb") as f:

|

| 28 |

+

object = pickle.load(f)

|

| 29 |

+

return object

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

def setup(fp):

|

| 33 |

+

"Setup the required elements like files, models, global variables, etc"

|

| 34 |

+

|

| 35 |

+

# history frame

|

| 36 |

+

if not os.path.exists(fp):

|

| 37 |

+

df_history = init_df.copy()

|

| 38 |

+

else:

|

| 39 |

+

df_history = pd.read_csv(fp)

|

| 40 |

+

|

| 41 |

+

df_history.to_csv(fp, index=False)

|

| 42 |

+

|

| 43 |

+

return df_history

|

| 44 |

+

|

| 45 |

+

|

| 46 |

+

def make_prediction(df_input):

|

| 47 |

+

"""Function that take a dataframe as input and make prediction

|

| 48 |

+

"""

|

| 49 |

+

global df_history

|

| 50 |

+

|

| 51 |

+

print(f"\n[Info] Input information as dataframe: \n{df_input.to_string()}")

|

| 52 |

+

df_input.drop_duplicates(inplace=True, ignore_index=True)

|

| 53 |

+

print(f"\n[Info] Input with deplicated rows: \n{df_input.to_string()}")

|

| 54 |

+

|

| 55 |

+

prediction_output = end2end_pipeline.predict_proba(df_input)

|

| 56 |

+

print(

|

| 57 |

+

f"[Info] Prediction output (of type '{type(prediction_output)}') from passed input: {prediction_output} of shape {prediction_output.shape}")

|

| 58 |

+

|

| 59 |

+

predicted_idx = prediction_output.argmax(axis=-1)

|

| 60 |

+

print(f"[Info] Predicted indexes: {predicted_idx}")

|

| 61 |

+

df_input['pred_label'] = predicted_idx

|

| 62 |

+

print(

|

| 63 |

+

f"\n[Info] pred_label: \n{df_input.to_string()}")

|

| 64 |

+

predicted_labels = df_input['pred_label'].replace(idx_to_labels)

|

| 65 |

+

df_input['pred_label'] = predicted_labels

|

| 66 |

+

print(

|

| 67 |

+

f"\n[Info] convert pred_label: \n{df_input.to_string()}")

|

| 68 |

+

|

| 69 |

+

predicted_score = prediction_output.max(axis=-1)

|

| 70 |

+

print(f"\n[Info] Prediction score: \n{predicted_score}")

|

| 71 |

+

df_input['confidence_score'] = predicted_score

|

| 72 |

+

print(

|

| 73 |

+

f"\n[Info] output information as dataframe: \n{df_input.to_string()}")

|

| 74 |

+

df_history = pd.concat([df_history, df_input], ignore_index=True).drop_duplicates(

|

| 75 |

+

ignore_index=True, keep='last')

|

| 76 |

+

return df_history

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

def download():

|

| 80 |

+

return gr.File.update(label="History File",

|

| 81 |

+

visible=True,

|

| 82 |

+

value=tmp_df_fp)

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

def hide_download():

|

| 86 |

+

return gr.File.update(label="History File",

|

| 87 |

+

visible=False)

|

| 88 |

+

|

| 89 |

+

|

| 90 |

+

# Setup execution

|

| 91 |

+

ml_components_dict = load_ml_components(fp=ml_core_fp)

|

| 92 |

+

labels = ml_components_dict['labels']

|

| 93 |

+

end2end_pipeline = ml_components_dict['pipeline']

|

| 94 |

+

print(f"\n[Info] ML components loaded: {list(ml_components_dict.keys())}")

|

| 95 |

+

print(f"\n[Info] Predictable labels: {labels}")

|

| 96 |

+

idx_to_labels = {i: l for (i, l) in enumerate(labels)}

|

| 97 |

+

print(f"\n[Info] Indexes to labels: {idx_to_labels}")

|

| 98 |

+

|

| 99 |

+

df_history = setup(tmp_df_fp)

|

| 100 |

+

|

| 101 |

+

|

| 102 |

+

# APP Interface

|

| 103 |

+

with gr.Blocks() as demo:

|

| 104 |

+

gr.Markdown('''<img class="center" src="https://www.thespruce.com/thmb/GXt55Sf9RIzADYAG5zue1hXtlqc=/1500x0/filters:no_upscale():max_bytes(150000):strip_icc()/iris-flowers-plant-profile-5120188-01-04a464ab8523426fab852b55d3bb04f0.jpg" width="50%" height="50%">

|

| 105 |

+

<style>

|

| 106 |

+

.center {

|

| 107 |

+

display: block;

|

| 108 |

+

margin-left: auto;

|

| 109 |

+

margin-right: auto;

|

| 110 |

+

width: 50%;

|

| 111 |

+

}

|

| 112 |

+

</style>''')

|

| 113 |

+

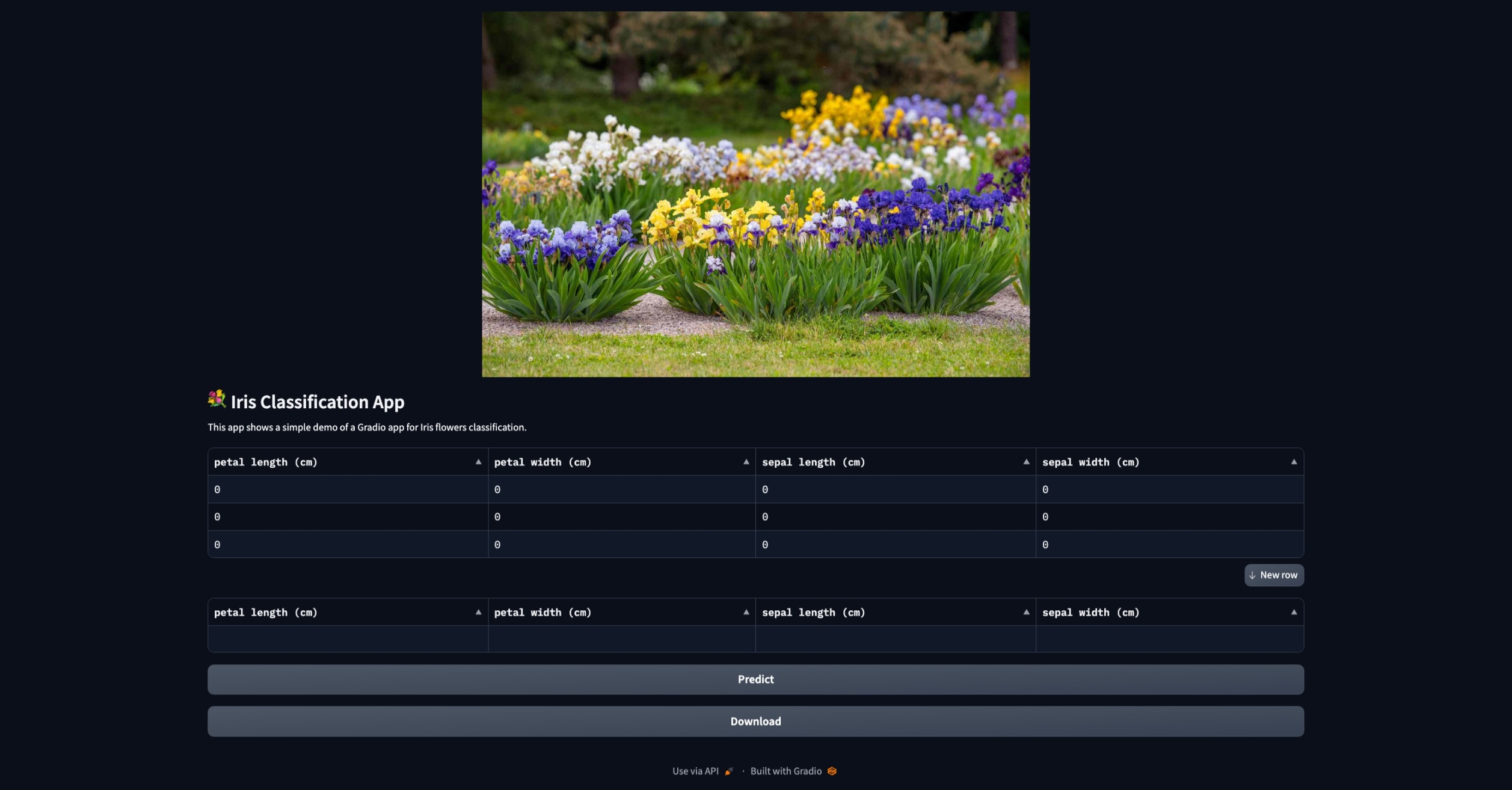

gr.Markdown('''# 💐 Iris Classification App

|

| 114 |

+

This app shows a simple demo of a Gradio app for Iris flowers classification.

|

| 115 |

+

''')

|

| 116 |

+

|

| 117 |

+

df = gr.Dataframe(

|

| 118 |

+

headers=["petal length (cm)",

|

| 119 |

+

"petal width (cm)",

|

| 120 |

+

"sepal length (cm)",

|

| 121 |

+

"sepal width (cm)"],

|

| 122 |

+

datatype=["number", "number", "number", "number", ],

|

| 123 |

+

row_count=3,

|

| 124 |

+

col_count=(4, "fixed"),

|

| 125 |

+

)

|

| 126 |

+

output = gr.Dataframe(df_history)

|

| 127 |

+

|

| 128 |

+

btn_predict = gr.Button("Predict")

|

| 129 |

+

btn_predict.click(fn=make_prediction, inputs=df, outputs=output)

|

| 130 |

+

|

| 131 |

+

# output.change(fn=)

|

| 132 |

+

|

| 133 |

+

file_obj = gr.File(label="History File",

|

| 134 |

+

visible=False

|

| 135 |

+

)

|

| 136 |

+

|

| 137 |

+

btn_download = gr.Button("Download")

|

| 138 |

+

btn_download.click(fn=download, inputs=[], outputs=file_obj)

|

| 139 |

+

output.change(fn=hide_download, inputs=[], outputs=file_obj)

|

| 140 |

+

|

| 141 |

+

if __name__ == "__main__":

|

| 142 |

+

demo.launch(debug=True)

|
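app.py keeps one history CSV per day under `assets/tmp` and creates it with only a header row when it does not exist yet. The sketch below mirrors that naming and create-if-missing logic using only the standard library, so it runs without pandas or Gradio. `history_path` and `load_or_init_history` are hypothetical names, and the standard `csv` module stands in for the app's pandas calls.

```python
import csv
from datetime import date
from pathlib import Path

FEATURES = ["petal length (cm)", "petal width (cm)",
            "sepal length (cm)", "sepal width (cm)"]


def history_path(base_dir, day: date) -> Path:
    """Build the dated history file path, e.g. history_31-03-2023.csv."""
    return Path(base_dir) / "assets" / "tmp" / f"history_{day.strftime('%d-%m-%Y')}.csv"


def load_or_init_history(fp, columns=FEATURES):
    """Return the stored rows, creating a header-only file if none exists."""
    fp = Path(fp)
    if not fp.exists():
        fp.parent.mkdir(parents=True, exist_ok=True)
        with fp.open("w", newline="") as f:
            csv.writer(f).writerow(columns)
        return []
    with fp.open(newline="") as f:
        return list(csv.DictReader(f))
```

In the app as committed, the CSV is written only in `setup`. `make_prediction` updates the in-memory `df_history` without writing it back, so the file served by the Download button may not include the latest predictions.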

src/assets/.DS_Store

ADDED

Binary file (6.15 kB).

src/assets/dataset/.keep

ADDED

File without changes

src/assets/ml/.keep

ADDED

File without changes

src/assets/ml/eda-report.html

ADDED

The diff for this file is too large to render.

src/assets/ml/requirements.txt

ADDED

@@ -0,0 +1,85 @@
+aiofiles==23.1.0
+aiohttp==3.8.4
+aiosignal==1.3.1
+altair==4.2.2
+anyio==3.6.2
+async-timeout==4.0.2
+attrs==22.2.0
+certifi==2022.12.7
+charset-normalizer==3.1.0
+click==8.1.3
+contourpy==1.0.7
+cycler==0.11.0
+entrypoints==0.4
+exceptiongroup==1.1.1
+fastapi==0.95.0
+ffmpy==0.3.0
+filelock==3.10.7
+fonttools==4.39.3
+frozenlist==1.3.3
+fsspec==2023.3.0
+gradio==3.23.0
+h11==0.14.0
+htmlmin==0.1.12
+httpcore==0.16.3
+httpx==0.23.3
+huggingface-hub==0.13.3
+idna==3.4
+ImageHash==4.3.1
+iniconfig==2.0.0
+Jinja2==3.1.2
+joblib==1.2.0
+jsonschema==4.17.3
+kiwisolver==1.4.4
+linkify-it-py==2.0.0
+markdown-it-py==2.2.0
+MarkupSafe==2.1.2
+matplotlib==3.6.3
+mdit-py-plugins==0.3.3
+mdurl==0.1.2
+multidict==6.0.4
+multimethod==1.9.1
+networkx==3.0
+numpy==1.23.5
+orjson==3.8.9
+packaging==23.0
+pandas==1.5.3
+pandas-profiling==3.6.6
+patsy==0.5.3
+phik==0.12.3
+Pillow==9.4.0
+pluggy==1.0.0
+pydantic==1.10.7
+pydub==0.25.1
+pyparsing==3.0.9
+pyrsistent==0.19.3
+pytest==7.2.2
+python-dateutil==2.8.2
+python-multipart==0.0.6
+pytz==2023.2
+PyWavelets==1.4.1
+PyYAML==6.0
+requests==2.28.2
+rfc3986==1.5.0
+scikit-learn==1.2.2
+scipy==1.9.3
+seaborn==0.12.2
+semantic-version==2.10.0
+six==1.16.0
+sniffio==1.3.0
+starlette==0.26.1
+statsmodels==0.13.5
+tangled-up-in-unicode==0.2.0
+threadpoolctl==3.1.0
+tomli==2.0.1
+toolz==0.12.0
+tqdm==4.64.1
+typeguard==2.13.3
+typing_extensions==4.5.0
+uc-micro-py==1.0.1
+urllib3==1.26.15
+uvicorn==0.21.1
+visions==0.7.5
+websockets==10.4
+yarl==1.8.2
+ydata-profiling==4.1.2

src/assets/tmp/.keep

ADDED

File without changes

src/problem_solving.py

ADDED

@@ -0,0 +1,139 @@
+# Imports
+
+import pickle
+import os
+from sklearn.metrics import classification_report, ConfusionMatrixDisplay
+from sklearn.ensemble import RandomForestClassifier
+from sklearn.impute import SimpleImputer, KNNImputer
+from sklearn.pipeline import Pipeline
+from sklearn.compose import ColumnTransformer
+from sklearn.preprocessing import StandardScaler, RobustScaler, OneHotEncoder
+from sklearn.model_selection import train_test_split
+import pandas as pd
+from ydata_profiling import ProfileReport
+from sklearn import datasets
+from subprocess import call
+
+# PATHS
+DIRPATH = os.path.dirname(os.path.realpath(__file__))
+ml_fp = os.path.join(DIRPATH, "assets", "ml", "ml_components.pkl")
+req_fp = os.path.join(DIRPATH, "assets", "ml", "requirements.txt")
+eda_report_fp = os.path.join(DIRPATH, "assets", "ml", "eda-report.html")
+
+# import some data to play with
+iris = datasets.load_iris(return_X_y=False, as_frame=True)
+
+df = iris['frame']
+target_col = 'target'
+# pandas profiling
+profile = ProfileReport(df, title="Dataset", html={
+    'style': {'full_width': True}})
+profile.to_file(eda_report_fp)
+
+# Dataset Splitting
+# Please specify
+to_ignore_cols = [
+    "ID",  # ID
+    "Id", "id",
+    target_col
+]
+
+
+num_cols = list(set(df.select_dtypes('number')) - set(to_ignore_cols))
+cat_cols = list(set(df.select_dtypes(exclude='number')) - set(to_ignore_cols))
+print(f"\n[Info] The '{len(num_cols)}' numeric columns are : {num_cols}\nThe '{len(cat_cols)}' categorical columns are : {cat_cols}")
+
+X, y = df.iloc[:, :-1], df.iloc[:, -1].values
+
+
+X_train, X_eval, y_train, y_eval = train_test_split(
+    X, y, test_size=0.2, random_state=0, stratify=y)
+
+print(
+    f"\n[Info] Dataset split : (X_train, y_train) = {(X_train.shape , y_train.shape)}, (X_eval, y_eval) = {(X_eval.shape , y_eval.shape)}. \n")
+
+y_train
+
+# Modeling
+
+# Imputers
+num_imputer = SimpleImputer(strategy="mean").set_output(transform="pandas")
+cat_imputer = SimpleImputer(
+    strategy="most_frequent").set_output(transform="pandas")
+
+# Scaler & Encoder
+if len(cat_cols) > 0:
+    df_imputed_stacked_cat = cat_imputer.fit_transform(
+        df
+        .append(df)
+        .append(df)
+        [cat_cols])
+    cat_ = OneHotEncoder(sparse=False, drop="first").fit(
+        df_imputed_stacked_cat).categories_
+else:
+    cat_ = 'auto'
+
+encoder = OneHotEncoder(categories=cat_, sparse=False, drop="first")
+scaler = StandardScaler().set_output(transform="pandas")
+
+
+# feature pipelines
+num_pipe = Pipeline(steps=[("num_imputer", num_imputer), ("scaler", scaler)])
+cat_pipe = Pipeline(steps=[("cat_imputer", cat_imputer), ("encoder", encoder)])
+
+# end2end features preprocessor
+
+transformers = []
+
+transformers.append(("numerical", num_pipe, num_cols)) if len(
+    num_cols) > 0 else None
+transformers.append(("categorical", cat_pipe, cat_cols,)) if len(
+    cat_cols) > 0 else None
+# ("date", date_pipe, date_cols,),
+
+preprocessor = ColumnTransformer(
+    transformers=transformers).set_output(transform="pandas")
+
+print(
+    f"\n[Info] Features Transformer : {transformers}. \n")
+
+
+# end2end pipeline
+end2end_pipeline = Pipeline([
+    ('preprocessor', preprocessor),
+    ('model', RandomForestClassifier(random_state=10))
+]).set_output(transform="pandas")
+
+# Training
+print(
+    f"\n[Info] Training.\n[Info] X_train : columns( {X_train.columns.tolist()}), shape: {X_train.shape} .\n")
+
+end2end_pipeline.fit(X_train, y_train)
+
+# Evaluation
+print(
+    f"\n[Info] Evaluation.\n")
+y_eval_pred = end2end_pipeline.predict(X_eval)
+
+print(classification_report(y_eval, y_eval_pred,
+                            target_names=iris['target_names']))
+
+# ConfusionMatrixDisplay.from_predictions(
+#     y_eval, y_eval_pred, display_labels=iris['target_names'])
+
+# Exportation
+print(
+    f"\n[Info] Exportation.\n")
+to_export = {
+    "labels": iris['target_names'],
+    "pipeline": end2end_pipeline,
+}
+
+
+# save components to file
+with open(ml_fp, 'wb') as file:
+    pickle.dump(to_export, file)
+
+# Requirements
+# ! pip freeze > requirements.txt
+call(f"pip freeze > {req_fp}", shell=True)
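The training script and the app share one contract: a pickled dictionary with a `"labels"` entry and a `"pipeline"` entry. The sketch below exercises that round trip with a plain dictionary standing in for the fitted scikit-learn pipeline, so it runs without scikit-learn installed. `export_components` and `load_components` are hypothetical names chosen for illustration.

```python
import pickle
import tempfile
from pathlib import Path


def export_components(fp, labels, pipeline) -> None:
    """Write the labels and the pipeline into a single pickle file."""
    with open(fp, "wb") as f:
        pickle.dump({"labels": list(labels), "pipeline": pipeline}, f)


def load_components(fp) -> dict:
    """Read the dictionary back; only unpickle files from a trusted source."""
    with open(fp, "rb") as f:
        return pickle.load(f)


with tempfile.TemporaryDirectory() as tmp:
    fp = Path(tmp) / "ml_components.pkl"
    # The dict below is a stand-in for the fitted pipeline object.
    export_components(fp, ["setosa", "versicolor", "virginica"],
                      pipeline={"stand-in": True})
    components = load_components(fp)

# Same index-to-label mapping that the app builds with enumerate().
idx_to_labels = dict(enumerate(components["labels"]))
print(idx_to_labels)
```

With a real pipeline, unpickling generally expects the same scikit-learn version that produced the file, which is presumably why the script also freezes the environment next to the pickle.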

src/tmp.txt

ADDED

@@ -0,0 +1,15 @@
+# Setup execution
+ml_components = load_ml_components(fp=ml_core_fp)
+idx_to_labels = {i: l for (i, l) in enumerate(ml_components["labels"])}
+end_2_end_pipeline = ml_components["pipeline"]
+
+
+# Prediction
+pred_output = end_2_end_pipeline.predict_proba(df_input)
+
+class_idx = pred_output.argmax(axis=1)
+scores = pred_output.max(axis=1)
+df_input['predicted_class'] = class_idx
+df_input['predicted_class'] = df_input['predicted_class'].replace(
+    idx_to_labels)
+df_input['confidence_score'] = scores
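The confidence score for each row is the probability at that row's own top class, so each input row yields exactly one label and one score. A minimal pure-Python sketch of that pairing follows. `top_class_and_score` is a hypothetical helper, and the probabilities are made-up illustration values, not model output.

```python
from typing import List, Sequence, Tuple


def top_class_and_score(proba_rows: Sequence[Sequence[float]],
                        labels: Sequence[str]) -> List[Tuple[str, float]]:
    """Return (label, probability) of the most likely class for each row."""
    results = []
    for row in proba_rows:
        # Row-wise argmax: index of the largest probability in this row.
        idx = max(range(len(row)), key=row.__getitem__)
        results.append((labels[idx], row[idx]))
    return results


labels = ["setosa", "versicolor", "virginica"]
proba = [[0.1, 0.7, 0.2],   # illustrative values only
         [0.8, 0.1, 0.1]]
print(top_class_and_score(proba, labels))
```

In NumPy terms this corresponds to `proba.max(axis=1)`, or equivalently `proba[np.arange(len(idx)), idx]`. Indexing with `proba[:, idx]` would instead select whole columns and return an n-by-n array.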

src/utils.py

ADDED

File without changes