[InCoder](https://huggingface.co./facebook/incoder-6B) is a decoder-only Transformer trained with a causal masking objective, which teaches a left-to-right language model to fill in masked token segments. It has a context length of 2048 tokens.

| Model | # parameters |
| - | - |
| [facebook/incoder-1B](https://huggingface.co./facebook/incoder-1B) | 1.3B |
| [facebook/incoder-6B](https://huggingface.co./facebook/incoder-6B) | 6.7B |

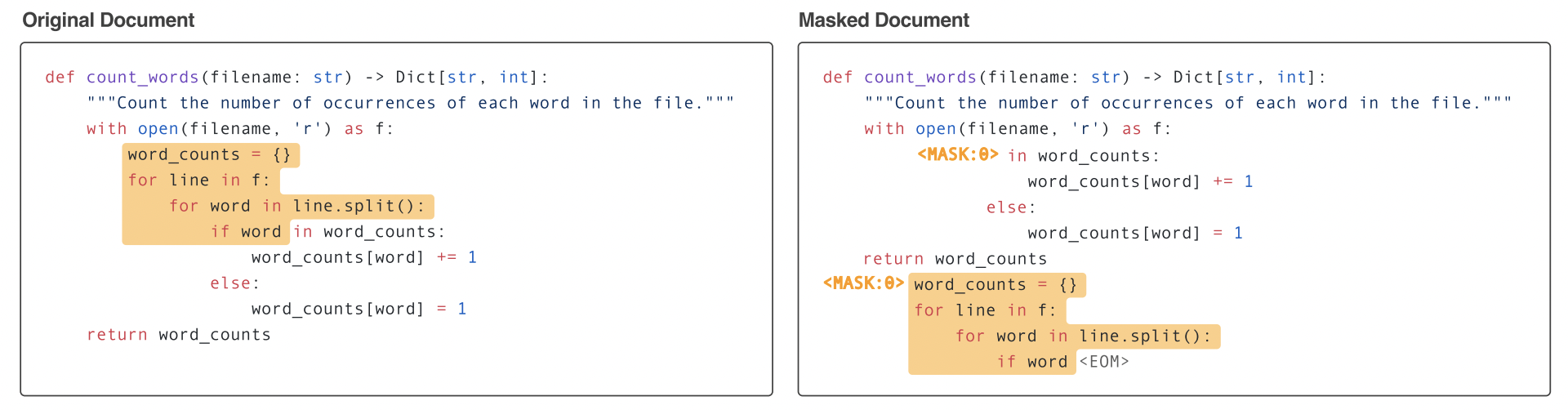

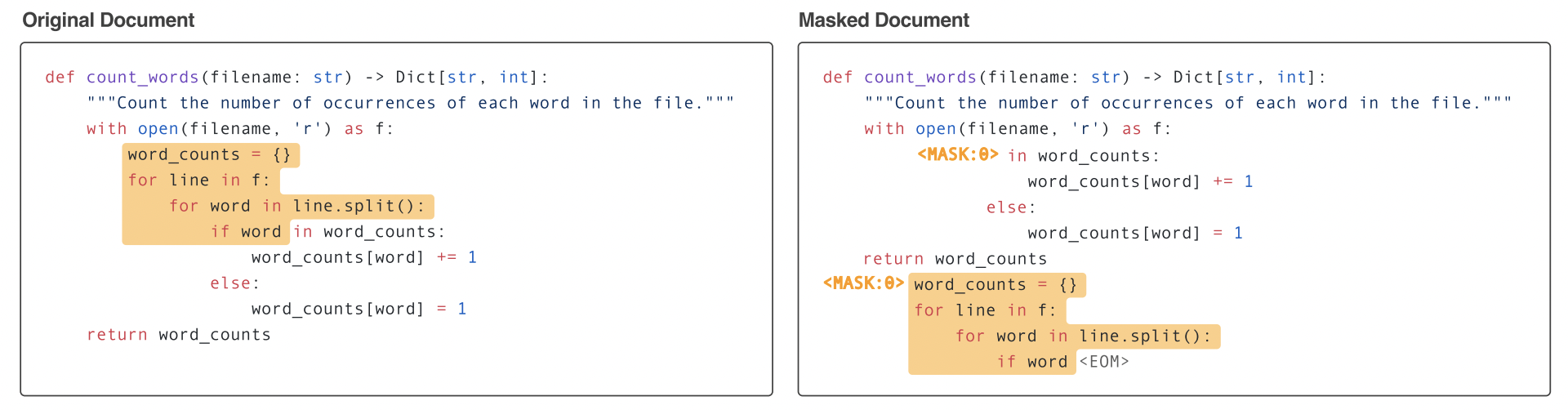

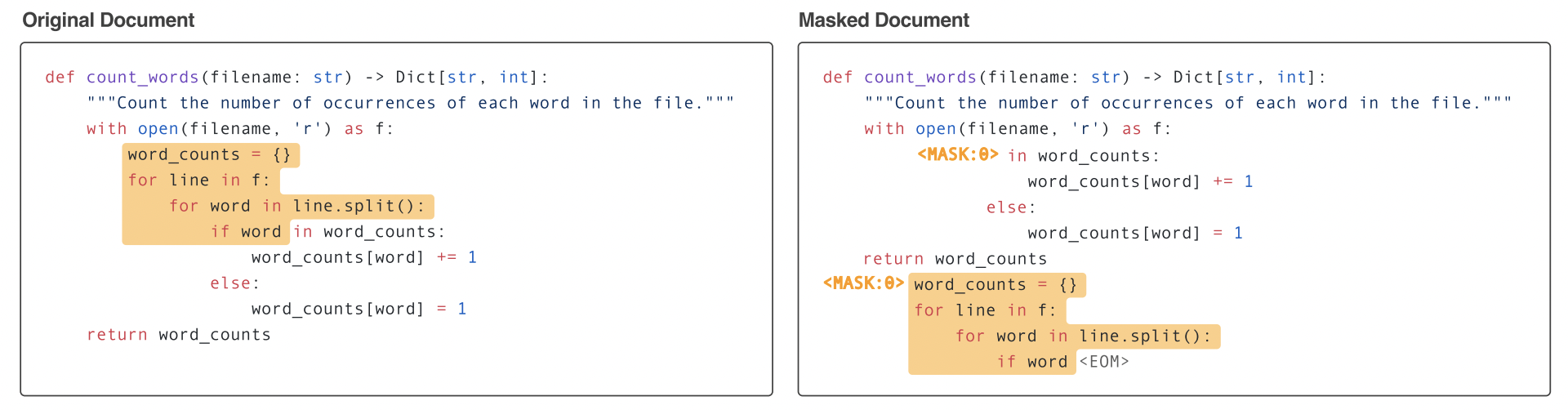

The [causal masking objective](https://arxiv.org/abs/2201.07520) is a hybrid of causal and masked language modeling: "it combines the benefit of per-token generation with optional bi-directionality specifically tailored to prompting".

During InCoder's training, spans of code were randomly masked and moved to the end of each file, which gives the model bidirectional context even though it still generates left to right. The figure below, from the InCoder [paper](https://arxiv.org/pdf/2204.05999.pdf), illustrates the training process.
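To make the transformation concrete, here is a minimal sketch of causal masking applied to a single span of a plain string. The function name `causal_mask` is hypothetical, and the sentinel spellings `<|mask:0|>` and `<|endofmask|>` are assumptions about how the released tokenizer writes these tokens. The real training pipeline works on token sequences and can mask several spans per file.

```python
# Assumed sentinel spellings; check them against the tokenizer's vocabulary.
MASK = "<|mask:0|>"
EOM = "<|endofmask|>"


def causal_mask(document: str, start: int, end: int) -> str:
    """Move document[start:end] to the end, leaving a sentinel in its place."""
    if not 0 <= start < end <= len(document):
        raise ValueError("span must be a non-empty range inside the document")
    left, span, right = document[:start], document[start:end], document[end:]
    # Layout: left context, sentinel, right context, sentinel, masked span, end marker.
    return left + MASK + right + MASK + span + EOM


source = "def add(a, b):\n    return a + b\n"
start = source.index("a + b")
example = causal_mask(source, start, start + len("a + b"))
print(example)
```

Because the masked span now sits after both its left and right context, an ordinary left-to-right model sees both sides before it has to predict the span.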

So, in addition to program synthesis (via left-to-right generation), InCoder can also perform editing (via infilling). The model shows promising results on some zero-shot code infilling tasks, such as type prediction, variable renaming, and comment generation.
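As a rough illustration of infilling at inference time, the sketch below builds a prompt for one missing region and splices a completion back into the code. The helpers `build_infill_prompt` and `splice_infill` are hypothetical, the sentinel spellings are assumptions, and the `generated` string is a hand-written stand-in for model output rather than a real sample. The reference implementation may format the prompt differently in its details.

```python
# Assumed sentinel spellings; check them against the tokenizer's vocabulary.
MASK = "<|mask:0|>"
EOM = "<|endofmask|>"


def build_infill_prompt(left: str, right: str) -> str:
    """Mark the gap with a sentinel, then repeat it to ask for the missing span."""
    return left + MASK + right + MASK


def splice_infill(left: str, right: str, generated: str) -> str:
    """Keep the generated text up to the end-of-mask marker and insert it in the gap."""
    infill = generated.split(EOM, 1)[0]
    return left + infill + right


# Type prediction posed as infilling: the return annotation is the gap.
left = "def count_words(filename: str) -> "
right = ":\n    pass\n"
prompt = build_infill_prompt(left, right)

# Stand-in for what a model might generate after the prompt (not a real sample).
generated = "Dict[str, int]" + EOM + " trailing tokens that get discarded"
completed = splice_infill(left, right, generated)
print(completed)
```

In real use, `prompt` would be tokenized and passed to the model's generation method, and decoding would stop once the end-of-mask token appears.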

You can load the model and tokenizer directly from 🤗 [`transformers`](https://huggingface.co./docs/transformers/index):

```python
from transformers import AutoTokenizer, AutoModelForCausalLM

# Load the 6.7B-parameter checkpoint and its tokenizer from the Hub
tokenizer = AutoTokenizer.from_pretrained("facebook/incoder-6B")
model = AutoModelForCausalLM.from_pretrained("facebook/incoder-6B")

# Tokenize a prompt and run a single forward pass over it
inputs = tokenizer("def hello_world():", return_tensors="pt")
outputs = model(**inputs)
```