Space status: Runtime error

Commit 55f0564 by chanelcolgate (parent: a458541)

init

- .gitignore +4 -0
- app.py +291 -0
- images/62167111_jpg.rf.a28be3ccf9faa13da52aa007a7f7ed7a.jpg +0 -0
- images/A1A37A49_jpg.rf.43566e5df62b02365ced4a5bd5e21f47.jpg +0 -0
- images/A2A2E11D_jpg.rf.b366674522f576b023f5fbe116993eb7.jpg +0 -0
- images/A3EEA8A1_jpg.rf.f66d063ebbf0fe0ccc969198c6eaab63.jpg +0 -0
- images/A48928D0_jpg.rf.7926dbc20dfd480327a6ff81cfc69961.jpg +0 -0
- images/A49FFA35_jpg.rf.44ef65e540674b2bfc40361ec77569ea.jpg +0 -0
- images/A6EE237B_jpg.rf.92877f1bc68547a947773e58d62dd59d.jpg +0 -0
- images/A6F01C78_jpg.rf.3f74c020ece68222d8221abcda7b6461.jpg +0 -0
- images/A8658634_jpg.rf.52fc338e7cb1c1ba92322299ae32ce2b.jpg +0 -0
- images/ABB2195A_jpg.rf.4f96f89ee3348fb7ee8cdf77e026998a.jpg +0 -0
- requirements.txt +6 -0

.gitignore

ADDED

@@ -0,0 +1,4 @@

```
.git/
flagged/
gradio_cached_examples/
yolov8n.pt
```

app.py

ADDED

@@ -0,0 +1,291 @@

```python
import os
import glob
import uuid

import gradio as gr
from PIL import Image
import cv2
import numpy as np
import supervision as sv
from ultralyticsplus import YOLO, download_from_hub

hf_model_ids = ["chanelcolgate/rods-count-v1", "chanelcolgate/cab-v1"]

# Example rows: [image, model id, image size, confidence, IoU].
# The model id must be one of hf_model_ids.
image_paths = [
    [image_path, "chanelcolgate/rods-count-v1", 640, 0.6, 0.45]
    for image_path in glob.glob("./images/*.jpg")
]

video_paths = [
    [video_path, "chanelcolgate/cab-v1"]
    for video_path in glob.glob("./videos/*.mp4")
]


def get_center_of_bbox(bbox):
    x1, y1, x2, y2 = bbox
    return int((x1 + x2) / 2), int((y1 + y2) / 2)


def get_bbox_width(bbox):
    return int(bbox[2] - bbox[0])


def draw_circle(pil_image, bbox, color, label):
    # Convert PIL image to a numpy array (OpenCV format)
    cv_image = np.array(pil_image)

    # Convert RGB to BGR (OpenCV format)
    cv_image = cv2.cvtColor(cv_image, cv2.COLOR_RGB2BGR)

    x_center, y_center = get_center_of_bbox(bbox)
    width = get_bbox_width(bbox)

    # Draw the circle on the image (radius = 30% of the box width)
    cv2.circle(
        cv_image,
        center=(x_center, y_center),
        radius=int(width * 0.5 * 0.6),
        color=color,
        thickness=1,
    )

    # Write the detection's number near the circle's center
    cv2.putText(
        cv_image,
        f"{label}",
        (x_center - 6, y_center + 6),
        cv2.FONT_HERSHEY_SIMPLEX,
        0.5,
        (255, 249, 208),
        2,
    )

    # Convert BGR back to RGB (PIL format)
    cv_image = cv2.cvtColor(cv_image, cv2.COLOR_BGR2RGB)

    # Convert the numpy array back to a PIL Image
    pil_image = Image.fromarray(cv_image)
    return pil_image


def count_predictions(
    image=None,
    hf_model_id="chanelcolgate/rods-count-v1",
    image_size=640,
    conf_threshold=0.25,
    iou_threshold=0.45,
):
    model_path = download_from_hub(hf_model_id)
    model = YOLO(model_path)
    results = model(
        image, imgsz=image_size, conf=conf_threshold, iou=iou_threshold
    )
    detections = sv.Detections.from_ultralytics(results[0])

    # draw_circle returns a new PIL image, so the input image is not modified
    for idx, detection in enumerate(detections):
        bbox = detection[0].tolist()  # xyxy coordinates of this detection
        image = draw_circle(image, bbox, (90, 178, 255), idx + 1)
    return image, len(detections)


def count_across_line(
    source_video_path=None,
    hf_model_id="chanelcolgate/cab-v1",
):
    TARGET_VIDEO_PATH = os.path.join("./", f"{uuid.uuid4()}.mp4")

    LINE_START = sv.Point(976, 212)
    LINE_END = sv.Point(976, 1276)

    model_path = download_from_hub(hf_model_id)
    model = YOLO(model_path)

    byte_tracker = sv.ByteTrack(
        track_thresh=0.25, track_buffer=30, match_thresh=0.8, frame_rate=30
    )

    line_zone = sv.LineZone(start=LINE_START, end=LINE_END)

    box_annotator = sv.BoxAnnotator(thickness=4, text_thickness=4, text_scale=2)

    trace_annotator = sv.TraceAnnotator(thickness=4, trace_length=50)
    line_zone_annotator = sv.LineZoneAnnotator(
        thickness=4, text_thickness=4, text_scale=2
    )

    def callback(frame: np.ndarray, index: int) -> np.ndarray:
        results = model.predict(frame)

        cls_names = results[0].names
        detection = sv.Detections.from_ultralytics(results[0])
        detection_supervision = byte_tracker.update_with_detections(detection)
        # Per-box labels; unused while labels are disabled in box_annotator below
        labels_convert = [
            f"#{tracker_id} {cls_names[class_id]} {confidence:0.2f}"
            for _, _, confidence, class_id, tracker_id, _ in detection_supervision
        ]

        annotated_frame = trace_annotator.annotate(
            scene=frame.copy(), detections=detection_supervision
        )
        annotated_frame = box_annotator.annotate(
            scene=annotated_frame,
            detections=detection_supervision,
            skip_label=True,
            # labels=labels_convert,
        )

        # Update the line counter with the tracked detections
        line_zone.trigger(detection_supervision)

        # Return the frame with boxes, traces, and the line counter drawn
        return line_zone_annotator.annotate(
            annotated_frame, line_counter=line_zone
        )

    # Process the whole video
    sv.process_video(
        source_path=source_video_path,
        target_path=TARGET_VIDEO_PATH,
        callback=callback,
    )

    return TARGET_VIDEO_PATH, line_zone.out_count


def count_in_zone(
    source_video_path=None,
    hf_model_id="chanelcolgate/cab-v1",
):
    TARGET_VIDEO_PATH = os.path.join("./", f"{uuid.uuid4()}.mp4")

    colors = sv.ColorPalette.default()
    polygons = [
        np.array([[88, 292], [748, 284], [736, 1160], [96, 1148]]),
        np.array([[844, 240], [844, 1132], [1580, 1124], [1584, 264]]),
    ]

    model_path = download_from_hub(hf_model_id)
    model = YOLO(model_path)

    byte_tracker = sv.ByteTrack(
        track_thresh=0.25, track_buffer=30, match_thresh=0.8, frame_rate=30
    )

    video_info = sv.VideoInfo.from_video_path(source_video_path)
    zones = [
        sv.PolygonZone(
            polygon=polygon, frame_resolution_wh=video_info.resolution_wh
        )
        for polygon in polygons
    ]
    zone_annotators = [
        sv.PolygonZoneAnnotator(
            zone=zone,
            color=colors.by_idx(index),
            thickness=4,
            text_thickness=4,
            text_scale=2,
        )
        for index, zone in enumerate(zones)
    ]
    box_annotators = [
        sv.BoxAnnotator(
            thickness=4,
            text_thickness=4,
            text_scale=2,
            color=colors.by_idx(index),
        )
        for index in range(len(polygons))
    ]

    def callback(frame: np.ndarray, index: int) -> np.ndarray:
        results = model.predict(frame)

        detection = sv.Detections.from_ultralytics(results[0])
        detection_supervision = byte_tracker.update_with_detections(detection)
        for zone, zone_annotator, box_annotator in zip(
            zones, zone_annotators, box_annotators
        ):
            # trigger() returns a boolean mask of detections inside this zone
            mask = zone.trigger(detections=detection_supervision)
            # Draw only this zone's detections, in this zone's color
            frame = box_annotator.annotate(
                scene=frame, detections=detection_supervision[mask], skip_label=True
            )
            frame = zone_annotator.annotate(scene=frame)
        return frame

    sv.process_video(
        source_path=source_video_path,
        target_path=TARGET_VIDEO_PATH,
        callback=callback,
    )

    # current_count holds each zone's count for the last processed frame
    return TARGET_VIDEO_PATH, [zone.current_count for zone in zones]


title = "Demo Counting"

interface_count_predictions = gr.Interface(
    fn=count_predictions,
    inputs=[
        gr.Image(type="pil"),
        # Preselect a model so the handler never receives None
        gr.Dropdown(hf_model_ids, value=hf_model_ids[0]),
        gr.Slider(
            minimum=320, maximum=1280, value=640, step=32, label="Image Size"
        ),
        gr.Slider(
            minimum=0.0,
            maximum=1.0,
            value=0.25,
            step=0.05,
            label="Confidence Threshold",
        ),
        gr.Slider(
            minimum=0.0,
            maximum=1.0,
            value=0.45,
            step=0.05,
            label="IOU Threshold",
        ),
    ],
    outputs=[gr.Image(type="pil"), gr.Textbox(show_label=False)],
    title="Count Predictions",
    examples=image_paths,
    cache_examples=bool(image_paths),
)

interface_count_across_line = gr.Interface(
    fn=count_across_line,
    inputs=[
        gr.Video(label="Input Video"),
        gr.Dropdown(hf_model_ids, value=hf_model_ids[1]),
    ],
    outputs=[gr.Video(label="Output Video"), gr.Textbox(show_label=False)],
    title="Count Across Line",
    examples=video_paths,
    cache_examples=bool(video_paths),
)

interface_count_in_zone = gr.Interface(
    fn=count_in_zone,
    inputs=[
        gr.Video(label="Input Video"),
        gr.Dropdown(hf_model_ids, value=hf_model_ids[1]),
    ],
    outputs=[gr.Video(label="Output Video"), gr.Textbox(show_label=False)],
    title="Count in Zone",
    examples=video_paths,
    cache_examples=bool(video_paths),
)

gr.TabbedInterface(
    [
        interface_count_predictions,
        interface_count_across_line,
        interface_count_in_zone,
    ],
    tab_names=["Count Predictions", "Count Across Line", "Count in Zone"],
    title=title,
).queue().launch()
```

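Because `cache_examples` is enabled whenever `image_paths` is non-empty, Gradio runs `count_predictions` on every example row when the Space starts, so a model id in an example row that is not one of `hf_model_ids` (for instance a misspelled repo name) is likely to fail at startup rather than on a user click. A minimal pre-launch check could look like the sketch below; `invalid_example_models` is a hypothetical helper (not part of Gradio), and it assumes the model id sits in column 1 of each row, as it does in `image_paths` and `video_paths`.

```python
from typing import List, Sequence


def invalid_example_models(
    examples: Sequence[Sequence[object]],
    model_ids: Sequence[str],
    model_col: int = 1,
) -> List[str]:
    """Return the sorted, de-duplicated model ids in `examples` that are not in `model_ids`."""
    allowed = set(model_ids)
    # Collect the model-id column of every row that names an unknown model
    return sorted({str(row[model_col]) for row in examples if row[model_col] not in allowed})


if __name__ == "__main__":
    ids = ["chanelcolgate/rods-count-v1", "chanelcolgate/cab-v1"]
    rows = [["a.jpg", "chanelcolgate/rods-cout-v1", 640, 0.6, 0.45]]
    print(invalid_example_models(rows, ids))
```

In app.py such a check could run right after the example lists are built, for example `assert not invalid_example_models(image_paths, hf_model_ids)`, so a bad row fails with a clear message before any model is downloaded.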

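`draw_circle` places each count marker with a little arithmetic on the `[x1, y1, x2, y2]` box: the circle is centered on the box midpoint, its radius is 0.5 × 0.6 = 0.3 of the box width, and the number is drawn from an origin 6 px left of and 6 px below the center (OpenCV's `putText` origin is the bottom-left corner of the text). The pure-Python function below is a hypothetical `circle_layout` helper that mirrors those formulas without OpenCV, which makes the numbers easy to check.

```python
from typing import Sequence, Tuple


def circle_layout(
    bbox: Sequence[float],
) -> Tuple[Tuple[int, int], int, Tuple[int, int]]:
    """Mirror draw_circle's geometry: (circle center, radius, putText origin)."""
    x1, y1, x2, y2 = bbox
    cx, cy = int((x1 + x2) / 2), int((y1 + y2) / 2)  # same as get_center_of_bbox
    width = int(x2 - x1)                              # same as get_bbox_width
    radius = int(width * 0.5 * 0.6)                   # 60% of half the box width
    text_origin = (cx - 6, cy + 6)                    # bottom-left corner of the label text
    return (cx, cy), radius, text_origin


if __name__ == "__main__":
    print(circle_layout([100, 50, 150, 90]))
```

For the box `[100, 50, 150, 90]` this returns `((125, 70), 15, (119, 76))`: a 50 px wide box gets a 15 px circle, so the marker stays inside the box even for tightly packed objects.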
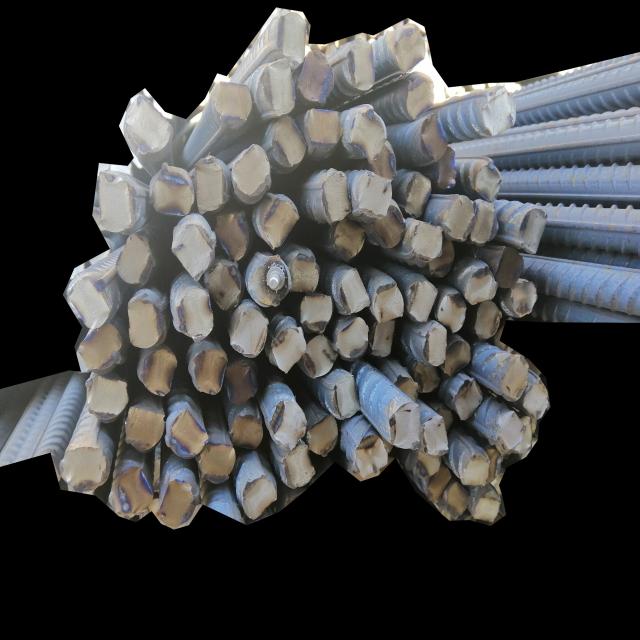

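`count_across_line` runs detections through `sv.ByteTrack` before calling `line_zone.trigger`, because a crossing can only be counted if the same object is recognized, by tracker id, on both sides of the line in different frames. The sketch below is a simplified, hypothetical re-implementation of that idea, not supervision's `LineZone` code: it follows only each box center, uses the sign of a 2D cross product to decide which side of the line through `LINE_START` and `LINE_END` a point is on (treating the segment as an infinite line), and names the two crossing directions `in`/`out` by an arbitrary convention that may not match supervision's.

```python
from typing import Dict, Tuple

Point = Tuple[float, float]


def side_of_line(p: Point, start: Point, end: Point) -> float:
    """2D cross product of (end - start) and (p - start): >0 one side, <0 the other, 0 on the line."""
    return (end[0] - start[0]) * (p[1] - start[1]) - (end[1] - start[1]) * (p[0] - start[0])


class SimpleLineCounter:
    """Hypothetical, simplified line counter keyed by tracker id."""

    def __init__(self, start: Point, end: Point) -> None:
        self.start, self.end = start, end
        self.last_side: Dict[int, bool] = {}  # tracker id -> was on the positive side
        self.in_count = 0
        self.out_count = 0

    def update(self, centers: Dict[int, Point]) -> None:
        """Feed one frame of {tracker_id: box center}; count side changes per id."""
        for tracker_id, p in centers.items():
            s = side_of_line(p, self.start, self.end)
            if s == 0:
                continue  # exactly on the line: wait for a decisive frame
            positive = s > 0
            prev = self.last_side.get(tracker_id)
            self.last_side[tracker_id] = positive
            if prev is None or prev == positive:
                continue  # first sighting, or still on the same side
            if positive:
                self.in_count += 1   # negative -> positive (arbitrary "in")
            else:
                self.out_count += 1  # positive -> negative (arbitrary "out")
```

With the vertical line from (976, 212) to (976, 1276) used in app.py, an object whose center moves from x = 900 to x = 1000 between frames changes from the positive to the negative side and increments `out_count`; an object that never changes sides is never counted, however long it is tracked.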
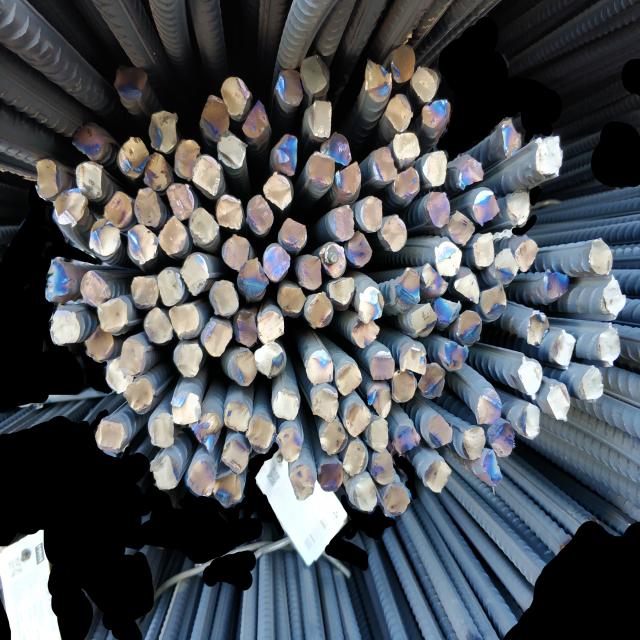

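`count_in_zone` asks each `sv.PolygonZone` which tracked boxes fall inside its polygon. As far as we can tell, supervision 0.18 tests one anchor point per box (bottom-center by default) against a mask of the polygon, which appears to be why the zone needs `frame_resolution_wh`; check the `PolygonZone` docs for the exact rule. The sketch below reproduces the idea in plain Python with an even-odd ray-casting test instead of a mask; `point_in_polygon` and `count_in_polygon` are hypothetical helpers, and the test polygon is the first zone defined in `count_in_zone`.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # x1, y1, x2, y2


def point_in_polygon(p: Point, polygon: Sequence[Point]) -> bool:
    """Even-odd rule: cast a ray toward +x and toggle on every edge it crosses."""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal line through p
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def count_in_polygon(boxes: Sequence[Box], polygon: Sequence[Point]) -> int:
    """Count boxes whose bottom-center anchor lies inside the polygon."""
    count = 0
    for x1, _, x2, y2 in boxes:
        anchor = ((x1 + x2) / 2, y2)  # bottom-center of an xyxy box
        if point_in_polygon(anchor, polygon):
            count += 1
    return count
```

Because only the anchor point is tested, a box that overlaps a zone edge counts for whichever zone contains its bottom-center, so each detection lands in at most one of the two non-overlapping zones used in app.py.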
images/62167111_jpg.rf.a28be3ccf9faa13da52aa007a7f7ed7a.jpg

ADDED

images/A1A37A49_jpg.rf.43566e5df62b02365ced4a5bd5e21f47.jpg

ADDED

images/A2A2E11D_jpg.rf.b366674522f576b023f5fbe116993eb7.jpg

ADDED

images/A3EEA8A1_jpg.rf.f66d063ebbf0fe0ccc969198c6eaab63.jpg

ADDED

images/A48928D0_jpg.rf.7926dbc20dfd480327a6ff81cfc69961.jpg

ADDED

images/A49FFA35_jpg.rf.44ef65e540674b2bfc40361ec77569ea.jpg

ADDED

images/A6EE237B_jpg.rf.92877f1bc68547a947773e58d62dd59d.jpg

ADDED

images/A6F01C78_jpg.rf.3f74c020ece68222d8221abcda7b6461.jpg

ADDED

images/A8658634_jpg.rf.52fc338e7cb1c1ba92322299ae32ce2b.jpg

ADDED

images/ABB2195A_jpg.rf.4f96f89ee3348fb7ee8cdf77e026998a.jpg

ADDED

requirements.txt

ADDED

@@ -0,0 +1,6 @@

```
gradio==4.26.0
ultralyticsplus==0.0.29
pillow==10.2.0
opencv-python==4.7.0.72
numpy==1.24.4
supervision==0.18.0
```

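Each of the three handlers in app.py calls `download_from_hub` and `YOLO(...)` on every request, so every click re-creates the model (the weight file itself is probably served from the local Hugging Face cache after the first download). One possible refinement, sketched here with the standard library's `functools.lru_cache`, is to memoize model loading per model id. The loader is passed in as a function so the sketch runs without `ultralyticsplus`; in app.py it would presumably be something like `lambda model_id: YOLO(download_from_hub(model_id))`. This assumes a loaded YOLO object can safely be reused across requests, which is worth verifying if the queue is configured to run several jobs at once.

```python
from functools import lru_cache
from typing import Any, Callable


def make_cached_loader(
    load_fn: Callable[[str], Any], maxsize: int = 4
) -> Callable[[str], Any]:
    """Wrap load_fn so each model id is loaded once and then reused.

    At most `maxsize` models stay cached; the least recently used one is evicted beyond that.
    """

    @lru_cache(maxsize=maxsize)
    def load(model_id: str) -> Any:
        return load_fn(model_id)

    return load


if __name__ == "__main__":
    loaded = []
    get_model = make_cached_loader(lambda model_id: loaded.append(model_id) or model_id)
    get_model("chanelcolgate/cab-v1")
    get_model("chanelcolgate/cab-v1")
    print(loaded)  # the loader ran only once for the repeated id
```

Caching per model id rather than globally keeps the dropdown behavior unchanged: switching between `rods-count-v1` and `cab-v1` loads each model at most once, while the per-call `ByteTrack`, `LineZone`, and `PolygonZone` objects stay fresh for every video.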