blairzheng committed

Commit e6088ac

1 Parent(s): d365954

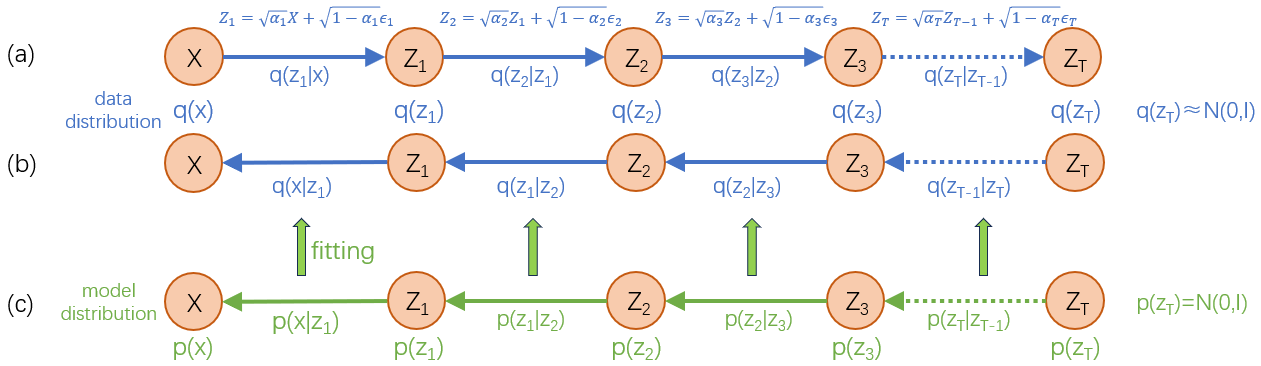

add log in equation 6.4; change order of condition variable in figure 1(a)

- RenderMarkdownEn.py +1 -1

- RenderMarkdownZh.py +1 -1

- data.json +0 -0

- fig1.png +0 -0

RenderMarkdownEn.py

CHANGED

@@ -235,7 +235,7 @@ def md_fit_posterior_en():
 KL divergence can also be optimized as the objective function. KL divergence and cross-entropy are equivalent[\\[10\\]](#ce_kl)
 <span id="en_fit_0">
 loss &= \int q(z_t) KL(q(z_{t-1}|z_t) \Vert \textcolor{blue}{p(z_{t-1}|z_t)})dz_t \tag{6.3} \newline
-&= \int q(z_t) \int q(z_{t-1}|z_t) \frac{q(z_{t-1}|z_t)}{\textcolor{blue}{p(z_{t-1}|z_t)}} dz_{t-1} dz_t \tag{6.4} \newline
+&= \int q(z_t) \int q(z_{t-1}|z_t) \log \frac{q(z_{t-1}|z_t)}{\textcolor{blue}{p(z_{t-1}|z_t)}} dz_{t-1} dz_t \tag{6.4} \newline
 &= -\int q(z_t)\ \underbrace{\int q(z_{t-1}|z_t) \log \textcolor{blue}{p(z_{t-1}|z_t)}dz_{t-1}}_{\text{Cross Entropy}}\ dz_t + \underbrace{\int q(z_t) \int q(z_{t-1}|z_t) \log q(z_{t-1}|z_t)}_{\text{Is Constant}} dz \tag{6.5}
 </span>
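The effect of the added `\log` in Equation 6.4 can be checked numerically. The sketch below is not part of the commit; it assumes small discrete distributions `q` and `p` (hypothetical values standing in for the densities in the equations) and uses only the standard library. It compares the KL divergence with the sum of the cross-entropy and negative-entropy terms from Equation 6.5, and shows that the pre-fix expression without the logarithm does not vanish when `p` equals `q`.

```python
import math


def kl_divergence(q, p):
    """KL(q || p) for discrete distributions with strictly positive entries."""
    return sum(qi * math.log(qi / pi) for qi, pi in zip(q, p))


def cross_entropy(q, p):
    """Cross-entropy term of Equation 6.5: -sum q * log p."""
    return -sum(qi * math.log(pi) for qi, pi in zip(q, p))


def negative_entropy(q):
    """Constant term of Equation 6.5: sum q * log q (does not depend on p)."""
    return sum(qi * math.log(qi) for qi in q)


def ratio_without_log(q, p):
    """Pre-fix form of Equation 6.4: sum q * (q / p), with the log missing."""
    return sum(qi * (qi / pi) for qi, pi in zip(q, p))


# Hypothetical example distributions (assumptions for illustration only).
q = [0.7, 0.2, 0.1]
p = [0.5, 0.3, 0.2]

kl = kl_divergence(q, p)
decomposed = cross_entropy(q, p) + negative_entropy(q)

print("KL(q || p)                 :", kl)
print("cross-entropy + constant   :", decomposed)
print("KL(q || q)                 :", kl_divergence(q, q))
print("no-log expression at p == q:", ratio_without_log(q, q))
```

With the logarithm, the expression is zero when the two distributions match, as a divergence should be; without it, the same expression evaluates to 1 in that case, so it cannot equal the KL divergence in Equation 6.3.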

RenderMarkdownZh.py

CHANGED

@@ -227,7 +227,7 @@ def md_fit_posterior_zh():
 也可以KL散度作为目标函数进行优化,KL散度与交叉熵是等价的[\[10\]](#ce_kl)。
 <span id="zh_fit_0">
 loss &= \int q(z_t) KL(q(z_{t-1}|z_t) \Vert \textcolor{blue}{p(z_{t-1}|z_t)})dz_t \tag{6.3} \newline
-&= \int q(z_t) \int q(z_{t-1}|z_t) \frac{q(z_{t-1}|z_t)}{\textcolor{blue}{p(z_{t-1}|z_t)}} dz_{t-1} dz_t
+&= \int q(z_t) \int q(z_{t-1}|z_t) \log \frac{q(z_{t-1}|z_t)}{\textcolor{blue}{p(z_{t-1}|z_t)}} dz_{t-1} dz_t \tag{6.4} \newline
 &= -\int q(z_t)\ \underbrace{\int q(z_{t-1}|z_t) \log \textcolor{blue}{p(z_{t-1}|z_t)}dz_{t-1}}_{\text{Cross Entropy}}\ dz_t + \underbrace{\int q(z_t) \int q(z_{t-1}|z_t) \log q(z_{t-1}|z_t)}_{\text{Is Constant}} dz \tag{6.5}
 </span>
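For reviewers, the step from Equation 6.4 to Equation 6.5 in both files can be spelled out as follows. This is a sketch of the standard argument rather than text from the commit; it relies only on splitting the logarithm of the ratio, and it writes the final differentials in full where the source abbreviates them as `dz`.

```latex
\begin{aligned}
\int q(z_t) \int q(z_{t-1}|z_t) \log \frac{q(z_{t-1}|z_t)}{p(z_{t-1}|z_t)} \, dz_{t-1} \, dz_t
  &= \int q(z_t) \int q(z_{t-1}|z_t) \bigl[ \log q(z_{t-1}|z_t) - \log p(z_{t-1}|z_t) \bigr] \, dz_{t-1} \, dz_t \\
  &= -\int q(z_t) \int q(z_{t-1}|z_t) \log p(z_{t-1}|z_t) \, dz_{t-1} \, dz_t \\
  &\quad + \int q(z_t) \int q(z_{t-1}|z_t) \log q(z_{t-1}|z_t) \, dz_{t-1} \, dz_t
\end{aligned}
```

The second term contains only q, so it is constant with respect to the fitted distribution p, which is why minimizing the KL objective and minimizing the cross-entropy term give the same optimum.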

data.json

CHANGED

The diff for this file is too large to render.

fig1.png

CHANGED
