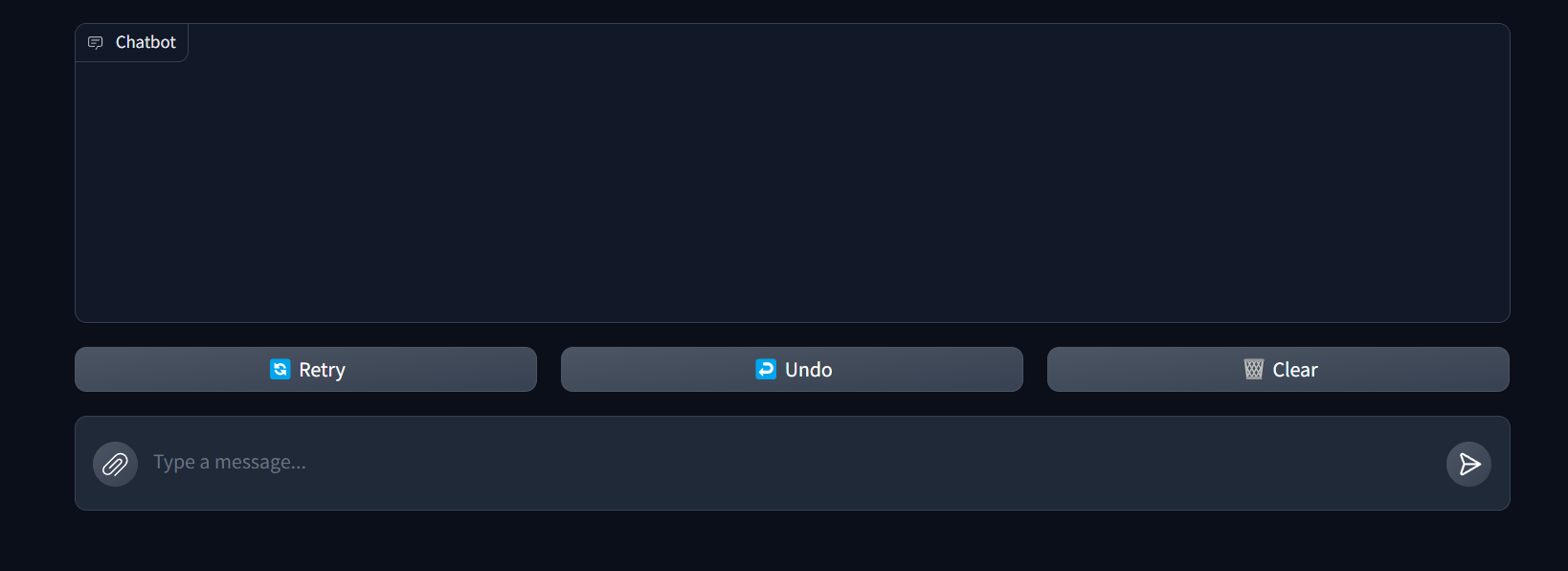

Upload 3 files

- Screenshot 2024-09-07 230747.png +0 -0
- llm.py +78 -0
- requirements.txt +0 -0

Screenshot 2024-09-07 230747.png

ADDED


llm.py

ADDED

@@ -0,0 +1,78 @@

```python
import time

import torch
from PIL import Image
from transformers import AutoModelForCausalLM, AutoTokenizer

MODEL_ID = "MILVLG/imp-v1-3b"

# Imp-v1-3b ships its own modeling code (including image_preprocess),
# so trust_remote_code=True is required for both model and tokenizer.
model = AutoModelForCausalLM.from_pretrained(
    MODEL_ID,
    torch_dtype=torch.float16,
    device_map="auto",
    trust_remote_code=True,
)
tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, trust_remote_code=True)


def build_prompt(message: str) -> str:
    """Put the test-case instructions in front of the Imp chat turn for one image."""
    return f"""A chat between a curious user and an artificial intelligence assistant. The assistant generates helpful and detailed test cases for software/website testing.
You are tasked with generating detailed, step-by-step test cases for software functionality based on uploaded images. The user will provide one or more images of a software or website interface. For each image, generate a separate set of test cases following the format below:

Description: Provide a brief explanation of the functionality being tested, as inferred from the image.

Pre-conditions: Identify any setup requirements, dependencies, or conditions that must be met before testing can begin (e.g., user logged in, specific data pre-populated).

Testing Steps: Outline a clear, numbered sequence of actions that a user would take to test the functionality in the image.

Expected Result: Specify the expected outcome if the functionality is working as intended.

Ensure that:

Test cases are created independently for each image.
The functionality from each image is fully covered in its own set of test cases.
Any assumptions you make are clearly stated.
The focus is on usability, navigation, and feature correctness as demonstrated in the UI of the uploaded images.

USER: <image>\n{message}
ASSISTANT:"""


def _file_path(file) -> str:
    # Uploads may arrive as dicts with a "path" key (what this code originally
    # assumed) or, depending on the Gradio version, as plain path strings.
    return file["path"] if isinstance(file, dict) else file


def generate_answer(images: list, prompt: str) -> str:
    """Run Imp once per uploaded image and join the answers with blank lines."""
    # Keep the prompt tokens on the same device as the model weights.
    input_ids = tokenizer(prompt, return_tensors="pt").input_ids.to(model.device)

    answers = []
    for file in images:
        # Screenshots are often RGBA PNGs; normalize to RGB before preprocessing.
        image = Image.open(_file_path(file)).convert("RGB")
        image_tensor = model.image_preprocess(image)

        output_ids = model.generate(
            inputs=input_ids,
            max_new_tokens=500,
            images=image_tensor,
            use_cache=False,
        )[0]
        # Decode only the newly generated tokens, not the echoed prompt.
        answer = tokenizer.decode(
            output_ids[input_ids.shape[1]:], skip_special_tokens=True
        ).strip()
        answers.append(answer)

    # Real newlines between per-image answers (the original used the literal "/n/n").
    return "\n\n".join(answers)


def response(user_data: dict, history: list):
    """Streaming handler for a multimodal Gradio chat.

    user_data is {"text": str, "files": [...]}; history is assumed to be the
    chat history Gradio passes as the second argument (unused here).
    """
    files = user_data["files"]
    if not files:
        yield "Please upload at least one screenshot of the interface to test."
        return

    reply = generate_answer(files, build_prompt(user_data["text"]))

    # Typewriter effect: each yield replaces the displayed message with a longer
    # prefix. There must be no `return reply` before this loop: because the
    # function contains `yield`, an early return ends it without yielding anything.
    for i in range(len(reply)):
        time.sleep(0.025)
        yield reply[: i + 1]
```
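The streaming and answer-joining logic in `response` and `generate_answer` does not depend on the model, so it can be exercised on its own. The sketch below is a standalone illustration, not part of the commit; `join_answers`, `stream_reply`, and `broken_stream` are hypothetical names. `broken_stream` reproduces the shape of the handler as committed, where a `return` placed before the `for ... yield` loop makes the function a generator that finishes before yielding anything.

```python
import time
from typing import Iterator


def join_answers(answers: list[str]) -> str:
    """Join per-image answers with a blank line, dropping empty ones."""
    return "\n\n".join(a.strip() for a in answers if a.strip())


def stream_reply(reply: str, delay: float = 0.025) -> Iterator[str]:
    """Yield progressively longer prefixes of reply (typewriter-style streaming)."""
    for i in range(len(reply)):
        if delay:
            time.sleep(delay)
        yield reply[: i + 1]


def broken_stream(reply: str) -> Iterator[str]:
    """Same shape as the committed handler: the early return stops the generator."""
    return reply
    for i in range(len(reply)):  # unreachable, but its `yield` makes this a generator
        yield reply[: i + 1]


if __name__ == "__main__":
    text = join_answers(["Test case A", "", "Test case B"])
    print(repr(text))
    print(list(stream_reply("abc", delay=0)))
    print(list(broken_stream("abc")))
```

With `delay=0` the stream can be inspected directly: `list(stream_reply("abc", delay=0))` gives `['a', 'ab', 'abc']`, while `list(broken_stream("abc"))` gives `[]`, which is why the committed handler displayed nothing.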

requirements.txt

ADDED

Binary file (2.51 kB).