initial commit

This view is limited to 50 files because it contains too many changes.

- README.md +35 -6

- app.py +65 -0

- arena_elo/LICENSE +21 -0

- arena_elo/README.md +46 -0

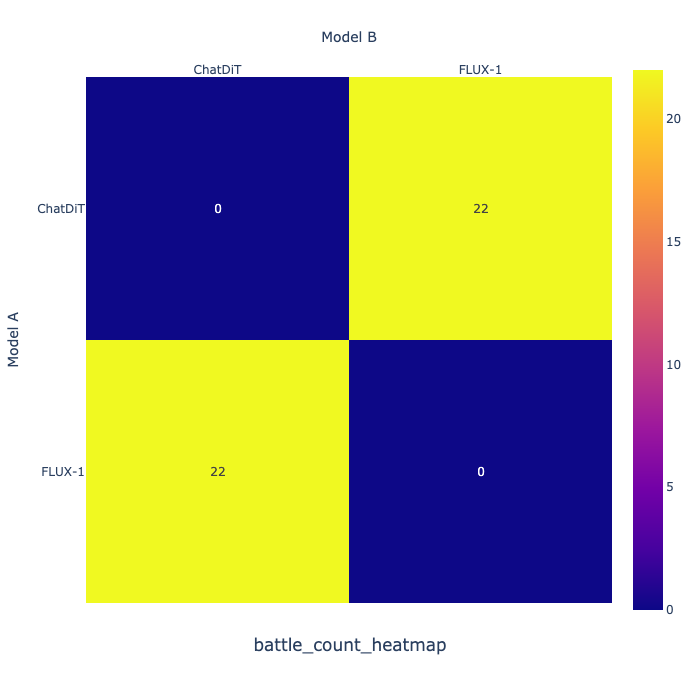

- arena_elo/battle_count_heatmap.png +0 -0

- arena_elo/cut_off_date.txt +1 -0

- arena_elo/elo_rating/__init__.py +0 -0

- arena_elo/elo_rating/__pycache__/__init__.cpython-310.pyc +0 -0

- arena_elo/elo_rating/__pycache__/basic_stats.cpython-310.pyc +0 -0

- arena_elo/elo_rating/__pycache__/clean_battle_data.cpython-310.pyc +0 -0

- arena_elo/elo_rating/__pycache__/elo_analysis.cpython-310.pyc +0 -0

- arena_elo/elo_rating/__pycache__/generate_leaderboard.cpython-310.pyc +0 -0

- arena_elo/elo_rating/__pycache__/inspect_cost.cpython-310.pyc +0 -0

- arena_elo/elo_rating/__pycache__/inspect_elo_rating_pkl.cpython-310.pyc +0 -0

- arena_elo/elo_rating/__pycache__/model_registry.cpython-310.pyc +0 -0

- arena_elo/elo_rating/__pycache__/utils.cpython-310.pyc +0 -0

- arena_elo/elo_rating/basic_stats.py +227 -0

- arena_elo/elo_rating/clean_battle_data.py +342 -0

- arena_elo/elo_rating/elo_analysis.py +395 -0

- arena_elo/elo_rating/filter_clean_battle_data.py +34 -0

- arena_elo/elo_rating/generate_leaderboard.py +88 -0

- arena_elo/elo_rating/inspect_conv_rating.py +234 -0

- arena_elo/elo_rating/inspect_cost.py +177 -0

- arena_elo/elo_rating/inspect_elo_rating_pkl.py +33 -0

- arena_elo/elo_rating/model_registry.py +578 -0

- arena_elo/elo_rating/upload_battle_data.py +193 -0

- arena_elo/elo_rating/utils.py +83 -0

- arena_elo/evaluator/convert_to_evaluator_data.py +134 -0

- arena_elo/evaluator/rating_analysis.ipynb +321 -0

- arena_elo/get_latest_data.sh +17 -0

- arena_elo/pyproject.toml +28 -0

- arena_elo/requirements.txt +28 -0

- arena_elo/results/20241224/clean_battle.json +210 -0

- arena_elo/results/20241224/elo_results.pkl +3 -0

- arena_elo/results/20241224/leaderboard.csv +3 -0

- arena_elo/results/20241226/clean_battle.json +482 -0

- arena_elo/results/20241226/elo_results.pkl +3 -0

- arena_elo/results/20241226/leaderboard.csv +9 -0

- arena_elo/results/latest/clean_battle.json +482 -0

- arena_elo/results/latest/elo_results.pkl +3 -0

- arena_elo/results/latest/leaderboard.csv +9 -0

- arena_elo/simple_test.py +16 -0

- arena_elo/update_elo_rating.sh +30 -0

- arena_elo/win_fraction_heatmap.png +0 -0

- logs/vote_log/2024-12-24-conv.json +26 -0

- logs/vote_log/2024-12-25-conv.json +7 -0

- logs/vote_log/2024-12-26-conv.json +27 -0

- logs/vote_log/2024-12-27-conv.json +4 -0

- logs/vote_log/gr_web_image_generation_multi.log +450 -0

- logs/vote_log/gr_web_image_generation_multi.log.2024-12-25 +797 -0

README.md

CHANGED

````diff
@@ -1,14 +1,43 @@
 ---
 title: IDEA Bench Arena
-emoji:
-colorFrom:
-colorTo:
+emoji: 📉
+colorFrom: blue
+colorTo: green
 sdk: gradio
 sdk_version: 5.9.1
 app_file: app.py
 pinned: false
-license: cc-by-
-short_description: Official
+license: cc-by-4.0
+short_description: Official arena of IDEA-Bench.
 ---
 
-
+## Installation
+
+- for cuda 11.8
+```bash
+conda install pytorch torchvision torchaudio pytorch-cuda=11.8 -c pytorch -c nvidia
+pip3 install -U xformers --index-url https://download.pytorch.org/whl/cu118
+pip install -r requirements.txt
+```
+- for cuda 12.1
+```bash
+conda install pytorch torchvision torchaudio pytorch-cuda=12.1 -c pytorch -c nvidia
+pip install -r requirements.txt
+```
+
+## Start Hugging Face UI
+```bash
+python app.py
+```
+
+## Start Log server
+```bash
+uvicorn serve.log_server:app --reload --port 22005 --host 0.0.0.0
+```
+
+## Update leaderboard
+```bash
+cd arena_elo && bash update_leaderboard.sh
+```
+
+Paper: [https://arxiv.org/abs/2412.11767](https://arxiv.org/abs/2412.11767)
````

app.py

ADDED

@@ -0,0 +1,65 @@

```python
import gradio as gr
import os
# os.system("pip install -r requirements.txt -U")
# os.system("pip uninstall -y apex")
# os.system("pip uninstall -y flash-attn")
# os.system("FLASH_ATTENTION_FORCE_BUILD=TRUE pip install flash-attn")
from serve.gradio_web import *
from serve.leaderboard import build_leaderboard_tab
from model.model_manager import ModelManager
from pathlib import Path
from serve.constants import SERVER_PORT, ROOT_PATH, ELO_RESULTS_DIR

def build_combine_demo(models, elo_results_file, leaderboard_table_file):

    with gr.Blocks(
        title="Play with Open Vision Models",
        theme=gr.themes.Default(),
        css=block_css,
    ) as demo:
        with gr.Tabs() as tabs_combine:
            with gr.Tab("Image Generation", id=0):
                with gr.Tabs() as tabs_ig:
                    with gr.Tab("Generation Arena (battle)", id=0):
                        build_side_by_side_ui_anony(models)

                    with gr.Tab("Generation Arena (side-by-side)", id=1):
                        build_side_by_side_ui_named(models)

                    if elo_results_file:
                        with gr.Tab("Generation Leaderboard", id=2):
                            build_leaderboard_tab(elo_results_file['t2i_generation'], leaderboard_table_file['t2i_generation'])

                    with gr.Tab("About Us", id=3):
                        build_about()

    return demo


def load_elo_results(elo_results_dir):
    from collections import defaultdict
    elo_results_file = defaultdict(lambda: None)
    leaderboard_table_file = defaultdict(lambda: None)
    if elo_results_dir is not None:
        elo_results_dir = Path(elo_results_dir)
        elo_results_file = {}
        leaderboard_table_file = {}
        for file in elo_results_dir.glob('elo_results*.pkl'):
            elo_results_file['t2i_generation'] = file
        for file in elo_results_dir.glob('*leaderboard.csv'):
            leaderboard_table_file['t2i_generation'] = file

    return elo_results_file, leaderboard_table_file

if __name__ == "__main__":

    server_port = int(SERVER_PORT)
    root_path = ROOT_PATH
    elo_results_dir = ELO_RESULTS_DIR

    models = ModelManager()

    elo_results_file, leaderboard_table_file = load_elo_results(elo_results_dir)
    demo = build_combine_demo(models, elo_results_file, leaderboard_table_file)
    demo.queue(max_size=20).launch(server_port=server_port, root_path=ROOT_PATH, show_error=True)
    demo.launch(server_port=server_port, root_path=ROOT_PATH, show_error=True)
```
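`load_elo_results` stores whichever file `Path.glob` yields last under the single key `'t2i_generation'`. `glob` makes no ordering promise, so if a results directory ever held more than one `elo_results*.pkl`, the file picked would be arbitrary. A minimal sketch of a deterministic variant, assuming that sorting by file name (the dated names under `results/` sort chronologically) is the desired rule; `pick_latest` and `load_elo_results_sorted` are hypothetical names, not part of this commit:

```python
from pathlib import Path
from typing import Optional


def pick_latest(directory: Path, pattern: str) -> Optional[Path]:
    """Return the lexicographically last file matching `pattern`, or None.

    Hypothetical helper: sorting makes the choice reproducible, unlike
    keeping whatever Path.glob happens to yield last.
    """
    matches = sorted(directory.glob(pattern))
    return matches[-1] if matches else None


def load_elo_results_sorted(elo_results_dir):
    # Same return shape as load_elo_results in app.py: dicts keyed by task name.
    elo_results_file, leaderboard_table_file = {}, {}
    if elo_results_dir is None:
        return elo_results_file, leaderboard_table_file
    root = Path(elo_results_dir)
    pkl = pick_latest(root, "elo_results*.pkl")
    csv = pick_latest(root, "*leaderboard.csv")
    if pkl is not None:
        elo_results_file["t2i_generation"] = pkl
    if csv is not None:
        leaderboard_table_file["t2i_generation"] = csv
    return elo_results_file, leaderboard_table_file
```

An empty result is still falsy, so the `if elo_results_file:` check in `build_combine_demo` would behave the same way.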

arena_elo/LICENSE

ADDED

@@ -0,0 +1,21 @@

```text
MIT License

Copyright (c) 2024 WildVision-Bench

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.
```

arena_elo/README.md

ADDED

@@ -0,0 +1,46 @@

````markdown
## Computing the Elo Ratings


```bash
apt-get -y install pkg-config
pip install -r requirements.txt
```


### to update the leaderboard

```bash
export LOGDIR="/path/to/your/logdir"
bash update_elo_rating.sh
```

### to inspect the leaderboard status
```bash
python -m elo_rating.inspect_elo_rating_pkl
```

### to inspect the collected data status and cost
```bash
export LOGDIR="/path/to/your/logdir"
python -m elo_rating.inspect_cost
```

### to upload the battle data to hugging face🤗
```bash
export HUGGINGFACE_TOKEN="your_huggingface_token"
bash get_latest_data.sh
python -m elo_rating.upload_battle_data --repo_id "WildVision/wildvision-bench" --log_dir "./vision-arena-logs/"
```

### to upload the chat data to hugging face🤗
```bash
export HUGGINGFACE_TOKEN="your_huggingface_token"
bash get_latest_data.sh
python -m elo_rating.upload_chat_data --repo_id "WildVision/wildvision-bench" --log_dir "./vision-arena-logs/"
```


### to get the collected data
```bash
python -m

````
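The README lists the commands but not the rating math, which lives in `elo_analysis.py` (not shown in this view). As a minimal sketch of the classic online Elo update, not necessarily the method `elo_analysis.py` uses (arena leaderboards often fit a Bradley-Terry model or bootstrap instead), assuming battles arrive as `(model_a, model_b, winner)` tuples whose `winner` strings follow the `convert_type` mapping in `clean_battle_data.py`. The defaults `k=4`, `scale=400`, `base=10` and `init_rating=1000` are assumptions for illustration:

```python
from collections import defaultdict


def compute_online_elo(battles, k=4.0, scale=400.0, base=10.0, init_rating=1000.0):
    """Sequential Elo over (model_a, model_b, winner) tuples.

    winner is one of "model_a", "model_b", "tie", "tie (bothbad)";
    both tie labels count as half a win for each side (an assumption).
    """
    rating = defaultdict(lambda: init_rating)
    for model_a, model_b, winner in battles:
        ra, rb = rating[model_a], rating[model_b]
        # Expected score of model_a under the logistic Elo model.
        ea = 1.0 / (1.0 + base ** ((rb - ra) / scale))
        eb = 1.0 - ea
        if winner == "model_a":
            sa = 1.0
        elif winner == "model_b":
            sa = 0.0
        elif winner in ("tie", "tie (bothbad)"):
            sa = 0.5
        else:
            raise ValueError(f"unknown winner label: {winner!r}")
        # Zero-sum update: whatever model_a gains, model_b loses.
        rating[model_a] = ra + k * (sa - ea)
        rating[model_b] = rb + k * ((1.0 - sa) - eb)
    return dict(rating)
```

For example, `compute_online_elo([("A", "B", "model_a")])` moves two equally rated models to 1002 and 998. Because the online result depends on battle order, a batch fit over all battles is usually preferred for a published leaderboard.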

arena_elo/battle_count_heatmap.png

ADDED

arena_elo/cut_off_date.txt

ADDED

@@ -0,0 +1 @@

```text
20241226
```

arena_elo/elo_rating/__init__.py

ADDED

File without changes

arena_elo/elo_rating/__pycache__/__init__.cpython-310.pyc

ADDED

Binary file (185 Bytes).

arena_elo/elo_rating/__pycache__/basic_stats.cpython-310.pyc

ADDED

Binary file (6.26 kB).

arena_elo/elo_rating/__pycache__/clean_battle_data.cpython-310.pyc

ADDED

Binary file (8.12 kB).

arena_elo/elo_rating/__pycache__/elo_analysis.cpython-310.pyc

ADDED

Binary file (9.89 kB).

arena_elo/elo_rating/__pycache__/generate_leaderboard.cpython-310.pyc

ADDED

Binary file (2.02 kB).

arena_elo/elo_rating/__pycache__/inspect_cost.cpython-310.pyc

ADDED

Binary file (4.96 kB).

arena_elo/elo_rating/__pycache__/inspect_elo_rating_pkl.cpython-310.pyc

ADDED

Binary file (1.08 kB).

arena_elo/elo_rating/__pycache__/model_registry.cpython-310.pyc

ADDED

Binary file (14.4 kB).

arena_elo/elo_rating/__pycache__/utils.cpython-310.pyc

ADDED

Binary file (2.29 kB).

arena_elo/elo_rating/basic_stats.py

ADDED

@@ -0,0 +1,227 @@

```python
import argparse
import code
import datetime
import json
import os
from pytz import timezone
import time

import pandas as pd  # pandas>=2.0.3
import plotly.express as px
import plotly.graph_objects as go
from tqdm import tqdm

NUM_SERVERS = 1
LOG_ROOT_DIR = os.getenv("LOGDIR", None)
if LOG_ROOT_DIR is None:
    raise ValueError("LOGDIR environment variable not set, please set it by `export LOGDIR=...`")

def get_log_files(max_num_files=None):
    log_root = os.path.expanduser(LOG_ROOT_DIR)
    filenames = []
    if NUM_SERVERS == 1:
        for filename in os.listdir(log_root):
            if filename.endswith("-conv.json"):
                filepath = f"{log_root}/{filename}"
                name_tstamp_tuple = (filepath, os.path.getmtime(filepath))
                filenames.append(name_tstamp_tuple)
    else:
        for i in range(NUM_SERVERS):
            for filename in os.listdir(f"{log_root}/server{i}"):
                if filename.endswith("-conv.json"):
                    filepath = f"{log_root}/server{i}/{filename}"
                    name_tstamp_tuple = (filepath, os.path.getmtime(filepath))
                    filenames.append(name_tstamp_tuple)
    # sort by tstamp
    filenames = sorted(filenames, key=lambda x: x[1])
    filenames = [x[0] for x in filenames]

    max_num_files = max_num_files or len(filenames)
    filenames = filenames[-max_num_files:]
    return filenames


def load_log_files(filename):
    data = []
    for retry in range(5):
        try:
            lines = open(filename).readlines()
            break
        except FileNotFoundError:
            time.sleep(2)

    for l in lines:
        row = json.loads(l)
        data.append(
            dict(
                type=row["type"],
                tstamp=row["tstamp"],
                model=row.get("model", ""),
                models=row.get("models", ["", ""]),
            )
        )
    return data


def load_log_files_parallel(log_files, num_threads=16):
    data_all = []
    from multiprocessing import Pool

    with Pool(num_threads) as p:
        ret_all = list(tqdm(p.imap(load_log_files, log_files), total=len(log_files)))
        for ret in ret_all:
            data_all.extend(ret)
    return data_all


def get_anony_vote_df(df):
    anony_vote_df = df[
        df["type"].isin(["leftvote", "rightvote", "tievote", "bothbad_vote"])
    ]
    anony_vote_df = anony_vote_df[anony_vote_df["models"].apply(lambda x: x[0] == "")]
    return anony_vote_df


def merge_counts(series, on, names):
    ret = pd.merge(series[0], series[1], on=on)
    for i in range(2, len(series)):
        ret = pd.merge(ret, series[i], on=on)
    ret = ret.reset_index()
    old_names = list(ret.columns)[-len(series) :]
    rename = {old_name: new_name for old_name, new_name in zip(old_names, names)}
    ret = ret.rename(columns=rename)
    return ret


def report_basic_stats(log_files):
    df_all = load_log_files_parallel(log_files)
    df_all = pd.DataFrame(df_all)
    now_t = df_all["tstamp"].max()
    df_1_hour = df_all[df_all["tstamp"] > (now_t - 3600)]
    df_1_day = df_all[df_all["tstamp"] > (now_t - 3600 * 24)]
    anony_vote_df_all = get_anony_vote_df(df_all)

    # Chat trends
    chat_dates = [
        datetime.datetime.fromtimestamp(x, tz=timezone("US/Pacific")).strftime(
            "%Y-%m-%d"
        )
        for x in df_all[df_all["type"] == "chat"]["tstamp"]
    ]
    chat_dates_counts = pd.value_counts(chat_dates)
    vote_dates = [
        datetime.datetime.fromtimestamp(x, tz=timezone("US/Pacific")).strftime(
            "%Y-%m-%d"
        )
        for x in anony_vote_df_all["tstamp"]
    ]
    vote_dates_counts = pd.value_counts(vote_dates)
    chat_dates_bar = go.Figure(
        data=[
            go.Bar(
                name="Anony. Vote",
                x=vote_dates_counts.index,
                y=vote_dates_counts,
                text=[f"{val:.0f}" for val in vote_dates_counts],
                textposition="auto",
            ),
            go.Bar(
                name="Chat",
                x=chat_dates_counts.index,
                y=chat_dates_counts,
                text=[f"{val:.0f}" for val in chat_dates_counts],
                textposition="auto",
            ),
        ]
    )
    chat_dates_bar.update_layout(
        barmode="stack",
        xaxis_title="Dates",
        yaxis_title="Count",
        height=300,
        width=1200,
    )

    # Model call counts
    model_hist_all = df_all[df_all["type"] == "chat"]["model"].value_counts()
    model_hist_1_day = df_1_day[df_1_day["type"] == "chat"]["model"].value_counts()
    model_hist_1_hour = df_1_hour[df_1_hour["type"] == "chat"]["model"].value_counts()
    model_hist = merge_counts(
        [model_hist_all, model_hist_1_day, model_hist_1_hour],
        on="model",
        names=["All", "Last Day", "Last Hour"],
    )
    model_hist_md = model_hist.to_markdown(index=False, tablefmt="github")

    # Action counts
    action_hist_all = df_all["type"].value_counts()
    action_hist_1_day = df_1_day["type"].value_counts()
    action_hist_1_hour = df_1_hour["type"].value_counts()
    action_hist = merge_counts(
        [action_hist_all, action_hist_1_day, action_hist_1_hour],
        on="type",
        names=["All", "Last Day", "Last Hour"],
    )
    action_hist_md = action_hist.to_markdown(index=False, tablefmt="github")

    # Anony vote counts
    anony_vote_hist_all = anony_vote_df_all["type"].value_counts()
    anony_vote_df_1_day = get_anony_vote_df(df_1_day)
    anony_vote_hist_1_day = anony_vote_df_1_day["type"].value_counts()
    # anony_vote_df_1_hour = get_anony_vote_df(df_1_hour)
    # anony_vote_hist_1_hour = anony_vote_df_1_hour["type"].value_counts()
    anony_vote_hist = merge_counts(
        [anony_vote_hist_all, anony_vote_hist_1_day],
        on="type",
        names=["All", "Last Day"],
    )
    anony_vote_hist_md = anony_vote_hist.to_markdown(index=False, tablefmt="github")

    # Last 24 hours
    chat_1_day = df_1_day[df_1_day["type"] == "chat"]
    num_chats_last_24_hours = []
    base = df_1_day["tstamp"].min()
    for i in range(24, 0, -1):
        left = base + (i - 1) * 3600
        right = base + i * 3600
        num = ((chat_1_day["tstamp"] >= left) & (chat_1_day["tstamp"] < right)).sum()
        num_chats_last_24_hours.append(num)
    times = [
        datetime.datetime.fromtimestamp(
            base + i * 3600, tz=timezone("US/Pacific")
        ).strftime("%Y-%m-%d %H:%M:%S %Z")
        for i in range(24, 0, -1)
    ]
    last_24_hours_df = pd.DataFrame({"time": times, "value": num_chats_last_24_hours})
    last_24_hours_md = last_24_hours_df.to_markdown(index=False, tablefmt="github")

    # Last update datetime
    last_updated_tstamp = now_t
    last_updated_datetime = datetime.datetime.fromtimestamp(
        last_updated_tstamp, tz=timezone("US/Pacific")
    ).strftime("%Y-%m-%d %H:%M:%S %Z")

    # code.interact(local=locals())

    return {
        "chat_dates_bar": chat_dates_bar,
        "model_hist_md": model_hist_md,
        "action_hist_md": action_hist_md,
        "anony_vote_hist_md": anony_vote_hist_md,
        "num_chats_last_24_hours": last_24_hours_md,
        "last_updated_datetime": last_updated_datetime,
    }


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--max-num-files", type=int)
    args = parser.parse_args()

    log_files = get_log_files(args.max_num_files)
    basic_stats = report_basic_stats(log_files)

    print(basic_stats["action_hist_md"] + "\n")
    print(basic_stats["model_hist_md"] + "\n")
    print(basic_stats["anony_vote_hist_md"] + "\n")
    print(basic_stats["num_chats_last_24_hours"] + "\n")
```
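`report_basic_stats` reads each `-conv.json` log as JSON lines, keeps four fields per record, and counts record types over three windows measured back from the newest `tstamp` (all records, the last 86400 s, the last 3600 s); anonymous votes are vote records whose first entry in `models` is blank. A dependency-free sketch of that counting logic, assuming the same record fields; the function names are hypothetical and `collections.Counter` stands in for the pandas `value_counts`/`merge_counts` pipeline:

```python
import json
from collections import Counter

VOTE_TYPES = {"leftvote", "rightvote", "tievote", "bothbad_vote"}


def parse_log_lines(lines):
    """Parse JSON-lines records, keeping the same four fields as load_log_files."""
    rows = []
    for line in lines:
        row = json.loads(line)
        rows.append({
            "type": row["type"],
            "tstamp": row["tstamp"],
            "model": row.get("model", ""),
            "models": row.get("models", ["", ""]),
        })
    return rows


def windowed_action_counts(rows):
    """Count `type` values overall, in the last day, and in the last hour.

    Windows end at the newest tstamp, as in report_basic_stats.
    """
    now_t = max(r["tstamp"] for r in rows)
    windows = {"All": None, "Last Day": 3600 * 24, "Last Hour": 3600}
    result = {}
    for name, span in windows.items():
        kept = [r for r in rows if span is None or r["tstamp"] > now_t - span]
        result[name] = Counter(r["type"] for r in kept)
    return result


def anony_votes(rows):
    # Mirrors get_anony_vote_df: vote rows whose first model name is blank.
    return [r for r in rows if r["type"] in VOTE_TYPES and r["models"][0] == ""]
```

Because the windows are anchored at the newest logged event rather than the wall clock, a log that has been idle for a while still reports a non-empty "Last Hour".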

arena_elo/elo_rating/clean_battle_data.py

ADDED

|

@@ -0,0 +1,342 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

Clean chatbot arena battle log.

|

| 3 |

+

|

| 4 |

+

Usage:

|

| 5 |

+

python3 clean_battle_data.py --mode conv_release

|

| 6 |

+

"""

|

| 7 |

+

import argparse

|

| 8 |

+

import datetime

|

| 9 |

+

import json

|

| 10 |

+

import os

|

| 11 |

+

import sys

|

| 12 |

+

from pytz import timezone

|

| 13 |

+

import time

|

| 14 |

+

import PIL

|

| 15 |

+

from PIL import ImageFile

|

| 16 |

+

ImageFile.LOAD_TRUNCATED_IMAGES = True

|

| 17 |

+

|

| 18 |

+

from tqdm import tqdm

|

| 19 |

+

|

| 20 |

+

from .basic_stats import get_log_files, NUM_SERVERS, LOG_ROOT_DIR

|

| 21 |

+

from .utils import detect_language, get_time_stamp_from_date

|

| 22 |

+

|

| 23 |

+

VOTES = ["tievote", "leftvote", "rightvote", "bothbad_vote"]

|

| 24 |

+

IDENTITY_WORDS = [

|

| 25 |

+

"vicuna",

|

| 26 |

+

"lmsys",

|

| 27 |

+

"koala",

|

| 28 |

+

"uc berkeley",

|

| 29 |

+

"open assistant",

|

| 30 |

+

"laion",

|

| 31 |

+

"chatglm",

|

| 32 |

+

"chatgpt",

|

| 33 |

+

"gpt-4",

|

| 34 |

+

"openai",

|

| 35 |

+

"anthropic",

|

| 36 |

+

"claude",

|

| 37 |

+

"bard",

|

| 38 |

+

"palm",

|

| 39 |

+

"lamda",

|

| 40 |

+

"google",

|

| 41 |

+

"llama",

|

| 42 |

+

"qianwan",

|

| 43 |

+

"alibaba",

|

| 44 |

+

"mistral",

|

| 45 |

+

"zhipu",

|

| 46 |

+

"KEG lab",

|

| 47 |

+

"01.AI",

|

| 48 |

+

"AI2",

|

| 49 |

+

"Tülu",

|

| 50 |

+

"Tulu",

|

| 51 |

+

"NETWORK ERROR DUE TO HIGH TRAFFIC. PLEASE REGENERATE OR REFRESH THIS PAGE.",

|

| 52 |

+

"$MODERATION$ YOUR INPUT VIOLATES OUR CONTENT MODERATION GUIDELINES.",

|

| 53 |

+

"API REQUEST ERROR. Please increase the number of max tokens.",

|

| 54 |

+

"**API REQUEST ERROR** Reason: The response was blocked.",

|

| 55 |

+

"**API REQUEST ERROR**",

|

| 56 |

+

]

|

| 57 |

+

|

| 58 |

+

for i in range(len(IDENTITY_WORDS)):

|

| 59 |

+

IDENTITY_WORDS[i] = IDENTITY_WORDS[i].lower()

|

| 60 |

+

|

| 61 |

+

|

| 62 |

+

def remove_html(raw):

|

| 63 |

+

if raw.startswith("<h3>"):

|

| 64 |

+

return raw[raw.find(": ") + 2 : -len("</h3>\n")]

|

| 65 |

+

if raw.startswith("### Model A: ") or raw.startswith("### Model B: "):

|

| 66 |

+

return raw[13:]

|

| 67 |

+

return raw

|

| 68 |

+

|

| 69 |

+

|

| 70 |

+

def to_openai_format(messages):

|

| 71 |

+

roles = ["user", "assistant"]

|

| 72 |

+

ret = []

|

| 73 |

+

for i, x in enumerate(messages):

|

| 74 |

+

ret.append({"role": roles[i % 2], "content": x[1]})

|

| 75 |

+

return ret

|

| 76 |

+

|

| 77 |

+

|

```python
def replace_model_name(old_name, tstamp):
    """Map legacy model names to their canonical leaderboard names."""
    replace_dict = {
        "bard": "palm-2",
        "claude-v1": "claude-1",
        "claude-instant-v1": "claude-instant-1",
        "oasst-sft-1-pythia-12b": "oasst-pythia-12b",
        "claude-2": "claude-2.0",
        "PlayGroundV2": "PlayGround V2",
        "PlayGroundV2.5": "PlayGround V2.5",
    }
    if old_name in ["gpt-4", "gpt-3.5-turbo"]:
        # 1687849200 is 2023-06-27 00:00 PDT: later votes go to the -0613
        # snapshot, earlier ones to -0314.
        if tstamp > 1687849200:
            return old_name + "-0613"
        else:
            return old_name + "-0314"
    if old_name in replace_dict:
        return replace_dict[old_name]
    return old_name
```
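The constant `1687849200` in `replace_model_name` is a Unix timestamp. A quick check with the standard library shows it is midnight Pacific Daylight Time on 27 June 2023. So GPT-4 and GPT-3.5-Turbo votes cast after that moment get the `-0613` suffix, and earlier ones get `-0314`.

The `PDT` offset below is a fixed UTC-7 stand-in rather than a full time-zone database.

```python
from datetime import datetime, timedelta, timezone

CUTOFF = 1687849200  # threshold used in replace_model_name
PDT = timezone(timedelta(hours=-7), "PDT")  # fixed offset, no DST rules

print(datetime.fromtimestamp(CUTOFF, tz=timezone.utc))  # 2023-06-27 07:00:00+00:00
print(datetime.fromtimestamp(CUTOFF, tz=PDT))  # 2023-06-27 00:00:00-07:00
```

The comparison is a strict `>`, so a vote stamped exactly at `1687849200` still maps to `-0314`.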

```python
def read_file(filename):
    """Read one JSONL log file and keep only vote records (types listed in VOTES)."""
    data = []
    for retry in range(5):
        try:
            with open(filename) as f:
                for line in f:
                    row = json.loads(line)
                    if row["type"] in VOTES:
                        data.append(row)
            break
        except FileNotFoundError:
            # The log file may not be synced yet; wait and retry.
            time.sleep(2)
        except json.JSONDecodeError:
            # Report the malformed line itself (not the last good row) and fail
            # with an error instead of exiting with status 0.
            print(f"Error in reading {filename}")
            print(line)
            raise
    return data
```

```python
def read_file_parallel(log_files, num_threads=16):
    """Read all log files in parallel; `num_threads` is the number of worker processes."""
    data_all = []
    from multiprocessing import Pool

    with Pool(num_threads) as p:
        ret_all = list(tqdm(p.imap(read_file, log_files), total=len(log_files)))
        for ret in ret_all:
            data_all.extend(ret)
    return data_all
```

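```python
def load_image(image_path):
    """Open an image with PIL; return None if it is missing or unreadable."""
    try:
        image = PIL.Image.open(image_path)
        # Image.open is lazy; load() forces decoding so corrupt files fail here.
        image.load()
        return image
    except Exception:
        return None
```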

```python
def clean_battle_data(log_files, exclude_model_names, ban_ip_list=None, sanitize_ip=False, mode="simple"):
    data = read_file_parallel(log_files, num_threads=16)

    # Map raw vote types to the winner labels stored in each battle record.
    convert_type = {
        "leftvote": "model_a",
        "rightvote": "model_b",
        "tievote": "tie",
        "bothbad_vote": "tie (bothbad)",
    }

    all_models = set()
    all_ips = dict()
    ct_anony = 0
    ct_invalid = 0
    ct_leaked_identity = 0  # reported below but never incremented in this version
    ct_banned = 0
    battles = []
    for row in tqdm(data, desc="Cleaning"):
        if row["models"][0] is None or row["models"][1] is None:
            print(f"Invalid model names: {row['models']}")
            continue

        # Resolve model names
        models_public = [remove_html(row["models"][0]), remove_html(row["models"][1])]
        if "model_name" in row["states"][0]:
            models_hidden = [
                row["states"][0]["model_name"],
                row["states"][1]["model_name"],
            ]
            if models_hidden[0] is None:
                models_hidden = models_public
        else:
            models_hidden = models_public

        # Skip votes where only one side has a visible model name.
        if (models_public[0] == "" and models_public[1] != "") or (
            models_public[1] == "" and models_public[0] != ""
        ):
            ct_invalid += 1
            print(f"Invalid model names: {models_public}")
            continue

        if row["anony"]:
            anony = True
            models = models_hidden
            ct_anony += 1
        else:
            anony = False
            models = models_public
            if not models_public == models_hidden:
                print(f"Model names mismatch: {models_public} vs {models_hidden}")
                ct_invalid += 1
                continue

        def preprocess_model_name(m):
            # Map Playground display names to their internal IDs.
            if m == "Playground v2":
                return 'playground_PlayGroundV2_generation'
            if m == "Playground v2.5":
                return 'playground_PlayGroundV2.5_generation'
            return m

        models = [preprocess_model_name(m) for m in models]

        # Disabled: validate "<platform>_<model>_<task>" names and keep only <model>.
        # valid = True
        # for _model in models:
        #     print(_model)
        #     input()
        #     try:
        #         platform, model_name, task = _model.split("_")
        #     except ValueError:
        #         print(f"Invalid model names: {_model}")
        #         valid = False
        #         break
        #     if not (platform.lower() in ["playground", "imagenhub", 'fal'] and (task == "generation" or task == "text2image")):
        #         valid = False
        #         break
        # if not valid:
        #     ct_invalid += 1
        #     print(f"Invalid model names: {models} for t2i_generation")
        #     continue
        # for i, _model in enumerate(models):
        #     platform, model_name, task = _model.split("_")
        #     models[i] = model_name

        models = [replace_model_name(m, row["tstamp"]) for m in models]

        # Exclude certain models
        if exclude_model_names and any(x in exclude_model_names for x in models):
            ct_invalid += 1
            continue

        if mode == "conv_release":
            # Both sides of the battle must have received the same input image.
            date = datetime.datetime.fromtimestamp(row["tstamp"], tz=timezone("US/Pacific")).strftime("%Y-%m-%d")  # e.g. 2024-02-29
            image_path_format = f"{LOG_ROOT_DIR}/{date}-convinput_images/input_image_"
            image_path_0 = image_path_format + str(row["states"][0]["conv_id"]) + ".png"
            image_path_1 = image_path_format + str(row["states"][1]["conv_id"]) + ".png"
            if not os.path.exists(image_path_0) or not os.path.exists(image_path_1):
                print(f"Image not found for {image_path_0} or {image_path_1}")
                ct_invalid += 1
                continue

            image_0 = load_image(image_path_0)
            image_1 = load_image(image_path_1)
            if image_0 is None or image_1 is None:
                print(f"Failed to load image {image_path_0} or {image_path_1}")
                ct_invalid += 1
                continue
            # Compare mode and size as well as the raw pixel bytes.
            if (
                image_0.mode != image_1.mode
                or image_0.size != image_1.size
                or image_0.tobytes() != image_1.tobytes()
            ):
                print(f"Image not the same for {image_path_0} and {image_path_1}")
                ct_invalid += 1
                continue

        # question_id = row["states"][0]["conv_id"]

        ip = row["ip"]
        if ip not in all_ips:
            all_ips[ip] = {"ip": ip, "count": 0, "sanitized_id": len(all_ips)}
        all_ips[ip]["count"] += 1
        # The "arena_user_" prefix is added once, in `judge` below.
        if sanitize_ip:
            user_id = f"{all_ips[ip]['sanitized_id']}"
        else:
            user_id = f"{all_ips[ip]['ip']}"

        if ban_ip_list is not None and ip in ban_ip_list:
            ct_banned += 1
            print(f"User {user_id} is banned")
            continue

        # Save the results
        battles.append(
            dict(
                model_a=models[0],
                model_b=models[1],
                winner=convert_type[row["type"]],
                judge=f"arena_user_{user_id}",
                anony=anony,
                tstamp=row["tstamp"],
            )
        )

        all_models.update(models_hidden)

    if not battles:
        raise ValueError("No valid battles found in the given log files.")
    battles.sort(key=lambda x: x["tstamp"])
    last_updated_tstamp = battles[-1]["tstamp"]

    last_updated_datetime = datetime.datetime.fromtimestamp(
        last_updated_tstamp, tz=timezone("US/Pacific")
    ).strftime("%Y-%m-%d %H:%M:%S %Z")

    print(
        f"#votes: {len(data)}, #invalid votes: {ct_invalid}, "
        f"#leaked_identity: {ct_leaked_identity}, "
        f"#banned: {ct_banned}"
    )
    print(f"#battles: {len(battles)}, #anony: {ct_anony}")
    print(f"#models: {len(all_models)}, {all_models}")
    print(f"last-updated: {last_updated_datetime}")

    if ban_ip_list is not None:
        for ban_ip in ban_ip_list:
            if ban_ip in all_ips:
                del all_ips[ban_ip]
    print("Top 30 IPs:")
    print(sorted(all_ips.values(), key=lambda x: x["count"], reverse=True)[:30])
    return battles
```

```python
if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--max-num-files", type=int)
    parser.add_argument(
        "--mode", type=str, choices=["simple", "conv_release"], default="simple"
    )
    parser.add_argument("--exclude-model-names", type=str, nargs="+")
    parser.add_argument("--ban-ip-file", type=str)
    parser.add_argument("--sanitize-ip", action="store_true", default=False)
    args = parser.parse_args()

    log_files = get_log_files(args.max_num_files)
    ban_ip_list = None
    if args.ban_ip_file:
        with open(args.ban_ip_file) as f:
            ban_ip_list = json.load(f)

    battles = clean_battle_data(
        log_files, args.exclude_model_names or [], ban_ip_list, args.sanitize_ip, args.mode,
    )
    last_updated_tstamp = battles[-1]["tstamp"]
    cutoff_date = datetime.datetime.fromtimestamp(
        last_updated_tstamp, tz=timezone("US/Pacific")
    ).strftime("%Y%m%d")

    if args.mode == "simple":
        # Keep only vote metadata; drop conversation contents.
        for x in battles:
            for key in [
                "conversation_a",
                "conversation_b",
                "question_id",
            ]:
                if key in x:
                    del x[key]
        print("Samples:")
        for i in range(min(4, len(battles))):
            print(battles[i])
        output = f"clean_battle_{cutoff_date}.json"
    elif args.mode == "conv_release":
        output = f"clean_battle_conv_{cutoff_date}.json"

    with open(output, "w") as fout:
        json.dump(battles, fout, indent=2, ensure_ascii=False)
    print(f"Wrote cleaned data to {output}")

    with open("cut_off_date.txt", "w") as fout:
        fout.write(cutoff_date)
```
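Each record written by this script carries `model_a`, `model_b` and a `winner` label (`model_a`, `model_b`, `tie` or `tie (bothbad)`). That is exactly the input an Elo-style rating needs.

The body of `elo_analysis.py` is not reproduced here. The sketch below is a minimal online Elo update under assumed parameters (K=4, scale 400, base 10, initial rating 1000), and it counts both tie labels as a draw. It illustrates the idea and is not that module's implementation.

```python
from collections import defaultdict


def compute_online_elo(battles, k=4.0, scale=400.0, base=10.0, init_rating=1000.0):
    """Sequential Elo over dicts with model_a / model_b / winner keys (assumed parameters)."""
    rating = defaultdict(lambda: init_rating)
    # Score of model_a for each winner label; both tie labels count as a draw.
    score_a = {"model_a": 1.0, "model_b": 0.0, "tie": 0.5, "tie (bothbad)": 0.5}
    for b in battles:
        a, m = b["model_a"], b["model_b"]
        # Expected score of model_a given the current ratings.
        expected_a = 1.0 / (1.0 + base ** ((rating[m] - rating[a]) / scale))
        delta = k * (score_a[b["winner"]] - expected_a)
        rating[a] += delta
        rating[m] -= delta  # zero-sum: model_b loses what model_a gains
    return dict(rating)


battles = [
    {"model_a": "x", "model_b": "y", "winner": "model_a"},
    {"model_a": "y", "model_b": "x", "winner": "tie"},
]
print(compute_online_elo(battles))
```

The update is order-dependent. Its result therefore relies on `clean_battle_data` sorting the battles by `tstamp` before returning them.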

arena_elo/elo_rating/elo_analysis.py

ADDED


@@ -0,0 +1,395 @@
