Upload 32 files

- app.py +69 -0

- classes/.ipynb_checkpoints/TissueIdentifier-checkpoint.py +33 -0

- classes/.ipynb_checkpoints/TissueIdentifierModel-checkpoint.py +35 -0

- classes/.ipynb_checkpoints/binary_neural_classifier-checkpoint.py +16 -0

- classes/.ipynb_checkpoints/genomic_plip_model-checkpoint.py +18 -0

- classes/.ipynb_checkpoints/slide_processor-checkpoint.py +64 -0

- classes/__pycache__/PlipDataProcess.cpython-310.pyc +0 -0

- classes/__pycache__/PlipDataProcess.cpython-38.pyc +0 -0

- classes/__pycache__/genomic_plip_model.cpython-310.pyc +0 -0

- classes/binary_neural_classifier.py +16 -0

- classes/genomic_plip_model.py +18 -0

- classes/slide_processor.py +175 -0

- genomic_plip/README.md +3 -0

- genomic_plip/config.json +26 -0

- genomic_plip/preprocessor_config.json +28 -0

- genomic_plip_model/.gitattributes +35 -0

- genomic_plip_model/README.md +3 -0

- genomic_plip_model/config.json +26 -0

- genomic_plip_model/preprocessor_config.json +28 -0

- genomic_plip_model/pytorch_model.bin +3 -0

- main.py +32 -0

- models/classifier.pth +3 -0

- requirements.txt +14 -0

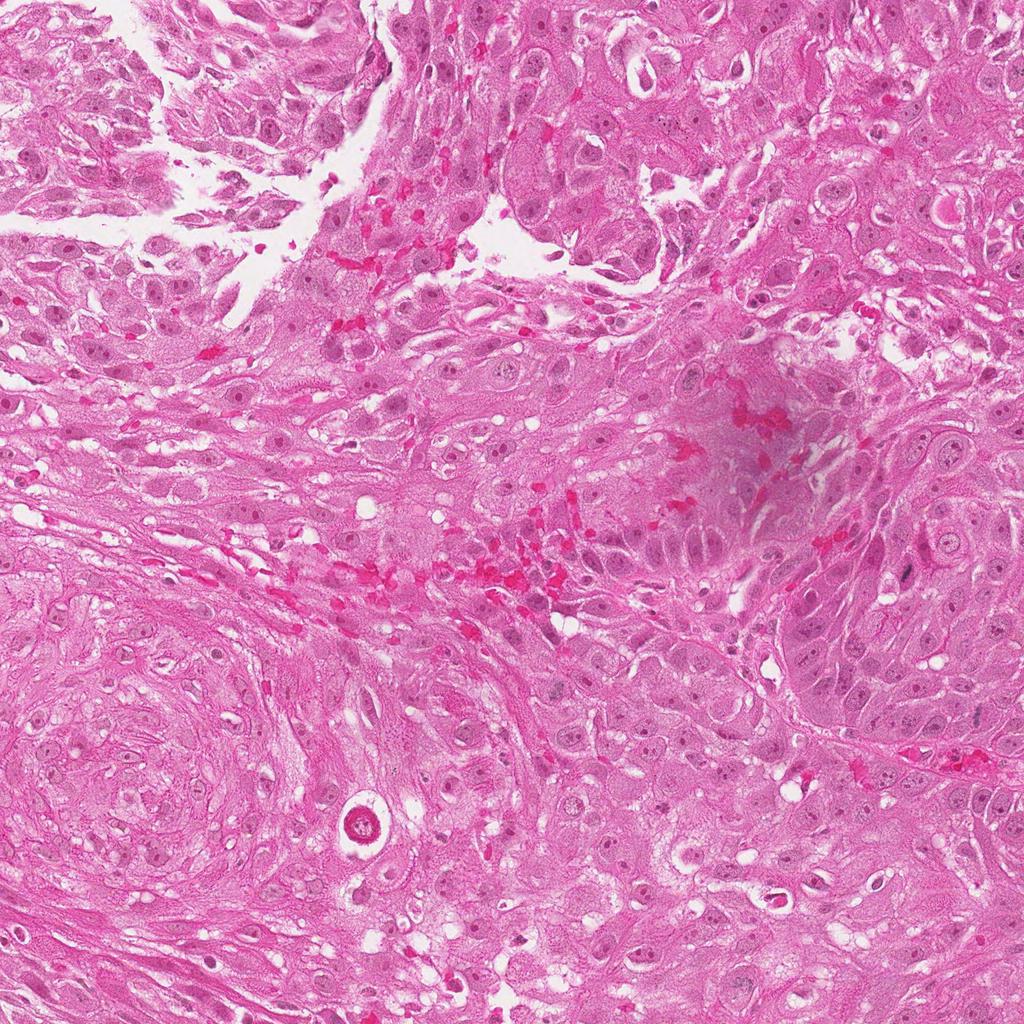

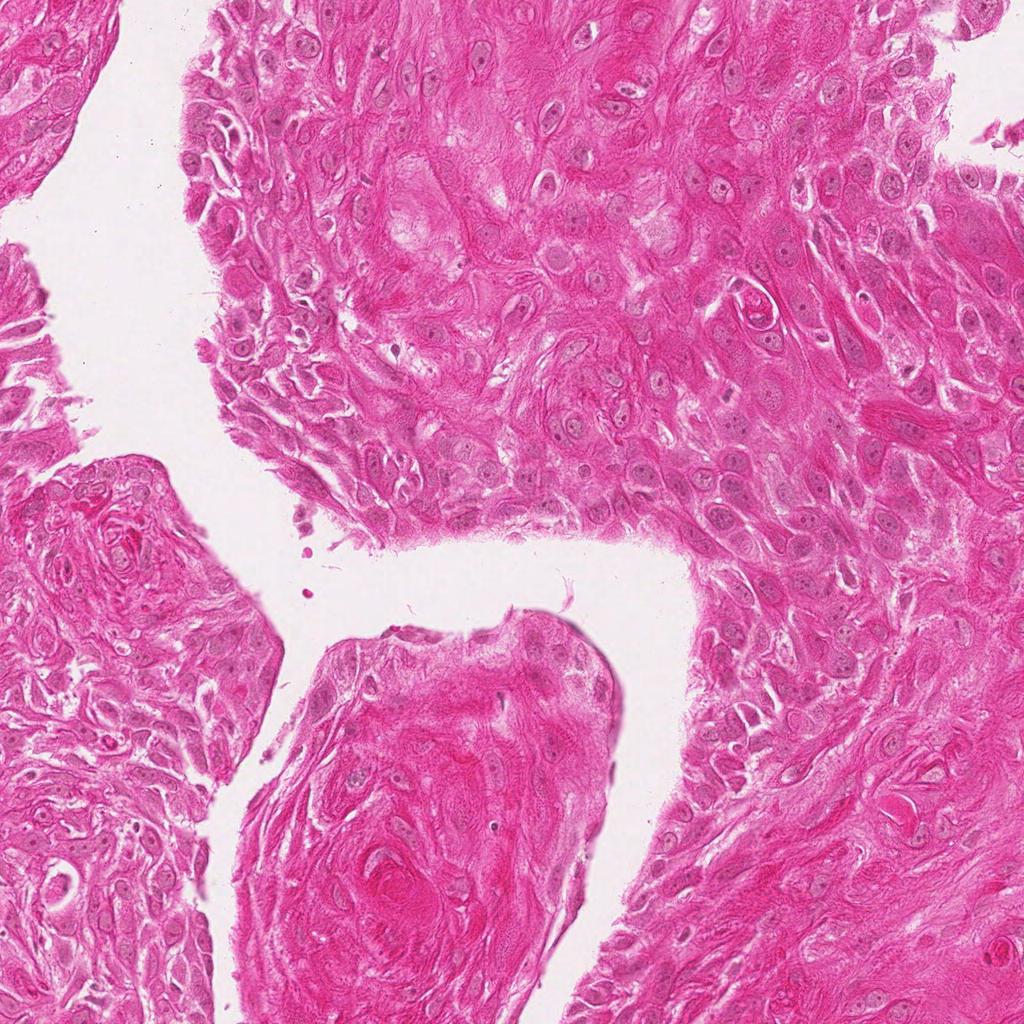

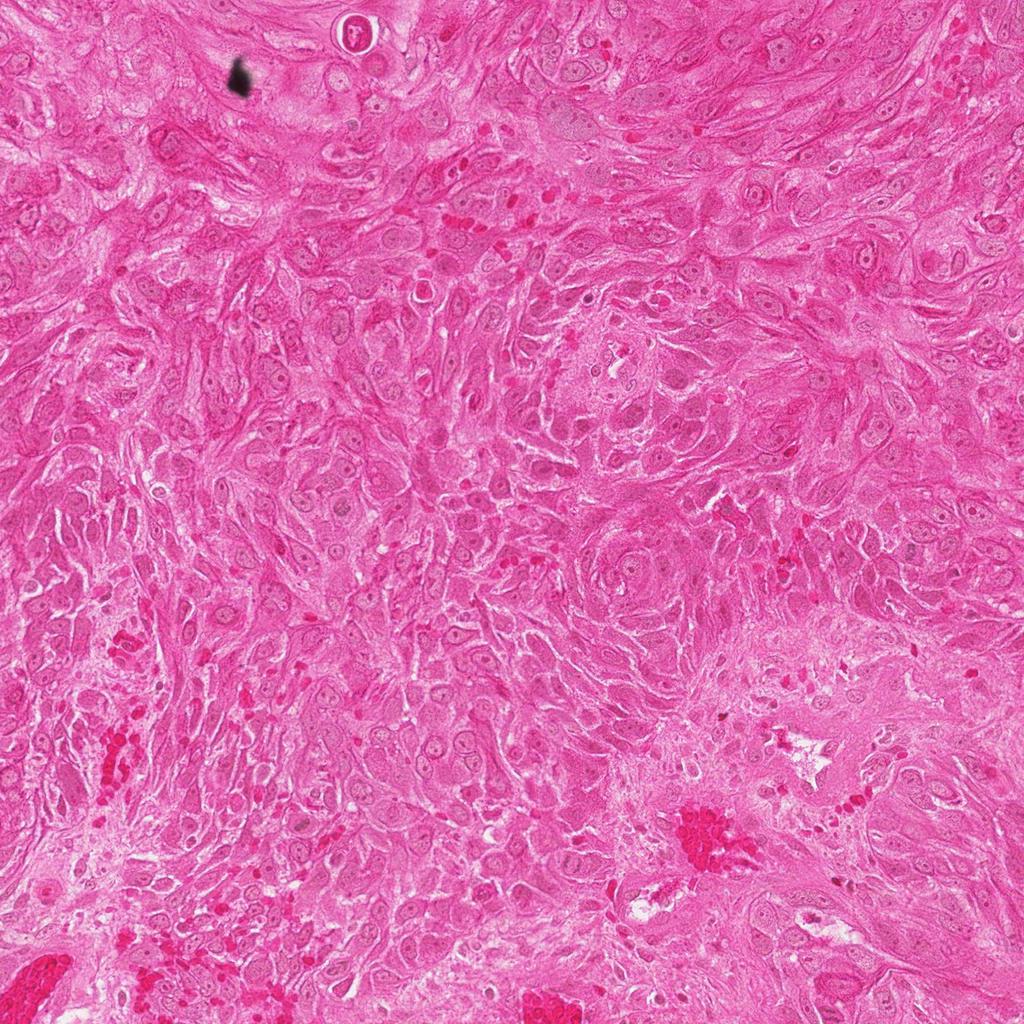

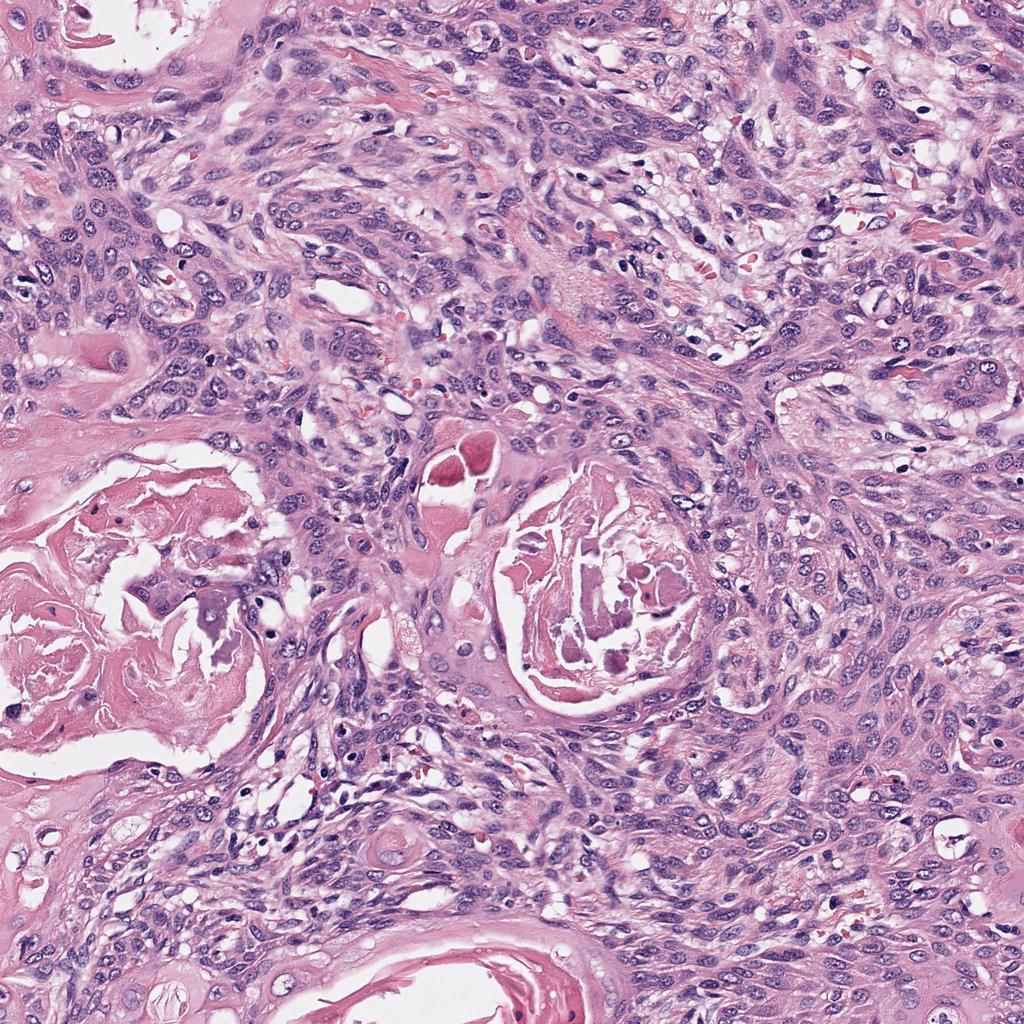

- sample_tiles/TCGA-BA-6872_52_9.jpeg +0 -0

- sample_tiles/TCGA-BA-6872_56_14.jpeg +0 -0

- sample_tiles/TCGA-BA-6872_59_12.jpeg +0 -0

- sample_tiles/TCGA-BA-6872_59_17.jpeg +0 -0

- sample_tiles/TCGA-UF-A718_13_10.jpeg +0 -0

- sample_tiles/TCGA-UF-A718_13_11.jpeg +0 -0

- sample_tiles/TCGA-UF-A718_13_19.jpeg +0 -0

- sample_tiles/TCGA-UF-A718_23_19.jpeg +0 -0

- sample_tiles/TCGA-UF-A718_23_25.jpeg +0 -0

app.py

ADDED

|

@@ -0,0 +1,69 @@

| 1 |

+

import gradio as gr

|

| 2 |

+

import torch

|

| 3 |

+

<<<<<<< HEAD

|

| 4 |

+

=======

|

| 5 |

+

from PIL import Image

|

| 6 |

+

>>>>>>> new-branch

|

| 7 |

+

from main import load_and_preprocess_image, genomic_plip_predictions, classify_tiles

|

| 8 |

+

|

| 9 |

+

def run_load_and_preprocess_image(image_path, clip_processor_path):

|

| 10 |

+

image_tensor = load_and_preprocess_image(image_path, clip_processor_path)

|

| 11 |

+

return image_tensor

|

| 12 |

+

|

| 13 |

+

def run_genomic_plip_predictions(image_tensor, model_path):

|

| 14 |

+

pred_data = genomic_plip_predictions(image_tensor, model_path)

|

| 15 |

+

return pred_data

|

| 16 |

+

|

| 17 |

+

def run_classify_tiles(pred_data, model_path):

|

| 18 |

+

output = classify_tiles(pred_data, model_path)

|

| 19 |

+

return output

|

| 20 |

+

|

| 21 |

+

<<<<<<< HEAD

|

| 22 |

+

with gr.Blocks() as demo:

|

| 23 |

+

image_file = gr.File(label="Upload Image File")

|

| 24 |

+

=======

|

| 25 |

+

example_files = list(Path("sample_tiles").glob("*.jpeg"))

|

| 26 |

+

|

| 27 |

+

with gr.Blocks() as demo:

|

| 28 |

+

gr.Markdown("## Cancer Risk Prediction from Tissue Slide")

|

| 29 |

+

|

| 30 |

+

image_file = gr.Image(type="filepath", label="Upload Image File")

|

| 31 |

+

>>>>>>> new-branch

|

| 32 |

+

clip_processor_path = gr.Textbox(label="CLIP Processor Path", value="./genomic_plip_model")

|

| 33 |

+

genomic_plip_model_path = gr.Textbox(label="Genomic PLIP Model Path", value="./genomic_plip_model")

|

| 34 |

+

classifier_model_path = gr.Textbox(label="Classifier Model Path", value="./models/classifier.pth")

|

| 35 |

+

|

| 36 |

+

image_tensor_output = gr.Variable()

|

| 37 |

+

pred_data_output = gr.Variable()

|

| 38 |

+

result_output = gr.Textbox(label="Result")

|

| 39 |

+

|

| 40 |

+

preprocess_button = gr.Button("Preprocess Image")

|

| 41 |

+

predict_button = gr.Button("Predict with Genomic PLIP")

|

| 42 |

+

classify_button = gr.Button("Classify Tiles")

|

| 43 |

+

|

| 44 |

+

preprocess_button.click(run_load_and_preprocess_image, inputs=[image_file, clip_processor_path], outputs=image_tensor_output)

|

| 45 |

+

predict_button.click(run_genomic_plip_predictions, inputs=[image_tensor_output, genomic_plip_model_path], outputs=pred_data_output)

|

| 46 |

+

classify_button.click(run_classify_tiles, inputs=[pred_data_output, classifier_model_path], outputs=result_output)

|

| 47 |

+

|

| 48 |

+

gr.Markdown("## Step by Step Workflow")

|

| 49 |

+

with gr.Row():

|

| 50 |

+

preprocess_status = gr.Checkbox(label="Preprocessed Image")

|

| 51 |

+

predict_status = gr.Checkbox(label="Predicted with Genomic PLIP")

|

| 52 |

+

classify_status = gr.Checkbox(label="Classified Tiles")

|

| 53 |

+

|

| 54 |

+

def update_status(status, result):

|

| 55 |

+

return status, result is not None

|

| 56 |

+

|

| 57 |

+

preprocess_button.click(update_status, inputs=[preprocess_status, image_tensor_output], outputs=preprocess_status)

|

| 58 |

+

predict_button.click(update_status, inputs=[predict_status, pred_data_output], outputs=predict_status)

|

| 59 |

+

classify_button.click(update_status, inputs=[classify_status, result_output], outputs=classify_status)

|

| 60 |

+

|

| 61 |

+

<<<<<<< HEAD

|

| 62 |

+

demo.launch()

|

| 63 |

+

=======

|

| 64 |

+

gr.Markdown("## Example Images")

|

| 65 |

+

gr.Examples(example_files, inputs=image_file)

|

| 66 |

+

|

| 67 |

+

demo.launch()

|

| 68 |

+

|

| 69 |

+

>>>>>>> new-branch

|
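As added, app.py still contains unresolved Git conflict markers (`<<<<<<< HEAD`, `=======`, `>>>>>>> new-branch`), and the `new-branch` side calls `Path(...)` without a visible `from pathlib import Path`, so the file will not import as shown. A minimal sketch of how such leftovers could be caught before committing follows; `find_conflict_markers` is a hypothetical helper, not part of this repository.

```python
import re

# Git writes conflict markers at the start of a line: seven '<' or '>'
# followed by a space and a label, or exactly seven '=' on their own.
MARKER = re.compile(r"^(<{7} |={7}$|>{7} )")

def find_conflict_markers(text):
    """Return (line_number, line) pairs for every conflict-marker line."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        if MARKER.match(line):
            hits.append((number, line))
    return hits

# Sample mirroring the first conflict region of app.py in this commit.
sample = (
    "import torch\n"
    "<<<<<<< HEAD\n"
    "=======\n"
    "from PIL import Image\n"
    ">>>>>>> new-branch\n"
)
print(find_conflict_markers(sample))
```

Running a check like this over every `.py` file in the upload would flag both app.py and classes/slide_processor.py.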

classes/.ipynb_checkpoints/TissueIdentifier-checkpoint.py

ADDED

|

@@ -0,0 +1,33 @@

| 1 |

+

import torch

|

| 2 |

+

import numpy as np

|

| 3 |

+

from TissueIdentifierModel import TissueIdentifierModel

|

| 4 |

+

|

| 5 |

+

class TissueIdentifier:

|

| 6 |

+

def __init__(self, model_path: str, img_processor, threshold: float = 0.95, device='cpu'):

|

| 7 |

+

self.model = self.load_model(model_path)

|

| 8 |

+

self.threshold = threshold

|

| 9 |

+

self.device = device

|

| 10 |

+

self.img_processor = img_processor

|

| 11 |

+

self.model.to(self.device)

|

| 12 |

+

|

| 13 |

+

def load_model(self, model_path):

|

| 14 |

+

model = TissueIdentifierModel()

|

| 15 |

+

model.load_state_dict(torch.load(model_path, map_location=torch.device('cpu')))

|

| 16 |

+

model.eval()

|

| 17 |

+

return model

|

| 18 |

+

|

| 19 |

+

def process_and_identify(self, data_dict): # Added 'self' and 'data_dict' as parameters

|

| 20 |

+

inputs = self.img_processor(images=list(data_dict.values()), return_tensors="pt")

|

| 21 |

+

x_info = {key: tensor for key, tensor in zip(data_dict.keys(), inputs['pixel_values'])}

|

| 22 |

+

|

| 23 |

+

images_tensor = inputs['pixel_values'].to(self.device)

|

| 24 |

+

with torch.no_grad():

|

| 25 |

+

outputs = self.model(images_tensor).reshape(-1)

|

| 26 |

+

predictions = outputs.cpu().numpy()

|

| 27 |

+

|

| 28 |

+

updated_dict = {key: x_info[key] for key, pred in zip(x_info.keys(), predictions) if pred > self.threshold}

|

| 29 |

+

return updated_dict

|

| 30 |

+

|

| 31 |

+

def save_tissue_tensor(self,tissue_tiles,name=None,save_loc=None):

|

| 32 |

+

tissue_tensor = {"{}_{}".format(str(key[0]),str(key[1])): tensor for key, tensor in tissue_tiles.items()}

|

| 33 |

+

save_file(tissue_tensor, "{}/{}.safetensors".format(save_loc,name))

|
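The core of `process_and_identify` is a threshold filter: a tile is kept only when the model's score is strictly greater than `threshold` (default 0.95). The sketch below isolates that step in plain Python so it runs without a model; `filter_by_threshold` and the sample scores are illustrative assumptions, not code from the repository.

```python
def filter_by_threshold(tiles, scores, threshold=0.95):
    """Keep tiles whose score is strictly greater than the threshold.

    `tiles` maps a (col, row) key to tile data; `scores` lists one score
    per tile in the same order as the dictionary's keys.
    """
    return {key: tiles[key] for key, score in zip(tiles, scores) if score > threshold}

# Hypothetical tiles and scores, for illustration only.
tiles = {(0, 0): "tile-a", (0, 1): "tile-b", (1, 0): "tile-c"}
scores = [0.99, 0.40, 0.95]
print(filter_by_threshold(tiles, scores))
```

A score exactly equal to the threshold is dropped, matching the `pred > self.threshold` comparison in the diff. Note also that `save_tissue_tensor` calls `save_file` without an import in the file as shown; it presumably comes from `safetensors.torch`.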

classes/.ipynb_checkpoints/TissueIdentifierModel-checkpoint.py

ADDED

|

@@ -0,0 +1,35 @@

| 1 |

+

import torch

|

| 2 |

+

import torch.nn as nn

|

| 3 |

+

import torch.nn.functional as F

|

| 4 |

+

|

| 5 |

+

class TissueIdentifierModel(nn.Module):

|

| 6 |

+

def __init__(self):

|

| 7 |

+

super(TissueIdentifierModel, self).__init__()

|

| 8 |

+

self.conv1 = nn.Sequential(

|

| 9 |

+

nn.Conv2d(3, 16, kernel_size=3,stride=1,padding=1),

|

| 10 |

+

nn.ReLU(),

|

| 11 |

+

nn.MaxPool2d(2, 2) )

|

| 12 |

+

|

| 13 |

+

self.conv2 = nn.Sequential(

|

| 14 |

+

nn.Conv2d(16, 32, kernel_size=3,stride=1,padding=1),

|

| 15 |

+

nn.ReLU(),

|

| 16 |

+

nn.MaxPool2d(2, 2))

|

| 17 |

+

|

| 18 |

+

self.conv3 = nn.Sequential(

|

| 19 |

+

nn.Conv2d(32, 64, kernel_size=3, stride=1,padding=1),

|

| 20 |

+

nn.ReLU(),

|

| 21 |

+

nn.MaxPool2d(2, 2))

|

| 22 |

+

|

| 23 |

+

self.classifier = nn.Sequential(

|

| 24 |

+

# nn.Flatten(),

|

| 25 |

+

nn.Linear(64 * 28 * 28, 1),

|

| 26 |

+

|

| 27 |

+

nn.Sigmoid())

|

| 28 |

+

|

| 29 |

+

def forward(self, x):

|

| 30 |

+

x = self.conv1(x)

|

| 31 |

+

x = self.conv2(x)

|

| 32 |

+

x = self.conv3(x)

|

| 33 |

+

x = x.view(x.size(0), -1)

|

| 34 |

+

x = self.classifier(x)

|

| 35 |

+

return x

|
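The `nn.Linear(64 * 28 * 28, 1)` layer only matches the flattened feature map if the input is 224 x 224, which is the crop size in the preprocessor config included in this commit. The arithmetic can be checked with the standard convolution output-size formula; the helper names below are assumptions for illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1

def feature_side(input_side=224, blocks=3):
    """Side length after `blocks` rounds of 3x3 conv (padding 1) + 2x2 max pool."""
    side = input_side
    for _ in range(blocks):
        side = conv_out(side, kernel=3, stride=1, padding=1)  # conv keeps the size
        side = conv_out(side, kernel=2, stride=2)             # pool halves it
    return side

side = feature_side()
print(side, 64 * side * side)
```

Any other input resolution would change the flattened length and make the linear layer fail with a shape mismatch.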

classes/.ipynb_checkpoints/binary_neural_classifier-checkpoint.py

ADDED

|

@@ -0,0 +1,16 @@

| 1 |

+

import torch.nn as nn

|

| 2 |

+

import torch

|

| 3 |

+

class SimpleNN(nn.Module):

|

| 4 |

+

def __init__(self):

|

| 5 |

+

super(SimpleNN, self).__init__()

|

| 6 |

+

self.fc1 = nn.Linear(512, 512)

|

| 7 |

+

self.fc2 = nn.Linear(512, 256)

|

| 8 |

+

self.fc3 = nn.Linear(256, 1)

|

| 9 |

+

|

| 10 |

+

def forward(self, x):

|

| 11 |

+

x = torch.relu(self.fc1(x))

|

| 12 |

+

x = torch.relu(self.fc2(x))

|

| 13 |

+

x = torch.sigmoid(self.fc3(x))

|

| 14 |

+

return x

|

| 15 |

+

|

| 16 |

+

|

classes/.ipynb_checkpoints/genomic_plip_model-checkpoint.py

ADDED

|

@@ -0,0 +1,18 @@

| 1 |

+

import torch

|

| 2 |

+

from transformers import PreTrainedModel, CLIPConfig, CLIPModel

|

| 3 |

+

|

| 4 |

+

class GenomicPLIPModel(PreTrainedModel):

|

| 5 |

+

config_class = CLIPConfig

|

| 6 |

+

|

| 7 |

+

def __init__(self, config):

|

| 8 |

+

super(GenomicPLIPModel, self).__init__(config)

|

| 9 |

+

vision_config = CLIPModel.config_class.from_pretrained('openai/clip-vit-base-patch32')

|

| 10 |

+

self.vision_model = CLIPModel(vision_config).vision_model

|

| 11 |

+

self.vision_projection = torch.nn.Linear(768, 512)

|

| 12 |

+

|

| 13 |

+

def forward(self, pixel_values):

|

| 14 |

+

vision_output = self.vision_model(pixel_values)

|

| 15 |

+

pooled_output = vision_output.pooler_output

|

| 16 |

+

vision_features = self.vision_projection(pooled_output)

|

| 17 |

+

|

| 18 |

+

return vision_features

|

classes/.ipynb_checkpoints/slide_processor-checkpoint.py

ADDED

|

@@ -0,0 +1,64 @@

| 1 |

+

import numpy as np

|

| 2 |

+

from concurrent.futures import ThreadPoolExecutor

|

| 3 |

+

import pandas as pd

|

| 4 |

+

import matplotlib.pyplot as plt

|

| 5 |

+

import os

|

| 6 |

+

import openslide

|

| 7 |

+

from openslide import OpenSlideError

|

| 8 |

+

from openslide.deepzoom import DeepZoomGenerator

|

| 9 |

+

from concurrent.futures import ProcessPoolExecutor

|

| 10 |

+

import math

|

| 11 |

+

import tqdm

|

| 12 |

+

|

| 13 |

+

class SlideProcessor:

|

| 14 |

+

def __init__(self, tile_size=1024, overlap=0, tissue_threshold=0.65, max_workers=60,CINFO=None):

|

| 15 |

+

self.tile_size = tile_size

|

| 16 |

+

self.overlap = overlap

|

| 17 |

+

self.tissue_threshold = tissue_threshold

|

| 18 |

+

self.max_workers = max_workers

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

def fetch_tile(self, tile_index, generator):

|

| 22 |

+

""" Fetch a single tile given a tile index and the tile generator. """

|

| 23 |

+

tile_size, overlap, zoom_level, col, row = tile_index

|

| 24 |

+

tile = np.asarray(generator.get_tile(zoom_level, (col, row)))

|

| 25 |

+

return (col, row), tile

|

| 26 |

+

|

| 27 |

+

def get_tiles(self, filtered_tiles, tile_indices, generator):

|

| 28 |

+

""" Retrieve tiles in parallel and return them as a dictionary with (col, row) keys. """

|

| 29 |

+

tiles = {}

|

| 30 |

+

with ThreadPoolExecutor(max_workers=self.max_workers) as executor:

|

| 31 |

+

futures = [executor.submit(self.fetch_tile, ti, generator) for ti in tile_indices if (ti[3], ti[4]) in filtered_tiles]

|

| 32 |

+

|

| 33 |

+

for future in futures:

|

| 34 |

+

key, tile = future.result()

|

| 35 |

+

if tile.shape == (self.tile_size,self.tile_size,3):

|

| 36 |

+

tiles[key] = tile

|

| 37 |

+

|

| 38 |

+

return tiles

|

| 39 |

+

|

| 40 |

+

def process_one_slide(self, file_loc):

|

| 41 |

+

f2p = file_loc

|

| 42 |

+

|

| 43 |

+

img1 = openslide.open_slide(f2p)

|

| 44 |

+

generator = DeepZoomGenerator(img1, tile_size=self.tile_size, overlap=self.overlap, limit_bounds=True)

|

| 45 |

+

highest_zoom_level = generator.level_count - 1

|

| 46 |

+

|

| 47 |

+

try:

|

| 48 |

+

mag = int(img1.properties[openslide.PROPERTY_NAME_OBJECTIVE_POWER])

|

| 49 |

+

offset = math.floor((mag / 20) / 2)

|

| 50 |

+

level = highest_zoom_level - offset

|

| 51 |

+

except (ValueError, KeyError):

|

| 52 |

+

level = highest_zoom_level

|

| 53 |

+

|

| 54 |

+

zoom_level = level

|

| 55 |

+

cols, rows = generator.level_tiles[zoom_level]

|

| 56 |

+

tile_indices = [(self.tile_size, self.overlap, zoom_level, col, row) for col in range(cols) for row in range(rows)]

|

| 57 |

+

|

| 58 |

+

x_info = tile_indices.copy()

|

| 59 |

+

|

| 60 |

+

all_x_info = [x[-2:] for x in x_info ]

|

| 61 |

+

|

| 62 |

+

tiles = self.get_tiles(all_x_info, tile_indices, generator)

|

| 63 |

+

|

| 64 |

+

return tiles

|
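`process_one_slide` picks a Deep Zoom level from the slide's objective power: each level below the highest halves the resolution, so a 40x slide is read one level down while a 20x slide is read at full resolution. The sketch below reproduces only that arithmetic without OpenSlide; `pick_zoom_level` is a hypothetical helper, and it treats a missing value as `None` where the original catches a `KeyError` from the properties lookup.

```python
import math

def pick_zoom_level(level_count, objective_power=None):
    """Choose the Deep Zoom level using the offset rule from process_one_slide."""
    highest = level_count - 1
    try:
        mag = int(objective_power)
    except (TypeError, ValueError):
        # Missing or unparsable magnification: fall back to the highest level.
        return highest
    offset = math.floor((mag / 20) / 2)
    return highest - offset

for power in ("40", "20", None):
    print(power, pick_zoom_level(18, power))
```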

classes/__pycache__/PlipDataProcess.cpython-310.pyc

ADDED

|

Binary file (2.7 kB). View file

|

|

|

classes/__pycache__/PlipDataProcess.cpython-38.pyc

ADDED

|

Binary file (2.67 kB). View file

|

|

|

classes/__pycache__/genomic_plip_model.cpython-310.pyc

ADDED

|

Binary file (1.04 kB). View file

|

|

|

classes/binary_neural_classifier.py

ADDED

|

@@ -0,0 +1,16 @@

| 1 |

+

import torch.nn as nn

|

| 2 |

+

import torch

|

| 3 |

+

class SimpleNN(nn.Module):

|

| 4 |

+

def __init__(self):

|

| 5 |

+

super(SimpleNN, self).__init__()

|

| 6 |

+

self.fc1 = nn.Linear(512, 512)

|

| 7 |

+

self.fc2 = nn.Linear(512, 256)

|

| 8 |

+

self.fc3 = nn.Linear(256, 1)

|

| 9 |

+

|

| 10 |

+

def forward(self, x):

|

| 11 |

+

x = torch.relu(self.fc1(x))

|

| 12 |

+

x = torch.relu(self.fc2(x))

|

| 13 |

+

x = torch.sigmoid(self.fc3(x))

|

| 14 |

+

return x

|

| 15 |

+

|

| 16 |

+

|
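`SimpleNN` is a three-layer perceptron over 512-dimensional inputs, which matches the output width of `vision_projection` in the Genomic PLIP model. As a quick sanity check on the size of models/classifier.pth, the number of trainable parameters can be counted by hand; the helper below is an illustrative assumption, not repository code.

```python
def linear_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

# (in_features, out_features) for fc1, fc2 and fc3 as defined in the diff.
layers = [(512, 512), (512, 256), (256, 1)]
total = sum(linear_params(n_in, n_out) for n_in, n_out in layers)
print(total)
```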

classes/genomic_plip_model.py

ADDED

|

@@ -0,0 +1,18 @@

| 1 |

+

import torch

|

| 2 |

+

from transformers import PreTrainedModel, CLIPConfig, CLIPModel

|

| 3 |

+

|

| 4 |

+

class GenomicPLIPModel(PreTrainedModel):

|

| 5 |

+

config_class = CLIPConfig

|

| 6 |

+

|

| 7 |

+

def __init__(self, config):

|

| 8 |

+

super(GenomicPLIPModel, self).__init__(config)

|

| 9 |

+

vision_config = CLIPModel.config_class.from_pretrained('openai/clip-vit-base-patch32')

|

| 10 |

+

self.vision_model = CLIPModel(vision_config).vision_model

|

| 11 |

+

self.vision_projection = torch.nn.Linear(768, 512)

|

| 12 |

+

|

| 13 |

+

def forward(self, pixel_values):

|

| 14 |

+

vision_output = self.vision_model(pixel_values)

|

| 15 |

+

pooled_output = vision_output.pooler_output

|

| 16 |

+

vision_features = self.vision_projection(pooled_output)

|

| 17 |

+

|

| 18 |

+

return vision_features

|

classes/slide_processor.py

ADDED

|

@@ -0,0 +1,175 @@

| 1 |

+

import numpy as np

|

| 2 |

+

from concurrent.futures import ThreadPoolExecutor

|

| 3 |

+

<<<<<<< HEAD

|

| 4 |

+

=======

|

| 5 |

+

<<<<<<< HEAD

|

| 6 |

+

import os

|

| 7 |

+

import openslide

|

| 8 |

+

from PIL import Image

|

| 9 |

+

from openslide import OpenSlideError

|

| 10 |

+

from openslide.deepzoom import DeepZoomGenerator

|

| 11 |

+

import math

|

| 12 |

+

import random

|

| 13 |

+

from scipy.ndimage.morphology import binary_fill_holes

|

| 14 |

+

from skimage.color import rgb2gray

|

| 15 |

+

from skimage.feature import canny

|

| 16 |

+

from skimage.morphology import binary_closing, binary_dilation, disk

|

| 17 |

+

from concurrent.futures import ProcessPoolExecutor

|

| 18 |

+

|

| 19 |

+

class SlideProcessor:

|

| 20 |

+

def __init__(self,img_processor=None, tile_size=1024, overlap=0, tissue_threshold=0.65, max_workers=30):

|

| 21 |

+

=======

|

| 22 |

+

>>>>>>> new-branch

|

| 23 |

+

#import pandas as pd

|

| 24 |

+

import matplotlib.pyplot as plt

|

| 25 |

+

import os

|

| 26 |

+

import openslide

|

| 27 |

+

from openslide import OpenSlideError

|

| 28 |

+

from openslide.deepzoom import DeepZoomGenerator

|

| 29 |

+

from concurrent.futures import ProcessPoolExecutor

|

| 30 |

+

import math

|

| 31 |

+

import tqdm

|

| 32 |

+

|

| 33 |

+

class SlideProcessor:

|

| 34 |

+

def __init__(self, tile_size=1024, overlap=0, tissue_threshold=0.65, max_workers=60,CINFO=None):

|

| 35 |

+

<<<<<<< HEAD

|

| 36 |

+

=======

|

| 37 |

+

>>>>>>> Initial commit for reconnecting to Hugging Face

|

| 38 |

+

>>>>>>> new-branch

|

| 39 |

+

self.tile_size = tile_size

|

| 40 |

+

self.overlap = overlap

|

| 41 |

+

self.tissue_threshold = tissue_threshold

|

| 42 |

+

self.max_workers = max_workers

|

| 43 |

+

<<<<<<< HEAD

|

| 44 |

+

=======

|

| 45 |

+

<<<<<<< HEAD

|

| 46 |

+

self.img_processor = img_processor

|

| 47 |

+

|

| 48 |

+

def optical_density(self, tile):

|

| 49 |

+

tile = tile.astype(np.float64)

|

| 50 |

+

od = -np.log((tile+1)/240)

|

| 51 |

+

return od

|

| 52 |

+

|

| 53 |

+

def keep_tile(self, tile, tissue_threshold=None):

|

| 54 |

+

if tissue_threshold is None:

|

| 55 |

+

tissue_threshold = self.tissue_threshold

|

| 56 |

+

|

| 57 |

+

if tile.shape[0:2] == (self.tile_size, self.tile_size):

|

| 58 |

+

tile_orig = tile

|

| 59 |

+

tile = rgb2gray(tile)

|

| 60 |

+

tile = 1 - tile

|

| 61 |

+

tile = canny(tile)

|

| 62 |

+

tile = binary_closing(tile, disk(10))

|

| 63 |

+

tile = binary_dilation(tile, disk(10))

|

| 64 |

+

tile = binary_fill_holes(tile)

|

| 65 |

+

percentage = tile.mean()

|

| 66 |

+

|

| 67 |

+

check1 = percentage >= tissue_threshold

|

| 68 |

+

|

| 69 |

+

tile = self.optical_density(tile_orig)

|

| 70 |

+

beta = 0.15

|

| 71 |

+

tile = np.min(tile, axis=2) >= beta

|

| 72 |

+

tile = binary_closing(tile, disk(2))

|

| 73 |

+

tile = binary_dilation(tile, disk(2))

|

| 74 |

+

tile = binary_fill_holes(tile)

|

| 75 |

+

percentage = tile.mean()

|

| 76 |

+

|

| 77 |

+

check2 = percentage >= tissue_threshold

|

| 78 |

+

|

| 79 |

+

return check1 and check2

|

| 80 |

+

else:

|

| 81 |

+

return False

|

| 82 |

+

|

| 83 |

+

def filter_tiles(self, tile_indices, generator):

|

| 84 |

+

def process_tile(tile_index):

|

| 85 |

+

tile_size, overlap, zoom_level, col, row = tile_index

|

| 86 |

+

tile = np.asarray(generator.get_tile(zoom_level, (col, row)))

|

| 87 |

+

if self.keep_tile(tile, self.tissue_threshold):

|

| 88 |

+

return col, row

|

| 89 |

+

return None

|

| 90 |

+

|

| 91 |

+

with ThreadPoolExecutor(max_workers=self.max_workers) as executor:

|

| 92 |

+

results = executor.map(process_tile, tile_indices)

|

| 93 |

+

|

| 94 |

+

return [result for result in results if result is not None]

|

| 95 |

+

|

| 96 |

+

|

| 97 |

+

def get_tiles(self, filtered_tiles, tile_indices, generator):

|

| 98 |

+

tiles = {}

|

| 99 |

+

for col, row in filtered_tiles:

|

| 100 |

+

# Find the tile_index with matching col and row

|

| 101 |

+

tile_index = next((ti for ti in tile_indices if ti[3] == col and ti[4] == row), None)

|

| 102 |

+

if tile_index:

|

| 103 |

+

tile_size, overlap, zoom_level, col, row = tile_index

|

| 104 |

+

tile = np.asarray(generator.get_tile(zoom_level, (col, row)))

|

| 105 |

+

tiles[(col,row)] = tile

|

| 106 |

+

=======

|

| 107 |

+

>>>>>>> new-branch

|

| 108 |

+

|

| 109 |

+

|

| 110 |

+

def fetch_tile(self, tile_index, generator):

|

| 111 |

+

""" Fetch a single tile given a tile index and the tile generator. """

|

| 112 |

+

tile_size, overlap, zoom_level, col, row = tile_index

|

| 113 |

+

tile = np.asarray(generator.get_tile(zoom_level, (col, row)))

|

| 114 |

+

return (col, row), tile

|

| 115 |

+

|

| 116 |

+

def get_tiles(self, filtered_tiles, tile_indices, generator):

|

| 117 |

+

""" Retrieve tiles in parallel and return them as a dictionary with (col, row) keys. """

|

| 118 |

+

tiles = {}

|

| 119 |

+

with ThreadPoolExecutor(max_workers=self.max_workers) as executor:

|

| 120 |

+

futures = [executor.submit(self.fetch_tile, ti, generator) for ti in tile_indices if (ti[3], ti[4]) in filtered_tiles]

|

| 121 |

+

|

| 122 |

+

for future in futures:

|

| 123 |

+

key, tile = future.result()

|

| 124 |

+

if tile.shape == (self.tile_size,self.tile_size,3):

|

| 125 |

+

tiles[key] = tile

|

| 126 |

+

|

| 127 |

+

<<<<<<< HEAD

|

| 128 |

+

=======

|

| 129 |

+

>>>>>>> Initial commit for reconnecting to Hugging Face

|

| 130 |

+

>>>>>>> new-branch

|

| 131 |

+

return tiles

|

| 132 |

+

|

| 133 |

+

def process_one_slide(self, file_loc):

|

| 134 |

+

f2p = file_loc

|

| 135 |

+

|

| 136 |

+

img1 = openslide.open_slide(f2p)

|

| 137 |

+

generator = DeepZoomGenerator(img1, tile_size=self.tile_size, overlap=self.overlap, limit_bounds=True)

|

| 138 |

+

highest_zoom_level = generator.level_count - 1

|

| 139 |

+

|

| 140 |

+

try:

|

| 141 |

+

mag = int(img1.properties[openslide.PROPERTY_NAME_OBJECTIVE_POWER])

|

| 142 |

+

offset = math.floor((mag / 20) / 2)

|

| 143 |

+

level = highest_zoom_level - offset

|

| 144 |

+

except (ValueError, KeyError):

|

| 145 |

+

level = highest_zoom_level

|

| 146 |

+

|

| 147 |

+

zoom_level = level

|

| 148 |

+

cols, rows = generator.level_tiles[zoom_level]

|

| 149 |

+

tile_indices = [(self.tile_size, self.overlap, zoom_level, col, row) for col in range(cols) for row in range(rows)]

|

| 150 |

+

|

| 151 |

+

<<<<<<< HEAD

|

| 152 |

+

=======

|

| 153 |

+

<<<<<<< HEAD

|

| 154 |

+

filtered_tiles = self.filter_tiles(tile_indices, generator)

|

| 155 |

+

|

| 156 |

+

if file_loc.endswith('.svs'):

|

| 157 |

+

file = file_loc[-16:-4]

|

| 158 |

+

print(file)

|

| 159 |

+

|

| 160 |

+

tiles = self.get_tiles(filtered_tiles, tile_indices, generator)

|

| 161 |

+

|

| 162 |

+

return tiles

|

| 163 |

+

=======

|

| 164 |

+

>>>>>>> new-branch

|

| 165 |

+

x_info = tile_indices.copy()

|

| 166 |

+

|

| 167 |

+

all_x_info = [x[-2:] for x in x_info ]

|

| 168 |

+

|

| 169 |

+

tiles = self.get_tiles(all_x_info, tile_indices, generator)

|

| 170 |

+

|

| 171 |

+

return tiles

|

| 172 |

+

<<<<<<< HEAD

|

| 173 |

+

=======

|

| 174 |

+

>>>>>>> Initial commit for reconnecting to Hugging Face

|

| 175 |

+

>>>>>>> new-branch

|
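The `HEAD` side of this file filters tiles with two checks, one based on Canny edges and one based on optical density. The second can be sketched in plain Python: each channel value `v` is mapped to `-log((v + 1) / 240)`, and a pixel counts as tissue when its smallest channel density is at least `beta = 0.15`. The sketch below omits the morphological closing, dilation and hole filling the original applies afterwards, and `tissue_fraction` is a hypothetical name.

```python
import math

def optical_density(value):
    """Optical density of one 0-255 channel value, as in SlideProcessor."""
    return -math.log((value + 1) / 240)

def tissue_fraction(pixels, beta=0.15):
    """Fraction of (r, g, b) pixels whose minimum channel density is >= beta."""
    kept = sum(1 for rgb in pixels if min(optical_density(c) for c in rgb) >= beta)
    return kept / len(pixels)

# Illustrative pixels: three stained (darker) pixels and one white background pixel.
pixels = [(150, 80, 160)] * 3 + [(255, 255, 255)]
print(tissue_fraction(pixels))
```

With the default `tissue_threshold` of 0.65, a tile whose fraction reaches that value passes this check; white background has a slightly negative density and never counts.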

genomic_plip/README.md

ADDED

|

@@ -0,0 +1,3 @@

| 1 |

+

---

|

| 2 |

+

license: apache-2.0

|

| 3 |

+

---

|

genomic_plip/config.json

ADDED

|

@@ -0,0 +1,26 @@

| 1 |

+

{

|

| 2 |

+

"_name_or_path": "genomic_plip_hug/",

|

| 3 |

+

"architectures": [

|

| 4 |

+

"GenomicPLIPModel"

|

| 5 |

+

],

|

| 6 |

+

"genomic_config": {

|

| 7 |

+

"fc_layer_input": 4,

|

| 8 |

+

"fc_layer_output": 512

|

| 9 |

+

},

|

| 10 |

+

"initializer_factor": 1.0,

|

| 11 |

+

"logit_scale_init_value": 2.6592,

|

| 12 |

+

"model_type": "clip",

|

| 13 |

+

"projection_dim": 512,

|

| 14 |

+

"text_config": {

|

| 15 |

+

"bos_token_id": 0,

|

| 16 |

+

"dropout": 0.0,

|

| 17 |

+

"eos_token_id": 2,

|

| 18 |

+

"model_type": "clip_text_model"

|

| 19 |

+

},

|

| 20 |

+

"torch_dtype": "float32",

|

| 21 |

+

"transformers_version": "4.32.1",

|

| 22 |

+

"vision_config": {

|

| 23 |

+

"dropout": 0.0,

|

| 24 |

+

"model_type": "clip_vision_model"

|

| 25 |

+

}

|

| 26 |

+

}

|

genomic_plip/preprocessor_config.json

ADDED

|

@@ -0,0 +1,28 @@

| 1 |

+

{

|

| 2 |

+

"crop_size": {

|

| 3 |

+

"height": 224,

|

| 4 |

+

"width": 224

|

| 5 |

+

},

|

| 6 |

+

"do_center_crop": true,

|

| 7 |

+

"do_convert_rgb": true,

|

| 8 |

+

"do_normalize": true,

|

| 9 |

+

"do_rescale": true,

|

| 10 |

+

"do_resize": true,

|

| 11 |

+

"feature_extractor_type": "CLIPFeatureExtractor",

|

| 12 |

+

"image_mean": [

|

| 13 |

+

0.48145466,

|

| 14 |

+

0.4578275,

|

| 15 |

+

0.40821073

|

| 16 |

+

],

|

| 17 |

+

"image_processor_type": "CLIPImageProcessor",

|

| 18 |

+

"image_std": [

|

| 19 |

+

0.26862954,

|

| 20 |

+

0.26130258,

|

| 21 |

+

0.27577711

|

| 22 |

+

],

|

| 23 |

+

"resample": 3,

|

| 24 |

+

"rescale_factor": 0.00392156862745098,

|

| 25 |

+

"size": {

|

| 26 |

+

"shortest_edge": 224

|

| 27 |

+

}

|

| 28 |

+

}

|
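The `do_rescale` and `do_normalize` settings in this config amount to two per-channel steps: multiply by `rescale_factor` (1/255), then subtract `image_mean` and divide by `image_std`. A minimal sketch for a single pixel, using the values above, is given below; resizing and center cropping are left out, and `normalize_pixel` is an illustrative helper rather than the CLIP image processor's API.

```python
IMAGE_MEAN = (0.48145466, 0.4578275, 0.40821073)
IMAGE_STD = (0.26862954, 0.26130258, 0.27577711)
RESCALE_FACTOR = 0.00392156862745098  # equals 1 / 255

def normalize_pixel(rgb):
    """Rescale a 0-255 RGB pixel to [0, 1], then standardize each channel."""
    return tuple(
        (channel * RESCALE_FACTOR - mean) / std
        for channel, mean, std in zip(rgb, IMAGE_MEAN, IMAGE_STD)
    )

print(normalize_pixel((0, 0, 0)))
print(normalize_pixel((255, 255, 255)))
```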

genomic_plip_model/.gitattributes

ADDED

|

@@ -0,0 +1,35 @@

| 1 |

+

*.7z filter=lfs diff=lfs merge=lfs -text

|

| 2 |

+

*.arrow filter=lfs diff=lfs merge=lfs -text

|

| 3 |

+

*.bin filter=lfs diff=lfs merge=lfs -text

|

| 4 |

+

*.bz2 filter=lfs diff=lfs merge=lfs -text

|

| 5 |

+

*.ckpt filter=lfs diff=lfs merge=lfs -text

|

| 6 |

+

*.ftz filter=lfs diff=lfs merge=lfs -text

|

| 7 |

+

*.gz filter=lfs diff=lfs merge=lfs -text

|

| 8 |

+

*.h5 filter=lfs diff=lfs merge=lfs -text

|

| 9 |

+

*.joblib filter=lfs diff=lfs merge=lfs -text

|

| 10 |

+

*.lfs.* filter=lfs diff=lfs merge=lfs -text

|

| 11 |

+

*.mlmodel filter=lfs diff=lfs merge=lfs -text

|

| 12 |

+

*.model filter=lfs diff=lfs merge=lfs -text

|

| 13 |

+

*.msgpack filter=lfs diff=lfs merge=lfs -text

|

| 14 |

+

*.npy filter=lfs diff=lfs merge=lfs -text

|

| 15 |

+

*.npz filter=lfs diff=lfs merge=lfs -text

|

| 16 |

+

*.onnx filter=lfs diff=lfs merge=lfs -text

|

| 17 |

+

*.ot filter=lfs diff=lfs merge=lfs -text

|

| 18 |

+

*.parquet filter=lfs diff=lfs merge=lfs -text

|

| 19 |

+

*.pb filter=lfs diff=lfs merge=lfs -text

|

| 20 |

+

*.pickle filter=lfs diff=lfs merge=lfs -text

|

| 21 |

+

*.pkl filter=lfs diff=lfs merge=lfs -text

|

| 22 |

+

*.pt filter=lfs diff=lfs merge=lfs -text

|

| 23 |

+

*.pth filter=lfs diff=lfs merge=lfs -text

|

| 24 |

+

*.rar filter=lfs diff=lfs merge=lfs -text

|

| 25 |

+

*.safetensors filter=lfs diff=lfs merge=lfs -text

|

| 26 |

+

saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

| 27 |

+

*.tar.* filter=lfs diff=lfs merge=lfs -text

|

| 28 |

+

*.tar filter=lfs diff=lfs merge=lfs -text

|

| 29 |

+

*.tflite filter=lfs diff=lfs merge=lfs -text

|

| 30 |

+

*.tgz filter=lfs diff=lfs merge=lfs -text

|

| 31 |

+

*.wasm filter=lfs diff=lfs merge=lfs -text

|

| 32 |

+

*.xz filter=lfs diff=lfs merge=lfs -text

|

| 33 |

+

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

+

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

+

*tfevents* filter=lfs diff=lfs merge=lfs -text

|
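These rules route matching files through Git LFS, which is consistent with pytorch_model.bin appearing in the file list as only `+3 -0`: an LFS pointer file is three lines long. The sketch below approximates the basename matching with Python's `fnmatch` for a few of the patterns above. This is an assumption for illustration only; Git's own attribute matching has additional rules (for example for patterns containing `/`) that `fnmatch` does not reproduce.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# A subset of the patterns listed in genomic_plip_model/.gitattributes.
LFS_PATTERNS = ["*.bin", "*.pth", "*.safetensors", "*.zip"]

def is_lfs_tracked(path):
    """Approximate check: does the file's basename match any LFS pattern?"""
    name = PurePosixPath(path).name
    return any(fnmatchcase(name, pattern) for pattern in LFS_PATTERNS)

for path in ("genomic_plip_model/pytorch_model.bin", "genomic_plip_model/config.json"):
    print(path, is_lfs_tracked(path))
```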

genomic_plip_model/README.md

ADDED

|

@@ -0,0 +1,3 @@

| 1 |

+

---

|

| 2 |

+

license: apache-2.0

|

| 3 |

+

---

|

genomic_plip_model/config.json

ADDED

|

@@ -0,0 +1,26 @@

|

| 1 |

+

{

|

| 2 |

+

"_name_or_path": "genomic_plip_hug/",

|

| 3 |

+

"architectures": [

|

| 4 |

+

"GenomicPLIPModel"

|

| 5 |

+

],

|

| 6 |

+

"genomic_config": {

|

| 7 |

+

"fc_layer_input": 4,

|

| 8 |

+

"fc_layer_output": 512

|

| 9 |

+

},

|

| 10 |

+

"initializer_factor": 1.0,

|

| 11 |

+

"logit_scale_init_value": 2.6592,

|

| 12 |

+

"model_type": "clip",

|

| 13 |

+

"projection_dim": 512,

|

| 14 |

+

"text_config": {

|

| 15 |

+

"bos_token_id": 0,

|

| 16 |

+

"dropout": 0.0,

|

| 17 |

+

"eos_token_id": 2,

|

| 18 |

+

"model_type": "clip_text_model"

|

| 19 |

+

},

|

| 20 |

+

"torch_dtype": "float32",

|

| 21 |

+

"transformers_version": "4.32.1",

|

| 22 |

+

"vision_config": {

|

| 23 |

+

"dropout": 0.0,

|

| 24 |

+

"model_type": "clip_vision_model"

|

| 25 |

+

}

|

| 26 |

+

}

|
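Reviewer note: `logit_scale_init_value` is stored in log space. In the stock CLIP implementation in `transformers`, the similarity logits are multiplied by the exponential of this parameter. Whether `GenomicPLIPModel` actually uses the value is not visible in this diff, so the sketch below only shows the arithmetic behind the number.

```python
import math

# Value copied from config.json above.
logit_scale_init_value = 2.6592

# Stock CLIP multiplies cosine similarities by exp(logit_scale).
scale = math.exp(logit_scale_init_value)

# The equivalent softmax temperature is the reciprocal of that multiplier.
temperature = 1.0 / scale

print(round(scale, 2), round(temperature, 3))
```

The result corresponds to a temperature of roughly 0.07, which is the default CLIP initialisation.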

genomic_plip_model/preprocessor_config.json

ADDED

|

@@ -0,0 +1,28 @@

|

| 1 |

+

{

|

| 2 |

+

"crop_size": {

|

| 3 |

+

"height": 224,

|

| 4 |

+

"width": 224

|

| 5 |

+

},

|

| 6 |

+

"do_center_crop": true,

|

| 7 |

+

"do_convert_rgb": true,

|

| 8 |

+

"do_normalize": true,

|

| 9 |

+

"do_rescale": true,

|

| 10 |

+

"do_resize": true,

|

| 11 |

+

"feature_extractor_type": "CLIPFeatureExtractor",

|

| 12 |

+

"image_mean": [

|

| 13 |

+

0.48145466,

|

| 14 |

+

0.4578275,

|

| 15 |

+

0.40821073

|

| 16 |

+

],

|

| 17 |

+

"image_processor_type": "CLIPImageProcessor",

|

| 18 |

+

"image_std": [

|

| 19 |

+

0.26862954,

|

| 20 |

+

0.26130258,

|

| 21 |

+

0.27577711

|

| 22 |

+

],

|

| 23 |

+

"resample": 3,

|

| 24 |

+

"rescale_factor": 0.00392156862745098,

|

| 25 |

+

"size": {

|

| 26 |

+

"shortest_edge": 224

|

| 27 |

+

}

|

| 28 |

+

}

|
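Reviewer note: with this configuration, `CLIPImageProcessor` resizes the shortest edge to 224 pixels (`resample: 3` is bicubic in Pillow), center-crops to 224x224, rescales pixel values by `rescale_factor`, and then standardizes each channel. The sketch below reproduces only the last two steps for a single pixel in plain Python; the helper name `normalize_pixel` is hypothetical and is not part of the repository.

```python
# Values copied from preprocessor_config.json above.
RESCALE_FACTOR = 0.00392156862745098  # equals 1 / 255
IMAGE_MEAN = (0.48145466, 0.4578275, 0.40821073)
IMAGE_STD = (0.26862954, 0.26130258, 0.27577711)


def normalize_pixel(rgb):
    """Rescale one 8-bit RGB pixel to [0, 1], then standardize each channel."""
    return tuple(
        (value * RESCALE_FACTOR - mean) / std
        for value, mean, std in zip(rgb, IMAGE_MEAN, IMAGE_STD)
    )


print(normalize_pixel((255, 255, 255)))  # brightest possible pixel
print(normalize_pixel((0, 0, 0)))        # darkest possible pixel
```

The two printed tuples bound the range of values the model can receive in `pixel_values`.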

genomic_plip_model/pytorch_model.bin

ADDED

|

@@ -0,0 +1,3 @@

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:3549ad683f995391b8dce6d18f4177d8b6bb1bd91f2275cf62eabdc62ad9474e

|

| 3 |

+

size 351465073

|
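Reviewer note: the three lines above are a Git LFS pointer, not the weights themselves. Each line is a key followed by a space and a value. The sketch below parses the pointer shown in this diff; the function name `parse_lfs_pointer` is hypothetical.

```python
# Pointer text copied from the pytorch_model.bin diff above.
POINTER_TEXT = """version https://git-lfs.github.com/spec/v1
oid sha256:3549ad683f995391b8dce6d18f4177d8b6bb1bd91f2275cf62eabdc62ad9474e
size 351465073
"""


def parse_lfs_pointer(text):
    """Split each 'key value' line of a Git LFS pointer into a dict."""
    fields = dict(line.split(" ", 1) for line in text.splitlines() if line.strip())
    algorithm, digest = fields["oid"].split(":", 1)
    return {
        "version": fields["version"],
        "hash_algorithm": algorithm,
        "digest": digest,
        "size_bytes": int(fields["size"]),
    }


pointer = parse_lfs_pointer(POINTER_TEXT)
print(pointer["hash_algorithm"], round(pointer["size_bytes"] / 2**20, 1), "MiB")
```

The `size` field shows that the real checkpoint is about 335 MiB, and the digest can be compared against a SHA-256 hash of the downloaded file.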

main.py

ADDED

|

@@ -0,0 +1,32 @@

|

| 1 |

+

import torch

|

| 2 |

+

from PIL import Image

|

| 3 |

+

from classes.genomic_plip_model import GenomicPLIPModel

|

| 4 |

+

from classes.binary_neural_classifier import SimpleNN

|

| 5 |

+

from transformers import CLIPImageProcessor

|

| 6 |

+

|

| 7 |

+

def load_and_preprocess_image(image_path, clip_processor_path):

|

| 8 |

+

    clip_processor = CLIPImageProcessor.from_pretrained(clip_processor_path)

|

| 9 |

+

    image = Image.open(image_path).convert("RGB")

|

| 10 |

+

    inputs = clip_processor(images=[image], return_tensors="pt")

|

| 11 |

+

    image_tensor = inputs['pixel_values']

|

| 12 |

+

    return image_tensor

|

| 13 |

+

|

| 14 |

+

def genomic_plip_predictions(image_tensor, model_path):

|

| 15 |

+

    gmodel = GenomicPLIPModel.from_pretrained(model_path)

|

| 16 |

+

    gmodel.eval()

|

| 17 |

+

    with torch.no_grad():

|

| 18 |

+

        pred_data = gmodel(image_tensor)

|

| 19 |

+

    return pred_data

|

| 20 |

+

|

| 21 |

+

def classify_tiles(pred_data, model_path):

|

| 22 |

+

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

|

| 23 |

+

    model = SimpleNN().to(device)

|

| 24 |

+

    model.load_state_dict(torch.load(model_path, map_location=device))

|

| 25 |

+

    model.eval()

|

| 26 |

+

    with torch.no_grad():

|

| 27 |

+

        output = model(pred_data.to(device)).mean()  # keep features on the classifier's device

|

| 28 |

+

    return output.item()

|

models/classifier.pth

ADDED

|

@@ -0,0 +1,3 @@

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:0272ab645560a4c884ebe88324943f668bf664d074186d29f3f4240d4b7e126f

|

| 3 |

+

size 1579319

|

requirements.txt

ADDED

|

@@ -0,0 +1,14 @@

|

| 1 |

+

numpy

|

| 2 |

+

openslide-python

|

| 3 |

+

scikit-image

|

| 4 |

+

scikit-learn

|

| 5 |

+

Pillow

|

| 6 |

+

transformers

|

| 7 |

+

torch

|

| 8 |

+

Flask

|

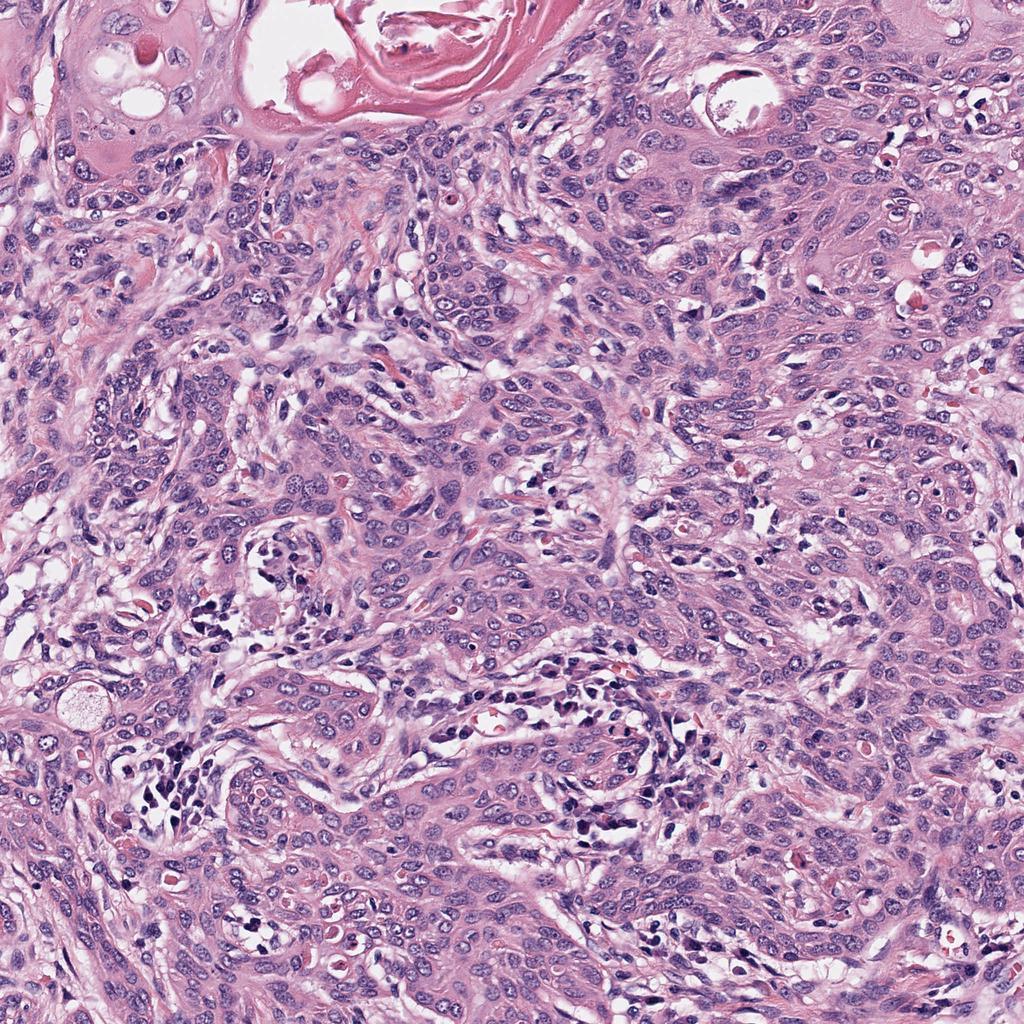

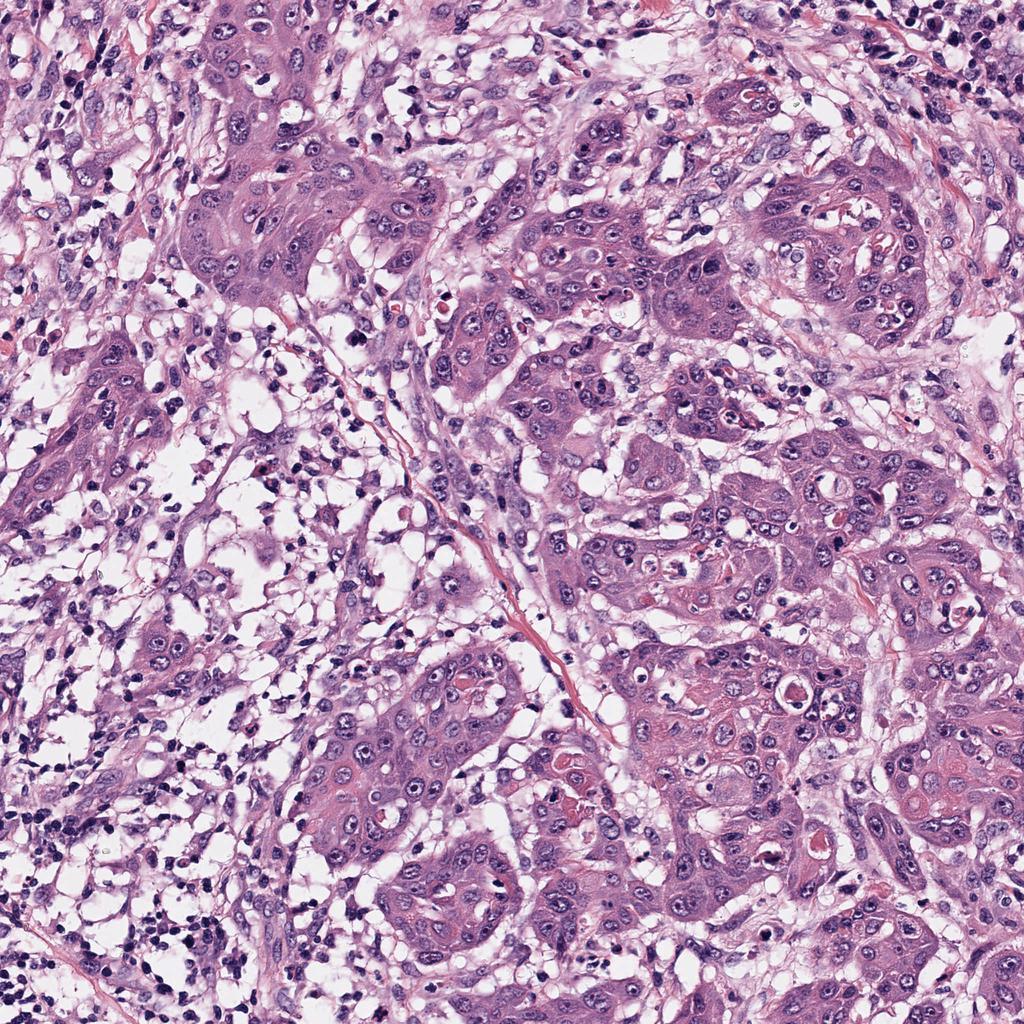

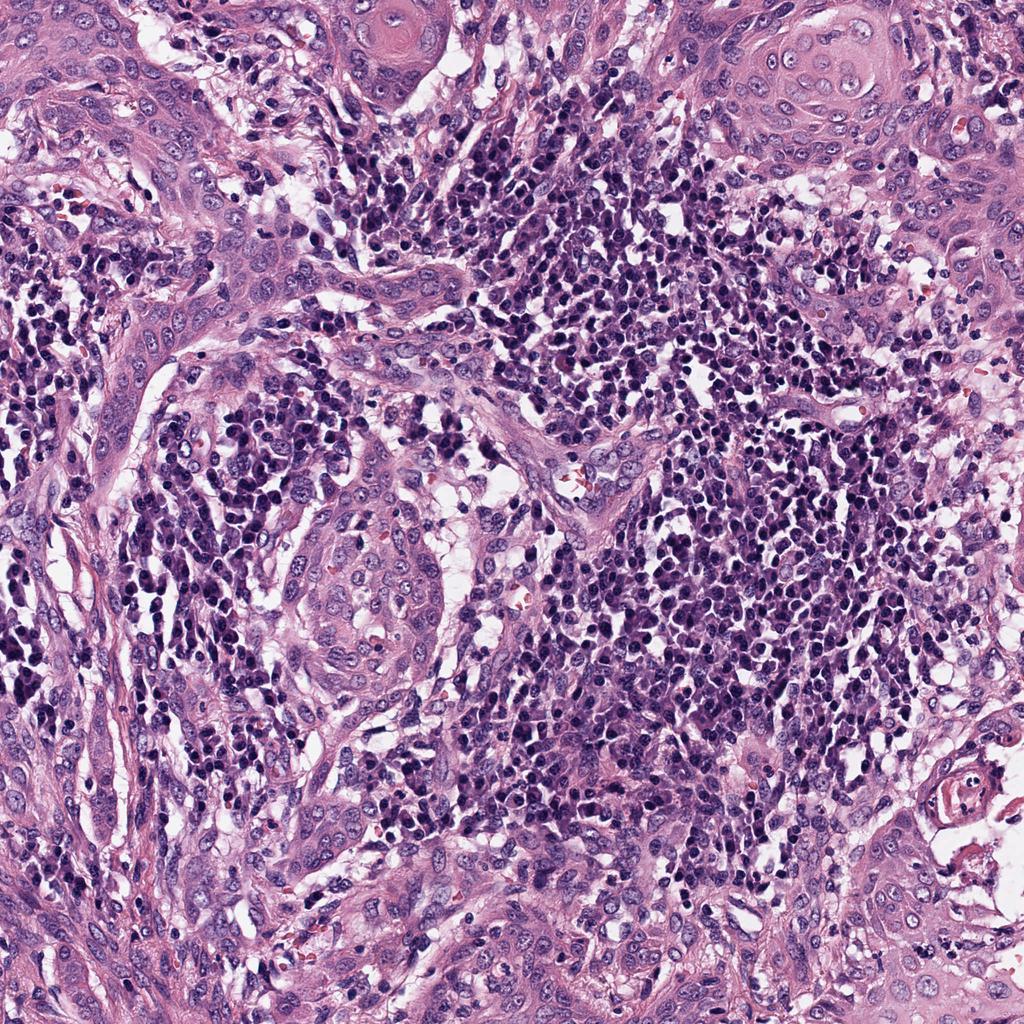

sample_tiles/TCGA-BA-6872_52_9.jpeg

ADDED

|

sample_tiles/TCGA-BA-6872_56_14.jpeg

ADDED

|

sample_tiles/TCGA-BA-6872_59_12.jpeg

ADDED

|

sample_tiles/TCGA-BA-6872_59_17.jpeg

ADDED

|

sample_tiles/TCGA-UF-A718_13_10.jpeg

ADDED

|

sample_tiles/TCGA-UF-A718_13_11.jpeg

ADDED

|

sample_tiles/TCGA-UF-A718_13_19.jpeg

ADDED

|

sample_tiles/TCGA-UF-A718_23_19.jpeg

ADDED

|

sample_tiles/TCGA-UF-A718_23_25.jpeg

ADDED

|