Upload 5 files

- detr/README.md +92 -0

- detr/config.json +221 -0

- detr/gitattributes +28 -0

- detr/preprocessor_config.json +17 -0

- detr/pytorch_model.bin +3 -0

detr/README.md

ADDED

|

@@ -0,0 +1,92 @@

| 1 |

+

---

|

| 2 |

+

license: apache-2.0

|

| 3 |

+

tags:

|

| 4 |

+

- object-detection

|

| 5 |

+

- vision

|

| 6 |

+

datasets:

|

| 7 |

+

- coco

|

| 8 |

+

widget:

|

| 9 |

+

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/savanna.jpg

|

| 10 |

+

  example_title: Savanna

|

| 11 |

+

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/football-match.jpg

|

| 12 |

+

  example_title: Football Match

|

| 13 |

+

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/airport.jpg

|

| 14 |

+

  example_title: Airport

|

| 15 |

+

---

|

| 16 |

+

|

| 17 |

+

# Deformable DETR model with ResNet-50 backbone

|

| 18 |

+

|

| 19 |

+

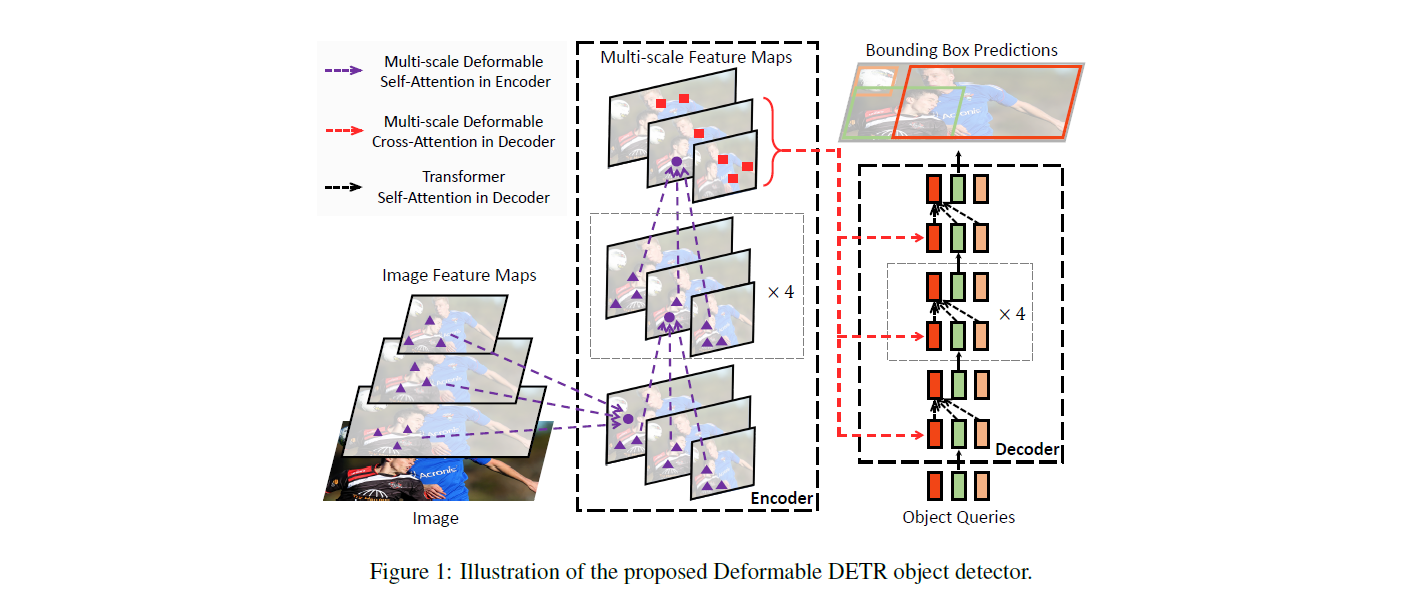

Deformable DEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper [Deformable DETR: Deformable Transformers for End-to-End Object Detection](https://arxiv.org/abs/2010.04159) by Zhu et al. and first released in [this repository](https://github.com/fundamentalvision/Deformable-DETR).

|

| 20 |

+

|

| 21 |

+

Disclaimer: The team releasing Deformable DETR did not write a model card for this model, so this model card has been written by the Hugging Face team.

|

| 22 |

+

|

| 23 |

+

## Model description

|

| 24 |

+

|

| 25 |

+

The Deformable DETR model, like DETR, is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs to perform object detection: a linear layer for the class labels and an MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for a particular object in the image. For this checkpoint, the number of object queries is set to 300 (`num_queries` in `config.json`).

|

| 26 |

+

|

| 27 |

+

The model is trained using a "bipartite matching loss": the predicted classes and bounding boxes of each of the N = 300 object queries are compared to the ground truth annotations, padded up to the same length N (so if an image contains only 4 objects, the remaining 296 annotations have "no object" as class and "no bounding box" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between the N queries and the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 and generalized IoU losses (for the bounding boxes) are used to optimize the parameters of the model.
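
The matching step can be sketched in a few lines of plain Python. This is a minimal illustration, not the library's implementation: it brute-forces the assignment over permutations instead of running the Hungarian algorithm (both find the same minimum-cost one-to-one matching, but brute force only scales to tiny inputs), uses only a class cost and an L1 box cost, and omits the generalized IoU term. The weights mirror `class_cost` and `bbox_cost` from `config.json`; the function name `match` and the toy numbers are assumptions.

```python
from itertools import permutations

CLASS_COST = 1.0  # "class_cost" in config.json
BBOX_COST = 5.0   # "bbox_cost" in config.json


def match(pred_probs, pred_boxes, gt_labels, gt_boxes):
    """Return the min-cost assignment as (query_index, target_index) pairs.

    Brute-force stand-in for the Hungarian algorithm; feasible only for tiny inputs.
    """
    num_queries, num_targets = len(pred_boxes), len(gt_boxes)

    def pair_cost(q, t):
        class_term = -pred_probs[q][gt_labels[t]]  # high probability -> low cost
        l1_term = sum(abs(a - b) for a, b in zip(pred_boxes[q], gt_boxes[t]))
        return CLASS_COST * class_term + BBOX_COST * l1_term

    best_cost, best_queries = float("inf"), None
    # Try every ordered choice of distinct queries, one per ground-truth object.
    for queries in permutations(range(num_queries), num_targets):
        total = sum(pair_cost(q, t) for t, q in enumerate(queries))
        if total < best_cost:
            best_cost, best_queries = total, queries
    return sorted((q, t) for t, q in enumerate(best_queries))


# Toy example: 3 queries, 2 ground-truth objects, 2 classes (0 and 1).
pred_probs = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]
pred_boxes = [[0.5, 0.5, 0.2, 0.2], [0.1, 0.1, 0.1, 0.1], [0.8, 0.8, 0.3, 0.3]]
gt_labels = [1, 0]
gt_boxes = [[0.1, 0.1, 0.1, 0.1], [0.5, 0.5, 0.2, 0.2]]

matches = match(pred_probs, pred_boxes, gt_labels, gt_boxes)
print(matches)  # queries left unmatched are trained towards "no object"
```

Here query 2 matches neither object, which is the role the padded "no object" annotations play in the description above.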

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

## Intended uses & limitations

|

| 32 |

+

|

| 33 |

+

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=sensetime/deformable-detr) to look for all available Deformable DETR models.

|

| 34 |

+

|

| 35 |

+

### How to use

|

| 36 |

+

|

| 37 |

+

Here is how to use this model:

|

| 38 |

+

|

| 39 |

+

```python

|

| 40 |

+

from transformers import AutoImageProcessor, DeformableDetrForObjectDetection

|

| 41 |

+

import torch

|

| 42 |

+

from PIL import Image

|

| 43 |

+

import requests

|

| 44 |

+

|

| 45 |

+

url = "http://images.cocodataset.org/val2017/000000039769.jpg"

|

| 46 |

+

image = Image.open(requests.get(url, stream=True).raw)

|

| 47 |

+

|

| 48 |

+

processor = AutoImageProcessor.from_pretrained("SenseTime/deformable-detr")

|

| 49 |

+

model = DeformableDetrForObjectDetection.from_pretrained("SenseTime/deformable-detr")

|

| 50 |

+

|

| 51 |

+

inputs = processor(images=image, return_tensors="pt")

|

| 52 |

+

outputs = model(**inputs)

|

| 53 |

+

|

| 54 |

+

# convert outputs (bounding boxes and class logits) to COCO API

|

| 55 |

+

# let's only keep detections with score > 0.7

|

| 56 |

+

target_sizes = torch.tensor([image.size[::-1]])

|

| 57 |

+

results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.7)[0]

|

| 58 |

+

|

| 59 |

+

for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):

|

| 60 |

+

    box = [round(i, 2) for i in box.tolist()]

|

| 61 |

+

    print(

|

| 62 |

+

        f"Detected {model.config.id2label[label.item()]} with confidence "

|

| 63 |

+

        f"{round(score.item(), 3)} at location {box}"

|

| 64 |

+

    )

|

| 65 |

+

```

|

| 66 |

+

This should output:

|

| 67 |

+

```

|

| 68 |

+

Detected cat with confidence 0.856 at location [342.19, 24.3, 640.02, 372.25]

|

| 69 |

+

Detected remote with confidence 0.739 at location [40.79, 72.78, 176.76, 117.25]

|

| 70 |

+

Detected cat with confidence 0.859 at location [16.5, 52.84, 318.25, 470.78]

|

| 71 |

+

```
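
The `target_sizes` argument is needed because the model predicts boxes in normalized `(center_x, center_y, width, height)` format, while the printed boxes are absolute `(x_min, y_min, x_max, y_max)` pixel coordinates. A minimal sketch of that conversion, assuming this box convention (the helper name `to_pixel_corners` and the sample numbers are illustrative, not taken from the library):

```python
def to_pixel_corners(box, target_size):
    """Convert a normalized (cx, cy, w, h) box to absolute (x0, y0, x1, y1)."""
    cx, cy, w, h = box
    img_h, img_w = target_size  # same (height, width) order as image.size[::-1]
    return [
        (cx - w / 2) * img_w,
        (cy - h / 2) * img_h,
        (cx + w / 2) * img_w,
        (cy + h / 2) * img_h,
    ]


# Hypothetical prediction on a 480 x 640 (height x width) image.
corners = to_pixel_corners([0.5, 0.5, 0.25, 0.5], (480, 640))
print([round(v, 2) for v in corners])
```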

|

| 72 |

+

|

| 73 |

+

Currently, both the image processor and the model support PyTorch.

|

| 74 |

+

|

| 75 |

+

## Training data

|

| 76 |

+

|

| 77 |

+

The Deformable DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k/5k annotated images for training/validation respectively.

|

| 78 |

+

|

| 79 |

+

### BibTeX entry and citation info

|

| 80 |

+

|

| 81 |

+

```bibtex

|

| 82 |

+

@misc{https://doi.org/10.48550/arxiv.2010.04159,

|

| 83 |

+

doi = {10.48550/ARXIV.2010.04159},

|

| 84 |

+

url = {https://arxiv.org/abs/2010.04159},

|

| 85 |

+

author = {Zhu, Xizhou and Su, Weijie and Lu, Lewei and Li, Bin and Wang, Xiaogang and Dai, Jifeng},

|

| 86 |

+

keywords = {Computer Vision and Pattern Recognition (cs.CV), FOS: Computer and information sciences},

|

| 87 |

+

title = {Deformable DETR: Deformable Transformers for End-to-End Object Detection},

|

| 88 |

+

publisher = {arXiv},

|

| 89 |

+

year = {2020},

|

| 90 |

+

copyright = {arXiv.org perpetual, non-exclusive license}

|

| 91 |

+

}

|

| 92 |

+

```

|

detr/config.json

ADDED

|

@@ -0,0 +1,221 @@

| 1 |

+

{

|

| 2 |

+

"activation_dropout": 0.0,

|

| 3 |

+

"activation_function": "relu",

|

| 4 |

+

"architectures": [

|

| 5 |

+

"DeformableDetrForObjectDetection"

|

| 6 |

+

],

|

| 7 |

+

"attention_dropout": 0.0,

|

| 8 |

+

"auxiliary_loss": false,

|

| 9 |

+

"backbone": "resnet50",

|

| 10 |

+

"bbox_cost": 5,

|

| 11 |

+

"bbox_loss_coefficient": 5,

|

| 12 |

+

"class_cost": 1,

|

| 13 |

+

"d_model": 256,

|

| 14 |

+

"decoder_attention_heads": 8,

|

| 15 |

+

"decoder_ffn_dim": 1024,

|

| 16 |

+

"decoder_layerdrop": 0.0,

|

| 17 |

+

"decoder_layers": 6,

|

| 18 |

+

"decoder_n_points": 4,

|

| 19 |

+

"dice_loss_coefficient": 1,

|

| 20 |

+

"dilation": false,

|

| 21 |

+

"dropout": 0.1,

|

| 22 |

+

"encoder_attention_heads": 8,

|

| 23 |

+

"encoder_ffn_dim": 1024,

|

| 24 |

+

"encoder_layerdrop": 0.0,

|

| 25 |

+

"encoder_layers": 6,

|

| 26 |

+

"encoder_n_points": 4,

|

| 27 |

+

"eos_coefficient": 0.1,

|

| 28 |

+

"giou_cost": 2,

|

| 29 |

+

"giou_loss_coefficient": 2,

|

| 30 |

+

"id2label": {

|

| 31 |

+

"0": "N/A",

|

| 32 |

+

"1": "person",

|

| 33 |

+

"2": "bicycle",

|

| 34 |

+

"3": "car",

|

| 35 |

+

"4": "motorcycle",

|

| 36 |

+

"5": "airplane",

|

| 37 |

+

"6": "bus",

|

| 38 |

+

"7": "train",

|

| 39 |

+

"8": "truck",

|

| 40 |

+

"9": "boat",

|

| 41 |

+

"10": "traffic light",

|

| 42 |

+

"11": "fire hydrant",

|

| 43 |

+

"12": "N/A",

|

| 44 |

+

"13": "stop sign",

|

| 45 |

+

"14": "parking meter",

|

| 46 |

+

"15": "bench",

|

| 47 |

+

"16": "bird",

|

| 48 |

+

"17": "cat",

|

| 49 |

+

"18": "dog",

|

| 50 |

+

"19": "horse",

|

| 51 |

+

"20": "sheep",

|

| 52 |

+

"21": "cow",

|

| 53 |

+

"22": "elephant",

|

| 54 |

+

"23": "bear",

|

| 55 |

+

"24": "zebra",

|

| 56 |

+

"25": "giraffe",

|

| 57 |

+

"26": "N/A",

|

| 58 |

+

"27": "backpack",

|

| 59 |

+

"28": "umbrella",

|

| 60 |

+

"29": "N/A",

|

| 61 |

+

"30": "N/A",

|

| 62 |

+

"31": "handbag",

|

| 63 |

+

"32": "tie",

|

| 64 |

+

"33": "suitcase",

|

| 65 |

+

"34": "frisbee",

|

| 66 |

+

"35": "skis",

|

| 67 |

+

"36": "snowboard",

|

| 68 |

+

"37": "sports ball",

|

| 69 |

+

"38": "kite",

|

| 70 |

+

"39": "baseball bat",

|

| 71 |

+

"40": "baseball glove",

|

| 72 |

+

"41": "skateboard",

|

| 73 |

+

"42": "surfboard",

|

| 74 |

+

"43": "tennis racket",

|

| 75 |

+

"44": "bottle",

|

| 76 |

+

"45": "N/A",

|

| 77 |

+

"46": "wine glass",

|

| 78 |

+

"47": "cup",

|

| 79 |

+

"48": "fork",

|

| 80 |

+

"49": "knife",

|

| 81 |

+

"50": "spoon",

|

| 82 |

+

"51": "bowl",

|

| 83 |

+

"52": "banana",

|

| 84 |

+

"53": "apple",

|

| 85 |

+

"54": "sandwich",

|

| 86 |

+

"55": "orange",

|

| 87 |

+

"56": "broccoli",

|

| 88 |

+

"57": "carrot",

|

| 89 |

+

"58": "hot dog",

|

| 90 |

+

"59": "pizza",

|

| 91 |

+

"60": "donut",

|

| 92 |

+

"61": "cake",

|

| 93 |

+

"62": "chair",

|

| 94 |

+

"63": "couch",

|

| 95 |

+

"64": "potted plant",

|

| 96 |

+

"65": "bed",

|

| 97 |

+

"66": "N/A",

|

| 98 |

+

"67": "dining table",

|

| 99 |

+

"68": "N/A",

|

| 100 |

+

"69": "N/A",

|

| 101 |

+

"70": "toilet",

|

| 102 |

+

"71": "N/A",

|

| 103 |

+

"72": "tv",

|

| 104 |

+

"73": "laptop",

|

| 105 |

+

"74": "mouse",

|

| 106 |

+

"75": "remote",

|

| 107 |

+

"76": "keyboard",

|

| 108 |

+

"77": "cell phone",

|

| 109 |

+

"78": "microwave",

|

| 110 |

+

"79": "oven",

|

| 111 |

+

"80": "toaster",

|

| 112 |

+

"81": "sink",

|

| 113 |

+

"82": "refrigerator",

|

| 114 |

+

"83": "N/A",

|

| 115 |

+

"84": "book",

|

| 116 |

+

"85": "clock",

|

| 117 |

+

"86": "vase",

|

| 118 |

+

"87": "scissors",

|

| 119 |

+

"88": "teddy bear",

|

| 120 |

+

"89": "hair drier",

|

| 121 |

+

"90": "toothbrush"

|

| 122 |

+

},

|

| 123 |

+

"init_std": 0.02,

|

| 124 |

+

"init_xavier_std": 1.0,

|

| 125 |

+

"is_encoder_decoder": true,

|

| 126 |

+

"label2id": {

|

| 127 |

+

"N/A": 83,

|

| 128 |

+

"airplane": 5,

|

| 129 |

+

"apple": 53,

|

| 130 |

+

"backpack": 27,

|

| 131 |

+

"banana": 52,

|

| 132 |

+

"baseball bat": 39,

|

| 133 |

+

"baseball glove": 40,

|

| 134 |

+

"bear": 23,

|

| 135 |

+

"bed": 65,

|

| 136 |

+

"bench": 15,

|

| 137 |

+

"bicycle": 2,

|

| 138 |

+

"bird": 16,

|

| 139 |

+

"boat": 9,

|

| 140 |

+

"book": 84,

|

| 141 |

+

"bottle": 44,

|

| 142 |

+

"bowl": 51,

|

| 143 |

+

"broccoli": 56,

|

| 144 |

+

"bus": 6,

|

| 145 |

+

"cake": 61,

|

| 146 |

+

"car": 3,

|

| 147 |

+

"carrot": 57,

|

| 148 |

+

"cat": 17,

|

| 149 |

+

"cell phone": 77,

|

| 150 |

+

"chair": 62,

|

| 151 |

+

"clock": 85,

|

| 152 |

+

"couch": 63,

|

| 153 |

+

"cow": 21,

|

| 154 |

+

"cup": 47,

|

| 155 |

+

"dining table": 67,

|

| 156 |

+

"dog": 18,

|

| 157 |

+

"donut": 60,

|

| 158 |

+

"elephant": 22,

|

| 159 |

+

"fire hydrant": 11,

|

| 160 |

+

"fork": 48,

|

| 161 |

+

"frisbee": 34,

|

| 162 |

+

"giraffe": 25,

|

| 163 |

+

"hair drier": 89,

|

| 164 |

+

"handbag": 31,

|

| 165 |

+

"horse": 19,

|

| 166 |

+

"hot dog": 58,

|

| 167 |

+

"keyboard": 76,

|

| 168 |

+

"kite": 38,

|

| 169 |

+

"knife": 49,

|

| 170 |

+

"laptop": 73,

|

| 171 |

+

"microwave": 78,

|

| 172 |

+

"motorcycle": 4,

|

| 173 |

+

"mouse": 74,

|

| 174 |

+

"orange": 55,

|

| 175 |

+

"oven": 79,

|

| 176 |

+

"parking meter": 14,

|

| 177 |

+

"person": 1,

|

| 178 |

+

"pizza": 59,

|

| 179 |

+

"potted plant": 64,

|

| 180 |

+

"refrigerator": 82,

|

| 181 |

+

"remote": 75,

|

| 182 |

+

"sandwich": 54,

|

| 183 |

+

"scissors": 87,

|

| 184 |

+

"sheep": 20,

|

| 185 |

+

"sink": 81,

|

| 186 |

+

"skateboard": 41,

|

| 187 |

+

"skis": 35,

|

| 188 |

+

"snowboard": 36,

|

| 189 |

+

"spoon": 50,

|

| 190 |

+

"sports ball": 37,

|

| 191 |

+

"stop sign": 13,

|

| 192 |

+

"suitcase": 33,

|

| 193 |

+

"surfboard": 42,

|

| 194 |

+

"teddy bear": 88,

|

| 195 |

+

"tennis racket": 43,

|

| 196 |

+

"tie": 32,

|

| 197 |

+

"toaster": 80,

|

| 198 |

+

"toilet": 70,

|

| 199 |

+

"toothbrush": 90,

|

| 200 |

+

"traffic light": 10,

|

| 201 |

+

"train": 7,

|

| 202 |

+

"truck": 8,

|

| 203 |

+

"tv": 72,

|

| 204 |

+

"umbrella": 28,

|

| 205 |

+

"vase": 86,

|

| 206 |

+

"wine glass": 46,

|

| 207 |

+

"zebra": 24

|

| 208 |

+

},

|

| 209 |

+

"mask_loss_coefficient": 1,

|

| 210 |

+

"max_position_embeddings": 1024,

|

| 211 |

+

"model_type": "deformable_detr",

|

| 212 |

+

"num_feature_levels": 4,

|

| 213 |

+

"num_queries": 300,

|

| 214 |

+

"position_embedding_type": "sine",

|

| 215 |

+

"return_intermediate": true,

|

| 216 |

+

"torch_dtype": "float32",

|

| 217 |

+

"transformers_version": "4.16.0.dev0",

|

| 218 |

+

"two_stage": false,

|

| 219 |

+

"two_stage_num_proposals": 300,

|

| 220 |

+

"with_box_refine": false

|

| 221 |

+

}
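
One detail worth noting in the label maps above: `id2label` assigns `"N/A"` to several unused COCO ids (0, 12, 26, ...), yet `label2id` holds a single `"N/A": 83` entry. That is what a plain dictionary inversion produces, since later duplicates overwrite earlier ones. The sketch below reproduces the effect on an excerpt of the mapping; whether the file was actually generated this way is an assumption.

```python
# Excerpt of "id2label" from config.json (keys are strings in JSON).
id2label = {"0": "N/A", "1": "person", "12": "N/A", "17": "cat", "83": "N/A", "84": "book"}

# Naive inversion: duplicate labels collapse, and the last id seen wins.
label2id = {label: int(idx) for idx, label in id2label.items()}
print(label2id)

# Ids that correspond to real categories rather than "N/A" placeholders.
real_ids = sorted(int(idx) for idx, label in id2label.items() if label != "N/A")
print(real_ids)
```

When mapping names back to ids, it is therefore safer to skip `"N/A"` than to rely on `label2id["N/A"]`.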

|

detr/gitattributes

ADDED

|

@@ -0,0 +1,28 @@

| 1 |

+

*.7z filter=lfs diff=lfs merge=lfs -text

|

| 2 |

+

*.arrow filter=lfs diff=lfs merge=lfs -text

|

| 3 |

+

*.bin filter=lfs diff=lfs merge=lfs -text

|

| 4 |

+

*.bin.* filter=lfs diff=lfs merge=lfs -text

|

| 5 |

+

*.bz2 filter=lfs diff=lfs merge=lfs -text

|

| 6 |

+

*.ftz filter=lfs diff=lfs merge=lfs -text

|

| 7 |

+

*.gz filter=lfs diff=lfs merge=lfs -text

|

| 8 |

+

*.h5 filter=lfs diff=lfs merge=lfs -text

|

| 9 |

+

*.joblib filter=lfs diff=lfs merge=lfs -text

|

| 10 |

+

*.lfs.* filter=lfs diff=lfs merge=lfs -text

|

| 11 |

+

*.model filter=lfs diff=lfs merge=lfs -text

|

| 12 |

+

*.msgpack filter=lfs diff=lfs merge=lfs -text

|

| 13 |

+

*.onnx filter=lfs diff=lfs merge=lfs -text

|

| 14 |

+

*.ot filter=lfs diff=lfs merge=lfs -text

|

| 15 |

+

*.parquet filter=lfs diff=lfs merge=lfs -text

|

| 16 |

+

*.pb filter=lfs diff=lfs merge=lfs -text

|

| 17 |

+

*.pt filter=lfs diff=lfs merge=lfs -text

|

| 18 |

+

*.pth filter=lfs diff=lfs merge=lfs -text

|

| 19 |

+

*.rar filter=lfs diff=lfs merge=lfs -text

|

| 20 |

+

saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

| 21 |

+

*.tar.* filter=lfs diff=lfs merge=lfs -text

|

| 22 |

+

*.tflite filter=lfs diff=lfs merge=lfs -text

|

| 23 |

+

*.tgz filter=lfs diff=lfs merge=lfs -text

|

| 24 |

+

*.xz filter=lfs diff=lfs merge=lfs -text

|

| 25 |

+

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 26 |

+

*.zstandard filter=lfs diff=lfs merge=lfs -text

|

| 27 |

+

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 28 |

+

model.safetensors filter=lfs diff=lfs merge=lfs -text

|

detr/preprocessor_config.json

ADDED

|

@@ -0,0 +1,17 @@

| 1 |

+

{

|

| 2 |

+

"do_normalize": true,

|

| 3 |

+

"do_resize": true,

|

| 4 |

+

"feature_extractor_type": "DeformableDetrFeatureExtractor",

|

| 5 |

+

"format": "coco_detection",

|

| 6 |

+

"image_mean": [

|

| 7 |

+

0.485,

|

| 8 |

+

0.456,

|

| 9 |

+

0.406

|

| 10 |

+

],

|

| 11 |

+

"image_std": [

|

| 12 |

+

0.229,

|

| 13 |

+

0.224,

|

| 14 |

+

0.225

|

| 15 |

+

],

|

| 16 |

+

"size": {"shortest_edge": 800, "longest_edge": 1333}

|

| 17 |

+

}
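
The `size` entry is commonly read as: scale the image so its shorter side becomes 800 pixels, unless that would push the longer side past 1333 pixels, in which case the longer side is capped instead. The following sketch computes the resulting size under that reading and applies the per-channel normalization from `image_mean` and `image_std`. The function names are hypothetical, and the library's exact rounding may differ.

```python
IMAGE_MEAN = [0.485, 0.456, 0.406]  # "image_mean" in preprocessor_config.json
IMAGE_STD = [0.229, 0.224, 0.225]   # "image_std" in preprocessor_config.json


def resized_shape(height, width, shortest_edge=800, longest_edge=1333):
    """Aspect-preserving target size: short side -> 800 unless the long side would exceed 1333."""
    scale = min(shortest_edge / min(height, width), longest_edge / max(height, width))
    return round(height * scale), round(width * scale)


def normalize_pixel(rgb):
    """Normalize one RGB pixel whose channels are already scaled to [0, 1]."""
    return [(value - mean) / std for value, mean, std in zip(rgb, IMAGE_MEAN, IMAGE_STD)]


print(resized_shape(480, 640))      # short side limits the scale
print(resized_shape(500, 2000))     # long side limits the scale
print(normalize_pixel(IMAGE_MEAN))  # a pixel equal to the mean maps to zeros
```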

|

detr/pytorch_model.bin

ADDED

|

@@ -0,0 +1,3 @@

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:2e138fa28130ed71d357af7bf1cef51dcc745c5301ba372dd57d9e3079626c8b

|

| 3 |

+

size 7346752798
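
The three lines above are a Git LFS pointer rather than the weights themselves: each line is a `key value` pair, with `oid` holding the SHA-256 of the real file and `size` its length in bytes. A small sketch of parsing such a pointer (the helper name `parse_lfs_pointer` is an assumption):

```python
POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:2e138fa28130ed71d357af7bf1cef51dcc745c5301ba372dd57d9e3079626c8b
size 7346752798
"""


def parse_lfs_pointer(text):
    """Split each non-empty line into a key and a value at the first space."""
    fields = dict(line.split(" ", 1) for line in text.splitlines() if line)
    algorithm, digest = fields["oid"].split(":", 1)
    return {
        "version": fields["version"],
        "hash_algorithm": algorithm,
        "digest": digest,
        "size_bytes": int(fields["size"]),
    }


pointer = parse_lfs_pointer(POINTER)
print(pointer["hash_algorithm"], len(pointer["digest"]))
print(f"{pointer['size_bytes'] / 1e9:.2f} GB")
```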

|