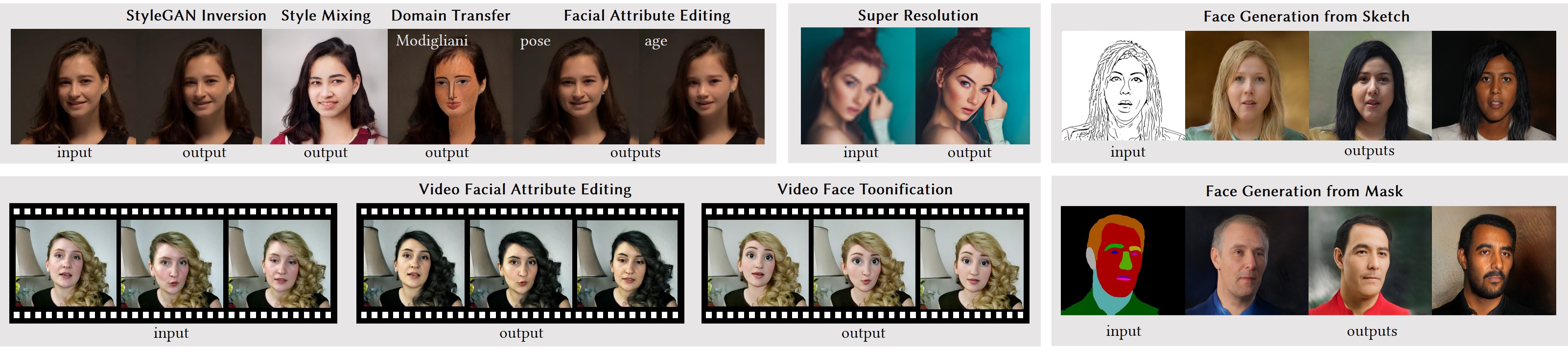

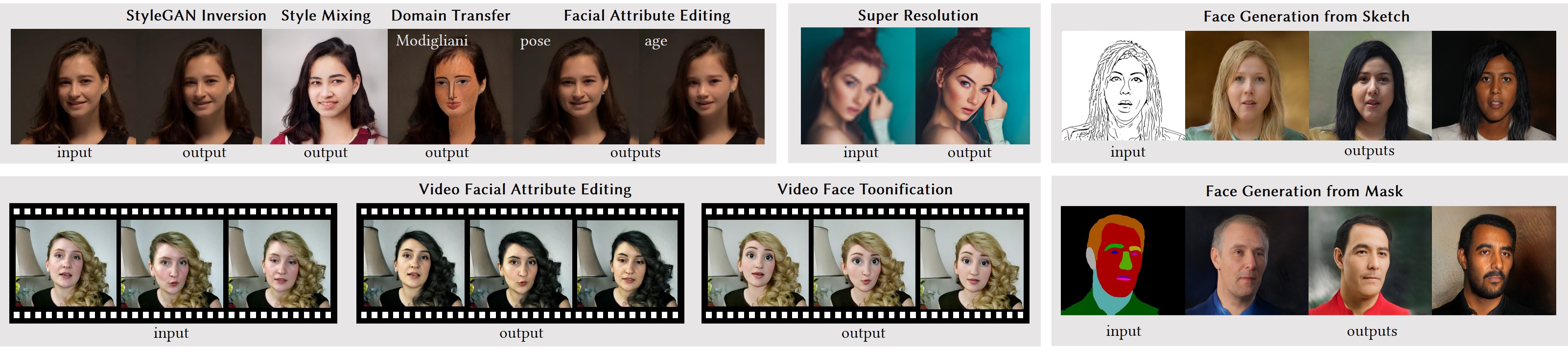

+ Face Manipulation with StyleGANEX +

+For faster inference without waiting in queue, you may duplicate the space and upgrade to GPU in settings.

+

+

+For faster inference without waiting in queue, you may duplicate the space and upgrade to GPU in settings.

+

+

+

+

+  +

+

+

+

| + | Hair | +Lip | +

|---|---|---|

| Original Input | + |

+ |

+

| Color | + |

+ |

+