Commit

·

e92bd0b

1

Parent(s):

599b1f8

demo structure

Browse files

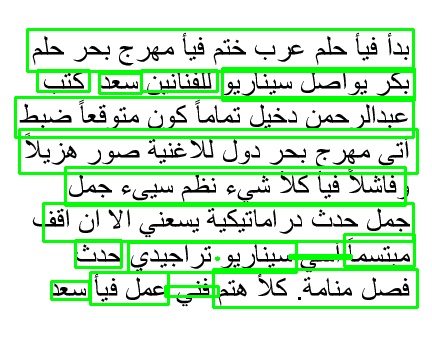

a.jpg

ADDED

|

a.py

ADDED

|

@@ -0,0 +1,40 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from easyocr import Reader

|

| 2 |

+

import cv2

|

| 3 |

+

def cleanup_text(text):

|

| 4 |

+

# strip out non-ASCII text so we can draw the text on the image

|

| 5 |

+

# using OpenCV

|

| 6 |

+

return "".join([c if ord(c) < 128 else "" for c in text]).strip()

|

| 7 |

+

|

| 8 |

+

def arabic_ocr(image_path,out_image):

|

| 9 |

+

# break the input languages into a comma separated list

|

| 10 |

+

langs = "ar,en".split(",")

|

| 11 |

+

gpu1=-1

|

| 12 |

+

#print("[INFO] OCR'ing with the following languages: {}".format(langs))

|

| 13 |

+

# load the input image from disk

|

| 14 |

+

image = cv2.imread(image_path)

|

| 15 |

+

# OCR the input image using EasyOCR

|

| 16 |

+

print("[INFO] OCR'ing input image...")

|

| 17 |

+

reader = Reader(langs, gpu=-1 > 0)

|

| 18 |

+

results = reader.readtext(image)

|

| 19 |

+

|

| 20 |

+

#print(result)

|

| 21 |

+

# loop over the results

|

| 22 |

+

filename=out_image

|

| 23 |

+

for (bbox, text, prob) in results:

|

| 24 |

+

# display the OCR'd text and associated probability

|

| 25 |

+

print("[INFO] {:.4f}: {}".format(prob, text))

|

| 26 |

+

# unpack the bounding box

|

| 27 |

+

(tl, tr, br, bl) = bbox

|

| 28 |

+

tl = (int(tl[0]), int(tl[1]))

|

| 29 |

+

tr = (int(tr[0]), int(tr[1]))

|

| 30 |

+

br = (int(br[0]), int(br[1]))

|

| 31 |

+

bl = (int(bl[0]), int(bl[1]))

|

| 32 |

+

# cleanup the text and draw the box surrounding the text along

|

| 33 |

+

# with the OCR'd text itself

|

| 34 |

+

text = cleanup_text(text)

|

| 35 |

+

cv2.rectangle(image, tl, br, (0, 255, 0), 2)

|

| 36 |

+

cv2.putText(image, text, (tl[0], tl[1] - 10),

|

| 37 |

+

cv2.FONT_HERSHEY_SIMPLEX, 0.8, (0, 255, 0), 2)

|

| 38 |

+

# show the output image

|

| 39 |

+

cv2.imwrite(filename, image)

|

| 40 |

+

return results

|

api.py

ADDED

|

@@ -0,0 +1,47 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

|

| 2 |

+

import urllib.request

|

| 3 |

+

from fastapi import FastAPI

|

| 4 |

+

from pydantic import BaseModel

|

| 5 |

+

import json

|

| 6 |

+

from io import BytesIO

|

| 7 |

+

import asyncio

|

| 8 |

+

from aiohttp import ClientSession

|

| 9 |

+

import torch

|

| 10 |

+

from main import main_det

|

| 11 |

+

import uvicorn

|

| 12 |

+

import urllib

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

app = FastAPI()

|

| 16 |

+

|

| 17 |

+

class Item(BaseModel):

|

| 18 |

+

url: str

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

async def process_item(item: Item):

|

| 22 |

+

try:

|

| 23 |

+

urllib.request.urlretrieve(item.url,"new.jpg")

|

| 24 |

+

result = await main_det("new.jpg")

|

| 25 |

+

result = json.loads(result)

|

| 26 |

+

return result

|

| 27 |

+

except:

|

| 28 |

+

pass

|

| 29 |

+

|

| 30 |

+

@app.get("/status")

|

| 31 |

+

async def status():

|

| 32 |

+

return "AI Server in running"

|

| 33 |

+

|

| 34 |

+

@app.post("/ocr")

|

| 35 |

+

async def create_items(items: Item):

|

| 36 |

+

try:

|

| 37 |

+

# print(items)

|

| 38 |

+

results = await process_item(items)

|

| 39 |

+

print("#"*100)

|

| 40 |

+

return results

|

| 41 |

+

except Exception as e:

|

| 42 |

+

return {"AI": f"Error: {str(e)}"}

|

| 43 |

+

finally:

|

| 44 |

+

torch.cuda.empty_cache()

|

| 45 |

+

|

| 46 |

+

if __name__ == "__main__":

|

| 47 |

+

uvicorn.run(app, host="127.0.0.1", port=8000)

|

img/a.png

ADDED

|

main.py

ADDED

|

@@ -0,0 +1,26 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from ArabicOcr import arabicocr

|

| 2 |

+

import json

|

| 3 |

+

import numpy as np

|

| 4 |

+

from io import BytesIO

|

| 5 |

+

from PIL import Image

|

| 6 |

+

from aiohttp import ClientSession

|

| 7 |

+

|

| 8 |

+

async def getImage(img_url):

|

| 9 |

+

async with ClientSession() as session:

|

| 10 |

+

async with session.get(img_url) as response:

|

| 11 |

+

img_data = await response.read()

|

| 12 |

+

return BytesIO(img_data)

|

| 13 |

+

|

| 14 |

+

async def main_det(image):

|

| 15 |

+

try:

|

| 16 |

+

# image_path = image

|

| 17 |

+

out = "data.jpg"

|

| 18 |

+

results=await arabicocr.arabic_ocr(image,"a.jpg")

|

| 19 |

+

print(results)

|

| 20 |

+

words=[]

|

| 21 |

+

for i in range(len(results)):

|

| 22 |

+

word=results[i][1]

|

| 23 |

+

words.append(word)

|

| 24 |

+

return json.dumps({"prediction":words})

|

| 25 |

+

except Exception as e:

|

| 26 |

+

raise ValueError(f"Error in main_det: {str(e)}")

|

new.jpg

ADDED

|

test.ipynb

ADDED

|

@@ -0,0 +1,106 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "code",

|

| 5 |

+

"execution_count": null,

|

| 6 |

+

"metadata": {},

|

| 7 |

+

"outputs": [],

|

| 8 |

+

"source": []

|

| 9 |

+

},

|

| 10 |

+

{

|

| 11 |

+

"cell_type": "code",

|

| 12 |

+

"execution_count": 1,

|

| 13 |

+

"metadata": {},

|

| 14 |

+

"outputs": [],

|

| 15 |

+

"source": [

|

| 16 |

+

"from ArabicOcr import arabicocr\n"

|

| 17 |

+

]

|

| 18 |

+

},

|

| 19 |

+

{

|

| 20 |

+

"cell_type": "code",

|

| 21 |

+

"execution_count": 12,

|

| 22 |

+

"metadata": {},

|

| 23 |

+

"outputs": [

|

| 24 |

+

{

|

| 25 |

+

"name": "stderr",

|

| 26 |

+

"output_type": "stream",

|

| 27 |

+

"text": [

|

| 28 |

+

"Using CPU. Note: This module is much faster with a GPU.\n"

|

| 29 |

+

]

|

| 30 |

+

},

|

| 31 |

+

{

|

| 32 |

+

"name": "stdout",

|

| 33 |

+

"output_type": "stream",

|

| 34 |

+

"text": [

|

| 35 |

+

"[INFO] OCR'ing input image...\n",

|

| 36 |

+

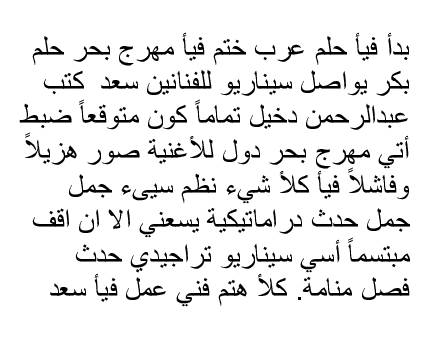

"[INFO] 0.4919: بدأ فيأ حلم عرب ختم فيأ مهرج بحر حلم\n",

|

| 37 |

+

"[INFO] 0.2563: لدعط\n",

|

| 38 |

+

"[INFO] 0.8595: بكر يواصل سيناريو\n",

|

| 39 |

+

"[INFO] 0.6365: عبدالرحمن دخيل تماماًكون متوقعاً ضبط\n",

|

| 40 |

+

"[INFO] 0.4543: أتي مهرج بحر دول للأغنية صور هزيلاً\n",

|

| 41 |

+

"[INFO] 0.6843: فاشلا فيأكلأ شيء نظم سيىء جمل\n",

|

| 42 |

+

"[INFO] 0.6381: جمل حدث دراماتيكية يسعني الاان اقف\n",

|

| 43 |

+

"[INFO] 0.9383: سيناريو تراجيدي\n",

|

| 44 |

+

"[INFO] 0.3568: منتسماً\n",

|

| 45 |

+

"[INFO] 0.2621: ددوا\n",

|

| 46 |

+

"[INFO] 0.7647: عمل فيأ\n",

|

| 47 |

+

"[INFO] 0.5875: فصل منامة . كلأ هتم\n",

|

| 48 |

+

"[INFO] 0.9771: كتب\n",

|

| 49 |

+

"[INFO] 0.9535: للفنانين\n",

|

| 50 |

+

"[INFO] 0.7544: أسي\n",

|

| 51 |

+

"[INFO] 0.9973: حدث\n",

|

| 52 |

+

"[INFO] 0.9826: فني\n",

|

| 53 |

+

"{'Extracted': ['بدأ فيأ حلم عرب ختم فيأ مهرج بحر حلم', 'لدعط', 'بكر يواصل سيناريو', 'عبدالرحمن دخيل تماماًكون متوقعاً ضبط', 'أتي مهرج بحر دول للأغنية صور هزيلاً', 'فاشلا فيأكلأ شيء نظم سيىء جمل', 'جمل حدث دراماتيكية يسعني الاان اقف', 'سيناريو تراجيدي', 'منتسماً', 'ددوا', 'عمل فيأ', 'فصل منامة . كلأ هتم', 'كتب', 'للفنانين', 'أسي', 'حدث', 'فني']}\n"

|

| 54 |

+

]

|

| 55 |

+

}

|

| 56 |

+

],

|

| 57 |

+

"source": [

|

| 58 |

+

"image_path='img/a.png'\n",

|

| 59 |

+

"out_image='out/out.jpg'\n",

|

| 60 |

+

"results=arabicocr.arabic_ocr(image_path,out_image)\n",

|

| 61 |

+

"# print(results)\n",

|

| 62 |

+

"words=[]\n",

|

| 63 |

+

"for i in range(len(results)):\t\n",

|

| 64 |

+

"\t\tword=results[i][1]\n",

|

| 65 |

+

"\t\t# print(word)\n",

|

| 66 |

+

"\t\twords.append(word)\n",

|

| 67 |

+

"data = {\"Extracted\":words}\n",

|

| 68 |

+

"print(data)\n",

|

| 69 |

+

"# with open ('file.txt','w',encoding='utf-8')as myfile:\n",

|

| 70 |

+

"# \t\tmyfile.write(str(words))\n",

|

| 71 |

+

"# import cv2\n",

|

| 72 |

+

"# img = cv2.imread('out/out.jpg', cv2.IMREAD_UNCHANGED)\n",

|

| 73 |

+

"# cv2.imshow(\"arabic ocr\",img)\n",

|

| 74 |

+

"# cv2.waitKey(0)"

|

| 75 |

+

]

|

| 76 |

+

},

|

| 77 |

+

{

|

| 78 |

+

"cell_type": "code",

|

| 79 |

+

"execution_count": null,

|

| 80 |

+

"metadata": {},

|

| 81 |

+

"outputs": [],

|

| 82 |

+

"source": []

|

| 83 |

+

}

|

| 84 |

+

],

|

| 85 |

+

"metadata": {

|

| 86 |

+

"kernelspec": {

|

| 87 |

+

"display_name": "arabic",

|

| 88 |

+

"language": "python",

|

| 89 |

+

"name": "python3"

|

| 90 |

+

},

|

| 91 |

+

"language_info": {

|

| 92 |

+

"codemirror_mode": {

|

| 93 |

+

"name": "ipython",

|

| 94 |

+

"version": 3

|

| 95 |

+

},

|

| 96 |

+

"file_extension": ".py",

|

| 97 |

+

"mimetype": "text/x-python",

|

| 98 |

+

"name": "python",

|

| 99 |

+

"nbconvert_exporter": "python",

|

| 100 |

+

"pygments_lexer": "ipython3",

|

| 101 |

+

"version": "3.12.2"

|

| 102 |

+

}

|

| 103 |

+

},

|

| 104 |

+

"nbformat": 4,

|

| 105 |

+

"nbformat_minor": 2

|

| 106 |

+

}

|