---

language: en

datasets:

- owaiskha9654/PubMed_MultiLabel_Text_Classification_Dataset_MeSH

widget:

- text: "('A case of a patient with type 1 neurofibromatosis associated with popliteal and coronary artery aneurysms is described in which cross-sectional', 'imaging provided diagnostic information.', 'The aim of this study was to compare the exercise intensity and competition load during Time Trial (TT), Flat (FL), Medium Mountain (MM) and High ', 'Mountain (HM) stages based heart rate (HR) and session rating of perceived exertion (RPE).METHODS: We monitored both HR and RPE of 12 professional ', 'cyclists during two consecutive 21-day cycling races in order to analyze the exercise intensity and competition load (TRIMPHR and TRIMPRPE).', 'RESULTS:The highest (P<0.05) mean HR was found in TT (169±2 bpm) versus those observed in FL (135±1 bpm), MM (139±3 bpm), HM (143±1 bpm)')"

- text: "('The association of body mass index (BMI) with blood pressure may be stronger in Asian than non-Asian populations, however, longitudinal studies ', 'with direct comparisons between ethnicities are lacking. We compared the relationship of BMI with incident hypertension over approximately 9.5 years', ' of follow-up in young (24-39 years) and middle-aged (45-64 years) Chinese Asians (n=5354), American Blacks (n=6076) and American Whites (n=13451).', 'We estimated risk differences using logistic regression models and calculated adjusted incidences and incidence differences. ', 'To facilitate comparisons across ethnicities, standardized estimates were calculated using mean covariate values for age, sex, smoking, education', 'and field center, and included the quadratic terms for BMI and age. Weighted least-squares regression models with were constructed to summarize', 'ethnic-specific incidence differences across BMI. Wald statistics and p-values were calculated based on chi-square distributions. The association of', 'BMI with the incidence difference for hypertension was steeper in Chinese (p<0.05) than in American populations during young and middle-adulthood.', 'For example, at a BMI of 25 vs 21 kg/m2 the adjusted incidence differences per 1000 persons (95% CI) in young adults with a BMI of 25 vs those with', 'a BMI of 21 was 83 (36- 130) for Chinese, 50 (26-74) for Blacks and 30 (12-48) for Whites')"

---

# Multi-Label-Classification-of-Pubmed-Articles

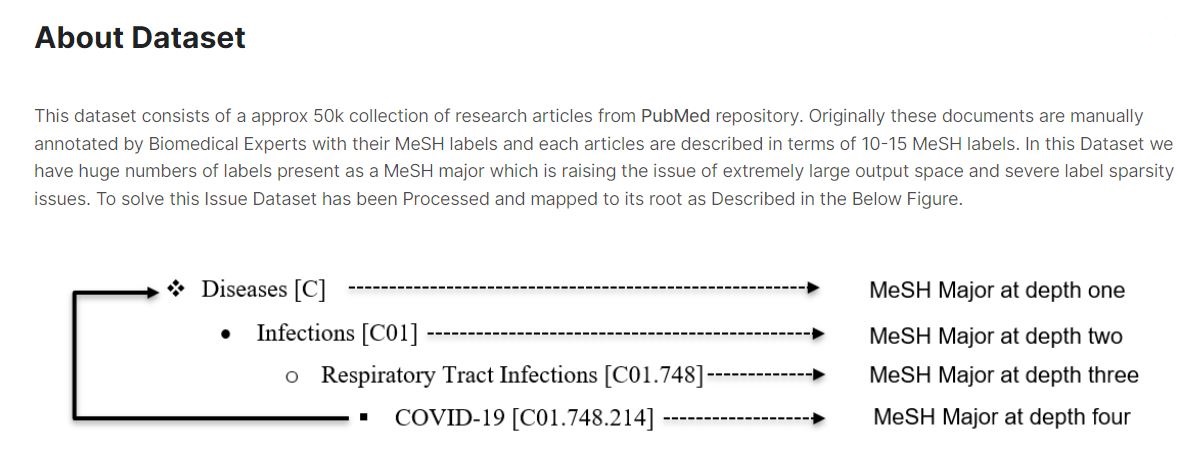

Traditional machine learning models struggle when there is not enough labeled data for the specific task or domain to train a reliable model. Transfer learning addresses this by leveraging existing labeled data from a related task or domain: the knowledge gained while solving the source task in the source domain is stored and applied to the problem of interest. In this work, I used transfer learning to fine-tune the **BioBERT** model on the PubMed multi-label classification dataset.

I also tried fine-tuning **RobertaForSequenceClassification** and **XLNetForSequenceClassification** models on the PubMed multi-label dataset.
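
As a rough illustration of what "multi-label" means for the model head, the sketch below treats every label as an independent yes/no decision: each logit goes through a sigmoid, and the loss is the mean binary cross-entropy over all (example, label) pairs, which is what `torch.nn.BCEWithLogitsLoss` (listed in the references) computes with its default mean reduction. It uses plain Python instead of PyTorch so it runs anywhere. The logits, targets, and the 0.5 threshold are made-up values for illustration, not numbers from the notebook.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def bce_with_logits(logits, targets):
    """Mean binary cross-entropy over every (example, label) pair."""
    total, count = 0.0, 0
    for row_logits, row_targets in zip(logits, targets):
        for z, y in zip(row_logits, row_targets):
            # Stable form of -[y*log(s) + (1-y)*log(1-s)] with s = sigmoid(z).
            total += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
            count += 1
    return total / count


def predict_labels(logits, threshold=0.5):
    """Turn per-label logits into 0/1 predictions, one decision per label."""
    return [[1 if sigmoid(z) >= threshold else 0 for z in row] for row in logits]


# Hypothetical batch: 2 abstracts, 3 candidate labels each.
example_logits = [[2.0, -1.0, 0.5], [-3.0, 0.0, 4.0]]
example_targets = [[1, 0, 1], [0, 0, 1]]

loss = bce_with_logits(example_logits, example_targets)
predictions = predict_labels(example_logits)
```

Unlike a softmax head, nothing forces the per-label probabilities to sum to one, so an abstract can receive several labels at once or none at all.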

> I have also integrated Weights & Biases for visualizations, artifact logging, and comparison of the different models!

> [Multi Label Classification of PubMed Articles (Paper Night Event)](https://wandb.ai/owaiskhan9515/Multi%20Label%20Classification%20of%20PubMed%20Articles%20(Paper%20Night%20Presentation))

> - To get the API key, create an account on the [website](https://wandb.ai/site).

> - Use Kaggle secrets to handle the API key more securely inside Kaggle.
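
One possible way to wire this up is sketched below. It assumes the key was saved as a Kaggle secret under the hypothetical name `wandb_api_key`; outside Kaggle, where the `kaggle_secrets` module is not available, it falls back to the `WANDB_API_KEY` environment variable. The helper names `load_wandb_key` and `start_run` are illustrative and not taken from the notebook.

```python
import os


def load_wandb_key(secret_name: str = "wandb_api_key") -> str:
    """Return the W&B API key from Kaggle secrets, else from the environment."""
    try:
        # This module only exists inside Kaggle notebooks.
        from kaggle_secrets import UserSecretsClient
    except ImportError:
        # Outside Kaggle: fall back to the environment variable wandb also reads.
        key = os.environ.get("WANDB_API_KEY")
        if not key:
            raise RuntimeError("No W&B API key found in the environment")
        return key
    # secret_name is a hypothetical label; use whatever name the secret was saved under.
    return UserSecretsClient().get_secret(secret_name)


def start_run(project: str):
    """Log in and open a W&B run (wandb is imported lazily so the helper above has no hard dependency on it)."""
    import wandb

    wandb.login(key=load_wandb_key())
    return wandb.init(project=project)
```

Keeping the key in a secret or environment variable means it never appears in the notebook source or its saved output.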

For more information on the attributes, see the Kaggle dataset description [here](https://www.kaggle.com/datasets/owaiskhan9654/pubmed-multilabel-text-classification).

### To get a full grasp of the steps I have taken to use this dataset, have a look at the Kaggle Notebook [Link](https://www.kaggle.com/code/owaiskhan9654/multi-label-classification-of-pubmed-articles) and the Kaggle version of the same dataset [Link](https://www.kaggle.com/datasets/owaiskhan9654/pubmed-multilabel-text-classification)

## References

1. [Attention Is All You Need](https://arxiv.org/abs/1706.03762)

2. [BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding](https://arxiv.org/abs/1810.04805)

3. [google-research/bert](https://github.com/google-research/bert)

4. [huggingface/transformers](https://github.com/huggingface/transformers)

5. [BCEWithLogitsLoss (PyTorch documentation)](https://pytorch.org/docs/stable/generated/torch.nn.BCEWithLogitsLoss.html#torch.nn.BCEWithLogitsLoss)

6. [Transformers for Multi-Label Classification made simple by Ronak Patel](https://towardsdatascience.com/transformers-for-multilabel-classification-71a1a0daf5e1)
