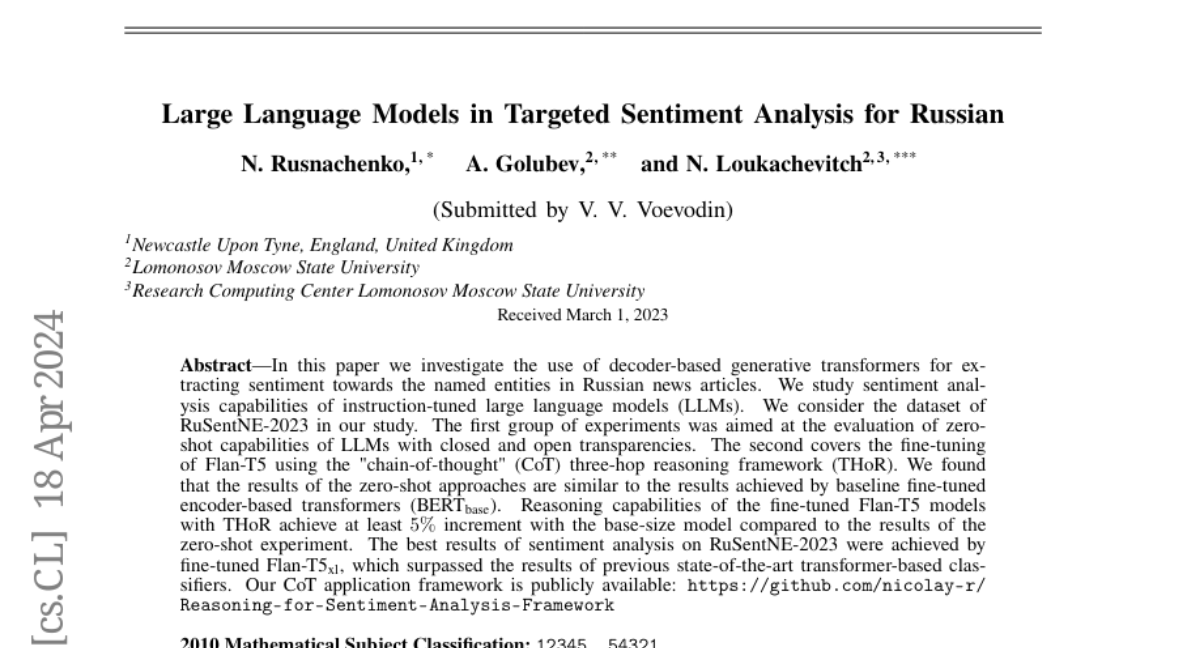

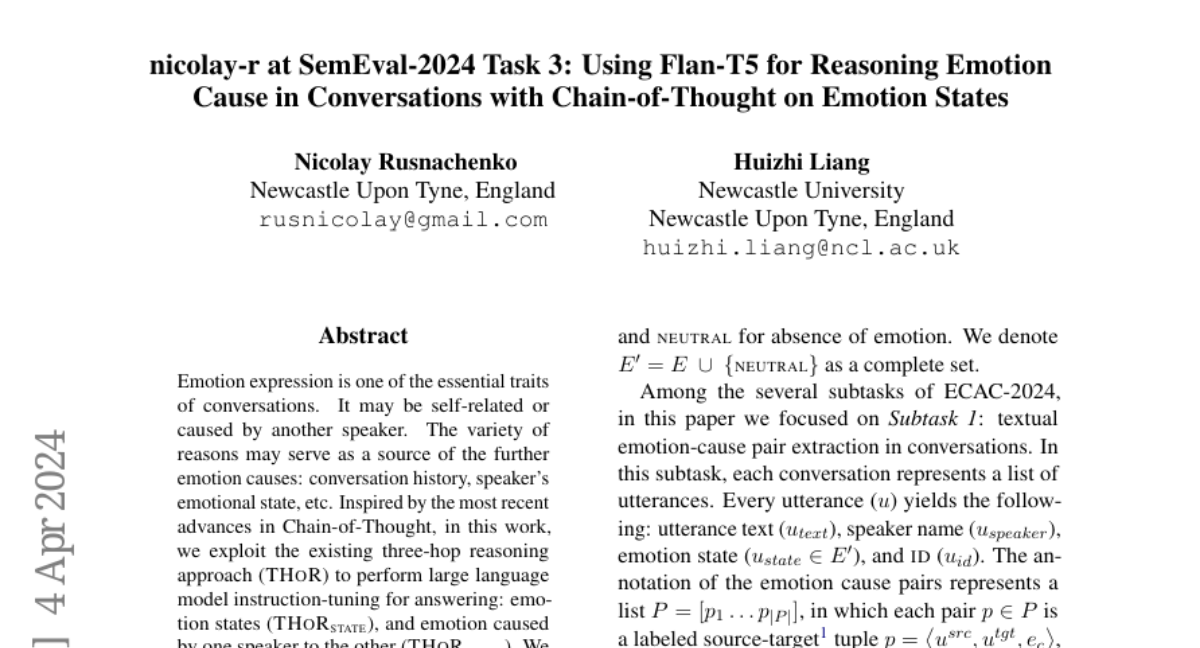

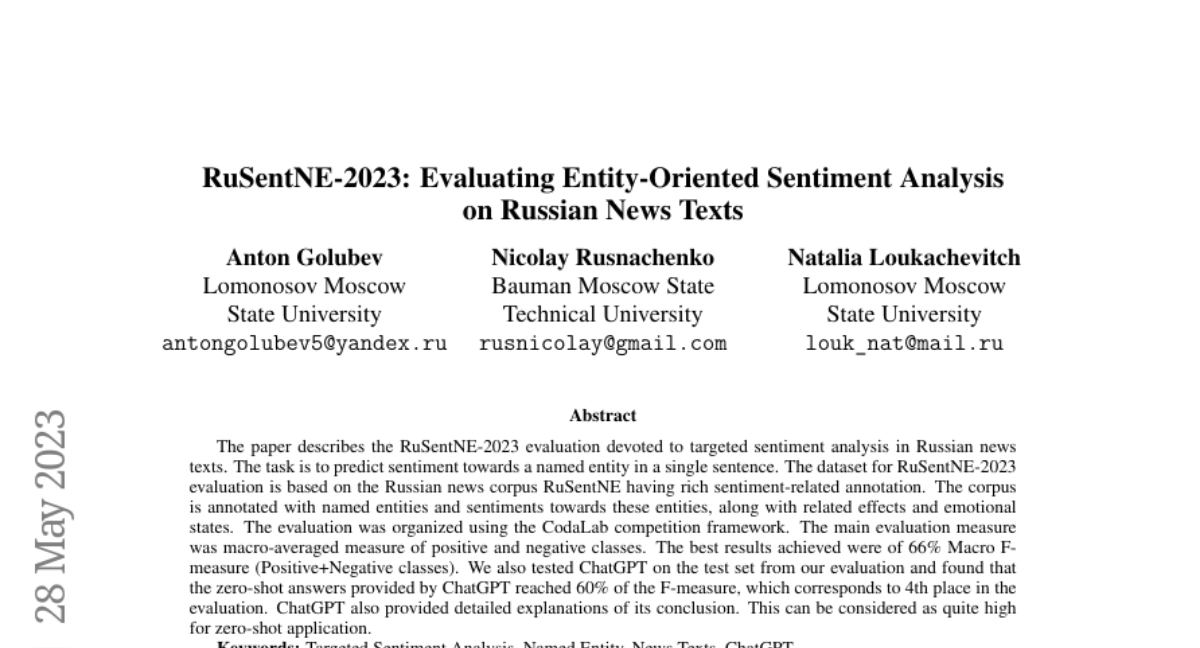

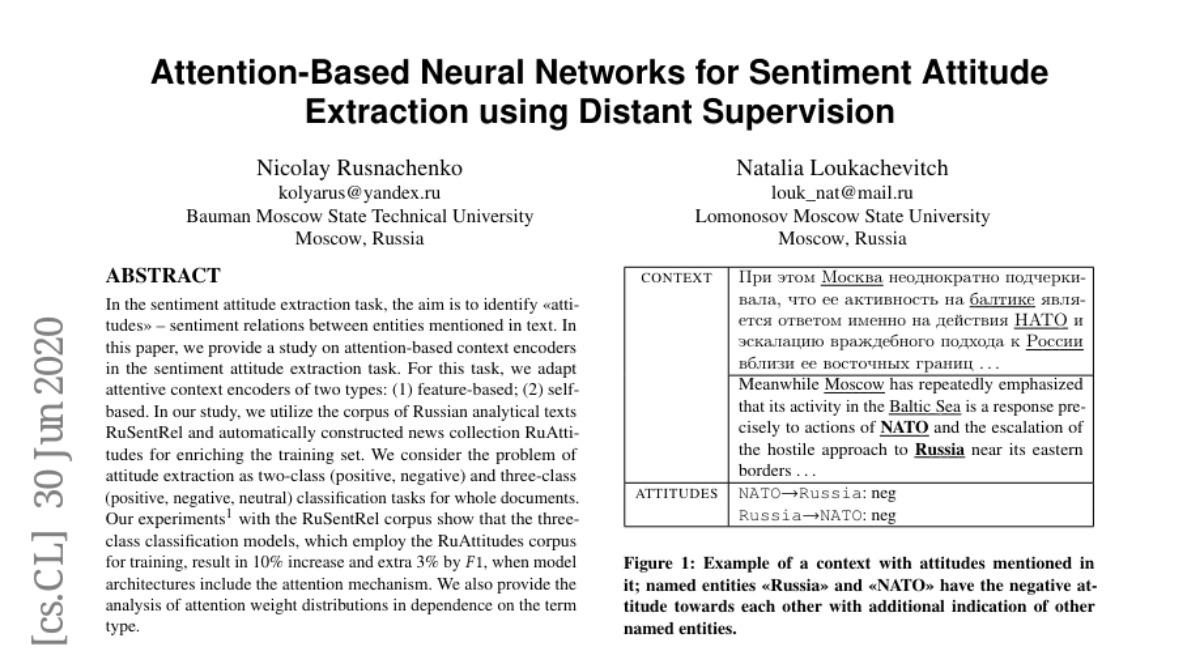

📢 Inspired by effective LLM usage, I'm delighted to share an approach that might boost your reasoning process 🧠 Here is a demo of interactively launching a Chain-of-Thought (CoT) schema in bash, with optional predefined parameters supplied as input files. The demo applies it to extracting the author's sentiment towards an object in a text.

This is part of the most recent release of bulk-chain, 0.25.0.

⭐ https://github.com/nicolay-r/bulk-chain/releases/tag/0.25.1

How it works: it launches your CoT and asks for any missing parameters when necessary. For each step of the chain, you see the input prompt and the streamed output of your LLM.
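To make the flow concrete, here is a minimal Python sketch of such a loop. It is not bulk-chain's actual code: the step layout (a `prompt` template plus an `out` name) and the helpers `missing_fields` and `run_chain` are assumptions made for illustration, and `llm` stands for any callable that yields text chunks.

```python
import string


def missing_fields(prompt, known):
    """Return placeholder names used in `prompt` that have no value in `known`."""
    names = [field for _, field, _, _ in string.Formatter().parse(prompt) if field]
    # Keep first-seen order and drop repeated placeholders.
    return [name for name in dict.fromkeys(names) if name not in known]


def run_chain(chain, params, llm, ask=input):
    """Walk the chain, asking for missing parameters and streaming each answer.

    chain  -- list of steps, each {"prompt": template, "out": result name} (hypothetical layout)
    params -- parameters known in advance
    llm    -- callable taking a prompt and yielding text chunks (stand-in for a real model)
    ask    -- callable used to request a missing parameter from the user
    """
    known = dict(params)
    for step in chain:
        for name in missing_fields(step["prompt"], known):
            known[name] = ask(f"{name}: ")
        prompt = step["prompt"].format(**known)
        print(prompt)
        chunks = []
        for chunk in llm(prompt):
            print(chunk, end="", flush=True)  # show the output as it streams in
            chunks.append(chunk)
        print()
        # Later steps can reference this result by its "out" name.
        known[step["out"]] = "".join(chunks)
    return known
```

Each step's output is stored under its `out` name, so a later prompt can reuse it the same way it uses a user-supplied parameter.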

To fix certain parameters in advance, you can pass them via --src:

- TXT files (using filename as a parameter name)

- JSON dictionaries (for multiple parameters at once)
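The two input conventions above could be read roughly like this. This is a sketch under assumptions, not the library's implementation: `load_params` is a hypothetical helper, and the choice to strip whitespace from TXT content and to reject other extensions is mine.

```python
import json
from pathlib import Path


def load_params(paths):
    """Collect predefined parameters from TXT and JSON files (hypothetical helper)."""
    params = {}
    for path in map(Path, paths):
        suffix = path.suffix.lower()
        if suffix == ".txt":
            # The file name (without extension) acts as the parameter name.
            params[path.stem] = path.read_text(encoding="utf-8").strip()
        elif suffix == ".json":
            # A JSON dictionary can define several parameters at once.
            data = json.loads(path.read_text(encoding="utf-8"))
            if not isinstance(data, dict):
                raise ValueError(f"{path} must contain a JSON object")
            params.update(data)
        else:
            raise ValueError(f"Unsupported parameter file: {path}")
    return params
```

For example, a file named `text.txt` would define the parameter `text`, while a JSON file containing `{"entity": "..."}` would define `entity`; both file names here are only illustrative.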

🤖 Model: meta-llama/Llama-3.3-70B-Instruct

🌌 Other models: https://github.com/nicolay-r/nlp-thirdgate
