update this bundle to support TensorRT convert

Browse files- README.md +24 -0

- configs/metadata.json +3 -2

- docs/README.md +24 -0

README.md

CHANGED

|

@@ -47,6 +47,23 @@ IoU was used for evaluating the performance of the model. This model achieves a

|

|

| 47 |

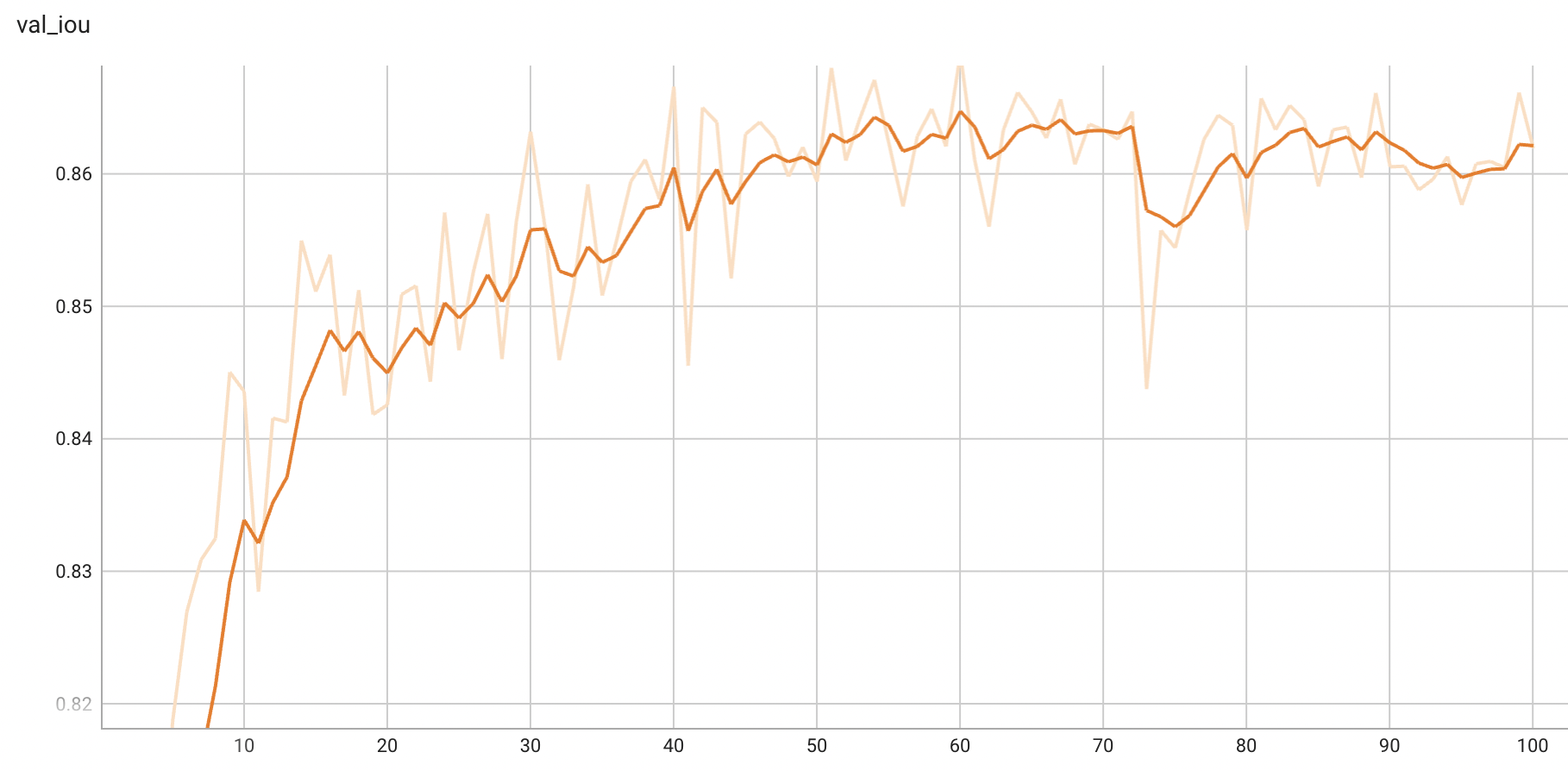

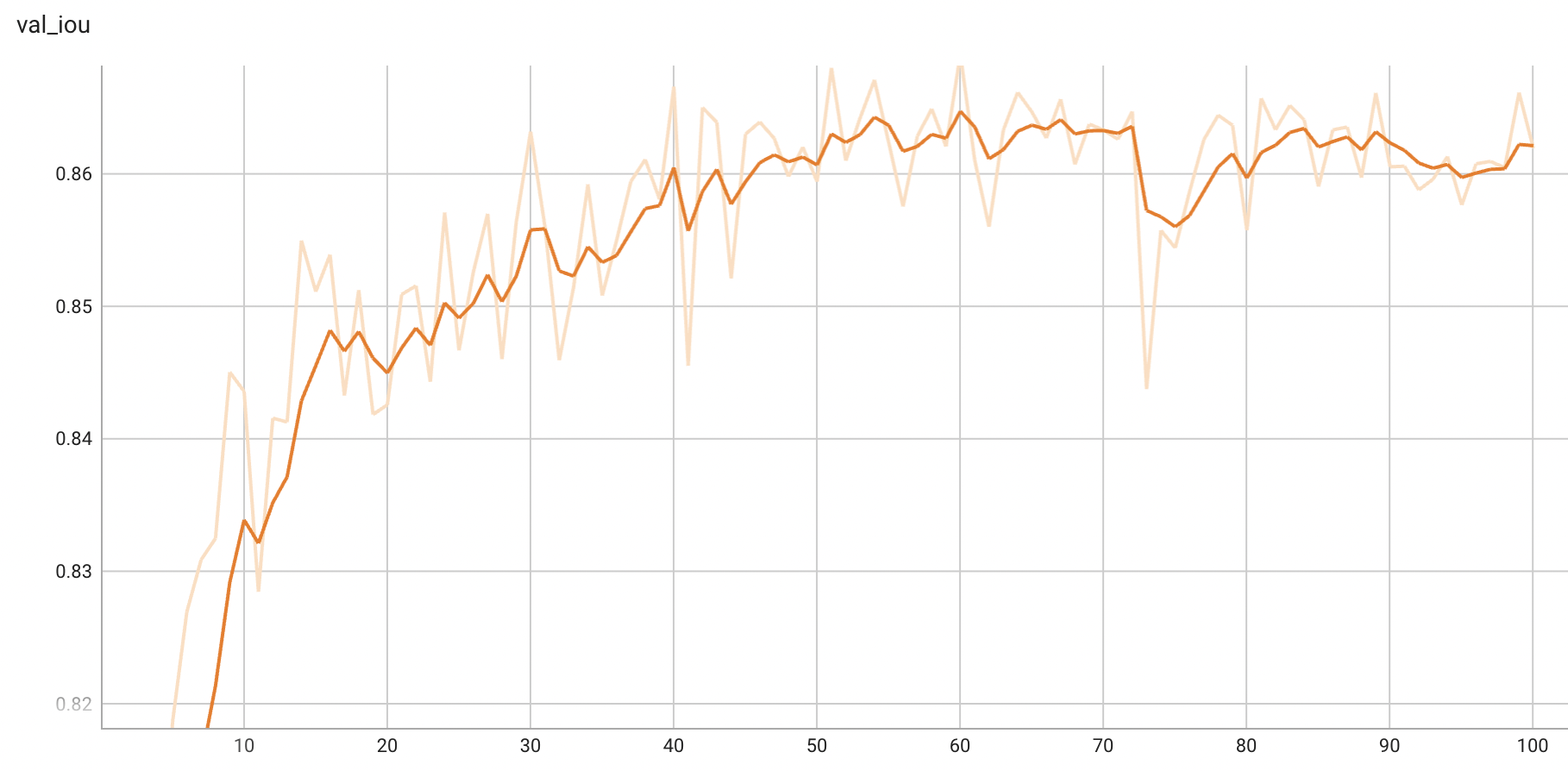

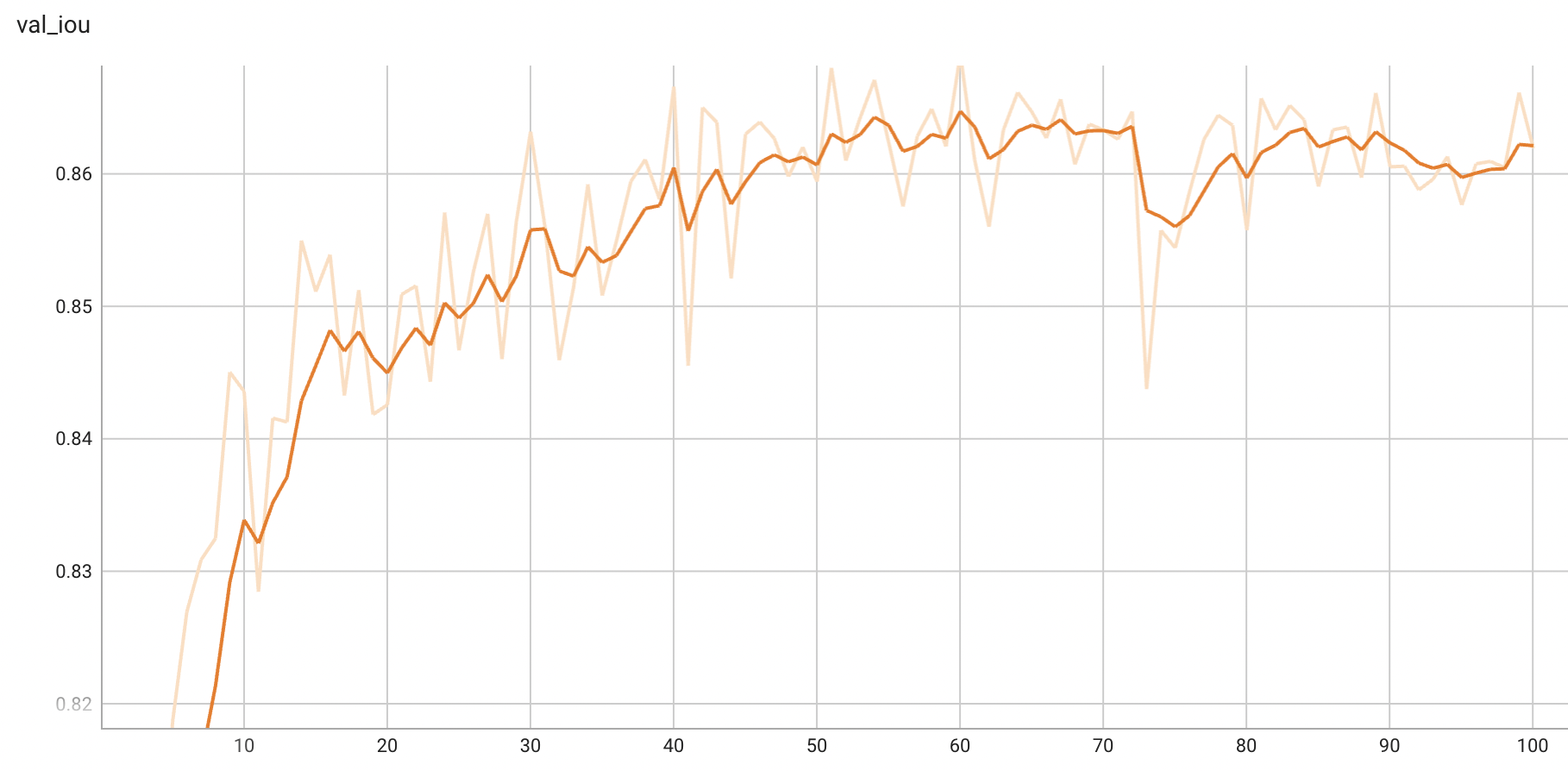

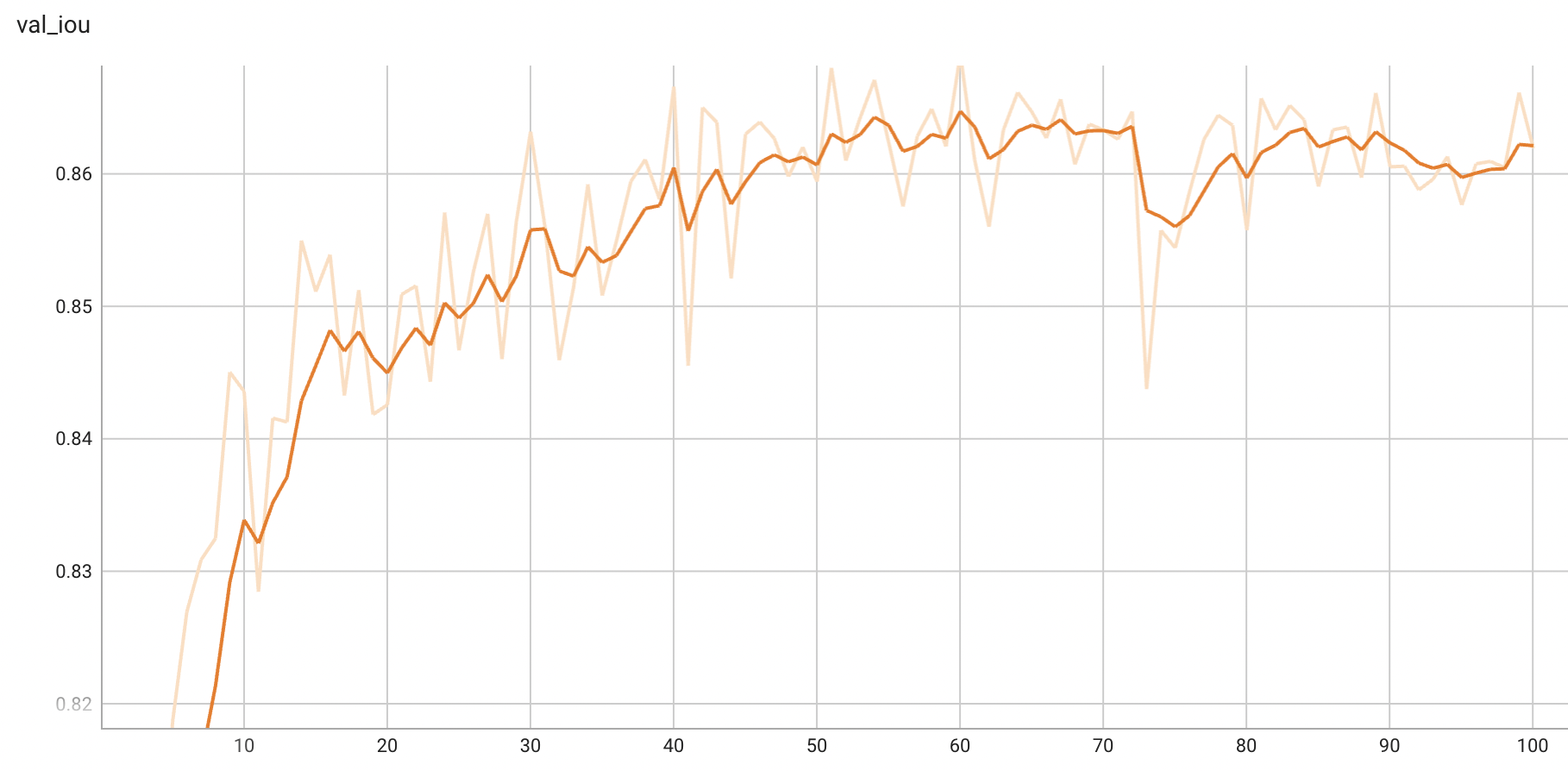

#### Validation IoU

|

| 48 |

|

| 49 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 50 |

## MONAI Bundle Commands

|

| 51 |

In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

|

| 52 |

|

|

@@ -76,6 +93,7 @@ python -m monai.bundle run evaluating --meta_file configs/metadata.json --config

|

|

| 76 |

|

| 77 |

```

|

| 78 |

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file "['configs/train.json','configs/evaluate.json','configs/multi_gpu_evaluate.json']" --logging_file configs/logging.conf

|

|

|

|

| 79 |

|

| 80 |

#### Execute inference:

|

| 81 |

|

|

@@ -89,6 +107,12 @@ python -m monai.bundle run evaluating --meta_file configs/metadata.json --config

|

|

| 89 |

python -m monai.bundle ckpt_export network_def --filepath models/model.ts --ckpt_file models/model.pt --meta_file configs/metadata.json --config_file configs/inference.json

|

| 90 |

```

|

| 91 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 92 |

# References

|

| 93 |

[1] Tan, M. and Le, Q. V. Efficientnet: Rethinking model scaling for convolutional neural networks. ICML, 2019a. https://arxiv.org/pdf/1905.11946.pdf

|

| 94 |

|

|

|

|

| 47 |

#### Validation IoU

|

| 48 |

|

| 49 |

|

| 50 |

+

#### TensorRT speedup

|

| 51 |

+

The `endoscopic_tool_segmentation` bundle supports the TensorRT acceleration. The table below shows the speedup ratios benchmarked on an A100 80G GPU, in which the `model computation` means the speedup ratio of model's inference with a random input without preprocessing and postprocessing and the `end2end` means run the bundle end to end with the TensorRT based model. The `torch_fp32` and `torch_amp` is for the pytorch model with or without `amp` mode. The `trt_fp32` and `trt_fp16` is for the TensorRT based model converted in corresponding precision. The `speedup amp`, `speedup fp32` and `speedup fp16` is the speedup ratio of corresponding models versus the pytorch float32 model, while the `amp vs fp16` is between the pytorch amp model and the TensorRT float16 based model.

|

| 52 |

+

|

| 53 |

+

| method | torch_fp32(ms) | torch_amp(ms) | trt_fp32(ms) | trt_fp16(ms) | speedup amp | speedup fp32 | speedup fp16 | amp vs fp16|

|

| 54 |

+

| :---: | :---: | :---: | :---: | :---: | :---: | :---: | :---: | :---: |

|

| 55 |

+

| model computation | 12.00 | 14.06 | 6.59 | 5.20 | 0.85 | 1.82 | 2.31 | 2.70 |

|

| 56 |

+

| end2end |170.04 | 172.20 | 155.26 | 155.57 | 0.99 | 1.10 | 1.09 | 1.11 |

|

| 57 |

+

|

| 58 |

+

This result is benchmarked under:

|

| 59 |

+

- TensorRT: 8.5.3+cuda11.8

|

| 60 |

+

- Torch-TensorRT Version: 1.4.0

|

| 61 |

+

- CPU Architecture: x86-64

|

| 62 |

+

- OS: ubuntu 20.04

|

| 63 |

+

- Python version:3.8.10

|

| 64 |

+

- CUDA version: 11.8

|

| 65 |

+

- GPU models and configuration: A100 80G

|

| 66 |

+

|

| 67 |

## MONAI Bundle Commands

|

| 68 |

In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

|

| 69 |

|

|

|

|

| 93 |

|

| 94 |

```

|

| 95 |

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file "['configs/train.json','configs/evaluate.json','configs/multi_gpu_evaluate.json']" --logging_file configs/logging.conf

|

| 96 |

+

```

|

| 97 |

|

| 98 |

#### Execute inference:

|

| 99 |

|

|

|

|

| 107 |

python -m monai.bundle ckpt_export network_def --filepath models/model.ts --ckpt_file models/model.pt --meta_file configs/metadata.json --config_file configs/inference.json

|

| 108 |

```

|

| 109 |

|

| 110 |

+

#### Export checkpoint to TensorRT based models with fp32 or fp16 precision:

|

| 111 |

+

|

| 112 |

+

```

|

| 113 |

+

python -m monai.bundle trt_export --net_id network_def --filepath models/model_trt.ts --ckpt_file models/model.pt --meta_file configs/metadata.json --config_file configs/inference.json --precision <fp32/fp16>

|

| 114 |

+

```

|

| 115 |

+

|

| 116 |

# References

|

| 117 |

[1] Tan, M. and Le, Q. V. Efficientnet: Rethinking model scaling for convolutional neural networks. ICML, 2019a. https://arxiv.org/pdf/1905.11946.pdf

|

| 118 |

|

configs/metadata.json

CHANGED

|

@@ -1,7 +1,8 @@

|

|

| 1 |

{

|

| 2 |

"schema": "https://github.com/Project-MONAI/MONAI-extra-test-data/releases/download/0.8.1/meta_schema_20220324.json",

|

| 3 |

-

"version": "0.4.

|

| 4 |

"changelog": {

|

|

|

|

| 5 |

"0.4.2": "support monai 1.2 new FlexibleUNet",

|

| 6 |

"0.4.1": "add name tag",

|

| 7 |

"0.4.0": "add support for multi-GPU training and evaluation",

|

|

@@ -13,7 +14,7 @@

|

|

| 13 |

"0.1.0": "complete the first version model package",

|

| 14 |

"0.0.1": "initialize the model package structure"

|

| 15 |

},

|

| 16 |

-

"monai_version": "1.2.

|

| 17 |

"pytorch_version": "1.13.0",

|

| 18 |

"numpy_version": "1.22.4",

|

| 19 |

"optional_packages_version": {

|

|

|

|

| 1 |

{

|

| 2 |

"schema": "https://github.com/Project-MONAI/MONAI-extra-test-data/releases/download/0.8.1/meta_schema_20220324.json",

|

| 3 |

+

"version": "0.4.3",

|

| 4 |

"changelog": {

|

| 5 |

+

"0.4.3": "update this bundle to support TensorRT convert",

|

| 6 |

"0.4.2": "support monai 1.2 new FlexibleUNet",

|

| 7 |

"0.4.1": "add name tag",

|

| 8 |

"0.4.0": "add support for multi-GPU training and evaluation",

|

|

|

|

| 14 |

"0.1.0": "complete the first version model package",

|

| 15 |

"0.0.1": "initialize the model package structure"

|

| 16 |

},

|

| 17 |

+

"monai_version": "1.2.0rc3",

|

| 18 |

"pytorch_version": "1.13.0",

|

| 19 |

"numpy_version": "1.22.4",

|

| 20 |

"optional_packages_version": {

|

docs/README.md

CHANGED

|

@@ -40,6 +40,23 @@ IoU was used for evaluating the performance of the model. This model achieves a

|

|

| 40 |

#### Validation IoU

|

| 41 |

|

| 42 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 43 |

## MONAI Bundle Commands

|

| 44 |

In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

|

| 45 |

|

|

@@ -69,6 +86,7 @@ python -m monai.bundle run evaluating --meta_file configs/metadata.json --config

|

|

| 69 |

|

| 70 |

```

|

| 71 |

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file "['configs/train.json','configs/evaluate.json','configs/multi_gpu_evaluate.json']" --logging_file configs/logging.conf

|

|

|

|

| 72 |

|

| 73 |

#### Execute inference:

|

| 74 |

|

|

@@ -82,6 +100,12 @@ python -m monai.bundle run evaluating --meta_file configs/metadata.json --config

|

|

| 82 |

python -m monai.bundle ckpt_export network_def --filepath models/model.ts --ckpt_file models/model.pt --meta_file configs/metadata.json --config_file configs/inference.json

|

| 83 |

```

|

| 84 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 85 |

# References

|

| 86 |

[1] Tan, M. and Le, Q. V. Efficientnet: Rethinking model scaling for convolutional neural networks. ICML, 2019a. https://arxiv.org/pdf/1905.11946.pdf

|

| 87 |

|

|

|

|

| 40 |

#### Validation IoU

|

| 41 |

|

| 42 |

|

| 43 |

+

#### TensorRT speedup

|

| 44 |

+

The `endoscopic_tool_segmentation` bundle supports the TensorRT acceleration. The table below shows the speedup ratios benchmarked on an A100 80G GPU, in which the `model computation` means the speedup ratio of model's inference with a random input without preprocessing and postprocessing and the `end2end` means run the bundle end to end with the TensorRT based model. The `torch_fp32` and `torch_amp` is for the pytorch model with or without `amp` mode. The `trt_fp32` and `trt_fp16` is for the TensorRT based model converted in corresponding precision. The `speedup amp`, `speedup fp32` and `speedup fp16` is the speedup ratio of corresponding models versus the pytorch float32 model, while the `amp vs fp16` is between the pytorch amp model and the TensorRT float16 based model.

|

| 45 |

+

|

| 46 |

+

| method | torch_fp32(ms) | torch_amp(ms) | trt_fp32(ms) | trt_fp16(ms) | speedup amp | speedup fp32 | speedup fp16 | amp vs fp16|

|

| 47 |

+

| :---: | :---: | :---: | :---: | :---: | :---: | :---: | :---: | :---: |

|

| 48 |

+

| model computation | 12.00 | 14.06 | 6.59 | 5.20 | 0.85 | 1.82 | 2.31 | 2.70 |

|

| 49 |

+

| end2end |170.04 | 172.20 | 155.26 | 155.57 | 0.99 | 1.10 | 1.09 | 1.11 |

|

| 50 |

+

|

| 51 |

+

This result is benchmarked under:

|

| 52 |

+

- TensorRT: 8.5.3+cuda11.8

|

| 53 |

+

- Torch-TensorRT Version: 1.4.0

|

| 54 |

+

- CPU Architecture: x86-64

|

| 55 |

+

- OS: ubuntu 20.04

|

| 56 |

+

- Python version:3.8.10

|

| 57 |

+

- CUDA version: 11.8

|

| 58 |

+

- GPU models and configuration: A100 80G

|

| 59 |

+

|

| 60 |

## MONAI Bundle Commands

|

| 61 |

In addition to the Pythonic APIs, a few command line interfaces (CLI) are provided to interact with the bundle. The CLI supports flexible use cases, such as overriding configs at runtime and predefining arguments in a file.

|

| 62 |

|

|

|

|

| 86 |

|

| 87 |

```

|

| 88 |

torchrun --standalone --nnodes=1 --nproc_per_node=2 -m monai.bundle run evaluating --meta_file configs/metadata.json --config_file "['configs/train.json','configs/evaluate.json','configs/multi_gpu_evaluate.json']" --logging_file configs/logging.conf

|

| 89 |

+

```

|

| 90 |

|

| 91 |

#### Execute inference:

|

| 92 |

|

|

|

|

| 100 |

python -m monai.bundle ckpt_export network_def --filepath models/model.ts --ckpt_file models/model.pt --meta_file configs/metadata.json --config_file configs/inference.json

|

| 101 |

```

|

| 102 |

|

| 103 |

+

#### Export checkpoint to TensorRT based models with fp32 or fp16 precision:

|

| 104 |

+

|

| 105 |

+

```

|

| 106 |

+

python -m monai.bundle trt_export --net_id network_def --filepath models/model_trt.ts --ckpt_file models/model.pt --meta_file configs/metadata.json --config_file configs/inference.json --precision <fp32/fp16>

|

| 107 |

+

```

|

| 108 |

+

|

| 109 |

# References

|

| 110 |

[1] Tan, M. and Le, Q. V. Efficientnet: Rethinking model scaling for convolutional neural networks. ICML, 2019a. https://arxiv.org/pdf/1905.11946.pdf

|

| 111 |

|