Upload folder using huggingface_hub

- .gitattributes +2 -0

- README.md +108 -0

- image-0.png +3 -0

- image-1.png +0 -0

- image-10.png +0 -0

- image-2.png +0 -0

- image-3.png +3 -0

- image-4.png +0 -0

- image-5.png +0 -0

- image-6.png +0 -0

- image-7.png +0 -0

- image-8.png +0 -0

- image-9.png +0 -0

- logs/dreambooth-lora-sd-xl/1730932735.1719925/events.out.tfevents.1730932735.r-lfischbe-autotrain-m3d3quip-e8lv8cd9-f4de4-hnknu.212.1 +3 -0

- logs/dreambooth-lora-sd-xl/1730932735.1734807/hparams.yml +75 -0

- logs/dreambooth-lora-sd-xl/events.out.tfevents.1730932735.r-lfischbe-autotrain-m3d3quip-e8lv8cd9-f4de4-hnknu.212.0 +3 -0

- m3d3quip.safetensors +3 -0

- m3d3quip_emb.safetensors +3 -0

- pytorch_lora_weights.safetensors +3 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

image-0.png filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

image-3.png filter=lfs diff=lfs merge=lfs -text

|
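
The two added `.gitattributes` lines route `image-0.png` and `image-3.png` through Git LFS, which is consistent with the file list showing `+3 -0` (a three-line pointer file) for exactly those two images. The sketch below approximates that routing with Python's `fnmatch`. It is an illustration only: real gitattributes matching has more rules than `fnmatch`, the pattern list covers only the lines visible in this hunk, and the helper name `tracked_by_lfs` is hypothetical.

```python
from fnmatch import fnmatch
from pathlib import PurePosixPath

# Only the LFS patterns visible in this hunk; the full .gitattributes has more.
LFS_PATTERNS = ["*.zip", "*.zst", "*tfevents*", "image-0.png", "image-3.png"]


def tracked_by_lfs(path: str, patterns=LFS_PATTERNS) -> bool:
    """Approximate check: test slash-free patterns against the file name only."""
    name = PurePosixPath(path).name
    return any(fnmatch(name, pattern) for pattern in patterns)


for candidate in ["image-0.png", "image-1.png", "README.md"]:
    print(candidate, tracked_by_lfs(candidate))
```

Under these assumptions, `image-1.png` is not matched by any of the listed patterns, so it would be committed as a regular binary file.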

README.md

ADDED

|

@@ -0,0 +1,108 @@

| 1 |

+

---

|

| 2 |

+

tags:

|

| 3 |

+

- stable-diffusion-xl

|

| 4 |

+

- stable-diffusion-xl-diffusers

|

| 5 |

+

- text-to-image

|

| 6 |

+

- diffusers

|

| 7 |

+

- lora

|

| 8 |

+

- template:sd-lora

|

| 9 |

+

widget:

|

| 10 |

+

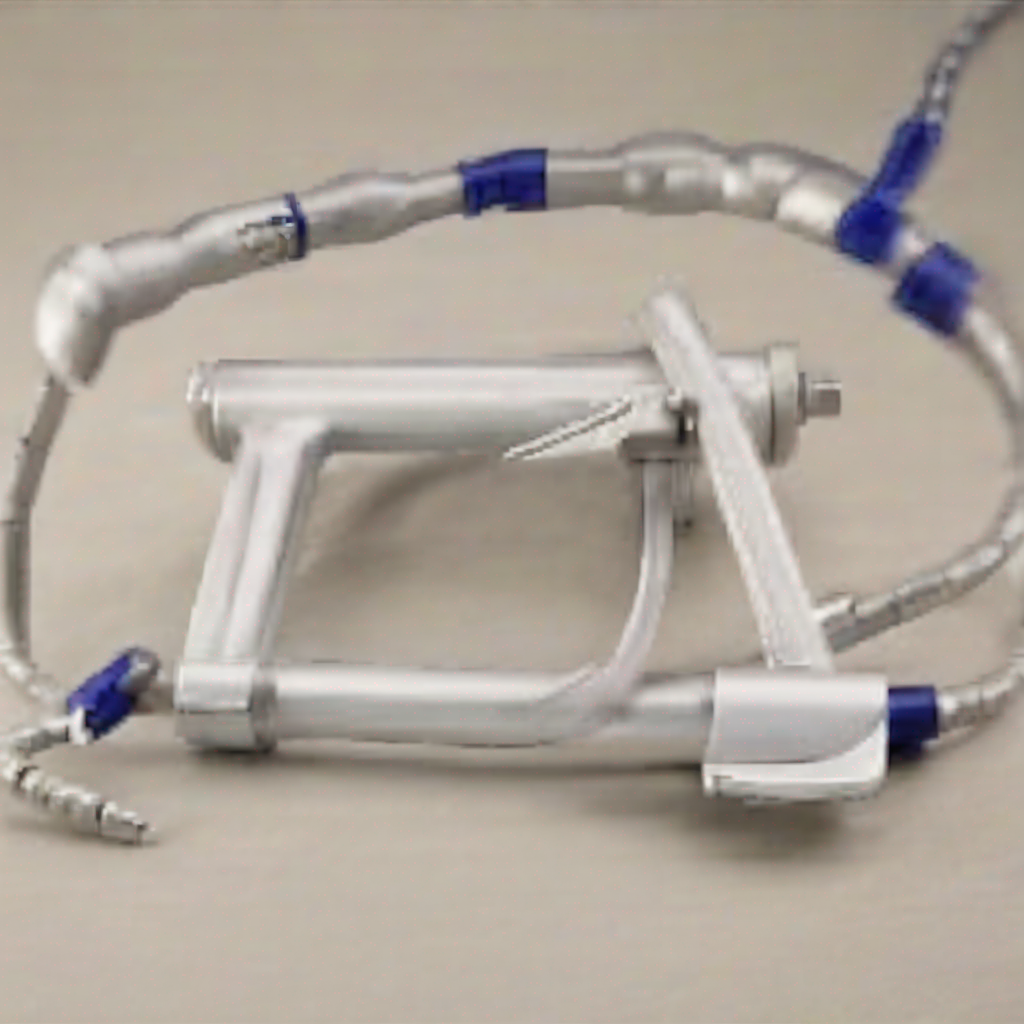

- text: A product photo of <s0><s1> a metal clamp with a hook attached to it

|

| 11 |

+

output:

|

| 12 |

+

url: image-0.png

|

| 13 |

+

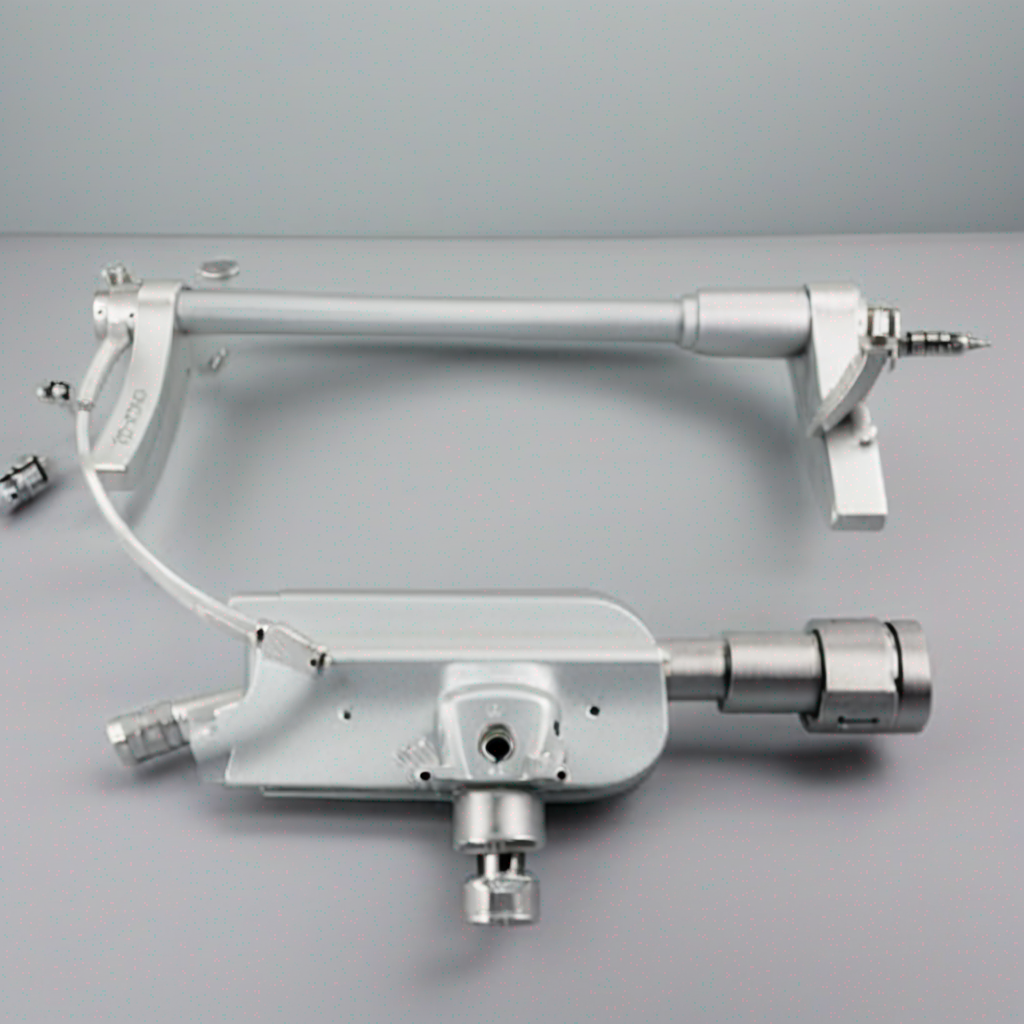

- text: A product photo of <s0><s1> a metal frame with a camera attached to it

|

| 14 |

+

output:

|

| 15 |

+

url: image-1.png

|

| 16 |

+

- text: A product photo of <s0><s1> a pair of metal bars with two handles

|

| 17 |

+

output:

|

| 18 |

+

url: image-2.png

|

| 19 |

+

- text: A product photo of <s0><s1> a green cover is on a bed in a hospital

|

| 20 |

+

output:

|

| 21 |

+

url: image-3.png

|

| 22 |

+

- text: A product photo of <s0><s1> two different images of a camera tripod and a

|

| 23 |

+

camera

|

| 24 |

+

output:

|

| 25 |

+

url: image-4.png

|

| 26 |

+

- text: A product photo of <s0><s1> a mannequin with a camera attached to it

|

| 27 |

+

output:

|

| 28 |

+

url: image-5.png

|

| 29 |

+

- text: A product photo of <s0><s1> a mannequin with a microphone attached to it

|

| 30 |

+

output:

|

| 31 |

+

url: image-6.png

|

| 32 |

+

- text: A product photo of <s0><s1> a metal pipe clamp with a handle

|

| 33 |

+

output:

|

| 34 |

+

url: image-7.png

|

| 35 |

+

- text: A product photo of <s0><s1> a white metal tripod with two arms

|

| 36 |

+

output:

|

| 37 |

+

url: image-8.png

|

| 38 |

+

- text: A product photo of <s0><s1> a metal pipe with a hose attached to it

|

| 39 |

+

output:

|

| 40 |

+

url: image-9.png

|

| 41 |

+

- text: A product photo of <s0><s1> a woman sitting on a chair with a medical device

|

| 42 |

+

output:

|

| 43 |

+

url: image-10.png

|

| 44 |

+

base_model: stabilityai/stable-diffusion-xl-base-1.0

|

| 45 |

+

instance_prompt: A product photo of <s0><s1>

|

| 46 |

+

license: openrail++

|

| 47 |

+

---

|

| 48 |

+

|

| 49 |

+

# SDXL LoRA DreamBooth - lfischbe/m3d3quip

|

| 50 |

+

|

| 51 |

+

<Gallery />

|

| 52 |

+

|

| 53 |

+

## Model description

|

| 54 |

+

|

| 55 |

+

### These are lfischbe/m3d3quip LoRA adaptation weights for stabilityai/stable-diffusion-xl-base-1.0.

|

| 56 |

+

|

| 57 |

+

## Download model

|

| 58 |

+

|

| 59 |

+

### Use it with UIs such as AUTOMATIC1111, Comfy UI, SD.Next, Invoke

|

| 60 |

+

|

| 61 |

+

- **LoRA**: download **[`m3d3quip.safetensors` here 💾](/lfischbe/m3d3quip/blob/main/m3d3quip.safetensors)**.

|

| 62 |

+

- Place it in your `models/Lora` folder.

|

| 63 |

+

- On AUTOMATIC1111, load the LoRA by adding `<lora:m3d3quip:1>` to your prompt. On ComfyUI just [load it as a regular LoRA](https://comfyanonymous.github.io/ComfyUI_examples/lora/).

|

| 64 |

+

- **Embeddings**: download **[`m3d3quip_emb.safetensors` here 💾](/lfischbe/m3d3quip/blob/main/m3d3quip_emb.safetensors)**.

|

| 65 |

+

- Place it in your `embeddings` folder.

|

| 66 |

+

- Use it by adding `m3d3quip_emb` to your prompt. For example, `A product photo of m3d3quip_emb`

|

| 67 |

+

(you need both the LoRA and the embeddings as they were trained together for this LoRA)

|

| 68 |

+

|

| 69 |

+

|

| 70 |

+

## Use it with the [🧨 diffusers library](https://github.com/huggingface/diffusers)

|

| 71 |

+

|

| 72 |

+

```py

|

| 73 |

+

from diffusers import AutoPipelineForText2Image

|

| 74 |

+

import torch

|

| 75 |

+

from huggingface_hub import hf_hub_download

|

| 76 |

+

from safetensors.torch import load_file

|

| 77 |

+

|

| 78 |

+

pipeline = AutoPipelineForText2Image.from_pretrained('stabilityai/stable-diffusion-xl-base-1.0', torch_dtype=torch.float16).to('cuda')

|

| 79 |

+

pipeline.load_lora_weights('lfischbe/m3d3quip', weight_name='pytorch_lora_weights.safetensors')

|

| 80 |

+

embedding_path = hf_hub_download(repo_id='lfischbe/m3d3quip', filename='m3d3quip_emb.safetensors', repo_type="model")

|

| 81 |

+

state_dict = load_file(embedding_path)

|

| 82 |

+

pipeline.load_textual_inversion(state_dict["clip_l"], token=["<s0>", "<s1>"], text_encoder=pipeline.text_encoder, tokenizer=pipeline.tokenizer)

|

| 83 |

+

pipeline.load_textual_inversion(state_dict["clip_g"], token=["<s0>", "<s1>"], text_encoder=pipeline.text_encoder_2, tokenizer=pipeline.tokenizer_2)

|

| 84 |

+

|

| 85 |

+

image = pipeline('A product photo of <s0><s1>').images[0]

|

| 86 |

+

```

|

| 87 |

+

|

| 88 |

+

For more details, including weighting, merging and fusing LoRAs, check the [documentation on loading LoRAs in diffusers](https://huggingface.co/docs/diffusers/main/en/using-diffusers/loading_adapters)

|

| 89 |

+

|

| 90 |

+

## Trigger words

|

| 91 |

+

|

| 92 |

+

To trigger image generation of the trained concept (or concepts), replace each concept identifier in your prompt with the newly inserted tokens:

|

| 93 |

+

|

| 94 |

+

to trigger concept `TOK` → use `<s0><s1>` in your prompt

|

| 95 |

+

|

| 96 |

+

|

| 97 |

+

|

| 98 |

+

## Details

|

| 99 |

+

All [Files & versions](/lfischbe/m3d3quip/tree/main).

|

| 100 |

+

|

| 101 |

+

The weights were trained using [🧨 diffusers Advanced Dreambooth Training Script](https://github.com/huggingface/diffusers/blob/main/examples/advanced_diffusion_training/train_dreambooth_lora_sdxl_advanced.py).

|

| 102 |

+

|

| 103 |

+

LoRA for the text encoder was enabled: False.

|

| 104 |

+

|

| 105 |

+

Pivotal tuning was enabled: True.

|

| 106 |

+

|

| 107 |

+

Special VAE used for training: madebyollin/sdxl-vae-fp16-fix.

|

| 108 |

+

|
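
The "Trigger words" section of the README above maps the concept identifier `TOK` to the inserted tokens `<s0><s1>`. A minimal sketch of that substitution follows, assuming the tokens are named `<s0>`, `<s1>`, ... in order and that `num_new_tokens_per_abstraction` is 2 as recorded in `hparams.yml`. The helper `expand_trigger` is hypothetical and not part of diffusers.

```python
def expand_trigger(prompt: str, abstraction: str = "TOK", num_new_tokens: int = 2) -> str:
    """Replace the concept identifier with the concatenated new tokens."""
    # Builds "<s0><s1>" for num_new_tokens=2 (assumed naming scheme).
    tokens = "".join(f"<s{i}>" for i in range(num_new_tokens))
    return prompt.replace(abstraction, tokens)


print(expand_trigger("A product photo of TOK on a white table"))
# prints: A product photo of <s0><s1> on a white table
```

The resulting string can be passed as the prompt once both textual inversion embeddings have been loaded into the pipeline.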

image-0.png

ADDED

|

Git LFS Details

|

image-1.png

ADDED

|

image-10.png

ADDED

|

image-2.png

ADDED

|

image-3.png

ADDED

|

Git LFS Details

|

image-4.png

ADDED

|

image-5.png

ADDED

|

image-6.png

ADDED

|

image-7.png

ADDED

|

image-8.png

ADDED

|

image-9.png

ADDED

|

logs/dreambooth-lora-sd-xl/1730932735.1719925/events.out.tfevents.1730932735.r-lfischbe-autotrain-m3d3quip-e8lv8cd9-f4de4-hnknu.212.1

ADDED

|

@@ -0,0 +1,3 @@

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:c36b016ed89ba7f08b3e239932358b7f16a2e5e7f9f3d9c0a24a505143d8b17a

|

| 3 |

+

size 3574

|
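
The three added lines above are a Git LFS pointer rather than the event file itself: a spec version, an object id, and the size in bytes of the real file. The same three-line layout recurs for every LFS-tracked file in this commit. A minimal parsing sketch, assuming the key/value layout shown here (the function name `parse_lfs_pointer` is hypothetical):

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse 'key value' lines of a Git LFS pointer into a small dict."""
    fields = dict(line.split(" ", 1) for line in text.strip().splitlines())
    # The oid has the form "<hash algorithm>:<hex digest>".
    algo, digest = fields["oid"].split(":", 1)
    return {
        "version": fields["version"],
        "algo": algo,
        "digest": digest,
        "size": int(fields["size"]),
    }


pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:c36b016ed89ba7f08b3e239932358b7f16a2e5e7f9f3d9c0a24a505143d8b17a
size 3574
"""
info = parse_lfs_pointer(pointer)
print(info["algo"], info["size"])
```

Comparing `info["size"]` and the digest against a downloaded file is one way to confirm that the object fetched from LFS matches the pointer.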

logs/dreambooth-lora-sd-xl/1730932735.1734807/hparams.yml

ADDED

|

@@ -0,0 +1,75 @@

| 1 |

+

adam_beta1: 0.9

|

| 2 |

+

adam_beta2: 0.999

|

| 3 |

+

adam_epsilon: 1.0e-08

|

| 4 |

+

adam_weight_decay: 0.0001

|

| 5 |

+

adam_weight_decay_text_encoder: null

|

| 6 |

+

allow_tf32: false

|

| 7 |

+

cache_dir: null

|

| 8 |

+

cache_latents: true

|

| 9 |

+

caption_column: prompt

|

| 10 |

+

center_crop: false

|

| 11 |

+

checkpointing_steps: 100000

|

| 12 |

+

checkpoints_total_limit: null

|

| 13 |

+

class_data_dir: null

|

| 14 |

+

class_prompt: null

|

| 15 |

+

crops_coords_top_left_h: 0

|

| 16 |

+

crops_coords_top_left_w: 0

|

| 17 |

+

dataloader_num_workers: 0

|

| 18 |

+

dataset_config_name: null

|

| 19 |

+

dataset_name: ./dfc42362-1941-4d46-bb44-1981a13b98a6

|

| 20 |

+

enable_xformers_memory_efficient_attention: false

|

| 21 |

+

gradient_accumulation_steps: 1

|

| 22 |

+

gradient_checkpointing: true

|

| 23 |

+

hub_model_id: null

|

| 24 |

+

hub_token: null

|

| 25 |

+

image_column: image

|

| 26 |

+

instance_data_dir: null

|

| 27 |

+

instance_prompt: A product photo of <s0><s1>

|

| 28 |

+

learning_rate: 1.0

|

| 29 |

+

local_rank: -1

|

| 30 |

+

logging_dir: logs

|

| 31 |

+

lr_num_cycles: 1

|

| 32 |

+

lr_power: 1.0

|

| 33 |

+

lr_scheduler: constant

|

| 34 |

+

lr_warmup_steps: 0

|

| 35 |

+

max_grad_norm: 1.0

|

| 36 |

+

max_train_steps: 550

|

| 37 |

+

mixed_precision: bf16

|

| 38 |

+

noise_offset: 0

|

| 39 |

+

num_class_images: 100

|

| 40 |

+

num_new_tokens_per_abstraction: 2

|

| 41 |

+

num_train_epochs: 50

|

| 42 |

+

num_validation_images: 4

|

| 43 |

+

optimizer: prodigy

|

| 44 |

+

output_dir: m3d3quip

|

| 45 |

+

pretrained_model_name_or_path: stabilityai/stable-diffusion-xl-base-1.0

|

| 46 |

+

pretrained_vae_model_name_or_path: madebyollin/sdxl-vae-fp16-fix

|

| 47 |

+

prior_generation_precision: null

|

| 48 |

+

prior_loss_weight: 1.0

|

| 49 |

+

prodigy_beta3: null

|

| 50 |

+

prodigy_decouple: true

|

| 51 |

+

prodigy_safeguard_warmup: true

|

| 52 |

+

prodigy_use_bias_correction: true

|

| 53 |

+

push_to_hub: false

|

| 54 |

+

rank: 16

|

| 55 |

+

repeats: 2

|

| 56 |

+

report_to: tensorboard

|

| 57 |

+

resolution: 1024

|

| 58 |

+

resume_from_checkpoint: null

|

| 59 |

+

revision: null

|

| 60 |

+

sample_batch_size: 4

|

| 61 |

+

scale_lr: false

|

| 62 |

+

seed: 42

|

| 63 |

+

snr_gamma: null

|

| 64 |

+

text_encoder_lr: 1.0

|

| 65 |

+

token_abstraction: TOK

|

| 66 |

+

train_batch_size: 2

|

| 67 |

+

train_text_encoder: false

|

| 68 |

+

train_text_encoder_frac: 1.0

|

| 69 |

+

train_text_encoder_ti: true

|

| 70 |

+

train_text_encoder_ti_frac: 0.5

|

| 71 |

+

use_8bit_adam: false

|

| 72 |

+

validation_epochs: 50

|

| 73 |

+

validation_prompt: null

|

| 74 |

+

variant: null

|

| 75 |

+

with_prior_preservation: false

|
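
Several of the hyperparameters above fit together arithmetically. The sketch below shows one way `max_train_steps: 550` could follow from `num_train_epochs: 50`, `repeats: 2`, `train_batch_size: 2`, and `gradient_accumulation_steps: 1`. Two things are assumptions rather than facts from the log: that the training set held 11 images (inferred from the eleven `image-*.png` files in this commit), and that steps are counted with the usual ceil-division bookkeeping. The function name is hypothetical.

```python
import math


def total_optimizer_steps(
    num_images: int,
    repeats: int,
    train_batch_size: int,
    gradient_accumulation_steps: int,
    num_train_epochs: int,
) -> int:
    """Estimate the total number of optimizer updates over a training run."""
    samples_per_epoch = num_images * repeats
    batches_per_epoch = math.ceil(samples_per_epoch / train_batch_size)
    updates_per_epoch = math.ceil(batches_per_epoch / gradient_accumulation_steps)
    return updates_per_epoch * num_train_epochs


steps = total_optimizer_steps(
    num_images=11,  # assumption: inferred from image-0.png .. image-10.png
    repeats=2,
    train_batch_size=2,
    gradient_accumulation_steps=1,
    num_train_epochs=50,
)
print(steps)  # prints: 550
```

With these inputs each epoch has 11 updates, so 50 epochs give 550, which matches the logged `max_train_steps`.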

logs/dreambooth-lora-sd-xl/events.out.tfevents.1730932735.r-lfischbe-autotrain-m3d3quip-e8lv8cd9-f4de4-hnknu.212.0

ADDED

|

@@ -0,0 +1,3 @@

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:af97a52a163fc7643aa721a113306e5054d5940e9af7f212be9e7a7c4dff3a30

|

| 3 |

+

size 46034

|

m3d3quip.safetensors

ADDED

|

@@ -0,0 +1,3 @@

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:12895c4d36a88f898d4f4b293adb9f6ab26d2d2fe90e75944ca303d88b01ef85

|

| 3 |

+

size 93148104

|

m3d3quip_emb.safetensors

ADDED

|

@@ -0,0 +1,3 @@

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:2723c82b12a582734bffe6de0c1f6fae17af7fce95786608052874cab87f9652

|

| 3 |

+

size 16536

|

pytorch_lora_weights.safetensors

ADDED

|

@@ -0,0 +1,3 @@

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:56bbd7fcad6e7c1be12b9e3c61f1367282211284ab5c8ddfbe7e4ecc45d70f27

|

| 3 |

+

size 93065304

|