---

license: cc-by-nc-sa-4.0

---

# OOTDiffusion

[Our OOTDiffusion GitHub repository](https://github.com/levihsu/OOTDiffusion)

🤗 [Try out OOTDiffusion](https://huggingface.co./spaces/levihsu/OOTDiffusion)

(Thanks to [ZeroGPU](https://huggingface.co./zero-gpu-explorers) for providing A100 GPUs)

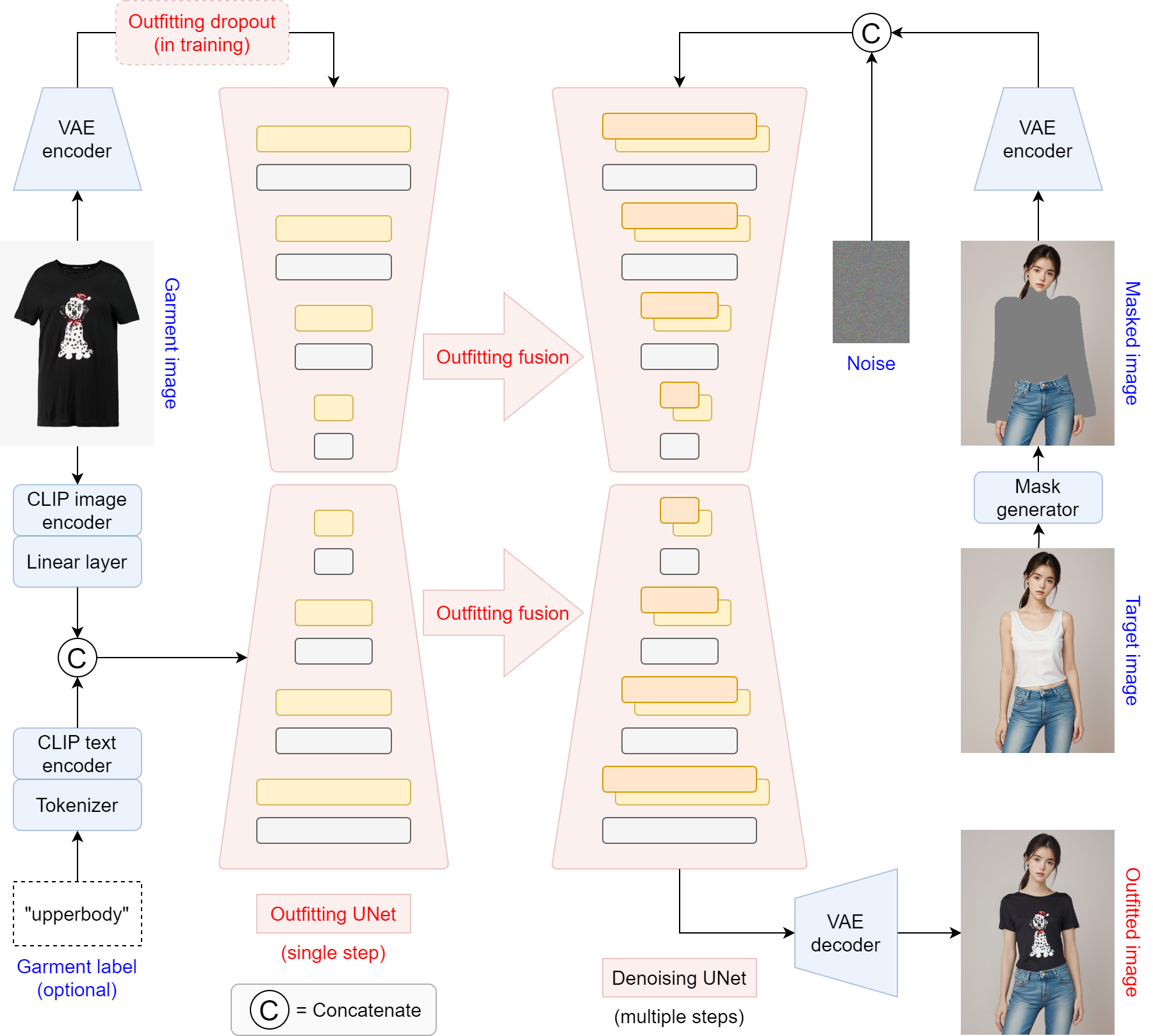

> **OOTDiffusion: Outfitting Fusion based Latent Diffusion for Controllable Virtual Try-on** [[arXiv paper](https://arxiv.org/abs/2403.01779)]

> [Yuhao Xu](http://levihsu.github.io/), [Tao Gu](https://github.com/T-Gu), [Weifeng Chen](https://github.com/ShineChen1024), [Chengcai Chen](https://www.researchgate.net/profile/Chengcai-Chen)

> Xiao-i Research

Our model checkpoints, trained on [VITON-HD](https://github.com/shadow2496/VITON-HD) (half-body) and [Dress Code](https://github.com/aimagelab/dress-code) (full-body), have been released.

* 📢📢 We now support ONNX for [humanparsing](https://github.com/GoGoDuck912/Self-Correction-Human-Parsing). This should address most environment issues : )

* Please also download [clip-vit-large-patch14](https://huggingface.co./openai/clip-vit-large-patch14) into the ***checkpoints*** folder

* We've only tested our code and models on Linux (Ubuntu 22.04)
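
The CLIP download step in the list above can be scripted. The following is a minimal sketch, assuming the `huggingface_hub` Python package is installed and that the model should live in a `clip-vit-large-patch14` subfolder of ***checkpoints***; the subfolder name and the helper functions `clip_target_dir` and `download_clip` are illustrative assumptions, not part of this repository.

```python
from pathlib import Path

# Repository ID taken from the clip-vit-large-patch14 link above.
CLIP_REPO_ID = "openai/clip-vit-large-patch14"


def clip_target_dir(checkpoints_root="checkpoints"):
    """Return the folder the CLIP files are placed in (assumed layout)."""
    return Path(checkpoints_root) / CLIP_REPO_ID.split("/")[-1]


def download_clip(checkpoints_root="checkpoints"):
    """Download a snapshot of the CLIP repository into the checkpoints folder."""
    # Imported lazily so the path helper works without the dependency installed.
    from huggingface_hub import snapshot_download

    target = clip_target_dir(checkpoints_root)
    target.mkdir(parents=True, exist_ok=True)
    snapshot_download(repo_id=CLIP_REPO_ID, local_dir=str(target))
    return target


if __name__ == "__main__":
    print(download_clip())
```

Run the script from the directory that contains the ***checkpoints*** folder, or pass a different root to `download_clip` if your layout differs.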

## Citation

```
@article{xu2024ootdiffusion,
  title={OOTDiffusion: Outfitting Fusion based Latent Diffusion for Controllable Virtual Try-on},
  author={Xu, Yuhao and Gu, Tao and Chen, Weifeng and Chen, Chengcai},
  journal={arXiv preprint arXiv:2403.01779},
  year={2024}
}
```