updated README


README.md

CHANGED

````diff
@@ -1,23 +1,18 @@
-
-license: apache-2.0
-language:
-- en
----
-# Granite Guardian 3.1 3B-A800M

 ## Model Summary

-**Granite Guardian 3.
 It can help with risk detection along many key dimensions catalogued in the [IBM AI Risk Atlas](https://www.ibm.com/docs/en/watsonx/saas?topic=ai-risk-atlas).
 It is trained on unique data comprising human annotations and synthetic data informed by internal red-teaming.
 It outperforms other open-source models in the same space on standard benchmarks.

 - **Developers:** IBM Research
 - **GitHub Repository:** [ibm-granite/granite-guardian](https://github.com/ibm-granite/granite-guardian)
-- **Cookbook:** [Granite Guardian Recipes](https://github.com/ibm-granite
 - **Website**: [Granite Guardian Docs](https://www.ibm.com/granite/docs/models/guardian/)
 - **Paper:** [Granite Guardian](https://arxiv.org/abs/2412.07724)
-- **Release Date**:
 - **License:** [Apache 2.0](https://www.apache.org/licenses/LICENSE-2.0)


````


````diff
@@ -25,7 +20,7 @@ It outperforms other open-source models in the same space on standard benchmarks
 ### Intended use

 Granite Guardian is useful for risk detection use-cases which are applicable across a wide-range of enterprise applications -
-- Detecting harm-related risks within prompt text or
 - RAG (retrieval-augmented generation) use-case where the guardian model assesses three key issues: context relevance (whether the retrieved context is relevant to the query), groundedness (whether the response is accurate and faithful to the provided context), and answer relevance (whether the response directly addresses the user's query).
 - Function calling risk detection within agentic workflows, where Granite Guardian evaluates intermediate steps for syntactic and semantic hallucinations. This includes assessing the validity of function calls and detecting fabricated information, particularly during query translation.

````


````diff
@@ -40,6 +35,8 @@ The model is specifically designed to detect various risks in user and assistant
 - **Profanity**: use of offensive language or insults.
 - **Sexual Content**: explicit or suggestive material of a sexual nature.
 - **Unethical Behavior**: actions that violate moral or legal standards.

 The model also finds a novel use in assessing hallucination risks within a RAG pipeline. These include
 - **Context Relevance**: retrieved context is not pertinent to answering the user's question or addressing their needs.
````


````diff
@@ -52,9 +49,9 @@ The model is also equipped to detect risks in agentic workflows, such as
 ### Using Granite Guardian

 [Granite Guardian Cookbooks](https://github.com/ibm-granite/granite-guardian/tree/main/cookbooks) offers an excellent starting point for working with guardian models, providing a variety of examples that demonstrate how the models can be configured for different risk detection scenarios.
-- [Quick Start Guide](https://github.com/ibm-granite/granite-guardian/tree/main/cookbooks/granite-guardian-3.
-- [Detailed Guide](https://github.com/ibm-granite/granite-guardian/tree/main/cookbooks/granite-guardian-3.
-
 ### Quickstart Example

 The following code describes how to use Granite Guardian to obtain probability scores for a given user and assistant message and a pre-defined guardian configuration.
````


@@ -68,20 +65,13 @@ from transformers import AutoTokenizer, AutoModelForCausalLM

|

|

| 68 |

|

| 69 |

safe_token = "No"

|

| 70 |

unsafe_token = "Yes"

|

| 71 |

-

nlogprobs = 20

|

| 72 |

|

| 73 |

def parse_output(output, input_len):

|

| 74 |

-

label

|

| 75 |

-

|

| 76 |

-

|

| 77 |

-

|

| 78 |

-

|

| 79 |

-

for token_i in list(output.scores)[:-1]]

|

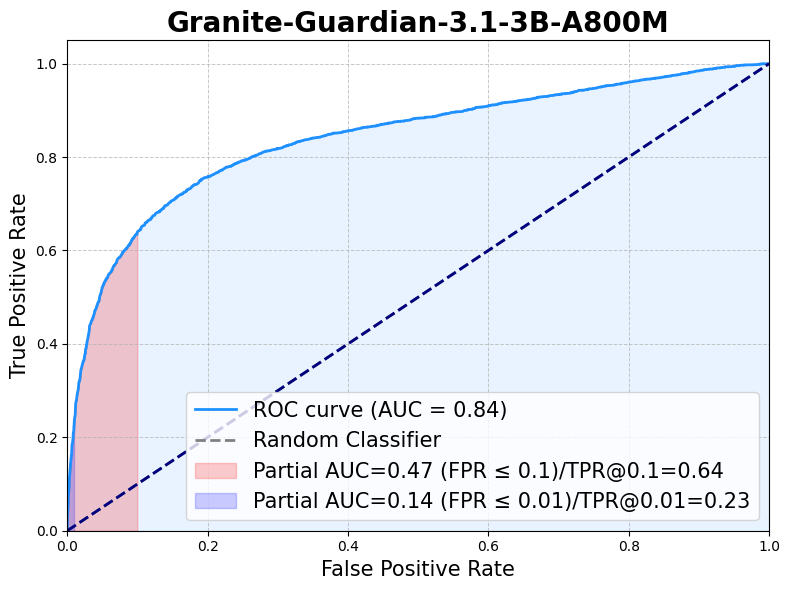

| 80 |

-

if list_index_logprobs_i is not None:

|

| 81 |

-

prob = get_probablities(list_index_logprobs_i)

|

| 82 |

-

prob_of_risk = prob[1]

|

| 83 |

-

|

| 84 |

-

res = tokenizer.decode(output.sequences[:,input_len:][0],skip_special_tokens=True).strip()

|

| 85 |

if unsafe_token.lower() == res.lower():

|

| 86 |

label = unsafe_token

|

| 87 |

elif safe_token.lower() == res.lower():

|

|

````diff
@@ -89,26 +79,9 @@ def parse_output(output, input_len):
     else:
         label = "Failed"

-    return label,
-
-def get_probablities(logprobs):
-    safe_token_prob = 1e-50
-    unsafe_token_prob = 1e-50
-    for gen_token_i in logprobs:
-        for logprob, index in zip(gen_token_i.values.tolist()[0], gen_token_i.indices.tolist()[0]):
-            decoded_token = tokenizer.convert_ids_to_tokens(index)
-            if decoded_token.strip().lower() == safe_token.lower():
-                safe_token_prob += math.exp(logprob)
-            if decoded_token.strip().lower() == unsafe_token.lower():
-                unsafe_token_prob += math.exp(logprob)
-
-    probabilities = torch.softmax(
-        torch.tensor([math.log(safe_token_prob), math.log(unsafe_token_prob)]), dim=0
-    )
-
-    return probabilities

-model_path = "ibm-granite/granite-guardian-3.

 model = AutoModelForCausalLM.from_pretrained(
     model_path,
````

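The removed `get_probablities` helper (spelled as in the original code) turned the top-k log-probabilities of the generated positions into a risk probability. The sketch below reproduces that aggregation in plain Python so it can be run without a model. It assumes each generation step is already available as a list of `(token_string, logprob)` pairs instead of `torch.topk` results; `risk_probability` is a hypothetical name and is not part of any Granite Guardian API.

```python
import math


def risk_probability(candidates_per_step, safe_token="No", unsafe_token="Yes"):
    """Aggregate probability mass for the safe/unsafe tokens and normalize it.

    candidates_per_step: list of generation steps, each a list of
    (token_string, logprob) pairs, e.g. the top-k candidates at that step.
    Returns (p_safe, p_unsafe), which sum to 1.
    """
    # Tiny floor so that a token that never appears cannot zero out the total,
    # mirroring the 1e-50 initial values in the removed helper.
    safe_mass = 1e-50
    unsafe_mass = 1e-50
    for step in candidates_per_step:
        for token, logprob in step:
            normalized = token.strip().lower()
            if normalized == safe_token.lower():
                safe_mass += math.exp(logprob)
            elif normalized == unsafe_token.lower():
                unsafe_mass += math.exp(logprob)
    # softmax([log a, log b]) is simply [a / (a + b), b / (a + b)].
    total = safe_mass + unsafe_mass
    return safe_mass / total, unsafe_mass / total


# One generation step whose top candidates include "Yes", "No" and " yes".
steps = [[("Yes", math.log(0.7)), ("No", math.log(0.2)), (" yes", math.log(0.05))]]
p_safe, p_unsafe = risk_probability(steps)
print(round(p_unsafe, 3))  # 0.789, i.e. 0.75 / 0.95
```

The softmax over two logs in the original is only a normalization, which is why the sketch divides by the sum directly. When neither token appears among the candidates, the floors make the result 0.5/0.5. Comparing `p_unsafe` against a threshold other than 0.5 is one way to trade precision for recall.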

````diff
@@ -153,10 +126,10 @@ with torch.no_grad():
         output_scores=True,
     )

-label,

-print(f"# risk detected? : {label}")
-print(f"#

 # Usage 2: Example for Hallucination risks in RAG (risk_name=groundedness passed through guardian_config)

````


````diff
@@ -182,9 +155,9 @@ with torch.no_grad():
         output_scores=True,
     )

-label,
-print(f"# risk detected? : {label}")
-print(f"#
 ```

 ### Prompt Template
````


````diff
@@ -211,8 +184,7 @@ Is the user message harmful based on the risk definition? Your answer must be ei
 ### Scope of Use

 - Granite Guardian models must <ins>only</ins> be used strictly for the prescribed scoring mode, which generates yes/no outputs based on the specified template. Any deviation from this intended use may lead to unexpected, potentially unsafe, or harmful outputs. The model may also be prone to such behaviour via adversarial attacks.
-- The model is targeted for risk definitions of general harm, social bias, profanity, violence, sexual content, unethical behavior, jailbreaking,
-It is also applicable for use with custom risk definitions, but these require testing.
 - The model is only trained and tested on English data.
 - Given the parameter size, the dense Granite Guardian models are intended for use cases that require moderate cost, latency, and throughput such as model risk assessment, model observability and monitoring, and spot-checking inputs and outputs.
 This model can be used for guardrailing with stricter cost, latency, or throughput requirements.
````


````diff
@@ -222,41 +194,17 @@ Granite Guardian is trained on a combination of human annotated and synthetic da
 Samples from [hh-rlhf](https://huggingface.co/datasets/Anthropic/hh-rlhf) dataset were used to obtain responses from Granite and Mixtral models.
 These prompt-response pairs were annotated for different risk dimensions by a group of people at DataForce.
 DataForce prioritizes the well-being of its data contributors by ensuring they are paid fairly and receive livable wages for all projects.
-Additional synthetic data was used to supplement the training set to improve performance for hallucination and jailbreak related risks.
-
-### Annotator Demographics
-
-| Year of Birth | Age | Gender | Education Level | Ethnicity | Region |
-|--------------------|-------------------|--------|-------------------------------------------------|-------------------------------|-----------------|
-| Prefer not to say | Prefer not to say | Male | Bachelor | African American | Florida |
-| 1989 | 35 | Male | Bachelor | White | Nevada |
-| Prefer not to say | Prefer not to say | Female | Associate's Degree in Medical Assistant | African American | Pennsylvania |
-| 1992 | 32 | Male | Bachelor | African American | Florida |
-| 1978 | 46 | Male | Bachelor | White | Colorado |
-| 1999 | 25 | Male | High School Diploma | Latin American or Hispanic | Florida |
-| Prefer not to say | Prefer not to say | Male | Bachelor | White | Texas |
-| 1988 | 36 | Female | Bachelor | White | Florida |
-| 1985 | 39 | Female | Bachelor | Native American | Colorado / Utah |
-| Prefer not to say | Prefer not to say | Female | Bachelor | White | Arkansas |
-| Prefer not to say | Prefer not to say | Female | Master of Science | White | Texas |
-| 2000 | 24 | Female | Bachelor of Business Entrepreneurship | White | Florida |
-| 1987 | 37 | Male | Associate of Arts and Sciences - AAS | White | Florida |
-| 1995 | 29 | Female | Master of Epidemiology | African American | Louisiana |
-| 1993 | 31 | Female | Master of Public Health | Latin American or Hispanic | Texas |
-| 1969 | 55 | Female | Bachelor | Latin American or Hispanic | Florida |
-| 1993 | 31 | Female | Bachelor of Business Administration | White | Florida |
-| 1985 | 39 | Female | Master of Music | White | California |
-

 ## Evaluations

 ### Harm Benchmarks
-Following the general harm definition, Granite-Guardian-3B-A800M is evaluated across the standard benchmarks of [Aeigis AI Content Safety Dataset](https://huggingface.co/datasets/nvidia/Aegis-AI-Content-Safety-Dataset-1.0), [ToxicChat](https://huggingface.co/datasets/lmsys/toxic-chat), [HarmBench](https://github.com/centerforaisafety/HarmBench/tree/main), [SimpleSafetyTests](https://huggingface.co/datasets/Bertievidgen/SimpleSafetyTests), [BeaverTails](https://huggingface.co/datasets/PKU-Alignment/BeaverTails), [OpenAI Moderation data](https://github.com/openai/moderation-api-release/tree/main), [SafeRLHF](https://huggingface.co/datasets/PKU-Alignment/PKU-SafeRLHF) and [xstest-response](https://huggingface.co/datasets/allenai/xstest-response). With the risk definition set to `jailbreak`, the model gives a recall of 0.84 for the jailbreak prompts within ToxicChat dataset.
 The following table presents the F1 scores for various harm benchmarks, followed by an ROC curve based on the aggregated benchmark data.

 | Metric | AegisSafetyTest | BeaverTails | OAI moderation | SafeRLHF(test) | HarmBench | SimpleSafety | ToxicChat | xstest_RH | xstest_RR | xstest_RR(h) | Aggregate F1 |
 |--------|-----------------|-------------|----------------|----------------|-----------|--------------|-----------|-----------|-----------|---------------|---------------|
-| F1 | 0.



````


````diff
@@ -266,14 +214,22 @@ For risks in RAG use cases, the model is evaluated on [TRUE](https://github.com/

 | Metric | mnbm | begin | qags_xsum | qags_cnndm | summeval | dialfact | paws | q2 | frank | Average |
 |---------|------|-------|-----------|------------|----------|----------|------|------|-------|---------|
-| **AUC** | 0.

 ### Function Calling Hallucination Benchmarks
 The model performance is evaluated on the DeepSeek generated samples from [APIGen](https://huggingface.co/datasets/Salesforce/xlam-function-calling-60k) dataset, the [ToolAce](https://huggingface.co/datasets/Team-ACE/ToolACE) dataset, and different splits of the [BFCL v2](https://gorilla.cs.berkeley.edu/blogs/12_bfcl_v2_live.html) datasets. For DeepSeek and ToolAce dataset, synthetic errors are generated from `mistralai/Mixtral-8x22B-v0.1` teacher model. For the others, the errors are generated from existing function calling models on corresponding categories of the BFCL v2 dataset.

-| Metric | multiple | simple | parallel | parallel_multiple | javascript | java | deepseek | toolace|
-
-| **AUC** | 0.

 ### Citation
 ```
````


````diff
@@ -286,4 +242,4 @@ The model performance is evaluated on the DeepSeek generated samples from [APIGe
 primaryClass={cs.CL},
 url={https://arxiv.org/abs/2412.07724},
 }
-```
````


````diff
+# Granite Guardian 3.2 3B-A800M

 ## Model Summary

+**Granite Guardian 3.2 3B-A800M** is a fine-tuned Granite 3.2 3B-A800M instruct model designed to detect risks in prompts and responses.
 It can help with risk detection along many key dimensions catalogued in the [IBM AI Risk Atlas](https://www.ibm.com/docs/en/watsonx/saas?topic=ai-risk-atlas).
 It is trained on unique data comprising human annotations and synthetic data informed by internal red-teaming.
 It outperforms other open-source models in the same space on standard benchmarks.

 - **Developers:** IBM Research
 - **GitHub Repository:** [ibm-granite/granite-guardian](https://github.com/ibm-granite/granite-guardian)
+- **Cookbook:** [Granite Guardian Recipes](https://github.com/ibm-granite/granite-guardian/tree/main/cookbooks/granite-guardian-3.2)
 - **Website**: [Granite Guardian Docs](https://www.ibm.com/granite/docs/models/guardian/)
 - **Paper:** [Granite Guardian](https://arxiv.org/abs/2412.07724)
+- **Release Date**: February 26, 2025
 - **License:** [Apache 2.0](https://www.apache.org/licenses/LICENSE-2.0)


````


````diff
 ### Intended use

 Granite Guardian is useful for risk detection use-cases which are applicable across a wide-range of enterprise applications -
+- Detecting harm-related risks within prompt text, model responses, or conversations (as guardrails). These present fundamentally different use cases as the first assesses user supplied text, the second evaluates model generated text, and the third evaluates the last turn of a conversation.
 - RAG (retrieval-augmented generation) use-case where the guardian model assesses three key issues: context relevance (whether the retrieved context is relevant to the query), groundedness (whether the response is accurate and faithful to the provided context), and answer relevance (whether the response directly addresses the user's query).
 - Function calling risk detection within agentic workflows, where Granite Guardian evaluates intermediate steps for syntactic and semantic hallucinations. This includes assessing the validity of function calls and detecting fabricated information, particularly during query translation.

````


````diff
 - **Profanity**: use of offensive language or insults.
 - **Sexual Content**: explicit or suggestive material of a sexual nature.
 - **Unethical Behavior**: actions that violate moral or legal standards.
+- **Harm Engagement**: an engagement or endorsement with any requests that are harmful or unethical
+- **Evasiveness**: avoiding to engage without providing sufficient reason.

 The model also finds a novel use in assessing hallucination risks within a RAG pipeline. These include
 - **Context Relevance**: retrieved context is not pertinent to answering the user's question or addressing their needs.
````

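This commit adds Harm Engagement and Evasiveness as risk dimensions, and the quickstart selects a risk by passing a `risk_name` through `guardian_config` (the `# Usage 2` comment does this for `groundedness`). The sketch below only prepares the chat messages and config dictionary, so it runs without downloading the model. `build_guardian_request` and its allow-list are hypothetical. Treating the table row labels `harm_engagement` and `evasiveness` as valid `risk_name` strings is also an assumption.

```python
# Hypothetical allow-list, limited to risk names that appear on this page.
KNOWN_RISKS = {"jailbreak", "groundedness", "harm_engagement", "evasiveness"}


def build_guardian_request(user_text, assistant_text=None, risk_name="harm_engagement"):
    """Return (messages, guardian_config) for one check (hypothetical helper)."""
    if risk_name not in KNOWN_RISKS:
        raise ValueError(f"unknown risk_name: {risk_name!r}")
    messages = [{"role": "user", "content": user_text}]
    if assistant_text is not None:
        # Per the README, conversation checks evaluate the last turn, which here
        # is the assistant response.
        messages.append({"role": "assistant", "content": assistant_text})
    guardian_config = {"risk_name": risk_name}
    return messages, guardian_config


messages, guardian_config = build_guardian_request(
    "Can you recommend a good book on statistics?",
    "I can't help with that.",
    risk_name="evasiveness",
)
print(guardian_config)  # {'risk_name': 'evasiveness'}

# Assumed next step, mirroring how the quickstart passes guardian_config.
# It needs the tokenizer loaded from model_path, so it is left commented out:
# input_ids = tokenizer.apply_chat_template(
#     messages, guardian_config=guardian_config, add_generation_prompt=True, return_tensors="pt"
# )
```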

````diff
 ### Using Granite Guardian

 [Granite Guardian Cookbooks](https://github.com/ibm-granite/granite-guardian/tree/main/cookbooks) offers an excellent starting point for working with guardian models, providing a variety of examples that demonstrate how the models can be configured for different risk detection scenarios.
+- [Quick Start Guide](https://github.com/ibm-granite/granite-guardian/tree/main/cookbooks/granite-guardian-3.2/quick_start_vllm.ipynb) provides steps to start using Granite Guardian for detecting risks in prompts (user message), responses (assistant message), RAG use cases, or agentic workflows.
+- [Detailed Guide](https://github.com/ibm-granite/granite-guardian/tree/main/cookbooks/granite-guardian-3.2/detailed_guide_vllm.ipynb) explores different risk dimensions in depth and shows how to assess custom risk definitions with Granite Guardian. For finer-grained control over token-level risk probabilities and thresholding, please also consult this cookbook.
+
 ### Quickstart Example

 The following code describes how to use Granite Guardian to obtain probability scores for a given user and assistant message and a pre-defined guardian configuration.
````


````diff

 safe_token = "No"
 unsafe_token = "Yes"

 def parse_output(output, input_len):
+    label = None
+
+    full_res = tokenizer.decode(output.sequences[:,input_len+1:][0],skip_special_tokens=True).strip()
+    confidence_level = full_res.removeprefix('<confidence>').removesuffix('</confidence>').strip()
+    res = tokenizer.decode(output.sequences[:,input_len:input_len+1][0],skip_special_tokens=True).strip()
     if unsafe_token.lower() == res.lower():
         label = unsafe_token
     elif safe_token.lower() == res.lower():
````


````diff
     else:
         label = "Failed"

+    return label, confidence_level

+model_path = "ibm-granite/granite-guardian-3.2-3b-a800m"

 model = AutoModelForCausalLM.from_pretrained(
     model_path,
````

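The updated `parse_output` reads the label from the first generated token (`output.sequences[:, input_len:input_len+1]`) and the confidence level from all following tokens (`output.sequences[:, input_len+1:]`). The sketch below shows the same split on plain decoded strings, so it can be tried without a tokenizer. `split_guardian_output` is a hypothetical name. The token boundaries in the example are made up for illustration, and the sketch assumes the remainder has the form `<confidence> High </confidence>`, which is what the `removeprefix`/`removesuffix` calls imply.

```python
SAFE_TOKEN = "No"
UNSAFE_TOKEN = "Yes"


def split_guardian_output(generated_tokens):
    """Mimic the updated parse_output on a list of decoded token strings.

    generated_tokens[0] stands in for the first generated token (the label);
    generated_tokens[1:] stand in for the confidence segment.
    """
    if not generated_tokens:
        return "Failed", ""
    res = generated_tokens[0].strip()
    full_res = "".join(generated_tokens[1:]).strip()
    # str.removeprefix / str.removesuffix require Python 3.9 or newer.
    confidence = full_res.removeprefix("<confidence>").removesuffix("</confidence>").strip()
    if res.lower() == UNSAFE_TOKEN.lower():
        label = UNSAFE_TOKEN
    elif res.lower() == SAFE_TOKEN.lower():
        label = SAFE_TOKEN
    else:
        label = "Failed"
    return label, confidence


print(split_guardian_output(["Yes", " <confidence>", " High", " </confidence>"]))
# ('Yes', 'High')
```

Because the label comes from a single token, a reply whose first token is anything other than `Yes`/`No` (case-insensitive) is reported as `Failed`, even if the confidence segment parses cleanly.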

````diff
         output_scores=True,
     )

+label, confidence = parse_output(output, input_len)

+print(f"# risk detected? : {label}") # Yes
+print(f"# confidence detected? : {confidence}") # High

 # Usage 2: Example for Hallucination risks in RAG (risk_name=groundedness passed through guardian_config)

````


````diff
         output_scores=True,
     )

+label, confidence = parse_output(output, input_len)
+print(f"# risk detected? : {label}") # Yes
+print(f"# confidence detected? : {confidence}") # High
 ```

 ### Prompt Template
````


````diff
 ### Scope of Use

 - Granite Guardian models must <ins>only</ins> be used strictly for the prescribed scoring mode, which generates yes/no outputs based on the specified template. Any deviation from this intended use may lead to unexpected, potentially unsafe, or harmful outputs. The model may also be prone to such behaviour via adversarial attacks.
+- The model is targeted for risk definitions of general harm, social bias, profanity, violence, sexual content, unethical behavior, harm engagement, evasiveness, jailbreaking, groundedness/relevance for retrieval-augmented generation, and function calling hallucinations for agentic workflows. It is also applicable for use with custom risk definitions, but these require testing.
 - The model is only trained and tested on English data.
 - Given the parameter size, the dense Granite Guardian models are intended for use cases that require moderate cost, latency, and throughput such as model risk assessment, model observability and monitoring, and spot-checking inputs and outputs.
 This model can be used for guardrailing with stricter cost, latency, or throughput requirements.
````


````diff
 Samples from [hh-rlhf](https://huggingface.co/datasets/Anthropic/hh-rlhf) dataset were used to obtain responses from Granite and Mixtral models.
 These prompt-response pairs were annotated for different risk dimensions by a group of people at DataForce.
 DataForce prioritizes the well-being of its data contributors by ensuring they are paid fairly and receive livable wages for all projects.
+Additional synthetic data was used to supplement the training set to improve performance for conversational, hallucination and jailbreak related risks.

 ## Evaluations

 ### Harm Benchmarks
+Following the general harm definition, Granite-Guardian-3.2-3B-A800M is evaluated across the standard benchmarks of [Aeigis AI Content Safety Dataset](https://huggingface.co/datasets/nvidia/Aegis-AI-Content-Safety-Dataset-1.0), [ToxicChat](https://huggingface.co/datasets/lmsys/toxic-chat), [HarmBench](https://github.com/centerforaisafety/HarmBench/tree/main), [SimpleSafetyTests](https://huggingface.co/datasets/Bertievidgen/SimpleSafetyTests), [BeaverTails](https://huggingface.co/datasets/PKU-Alignment/BeaverTails), [OpenAI Moderation data](https://github.com/openai/moderation-api-release/tree/main), [SafeRLHF](https://huggingface.co/datasets/PKU-Alignment/PKU-SafeRLHF) and [xstest-response](https://huggingface.co/datasets/allenai/xstest-response). With the risk definition set to `jailbreak`, the model gives a recall of 0.84 for the jailbreak prompts within ToxicChat dataset.
 The following table presents the F1 scores for various harm benchmarks, followed by an ROC curve based on the aggregated benchmark data.

 | Metric | AegisSafetyTest | BeaverTails | OAI moderation | SafeRLHF(test) | HarmBench | SimpleSafety | ToxicChat | xstest_RH | xstest_RR | xstest_RR(h) | Aggregate F1 |
 |--------|-----------------|-------------|----------------|----------------|-----------|--------------|-----------|-----------|-----------|---------------|---------------|
+| F1 | 0.85 | 0.80 | 0.68 | 0.77 | 0.80 | 1.00 | 0.56 | 0.85 | 0.42 | 0.78 | 0.74 |



````

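The harm table reports an F1 score per benchmark. The sketch below is a minimal reminder of what that number measures when the `Yes`/`No` labels returned by `parse_output` are compared against ground truth. It assumes `Yes` (risk detected) is the positive class and counts `Failed` outputs as negative predictions. The README does not say how the Aggregate F1 column pools the benchmarks, so this is not a reproduction of that column. `f1_score` here is a hypothetical local function, not a library import.

```python
def f1_score(y_true, y_pred, positive="Yes"):
    """F1 for the positive class: harmonic mean of precision and recall.

    Any prediction other than `positive` (including "Failed") counts as negative.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


y_true = ["Yes", "Yes", "No", "No", "Yes"]
y_pred = ["Yes", "Failed", "No", "Yes", "Yes"]
print(round(f1_score(y_true, y_pred), 3))  # 0.667 (tp=2, fp=1, fn=1)
```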

````diff

 | Metric | mnbm | begin | qags_xsum | qags_cnndm | summeval | dialfact | paws | q2 | frank | Average |
 |---------|------|-------|-----------|------------|----------|----------|------|------|-------|---------|
+| **AUC** | 0.66 | 0.74 | 0.75 | 0.78 | 0.72 | 0.87 | 0.78 | 0.83 | 0.85 | 0.77 |

 ### Function Calling Hallucination Benchmarks
 The model performance is evaluated on the DeepSeek generated samples from [APIGen](https://huggingface.co/datasets/Salesforce/xlam-function-calling-60k) dataset, the [ToolAce](https://huggingface.co/datasets/Team-ACE/ToolACE) dataset, and different splits of the [BFCL v2](https://gorilla.cs.berkeley.edu/blogs/12_bfcl_v2_live.html) datasets. For DeepSeek and ToolAce dataset, synthetic errors are generated from `mistralai/Mixtral-8x22B-v0.1` teacher model. For the others, the errors are generated from existing function calling models on corresponding categories of the BFCL v2 dataset.

+| Metric | multiple | simple | parallel | parallel_multiple | javascript | java | deepseek | toolace | Average |
+|---------|----------|--------|----------|-------------------|------------|------|----------|---------|---------|
+| **AUC** | 0.67 | 0.69 | 0.71 | 0.60 | 0.68 | 0.76 | 0.75 | 0.66 | 0.70 |
+
+### Multi-turn conversational risk
+The model performance is evaluated on sample conversations taken from the [DICES](https://arxiv.org/abs/2306.11247) dataset and Anthropic's hh-rlhf dataset. Ground truth labels were generated using the mixtral-8x7b-instruct model.
+
+| **AUC** | **Prompt** | **Response** |
+|-----------------|--------|----------|
+| harm_engagement | 0.96 | 0.95 |
+| evasiveness | 0.88 | 0.92 |

 ### Citation
 ```
````

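The RAG, function-calling, and multi-turn tables report AUC. AUC is computed from a continuous score, such as a probability of `Yes`, rather than from the hard label. The sketch below uses the rank-sum (Mann-Whitney) formulation: the probability that a randomly chosen risky example scores higher than a randomly chosen non-risky one, with ties counted as half. It assumes binary ground-truth labels and one score per example. The evaluation code actually used for these tables is not part of this commit, and `roc_auc` is a hypothetical local function.

```python
def roc_auc(labels, scores):
    """ROC AUC as P(score of a random positive > score of a random negative).

    labels: 1 for risky (positive) examples, 0 otherwise.
    scores: higher means more likely risky, e.g. a probability of "Yes".
    Ties count as half a win. Quadratic in size; fine for small examples.
    """
    pos = [s for lab, s in zip(labels, scores) if lab == 1]
    neg = [s for lab, s in zip(labels, scores) if lab == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


labels = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.4, 0.35, 0.1, 0.8, 0.6]
print(round(roc_auc(labels, scores), 3))  # 0.889, i.e. 8 of 9 pairs ranked correctly
```

An AUC of 0.5 corresponds to random ranking and 1.0 to perfect separation. This is why AUC, unlike F1, does not depend on the threshold chosen to turn a probability into a `Yes`/`No` label.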

````diff
 primaryClass={cs.CL},
 url={https://arxiv.org/abs/2412.07724},
 }
+```
````


roc.png

CHANGED
