Add files using upload-large-folder tool

This view is limited to 50 files because it contains too many changes.

- .github/ISSUE_TEMPLATE/bug_report.md +38 -0

- .github/ISSUE_TEMPLATE/feature_request.md +20 -0

- .gitmodules +6 -0

- convert_model.py +56 -0

- expman/expman/__init__.py +7 -0

- expman/expman/__main__.py +58 -0

- expman/expman/__pycache__/__init__.cpython-311.pyc +0 -0

- expman/expman/__pycache__/exp_group.cpython-311.pyc +0 -0

- expman/expman/__pycache__/experiment.cpython-311.pyc +0 -0

- expman/expman/exp_group.py +96 -0

- expman/expman/experiment.py +233 -0

- losses.py +18 -0

- matlab/Meye.m +310 -0

- matlab/README.md +57 -0

- matlab/example.m +211 -0

- models/deeplab.py +78 -0

- models/deeplab/README.md +380 -0

- models/deeplab/assets/2007_000346_inference.png +0 -0

- models/deeplab/assets/confusion_matrix.png +0 -0

- models/deeplab/assets/dog_inference.png +0 -0

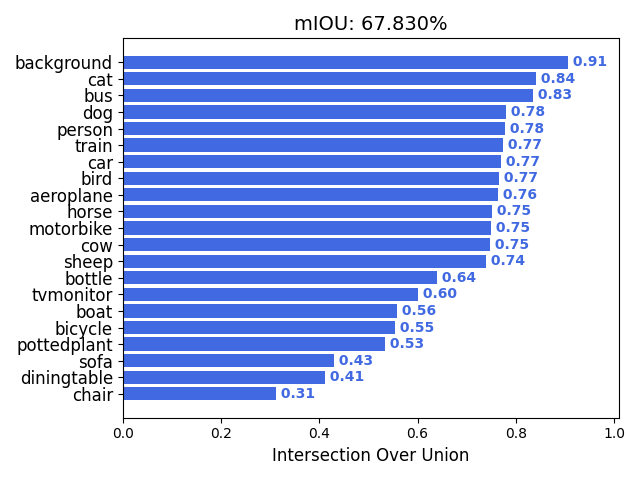

- models/deeplab/assets/mIOU.png +0 -0

- models/deeplab/common/callbacks.py +32 -0

- models/deeplab/common/data_utils.py +523 -0

- models/deeplab/common/model_utils.py +168 -0

- models/deeplab/common/utils.py +343 -0

- models/deeplab/configs/ade20k_classes.txt +150 -0

- models/deeplab/configs/cityscapes_classes.txt +33 -0

- models/deeplab/configs/coco_classes.txt +80 -0

- models/deeplab/configs/voc_classes.txt +20 -0

- models/deeplab/deeplab.py +297 -0

- models/deeplab/deeplabv3p/data.py +161 -0

- models/deeplab/deeplabv3p/loss.py +74 -0

- models/deeplab/deeplabv3p/metrics.py +46 -0

- models/deeplab/deeplabv3p/model.py +96 -0

- models/deeplab/deeplabv3p/models/__pycache__/deeplabv3p_mobilenetv3.cpython-311.pyc +0 -0

- models/deeplab/deeplabv3p/models/__pycache__/layers.cpython-311.pyc +0 -0

- models/deeplab/deeplabv3p/models/deeplabv3p_mobilenetv2.py +349 -0

- models/deeplab/deeplabv3p/models/deeplabv3p_mobilenetv3.py +912 -0

- models/deeplab/deeplabv3p/models/deeplabv3p_peleenet.py +428 -0

- models/deeplab/deeplabv3p/models/deeplabv3p_resnet50.py +408 -0

- models/deeplab/deeplabv3p/models/deeplabv3p_xception.py +239 -0

- models/deeplab/deeplabv3p/models/layers.py +311 -0

- models/deeplab/deeplabv3p/postprocess_np.py +30 -0

- models/deeplab/eval.py +565 -0

- models/deeplab/example/2007_000039.jpg +0 -0

- models/deeplab/example/2007_000039.png +0 -0

- models/deeplab/example/2007_000346.jpg +0 -0

- models/deeplab/example/2007_000346.png +0 -0

- models/deeplab/example/air.jpg +0 -0

- models/deeplab/example/car.jpg +0 -0

.github/ISSUE_TEMPLATE/bug_report.md

ADDED

@@ -0,0 +1,38 @@

```markdown
---
name: Bug report
about: Create a report to help us improve
title: ''
labels: bug
assignees: ''

---

**Describe the bug**
A clear and concise description of what the bug is.

**To Reproduce**
Steps to reproduce the behavior:
1. Go to '...'
2. Click on '....'
3. Scroll down to '....'
4. See error

**Expected behavior**
A clear and concise description of what you expected to happen.

**Screenshots**
If applicable, add screenshots to help explain your problem.

**Desktop (please complete the following information):**
- OS: [e.g. iOS]
- Browser [e.g. chrome, safari]
- Version [e.g. 22]

**Smartphone (please complete the following information):**
- Device: [e.g. iPhone6]
- OS: [e.g. iOS8.1]
- Browser [e.g. stock browser, safari]
- Version [e.g. 22]

**Additional context**
Add any other context about the problem here.
```

.github/ISSUE_TEMPLATE/feature_request.md

ADDED

@@ -0,0 +1,20 @@

```markdown
---
name: Feature request
about: Suggest an idea for this project
title: ''
labels: enhancement
assignees: ''

---

**Is your feature request related to a problem? Please describe.**
A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

**Describe the solution you'd like**
A clear and concise description of what you want to happen.

**Describe alternatives you've considered**
A clear and concise description of any alternative solutions or features you've considered.

**Additional context**
Add any other context or screenshots about the feature request here.
```

.gitmodules

ADDED

@@ -0,0 +1,6 @@

```ini
[submodule "expman"]
	path = expman
	url = https://github.com/fabiocarrara/expman
[submodule "models/deeplab"]
	path = models/deeplab
	url = https://github.com/david8862/tf-keras-deeplabv3p-model-set
```

convert_model.py

ADDED

@@ -0,0 +1,56 @@

```python
import tensorflow as tf
from tensorflow.keras import backend as K
from adabelief_tf import AdaBeliefOptimizer

# Custom metrics, losses and activation referenced by the saved model;
# they must be defined here so that load_model can deserialize it.

def iou_coef(y_true, y_pred):
    # Soft IoU (Jaccard): sums over H, W, C, then mean over the batch
    y_true = tf.cast(y_true, tf.float32)
    y_pred = tf.cast(y_pred, tf.float32)
    intersection = K.sum(K.abs(y_true * y_pred), axis=[1, 2, 3])
    union = K.sum(y_true, axis=[1, 2, 3]) + K.sum(y_pred, axis=[1, 2, 3]) - intersection
    return K.mean((intersection + 1e-6) / (union + 1e-6))

def dice_coef(y_true, y_pred):
    # Soft Dice coefficient, averaged over the batch
    y_true = tf.cast(y_true, tf.float32)
    y_pred = tf.cast(y_pred, tf.float32)
    intersection = K.sum(K.abs(y_true * y_pred), axis=[1, 2, 3])
    return K.mean((2. * intersection + 1e-6) / (K.sum(y_true, axis=[1, 2, 3]) + K.sum(y_pred, axis=[1, 2, 3]) + 1e-6))

def boundary_loss(y_true, y_pred):
    # Penalize mismatches between the spatial gradients (edges) of target and prediction
    y_true = tf.cast(y_true, tf.float32)
    y_pred = tf.cast(y_pred, tf.float32)
    dy_true, dx_true = tf.image.image_gradients(y_true)
    dy_pred, dx_pred = tf.image.image_gradients(y_pred)
    loss = tf.reduce_mean(tf.abs(dy_pred - dy_true) + tf.abs(dx_pred - dx_true))
    return loss * 0.5

def enhanced_binary_crossentropy(y_true, y_pred):
    # Binary cross-entropy plus the weighted boundary term
    y_true = tf.cast(y_true, tf.float32)
    y_pred = tf.cast(y_pred, tf.float32)
    bce = tf.keras.losses.binary_crossentropy(y_true, y_pred)
    boundary = boundary_loss(y_true, y_pred)
    return bce + boundary

def hard_swish(x):
    # Hard-swish activation: x * ReLU6(x + 3) / 6
    return x * tf.nn.relu6(x + 3) * (1. / 6.)

# Path to your current .keras model
keras_path = 'runs/b32_c-conv_d-|root|meye|data|NN_human_mouse_eyes|_g1.5_l0.001_num_c1_num_f16_num_s5_r128_se23_sp-random_up-relu_us0/best_model.keras'

# Load the model with custom objects
custom_objects = {
    'AdaBeliefOptimizer': AdaBeliefOptimizer,
    'iou_coef': iou_coef,
    'dice_coef': dice_coef,
    'hard_swish': hard_swish,
    'enhanced_binary_crossentropy': enhanced_binary_crossentropy,
    'boundary_loss': boundary_loss
}

print("Loading model from:", keras_path)
model = tf.keras.models.load_model(keras_path, custom_objects=custom_objects)

# Save as .h5 (same path, extension swapped)
h5_path = keras_path.replace('.keras', '.h5')
print("Saving model to:", h5_path)
model.save(h5_path, save_format='h5')
print("Conversion complete!")
```
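The following re-expresses the `iou_coef` and `dice_coef` formulas from `convert_model.py` in NumPy, so their values can be checked outside TensorFlow. It is a sketch that assumes NHWC masks (batch, height, width, channels), which is what the `axis=[1, 2, 3]` reductions imply. The `_np` function names are hypothetical.

```python
import numpy as np

EPS = 1e-6        # smoothing constant used by iou_coef / dice_coef in convert_model.py
AXES = (1, 2, 3)  # reduce over height, width, channels (assumed NHWC layout)


def iou_coef_np(y_true, y_pred):
    """Soft IoU per sample, averaged over the batch (NumPy mirror of iou_coef)."""
    y_true = np.asarray(y_true, dtype=np.float32)
    y_pred = np.asarray(y_pred, dtype=np.float32)
    intersection = np.sum(np.abs(y_true * y_pred), axis=AXES)
    union = np.sum(y_true, axis=AXES) + np.sum(y_pred, axis=AXES) - intersection
    return float(np.mean((intersection + EPS) / (union + EPS)))


def dice_coef_np(y_true, y_pred):
    """Soft Dice per sample, averaged over the batch (NumPy mirror of dice_coef)."""
    y_true = np.asarray(y_true, dtype=np.float32)
    y_pred = np.asarray(y_pred, dtype=np.float32)
    intersection = np.sum(np.abs(y_true * y_pred), axis=AXES)
    total = np.sum(y_true, axis=AXES) + np.sum(y_pred, axis=AXES)
    return float(np.mean((2.0 * intersection + EPS) / (total + EPS)))


# One 2x2 single-channel sample, shape (1, 2, 2, 1):
# the target covers 2 pixels and the prediction covers 1 of them.
y_true = np.array([[1, 1], [0, 0]]).reshape(1, 2, 2, 1)
y_pred = np.array([[1, 0], [0, 0]]).reshape(1, 2, 2, 1)
print(iou_coef_np(y_true, y_pred))   # approximately 0.5   (1 / 2)
print(dice_coef_np(y_true, y_pred))  # approximately 0.667 (2 / 3)
```

The `1e-6` terms keep both metrics defined when the target and the prediction are both empty. In that case each metric evaluates to 1.0 instead of dividing by zero.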

expman/expman/__init__.py

ADDED

@@ -0,0 +1,7 @@

```python
from .experiment import Experiment, exp_filter, use_hash_naming
from .exp_group import ExpGroup

abbreviate = Experiment.abbreviate
from_dir = Experiment.from_dir
gather = ExpGroup.gather
is_exp_dir = Experiment.is_exp_dir
```

expman/expman/__main__.py

ADDED

@@ -0,0 +1,58 @@

```python
import argparse

from .exp_group import ExpGroup


def add_param(args):
    exps = ExpGroup.gather(args.run)
    for exp in exps:
        exp.add_parameter(args.param, args.value)


def mv_param(args):
    exps = ExpGroup.gather(args.run)
    for exp in exps:
        exp.rename_parameter(args.param, args.new_param)


def rm_param(args):
    exps = ExpGroup.gather(args.run)
    for exp in exps:
        exp.remove_parameter(args.param)


def command_line():
    def guess(value):
        """ try to guess a python type for the passed string parameter """
        try:
            result = eval(value)
        # SyntaxError covers strings such as '1d-conv' that are not valid expressions
        except (NameError, ValueError, SyntaxError):
            result = value
        return result

    parser = argparse.ArgumentParser(description='Experiment Manager Utilities')
    subparsers = parser.add_subparsers(dest='command')
    subparsers.required = True

    parser_add = subparsers.add_parser('add-param')
    parser_add.add_argument('run', default='runs/')
    parser_add.add_argument('param', help='new param name')
    parser_add.add_argument('value', type=guess, help='new param value')
    parser_add.set_defaults(func=add_param)

    parser_rm = subparsers.add_parser('rm-param')
    parser_rm.add_argument('run', default='runs/')
    parser_rm.add_argument('param', help='param to remove')
    parser_rm.set_defaults(func=rm_param)

    parser_mv = subparsers.add_parser('mv-param')
    parser_mv.add_argument('run', default='runs/')
    parser_mv.add_argument('param', help='param to rename')
    parser_mv.add_argument('new_param', help='new param name')
    parser_mv.set_defaults(func=mv_param)

    args = parser.parse_args()
    args.func(args)


command_line()
```
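`guess` hands the raw command-line string to `eval`, so any Python expression typed on the command line is executed. `experiment.py` already uses `ast.literal_eval` in `_guessed_cast`. The sketch below applies that approach to CLI values: it accepts only literals and otherwise falls back to the plain string. The name `guess_literal` is hypothetical, and this is a suggested alternative, not what expman currently does.

```python
import ast


def guess_literal(value):
    """Parse a CLI string as a Python literal, falling back to the raw string.

    Hypothetical alternative to expman's eval-based guess(): only literals
    (numbers, strings, tuples, lists, dicts, sets, booleans, None) are accepted.
    """
    try:
        return ast.literal_eval(value)
    except (ValueError, SyntaxError, TypeError):
        # e.g. bare words like 'relu' (ValueError) or '1d-conv' (SyntaxError)
        return value


for raw in ['64', '1e-3', 'True', '[1, 2]', 'relu', '1d-conv']:
    print(repr(raw), '->', repr(guess_literal(raw)))
```

With this fallback, a value such as `1d-conv` is stored as the string `'1d-conv'`, and nothing other than a literal is ever evaluated.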

expman/expman/__pycache__/__init__.cpython-311.pyc

ADDED

Binary file (448 Bytes).

expman/expman/__pycache__/exp_group.cpython-311.pyc

ADDED

Binary file (6.82 kB).

expman/expman/__pycache__/experiment.cpython-311.pyc

ADDED

Binary file (16.9 kB).

expman/expman/exp_group.py

ADDED

@@ -0,0 +1,96 @@

```python
import os
import pandas as pd

from glob import glob
from .experiment import Experiment


class ExpGroup:
    @classmethod
    def gather(cls, root='runs/'):
        if Experiment.is_exp_dir(root):
            exps = (root,)
        else:
            exps = glob(os.path.join(root, '*'))
            exps = filter(Experiment.is_exp_dir, exps)

        exps = map(Experiment.from_dir, exps)
        exps = filter(lambda x: x.existing, exps)
        exps = tuple(exps)
        return cls(exps)

    def __init__(self, experiments=()):
        assert isinstance(experiments, (list, tuple)), "'experiments' must be a list or tuple"
        self.experiments = experiments

    @staticmethod
    def _collect_one(exp_id, exp, csv=None, index_col=None):
        params = exp.params.to_frame().transpose().infer_objects()  # as DataFrame
        params['exp_id'] = exp_id

        if csv is None:
            return params

        csv_path = exp.path_to(csv)
        if os.path.exists(csv_path):
            stuff = pd.read_csv(csv_path, index_col=index_col)
        else:  # try globbing
            csv_files = os.path.join(exp.path, csv)
            csv_files = list(glob(csv_files))
            if len(csv_files) == 0:
                return pd.DataFrame()

            stuff = map(lambda x: pd.read_csv(x, index_col=index_col, float_precision='round_trip'), csv_files)
            stuff = pd.concat(stuff, ignore_index=True)

        stuff['exp_id'] = exp_id
        return pd.merge(params, stuff, on='exp_id')

    def collect(self, csv=None, index_col=None, prefix=''):
        results = [self._collect_one(exp_id, exp, csv=csv, index_col=index_col) for exp_id, exp in enumerate(self.experiments)]
        results = pd.concat(results, ignore_index=True, sort=False)

        if len(results):
            # build minimal exp_name
            exp_name = ''
            params = results.loc[:, :'exp_id'].drop('exp_id', axis=1)
            if len(params) > 1:
                varying_params = params.loc[:, params.nunique() > 1]
                exp_name = varying_params.apply(Experiment.abbreviate, axis=1)
            idx = results.columns.get_loc('exp_id') + 1
            results.insert(idx, 'exp_name', prefix + exp_name)

        return results

    def filter(self, filters):
        if isinstance(filters, str):
            # parse 'param1=value1,param2=value2' into a dict
            filters = filters.split(',')
            filters = map(lambda x: x.split('='), filters)
            filters = {k: v for k, v in filters}

        def __filter_exp(e):
            for param, value in filters.items():
                try:
                    p = e.params[param]
                    ptype = type(p)
                    if p != ptype(value):
                        return False
                except:
                    return False

            return True

        filtered_exps = filter(__filter_exp, self.experiments)
        filtered_exps = tuple(filtered_exps)
        return ExpGroup(filtered_exps)

    def items(self, short_names=True, prefix=''):
        if short_names:
            params = self.collect(prefix=prefix)
            exp_names = params['exp_name'].values
            return zip(exp_names, self.experiments)

        return self.experiments

    def __iter__(self):
        return iter(self.experiments)
```
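When a group contains more than one run, `collect` names each run only by the parameters whose values differ across the group (`params.nunique() > 1`) and then abbreviates those. The sketch below reproduces that selection step in plain Python. Hypothetical run dictionaries stand in for each experiment's `params`, and their values are assumed hashable; expman converts lists to tuples for this reason.

```python
def varying_params(runs):
    """Return the sorted parameter names whose values differ across runs.

    Mirrors the `params.nunique() > 1` selection in ExpGroup.collect using
    plain dicts. A run that lacks a parameter is skipped for that parameter,
    like pandas' nunique() skips NaN by default.
    """
    keys = sorted({k for run in runs for k in run})
    varying = []
    for k in keys:
        values = {run[k] for run in runs if k in run}
        if len(values) > 1:
            varying.append(k)
    return varying


runs = [
    {'batch_size': 32, 'lr': 0.001, 'seed': 23},
    {'batch_size': 32, 'lr': 0.01, 'seed': 23},
    {'batch_size': 32, 'lr': 0.001, 'seed': 42},
]
print(varying_params(runs))  # ['lr', 'seed']
```

Restricting names to the varying parameters keeps labels short when most hyperparameters are shared across a sweep.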

expman/expman/experiment.py

ADDED

@@ -0,0 +1,233 @@

```python
import argparse
import ast
import os
import hashlib
import shutil
import numbers
from glob import glob
from io import StringIO

import numpy as np
import pandas as pd


hash_naming = False

def use_hash_naming(use_hashes=True):
    global hash_naming
    assert isinstance(use_hashes, bool), "Value must be a boolean."
    hash_naming = use_hashes

def _guessed_cast(x):
    try:
        return ast.literal_eval(x)
    except:
        return x

def exp_filter(string):
    if '=' not in string:
        raise argparse.ArgumentTypeError(
            'Filter {} is not in format <param1>=<value1>[, <param2>=<value2>[, ...]]'.format(string))
    filters = string.split(',')
    filters = map(lambda x: x.split('='), filters)
    filters = {k: _guessed_cast(v) for k, v in filters}
    return filters


class Experiment:

    PARAM_FILENAME = 'params.json'

    @staticmethod
    def _abbr(name, value, params):

        def prefix_len(a, b):
            return len(os.path.commonprefix((a, b)))

        prefix = [name[:prefix_len(p, name) + 1] for p in params.keys() if p != name]
        prefix = max(prefix, key=len) if len(prefix) > 0 else name

        sep = ''
        if isinstance(value, str):
            sep = '-'
        elif isinstance(value, numbers.Number):
            value = '{:g}'.format(value)
            sep = '-' if prefix[-1].isdigit() else ''
        elif isinstance(value, (list, tuple)):
            value = map(str, value)
            value = map(lambda v: v.replace(os.sep, '|'), value)
            value = ','.join(list(value))
            sep = '-'

        return prefix, sep, value

    @classmethod
    def abbreviate(cls, params):
        if isinstance(params, pd.DataFrame):
            params = params.iloc[0]
        params = params.replace({np.nan: None})

        if hash_naming:
            exp_name = hashlib.md5(str(sorted(params.items())).encode()).hexdigest()
        else:
            abbrev_params = {k: '{}{}{}'.format(*cls._abbr(k, v, params)) for k, v in params.items()}
            abbrev = sorted(abbrev_params.values())
            exp_name = '_'.join(abbrev)

        return exp_name

    @classmethod
    def from_dir(cls, exp_dir):
        root = os.path.dirname(exp_dir.rstrip('/'))
        params = os.path.join(exp_dir, cls.PARAM_FILENAME)

        assert os.path.exists(exp_dir), "Experiment directory not found: '{}'".format(exp_dir)
        assert os.path.exists(params), "Empty run directory found: '{}'".format(params)

        params = cls._read_params(params)
        exp = cls(params, root=root, create=False)
        return exp

    @classmethod
    def is_exp_dir(cls, exp_dir):
        if os.path.isdir(exp_dir):
            params = os.path.join(exp_dir, cls.PARAM_FILENAME)
            if os.path.exists(params):
                return True

        return False

    @classmethod
    def update_exp_dir(cls, exp_dir):
        exp_dir = exp_dir.rstrip('/')
        root = os.path.dirname(exp_dir)
        name = os.path.basename(exp_dir)
        params = os.path.join(exp_dir, cls.PARAM_FILENAME)

        assert os.path.exists(exp_dir), "Experiment directory not found: '{}'".format(exp_dir)
        assert os.path.exists(params), "Empty run directory found: '{}'".format(params)

        params = cls._read_params(params)
        new_name = cls.abbreviate(params)

        if name != new_name:
            new_exp_dir = os.path.join(root, new_name)
            assert not os.path.exists(new_exp_dir), \
                "Destination experiment directory already exists: '{}'".format(new_exp_dir)

            print('Renaming:\n {} into\n {}'.format(exp_dir, new_exp_dir))
            shutil.move(exp_dir, new_exp_dir)

    def __init__(self, params, root='runs/', ignore=(), create=True):
        # relative dir containing this run
        self.root = root
        # params to be ignored in the run naming
        self.ignore = ignore
        # parameters of this run
        if isinstance(params, argparse.Namespace):
            params = vars(params)

        def _sanitize(v):
            return tuple(v) if isinstance(v, list) else v

        params = {k: _sanitize(v) for k, v in params.items() if k not in self.ignore}
        self.params = pd.Series(params, name='params')

        # whether to create the run directory if not exists
        self.create = create

        self.name = self.abbreviate(self.params)
        self.path = os.path.join(self.root, self.name)
        self.existing = os.path.exists(self.path)
        self.found = self.existing

        if not self.existing:
            if self.create:
                os.makedirs(self.path)
                self.write_params()
                self.existing = True
            else:
                print("Run directory '{}' not found, but not created.".format(self.path))

        else:
            param_fname = self.path_to(self.PARAM_FILENAME)
            assert os.path.exists(param_fname), "Empty run, parameters not found: '{}'".format(param_fname)
            self.params = self._read_params(param_fname)


    def __str__(self):
        s = StringIO()
        print('Experiment Dir: {}'.format(self.path), file=s)
        print('Params:', file=s)

        # Set display options differently
        with pd.option_context('display.max_rows', None,
                               'display.max_columns', None,
                               'display.width', None):
            print(self.params.to_string(), file=s)

        return s.getvalue()

    def __repr__(self):
        return self.__str__()

    def path_to(self, path):
        path = os.path.join(self.path, path)
        return path

    def add_parameter(self, key, value):
        assert key not in self.params, "Parameter already exists: '{}'".format(key)
        self.params[key] = value
        self._update_run_dir()
        self.write_params()

    def rename_parameter(self, key, new_key):
        assert key in self.params, "Cannot rename non-existent parameter: '{}'".format(key)
        # report the clashing destination name (new_key), not the source name
        assert new_key not in self.params, "Destination name for parameter exists: '{}'".format(new_key)

        self.params[new_key] = self.params[key]
        del self.params[key]

        self._update_run_dir()
        self.write_params()

    def remove_parameter(self, key):
        assert key in self.params, "Cannot remove non-existent parameter: '{}'".format(key)
        del self.params[key]
        self._update_run_dir()
        self.write_params()

    def _update_run_dir(self):
        old_run_dir = self.path
        if self.existing:
            self.name = self.abbreviate(self.params)
            self.path = os.path.join(self.root, self.name)
            assert not os.path.exists(self.path), "Cannot rename run, new name exists: '{}'".format(self.path)
            shutil.move(old_run_dir, self.path)

    @staticmethod
    def _read_params(path):
        # read json to pd.Series
        params = pd.read_json(path, typ='series')
        # transform lists to tuples (for hashability)
        params = params.apply(lambda x: tuple(x) if isinstance(x, list) else x)
        return params

    def write_params(self):
        # write Series as json
        self.params.to_json(self.path_to(self.PARAM_FILENAME))

def test():
    parser = argparse.ArgumentParser(description='Experiment Manager Test')
    parser.add_argument('-e', '--epochs', type=int, default=70)
    parser.add_argument('-b', '--batch-size', type=int, default=64)
    parser.add_argument('-m', '--model', choices=('1d-conv', 'paper'), default='1d-conv')
    parser.add_argument('-s', '--seed', type=int, default=23)
    parser.add_argument('--no-cuda', action='store_true')
    parser.set_defaults(no_cuda=False)
    args = parser.parse_args()

    run = Experiment(args, root='prova', ignore=['no_cuda'])
    print(run)
    print(run.path_to('ckpt/best.h5'))
```
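Run directory names come from `Experiment.abbreviate` in its default (non-hashed) mode. Each parameter name is cut to one character past the longest prefix it shares with any other parameter name. The shortened name is joined to its value, and the pieces are sorted and joined with `_`. The sketch below follows the same rules without dependencies: it uses a plain `dict` instead of `pd.Series`, and the function names are hypothetical. It is checked against the defaults of `test()`.

```python
import numbers
import os


def abbr(name, value, params):
    """Abbreviate one parameter following the rules of Experiment._abbr (sketch)."""
    # One character past the longest prefix shared with any other parameter name
    prefixes = [name[:len(os.path.commonprefix((p, name))) + 1] for p in params if p != name]
    prefix = max(prefixes, key=len) if prefixes else name

    sep = ''
    if isinstance(value, str):
        sep = '-'
    elif isinstance(value, numbers.Number):
        value = '{:g}'.format(value)
        # keep a separator if the prefix itself ends with a digit
        sep = '-' if prefix[-1].isdigit() else ''
    elif isinstance(value, (list, tuple)):
        # path separators would create sub-directories, so they become '|'
        value = ','.join(str(v).replace(os.sep, '|') for v in value)
        sep = '-'
    return '{}{}{}'.format(prefix, sep, value)


def abbreviate(params):
    """Sorted, '_'-joined abbreviations of all parameters."""
    return '_'.join(sorted(abbr(k, v, params) for k, v in params.items()))


# Defaults of test() in experiment.py, with 'no_cuda' ignored
print(abbreviate({'epochs': 70, 'batch_size': 64, 'model': '1d-conv', 'seed': 23}))
# b64_e70_m-1d-conv_s23
```

The same rules appear to explain segments such as `l0.001` and `d-|root|meye|data|NN_human_mouse_eyes|` in the run path used by `convert_model.py`. The second is consistent with a list or tuple value whose path separators were replaced by `|`.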

losses.py

ADDED

@@ -0,0 +1,18 @@

```python
import tensorflow as tf
from tensorflow.keras import backend as K

def boundary_loss(y_true, y_pred):
    """Additional loss focusing on boundaries"""
    # Compute gradients
    dy_true, dx_true = tf.image.image_gradients(y_true)
    dy_pred, dx_pred = tf.image.image_gradients(y_pred)

    # Compute boundary loss
    loss = tf.reduce_mean(tf.abs(dy_pred - dy_true) + tf.abs(dx_pred - dx_true))
    return loss * 0.5  # weight factor

def enhanced_binary_crossentropy(y_true, y_pred):
    """Combine standard BCE with boundary loss"""
    bce = tf.keras.losses.binary_crossentropy(y_true, y_pred)
    boundary = boundary_loss(y_true, y_pred)
    return bce + boundary
```
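`boundary_loss` compares the spatial gradients of the target and the prediction. `tf.image.image_gradients` returns forward differences with the same NHWC shape as its input, so the last row of `dy` and the last column of `dx` are zero. The NumPy sketch below performs the same computation so values can be checked without TensorFlow. The function names are hypothetical.

```python
import numpy as np


def image_gradients_np(img):
    """Forward differences in NHWC layout, zero-padded like tf.image.image_gradients."""
    img = np.asarray(img, dtype=np.float64)
    dy = np.zeros_like(img)
    dx = np.zeros_like(img)
    dy[:, :-1, :, :] = img[:, 1:, :, :] - img[:, :-1, :, :]  # vertical: I(y+1, x) - I(y, x)
    dx[:, :, :-1, :] = img[:, :, 1:, :] - img[:, :, :-1, :]  # horizontal: I(y, x+1) - I(y, x)
    return dy, dx


def boundary_loss_np(y_true, y_pred, weight=0.5):
    """Mean absolute gradient mismatch, scaled by 0.5 as in losses.py."""
    dy_t, dx_t = image_gradients_np(y_true)
    dy_p, dx_p = image_gradients_np(y_pred)
    return weight * float(np.mean(np.abs(dy_p - dy_t) + np.abs(dx_p - dx_t)))


# Target with a vertical edge between its two columns, shape (1, 2, 2, 1)
y_true = np.array([[0, 1], [0, 1]]).reshape(1, 2, 2, 1)
flat = np.zeros_like(y_true)
print(boundary_loss_np(y_true, flat))          # 0.25: the edge is missing from the prediction
print(boundary_loss_np(y_true, y_true + 0.3))  # about 0: a uniform offset has the same gradients
```

The second case shows why `enhanced_binary_crossentropy` keeps the BCE term: the boundary term alone does not penalize a prediction that is uniformly shifted.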

matlab/Meye.m

ADDED

@@ -0,0 +1,310 @@

```matlab
classdef Meye

    properties (Access=private)
        model
    end


    methods

        % CONSTRUCTOR
        %------------------------------------------------------------------
        function self = Meye(modelPath)
            % Class constructor
            arguments
                modelPath char {mustBeText}
            end

            % Change the current directory to the directory where the
            % original class is, so that the package with the custom layers
            % is created there. onCleanup restores the previous folder even
            % if the import below throws an error.
            classPath = getClassPath(self);
            oldFolder = cd(classPath);
            restoreFolder = onCleanup(@() cd(oldFolder));
            % Import the model saved as ONNX
            self.model = importONNXNetwork(modelPath, ...
                'GenerateCustomLayers',true, ...
                'PackageName','customLayers_meye',...
                'InputDataFormats', 'BSSC',...
                'OutputDataFormats',{'BSSC','BC'});

            % Manually change the "nearest" option to "linear" inside the
            % automatically generated custom layers. This is necessary
            % because MATLAB does not yet translate these ONNX layers
            % correctly into Deep Learning Toolbox layers
            self.nearest2Linear([classPath filesep '+customLayers_meye'])

            % Go back to the old current folder (clearing the onCleanup
            % object runs cd(oldFolder))
            clear restoreFolder
        end
```
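The constructor's workaround amounts to a text substitution over the generated layer files. Below is a minimal Python sketch of that idea, assuming the generated files are plain text in one folder and that their names contain `Shape_To_Upsample` (the token the MATLAB code searches for). `patch_upsample_layers`, the demo file names and the demo file contents are hypothetical, and writing the substituted text back to disk is an assumed final step.

```python
import tempfile
import warnings
from pathlib import Path

BEFORE = '"half_pixel", "nearest",'
AFTER = '"half_pixel", "linear",'


def patch_upsample_layers(package_dir, token="Shape_To_Upsample",
                          before=BEFORE, after=AFTER):
    """Replace `before` with `after` in files whose names contain `token`.

    Returns the names of the files that were rewritten.
    """
    changed = []
    for path in sorted(Path(package_dir).iterdir()):
        if not path.is_file() or token not in path.name:
            continue
        text = path.read_text()
        if before not in text:
            continue
        if not changed:
            # Warn only on the first file that needs fixing
            warnings.warn("Switching generated upsampling layers from 'nearest' to 'linear'")
        path.write_text(text.replace(before, after))
        changed.append(path.name)
    return changed


# Demo on a throwaway folder with hypothetical file names and contents
with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / "Shape_To_UpsampleLayer1000.m"
    target.write_text('Y = resizeOp(X, "half_pixel", "nearest", scales);')
    other = Path(tmp) / "OtherLayer.m"
    other.write_text(BEFORE)
    with warnings.catch_warnings():
        warnings.simplefilter("ignore")
        first = patch_upsample_layers(tmp)   # patches the matching file
        second = patch_upsample_layers(tmp)  # nothing left to patch
    patched_text = target.read_text()
    other_text = other.read_text()
```

Matching on the exact `"half_pixel", "nearest",` fragment rather than on `"nearest"` alone keeps the substitution narrow, so unrelated uses of the word in the generated code are left untouched; running it a second time is a no-op.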

```matlab
        % PREDICTION OF SINGLE IMAGES
        %------------------------------------------------------------------
        function [pupilMask, eyeProb, blinkProb] = predictImage(self, inputImage, options)
            % Predicts the pupil location on a single image
            arguments
                self
                inputImage
                options.roiPos = []
                options.threshold = []
            end

            roiPos = options.roiPos;

            % Convert the image to grayscale if RGB
            if size(inputImage,3) > 1
                inputImage = im2gray(inputImage);
            end

            % Crop the frame to the desired ROI
            if ~isempty(roiPos)
                crop = inputImage(roiPos(2):roiPos(2)+roiPos(4)-1,...
                    roiPos(1):roiPos(1)+roiPos(3)-1);
            else
                crop = inputImage;
            end

            % Preprocessing: resize to the 128x128 network input and scale
            % to [0, 1] (skipped for all-black frames to avoid dividing by 0)
            img = double(imresize(crop,[128 128]));
            maxVal = max(img,[],'all');
            if maxVal > 0
                img = img / maxVal;
            end

            % Do the prediction
            [rawMask, info] = predict(self.model, img);
            eyeProb = info(1);
            blinkProb = info(2);

            % Reinsert the cropped prediction in the frame
            if ~isempty(roiPos)
                pupilMask = zeros(size(inputImage));
                pupilMask(roiPos(2):roiPos(2)+roiPos(4)-1,...
                    roiPos(1):roiPos(1)+roiPos(3)-1) = imresize(rawMask, [roiPos(4), roiPos(3)],"bilinear");
            else
                pupilMask = imresize(rawMask,size(inputImage),"bilinear");
            end

            % Apply a threshold to the image if requested
            if ~isempty(options.threshold)
                pupilMask = pupilMask > options.threshold;
            end

        end
```
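`predictImage` crops with a 1-based `[x, y, width, height]` ROI, normalizes the crop by its maximum, and pastes the resized prediction back into a zero-filled frame. The NumPy sketch below shows only the index arithmetic, translated to Python's 0-based, end-exclusive slices; it leaves out the 128x128 resize and the network call, the helper names are hypothetical, and the zero-maximum guard is an added safety check.

```python
import numpy as np


def crop_roi(image, roi):
    """Crop a 2-D image using a MATLAB-style 1-based [x, y, width, height] ROI."""
    x, y, w, h = (int(v) for v in roi)
    # MATLAB rows y:y+h-1 and columns x:x+w-1 become 0-based half-open slices
    return image[y - 1:y - 1 + h, x - 1:x - 1 + w]


def normalize_by_max(img):
    """Scale by the maximum value; an all-zero image is returned unchanged."""
    img = np.asarray(img, dtype=np.float64)
    peak = img.max()
    return img / peak if peak > 0 else img


def paste_roi(mask_crop, full_shape, roi):
    """Insert a crop-sized mask into a zero-filled full-frame mask."""
    x, y, w, h = (int(v) for v in roi)
    full = np.zeros(full_shape, dtype=np.float64)
    full[y - 1:y - 1 + h, x - 1:x - 1 + w] = mask_crop
    return full


frame = np.arange(1, 31, dtype=np.float64).reshape(5, 6)  # 5 rows, 6 columns
roi = [2, 3, 3, 2]                  # x=2, y=3, width=3, height=2
crop = crop_roi(frame, roi)         # MATLAB rows 3-4, columns 2-4
img = normalize_by_max(crop)
mask = paste_roi(img > 0.9, frame.shape, roi)
```

Here the crop holds the values 14-16 and 20-22, so after dividing by 22 only the bottom row of the crop exceeds 0.9, and those three pixels are the only non-zero entries of the full-frame mask.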

```matlab
        % PREDICT A MOVIE AND GET A TABLE WITH THE RESULTS
        %------------------------------------------------------------------
        function tab = predictMovie(self, moviePath, options)
            % Predicts an entire video file and returns a results table
            %
            % tab = predictMovie(moviePath, name-value)
            %
            % INPUT(S)
            % - moviePath: (char/string) Full path of a video file.
            % - name-value pairs
            %   - roiPos: [x,y,width,height] 4-element vector defining a
            %     rectangle containing the eye. Works best if width and
            %     height are similar. If empty, the prediction is done on
            %     the full frame (Default: []).
            %   - threshold: [0-1] The pupil prediction is binarized with
            %     this threshold to measure pupil size (Default: 0.4).
            %
            % OUTPUT(S)
            % - tab: a MATLAB table containing data of the analyzed video

            arguments
                self
                moviePath char {mustBeText}
                options.roiPos double = []
                options.threshold = 0.4;
            end

            % Initialize a video reader
            v = VideoReader(moviePath);
            totFrames = v.NumFrames;

            % Initialize Variables
            frameN = zeros(totFrames,1,'double');
            frameTime = zeros(totFrames,1,'double');
            binaryMask = cell(totFrames,1);
            pupilArea = zeros(totFrames,1,'double');
            isEye = zeros(totFrames,1,'double');
            isBlink = zeros(totFrames,1,'double');

            tic
            for i = 1:totFrames
                % Progress report
                if toc>10
                    fprintf('%.1f%% - Processing frame (%u/%u)\n', (i/totFrames)*100 , i, totFrames)
                    tic
                end

                % Read a frame and make its prediction
                frame = read(v, i, 'native');
                [pupilMask, eyeProb, blinkProb] = self.predictImage(frame, roiPos=options.roiPos,...
                    threshold=options.threshold);

                % Save results for this frame
                frameN(i) = i;
                frameTime(i) = v.CurrentTime;
                binaryMask{i} = pupilMask > options.threshold;
                pupilArea(i) = sum(binaryMask{i},"all");
                isEye(i) = eyeProb;
                isBlink(i) = blinkProb;
            end
            % Save all the results in a final table
            tab = table(frameN,frameTime,binaryMask,pupilArea,isEye,isBlink);
        end
```
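Per frame, `predictMovie` binarizes the mask with `threshold` and counts the pixels above it to obtain `pupilArea`, alongside the eye and blink probabilities. A Python sketch of that aggregation over already-computed masks, using hypothetical inputs; unlike the MATLAB code, which reads `frameTime` from `VideoReader.CurrentTime`, it approximates the time as `i / fps`, which assumes a constant frame rate.

```python
import numpy as np


def summarize_frames(masks, eye_probs, blink_probs, fps, threshold=0.4):
    """Build one record per frame, mirroring the columns of predictMovie's table."""
    rows = []
    for i, (mask, eye, blink) in enumerate(zip(masks, eye_probs, blink_probs), start=1):
        binary = np.asarray(mask) > threshold
        rows.append({
            "frameN": i,
            "frameTime": i / fps,            # assumption: constant frame rate
            "binaryMask": binary,
            "pupilArea": int(binary.sum()),  # number of pupil pixels
            "isEye": float(eye),
            "isBlink": float(blink),
        })
    return rows


# Hypothetical stand-ins for two frames of network output
masks = [np.array([[0.1, 0.5], [0.9, 0.3]]), np.zeros((2, 2))]
rows = summarize_frames(masks, eye_probs=[0.98, 0.20], blink_probs=[0.01, 0.95], fps=25)
```

Pupil area is therefore measured in pixels of the original frame (the mask is resized back to full resolution before thresholding), so it only compares across recordings made with the same camera geometry.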

```matlab
        % PREVIEW OF A PREDICTED MOVIE
        %------------------------------------------------------------------
        function predictMovie_Preview(self, moviePath, options)
            % Displays a live-preview of prediction for a video file

            arguments
                self
                moviePath char {mustBeText}
                options.roiPos double = []
                options.threshold double = []
            end
            roiPos = options.roiPos;


            % Initialize a video reader
            v = VideoReader(moviePath);
            % Initialize images to show
            blankImg = zeros(v.Height, v.Width, 'uint8');
            cyanColor = cat(3, blankImg, blankImg+255, blankImg+255);
            pupilTransparency = blankImg;

            % Create a figure for the preview
            figHandle = figure(...
                'Name','MEYE video preview',...
                'NumberTitle','off',...
                'ToolBar','none',...
                'MenuBar','none', ...
                'Color',[.1, .1, .1]);

            ax = axes('Parent',figHandle,...
                'Units','normalized',...
                'Position',[0 0 1 .94]);

            imHandle = imshow(blankImg,'Parent',ax);
            hold on
            cyanHandle = imshow(cyanColor,'Parent',ax);
            cyanHandle.AlphaData = pupilTransparency;
            rect = rectangle('LineWidth',1.5, 'LineStyle','-.','EdgeColor',[1,0,0],...
                'Parent',ax,'Position',[0,0,0,0]);
            hold off
            title(ax,'MEYE Video Preview', 'Color',[1,1,1])

            % Movie-Showing loop
            while exist("figHandle","var") && ishandle(figHandle) && hasFrame(v)
                try
                    tic
                    frame = readFrame(v);

                    % Actually do the prediction
                    [pupilMask, eyeProb, blinkProb] = self.predictImage(frame, roiPos=roiPos,...
                        threshold=options.threshold);

                    % Update graphic elements
                    imHandle.CData = frame;
                    cyanHandle.AlphaData = imresize(pupilMask, [v.Height, v.Width]);
                    if ~isempty(roiPos)
                        rect.Position = roiPos;
                    end
                    titStr = sprintf('Eye: %.2f%% - Blink:%.2f%% - FPS:%.1f',...
                        eyeProb*100, blinkProb*100, 1/toc);
                    ax.Title.String = titStr;
                    drawnow
                catch ME
                    warning(ME.message)
                    close(figHandle)
                end
            end
            disp('Stop preview.')
        end
```
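In the preview, the solid cyan image sits on top of the frame and its `AlphaData` is the predicted pupil mask, so each displayed pixel is a mix of frame and cyan weighted by the mask value. MATLAB's graphics system does this compositing itself; the NumPy sketch below only reproduces the standard alpha ("over") blend to show the arithmetic, assuming a grayscale frame and an alpha map already resized to the frame. `overlay_mask` is hypothetical.

```python
import numpy as np


def overlay_mask(frame_gray, alpha, color=(0, 255, 255)):
    """Blend a solid color (cyan by default) over a grayscale frame.

    alpha holds per-pixel opacities in [0, 1], e.g. the predicted pupil mask.
    """
    frame = np.asarray(frame_gray, dtype=np.float64)
    rgb = np.repeat(frame[..., None], 3, axis=2)  # gray -> RGB
    a = np.clip(np.asarray(alpha, dtype=np.float64), 0.0, 1.0)[..., None]
    out = (1.0 - a) * rgb + a * np.asarray(color, dtype=np.float64)
    return np.round(out).astype(np.uint8)


frame = np.full((2, 2), 100, dtype=np.uint8)
alpha = np.array([[0.0, 1.0], [0.5, 0.0]])
out = overlay_mask(frame, alpha)
```

With a thresholded (logical) mask the overlay is either fully cyan or fully transparent; without a threshold, the continuous network output produces a soft halo whose opacity tracks the prediction confidence.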

```matlab


    end


    %------------------------------------------------------------------
    %------------------------------------------------------------------
    % INTERNAL FUNCTIONS
    %------------------------------------------------------------------
    %------------------------------------------------------------------
    methods(Access=private)
        %------------------------------------------------------------------
        function path = getClassPath(~)
            % Returns the full path of the folder containing the class file

            fullPath = mfilename('fullpath');
            [path,~,~] = fileparts(fullPath);
        end

        %------------------------------------------------------------------
        function [fplist,fnlist] = listfiles(~, folderpath, token)
            % Lists the entries of folderpath whose names contain token,
            % returning their full paths (fplist) and names (fnlist)
            listing = dir(folderpath);
            index = 0;
            fplist = {};
            fnlist = {};
            for i = 1:size(listing,1)
                s = listing(i).name;
                if contains(s,token)
                    index = index+1;
                    fplist{index} = [folderpath filesep s];
                    fnlist{index} = s;
                end
            end
        end
```

```matlab
        % nearest2Linear
        %------------------------------------------------------------------
        function nearest2Linear(self, inputPath)
            fP = self.listfiles(inputPath, 'Shape_To_Upsample');

            foundFileToChange = false;
            beforePatter = '"half_pixel", "nearest",';
            afterPattern = '"half_pixel", "linear",';
            for i = 1:length(fP)

                % Get the content of the file
                fID = fopen(fP{i}, 'r');
                f = fread(fID,'*char')';
                fclose(fID);

                % Send a verbose warning the first time we are manually
                % correcting the upsampling layers bug
                if ~foundFileToChange && contains(f,beforePatter)
                    foundFileToChange = true;
                    msg = ['This is a message from MEYE developers.\n' ...
                        'In the current release of the Deep Learning Toolbox, ' ...
                        'MATLAB does not correctly translate all the layers in the ' ...
                        'ONNX network to native MATLAB layers. In particular, the ' ...
                        'automatically generated custom layers that have to do ' ...
                        'with UPSAMPLING are generated with the ''nearest'' instead of ' ...
                        'the ''linear'' mode.\nWe automatically correct for this bug when you ' ...
                        'instantiate a Meye object (hence this warning).\nEverything should work fine, ' ...
```