Upload extensions using SD-Hub extension

This view is limited to 50 files because the commit contains too many changes.


- .gitattributes +196 -0

- extensions/321Prompt/321Prompt/Readme.md +8 -0

- extensions/321Prompt/321Prompt/__init__.py +51 -0

- extensions/321Prompt/321prompt.php +105 -0

- extensions/321Prompt/321prompt.png +0 -0

- extensions/321Prompt/LICENSE +121 -0

- extensions/321Prompt/README.md +41 -0

- extensions/ABG_extension/.gitignore +1 -0

- extensions/ABG_extension/README.md +32 -0

- extensions/ABG_extension/install.py +11 -0

- extensions/ABG_extension/scripts/__pycache__/app.cpython-310.pyc +0 -0

- extensions/ABG_extension/scripts/app.py +183 -0

- extensions/AdverseCleanerExtension/.gitignore +2 -0

- extensions/AdverseCleanerExtension/LICENSE +201 -0

- extensions/AdverseCleanerExtension/README.md +8 -0

- extensions/AdverseCleanerExtension/install.py +12 -0

- extensions/AdverseCleanerExtension/scripts/__pycache__/denoise.cpython-310.pyc +0 -0

- extensions/AdverseCleanerExtension/scripts/denoise.py +74 -0

- extensions/Automatic1111-Geeky-Remb/LICENSE +21 -0

- extensions/Automatic1111-Geeky-Remb/README.md +173 -0

- extensions/Automatic1111-Geeky-Remb/__init__.py +4 -0

- extensions/Automatic1111-Geeky-Remb/install.py +7 -0

- extensions/Automatic1111-Geeky-Remb/requirements.txt +4 -0

- extensions/Automatic1111-Geeky-Remb/scripts/geeky-remb.py +435 -0

- extensions/CFgfade/LICENSE +24 -0

- extensions/CFgfade/README.md +66 -0

- extensions/CFgfade/screenshot.png +0 -0

- extensions/CFgfade/scripts/__pycache__/forge_cfgfade.cpython-310.pyc +0 -0

- extensions/CFgfade/scripts/forge_cfgfade.py +317 -0

- extensions/CloneCleaner/.gitattributes +2 -0

- extensions/CloneCleaner/.gitignore +4 -0

- extensions/CloneCleaner/LICENSE +21 -0

- extensions/CloneCleaner/README.md +86 -0

- extensions/CloneCleaner/prompt_tree.yml +286 -0

- extensions/CloneCleaner/scripts/__pycache__/clonecleaner.cpython-310.pyc +0 -0

- extensions/CloneCleaner/scripts/clonecleaner.py +223 -0

- extensions/CloneCleaner/style.css +33 -0

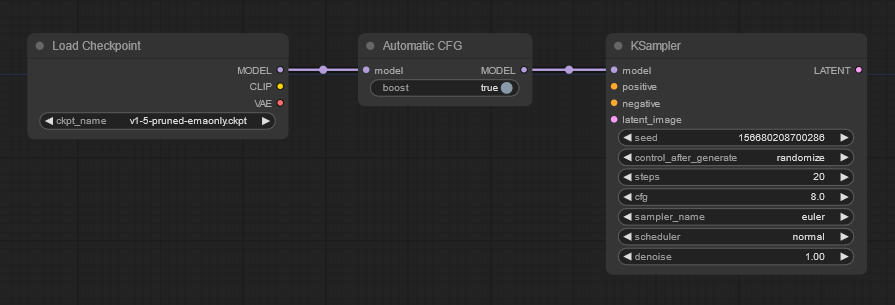

- extensions/ComfyUI-AutomaticCFG/.github/workflows/publish.yml +21 -0

- extensions/ComfyUI-AutomaticCFG/README.md +175 -0

- extensions/ComfyUI-AutomaticCFG/__init__.py +27 -0

- extensions/ComfyUI-AutomaticCFG/experimental_temperature.py +208 -0

- extensions/ComfyUI-AutomaticCFG/grids_example/Enhanced_details_and_tweaked_attention.png +3 -0

- extensions/ComfyUI-AutomaticCFG/grids_example/Iris_Lux_v1051_base_image_vanilla_sampling.png +3 -0

- extensions/ComfyUI-AutomaticCFG/grids_example/excellent_patch_a.jpg +3 -0

- extensions/ComfyUI-AutomaticCFG/grids_example/excellent_patch_b.jpg +3 -0

- extensions/ComfyUI-AutomaticCFG/grids_example/presets.jpg +3 -0

- extensions/ComfyUI-AutomaticCFG/nodes.py +1286 -0

- extensions/ComfyUI-AutomaticCFG/nodes_sag_custom.py +190 -0

- extensions/ComfyUI-AutomaticCFG/presets/A subtle touch.json +1 -0

- extensions/ComfyUI-AutomaticCFG/presets/Crossed conds customized 1.json +1 -0

.gitattributes

CHANGED

```diff
@@ -134,3 +134,199 @@ extensionsa/stable-diffusion-webui-composable-lora/readme/fig8.png filter=lfs di
 extensionsa/stable-diffusion-webui-composable-lora/readme/fig9.png filter=lfs diff=lfs merge=lfs -text
 extensionsa/stable-diffusion-webui-rembg/preview.png filter=lfs diff=lfs merge=lfs -text
 extensionsa/stable-diffusion-webui-tripclipskip/images/xy_plot.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/grids_example/Enhanced_details_and_tweaked_attention.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/grids_example/Iris_Lux_v1051_base_image_vanilla_sampling.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/grids_example/excellent_patch_a.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/grids_example/excellent_patch_b.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/grids_example/presets.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/10[[:space:]]steps[[:space:]]SDXL[[:space:]]AYS[[:space:]]Warp[[:space:]]drive[[:space:]]variation.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/11728UI_00001_.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/12[[:space:]]steps[[:space:]]SDXL[[:space:]]AYS[[:space:]]Warp[[:space:]]drive[[:space:]]workflow.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/12steps.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/24steps.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/I'm[[:space:]]just[[:space:]]throwing[[:space:]]a[[:space:]]few[[:space:]]here[[:space:]]that[[:space:]]I[[:space:]]find[[:space:]]nice/00382UI_00001_.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/I'm[[:space:]]just[[:space:]]throwing[[:space:]]a[[:space:]]few[[:space:]]here[[:space:]]that[[:space:]]I[[:space:]]find[[:space:]]nice/01207UI_00001_.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/I'm[[:space:]]just[[:space:]]throwing[[:space:]]a[[:space:]]few[[:space:]]here[[:space:]]that[[:space:]]I[[:space:]]find[[:space:]]nice/01217UI_00001_.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/I'm[[:space:]]just[[:space:]]throwing[[:space:]]a[[:space:]]few[[:space:]]here[[:space:]]that[[:space:]]I[[:space:]]find[[:space:]]nice/a[[:space:]]bad[[:space:]]upscale[[:space:]]looks[[:space:]]like[[:space:]]low[[:space:]]quality[[:space:]]jpeg.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/I'm[[:space:]]just[[:space:]]throwing[[:space:]]a[[:space:]]few[[:space:]]here[[:space:]]that[[:space:]]I[[:space:]]find[[:space:]]nice/another[[:space:]]bad[[:space:]]upscale[[:space:]]looking[[:space:]]like[[:space:]]jpeg.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/I'm[[:space:]]just[[:space:]]throwing[[:space:]]a[[:space:]]few[[:space:]]here[[:space:]]that[[:space:]]I[[:space:]]find[[:space:]]nice/intradasting.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/I'm[[:space:]]just[[:space:]]throwing[[:space:]]a[[:space:]]few[[:space:]]here[[:space:]]that[[:space:]]I[[:space:]]find[[:space:]]nice/laule.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/I'm[[:space:]]just[[:space:]]throwing[[:space:]]a[[:space:]]few[[:space:]]here[[:space:]]that[[:space:]]I[[:space:]]find[[:space:]]nice/niiiiiice.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/I'm[[:space:]]just[[:space:]]throwing[[:space:]]a[[:space:]]few[[:space:]]here[[:space:]]that[[:space:]]I[[:space:]]find[[:space:]]nice/special[[:space:]]double[[:space:]]pass.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/I'm[[:space:]]just[[:space:]]throwing[[:space:]]a[[:space:]]few[[:space:]]here[[:space:]]that[[:space:]]I[[:space:]]find[[:space:]]nice/web.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/My[[:space:]]current[[:space:]]go-to[[:space:]]settings.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/Start_by_this_one.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/attention_modifiers_explainations.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/potato[[:space:]]attention[[:space:]]guidance.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-AutomaticCFG/workflows/simple[[:space:]]SD[[:space:]]upscale.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-nodes-hnmr/examples/workflow_mbw_multi.png filter=lfs diff=lfs merge=lfs -text
+extensions/ComfyUI-nodes-hnmr/examples/workflow_xyz.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/anime_3.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/anime_4.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/canny_1.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/inpaint_before_fix.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/ip2p_1.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/ip2p_2.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/ip2p_3.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/lineart_1.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/lineart_2.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/lineart_3.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/mlsd_1.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/normal_1.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/normal_2.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/scribble_2.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/seg_2.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/shuffle_1.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/shuffle_2.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/softedge_1.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/tile_new_1.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/tile_new_2.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/tile_new_3.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/github_docs/imgs/tile_new_4.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/test_imgs/bird.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/test_imgs/building.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/test_imgs/building2.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/test_imgs/girls.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/test_imgs/person-leaves.png filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/ControlNet-v1-1-nightly/test_imgs/sn.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/mmpose/demo/resources/demo_coco.gif filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/mmpose/tests/data/humanart/2D_virtual_human/digital_art/000000001648.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/resources/architecture.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/resources/generation.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/resources/iron.gif filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/resources/jay_pose.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/DWPose/resources/lalaland.gif filter=lfs diff=lfs merge=lfs -text
+extensions/OneButtonPrompt/images/background.png filter=lfs diff=lfs merge=lfs -text
+extensions/SD-WebUI-BatchCheckpointPrompt/img/grid.png filter=lfs diff=lfs merge=lfs -text
+extensions/artjiggler/thesaurus.jsonl filter=lfs diff=lfs merge=lfs -text
+extensions/canvas-zoom/dist/templates/frontend/assets/index-0c8f6dbd.js.map filter=lfs diff=lfs merge=lfs -text
+extensions/canvas-zoom/dist/v1_1_v1_5_1/templates/frontend/assets/index-2a280c06.js.map filter=lfs diff=lfs merge=lfs -text
+extensions/hypernetwork-modify/res/example_1.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/hypernetwork-modify/res/example_2.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/latent-upscale/assets/default.png filter=lfs diff=lfs merge=lfs -text
+extensions/latent-upscale/assets/img2img_latent_upscale_process.png filter=lfs diff=lfs merge=lfs -text
+extensions/latent-upscale/assets/nearest-exact-normal1.png filter=lfs diff=lfs merge=lfs -text
+extensions/latent-upscale/assets/nearest-exact-normal2.png filter=lfs diff=lfs merge=lfs -text
+extensions/latent-upscale/assets/nearest-exact-simple1.png filter=lfs diff=lfs merge=lfs -text
+extensions/latent-upscale/assets/nearest-exact-simple2.png filter=lfs diff=lfs merge=lfs -text
+extensions/latent-upscale/assets/nearest-exact-simple8.png filter=lfs diff=lfs merge=lfs -text
+extensions/lazy-pony-prompter/images/ef-showcase.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/lazy-pony-prompter/images/lulu.png filter=lfs diff=lfs merge=lfs -text
+extensions/ponyverse/showcase.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/posex/image/sample-webui2.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/posex/image/sample-webui2.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-3dmodel-loader/doc/images/depth/depth3.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-3dmodel-loader/doc/images/sendto/sendto5.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-3dmodel-loader/models/body/kachujin.fbx filter=lfs diff=lfs merge=lfs -text
+extensions/sd-3dmodel-loader/models/body/vanguard.fbx filter=lfs diff=lfs merge=lfs -text
+extensions/sd-3dmodel-loader/models/body/warrok.fbx filter=lfs diff=lfs merge=lfs -text
+extensions/sd-3dmodel-loader/models/body/ybot.fbx filter=lfs diff=lfs merge=lfs -text
+extensions/sd-3dmodel-loader/models/hand/hand_right.fbx filter=lfs diff=lfs merge=lfs -text
+extensions/sd-3dmodel-loader/models/pose.vrm filter=lfs diff=lfs merge=lfs -text
+extensions/sd-3dmodel-loader/models/pose2.fbx filter=lfs diff=lfs merge=lfs -text
+extensions/sd-canvas-editor/doc/images/overall.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-canvas-editor/doc/images/panels.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-canvas-editor/doc/images/photos.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-model-organizer/pic/readme/logo.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-promptbook/static/masterpiece[[:space:]]+[[:space:]]unwanted[[:space:]]+[[:space:]]bad[[:space:]]anatomy.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-3d-open-pose-editor/downloads/pose/0.5.1675469404/pose_solution_packed_assets.data filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-Lora-queue-helper/docs/output_sample.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-agent-scheduler/docs/images/walkthrough.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-dycfg/images/05.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-dycfg/images/09.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-img2txt/sd-webui-img2txt.gif filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-inpaint-anything/images/inpaint_anything_ui_image_1.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-lcm-sampler/images/img2.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-manga-inpainting/manga_inpainting/repo/examples/representative.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-panorama-tools/images/example_2.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-panorama-tools/images/example_3.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-panorama-tools/images/panorama_tools_ui_screenshot.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-picbatchwork/bin/ebsynth.dll filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-picbatchwork/bin/ebsynth.exe filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-picbatchwork/img/2.gif filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-pixelart/examples/custom_palette_demo.mp4 filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-real-image-artifacts/examples/before.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-rich-text/assets/color.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-rich-text/assets/font.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-rich-text/assets/footnote.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-rich-text/assets/size.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-samplers-scheduler-for-v1.6/images/example2.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-samplers-scheduler-for-v1.6/images/example3.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-sd-webui-DPMPP_2M_Karras_v2/images/sample1.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-state-manager/preview-docked.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-state-manager/preview-modal.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-state-manager/toma-chan.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-timemachine/images/tm_result.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-udav2/metric_depth/assets/compare_zoedepth.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-udav2/metric_depth/dataset/splits/hypersim/train.txt filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-waifu2x-upscaler/waifu2x/yu45020/model_check_points/Upconv_7/anime/noise0_scale2.0x_model.json filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-waifu2x-upscaler/waifu2x/yu45020/model_check_points/Upconv_7/anime/noise1_scale2.0x_model.json filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-waifu2x-upscaler/waifu2x/yu45020/model_check_points/Upconv_7/anime/noise2_scale2.0x_model.json filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-waifu2x-upscaler/waifu2x/yu45020/model_check_points/Upconv_7/anime/noise3_scale2.0x_model.json filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-waifu2x-upscaler/waifu2x/yu45020/model_check_points/Upconv_7/photo/noise0_scale2.0x_model.json filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-waifu2x-upscaler/waifu2x/yu45020/model_check_points/Upconv_7/photo/noise1_scale2.0x_model.json filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-waifu2x-upscaler/waifu2x/yu45020/model_check_points/Upconv_7/photo/noise2_scale2.0x_model.json filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-waifu2x-upscaler/waifu2x/yu45020/model_check_points/Upconv_7/photo/noise3_scale2.0x_model.json filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-xyz-addon/img/Extra-Network-Weight.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-xyz-addon/img/Multi-Axis-2.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-xyz-addon/img/Multi-Axis-3.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-xyz-addon/img/Prompt-SR-Combinations.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-xyz-addon/img/Prompt-SR-P.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-xyz-addon/img/Prompt-SR-Permutations-1-2.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-xyz-addon/img/Prompt-SR-Permutations-2.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-xyz-addon/img/Prompt-SR-Permutations.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd-webui-xyz-addon/img/Prompt-SR.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd_webui_masactrl/resources/img/xyz_grid-0010-1508457017.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd_webui_masactrl-ash/resources/img/xyz_grid-0010-1508457017.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd_webui_ootdiffusion/preview.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd_webui_realtime_lcm_canvas/preview.png filter=lfs diff=lfs merge=lfs -text
+extensions/sd_webui_sghm/preview.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-dumpunet/images/IN00.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-dumpunet/images/IN05.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-dumpunet/images/OUT06.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-dumpunet/images/OUT11.jpg filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-dumpunet/images/README_00_01_color.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-dumpunet/images/README_00_01_gray.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-dumpunet/images/README_02.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-dumpunet/images/attn-IN01.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-dumpunet/images/attn-OUT10.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-eyemask/models/shape_predictor_68_face_landmarks.dat filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/highres.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_10x12.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_1x120.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_2x60.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_3x40.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_4x15.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_4x23.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_4x3.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_4x30.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_4x5.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_4x8.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_6x20.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_8x15.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/std_10.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/std_120.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/std_20.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/std_30.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/std_60.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-hires-fix-progressive/img/std_90.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-intm/images/IMAGE.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-simple-manga-maker/SP-MangaEditer/03_images/imgPromptHelper/bottom/00106--rebuild.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-simple-manga-maker/SP-MangaEditer/03_images/imgPromptHelper/skin/wrinkles-skin.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-simple-manga-maker/SP-MangaEditer/03_images/imgPromptHelper/top-bottom/00067-2139881315.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-simple-manga-maker/SP-MangaEditer/font/851CHIKARA-DZUYOKU_kanaA_004/851CHIKARA-DZUYOKU_kanaA_004.ttf filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-simple-manga-maker/SP-MangaEditer/font/851MkPOP_101/851MkPOP_101.ttf filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-simple-manga-maker/SP-MangaEditer/font/ChalkJP_3/Chalk-JP.otf filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-simple-manga-maker/SP-MangaEditer/font/DokiDokiFont2/DokiDokiFantasia.otf filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-simple-manga-maker/SP-MangaEditer/font/DotGothic16/DotGothic16-Regular.ttf filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-simple-manga-maker/SP-MangaEditer/font/Klee_One/KleeOne-Regular.ttf filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-simple-manga-maker/SP-MangaEditer/font/OhisamaFont11/OhisamaFont11.ttf filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-simple-manga-maker/SP-MangaEditer/font/Rampart_One/RampartOne-Regular.ttf filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-simple-manga-maker/SP-MangaEditer/font/Stick/Stick-Regular.ttf filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-simple-manga-maker/SP-MangaEditer/font/Train_One/TrainOne-Regular.ttf filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-size-travel/img/ddim_advance.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-size-travel/img/ddim_simple.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-size-travel/img/eular_a_advance.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-sonar/img/momentum.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-text2prompt/pic/pic0.png filter=lfs diff=lfs merge=lfs -text
+extensions/stable-diffusion-webui-two-shot/gradio-3.16.2-py3-none-any.whl filter=lfs diff=lfs merge=lfs -text
```
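Every line added to `.gitattributes` follows the same pattern: a repository path, then `filter=lfs diff=lfs merge=lfs -text`, which routes the file through Git LFS and unsets the `text` attribute so Git does not treat it as text. Spaces inside paths are written as `[[:space:]]`, which is how `git lfs track` records such paths. A minimal Python sketch of how one entry could be built, assuming spaces are the only character that needs escaping (`lfs_attributes_line` is a hypothetical helper, not part of Git or Git LFS):

```python
def lfs_attributes_line(path: str) -> str:
    """Build a .gitattributes entry that stores `path` in Git LFS.

    Assumption: spaces are the only characters escaped, written as the
    POSIX class [[:space:]] exactly as in the entries of this commit.
    """
    escaped = path.replace(" ", "[[:space:]]")
    return f"{escaped} filter=lfs diff=lfs merge=lfs -text"


if __name__ == "__main__":
    # A path without spaces is emitted unchanged.
    print(lfs_attributes_line("extensions/ComfyUI-AutomaticCFG/workflows/12steps.png"))
    # A path with spaces gets each space replaced by [[:space:]].
    print(lfs_attributes_line("extensions/ComfyUI-AutomaticCFG/workflows/simple SD upscale.png"))
```

The second call reproduces the `simple[[:space:]]SD[[:space:]]upscale.png` entry added in this hunk.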

extensions/321Prompt/321Prompt/Readme.md

ADDED

```diff
@@ -0,0 +1,8 @@
+This is my attempt to integrate this script as an extension into A1111, but it's not working and I don't have time!
+(I'll let you figure out why, with all my apologies; if this piece of code can help you, so much the better.)
+
+Place this directory, "321prompt", with the file "__init__.py" inside it, in the extensions directory of A1111.
+Relaunch A1111 and... it doesn't work!
+
+Hope you do better!
+Thanks
```
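One plausible reason the extension never loads, assuming a stock A1111 install: the WebUI imports extension scripts only from a `scripts/` subfolder of each extension (for example `extensions/321prompt/scripts/*.py`), while the README places the code in `__init__.py` at the top of the extension directory. The hypothetical helper `webui_script_files` below mimics that lookup with `pathlib` so a layout can be checked outside the WebUI; it is a sketch of the assumed rule, not A1111's own loader, and `prompt_generator.py` is an illustrative file name.

```python
import tempfile
from pathlib import Path


def webui_script_files(extension_dir):
    """List the .py files A1111 would pick up as scripts for one extension.

    Sketch of the assumed rule: only regular *.py files directly inside
    <extension_dir>/scripts/ are loaded; files elsewhere are ignored.
    """
    scripts_dir = Path(extension_dir) / "scripts"
    if not scripts_dir.is_dir():
        return []
    return sorted(
        p.name for p in scripts_dir.iterdir()
        if p.is_file() and p.suffix.lower() == ".py"
    )


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        ext = Path(root) / "321Prompt"
        # Layout from this commit: code in a nested package, no scripts/ folder.
        (ext / "321Prompt").mkdir(parents=True)
        (ext / "321Prompt" / "__init__.py").write_text("# script code\n")
        print(webui_script_files(ext))  # [] -> nothing would be loaded

        # Hypothetical fix: move the code to scripts/prompt_generator.py.
        (ext / "scripts").mkdir()
        (ext / "scripts" / "prompt_generator.py").write_text("# script code\n")
        print(webui_script_files(ext))  # ['prompt_generator.py']
```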

extensions/321Prompt/321Prompt/__init__.py

ADDED

@@ -0,0 +1,51 @@

```python
import gradio as gr
from modules import scripts

class PromptGeneratorScript(scripts.Script):
    def title(self):
        return "Prompt Generator"

    def show(self, is_img2img):
        return True

    def ui(self, is_img2img):
        start_phrase1 = gr.Textbox(label="Début de la phrase 1", placeholder="Un [ chat: chien: ")
        start_value1 = gr.Number(label="Valeur de départ 1", value=0.0)
        end_value1 = gr.Number(label="Valeur d'arrivée 1", value=1.0)
        end_phrase1 = gr.Textbox(label="Fin de la phrase 1", placeholder="] dans un jardin.")
        num_phrases1 = gr.Number(label="Nombre de phrases 1", value=10)

        start_phrase2 = gr.Textbox(label="Début de la phrase 2", placeholder="le temps est [ beau: pluvieux: ", optional=True)
        start_value2 = gr.Number(label="Valeur de départ 2", value=0.0, optional=True)
        end_value2 = gr.Number(label="Valeur d'arrivée 2", value=1.0, optional=True)
        end_phrase2 = gr.Textbox(label="Fin de la phrase 2", placeholder="] sur la montagne.", optional=True)
        num_phrases2 = gr.Number(label="Nombre de phrases 2", value=10, optional=True)

        generate_button = gr.Button("Générer")
        output = gr.Textbox(label="Résultats", lines=10, interactive=False)

        def generate_phrases(start_phrase1, start_value1, end_value1, end_phrase1, num_phrases1,
                             start_phrase2, start_value2, end_value2, end_phrase2, num_phrases2):
            increment1 = (end_value1 - start_value1) / (num_phrases1 - 1)
            increment2 = (end_value2 - start_value2) / (num_phrases2 - 1) if start_phrase2 else 0

            combined_results = ""
            max_phrases = max(num_phrases1, num_phrases2)
            for i in range(max_phrases):
                current_value1 = start_value1 + (increment1 * i)
                current_value2 = start_value2 + (increment2 * i) if start_phrase2 else ''
                phrase1 = f"{start_phrase1}{current_value1}{end_phrase1}"
                phrase2 = f", {start_phrase2}{current_value2}{end_phrase2}" if start_phrase2 else ''
                combined_results += phrase1 + phrase2 + "\n"

            return combined_results

        generate_button.click(fn=generate_phrases,
                              inputs=[start_phrase1, start_value1, end_value1, end_phrase1, num_phrases1,
                                      start_phrase2, start_value2, end_value2, end_phrase2, num_phrases2],
                              outputs=output)

        return [start_phrase1, start_value1, end_value1, end_phrase1, num_phrases1,
                start_phrase2, start_value2, end_value2, end_phrase2, num_phrases2, generate_button, output]

scripts.register_script(PromptGeneratorScript())
```
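Several things in this `__init__.py` look likely to stop it from working, though this has not been checked inside A1111. The WebUI appears to load extension scripts from `.py` files in an extension's `scripts/` folder, not from a package `__init__.py`. As far as I know, `modules.scripts` has no `register_script` function, because A1111 discovers `scripts.Script` subclasses on its own. `gr.Number` delivers floats, so `range(max_phrases)` raises a `TypeError`. A phrase count of 1 divides by zero. Finally, `optional=True` does not appear to be a supported argument of Gradio 3+ components. Below is a minimal, Gradio-free sketch of just the phrase-generation logic, with the float-count and single-phrase cases handled. The helpers `_increment` and `_fmt` are my own names. The `" , "` separator and the `:g` number formatting are assumptions chosen to reproduce the README's example output.

```python
def _increment(start, end, count):
    """Step between consecutive values; a single phrase gets a step of 0 instead of dividing by zero."""
    return (end - start) / (count - 1) if count > 1 else 0.0


def _fmt(value):
    """Format like the README output: 1.0 -> '1', 0.125 -> '0.125' (':g' keeps 6 significant digits)."""
    return f"{value:g}"


def generate_phrases(start_phrase1, start_value1, end_value1, end_phrase1, num_phrases1,
                     start_phrase2="", start_value2=0.0, end_value2=1.0, end_phrase2="", num_phrases2=0):
    # gr.Number hands over floats, but range() needs an int; an empty count means no phrases
    n1 = int(num_phrases1 or 0)
    # The second phrase only counts when its start text is filled in
    n2 = int(num_phrases2 or 0) if start_phrase2 else 0
    inc1 = _increment(start_value1, end_value1, n1)
    inc2 = _increment(start_value2, end_value2, n2)

    lines = []
    # Like the original, the series with fewer phrases keeps stepping past its end value
    for i in range(max(n1, n2)):
        line = f"{start_phrase1}{_fmt(start_value1 + inc1 * i)}{end_phrase1}"
        if start_phrase2:
            # " , " separator as in 321prompt.php and the README example
            line += f" , {start_phrase2}{_fmt(start_value2 + inc2 * i)}{end_phrase2}"
        lines.append(line)
    return "\n".join(lines)


if __name__ == "__main__":
    print(generate_phrases("a [cat: dog:", 0, 1, "] in the garden", 9,
                           "it's [Rainy: sunny: ", 1, 0, " ] Outside", 9))
```

Because the parameter order matches the original, `generate_button.click(fn=generate_phrases, ...)` could keep its existing `inputs` list if this function were dropped into the extension.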

extensions/321Prompt/321prompt.php

ADDED

@@ -0,0 +1,105 @@

```php
<!DOCTYPE html>
<html>
<head>
    <title>Générateur de Phrases</title>
    <style>
        .container {
            display: flex;
            flex-wrap: wrap;
        }
        .box {
            flex: 1;
            min-width: 45%;
            padding: 10px;
            box-sizing: border-box;
        }
        textarea {
            width: 100%;
            height: 200px;
        }
    </style>
</head>
<body>

    <h1>Générateur de Phrases</h1>

    <form method="post" action="">
        <div class="container">
            <!-- Box 1 -->
            <div class="box">
                <label for="start_phrase1">Début de la phrase 1 :</label><br>
                <input type="text" id="start_phrase1" name="start_phrase1" value="<?php echo isset($_POST['start_phrase1']) ? htmlspecialchars($_POST['start_phrase1']) : ''; ?>" required><br><br>

                <label for="start_value1">Valeur de départ :</label><br>
                <input type="number" step="0.01" id="start_value1" name="start_value1" value="<?php echo isset($_POST['start_value1']) ? htmlspecialchars($_POST['start_value1']) : ''; ?>" required><br><br>

                <label for="end_value1">Valeur d'arrivée :</label><br>
                <input type="number" step="0.01" id="end_value1" name="end_value1" value="<?php echo isset($_POST['end_value1']) ? htmlspecialchars($_POST['end_value1']) : ''; ?>" required><br><br>

                <label for="end_phrase1">Fin de la phrase 1 :</label><br>
                <input type="text" id="end_phrase1" name="end_phrase1" value="<?php echo isset($_POST['end_phrase1']) ? htmlspecialchars($_POST['end_phrase1']) : ''; ?>" required><br><br>

                <label for="num_phrases1">Nombre de phrases :</label><br>
                <input type="number" id="num_phrases1" name="num_phrases1" value="<?php echo isset($_POST['num_phrases1']) ? htmlspecialchars($_POST['num_phrases1']) : ''; ?>" required><br><br>
            </div>

            <!-- Box 2 -->
            <div class="box">
                <label for="start_phrase2">Début de la phrase 2 :</label><br>
                <input type="text" id="start_phrase2" name="start_phrase2" value="<?php echo isset($_POST['start_phrase2']) ? htmlspecialchars($_POST['start_phrase2']) : ''; ?>"><br><br>

                <label for="start_value2">Valeur de départ :</label><br>
                <input type="number" step="0.01" id="start_value2" name="start_value2" value="<?php echo isset($_POST['start_value2']) ? htmlspecialchars($_POST['start_value2']) : ''; ?>"><br><br>

                <label for="end_value2">Valeur d'arrivée :</label><br>
                <input type="number" step="0.01" id="end_value2" name="end_value2" value="<?php echo isset($_POST['end_value2']) ? htmlspecialchars($_POST['end_value2']) : ''; ?>"><br><br>

                <label for="end_phrase2">Fin de la phrase 2 :</label><br>
                <input type="text" id="end_phrase2" name="end_phrase2" value="<?php echo isset($_POST['end_phrase2']) ? htmlspecialchars($_POST['end_phrase2']) : ''; ?>"><br><br>

                <label for="num_phrases2">Nombre de phrases :</label><br>
                <input type="number" id="num_phrases2" name="num_phrases2" value="<?php echo isset($_POST['num_phrases2']) ? htmlspecialchars($_POST['num_phrases2']) : ''; ?>"><br><br>
            </div>
        </div>
        <input type="submit" value="Générer">
    </form>

    <?php
    if ($_SERVER["REQUEST_METHOD"] == "POST") {
        // Read the form inputs for the first phrase
        $startPhrase1 = $_POST['start_phrase1'];
        $startValue1 = floatval($_POST['start_value1']);
        $endValue1 = floatval($_POST['end_value1']);
        $endPhrase1 = $_POST['end_phrase1'];
        $numPhrases1 = intval($_POST['num_phrases1']);

        // Read the form inputs for the second phrase (if present)
        $startPhrase2 = isset($_POST['start_phrase2']) ? $_POST['start_phrase2'] : '';
        $startValue2 = isset($_POST['start_value2']) ? floatval($_POST['start_value2']) : 0;
        $endValue2 = isset($_POST['end_value2']) ? floatval($_POST['end_value2']) : 0;
        $endPhrase2 = isset($_POST['end_phrase2']) ? $_POST['end_phrase2'] : '';
        $numPhrases2 = isset($_POST['num_phrases2']) ? intval($_POST['num_phrases2']) : $numPhrases1;

        // Compute the increment for each phrase
        $increment1 = ($endValue1 - $startValue1) / ($numPhrases1 - 1);
        $increment2 = ($startPhrase2 !== '') ? ($endValue2 - $startValue2) / ($numPhrases2 - 1) : 0;

        // Generate the combined phrases
        $combinedResults = "";
        $maxPhrases = max($numPhrases1, $numPhrases2);
        for ($i = 0; $i < $maxPhrases; $i++) {
            $currentValue1 = $startValue1 + ($increment1 * $i);
            $currentValue2 = ($startPhrase2 !== '') ? $startValue2 + ($increment2 * $i) : '';
            $phrase1 = $startPhrase1 . $currentValue1 . $endPhrase1;
            $phrase2 = ($startPhrase2 !== '') ? ' , ' . $startPhrase2 . $currentValue2 . $endPhrase2 : '';
            $combinedResults .= $phrase1 . $phrase2 . "\n";
        }

        // Display the results
        echo "<h2>Résultats :</h2>";
        echo '<textarea readonly>' . htmlspecialchars($combinedResults) . '</textarea>';
    }
    ?>

</body>
</html>
```
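Reading the PHP loop, each line's value seems to come from plain linear interpolation between the start and end values. For a phrase with start value $v_{\text{start}}$, end value $v_{\text{end}}$ and phrase count $n$, the value on zero-based line $i$ works out to the following, with $n_1$ and $n_2$ the counts entered for phrases 1 and 2:

$$
v_i = v_{\text{start}} + i \cdot \frac{v_{\text{end}} - v_{\text{start}}}{n - 1}, \qquad i = 0, 1, \dots, \max(n_1, n_2) - 1
$$

With the README example ($v_{\text{start}} = 0$, $v_{\text{end}} = 1$, $n = 9$), the step is $(1 - 0)/8 = 0.125$, giving $0, 0.125, \dots, 1$; the second phrase runs from 1 to 0 with a step of $-0.125$. The formula also shows two edge cases: $n = 1$ divides by zero, and because the loop runs to the larger count, the phrase with fewer lines continues past its end value.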

extensions/321Prompt/321prompt.png

ADDED


extensions/321Prompt/LICENSE

ADDED

@@ -0,0 +1,121 @@

Creative Commons Legal Code

CC0 1.0 Universal

CREATIVE COMMONS CORPORATION IS NOT A LAW FIRM AND DOES NOT PROVIDE
LEGAL SERVICES. DISTRIBUTION OF THIS DOCUMENT DOES NOT CREATE AN
ATTORNEY-CLIENT RELATIONSHIP. CREATIVE COMMONS PROVIDES THIS
INFORMATION ON AN "AS-IS" BASIS. CREATIVE COMMONS MAKES NO WARRANTIES
REGARDING THE USE OF THIS DOCUMENT OR THE INFORMATION OR WORKS
PROVIDED HEREUNDER, AND DISCLAIMS LIABILITY FOR DAMAGES RESULTING FROM
THE USE OF THIS DOCUMENT OR THE INFORMATION OR WORKS PROVIDED
HEREUNDER.

Statement of Purpose

The laws of most jurisdictions throughout the world automatically confer
exclusive Copyright and Related Rights (defined below) upon the creator
and subsequent owner(s) (each and all, an "owner") of an original work of
authorship and/or a database (each, a "Work").

Certain owners wish to permanently relinquish those rights to a Work for
the purpose of contributing to a commons of creative, cultural and
scientific works ("Commons") that the public can reliably and without fear
of later claims of infringement build upon, modify, incorporate in other
works, reuse and redistribute as freely as possible in any form whatsoever
and for any purposes, including without limitation commercial purposes.
These owners may contribute to the Commons to promote the ideal of a free
culture and the further production of creative, cultural and scientific
works, or to gain reputation or greater distribution for their Work in
part through the use and efforts of others.

For these and/or other purposes and motivations, and without any
expectation of additional consideration or compensation, the person
associating CC0 with a Work (the "Affirmer"), to the extent that he or she
is an owner of Copyright and Related Rights in the Work, voluntarily
elects to apply CC0 to the Work and publicly distribute the Work under its
terms, with knowledge of his or her Copyright and Related Rights in the
Work and the meaning and intended legal effect of CC0 on those rights.

1. Copyright and Related Rights. A Work made available under CC0 may be
protected by copyright and related or neighboring rights ("Copyright and
Related Rights"). Copyright and Related Rights include, but are not
limited to, the following:

i. the right to reproduce, adapt, distribute, perform, display,
communicate, and translate a Work;
ii. moral rights retained by the original author(s) and/or performer(s);
iii. publicity and privacy rights pertaining to a person's image or
likeness depicted in a Work;
iv. rights protecting against unfair competition in regards to a Work,
subject to the limitations in paragraph 4(a), below;
v. rights protecting the extraction, dissemination, use and reuse of data
in a Work;
vi. database rights (such as those arising under Directive 96/9/EC of the
European Parliament and of the Council of 11 March 1996 on the legal
protection of databases, and under any national implementation
thereof, including any amended or successor version of such
directive); and
vii. other similar, equivalent or corresponding rights throughout the
world based on applicable law or treaty, and any national
implementations thereof.

2. Waiver. To the greatest extent permitted by, but not in contravention
of, applicable law, Affirmer hereby overtly, fully, permanently,
irrevocably and unconditionally waives, abandons, and surrenders all of
Affirmer's Copyright and Related Rights and associated claims and causes
of action, whether now known or unknown (including existing as well as
future claims and causes of action), in the Work (i) in all territories
worldwide, (ii) for the maximum duration provided by applicable law or
treaty (including future time extensions), (iii) in any current or future
medium and for any number of copies, and (iv) for any purpose whatsoever,
including without limitation commercial, advertising or promotional
purposes (the "Waiver"). Affirmer makes the Waiver for the benefit of each
member of the public at large and to the detriment of Affirmer's heirs and
successors, fully intending that such Waiver shall not be subject to
revocation, rescission, cancellation, termination, or any other legal or
equitable action to disrupt the quiet enjoyment of the Work by the public
as contemplated by Affirmer's express Statement of Purpose.

3. Public License Fallback. Should any part of the Waiver for any reason
be judged legally invalid or ineffective under applicable law, then the
Waiver shall be preserved to the maximum extent permitted taking into
account Affirmer's express Statement of Purpose. In addition, to the
extent the Waiver is so judged Affirmer hereby grants to each affected
person a royalty-free, non transferable, non sublicensable, non exclusive,
irrevocable and unconditional license to exercise Affirmer's Copyright and
Related Rights in the Work (i) in all territories worldwide, (ii) for the
maximum duration provided by applicable law or treaty (including future
time extensions), (iii) in any current or future medium and for any number
of copies, and (iv) for any purpose whatsoever, including without
limitation commercial, advertising or promotional purposes (the
"License"). The License shall be deemed effective as of the date CC0 was
applied by Affirmer to the Work. Should any part of the License for any
reason be judged legally invalid or ineffective under applicable law, such
partial invalidity or ineffectiveness shall not invalidate the remainder
of the License, and in such case Affirmer hereby affirms that he or she
will not (i) exercise any of his or her remaining Copyright and Related
Rights in the Work or (ii) assert any associated claims and causes of
action with respect to the Work, in either case contrary to Affirmer's
express Statement of Purpose.

4. Limitations and Disclaimers.

a. No trademark or patent rights held by Affirmer are waived, abandoned,
surrendered, licensed or otherwise affected by this document.
b. Affirmer offers the Work as-is and makes no representations or
warranties of any kind concerning the Work, express, implied,
statutory or otherwise, including without limitation warranties of
title, merchantability, fitness for a particular purpose, non
infringement, or the absence of latent or other defects, accuracy, or
the present or absence of errors, whether or not discoverable, all to
the greatest extent permissible under applicable law.
c. Affirmer disclaims responsibility for clearing rights of other persons
that may apply to the Work or any use thereof, including without
limitation any person's Copyright and Related Rights in the Work.
Further, Affirmer disclaims responsibility for obtaining any necessary
consents, permissions or other rights required for any use of the
Work.
d. Affirmer understands and acknowledges that Creative Commons is not a
party to this document and has no duty or obligation with respect to
this CC0 or use of the Work.

extensions/321Prompt/README.md

ADDED

@@ -0,0 +1,41 @@

# 321Prompt
"321prompt.php" is a PHP script that generates prompts for A1111 (Stable Diffusion).
It creates a prompt text file with incremented weights (the numeric switch point in A1111's `[from:to:when]` prompt-editing syntax) to paste into the A1111 interface under "Script/Prompts from file or textbox".

The PHP script works on a classic web server. I tried to turn it into an A1111 extension, but something escapes me (it's buggy).
Do whatever you want with it!

You can generate a prompt text like this in 3-4 clicks:

a [cat: dog:0] in the garden , it's [Rainy: sunny: 1 ] Outside

a [cat: dog:0.125] in the garden , it's [Rainy: sunny: 0.875 ] Outside

a [cat: dog:0.25] in the garden , it's [Rainy: sunny: 0.75 ] Outside

a [cat: dog:0.375] in the garden , it's [Rainy: sunny: 0.625 ] Outside

a [cat: dog:0.5] in the garden , it's [Rainy: sunny: 0.5 ] Outside

a [cat: dog:0.625] in the garden , it's [Rainy: sunny: 0.375 ] Outside

a [cat: dog:0.75] in the garden , it's [Rainy: sunny: 0.25 ] Outside

a [cat: dog:0.875] in the garden , it's [Rainy: sunny: 0.125 ] Outside

a [cat: dog:1] in the garden , it's [Rainy: sunny: 0 ] Outside


Look at the screenshot (321prompt.png) to understand how to use this script!

It works with one sentence (sentence 1; you do not have to fill in sentence 2!).
But you can fill in sentence 1 + sentence 2 for more fun, and it works!
You can also increase or decrease the weight for sentence 1, and independently increase or decrease the weight for sentence 2.
Bye!

Demo here: [https://lostcantina.com/321prompt.php](https://lostcantina.com/321prompt.php)
(Sorry, I'm French, but you can easily translate the page with Chrome!)
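The README's workflow ends by feeding the generated lines to A1111's "Prompts from file or textbox" script, which, as far as I know, runs one generation per non-empty line. If you would rather save the output as a file to load there, a minimal sketch could look like this. `write_prompt_file` is a hypothetical helper, not part of 321Prompt or A1111.

```python
from pathlib import Path


def write_prompt_file(prompts, path):
    """Write one prompt per line and return how many were written.

    Hypothetical helper for illustration; it is not part of 321Prompt or A1111.
    """
    # Strip surrounding whitespace and drop empty entries so no blank prompt is queued
    cleaned = [p.strip() for p in prompts if p.strip()]
    # End with a newline only when there is at least one prompt
    text = "\n".join(cleaned) + ("\n" if cleaned else "")
    Path(path).write_text(text, encoding="utf-8")
    return len(cleaned)
```

For example, `write_prompt_file(output.splitlines(), "prompts.txt")` would save the text produced by the generator, one prompt per line.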

extensions/ABG_extension/.gitignore

ADDED

@@ -0,0 +1 @@

scripts/__pycache__

extensions/ABG_extension/README.md

ADDED

@@ -0,0 +1,32 @@

<h3 align="center">
<b>ABG extension</b>
</h3>

<p align="center">
<a href="https://github.com/KutsuyaYuki/ABG_extension/stargazers"><img src="https://img.shields.io/github/stars/KutsuyaYuki/ABG_extension?style=for-the-badge"></a>
<a href="https://github.com/KutsuyaYuki/ABG_extension/issues"><img src="https://img.shields.io/github/issues/KutsuyaYuki/ABG_extension?style=for-the-badge"></a>
<a href="https://github.com/KutsuyaYuki/ABG_extension/commits/main"><img src="https://img.shields.io/github/last-commit/KutsuyaYuki/ABG_extension?style=for-the-badge"></a>
</p>

## Installation

1. Install the extension by going to the Extensions tab -> Install from URL, pasting the GitHub URL, and clicking Install.
2. After it's installed, go back to the Installed tab in Extensions and press Apply and restart UI.
3. The installation is finished.
4. If the script does not show up or does not work, restart the WebUI.

## Usage

### txt2img

1. At the bottom of the WebUI, under Script, select **ABG Remover**.
2. Select the desired options: **Only save background free pictures** or **Do not auto save**.
3. Generate an image; the result appears in the output area.

### img2img

1. At the bottom of the WebUI, under Script, select **ABG Remover**.
2. Select the desired options: **Only save background free pictures** or **Do not auto save**.
3. **IMPORTANT**: Set **Denoising strength** to a low value, such as **0.01**.

Based on https://huggingface.co/spaces/skytnt/anime-remove-background

extensions/ABG_extension/install.py

ADDED


@@ -0,0 +1,11 @@
