Upload 16 files

Browse files- AllExperimentsSerial.sh +33 -0

- LICENSE +21 -0

- README.md +249 -0

- __init__.py +8 -0

- __main__.py +561 -0

- ai.py +1064 -0

- components.py +951 -0

- convert.py +144 -0

- goals.py +529 -0

- helpers.py +489 -0

- losses.py +60 -0

- media/overview.png +0 -0

- media/resnetTinyFewCombo.png +0 -0

- models.py +120 -0

- requirements.txt +6 -0

- scheduling.py +120 -0

AllExperimentsSerial.sh

ADDED

|

@@ -0,0 +1,33 @@


| 1 |

+

# Baseline

|

| 2 |

+

python . -D CIFAR10 -n ResNetTiny -d "LinMix(a=Point(), b=Box(w=Lin(0,0.031373,150,10)), bw=Lin(0,0.5,150,10))" --batch-size 50 --width 0.031373 --lr 0.001 --normalize-layer True --clip-norm False --lr-multistep $1

|

| 3 |

+

# InSamp

|

| 4 |

+

python . -D CIFAR10 -n ResNetTiny -d "LinMix(a=Point(), b=InSamp(Lin(0,1,150,10)), bw=Lin(0,0.5, 150,10))" --batch-size 50 --width 0.031373 --lr 0.001 --normalize-layer True --clip-norm False --lr-multistep $1

|

| 5 |

+

# InSampLPA

|

| 6 |

+

python . -D CIFAR10 -n ResNetTiny -d "LinMix(a=Point(), b=InSamp(Lin(0,1,150,20), w=Lin(0,0.031373, 150, 20)), bw=Lin(0,0.5, 150, 20))" --batch-size 50 --width 0.031373 --lr 0.001 --normalize-layer True --clip-norm False --lr-multistep $1

|

| 7 |

+

# Adv_{1}InSampLPA

|

| 8 |

+

python . -D CIFAR10 -n ResNetTiny -d "LinMix(a=IFGSM(w=Lin(0,0.031373,20,20), k=1), b=InSamp(Lin(0,1,150,10), w=Lin(0,0.031373,150,10)), bw=Lin(0,0.5,150,10))" --batch-size 50 --width 0.031373 --lr 0.001 --normalize-layer True --clip-norm False --lr-multistep $1

|

| 9 |

+

# Adv_{3}InSampLPA

|

| 10 |

+

python . -D CIFAR10 -n ResNetTiny -d "LinMix(a=IFGSM(w=Lin(0,0.031373,20,20), k=3), b=InSamp(Lin(0,1,150,10), w=Lin(0,0.031373,150,10)), bw=Lin(0,0.5,150,10))" --batch-size 50 --width 0.031373 --lr 0.001 --normalize-layer True --clip-norm False --lr-multistep $1

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

# Baseline

|

| 14 |

+

python . -D CIFAR10 -n ResNetTiny_FewCombo -d "LinMix(a=Point(), b=Box(w=Lin(0,0.031373,150,10)), bw=Lin(0,0.5,150,10))" --batch-size 50 --width 0.031373 --lr 0.001 --normalize-layer True --clip-norm False --lr-multistep $1

|

| 15 |

+

# InSamp

|

| 16 |

+

python . -D CIFAR10 -n ResNetTiny_FewCombo -d "LinMix(a=Point(), b=InSamp(Lin(0,1,150,10)), bw=Lin(0,0.5, 150,10))" --batch-size 50 --width 0.031373 --lr 0.001 --normalize-layer True --clip-norm False --lr-multistep $1

|

| 17 |

+

# InSampLPA

|

| 18 |

+

python . -D CIFAR10 -n ResNetTiny_FewCombo -d "LinMix(a=Point(), b=InSamp(Lin(0,1,150,20), w=Lin(0,0.031373, 150, 20)), bw=Lin(0,0.5, 150, 20))" --batch-size 50 --width 0.031373 --lr 0.001 --normalize-layer True --clip-norm False --lr-multistep $1

|

| 19 |

+

# Adv_{1}InSampLPA

|

| 20 |

+

python . -D CIFAR10 -n ResNetTiny_FewCombo -d "LinMix(a=IFGSM(w=Lin(0,0.031373,20,20), k=1), b=InSamp(Lin(0,1,150,10), w=Lin(0,0.031373,150,10)), bw=Lin(0,0.5,150,10))" --batch-size 50 --width 0.031373 --lr 0.001 --normalize-layer True --clip-norm False --lr-multistep $1

|

| 21 |

+

# Adv_{3}InSampLPA

|

| 22 |

+

python . -D CIFAR10 -n ResNetTiny_FewCombo -d "LinMix(a=IFGSM(w=Lin(0,0.031373,20,20), k=3), b=InSamp(Lin(0,1,150,10), w=Lin(0,0.031373,150,10)), bw=Lin(0,0.5,150,10))" --batch-size 50 --width 0.031373 --lr 0.001 --normalize-layer True --clip-norm False --lr-multistep $1

|

| 23 |

+

|

| 24 |

+

# Adv_{1}InSampLPA

|

| 25 |

+

python . -D CIFAR10 -n ResNetTiny_ManyFixed -d "LinMix(a=IFGSM(w=Lin(0,0.031373,20,20), k=1), b=InSamp(Lin(0,1,150,10), w=Lin(0,0.031373,150,10)), bw=Lin(0,0.5,150,10))" --batch-size 50 --width 0.031373 --lr 0.001 --normalize-layer True --clip-norm False --lr-multistep $1

|

| 26 |

+

|

| 27 |

+

# InSamp_{18}

|

| 28 |

+

python . -D CIFAR10 -n SkipNet18 -d "LinMix(a=Point(), b=InSamp(Lin(0,1,200,40)), bw=Lin(0,0.5,200,40))" -t "MI_FGSM(k=20,r=2)" --batch-size 100 --save-freq 2 --width 0.031373 --lr 0.1 --normalize-layer True --clip-norm False --lr-multistep --sgd --custom-schedule "[10,20,250,300,350]" $1

|

| 29 |

+

# Adv_{5}InSamp_{18}

|

| 30 |

+

python . -D CIFAR10 -n SkipNet18 -d "LinMix(a=IFGSM(w=Lin(0,0.031373,20,20)), b=InSamp(Lin(0,1,200,40)), bw=Lin(0,0.5,200,40))" -t "MI_FGSM(k=20,r=2)" --batch-size 100 --width 0.031373 --lr 0.1 --normalize-layer True --clip-norm False --lr-multistep --sgd --custom-schedule "[10,20,250,300,350]" $1

|

| 31 |

+

# InSamp_{18} Combo

|

| 32 |

+

python . -D CIFAR10 -n SkipNet18_Combo -d "LinMix(b=InSamp(Lin(0,1,200,40)), bw=Lin(0,0.5, 200, 40))" --batch-size 100 --width 0.031373 --lr 0.1 --normalize-layer True --clip-norm False --sgd --lr-multistep --custom-schedule "[10,20,250,300,350]" $1

|

| 33 |

+

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@


| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2018 SRI Lab, ETH Zurich

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

README.md

ADDED

|

@@ -0,0 +1,249 @@


| 1 |

+

DiffAI v3 <a href="https://www.sri.inf.ethz.ch/"><img width="100" alt="portfolio_view" align="right" src="http://safeai.ethz.ch/img/sri-logo.svg"></a>

|

| 2 |

+

=============================================================================================================

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

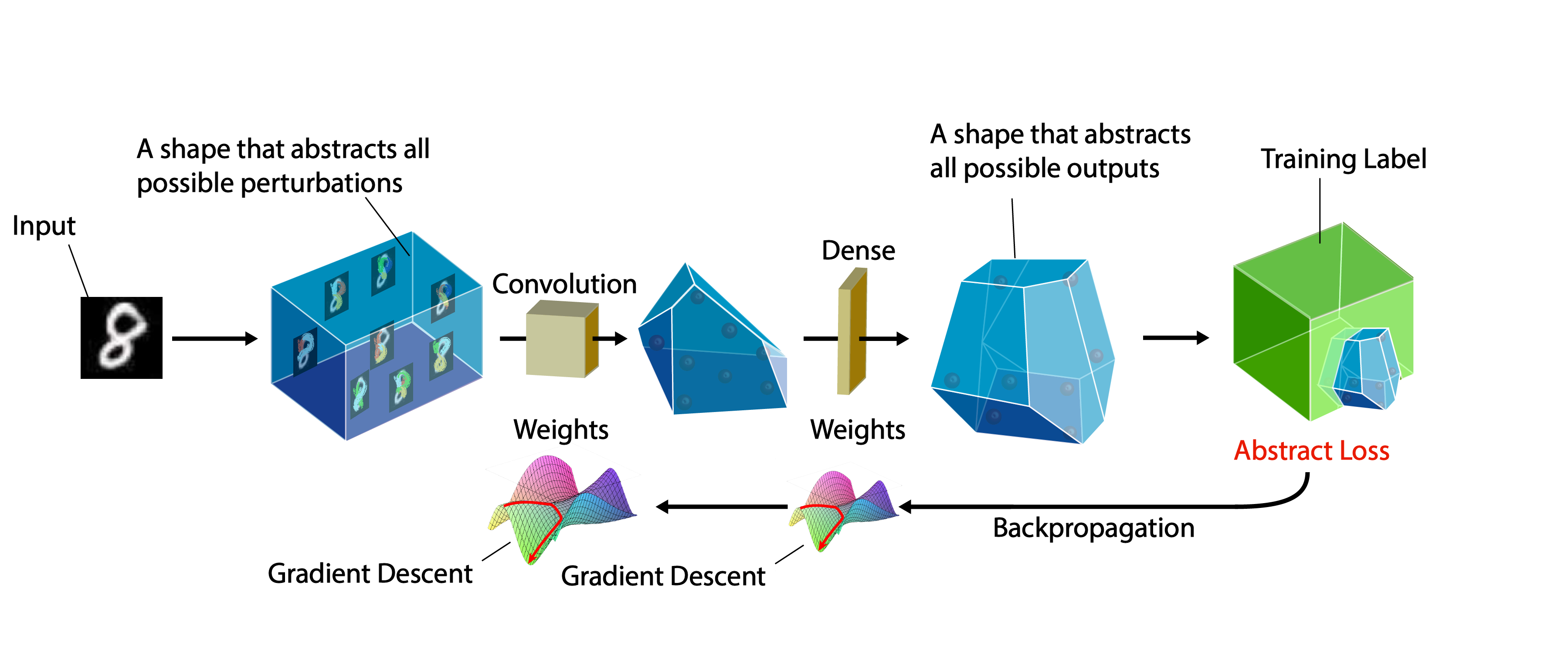

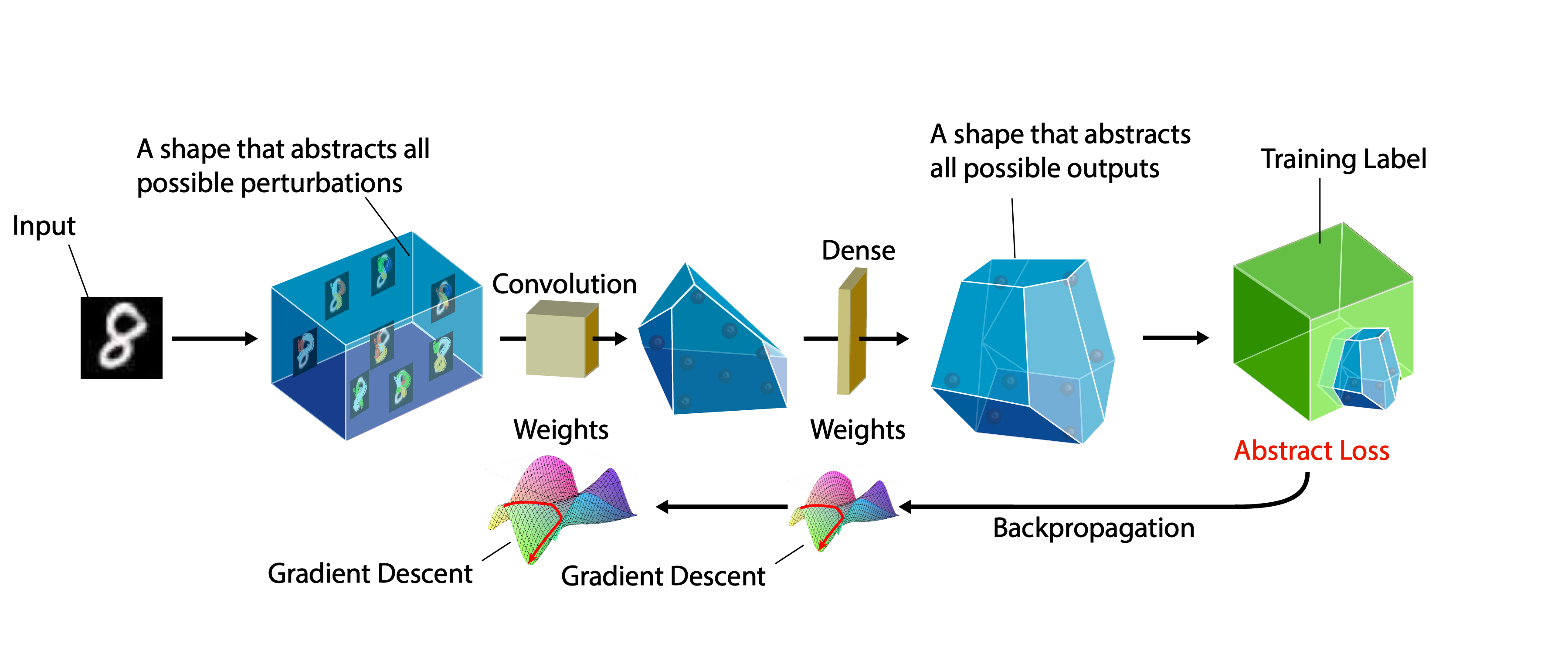

DiffAI is a system for training neural networks to be provably robust and for proving that they are robust.

|

| 9 |

+

|

| 10 |

+

Background

|

| 11 |

+

----------

|

| 12 |

+

|

| 13 |

+

By now, it is well known that otherwise working networks can be tricked by clever attacks. For example, [Goodfellow et al.](https://arxiv.org/abs/1412.6572) demonstrated a network with high classification accuracy which classified one image of a panda correctly, and a seemingly identical attack picture

|

| 14 |

+

incorrectly. Many defenses against this type of attack have been produced, but very few produce networks for which *provably* verifying the safety of a prediction is feasible.

|

| 15 |

+

|

| 16 |

+

Abstract Interpretation is a technique for verifying properties of programs by soundly overapproximating their behavior. When applied to neural networks, an infinite set (a ball) of possible inputs is passed to an approximating "abstract" network

|

| 17 |

+

to produce a superset of the possible outputs from the actual network. Provided an appropriate representation for these sets, demonstrating that the network classifies everything in the ball correctly becomes a simple task. The method used to represent these sets is the abstract domain, and the specific approximations are the abstract transformers.
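As a rough illustration of the idea above, here is a minimal sketch of the simplest abstract domain (intervals, i.e. a box) pushed through a tiny two-layer network in plain Python. The function names `affine_box`, `relu_box`, and `provably_classified`, and the toy weights, are assumptions made for this example and are not taken from DiffAI's code.

```python
def affine_box(lo, hi, weights, bias):
    """Sound bounds for y = W x + b when each x[j] lies in [lo[j], hi[j]]."""
    out_lo, out_hi = [], []
    for row, b in zip(weights, bias):
        l = u = b
        for w, a, c in zip(row, lo, hi):
            # A non-negative weight maps the lower input bound to the lower
            # output bound; a negative weight swaps the two roles.
            l += w * a if w >= 0 else w * c
            u += w * c if w >= 0 else w * a
        out_lo.append(l)
        out_hi.append(u)
    return out_lo, out_hi


def relu_box(lo, hi):
    """ReLU is monotone, so it can be applied to both bounds directly."""
    return [max(0.0, v) for v in lo], [max(0.0, v) for v in hi]


def provably_classified(lo, hi, label):
    """True if the target logit's lower bound beats every other upper bound."""
    return all(lo[label] > hi[j] for j in range(len(lo)) if j != label)


# Toy network and input ball (hypothetical values, for illustration only).
W1, b1 = [[1.0, -1.0], [0.5, 2.0]], [0.0, -1.0]
W2, b2 = [[1.0, 0.0], [-1.0, 0.5]], [0.5, 0.0]
x, eps = [1.0, 0.5], 0.1

lo, hi = [v - eps for v in x], [v + eps for v in x]
lo, hi = relu_box(*affine_box(lo, hi, W1, b1))
lo, hi = affine_box(lo, hi, W2, b2)
print(provably_classified(lo, hi, 0))
```

Because the output box is a superset of the true outputs, a positive answer is a proof of robustness for the whole input ball, while a negative answer only means that this particular domain was too coarse to decide.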

|

| 18 |

+

|

| 19 |

+

In DiffAI, the entire abstract interpretation process is programmed using PyTorch so that it is differentiable and can be run on the GPU,

|

| 20 |

+

and a loss function is crafted so that low values correspond to inputs which can be proved safe (robust).
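One way such a loss could look is sketched below: a cross-entropy over worst-case logits, in the style of the loss suggested by Gowal et al. This is an assumption made for illustration and not necessarily the exact loss DiffAI uses; the name `worst_case_cross_entropy` is hypothetical.

```python
import math


def worst_case_cross_entropy(lo, hi, label):
    """Cross-entropy of the most adversarial logits allowed by the output box."""
    # Worst case: the true class sits at its lower bound and every other
    # class sits at its upper bound.
    z = [lo[j] if j == label else hi[j] for j in range(len(lo))]
    m = max(z)  # subtract the maximum for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in z))
    return log_sum_exp - z[label]


# If the loss is below log(2), every other upper bound is below the true
# class's lower bound, so the whole box is classified correctly.
loss = worst_case_cross_entropy([0.8, -0.575], [1.2, 0.075], label=0)
print(loss < math.log(2))
```

Since the loss is built only from differentiable operations on the bounds, it can be minimized by gradient descent like any ordinary training loss.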

|

| 21 |

+

|

| 22 |

+

What's New In v3?

|

| 23 |

+

----------------

|

| 24 |

+

|

| 25 |

+

* Abstract Networks: one can now customize the handling of the domains on a per-layer basis.

|

| 26 |

+

* Training DSL: A DSL has been exposed to allow for custom training regimens with complex parameter scheduling.

|

| 27 |

+

* Cross Loss: The box goal now uses a cross-entropy-style loss by default, as suggested by [Gowal et al. 2019](https://arxiv.org/abs/1810.12715).

|

| 28 |

+

* Conversion to ONNX: We can now export to the ONNX format, and can export the abstract network itself to ONNX (so that one can run abstract analysis or training using TensorFlow, for example).

|

| 29 |

+

|

| 30 |

+

Requirements

|

| 31 |

+

------------

|

| 32 |

+

|

| 33 |

+

Python 3.6.7, virtualenv, and torch 0.4.1.

|

| 34 |

+

|

| 35 |

+

Recommended Setup

|

| 36 |

+

-----------------

|

| 37 |

+

|

| 38 |

+

```

|

| 39 |

+

$ git clone https://github.com/eth-sri/DiffAI.git

|

| 40 |

+

$ cd DiffAI

|

| 41 |

+

$ virtualenv pytorch --python python3.6

|

| 42 |

+

$ source pytorch/bin/activate

|

| 43 |

+

(pytorch) $ pip install -r requirements.txt

|

| 44 |

+

```

|

| 45 |

+

|

| 46 |

+

Note: you need to activate your virtualenv every time you start a new shell.

|

| 47 |

+

|

| 48 |

+

Getting Started

|

| 49 |

+

---------------

|

| 50 |

+

|

| 51 |

+

DiffAI can be run as a standalone program. To see a list of arguments, type

|

| 52 |

+

|

| 53 |

+

```

|

| 54 |

+

(pytorch) $ python . --help

|

| 55 |

+

```

|

| 56 |

+

|

| 57 |

+

At a minimum, DiffAI expects one domain to train with, one domain to test with, and a network to test. For example, to train with both the Box domain and baseline training (Point), and to test against the PGD attack and the ZSwitch domain with a simple feed-forward network on the MNIST dataset (the default if none is provided), you would type:

|

| 58 |

+

|

| 59 |

+

```

|

| 60 |

+

(pytorch) $ python . -d "Point()" -d "Box()" -t "PGD()" -t "ZSwitch()" -n ffnn

|

| 61 |

+

```

|

| 62 |

+

|

| 63 |

+

Unless otherwise specified by "--out", the output is logged to the folder "out/".

|

| 64 |

+

In the folder corresponding to the experiment that has been run, one can find the saved configuration options in

|

| 65 |

+

"config.txt", and a pickled net which is saved every 10 epochs (provided that testing is set to happen every 10th epoch).

|

| 66 |

+

|

| 67 |

+

To load a saved model, use "--test" as per the example:

|

| 68 |

+

|

| 69 |

+

```

|

| 70 |

+

(pytorch) $ alias test-diffai="python . -d Point --epochs 1 --dont-write --test-freq 1"

|

| 71 |

+

(pytorch) $ test-diffai -t Box --update-test-net-name convBig --test PATHTOSAVED_CONVBIG.pynet --width 0.1 --test-size 500 --test-batch-size 500

|

| 72 |

+

```

|

| 73 |

+

|

| 74 |

+

Note that "--update-test-net-name" will create a new model based on convBig and try to use the weights in the pickled PATHTOSAVED_CONVBIG.pynet to initialize that model's weights. This is not always necessary, but is useful when the code for a model changes (in components) but does not affect the number or usage of weights, or when loading a model pickled by a CUDA process into a CPU process.

|

| 75 |

+

|

| 76 |

+

The default specification type is the L_infinity Ball specified explicitly by "--spec boxSpec",

|

| 77 |

+

which uses an epsilon specified by "--width".
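For intuition, an L_infinity ball of radius epsilon around an image is just a per-pixel interval. The sketch below uses a hypothetical helper `linf_box` (not DiffAI's API) and does not clamp to a valid pixel range. It also shows that the width 0.031373 used in the experiment commands equals 8/255 rounded to six decimals, which suggests (as an inference, not a statement from the source) a perturbation of 8 intensity levels on a 0-255 scale.

```python
def linf_box(image, width):
    """Per-pixel lower and upper bounds of the L_infinity ball around image."""
    lower = [p - width for p in image]
    upper = [p + width for p in image]
    return lower, upper


eps = 8 / 255
print(round(eps, 6))  # 0.031373

lower, upper = linf_box([0.25, 0.5, 0.75], eps)
print(lower, upper)
```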

|

| 78 |

+

|


| 81 |

+

|

| 82 |

+

Abstract Networks

|

| 83 |

+

-----------------

|

| 84 |

+

|

| 85 |

+

|

| 86 |

+

|

| 87 |

+

A crucial point of DiffAI v3 is that how a network is trained and abstracted should be part of the network description itself. In this release, we provide layers that allow one to alter how the abstraction works,

|

| 88 |

+

in addition to providing a script for converting an abstract network to ONNX so that the abstract analysis might be run in TensorFlow.

|

| 89 |

+

Below is a list of the abstract layers that we have included.

|

| 90 |

+

|

| 91 |

+

* CorrMaxPool3D

|

| 92 |

+

* CorrMaxPool2D

|

| 93 |

+

* CorrFix

|

| 94 |

+

* CorrMaxK

|

| 95 |

+

* CorrRand

|

| 96 |

+

* DecorrRand

|

| 97 |

+

* DecorrMin

|

| 98 |

+

* DeepLoss

|

| 99 |

+

* ToZono

|

| 100 |

+

* ToHZono

|

| 101 |

+

* Concretize

|

| 102 |

+

* CorrelateAll

|

| 103 |

+

|

| 104 |

+

Training Domain DSL

|

| 105 |

+

-------------------

|

| 106 |

+

|

| 107 |

+

In DiffAI v3, a DSL has been provided to specify arbitrary training domains. In particular, it is now possible to train on combinations of attacks and abstract domains on specifications defined by attacks. Specifying training domains is possible in the command line using ```-d "DOMAIN_INITIALIZATION"```. The possible combinations are the classes listed in goals.py. The same syntax is also supported for testing domains, to allow for testing robustness with different epsilon-sized attacks and specifications.

|

| 108 |

+

|

| 109 |

+

Listed below are a few examples:

|

| 110 |

+

|

| 111 |

+

* ```-t "IFGSM(k=4, w=0.1)" -t "ZNIPS(w=0.3)" ``` first tests with the PGD attack using epsilon=w=0.1, k=4 iterations, and a step size of w/k. It then tests with the zonotope domain using the transformer specified in our [NIPS 2018 paper](https://www.sri.inf.ethz.ch/publications/singh2018effective) with epsilon=w=0.3.

|

| 112 |

+

|

| 113 |

+

* ```-t "PGD(r=3,k=16,restart=2, w=0.1)"``` tests on points found using PGD with a step size of r*w/k and two restarts, and an attack-generated specification.

|

| 114 |

+

|

| 115 |

+

* ```-d Point()``` is standard non-defensive training.

|

| 116 |

+

|

| 117 |

+

* ```-d "LinMix(a=IFGSM(), b=Box(), aw=1, bw=0.1)"``` trains on points produced by PGD with the default parameters listed in goals.py, and points produced using the box domain. The loss is combined linearly using the weights aw and bw and scaled by 1/(aw + bw). The epsilon used for both is the ambient epsilon specified with "--width".

|

| 118 |

+

|

| 119 |

+

* ```-d "DList((IFGSM(w=0.1),1), (Box(w=0.01),0.1), (Box(w=0.1),0.01))"``` is a generalization of the Mix domain allowing for training with arbitrarily many domains at once weighted by the given values (the resulting loss is scaled by the inverse of the sum of weights).

|

| 120 |

+

|

| 121 |

+

* ```-d "AdvDom(a=IFGSM(), b=Box())"``` trains using the Box domain, but constructs specifications as L∞ balls containing the PGD attack image and the original image "o".

|

| 122 |

+

|

| 123 |

+

* ```-d "BiAdv(a=IFGSM(), b=Box())"``` is similar, but creates specifications between the PGD attack image "a" and "o - (a - o)".
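The two attack-defined specifications in the list above can be pictured with a small sketch. It rests on assumptions: `adv_dom_box` takes the smallest axis-aligned box containing both images (one possible reading of "balls containing the attack image and the original image"), and both function names are hypothetical rather than DiffAI's API.

```python
def adv_dom_box(original, attack):
    """Smallest axis-aligned box containing the original and the attack image."""
    lower = [min(o, a) for o, a in zip(original, attack)]
    upper = [max(o, a) for o, a in zip(original, attack)]
    return lower, upper


def bi_adv_box(original, attack):
    """Box between the attack image a and its mirror image o - (a - o)."""
    # The mirror point is 2*o - a, so the box is centered on the original
    # with a per-pixel radius of |a - o|.
    radius = [abs(a - o) for o, a in zip(original, attack)]
    lower = [o - r for o, r in zip(original, radius)]
    upper = [o + r for o, r in zip(original, radius)]
    return lower, upper


o, a = [0.5, 0.2], [0.6, 0.1]
print(adv_dom_box(o, a))
print(bi_adv_box(o, a))
```

Under this reading, the BiAdv box is symmetric around the original image and always contains the AdvDom box.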

|

| 124 |

+

|

| 125 |

+

One domain we have found particularly useful for training is ```Mix(a=PGD(r=3,k=16,restart=2, w=0.1), b=BiAdv(a=IFGSM(k=5, w=0.05)), bw=0.1)```.

|

| 126 |

+

|

| 127 |

+

While the above domains are all deterministic (up to GPU error and shuffling orders), we have also implemented nondeterministic training domains:

|

| 128 |

+

|

| 129 |

+

* ```-d "Coin(a=IFGSM(), b=Box(), aw=1, bw=0.1)"``` is like Mix, but chooses which domain to train a batch with by the probabilities determined by aw / (aw + bw) and bw / (aw + bw).

|

| 130 |

+

|

| 131 |

+

* ```-d "DProb((IFGSM(w=0.1),1), (Box(w=0.01),0.1), (Box(w=0.1),0.01))"``` is to Coin what DList is to Mix.

|

| 132 |

+

|

| 133 |

+

* ```-d AdvDom(a=IFGSM(), b=DList((PointB(),1), (PointA(), 1), (Box(), 0.2)))``` can be used to share attack images between multiple training types. Here an attack image "m" is found using PGD, then both the original image "o" and the attack image "m" are passed to DList, which trains in three different ways: PointA trains with "o", PointB trains with "m", and Box trains on the box produced between them. This can also be used with Mix.

|

| 134 |

+

|

| 135 |

+

* ```-d Normal(w=0.3)``` trains using images sampled from a normal distribution around the provided image using standard deviation w.

|

| 136 |

+

|

| 137 |

+

* ```-d NormalAdv(a=IFGSM(), w=0.3)``` trains using PGD (but this could be an abstract domain) where perturbations are constrained to a box determined by a normal distribution around the original image with standard deviation w.

|

| 138 |

+

|

| 139 |

+

* ```-d InSamp(0.2, w=0.1)``` uses inclusion sampling as defined in the arXiv paper.
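The weighting rules described for LinMix, DList, and Coin can be summarized in a few lines of plain Python. This is only a sketch of the arithmetic stated in the text: the function names are hypothetical, the losses are plain floats standing in for per-domain losses, and the default weights simply mirror the examples.

```python
import random


def mix_loss(loss_a, loss_b, aw=1.0, bw=0.1):
    """Linear combination of two domain losses, scaled by 1 / (aw + bw)."""
    return (aw * loss_a + bw * loss_b) / (aw + bw)


def dlist_loss(weighted_losses):
    """Generalization to many (loss, weight) pairs, scaled by 1 / sum(weights)."""
    total = sum(w for _, w in weighted_losses)
    return sum(loss * w for loss, w in weighted_losses) / total


def coin_loss(loss_a, loss_b, aw=1.0, bw=0.1, rng=random):
    """Pick one domain per batch: a with probability aw / (aw + bw), else b."""
    return loss_a if rng.random() < aw / (aw + bw) else loss_b


print(mix_loss(2.0, 4.0, aw=1.0, bw=1.0))        # 3.0
print(dlist_loss([(2.0, 1.0), (4.0, 1.0)]))      # 3.0
print(coin_loss(2.0, 4.0, rng=random.Random(0)))
```

The deterministic variants always pay for every domain on every batch, while the coin-style variants evaluate only one domain per batch and match the mixture in expectation.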

|

| 140 |

+

|

| 141 |

+

There are more domains implemented than listed here, and of course more interesting combinations are possible. Please look carefully at goals.py for default values and further options.

|

| 142 |

+

|

| 143 |

+

|

| 144 |

+

Parameter Scheduling DSL

|

| 145 |

+

------------------------

|

| 146 |

+

|

| 147 |

+

In place of many constants, you can use the following scheduling devices.

|

| 148 |

+

|

| 149 |

+

* ```Lin(s,e,t,i)``` Linearly interpolates between s and e over t epochs, using s for the first i epochs.

|

| 150 |

+

|

| 151 |

+

* ```Until(t,a,b)``` Uses a for the first t epochs, then switches to using b (telling b the current epoch starting from 0 at epoch t).
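A minimal sketch of these two schedules is shown below. It assumes that the linear ramp in `Lin` starts after the i hold epochs and then lasts t epochs; the text does not pin this down, and the `value` method and `value_of` helper are hypothetical names rather than DiffAI's API.

```python
def value_of(schedule, epoch):
    """Evaluate a schedule object, or pass a plain constant through unchanged."""
    return schedule.value(epoch) if hasattr(schedule, "value") else schedule


class Lin:
    """Hold s for the first i epochs, then move linearly to e over t epochs."""

    def __init__(self, s, e, t, i=0):
        self.s, self.e, self.t, self.i = s, e, t, i

    def value(self, epoch):
        progress = min(max(epoch - self.i, 0), self.t) / self.t
        return self.s + (self.e - self.s) * progress


class Until:
    """Use a for the first t epochs, then b with its clock restarted at 0."""

    def __init__(self, t, a, b):
        self.t, self.a, self.b = t, a, b

    def value(self, epoch):
        if epoch < self.t:
            return value_of(self.a, epoch)
        return value_of(self.b, epoch - self.t)


bw = Lin(0, 0.5, 150, 10)
print([bw.value(e) for e in (0, 10, 85, 160, 400)])
print(Until(20, 0.1, Lin(0, 1, 10)).value(25))
```

Schedules like these are what allow a weight such as `bw=Lin(0,0.5,150,10)` to start at zero and grow gradually, so that training begins with the easier objective and the provability term is phased in.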

|

| 152 |

+

|

| 153 |

+

Suggested Training

|

| 154 |

+

------------------

|

| 155 |

+

|

| 156 |

+

```LinMix(a=IFGSM(k=2), b=InSamp(Lin(0,1,150,10)), bw = Lin(0,0.5,150,10))``` is a training goal that appears to work particularly well for CIFAR10 networks.

|

| 157 |

+

|

| 158 |

+

Contents

|

| 159 |

+

--------

|

| 160 |

+

|

| 161 |

+

* components.py: A high level neural network library for composable layers and operations

|

| 162 |

+

* goals.py: The DSL for specifying training losses and domains, and attacks which can be used as a drop-in replacement for PyTorch tensors in any model built with components from components.py.

|

| 163 |

+

* scheduling.py: The DSL for specifying parameter scheduling.

|

| 164 |

+

* models.py: A repository of models to train with which are used in the paper.

|

| 165 |

+

* convert.py: A utility for converting a model with a training or testing domain (goal) into an ONNX network. This is useful for exporting DiffAI abstractions to TensorFlow.

|

| 166 |

+

* \_\_main\_\_.py: The entry point to run the experiments.

|

| 167 |

+

* helpers.py: Assorted helper functions. Does some monkeypatching, so you might want to be careful importing our library into your project.

|

| 168 |

+

* AllExperimentsSerial.sh: A script which runs the training experiments from Tables 4 and 5 and Figure 5 of the 2019 arXiv paper.

|

| 169 |

+

|

| 170 |

+

Notes

|

| 171 |

+

-----

|

| 172 |

+

|

| 173 |

+

Not all of the datasets listed in the help message are supported. Supported datasets are:

|

| 174 |

+

|

| 175 |

+

* CIFAR10

|

| 176 |

+

* CIFAR100

|

| 177 |

+

* MNIST

|

| 178 |

+

* SVHN

|

| 179 |

+

* FashionMNIST

|

| 180 |

+

|

| 181 |

+

Unsupported datasets will not necessarily throw errors.

|

| 182 |

+

|

| 183 |

+

Reproducing Results

|

| 184 |

+

-------------------

|

| 185 |

+

|

| 186 |

+

[Download Defended Networks](https://www.dropbox.com/sh/66obogmvih79e3k/AACe-tkKGvIK0Z--2tk2alZaa?dl=0)

|

| 187 |

+

|

| 188 |

+

All training runs from the paper can be reproduced with the following command, in the same order as Table 6 in the appendix.

|

| 189 |

+

|

| 190 |

+

```

|

| 191 |

+

./AllExperimentsSerial.sh "-t MI_FGSM(k=20,r=2) -t HBox --test-size 10000 --test-batch-size 200 --test-freq 400 --save-freq 1 --epochs 420 --out all_experiments --write-first True --test-first False"

|

| 192 |

+

```

|

| 193 |

+

|

| 194 |

+

The training schemes can be written as follows (the names differ slightly from the presentation in the paper):

|

| 195 |

+

|

| 196 |

+

* Baseline: LinMix(a=Point(), b=Box(w=Lin(0,0.031373,150,10)), bw=Lin(0,0.5,150,10))

|

| 197 |

+

* InSamp: LinMix(a=Point(), b=InSamp(Lin(0,1,150,10)), bw=Lin(0,0.5, 150,10))

|

| 198 |

+

* InSampLPA: LinMix(a=Point(), b=InSamp(Lin(0,1,150,20), w=Lin(0,0.031373, 150, 20)), bw=Lin(0,0.5, 150, 20))

|

| 199 |

+

* Adv_{1}ISLPA: LinMix(a=IFGSM(w=Lin(0,0.031373,20,20), k=1), b=InSamp(Lin(0,1,150,10), w=Lin(0,0.031373,150,10)), bw=Lin(0,0.5,150,10))

|

| 200 |

+

* Adv_{3}ISLPA: LinMix(a=IFGSM(w=Lin(0,0.031373,20,20), k=3), b=InSamp(Lin(0,1,150,10), w=Lin(0,0.031373,150,10)), bw=Lin(0,0.5,150,10))

|

| 201 |

+

* Baseline_{18}: LinMix(a=Point(), b=InSamp(Lin(0,1,200,40)), bw=Lin(0,0.5,200,40))

|

| 202 |

+

* InSamp_{18}: LinMix(a=IFGSM(w=Lin(0,0.031373,20,20)), b=InSamp(Lin(0,1,200,40)), bw=Lin(0,0.5,200,40))

|

| 203 |

+

* Adv_{5}IS_{18}: LinMix(b=InSamp(Lin(0,1,200,40)), bw=Lin(0,0.5, 200, 40))

|

| 204 |

+

* BiAdv_L: LinMix(a=IFGSM(k=2), b=BiAdv(a=IFGSM(k=3, w=Lin(0,0.031373, 150, 30)), b=Box()), bw=Lin(0,0.6, 200, 30))

|

| 205 |

+

|

| 206 |

+

To test a saved network as in the paper, use the following command:

|

| 207 |

+

|

| 208 |

+

```

|

| 209 |

+

python . -D CIFAR10 -n ResNetLarge_LargeCombo -d Point --width 0.031373 --normalize-layer True --clip-norm False -t 'MI_FGSM(k=20,r=2)' -t HBox --test-size 10000 --test-batch-size 200 --epochs 1 --test NAMEOFSAVEDNET.pynet

|

| 210 |

+

```

|

| 211 |

+

|

| 212 |

+

About

|

| 213 |

+

-----

|

| 214 |

+

|

| 215 |

+

* DiffAI is now on version 3.0.

|

| 216 |

+

* This repository contains the code used for the experiments in the [2019 arXiv paper](https://arxiv.org/abs/1903.12519).

|

| 217 |

+

* To reproduce the experiments from the 2018 ICML paper [Differentiable Abstract Interpretation for Provably Robust Neural Networks](https://files.sri.inf.ethz.ch/website/papers/icml18-diffai.pdf), one must download the [source code for Version 1.0](https://github.com/eth-sri/diffai/releases/tag/v1.0).

|

| 218 |

+

* Further information and related projects can be found at [the SafeAI Project](http://safeai.ethz.ch/)

|

| 219 |

+

* [High level slides](https://files.sri.inf.ethz.ch/website/slides/mirman2018differentiable.pdf)

|

| 220 |

+

|

| 221 |

+

Citing This Framework

|

| 222 |

+

---------------------

|

| 223 |

+

|

| 224 |

+

```

|

| 225 |

+

@inproceedings{

|

| 226 |

+

title={Differentiable Abstract Interpretation for Provably Robust Neural Networks},

|

| 227 |

+

author={Mirman, Matthew and Gehr, Timon and Vechev, Martin},

|

| 228 |

+

booktitle={International Conference on Machine Learning (ICML)},

|

| 229 |

+

year={2018},

|

| 230 |

+

url={https://www.icml.cc/Conferences/2018/Schedule?showEvent=2477},

|

| 231 |

+

}

|

| 232 |

+

```

|

| 233 |

+

|

| 234 |

+

Contributors

|

| 235 |

+

------------

|

| 236 |

+

|

| 237 |

+

* [Matthew Mirman](https://www.mirman.com) - [email protected]

|

| 238 |

+

* [Gagandeep Singh](https://www.sri.inf.ethz.ch/people/gagandeep) - [email protected]

|

| 239 |

+

* [Timon Gehr](https://www.sri.inf.ethz.ch/tg.php) - [email protected]

|

| 240 |

+

* Marc Fischer - [email protected]

|

| 241 |

+

* [Martin Vechev](https://www.sri.inf.ethz.ch/vechev.php) - [email protected]

|

| 242 |

+

|

| 243 |

+

|

| 244 |

+

|

| 245 |

+

License and Copyright

|

| 246 |

+

---------------------

|

| 247 |

+

|

| 248 |

+

* Copyright (c) 2018 [Secure, Reliable, and Intelligent Systems Lab (SRI), ETH Zurich](https://www.sri.inf.ethz.ch/)

|

| 249 |

+

* Licensed under the [MIT License](https://opensource.org/licenses/MIT)

|

__init__.py

ADDED

|

@@ -0,0 +1,8 @@


| 1 |

+

import sys

|

| 2 |

+

import os

|

| 3 |

+

|

| 4 |

+

SCRIPT_DIR = os.path.dirname(os.path.realpath(os.path.join(os.getcwd(), os.path.expanduser(__file__))))

|

| 5 |

+

print(SCRIPT_DIR)

|

| 6 |

+

sys.path.append(SCRIPT_DIR)

|

| 7 |

+

|

| 8 |

+

|

__main__.py

ADDED

|

@@ -0,0 +1,561 @@


import future
import builtins
import past
import six
import copy

from timeit import default_timer as timer
from datetime import datetime
import argparse
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torchvision import datasets
from torch.utils.data import Dataset
import decimal
import torch.onnx

import inspect
# NOTE: inspect.getargspec was removed in Python 3.11; on newer interpreters
# inspect.getfullargspec is the replacement.
from inspect import getargspec
import os
import helpers as h
from helpers import Timer
import random

from components import *
import models

import goals
import scheduling

from goals import *
from scheduling import *

import math

import warnings
from torch.serialization import SourceChangeWarning

POINT_DOMAINS = [m for m in h.getMethods(goals) if issubclass(m, goals.Point)]
SYMETRIC_DOMAINS = [goals.Box] + POINT_DOMAINS

datasets.Imagenet12 = None

class Top(nn.Module):
    def __init__(self, args, net, ty = Point):
        super(Top, self).__init__()
        self.net = net
        self.ty = ty
        self.w = args.width
        self.global_num = 0
        self.getSpec = getattr(self, args.spec)
        self.sub_batch_size = args.sub_batch_size
        self.curve_width = args.curve_width
        self.regularize = args.regularize

        self.speedCount = 0
        self.speed = 0.0

    def addSpeed(self, s):
        # running mean of the per-example training time
        self.speed = (s + self.speed * self.speedCount) / (self.speedCount + 1)
        self.speedCount += 1

    def forward(self, x):
        return self.net(x)

    def clip_norm(self):
        self.net.clip_norm()

    def boxSpec(self, x, target, **kargs):
        return [(self.ty.box(x, w = self.w, model=self, target=target, untargeted=True, **kargs).to_dtype(), target)]

    def curveSpec(self, x, target, **kargs):
        if self.ty.__class__ in SYMETRIC_DOMAINS:
            return self.boxSpec(x,target, **kargs)

        batch_size = x.size()[0]

        newTargs = [ None for i in range(batch_size) ]
        newSpecs = [ None for i in range(batch_size) ]
        bestSpecs = [ None for i in range(batch_size) ]

        for i in range(batch_size):
            newTarg = target[i]
            newTargs[i] = newTarg
            newSpec = x[i]

            # find the closest other sample in the batch with the same target
            best_x = newSpec
            best_dist = float("inf")
            for j in range(batch_size):
                potTarg = target[j]
                potSpec = x[j]
                if (not newTarg.data.equal(potTarg.data)) or i == j:
                    continue
                curr_dist = (newSpec - potSpec).norm(1).item() # must experiment with the type of norm here
                if curr_dist <= best_dist:
                    best_x = potSpec
                    best_dist = curr_dist # remember the closest distance seen so far

            newSpecs[i] = newSpec
            bestSpecs[i] = best_x

        new_batch_size = self.sub_batch_size
        batchedTargs = h.chunks(newTargs, new_batch_size)
        batchedSpecs = h.chunks(newSpecs, new_batch_size)
        batchedBest = h.chunks(bestSpecs, new_batch_size)

        def batch(t,s,b):
            t = h.lten(t)
            s = torch.stack(s)
            b = torch.stack(b)

            if h.use_cuda:
                # Tensor.cuda() returns a copy, so the names must be rebound
                t = t.cuda()
                s = s.cuda()
                b = b.cuda()

            m = self.ty.line(s, b, w = self.curve_width, **kargs)
            return (m , t)

        return [batch(t,s,b) for t,s,b in zip(batchedTargs, batchedSpecs, batchedBest)]

    def regLoss(self):
        if self.regularize is None or self.regularize <= 0.0:
            return 0
        r = self.net.regularize(2)
        return self.regularize * r

    def aiLoss(self, dom, target, **args):
        r = self(dom)
        return self.regLoss() + r.loss(target = target, **args)

    def printNet(self, f):
        self.net.printNet(f)

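`curveSpec` appears to pair each sample with another sample of the same class, compared by L1 distance, before building a line between the two. The sketch below shows that kind of nearest-same-class search on plain Python lists instead of tensors. `nearest_same_class` and the toy data are hypothetical and are not part of this repository.

```python
def nearest_same_class(points, labels):
    """For each point, return the index of the closest *other* point that has
    the same label (L1 distance), or the point's own index if none exists."""
    result = []
    for i, (p, lab) in enumerate(zip(points, labels)):
        best_j, best_dist = i, float("inf")
        for j, (q, other) in enumerate(zip(points, labels)):
            if i == j or other != lab:
                continue
            dist = sum(abs(a - b) for a, b in zip(p, q))  # L1 distance
            if dist < best_dist:
                # keep both the index and the distance of the best match
                best_j, best_dist = j, dist
        result.append(best_j)
    return result


# toy data: three points of class 0 and a single point of class 1
points = [[0.0, 0.0], [1.0, 0.0], [0.2, 0.1], [5.0, 5.0]]
labels = [0, 0, 0, 1]
pairs = nearest_same_class(points, labels)
```

The search is quadratic in the batch size, which is cheap for small batches and would likely need vectorizing for large ones.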

# Training settings
parser = argparse.ArgumentParser(description='PyTorch DiffAI Example', formatter_class=argparse.ArgumentDefaultsHelpFormatter)
parser.add_argument('--batch-size', type=int, default=10, metavar='N', help='input batch size for training')
parser.add_argument('--test-first', type=h.str2bool, nargs='?', const=True, default=True, help='test first')
parser.add_argument('--test-freq', type=int, default=1, metavar='N', help='number of epochs to skip before testing')
parser.add_argument('--test-batch-size', type=int, default=10, metavar='N', help='input batch size for testing')
parser.add_argument('--sub-batch-size', type=int, default=3, metavar='N', help='input batch size for curve specs')

parser.add_argument('--custom-schedule', type=str, default="", metavar='net', help='Learning rate scheduling for lr-multistep. Defaults to [200,250,300] for CIFAR10 and [15,25] for everything else.')

parser.add_argument('--test', type=str, default=None, metavar='net', help='Saved net to use, in addition to any other nets you specify with -n')
parser.add_argument('--update-test-net', type=h.str2bool, nargs='?', const=True, default=False, help="should update test net")

parser.add_argument('--sgd',type=h.str2bool, nargs='?', const=True, default=False, help="use sgd instead of adam")
parser.add_argument('--onyx', type=h.str2bool, nargs='?', const=True, default=False, help="should output ONNX")
parser.add_argument('--save-dot-net', type=h.str2bool, nargs='?', const=True, default=False, help="should output in .net")
parser.add_argument('--update-test-net-name', type=str, choices = h.getMethodNames(models), default=None, help="update test net name")

parser.add_argument('--normalize-layer', type=h.str2bool, nargs='?', const=True, default=True, help="should include a training set specific normalization layer")
parser.add_argument('--clip-norm', type=h.str2bool, nargs='?', const=True, default=False, help="should clip the normal and use normal decomposition for weights")

parser.add_argument('--epochs', type=int, default=1000, metavar='N', help='number of epochs to train')
parser.add_argument('--log-freq', type=int, default=10, metavar='N', help='The frequency with which log statistics are printed')
parser.add_argument('--save-freq', type=int, default=1, metavar='N', help='The frequency with which nets and images are saved, in terms of number of test passes')
parser.add_argument('--number-save-images', type=int, default=0, metavar='N', help='The number of images to save. Should be smaller than test-size.')

parser.add_argument('--lr', type=float, default=0.001, metavar='LR', help='learning rate')
parser.add_argument('--lr-multistep', type=h.str2bool, nargs='?', const=True, default=False, help='learning rate multistep scheduling')

parser.add_argument('--threshold', type=float, default=-0.01, metavar='TH', help='threshold for lr schedule')
parser.add_argument('--patience', type=int, default=0, metavar='PT', help='patience for lr schedule')
parser.add_argument('--factor', type=float, default=0.5, metavar='R', help='reduction multiplier for lr schedule')
parser.add_argument('--max-norm', type=float, default=10000, metavar='MN', help='the maximum norm allowed in weight distribution')

parser.add_argument('--curve-width', type=float, default=None, metavar='CW', help='the width of the curve spec')

parser.add_argument('--width', type=float, default=0.01, metavar='CW', help='the width of either the line or box')
parser.add_argument('--spec', choices = [ x for x in dir(Top) if x[-4:] == "Spec" and len(getargspec(getattr(Top, x)).args) == 3]
                    , default="boxSpec", help='picks which spec builder function to use for training')

parser.add_argument('--seed', type=int, default=1, metavar='S', help='random seed')
parser.add_argument("--use-schedule", type=h.str2bool, nargs='?',
                    const=True, default=False,
                    help="activate learning rate schedule")

parser.add_argument('-d', '--domain', sub_choices = None, action = h.SubAct
                    , default=[], help='picks which abstract goals to use for training', required=True)

parser.add_argument('-t', '--test-domain', sub_choices = None, action = h.SubAct
                    , default=[], help='picks which abstract goals to use for testing. Examples include ' + str(goals), required=True)

parser.add_argument('-n', '--net', choices = h.getMethodNames(models), action = 'append'
                    , default=[], help='picks which net to use for training') # one net for now

parser.add_argument('-D', '--dataset', choices = [n for (n,k) in inspect.getmembers(datasets, inspect.isclass) if issubclass(k, Dataset)]
                    , default="MNIST", help='picks which dataset to use.')

parser.add_argument('-o', '--out', default="out", help='picks the output directory')
parser.add_argument('--dont-write', type=h.str2bool, nargs='?', const=True, default=False, help="don't write anywhere if this flag is on")
parser.add_argument('--write-first', type=h.str2bool, nargs='?', const=True, default=False, help='write the initial net. Useful for comparing algorithms, a pain for testing.')
parser.add_argument('--test-size', type=int, default=2000, help='number of examples to test with')

parser.add_argument('-r', '--regularize', type=float, default=None, help='use regularization')

args = parser.parse_args()

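Many of the options above combine `type=h.str2bool` with `nargs='?'` and `const=True`, so a flag can be given bare (`--sgd`) or with an explicit value (`--sgd False`). The sketch below shows how that argparse pattern behaves. The `str2bool` here is a hypothetical stand-in; the real `helpers.str2bool` is not shown in this file and may accept different spellings.

```python
import argparse


def str2bool(value):
    # Hypothetical stand-in for helpers.str2bool (an assumption, not the repo's code).
    if isinstance(value, bool):
        return value
    lowered = value.lower()
    if lowered in ("yes", "true", "t", "y", "1"):
        return True
    if lowered in ("no", "false", "f", "n", "0"):
        return False
    raise argparse.ArgumentTypeError("boolean value expected, got %r" % value)


demo = argparse.ArgumentParser()
demo.add_argument("--lr-multistep", type=str2bool, nargs="?", const=True, default=False)

omitted = demo.parse_args([]).lr_multistep                            # falls back to default
bare = demo.parse_args(["--lr-multistep"]).lr_multistep               # falls back to const
explicit = demo.parse_args(["--lr-multistep", "False"]).lr_multistep  # parsed by str2bool
```

With this pattern a shell script can forward a value such as `True` or `False` to the flag, while interactive users can still pass the flag on its own.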

largest_domain = max([len(h.catStrs(d)) for d in (args.domain)] )
largest_test_domain = max([len(h.catStrs(d)) for d in (args.test_domain)] )

args.log_interval = int(50000 / (args.batch_size * args.log_freq))

h.max_c_for_norm = args.max_norm

if h.use_cuda:
    torch.cuda.manual_seed(1 + args.seed)
else:
    torch.manual_seed(args.seed)

train_loader = h.loadDataset(args.dataset, args.batch_size, True, False)
test_loader = h.loadDataset(args.dataset, args.test_batch_size, False, False)

input_dims = train_loader.dataset[0][0].size()
num_classes = int(max(getattr(train_loader.dataset, 'train_labels' if args.dataset != "SVHN" else 'labels'))) + 1

print("input_dims: ", input_dims)
print("Num classes: ", num_classes)

vargs = vars(args)

total_batches_seen = 0


def train(epoch, models):
    global total_batches_seen

    for model in models:
        model.train()

    for batch_idx, (data, target) in enumerate(train_loader):
        total_batches_seen += 1
        time = float(total_batches_seen) / len(train_loader)
        if h.use_cuda:
            data, target = data.cuda(), target.cuda()

        for model in models:
            model.global_num += data.size()[0]

            timer = Timer("train a sample from " + model.name + " with " + model.ty.name, data.size()[0], False)
            lossy = 0
            with timer:
                for s in model.getSpec(data.to_dtype(),target, time = time):
                    model.optimizer.zero_grad()
                    loss = model.aiLoss(*s, time = time, **vargs).mean(dim=0)
                    lossy += loss.detach().item()
                    loss.backward()
                    torch.nn.utils.clip_grad_norm_(model.parameters(), 1)
                    for p in model.parameters():
                        if p is not None and torch.isnan(p).any():
                            print("Such nan in vals")
                        if p is not None and p.grad is not None and torch.isnan(p.grad).any():
                            print("Such nan in postmagic")
                            stdv = 1 / math.sqrt(h.product(p.data.shape))
                            p.grad = torch.where(torch.isnan(p.grad), torch.normal(mean=h.zeros(p.grad.shape), std=stdv), p.grad)

                    model.optimizer.step()

                    for p in model.parameters():
                        if p is not None and torch.isnan(p).any():
                            print("Such nan in vals after grad")
                            stdv = 1 / math.sqrt(h.product(p.data.shape))
                            p.data = torch.where(torch.isnan(p.data), torch.normal(mean=h.zeros(p.data.shape), std=stdv), p.data)

                    if args.clip_norm:
                        model.clip_norm()
                    for p in model.parameters():
                        if p is not None and torch.isnan(p).any():
                            raise Exception("Such nan in vals after clip")

            model.addSpeed(timer.getUnitTime())

            if batch_idx % args.log_interval == 0:
                print(('Train Epoch {:12} {:'+ str(largest_domain) +'}: {:3} [{:7}/{} ({:.0f}%)] \tAvg sec/ex {:1.8f}\tLoss: {:.6f}').format(
                    model.name, model.ty.name,
                    epoch,
                    batch_idx * len(data), len(train_loader.dataset), 100. * batch_idx / len(train_loader),
                    model.speed,
                    lossy))

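The training loop replaces NaN entries in gradients and weights with Gaussian noise whose standard deviation is `1 / sqrt(number of elements)`. The sketch below is a pure-Python analogue of that repair step on a flat list of floats; it uses `math` and `random` instead of `torch.where` and `torch.normal`. `replace_nans` is a hypothetical name introduced only for illustration.

```python
import math
import random


def replace_nans(values, rng):
    """Return a copy of `values` (assumed non-empty) in which every NaN is
    replaced by zero-mean Gaussian noise with std 1 / sqrt(len(values))."""
    stdv = 1.0 / math.sqrt(len(values))
    return [rng.gauss(0.0, stdv) if math.isnan(v) else v for v in values]


# finite entries pass through unchanged; NaN entries become small random values
grads = [0.5, float("nan"), -1.25, float("nan")]
cleaned = replace_nans(grads, random.Random(0))
```

Swapping NaNs for noise keeps training running, but it also hides the numerical problem that produced them, so logging each occurrence (as the loop above does with its print statements) remains useful.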

num_tests = 0
def test(models, epoch, f = None):
    global num_tests
    num_tests += 1
    class MStat:
        def __init__(self, model):
            model.eval()
            self.model = model
            self.correct = 0
            class Stat:
                def __init__(self, d, dnm):
                    self.domain = d
                    self.name = dnm
                    self.width = 0
                    self.max_eps = None
                    self.safe = 0
                    self.proved = 0
                    self.time = 0
            self.domains = [ Stat(h.parseValues(d, goals), h.catStrs(d)) for d in args.test_domain ]
    model_stats = [ MStat(m) for m in models ]

    num_its = 0
    saved_data_target = []
    for data, target in test_loader:
        if num_its >= args.test_size:
            break

        if num_tests == 1:
            saved_data_target += list(zip(list(data), list(target)))

        num_its += data.size()[0]
        if h.use_cuda:
            data, target = data.cuda().to_dtype(), target.cuda()

        for m in model_stats:

            with torch.no_grad():
                pred = m.model(data).vanillaTensorPart().max(1, keepdim=True)[1] # get the index of the max log-probability
                m.correct += pred.eq(target.data.view_as(pred)).sum()

            for stat in m.domains:
                timer = Timer(shouldPrint = False)
                with timer:
                    def calcData(data, target):
                        box = stat.domain.box(data, w = m.model.w, model=m.model, untargeted = True, target=target).to_dtype()
                        with torch.no_grad():
                            bs = m.model(box)
                            org = m.model(data).vanillaTensorPart().max(1,keepdim=True)[1]
                            stat.width += bs.diameter().sum().item() # sum up batch loss
                            stat.proved += bs.isSafe(org).sum().item()
                            stat.safe += bs.isSafe(target).sum().item()
                            # stat.max_eps += 0 # TODO: calculate max_eps

                    if m.model.net.neuronCount() < 5000 or stat.domain in SYMETRIC_DOMAINS:
                        calcData(data, target)
                    else:
                        for d,t in zip(data, target):
                            calcData(d.unsqueeze(0),t.unsqueeze(0))
                stat.time += timer.getUnitTime()

    l = num_its # len(test_loader.dataset)