| url | repository_url | labels_url | comments_url | events_url | html_url | id | node_id | number | title | user | labels | state | locked | assignee | assignees | milestone | comments | created_at | updated_at | closed_at | author_association | active_lock_reason | body | closed_by | reactions | timeline_url | performed_via_github_app | state_reason | draft | pull_request | is_pull_request | time_to_close |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/7299 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7299/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7299/comments | https://api.github.com/repos/huggingface/datasets/issues/7299/events | https://github.com/huggingface/datasets/issues/7299 | 2,695,378,251 | I_kwDODunzps6gqDVL | 7,299 | Efficient Image Augmentation in Hugging Face Datasets | {

"avatar_url": "https://avatars.githubusercontent.com/u/46443190?v=4",

"events_url": "https://api.github.com/users/fabiozappo/events{/privacy}",

"followers_url": "https://api.github.com/users/fabiozappo/followers",

"following_url": "https://api.github.com/users/fabiozappo/following{/other_user}",

"gists_url": "https://api.github.com/users/fabiozappo/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/fabiozappo",

"id": 46443190,

"login": "fabiozappo",

"node_id": "MDQ6VXNlcjQ2NDQzMTkw",

"organizations_url": "https://api.github.com/users/fabiozappo/orgs",

"received_events_url": "https://api.github.com/users/fabiozappo/received_events",

"repos_url": "https://api.github.com/users/fabiozappo/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/fabiozappo/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/fabiozappo/subscriptions",

"type": "User",

"url": "https://api.github.com/users/fabiozappo",

"user_view_type": "public"

} | [] | open | false | null | [] | null | [] | 1970-01-01T00:00:00.000001 | 1,732 | null | NONE | null | ### Describe the bug

I'm using the Hugging Face datasets library to load images in batches, and I would like to apply a torchvision transform to fix the inconsistent image sizes in the dataset and to apply some on-the-fly image augmentation. The only option I can think of is the collate_fn, but that seems quite inefficient.

I'm new to the Hugging Face datasets library, and I didn't find anything in the documentation or in the issues here on GitHub.

Is there an existing way to add image transformations directly to the dataset loading pipeline?

### Steps to reproduce the bug

```python
from datasets import load_dataset
from torch.utils.data import DataLoader


def collate_fn(batch):
    # Keep the PIL images and captions as plain lists (no resizing yet)
    images = [item['image'] for item in batch]
    texts = [item['text'] for item in batch]
    return {
        'images': images,
        'texts': texts
    }


dataset = load_dataset("Yuki20/pokemon_caption", split="train")
dataloader = DataLoader(dataset, batch_size=4, collate_fn=collate_fn)

batch = next(iter(dataloader))
print([img.size for img in batch['images']])
# Output shows varying image sizes:
# [(1280, 1280), (431, 431), (789, 789), (769, 769)]
```

### Expected behavior

I'm looking for a way to resize images on the fly when loading the dataset, similar to PyTorch's `Dataset.__getitem__`. This would be more efficient than handling the resizing in the `collate_fn`.

### Environment info

- `datasets` version: 3.1.0

- Platform: Linux-6.5.0-41-generic-x86_64-with-glibc2.35

- Python version: 3.11.10

- `huggingface_hub` version: 0.26.2

- PyArrow version: 18.0.0

- Pandas version: 2.2.3

- `fsspec` version: 2024.9.0

| null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7299/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7299/timeline | null | null | null | null | false | null |

https://api.github.com/repos/huggingface/datasets/issues/7298 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7298/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7298/comments | https://api.github.com/repos/huggingface/datasets/issues/7298/events | https://github.com/huggingface/datasets/issues/7298 | 2,694,196,968 | I_kwDODunzps6gli7o | 7,298 | loading dataset issue with load_dataset() when training controlnet | {

"avatar_url": "https://avatars.githubusercontent.com/u/81594044?v=4",

"events_url": "https://api.github.com/users/bigbraindump/events{/privacy}",

"followers_url": "https://api.github.com/users/bigbraindump/followers",

"following_url": "https://api.github.com/users/bigbraindump/following{/other_user}",

"gists_url": "https://api.github.com/users/bigbraindump/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/bigbraindump",

"id": 81594044,

"login": "bigbraindump",

"node_id": "MDQ6VXNlcjgxNTk0MDQ0",

"organizations_url": "https://api.github.com/users/bigbraindump/orgs",

"received_events_url": "https://api.github.com/users/bigbraindump/received_events",

"repos_url": "https://api.github.com/users/bigbraindump/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/bigbraindump/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/bigbraindump/subscriptions",

"type": "User",

"url": "https://api.github.com/users/bigbraindump",

"user_view_type": "public"

} | [] | open | false | null | [] | null | [] | 1970-01-01T00:00:00.000001 | 1,732 | null | NONE | null | ### Describe the bug

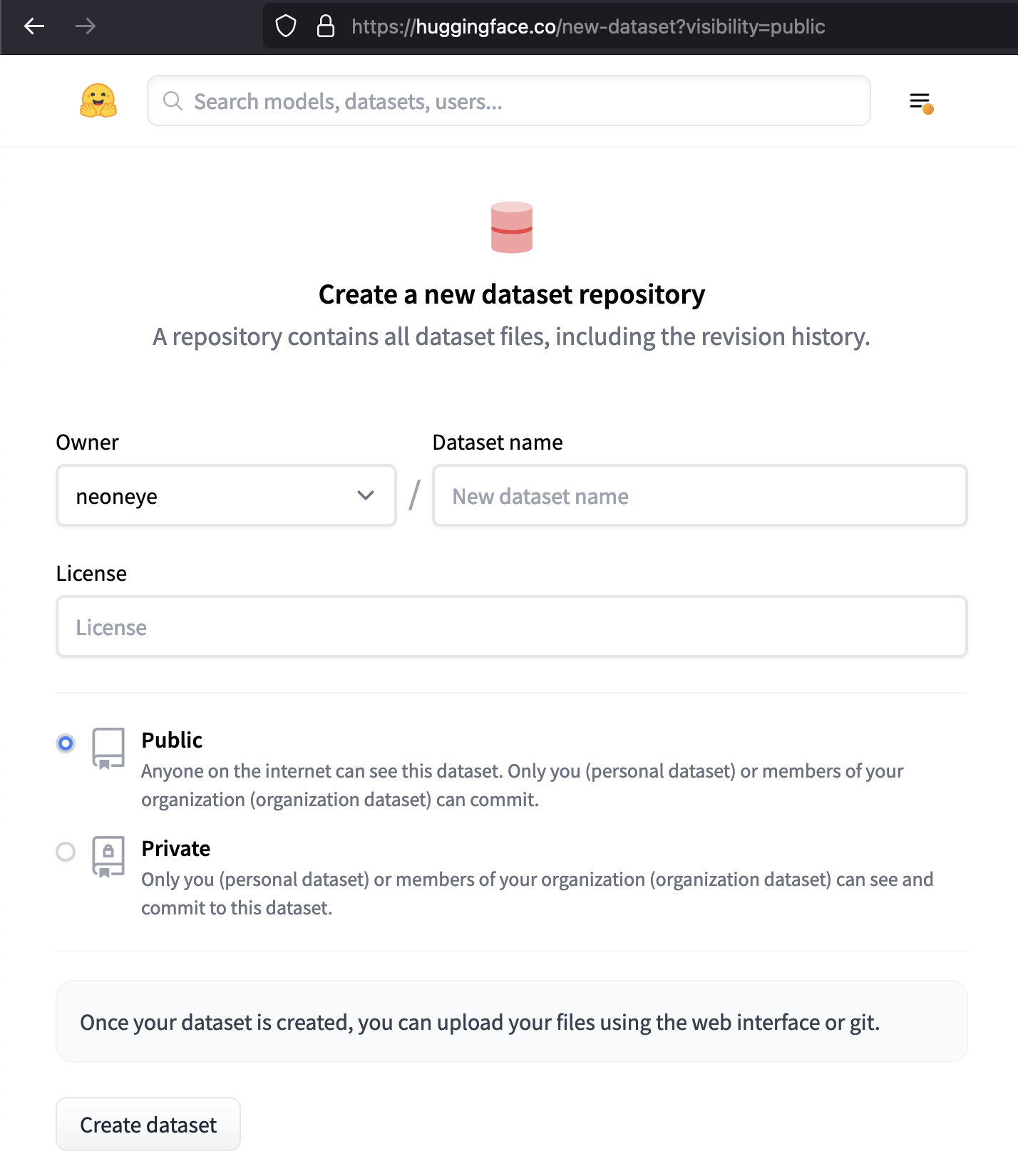

I'm unable to load my dataset for [ControlNet training](https://github.com/huggingface/diffusers/blob/074e12358bc17e7dbe111ea4f62f05dbae8a49d5/examples/controlnet/train_controlnet.py#L606) using `load_dataset()`. However, `load_from_disk()` seems to work.

I would appreciate it if someone could explain why that's the case here.

1. For reference, here is the structure of the original training files _before_ dataset creation:

```
- dir train
  - dir A (illustrations)
  - dir B (SignWriting)
  - prompt.json containing:
    {"source": "B/file.png", "target": "A/file.png", "prompt": "..."}
```

2. Here are the features _after_ dataset creation:

```
"features": {
  "control_image": {
    "_type": "Image"
  },
  "image": {
    "_type": "Image"
  },
  "caption": {
    "dtype": "string",
    "_type": "Value"
  }
}
```

3. I've also tried uploading the dataset to the Hugging Face Hub, and I get the same error.

### Steps to reproduce the bug

1. [Dataset creation script](https://github.com/sign-language-processing/signwriting-illustration/blob/main/signwriting_illustration/controlnet_huggingface/dataset.py)

2. ControlNet [training script](https://github.com/huggingface/diffusers/blob/074e12358bc17e7dbe111ea4f62f05dbae8a49d5/examples/controlnet/train_controlnet.py) used

3. Training parameters:

```
! accelerate launch diffusers/examples/controlnet/train_controlnet.py \
  --pretrained_model_name_or_path="stable-diffusion-v1-5/stable-diffusion-v1-5" \
  --output_dir="$OUTPUT_DIR" \
  --train_data_dir="$HF_DATASET_DIR" \
  --conditioning_image_column=control_image \
  --image_column=image \
  --caption_column=caption \
  --resolution=512 \
  --learning_rate=1e-5 \
  --validation_image "./validation/0a4b3c71265bb3a726457837428dda78.png" "./validation/0a5922fe2c638e6776bd62f623145004.png" "./validation/1c9f1a53106f64c682cf5d009ee7156f.png" \
  --validation_prompt "An illustration of a man with short hair" "An illustration of a woman with short hair" "An illustration of Barack Obama" \
  --train_batch_size=4 \
  --num_train_epochs=500 \
  --tracker_project_name="sd-controlnet-signwriting-test" \
  --hub_model_id="sarahahtee/signwriting-illustration-test" \
  --checkpointing_steps=5000 \
  --validation_steps=1000 \
  --report_to wandb \
  --push_to_hub
```

4. Command:

`sbatch --export=HUGGINGFACE_TOKEN=hf_token,WANDB_API_KEY=api_key script.sh`

### Expected behavior

```
11/25/2024 17:12:18 - INFO - __main__ - Initializing controlnet weights from unet
Generating train split: 1 examples [00:00, 334.85 examples/s]
Traceback (most recent call last):
  File "/data/user/user/signwriting_illustration/controlnet_huggingface/diffusers/examples/controlnet/train_controlnet.py", line 1189, in <module>
    main(args)
  File "/data/user/user/signwriting_illustration/controlnet_huggingface/diffusers/examples/controlnet/train_controlnet.py", line 923, in main
    train_dataset = make_train_dataset(args, tokenizer, accelerator)
                    ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "/data/user/user/signwriting_illustration/controlnet_huggingface/diffusers/examples/controlnet/train_controlnet.py", line 639, in make_train_dataset
    raise ValueError(
ValueError: `--image_column` value 'image' not found in dataset columns. Dataset columns are: _data_files, _fingerprint, _format_columns, _format_kwargs, _format_type, _output_all_columns, _split
```

### Environment info

accelerate 1.1.1

huggingface-hub 0.26.2

python 3.11

torch 2.5.1

transformers 4.46.2 | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7298/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7298/timeline | null | null | null | null | false | null |

https://api.github.com/repos/huggingface/datasets/issues/7297 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7297/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7297/comments | https://api.github.com/repos/huggingface/datasets/issues/7297/events | https://github.com/huggingface/datasets/issues/7297 | 2,683,977,430 | I_kwDODunzps6f-j7W | 7,297 | wrong return type for `IterableDataset.shard()` | {

"avatar_url": "https://avatars.githubusercontent.com/u/47225236?v=4",

"events_url": "https://api.github.com/users/ysngshn/events{/privacy}",

"followers_url": "https://api.github.com/users/ysngshn/followers",

"following_url": "https://api.github.com/users/ysngshn/following{/other_user}",

"gists_url": "https://api.github.com/users/ysngshn/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/ysngshn",

"id": 47225236,

"login": "ysngshn",

"node_id": "MDQ6VXNlcjQ3MjI1MjM2",

"organizations_url": "https://api.github.com/users/ysngshn/orgs",

"received_events_url": "https://api.github.com/users/ysngshn/received_events",

"repos_url": "https://api.github.com/users/ysngshn/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/ysngshn/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ysngshn/subscriptions",

"type": "User",

"url": "https://api.github.com/users/ysngshn",

"user_view_type": "public"

} | [] | open | false | null | [] | null | [] | 1970-01-01T00:00:00.000001 | 1,732 | null | NONE | null | ### Describe the bug

The return type of `IterableDataset.shard()` is annotated as `"Dataset"`, but it should be `"IterableDataset"`. This makes my IDE unhappy.

### Steps to reproduce the bug

Look at [the source code](https://github.com/huggingface/datasets/blob/main/src/datasets/iterable_dataset.py#L2668).

### Expected behavior

The return type should be annotated as `"IterableDataset"`.
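A minimal, hypothetical stand-in (not the actual `datasets` source) showing the one-line change requested here: the method's return annotation names the class itself as a string forward reference, and `typing.get_type_hints` resolves it to that class, which is the kind of resolution IDEs and type checkers perform. The toy class and its strided sharding logic are illustrative assumptions only; the real `IterableDataset.shard()` shards by data source and has its own parameters.

```python
from typing import Iterator, List, get_type_hints


class IterableDataset:
    """Toy stand-in for datasets.IterableDataset; illustration only."""

    def __init__(self, examples: List[int]) -> None:
        self._examples = examples

    def __iter__(self) -> Iterator[int]:
        return iter(self._examples)

    # The change requested in this issue: the return annotation names
    # IterableDataset (as a forward reference) instead of "Dataset".
    def shard(self, num_shards: int, index: int) -> "IterableDataset":
        # Illustrative strided split only: keep every num_shards-th example from `index`
        return IterableDataset(self._examples[index::num_shards])


shard0 = IterableDataset(list(range(10))).shard(num_shards=3, index=0)
print(list(shard0))  # [0, 3, 6, 9]

# The string annotation resolves to the class itself
print(get_type_hints(IterableDataset.shard)["return"])
```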

### Environment info

datasets==3.1.0 | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7297/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7297/timeline | null | null | null | null | false | null |

https://api.github.com/repos/huggingface/datasets/issues/7295 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7295/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7295/comments | https://api.github.com/repos/huggingface/datasets/issues/7295/events | https://github.com/huggingface/datasets/issues/7295 | 2,672,003,384 | I_kwDODunzps6fQ4k4 | 7,295 | [BUG]: Streaming from S3 triggers `unexpected keyword argument 'requote_redirect_url'` | {

"avatar_url": "https://avatars.githubusercontent.com/u/27340033?v=4",

"events_url": "https://api.github.com/users/casper-hansen/events{/privacy}",

"followers_url": "https://api.github.com/users/casper-hansen/followers",

"following_url": "https://api.github.com/users/casper-hansen/following{/other_user}",

"gists_url": "https://api.github.com/users/casper-hansen/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/casper-hansen",

"id": 27340033,

"login": "casper-hansen",

"node_id": "MDQ6VXNlcjI3MzQwMDMz",

"organizations_url": "https://api.github.com/users/casper-hansen/orgs",

"received_events_url": "https://api.github.com/users/casper-hansen/received_events",

"repos_url": "https://api.github.com/users/casper-hansen/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/casper-hansen/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/casper-hansen/subscriptions",

"type": "User",

"url": "https://api.github.com/users/casper-hansen",

"user_view_type": "public"

} | [] | open | false | null | [] | null | [] | 1970-01-01T00:00:00.000001 | 1,732 | null | NONE | null | ### Describe the bug

Note that this bug is only triggered when `streaming=True`. #5459 introduced always calling fsspec with `client_kwargs={"requote_redirect_url": False}`, which seems to have incompatibility issues even in the newest versions.

Analysis of what's happening:

1. `datasets` passes the `client_kwargs` through `fsspec`

2. `fsspec` passes the `client_kwargs` through `s3fs`

3. `s3fs` passes the `client_kwargs` to `aiobotocore` which uses `aiohttp`

```
s3creator = self.session.create_client(
    "s3", config=conf, **init_kwargs, **client_kwargs
)
```

4. The `session` then tries to create the client (which sets up an `aiohttp` session), but the `client_kwargs` are not kept together as a single `**kwargs` mapping: they are unpacked into individual keyword arguments (`requote_redirect_url` and `trust_env`), which `AioSession._create_client()` does not accept.

Error:

```
Traceback (most recent call last):
  File "/Users/cxrh/Documents/GitHub/nlp_foundation/nlp_train/test.py", line 14, in <module>
    batch = next(iter(ds))
  File "/Users/cxrh/miniconda3/envs/s3_data_loader/lib/python3.10/site-packages/datasets/iterable_dataset.py", line 1353, in __iter__
    for key, example in ex_iterable:
  File "/Users/cxrh/miniconda3/envs/s3_data_loader/lib/python3.10/site-packages/datasets/iterable_dataset.py", line 255, in __iter__
    for key, pa_table in self.generate_tables_fn(**self.kwargs):
  File "/Users/cxrh/miniconda3/envs/s3_data_loader/lib/python3.10/site-packages/datasets/packaged_modules/json/json.py", line 78, in _generate_tables
    for file_idx, file in enumerate(itertools.chain.from_iterable(files)):
  File "/Users/cxrh/miniconda3/envs/s3_data_loader/lib/python3.10/site-packages/datasets/download/streaming_download_manager.py", line 840, in __iter__
    yield from self.generator(*self.args, **self.kwargs)
  File "/Users/cxrh/miniconda3/envs/s3_data_loader/lib/python3.10/site-packages/datasets/download/streaming_download_manager.py", line 921, in _iter_from_urlpaths
    elif xisdir(urlpath, download_config=download_config):
  File "/Users/cxrh/miniconda3/envs/s3_data_loader/lib/python3.10/site-packages/datasets/download/streaming_download_manager.py", line 305, in xisdir
    return fs.isdir(inner_path)
  File "/Users/cxrh/miniconda3/envs/s3_data_loader/lib/python3.10/site-packages/fsspec/spec.py", line 721, in isdir
    return self.info(path)["type"] == "directory"
  File "/Users/cxrh/miniconda3/envs/s3_data_loader/lib/python3.10/site-packages/fsspec/archive.py", line 38, in info
    self._get_dirs()
  File "/Users/cxrh/miniconda3/envs/s3_data_loader/lib/python3.10/site-packages/datasets/filesystems/compression.py", line 64, in _get_dirs
    f = {**self.file.fs.info(self.file.path), "name": self.uncompressed_name}
  File "/Users/cxrh/miniconda3/envs/s3_data_loader/lib/python3.10/site-packages/fsspec/asyn.py", line 118, in wrapper
    return sync(self.loop, func, *args, **kwargs)
  File "/Users/cxrh/miniconda3/envs/s3_data_loader/lib/python3.10/site-packages/fsspec/asyn.py", line 103, in sync
    raise return_result
  File "/Users/cxrh/miniconda3/envs/s3_data_loader/lib/python3.10/site-packages/fsspec/asyn.py", line 56, in _runner
    result[0] = await coro
  File "/Users/cxrh/miniconda3/envs/s3_data_loader/lib/python3.10/site-packages/s3fs/core.py", line 1302, in _info
    out = await self._call_s3(
  File "/Users/cxrh/miniconda3/envs/s3_data_loader/lib/python3.10/site-packages/s3fs/core.py", line 341, in _call_s3
    await self.set_session()
  File "/Users/cxrh/miniconda3/envs/s3_data_loader/lib/python3.10/site-packages/s3fs/core.py", line 524, in set_session
    s3creator = self.session.create_client(
  File "/Users/cxrh/miniconda3/envs/s3_data_loader/lib/python3.10/site-packages/aiobotocore/session.py", line 114, in create_client
    return ClientCreatorContext(self._create_client(*args, **kwargs))
TypeError: AioSession._create_client() got an unexpected keyword argument 'requote_redirect_url'
```

### Steps to reproduce the bug

1. Install the necessary libraries (`datasets` must be at least 2.19.0):

```
pip install s3fs fsspec aiohttp aiobotocore botocore 'datasets>=2.19.0'
```

2. Run this code:

```
from datasets import load_dataset

ds = load_dataset(
    "json",
    data_files="s3://your_path/*.jsonl.gz",
    streaming=True,
    split="train",
)

batch = next(iter(ds))
print(batch)
```

3. You get the `unexpected keyword argument 'requote_redirect_url'` error.

### Expected behavior

`datasets` should be able to load a batch from the dataset stored on S3 without triggering this `requote_redirect_url` error.

Fix: I was able to fix this by removing `requote_redirect_url` and `trust_env` directly; the dataset then loads properly.

<img width="1127" alt="image" src="https://github.com/user-attachments/assets/4c40efa9-8787-4919-b613-e4908c3d1ab2">
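The failure mode described in the analysis can be reproduced without S3. The sketch below is a hypothetical, plain-Python illustration: `create_client` is a stand-in for `AioSession._create_client()` whose parameter list is assumed for the example (it is not copied from `aiobotocore`), and `strip_unsupported_client_kwargs` mirrors the manual workaround of dropping `requote_redirect_url` and `trust_env` before the call. Dropping these keys also drops whatever effect they were meant to have on the HTTP session.

```python
from typing import Any, Dict, Optional


def create_client(
    service_name: str,
    region_name: Optional[str] = None,
    endpoint_url: Optional[str] = None,
    config: Optional[Dict[str, Any]] = None,
) -> Dict[str, Any]:
    """Hypothetical stand-in for AioSession._create_client().

    Like the method in the traceback, it only accepts the keyword arguments it
    declares (there is no **kwargs catch-all), so an unknown key raises TypeError.
    """
    return {"service_name": service_name, "region_name": region_name,
            "endpoint_url": endpoint_url, "config": config}


# The two keys named in this report, which reach the client constructor and are rejected
UNSUPPORTED_CLIENT_KWARGS = frozenset({"requote_redirect_url", "trust_env"})


def strip_unsupported_client_kwargs(client_kwargs: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of client_kwargs without the rejected keys (the manual workaround)."""
    return {k: v for k, v in client_kwargs.items() if k not in UNSUPPORTED_CLIENT_KWARGS}


client_kwargs = {"requote_redirect_url": False, "trust_env": True, "region_name": "us-east-1"}

# Unpacking with ** forwards every key as a separate keyword argument
error_message = None
try:
    create_client("s3", **client_kwargs)
except TypeError as err:
    error_message = str(err)
    print(f"Unfiltered: TypeError: {error_message}")

client = create_client("s3", **strip_unsupported_client_kwargs(client_kwargs))
print(f"Filtered: region_name={client['region_name']}")
```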

### Environment info

- `datasets` version: 3.1.0

- Platform: macOS-15.1-arm64-arm-64bit

- Python version: 3.10.15

- `huggingface_hub` version: 0.26.2

- PyArrow version: 18.0.0

- Pandas version: 2.2.3

- `fsspec` version: 2024.9.0 | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7295/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7295/timeline | null | null | null | null | false | null |

https://api.github.com/repos/huggingface/datasets/issues/7292 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7292/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7292/comments | https://api.github.com/repos/huggingface/datasets/issues/7292/events | https://github.com/huggingface/datasets/issues/7292 | 2,664,250,855 | I_kwDODunzps6ezT3n | 7,292 | DataFilesNotFoundError for datasets `OpenMol/PubChemSFT` | {

"avatar_url": "https://avatars.githubusercontent.com/u/17878022?v=4",

"events_url": "https://api.github.com/users/xnuohz/events{/privacy}",

"followers_url": "https://api.github.com/users/xnuohz/followers",

"following_url": "https://api.github.com/users/xnuohz/following{/other_user}",

"gists_url": "https://api.github.com/users/xnuohz/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/xnuohz",

"id": 17878022,

"login": "xnuohz",

"node_id": "MDQ6VXNlcjE3ODc4MDIy",

"organizations_url": "https://api.github.com/users/xnuohz/orgs",

"received_events_url": "https://api.github.com/users/xnuohz/received_events",

"repos_url": "https://api.github.com/users/xnuohz/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/xnuohz/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/xnuohz/subscriptions",

"type": "User",

"url": "https://api.github.com/users/xnuohz",

"user_view_type": "public"

} | [] | closed | false | null | [] | null | [

"Hi ! If the dataset owner uses `push_to_hub()` instead of `save_to_disk()` and upload the local files it will fix the issue.\r\nRight now `datasets` sees the train/test/valid pickle files but they are not supported file formats.",

"Alternatively you can load the arrow file instead:\r\n\r\n```python\r\nfrom datasets import load_dataset\r\ndataset = load_dataset('OpenMol/PubChemSFT', data_files='stage1/*.arrow')\r\n```",

"Thanks! I'll have a try."

] | 1970-01-01T00:00:00.000001 | 1,731 | 1970-01-01T00:00:00.000001 | NONE | null | ### Describe the bug

Cannot load the dataset https://huggingface.co./datasets/OpenMol/PubChemSFT

### Steps to reproduce the bug

```

from datasets import load_dataset

dataset = load_dataset('OpenMol/PubChemSFT')

```

### Expected behavior

```

---------------------------------------------------------------------------
DataFilesNotFoundError Traceback (most recent call last)
Cell In[7], line 2
      1 from datasets import load_dataset
----> 2 dataset = load_dataset('OpenMol/PubChemSFT')

File ~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:2587, in load_dataset(path, name, data_dir, data_files, split, cache_dir, features, download_config, download_mode, verification_mode, ignore_verifications, keep_in_memory, save_infos, revision, token, use_auth_token, task, streaming, num_proc, storage_options, trust_remote_code, **config_kwargs)
   2582 verification_mode = VerificationMode(
   2583 (verification_mode or VerificationMode.BASIC_CHECKS) if not save_infos else VerificationMode.ALL_CHECKS
   2584 )
   2586 # Create a dataset builder
-> 2587 builder_instance = load_dataset_builder(
   2588 path=path,
   2589 name=name,
   2590 data_dir=data_dir,
   2591 data_files=data_files,
   2592 cache_dir=cache_dir,
   2593 features=features,
   2594 download_config=download_config,
   2595 download_mode=download_mode,
   2596 revision=revision,
   2597 token=token,
   2598 storage_options=storage_options,
   2599 trust_remote_code=trust_remote_code,
   2600 _require_default_config_name=name is None,
   2601 **config_kwargs,
   2602 )
   2604 # Return iterable dataset in case of streaming
   2605 if streaming:

File ~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:2259, in load_dataset_builder(path, name, data_dir, data_files, cache_dir, features, download_config, download_mode, revision, token, use_auth_token, storage_options, trust_remote_code, _require_default_config_name, **config_kwargs)
   2257 download_config = download_config.copy() if download_config else DownloadConfig()
   2258 download_config.storage_options.update(storage_options)
-> 2259 dataset_module = dataset_module_factory(
   2260 path,
   2261 revision=revision,
   2262 download_config=download_config,
   2263 download_mode=download_mode,
   2264 data_dir=data_dir,
   2265 data_files=data_files,
   2266 cache_dir=cache_dir,
   2267 trust_remote_code=trust_remote_code,
   2268 _require_default_config_name=_require_default_config_name,
   2269 _require_custom_configs=bool(config_kwargs),
   2270 )
   2271 # Get dataset builder class from the processing script
   2272 builder_kwargs = dataset_module.builder_kwargs

File ~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1904, in dataset_module_factory(path, revision, download_config, download_mode, dynamic_modules_path, data_dir, data_files, cache_dir, trust_remote_code, _require_default_config_name, _require_custom_configs, **download_kwargs)
   1902 raise ConnectionError(f"Couldn't reach the Hugging Face Hub for dataset '{path}': {e1}") from None
   1903 if isinstance(e1, (DataFilesNotFoundError, DatasetNotFoundError, EmptyDatasetError)):
-> 1904 raise e1 from None
   1905 if isinstance(e1, FileNotFoundError):
   1906 raise FileNotFoundError(
   1907 f"Couldn't find a dataset script at {relative_to_absolute_path(combined_path)} or any data file in the same directory. "
   1908 f"Couldn't find '{path}' on the Hugging Face Hub either: {type(e1).__name__}: {e1}"
   1909 ) from None

File ~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1885, in dataset_module_factory(path, revision, download_config, download_mode, dynamic_modules_path, data_dir, data_files, cache_dir, trust_remote_code, _require_default_config_name, _require_custom_configs, **download_kwargs)
   1876 return HubDatasetModuleFactoryWithScript(
   1877 path,
   1878 revision=revision,
   (...)
   1882 trust_remote_code=trust_remote_code,
   1883 ).get_module()
   1884 else:
-> 1885 return HubDatasetModuleFactoryWithoutScript(
   1886 path,

[1887](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1887) revision=revision,

[1888](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1888) data_dir=data_dir,

[1889](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1889) data_files=data_files,

[1890](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1890) download_config=download_config,

[1891](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1891) download_mode=download_mode,

[1892](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1892) ).get_module()

[1893](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1893) except Exception as e1:

[1894](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1894) # All the attempts failed, before raising the error we should check if the module is already cached

[1895](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1895) try:

File ~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1270, in HubDatasetModuleFactoryWithoutScript.get_module(self)

[1263](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1263) patterns = get_data_patterns(base_path, download_config=self.download_config)

[1264](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1264) data_files = DataFilesDict.from_patterns(

[1265](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1265) patterns,

[1266](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1266) base_path=base_path,

[1267](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1267) allowed_extensions=ALL_ALLOWED_EXTENSIONS,

[1268](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1268) download_config=self.download_config,

[1269](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1269) )

-> [1270](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1270) module_name, default_builder_kwargs = infer_module_for_data_files(

[1271](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1271) data_files=data_files,

[1272](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1272) path=self.name,

[1273](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1273) download_config=self.download_config,

[1274](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1274) )

[1275](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1275) data_files = data_files.filter_extensions(_MODULE_TO_EXTENSIONS[module_name])

[1276](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:1276) # Collect metadata files if the module supports them

File ~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:597, in infer_module_for_data_files(data_files, path, download_config)

[595](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:595) raise ValueError(f"Couldn't infer the same data file format for all splits. Got {split_modules}")

[596](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:596) if not module_name:

--> [597](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:597) raise DataFilesNotFoundError("No (supported) data files found" + (f" in {path}" if path else ""))

[598](https://file+.vscode-resource.vscode-cdn.net/home/ubuntu/Projects/notebook/~/Softwares/anaconda3/envs/pyg-dev/lib/python3.9/site-packages/datasets/load.py:598) return module_name, default_builder_kwargs

DataFilesNotFoundError: No (supported) data files found in OpenMol/PubChemSFT

```

### Environment info

```

- `datasets` version: 3.1.0

- Platform: Linux-5.15.0-125-generic-x86_64-with-glibc2.31

- Python version: 3.9.18

- `huggingface_hub` version: 0.25.2

- PyArrow version: 18.0.0

- Pandas version: 2.0.3

- `fsspec` version: 2023.9.2

``` | {

"avatar_url": "https://avatars.githubusercontent.com/u/17878022?v=4",

"events_url": "https://api.github.com/users/xnuohz/events{/privacy}",

"followers_url": "https://api.github.com/users/xnuohz/followers",

"following_url": "https://api.github.com/users/xnuohz/following{/other_user}",

"gists_url": "https://api.github.com/users/xnuohz/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/xnuohz",

"id": 17878022,

"login": "xnuohz",

"node_id": "MDQ6VXNlcjE3ODc4MDIy",

"organizations_url": "https://api.github.com/users/xnuohz/orgs",

"received_events_url": "https://api.github.com/users/xnuohz/received_events",

"repos_url": "https://api.github.com/users/xnuohz/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/xnuohz/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/xnuohz/subscriptions",

"type": "User",

"url": "https://api.github.com/users/xnuohz",

"user_view_type": "public"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7292/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7292/timeline | null | completed | null | null | false | 0 |

https://api.github.com/repos/huggingface/datasets/issues/7291 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7291/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7291/comments | https://api.github.com/repos/huggingface/datasets/issues/7291/events | https://github.com/huggingface/datasets/issues/7291 | 2,662,244,643 | I_kwDODunzps6erqEj | 7,291 | Why return_tensors='pt' doesn't work? | {

"avatar_url": "https://avatars.githubusercontent.com/u/86752851?v=4",

"events_url": "https://api.github.com/users/bw-wang19/events{/privacy}",

"followers_url": "https://api.github.com/users/bw-wang19/followers",

"following_url": "https://api.github.com/users/bw-wang19/following{/other_user}",

"gists_url": "https://api.github.com/users/bw-wang19/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/bw-wang19",

"id": 86752851,

"login": "bw-wang19",

"node_id": "MDQ6VXNlcjg2NzUyODUx",

"organizations_url": "https://api.github.com/users/bw-wang19/orgs",

"received_events_url": "https://api.github.com/users/bw-wang19/received_events",

"repos_url": "https://api.github.com/users/bw-wang19/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/bw-wang19/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/bw-wang19/subscriptions",

"type": "User",

"url": "https://api.github.com/users/bw-wang19",

"user_view_type": "public"

} | [] | open | false | null | [] | null | [

"Hi ! `datasets` uses Arrow as storage backend which is agnostic to deep learning frameworks like torch. If you want to get torch tensors back, you need to do `dataset = dataset.with_format(\"torch\")`",

"> Hi ! `datasets` uses Arrow as storage backend which is agnostic to deep learning frameworks like torch. If you want to get torch tensors back, you need to do `dataset = dataset.with_format(\"torch\")`\r\n\r\nIt does work! Thanks for your suggestion!"

] | 1970-01-01T00:00:00.000001 | 1,731 | null | NONE | null | ### Describe the bug

I tried to add `input_ids` to a dataset with `map()` and passed `return_tensors='pt'`, but why do I get values of type `list` back?

### Steps to reproduce the bug

### Expected behavior

Sorry for the basic question; I'm new to this tool. But since I passed `return_tensors='pt'`, shouldn't it return tensors?

When I tokenize a single sentence with `tokenized_input = tokenizer(input, return_tensors='pt')`, it does return a tensor. Why doesn't this work in `map()`?

### Environment info

transformers>=4.41.2,<=4.45.0

datasets>=2.16.0,<=2.21.0

accelerate>=0.30.1,<=0.34.2

peft>=0.11.1,<=0.12.0

trl>=0.8.6,<=0.9.6

gradio>=4.0.0

pandas>=2.0.0

scipy

einops

sentencepiece

tiktoken

protobuf

uvicorn

pydantic

fastapi

sse-starlette

matplotlib>=3.7.0

fire

packaging

pyyaml

numpy<2.0.0

| null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7291/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7291/timeline | null | null | null | null | false | null |

https://api.github.com/repos/huggingface/datasets/issues/7290 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7290/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7290/comments | https://api.github.com/repos/huggingface/datasets/issues/7290/events | https://github.com/huggingface/datasets/issues/7290 | 2,657,620,816 | I_kwDODunzps6eaBNQ | 7,290 | `Dataset.save_to_disk` hangs when using num_proc > 1 | {

"avatar_url": "https://avatars.githubusercontent.com/u/22243463?v=4",

"events_url": "https://api.github.com/users/JohannesAck/events{/privacy}",

"followers_url": "https://api.github.com/users/JohannesAck/followers",

"following_url": "https://api.github.com/users/JohannesAck/following{/other_user}",

"gists_url": "https://api.github.com/users/JohannesAck/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/JohannesAck",

"id": 22243463,

"login": "JohannesAck",

"node_id": "MDQ6VXNlcjIyMjQzNDYz",

"organizations_url": "https://api.github.com/users/JohannesAck/orgs",

"received_events_url": "https://api.github.com/users/JohannesAck/received_events",

"repos_url": "https://api.github.com/users/JohannesAck/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/JohannesAck/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/JohannesAck/subscriptions",

"type": "User",

"url": "https://api.github.com/users/JohannesAck",

"user_view_type": "public"

} | [] | open | false | null | [] | null | [] | 1970-01-01T00:00:00.000001 | 1,731 | null | NONE | null | ### Describe the bug

Hi, I encountered a small issue when saving datasets that led to saving taking up to several hours.

Specifically, [`Dataset.save_to_disk`](https://huggingface.co./docs/datasets/main/en/package_reference/main_classes#datasets.Dataset.save_to_disk) is much slower with `num_proc>1` than with `num_proc=1`.

The documentation mentions that "Multiprocessing is disabled by default.", but does not explain how to enable it.

### Steps to reproduce the bug

```

import numpy as np

from datasets import Dataset

n_samples = int(4e6)

n_tokens_sample = 100

data_dict = {

'tokens' : np.random.randint(0, 100, (n_samples, n_tokens_sample)),

}

dataset = Dataset.from_dict(data_dict)

dataset.save_to_disk('test_dataset', num_proc=1)

dataset.save_to_disk('test_dataset', num_proc=4)

dataset.save_to_disk('test_dataset', num_proc=8)

```

This results in:

```

>>> dataset.save_to_disk('test_dataset', num_proc=1)

Saving the dataset (7/7 shards): 100%|██████████████| 4000000/4000000 [00:17<00:00, 228075.15 examples/s]

>>> dataset.save_to_disk('test_dataset', num_proc=4)

Saving the dataset (7/7 shards): 100%|██████████████| 4000000/4000000 [01:49<00:00, 36583.75 examples/s]

>>> dataset.save_to_disk('test_dataset', num_proc=8)

Saving the dataset (8/8 shards): 100%|██████████████| 4000000/4000000 [02:11<00:00, 30518.43 examples/s]

```

With larger datasets it can take hours, but I didn't benchmark that for this bug report.

### Expected behavior

I would expect using `num_proc>1` to be faster instead of slower than `num_proc=1`.

### Environment info

- `datasets` version: 3.1.0

- Platform: Linux-5.15.153.1-microsoft-standard-WSL2-x86_64-with-glibc2.35

- Python version: 3.10.12

- `huggingface_hub` version: 0.26.2

- PyArrow version: 18.0.0

- Pandas version: 2.2.3

- `fsspec` version: 2024.6.1 | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7290/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7290/timeline | null | null | null | null | false | null |

https://api.github.com/repos/huggingface/datasets/issues/7289 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7289/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7289/comments | https://api.github.com/repos/huggingface/datasets/issues/7289/events | https://github.com/huggingface/datasets/issues/7289 | 2,648,019,507 | I_kwDODunzps6d1ZIz | 7,289 | Dataset viewer displays wrong statists | {

"avatar_url": "https://avatars.githubusercontent.com/u/3585459?v=4",

"events_url": "https://api.github.com/users/speedcell4/events{/privacy}",

"followers_url": "https://api.github.com/users/speedcell4/followers",

"following_url": "https://api.github.com/users/speedcell4/following{/other_user}",

"gists_url": "https://api.github.com/users/speedcell4/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/speedcell4",

"id": 3585459,

"login": "speedcell4",

"node_id": "MDQ6VXNlcjM1ODU0NTk=",

"organizations_url": "https://api.github.com/users/speedcell4/orgs",

"received_events_url": "https://api.github.com/users/speedcell4/received_events",

"repos_url": "https://api.github.com/users/speedcell4/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/speedcell4/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/speedcell4/subscriptions",

"type": "User",

"url": "https://api.github.com/users/speedcell4",

"user_view_type": "public"

} | [] | closed | false | null | [] | null | [

"i think this issue is more for https://github.com/huggingface/dataset-viewer"

] | 1970-01-01T00:00:00.000001 | 1,731 | 1970-01-01T00:00:00.000001 | NONE | null | ### Describe the bug

In [my dataset](https://huggingface.co./datasets/speedcell4/opus-unigram2), there is a column called `lang2` with 94 distinct classes in total, but the viewer reports only 83 values. This issue only arises in the `train` split: the `test` and `dev` splits also contain 94 distinct values, and the viewer reports those correctly.

<img width="177" alt="image" src="https://github.com/user-attachments/assets/78d76ef2-fe0e-4fa3-85e0-fb2552813d1c">

### Steps to reproduce the bug

```python3

from datasets import load_dataset

ds = load_dataset('speedcell4/opus-unigram2').unique('lang2')

for key, lang2 in ds.items():

print(key, len(lang2))

```

This script prints the following, showing that the `train` split has 94 distinct values in the `lang2` column.

```

train 94

dev 94

test 94

zero 5

```

### Expected behavior

The viewer should show 94 distinct values for `lang2` in the `train` split.

### Environment info

Collecting environment information...

PyTorch version: 2.4.1+cu121

Is debug build: False

CUDA used to build PyTorch: 12.1

ROCM used to build PyTorch: N/A

OS: CentOS Linux release 8.2.2004 (Core) (x86_64)

GCC version: (GCC) 8.3.1 20191121 (Red Hat 8.3.1-5)

Clang version: Could not collect

CMake version: version 3.11.4

Libc version: glibc-2.28

Python version: 3.9.20 (main, Oct 3 2024, 07:27:41) [GCC 11.2.0] (64-bit runtime)

Python platform: Linux-4.18.0-193.28.1.el8_2.x86_64-x86_64-with-glibc2.28

Is CUDA available: True

CUDA runtime version: 12.2.140

CUDA_MODULE_LOADING set to: LAZY

GPU models and configuration:

GPU 0: NVIDIA A100-SXM4-40GB

GPU 1: NVIDIA A100-SXM4-40GB

GPU 2: NVIDIA A100-SXM4-40GB

GPU 3: NVIDIA A100-SXM4-40GB

GPU 4: NVIDIA A100-SXM4-40GB

GPU 5: NVIDIA A100-SXM4-40GB

GPU 6: NVIDIA A100-SXM4-40GB

GPU 7: NVIDIA A100-SXM4-40GB

Nvidia driver version: 525.85.05

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Is XNNPACK available: True

CPU:

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 64

On-line CPU(s) list: 0-63

Thread(s) per core: 1

Core(s) per socket: 32

Socket(s): 2

NUMA node(s): 4

Vendor ID: AuthenticAMD

CPU family: 23

Model: 49

Model name: AMD EPYC 7542 32-Core Processor

Stepping: 0

CPU MHz: 3389.114

BogoMIPS: 5789.40

Virtualization: AMD-V

L1d cache: 32K

L1i cache: 32K

L2 cache: 512K

L3 cache: 16384K

NUMA node0 CPU(s): 0-15

NUMA node1 CPU(s): 16-31

NUMA node2 CPU(s): 32-47

NUMA node3 CPU(s): 48-63

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl nonstop_tsc cpuid extd_apicid aperfmperf pni pclmulqdq monitor ssse3 fma cx16 sse4_1 sse4_2 movbe popcnt aes xsave avx f16c rdrand lahf_lm cmp_legacy svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw ibs skinit wdt tce topoext perfctr_core perfctr_nb bpext perfctr_llc mwaitx cpb cat_l3 cdp_l3 hw_pstate ssbd mba ibrs ibpb stibp vmmcall fsgsbase bmi1 avx2 smep bmi2 cqm rdt_a rdseed adx smap clflushopt clwb sha_ni xsaveopt xsavec xgetbv1 xsaves cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local clzero irperf xsaveerptr wbnoinvd arat npt lbrv svm_lock nrip_save tsc_scale vmcb_clean flushbyasid decodeassists pausefilter pfthreshold avic v_vmsave_vmload vgif umip rdpid overflow_recov succor smca

Versions of relevant libraries:

[pip3] numpy==1.26.4

[pip3] torch==2.4.1+cu121

[pip3] torchaudio==2.4.1+cu121

[pip3] torchdevice==0.1.1

[pip3] torchglyph==0.3.2

[pip3] torchmetrics==1.5.0

[pip3] torchrua==0.5.1

[pip3] torchvision==0.19.1+cu121

[pip3] triton==3.0.0

[pip3] datasets==3.0.1

[conda] numpy 1.26.4 pypi_0 pypi

[conda] torch 2.4.1+cu121 pypi_0 pypi

[conda] torchaudio 2.4.1+cu121 pypi_0 pypi

[conda] torchdevice 0.1.1 pypi_0 pypi

[conda] torchglyph 0.3.2 pypi_0 pypi

[conda] torchmetrics 1.5.0 pypi_0 pypi

[conda] torchrua 0.5.1 pypi_0 pypi

[conda] torchvision 0.19.1+cu121 pypi_0 pypi

[conda] triton 3.0.0 pypi_0 pypi | {

"avatar_url": "https://avatars.githubusercontent.com/u/3585459?v=4",

"events_url": "https://api.github.com/users/speedcell4/events{/privacy}",

"followers_url": "https://api.github.com/users/speedcell4/followers",

"following_url": "https://api.github.com/users/speedcell4/following{/other_user}",

"gists_url": "https://api.github.com/users/speedcell4/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/speedcell4",

"id": 3585459,

"login": "speedcell4",

"node_id": "MDQ6VXNlcjM1ODU0NTk=",

"organizations_url": "https://api.github.com/users/speedcell4/orgs",

"received_events_url": "https://api.github.com/users/speedcell4/received_events",

"repos_url": "https://api.github.com/users/speedcell4/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/speedcell4/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/speedcell4/subscriptions",

"type": "User",

"url": "https://api.github.com/users/speedcell4",

"user_view_type": "public"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7289/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7289/timeline | null | completed | null | null | false | 0 |

https://api.github.com/repos/huggingface/datasets/issues/7287 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7287/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7287/comments | https://api.github.com/repos/huggingface/datasets/issues/7287/events | https://github.com/huggingface/datasets/issues/7287 | 2,646,958,393 | I_kwDODunzps6dxWE5 | 7,287 | Support for identifier-based automated split construction | {

"avatar_url": "https://avatars.githubusercontent.com/u/5719745?v=4",

"events_url": "https://api.github.com/users/alex-hh/events{/privacy}",

"followers_url": "https://api.github.com/users/alex-hh/followers",

"following_url": "https://api.github.com/users/alex-hh/following{/other_user}",

"gists_url": "https://api.github.com/users/alex-hh/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/alex-hh",

"id": 5719745,

"login": "alex-hh",

"node_id": "MDQ6VXNlcjU3MTk3NDU=",

"organizations_url": "https://api.github.com/users/alex-hh/orgs",

"received_events_url": "https://api.github.com/users/alex-hh/received_events",

"repos_url": "https://api.github.com/users/alex-hh/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/alex-hh/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/alex-hh/subscriptions",

"type": "User",

"url": "https://api.github.com/users/alex-hh",

"user_view_type": "public"

} | [

{

"color": "a2eeef",

"default": true,

"description": "New feature or request",

"id": 1935892871,

"name": "enhancement",

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement"

}

] | open | false | null | [] | null | [

"Hi ! You can already configure the README.md to have multiple sets of splits, e.g.\r\n\r\n```yaml\r\nconfigs:\r\n- config_name: my_first_set_of_split\r\n data_files:\r\n - split: train\r\n path: *.csv\r\n- config_name: my_second_set_of_split\r\n data_files:\r\n - split: train\r\n path: train-*.csv\r\n - split: test\r\n path: test-*.csv\r\n```",

"Hi - I had something slightly different in mind:\r\n\r\nCurrently the yaml splits specified like this only allow specifying which filenames to pass to each split.\r\nBut what if I have a situation where I know which individual *training examples* I want to put in each split.\r\n\r\nI could build split-specific files, however for large datasets with overlapping (e.g. multiple sets of) splits this could result in significant duplication of data.\r\n\r\nI can see that this could actually be very much intended (i.e. to discourage overlapping splits), but wondered whether some support for handling splits based on individual identifiers is something that could be considered. ",

"This is not supported right now :/ Though you can load the data in two steps like this\r\n```python\r\nfrom datasets import load_dataset\r\n\r\nfull_dataset = load_dataset(\"username/dataset\", split=\"train\")\r\nmy_first_set_indices = load_dataset(\"username/dataset\", \"my_first_set_of_split\", split=\"train\")\r\n\r\nmy_first_set = full_dataset.select(my_first_set_indices[\"indices\"])\r\n```\r\n\r\nyou can create such a dataset by adapting this code for example\r\n```python\r\n# upload the full dataset\r\nfull_dataset.push_to_hub(\"username/dataset\")\r\n# then upload the indices for each set\r\nDatasetDict({\r\n \"train\": Dataset.from_dict({\"indices\": [0, 1, 2, 3]}),\r\n \"test\": Dataset.from_dict({\"indices\": [4, 5]}),\r\n}).push_to_hub(\"username/dataset\", \"my_first_set_of_split\")"

] | 1970-01-01T00:00:00.000001 | 1,732 | null | CONTRIBUTOR | null | ### Feature request

As far as I understand, automated construction of splits for Hub datasets is currently based on either file names or directory structure ([as described here](https://huggingface.co./docs/datasets/en/repository_structure)).

It would be useful to also allow splits to be defined by the identifiers of individual examples.

This could be configured like:

`{"split_name": {"column_name": [column values in split]}}`

(This in turn requires unique 'index' columns, which could be explicitly supported or just assumed to be defined appropriately by the user).

I guess a potential downside would be that shards would end up spanning different splits; can this be handled somehow? Would this only affect streaming from the Hub?

### Motivation

The main motivation would be that all data files could be stored in a single directory, and multiple sets of splits could be generated from the same data. This is often useful for large datasets with multiple distinct sets of splits.

This could all be configured via the YAML `configs` in README.md.

### Your contribution

I may be able to contribute if this seems like a good idea.

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7287/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7287/timeline | null | null | null | null | false | null |

https://api.github.com/repos/huggingface/datasets/issues/7286 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7286/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7286/comments | https://api.github.com/repos/huggingface/datasets/issues/7286/events | https://github.com/huggingface/datasets/issues/7286 | 2,645,350,151 | I_kwDODunzps6drNcH | 7,286 | Concurrent loading in `load_from_disk` - `num_proc` as a param | {

"avatar_url": "https://avatars.githubusercontent.com/u/5240449?v=4",

"events_url": "https://api.github.com/users/unography/events{/privacy}",

"followers_url": "https://api.github.com/users/unography/followers",

"following_url": "https://api.github.com/users/unography/following{/other_user}",

"gists_url": "https://api.github.com/users/unography/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/unography",

"id": 5240449,

"login": "unography",

"node_id": "MDQ6VXNlcjUyNDA0NDk=",

"organizations_url": "https://api.github.com/users/unography/orgs",

"received_events_url": "https://api.github.com/users/unography/received_events",

"repos_url": "https://api.github.com/users/unography/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/unography/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/unography/subscriptions",

"type": "User",

"url": "https://api.github.com/users/unography",

"user_view_type": "public"

} | [

{

"color": "a2eeef",

"default": true,

"description": "New feature or request",

"id": 1935892871,

"name": "enhancement",

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement"

}

] | closed | false | null | [] | null | [] | 1970-01-01T00:00:00.000001 | 1,731 | 1970-01-01T00:00:00.000001 | NONE | null | ### Feature request

https://github.com/huggingface/datasets/pull/6464 mentions a `num_proc` parameter for loading a dataset from disk, but I can't find it anywhere in the documentation or the code.

### Motivation

Make loading large datasets from disk faster

### Your contribution

Happy to contribute if given pointers | {

"avatar_url": "https://avatars.githubusercontent.com/u/5240449?v=4",

"events_url": "https://api.github.com/users/unography/events{/privacy}",

"followers_url": "https://api.github.com/users/unography/followers",

"following_url": "https://api.github.com/users/unography/following{/other_user}",

"gists_url": "https://api.github.com/users/unography/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/unography",

"id": 5240449,

"login": "unography",

"node_id": "MDQ6VXNlcjUyNDA0NDk=",

"organizations_url": "https://api.github.com/users/unography/orgs",

"received_events_url": "https://api.github.com/users/unography/received_events",

"repos_url": "https://api.github.com/users/unography/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/unography/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/unography/subscriptions",

"type": "User",

"url": "https://api.github.com/users/unography",

"user_view_type": "public"

} | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7286/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7286/timeline | null | not_planned | null | null | false | 0 |

https://api.github.com/repos/huggingface/datasets/issues/7282 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7282/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7282/comments | https://api.github.com/repos/huggingface/datasets/issues/7282/events | https://github.com/huggingface/datasets/issues/7282 | 2,642,075,491 | I_kwDODunzps6det9j | 7,282 | Faulty datasets.exceptions.ExpectedMoreSplitsError | {

"avatar_url": "https://avatars.githubusercontent.com/u/90473723?v=4",

"events_url": "https://api.github.com/users/meg-huggingface/events{/privacy}",

"followers_url": "https://api.github.com/users/meg-huggingface/followers",

"following_url": "https://api.github.com/users/meg-huggingface/following{/other_user}",

"gists_url": "https://api.github.com/users/meg-huggingface/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/meg-huggingface",

"id": 90473723,

"login": "meg-huggingface",

"node_id": "MDQ6VXNlcjkwNDczNzIz",

"organizations_url": "https://api.github.com/users/meg-huggingface/orgs",

"received_events_url": "https://api.github.com/users/meg-huggingface/received_events",

"repos_url": "https://api.github.com/users/meg-huggingface/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/meg-huggingface/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/meg-huggingface/subscriptions",

"type": "User",

"url": "https://api.github.com/users/meg-huggingface",

"user_view_type": "public"

} | [] | open | false | null | [] | null | [] | 1970-01-01T00:00:00.000001 | 1,731 | null | CONTRIBUTOR | null | ### Describe the bug

I am trying to download only the 'validation' split of my dataset, but instead I hit the error `datasets.exceptions.ExpectedMoreSplitsError`.

This appears to be the same undesired behavior as reported in [#6939](https://github.com/huggingface/datasets/issues/6939), but with `data_files` instead of `data_dir`.

Here is the traceback:

```
Traceback (most recent call last):
  File "/home/user/app/app.py", line 12, in <module>
    ds = load_dataset('datacomp/imagenet-1k-random0.0', token=GATED_IMAGENET, data_files={'validation': 'data/val*'}, split='validation', trust_remote_code=True)
  File "/usr/local/lib/python3.10/site-packages/datasets/load.py", line 2154, in load_dataset
    builder_instance.download_and_prepare(
  File "/usr/local/lib/python3.10/site-packages/datasets/builder.py", line 924, in download_and_prepare
    self._download_and_prepare(
  File "/usr/local/lib/python3.10/site-packages/datasets/builder.py", line 1018, in _download_and_prepare
    verify_splits(self.info.splits, split_dict)
  File "/usr/local/lib/python3.10/site-packages/datasets/utils/info_utils.py", line 68, in verify_splits
    raise ExpectedMoreSplitsError(str(set(expected_splits) - set(recorded_splits)))
datasets.exceptions.ExpectedMoreSplitsError: {'train', 'test'}
```

Note: I am using the `data_files` argument only to restrict the download to the 'validation' split. Even with `split='validation'`, the whole dataset is downloaded unless `data_files` is also specified, as described here: https://discuss.huggingface.co/t/how-can-i-download-a-specific-split-of-a-dataset/79027

### Steps to reproduce the bug

1. Create a Space with the default blank 'gradio' SDK https://huggingface.co./new-space

2. Create a file 'app.py' that loads only the 'validation' split of the dataset:

`ds = load_dataset('datacomp/imagenet-1k-random0.0', token=GATED_IMAGENET, data_files={'validation': 'data/val*'}, split='validation', trust_remote_code=True)`

### Expected behavior

Only the 'validation' split is downloaded, without an error.

### Environment info

Default environment for creating a new Space. Relevant to this bug, that is:

```
FROM docker.io/library/python:3.10@sha256:fd0fa50d997eb56ce560c6e5ca6a1f5cf8fdff87572a16ac07fb1f5ca01eb608
--> RUN pip install --no-cache-dir pip==22.3.1 && pip install --no-cache-dir datasets "huggingface-hub>=0.19" "hf-transfer>=0.1.4" "protobuf<4" "click<8.1"
``` | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7282/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7282/timeline | null | null | null | null | false | null |

https://api.github.com/repos/huggingface/datasets/issues/7281 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7281/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7281/comments | https://api.github.com/repos/huggingface/datasets/issues/7281/events | https://github.com/huggingface/datasets/issues/7281 | 2,640,346,339 | I_kwDODunzps6dYHzj | 7,281 | File not found error | {

"avatar_url": "https://avatars.githubusercontent.com/u/37507786?v=4",

"events_url": "https://api.github.com/users/MichielBontenbal/events{/privacy}",

"followers_url": "https://api.github.com/users/MichielBontenbal/followers",

"following_url": "https://api.github.com/users/MichielBontenbal/following{/other_user}",

"gists_url": "https://api.github.com/users/MichielBontenbal/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/MichielBontenbal",

"id": 37507786,

"login": "MichielBontenbal",

"node_id": "MDQ6VXNlcjM3NTA3Nzg2",

"organizations_url": "https://api.github.com/users/MichielBontenbal/orgs",

"received_events_url": "https://api.github.com/users/MichielBontenbal/received_events",

"repos_url": "https://api.github.com/users/MichielBontenbal/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/MichielBontenbal/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/MichielBontenbal/subscriptions",

"type": "User",

"url": "https://api.github.com/users/MichielBontenbal",

"user_view_type": "public"

} | [] | open | false | null | [] | null | [

"Link to the dataset: https://huggingface.co./datasets/MichielBontenbal/UrbanSounds "

] | 1970-01-01T00:00:00.000001 | 1,730 | null | NONE | null | ### Describe the bug

I get a FileNotFoundError:

<img width="944" alt="image" src="https://github.com/user-attachments/assets/1336bc08-06f6-4682-a3c0-071ff65efa87">

### Steps to reproduce the bug

See screenshot.

### Expected behavior

I want to load one audio file from the dataset.

### Environment info

macOS 14.6.1 (23G93), Intel

Python 3.10.9

Numpy 1.23

`datasets`: latest version | null | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7281/reactions"

} | https://api.github.com/repos/huggingface/datasets/issues/7281/timeline | null | null | null | null | false | null |

https://api.github.com/repos/huggingface/datasets/issues/7280 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7280/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7280/comments | https://api.github.com/repos/huggingface/datasets/issues/7280/events | https://github.com/huggingface/datasets/issues/7280 | 2,639,977,077 | I_kwDODunzps6dWtp1 | 7,280 | Add filename in error message when ReadError or similar occur | {

"avatar_url": "https://avatars.githubusercontent.com/u/37046039?v=4",

"events_url": "https://api.github.com/users/elisa-aleman/events{/privacy}",

"followers_url": "https://api.github.com/users/elisa-aleman/followers",

"following_url": "https://api.github.com/users/elisa-aleman/following{/other_user}",

"gists_url": "https://api.github.com/users/elisa-aleman/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/elisa-aleman",

"id": 37046039,

"login": "elisa-aleman",

"node_id": "MDQ6VXNlcjM3MDQ2MDM5",

"organizations_url": "https://api.github.com/users/elisa-aleman/orgs",

"received_events_url": "https://api.github.com/users/elisa-aleman/received_events",

"repos_url": "https://api.github.com/users/elisa-aleman/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/elisa-aleman/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/elisa-aleman/subscriptions",

"type": "User",

"url": "https://api.github.com/users/elisa-aleman",

"user_view_type": "public"

} | [] | open | false | null | [] | null | [

"Hi Elisa, please share the error traceback here, and if you manage to find the location in the `datasets` code where the error occurs, feel free to open a PR to add the necessary logging / improve the error message.",

"> please share the error traceback\n\nI don't have access to it, but it should be [this exception](https://github.com/huggingface/datasets/blob/2049c00921c59cdeb835137a1c49639cf175af07/src/datasets/builder.py#L1643), which is raised while a dataset is being loaded. If one of the downloaded files is corrupted, the for loop will not yield correctly, and the error will come from (in the case of tar files) [this iterable](https://github.com/huggingface/datasets/blob/2049c00921c59cdeb835137a1c49639cf175af07/src/datasets/utils/file_utils.py#L1293), which has no explicit error handling to indicate which file failed.\n\nI only know about the case for tar files, but I suspect this issue could occur with other file types too.",

"I think better error handling for this tar iterable would already be useful. Maybe a simple try/except in `_iter_from_urlpath` that catches `tarfile.ReadError` and raises an error mentioning the `urlpath`?",

"I think handling this not just in higher-level calls like `_iter_from_urlpath`, but directly wherever a file is opened, would be best, since the traceback would then lead straight to it.",