Map Features in OpenStreetMap with Computer Vision

Photo by Stefancu Iulian / Unsplash

Original article: https://blog.mozilla.ai/map-features-in-openstreetmap-with-computer-vision/

Code: https://github.com/mozilla-ai/osm-ai-helper

HuggingFace Space demo: https://huggingface.co/spaces/mozilla-ai/osm-ai-helper

Motivation

At Mozilla.ai, we believe that there are a lot of opportunities where artificial intelligence (AI) can empower communities driven by open collaboration.

These opportunities need to be designed carefully, though, as many members of these communities (and people in general) are increasingly worried about the amount of AI slop flooding the internet.

With this idea in mind we developed and released the OpenStreetMap AI Helper Blueprint. If you love maps and are interested in training your own computer vision model, you'll enjoy diving into this Blueprint.

Why OpenStreetMap?

Data is one of the most important components of any AI application, and OpenStreetMap has a vibrant community that collaborates to maintain and extend the most complete open map database available.

If you haven't heard of it, OpenStreetMap is an open, editable map of the world created by a community of mappers who contribute and maintain data about roads, trails, cafés, railway stations, and more.

Combined with other sources, like satellite imagery, this database offers infinite possibilities to train different AI models.

As a long-time user of and contributor to OpenStreetMap, I wanted to build an end-to-end application where a model is first trained with this data and then used to contribute back.

The idea is to use AI to speed up the slower parts of the mapping process (roaming around the map, drawing polygons) while keeping a human in the loop for the critical parts (verifying that the generated data is correct).

Why Computer Vision?

Large Language Models (LLMs) and, more recently, Visual Language Models (VLMs) are sucking all the oxygen out of the AI room, but there are a lot of interesting applications that don't (need to) use these types of models.

Many of the Map Features you can find in OpenStreetMap are represented with a polygon ('Area'). It turns out that finding and drawing these polygons is a very time-consuming task for a human, but Computer Vision models can be easily trained for the task (when provided with enough data).

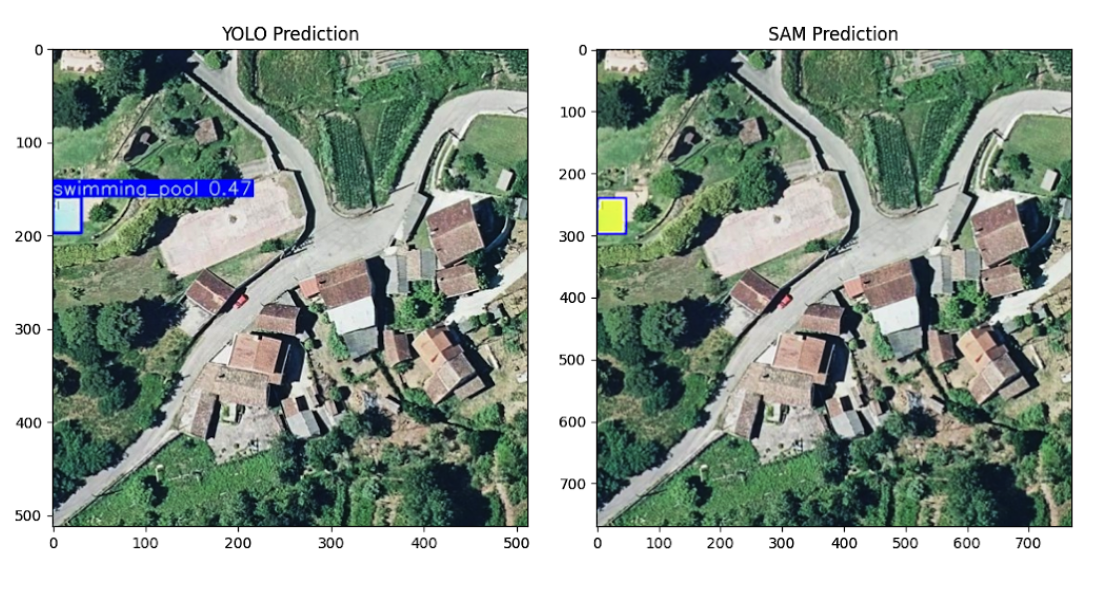

We chose to split the work of finding and drawing map features into 2 computer vision tasks using state-of-the-art non-LLM models:

- Object Detection with YOLOv11, by Ultralytics, which identifies where relevant features exist in an image.

- Segmentation with SAM2, by Meta, which refines the detected features by outlining their exact shape.

These models are lightweight, fast, and local-friendly -- it's refreshing to work with models that don't demand a high-end GPU just to function. As an example, the combined weights of YOLOv11 and SAM2 take much less disk space (<250MB) than any of the smallest Visual Language Models available, like SmolVLM (4.5GB).

By combining these models, we can automate much of the mapping process while keeping humans in control for final verification.

The OpenStreetMap AI Helper Blueprint

The Blueprint can be divided into 3 stages:

Stage 1: Create an Object Detection dataset from OpenStreetMap

The first stage involves fetching data from OpenStreetMap, combining it with satellite images, and transforming it into a format suitable for training.

You can run it yourself in the Create Dataset Colab.

For fetching OpenStreetMap data, we use:

- The Nominatim API to provide users with a flexible way of selecting an area of interest. In our swimming pool example, we use Galicia for training and Viana do Castelo for validation.

- The Overpass API to download all the relevant polygons using specific tags within the selected area of interest. In our swimming pool example, we use leisure=swimming_pool, discarding the ones also tagged with location=indoor.
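As a rough illustration of the Overpass step, the sketch below builds an Overpass QL query for the swimming pool example. The function name `build_overpass_query` and its parameters are hypothetical and not taken from the Blueprint; only the `leisure=swimming_pool` tag and the exclusion of `location=indoor` come from the text above. It assumes the area of interest has already been resolved to an OpenStreetMap relation ID (for example, through Nominatim).

```python
def build_overpass_query(relation_id: int, timeout: int = 60) -> str:
    """Build an Overpass QL query for outdoor swimming pools inside a relation.

    Overpass derives area IDs from relation IDs by adding 3600000000.
    """
    area_id = 3600000000 + relation_id
    return (
        f"[out:json][timeout:{timeout}];\n"
        f"area({area_id})->.searchArea;\n"
        "(\n"
        # Keep pools, drop the ones explicitly tagged as indoor.
        '  way["leisure"="swimming_pool"]["location"!="indoor"](area.searchArea);\n'
        '  relation["leisure"="swimming_pool"]["location"!="indoor"](area.searchArea);\n'
        ");\n"
        "out geom;"
    )


if __name__ == "__main__":
    # 12345 is a placeholder relation ID, not a real area of interest.
    print(build_overpass_query(12345))
```

The resulting string can be sent to any Overpass endpoint; `out geom` asks for the full geometry of each element, which is what the later polygon steps need.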

Once all the polygons have been downloaded, you can choose a zoom level. We use this zoom level to first identify all the tiles that contain a polygon and then download them using the Static Tiles API from Mapbox.
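To make the tile selection concrete, here is a minimal sketch of how a polygon can be mapped to the tiles that contain it at a given zoom level. It uses the standard Web Mercator ("slippy map") tile formula; the function names are hypothetical, and using the polygon's bounding box (rather than its exact shape) is a simplifying assumption, not necessarily what the Blueprint does.

```python
import math


def lonlat_to_tile(lon: float, lat: float, zoom: int) -> tuple[int, int]:
    """Return the (x, y) index of the Web Mercator tile containing a point."""
    n = 2 ** zoom
    x = int((lon + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(math.radians(lat))) / math.pi) / 2.0 * n)
    # Clamp so that lon = 180 or extreme latitudes still map to a valid tile.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def tiles_for_polygon(polygon, zoom):
    """Return every tile touched by the polygon's bounding box.

    `polygon` is a list of (lon, lat) pairs.
    """
    lons = [lon for lon, _ in polygon]
    lats = [lat for _, lat in polygon]
    x_min, y_min = lonlat_to_tile(min(lons), max(lats), zoom)  # north-west corner
    x_max, y_max = lonlat_to_tile(max(lons), min(lats), zoom)  # south-east corner
    return {(x, y) for x in range(x_min, x_max + 1) for y in range(y_min, y_max + 1)}
```

Taking the union of these sets over all polygons gives the list of tiles to download, without fetching imagery for areas that contain no feature.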

The polygons in latitude and longitude coordinates are transformed to a bounding box in pixel coordinates relative to each tile and then saved in the Ultralytics YOLO format.
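The conversion could look roughly like the sketch below. It projects each vertex to pixel coordinates relative to the tile's top-left corner and emits one label line in the Ultralytics YOLO format (`class x_center y_center width height`, all normalized to the tile size). The function names, the 512-pixel tile size, and the single class ID 0 are assumptions made for illustration.

```python
import math


def lonlat_to_pixel(lon, lat, zoom, tile_x, tile_y, tile_size=512):
    """Project a point to pixel coordinates relative to a tile's top-left corner."""
    n = 2 ** zoom
    fx = (lon + 180.0) / 360.0 * n
    fy = (1.0 - math.asinh(math.tan(math.radians(lat))) / math.pi) / 2.0 * n
    return (fx - tile_x) * tile_size, (fy - tile_y) * tile_size


def polygon_to_yolo_line(polygon, zoom, tile_x, tile_y, tile_size=512, class_id=0):
    """Return a YOLO label line for a polygon given as (lon, lat) pairs."""
    pixels = [
        lonlat_to_pixel(lon, lat, zoom, tile_x, tile_y, tile_size)
        for lon, lat in polygon
    ]
    # Clip to the tile so polygons crossing the border still give a valid box.
    xs = [min(max(px, 0.0), tile_size) for px, _ in pixels]
    ys = [min(max(py, 0.0), tile_size) for _, py in pixels]
    x_min, x_max, y_min, y_max = min(xs), max(xs), min(ys), max(ys)
    x_center = (x_min + x_max) / 2 / tile_size
    y_center = (y_min + y_max) / 2 / tile_size
    width = (x_max - x_min) / tile_size
    height = (y_max - y_min) / tile_size
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"
```

Each tile image then gets a text file with one such line per polygon it contains.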

Finally, the dataset is uploaded to the Hugging Face Hub. You can check our example mozilla-ai/osm-swimming-pools.

Stage 2: Finetune an Object Detection model

Once the dataset is uploaded in the right format, finetuning a YOLOv11 (or any other model supported by Ultralytics) is quite easy.

You can run it yourself in the Finetune Model Colab and check all the available hyperparameters.
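For readers who prefer a script over the Colab, a finetuning run with the Ultralytics Python API might look like the sketch below. The directory layout (`images/train`, `images/val`), the `yolo11n.pt` checkpoint, and the hyperparameter values are assumptions chosen for illustration, and the helper names are hypothetical; the Colab remains the reference for the settings actually used.

```python
from pathlib import Path


def dataset_yaml(dataset_dir, class_names):
    """Return the text of a dataset description file in the Ultralytics format."""
    lines = [
        f"path: {dataset_dir}",
        "train: images/train",  # assumed layout, relative to `path`
        "val: images/val",
        "names:",
    ]
    lines += [f"  {index}: {name}" for index, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


def finetune(dataset_dir, class_names, epochs=10, imgsz=512):
    """Finetune a pretrained YOLO11 nano checkpoint on the dataset."""
    # Imported lazily so dataset_yaml() works without ultralytics installed.
    from ultralytics import YOLO

    yaml_path = Path("dataset.yaml")
    yaml_path.write_text(dataset_yaml(dataset_dir, class_names))
    model = YOLO("yolo11n.pt")  # pretrained weights, downloaded on first use
    return model.train(data=str(yaml_path), epochs=epochs, imgsz=imgsz)
```

Calling `finetune("datasets/pools", ["swimming_pool"])` would start training; the path here is a placeholder for wherever the dataset was downloaded.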

Once the model is trained, it is also uploaded to the Hugging Face Hub. You can check our example mozilla-ai/swimming-pool-detector.

Stage 3: Contribute to OpenStreetMap

Once you have a finetuned Object Detection model, you can use it to run inference across multiple tiles.

You can run inference yourself in the Run Inference Colab.

We also provide a hosted demo where you can try our example swimming pool detector: HuggingFace Demo.

The inference requires a couple of human interactions. First, you need to pick a point of interest on the map.

After a point is selected, a bounding box is computed around it based on the margin argument.
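One plausible way to compute such a bounding box is sketched below. It assumes that the margin is counted in tiles around the tile containing the selected point, which is an assumption made for this example and should be checked against the repository. The function names are hypothetical.

```python
import math


def tile_to_lonlat(x, y, zoom):
    """Return the (lon, lat) of a tile's north-west corner."""
    n = 2 ** zoom
    lon = x / n * 360.0 - 180.0
    lat = math.degrees(math.atan(math.sinh(math.pi * (1 - 2 * y / n))))
    return lon, lat


def bbox_around_point(lat, lon, zoom, margin=1):
    """Return (south, west, north, east) covering `margin` tiles around a point."""
    n = 2 ** zoom
    x = int((lon + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(math.radians(lat))) / math.pi) / 2.0 * n)
    # Assumption: margin is a number of tiles on each side of the central tile.
    x_min, y_min = max(x - margin, 0), max(y - margin, 0)
    x_max, y_max = min(x + margin, n - 1), min(y + margin, n - 1)
    west, north = tile_to_lonlat(x_min, y_min, zoom)
    # The south-east corner is the north-west corner of the next tile over.
    east, south = tile_to_lonlat(x_max + 1, y_max + 1, zoom)
    return south, west, north, east
```

The (south, west, north, east) order matches what Overpass expects for a bounding box filter, so the same tuple can be reused to fetch the existing elements.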

All the existing elements of interest are downloaded from OpenStreetMap, and all the tiles are downloaded from Mapbox and joined to create a stacked image.

The stacked image is divided into overlapping tiles. For each tile, we run the Object Detection model (YOLOv11). If an object of interest is detected (e.g. a swimming pool), we pass the bounding box to the Segmentation model (SAM2) to obtain a segmentation mask.
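The overlapping split can be sketched as a sliding window over the stacked image. The 512-pixel window and 50% overlap below are illustrative assumptions, and the function names are hypothetical. The model calls are left out: in the Blueprint, each window would be passed to YOLOv11, and each detected box to SAM2.

```python
def window_starts(length, tile_size, stride):
    """Return the start offsets of windows covering `length` pixels."""
    if length <= tile_size:
        return [0]
    starts = list(range(0, length - tile_size + 1, stride))
    # Add a final window flush with the edge so no pixels are left uncovered.
    if starts[-1] != length - tile_size:
        starts.append(length - tile_size)
    return starts


def overlapping_windows(width, height, tile_size=512, overlap=0.5):
    """Return (left, top, right, bottom) boxes covering the whole image."""
    stride = max(1, int(tile_size * (1 - overlap)))
    return [
        (x, y, min(x + tile_size, width), min(y + tile_size, height))
        for y in window_starts(height, tile_size, stride)
        for x in window_starts(width, tile_size, stride)
    ]
```

Overlap matters because a feature cut in half by a window border is still fully visible in a neighbouring window. The price is that the same feature can be detected more than once, so detections need to be merged or deduplicated afterwards.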

All the predicted polygons are checked against the existing ones, downloaded from OpenStreetMap, in order to avoid duplicates. All those identified as new are displayed one by one for manual verification and filtering.
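A simple way to implement this kind of duplicate check is to compare bounding boxes with intersection over union (IoU), as in the sketch below. This is an illustrative criterion rather than the Blueprint's exact logic, and the 0.1 threshold and function names are assumptions.

```python
def bbox_of(polygon):
    """Return (x_min, y_min, x_max, y_max) for a list of (x, y) pairs."""
    xs = [x for x, _ in polygon]
    ys = [y for _, y in polygon]
    return min(xs), min(ys), max(xs), max(ys)


def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def keep_new(predicted, existing, threshold=0.1):
    """Keep predicted polygons that do not overlap any existing polygon."""
    existing_boxes = [bbox_of(polygon) for polygon in existing]
    return [
        polygon
        for polygon in predicted
        if all(iou(bbox_of(polygon), box) <= threshold for box in existing_boxes)
    ]
```

A low threshold errs on the side of treating a prediction as already mapped, which fits the goal of avoiding duplicate uploads; anything that passes still goes through manual verification.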

The ones you choose to keep are then uploaded to OpenStreetMap in a single changeset.

Closing thoughts

OpenStreetMap is a powerful example of open collaboration to create a rich, community-driven map of the world.

The OpenStreetMap AI Helper Blueprint shows that, with the right approach, AI can enhance human contributions while keeping human verification at the core. In the fully manual process it takes about 1 minute to map 2-3 swimming pools, whereas with the Blueprint, even without an optimized UX, I can map about 10-15 in the same time (~5x more).

It also highlights the value of high-quality data from projects like OpenStreetMap, which makes it easy to train models like YOLOv11 to perform object detection -- proving that you shouldn't always throw an LLM at the problem.

We'd love for you to try the OpenStreetMap AI Helper Blueprint and experiment with training a model on a different map feature. If you're interested, feel free to contribute to the repo to help improve it, or fork it to extend it even further!

To find other Blueprints we've released, check out the Blueprints Hub.