Upload folder using huggingface_hub (#2)

- 19dcab0efc93a2632c5d61dad34bfabb1d09412798105b22599e0a7c246cd7ef (113ef709dabbf32ef5f341ff925d8c915bd9933b)

- base_results.json +19 -0

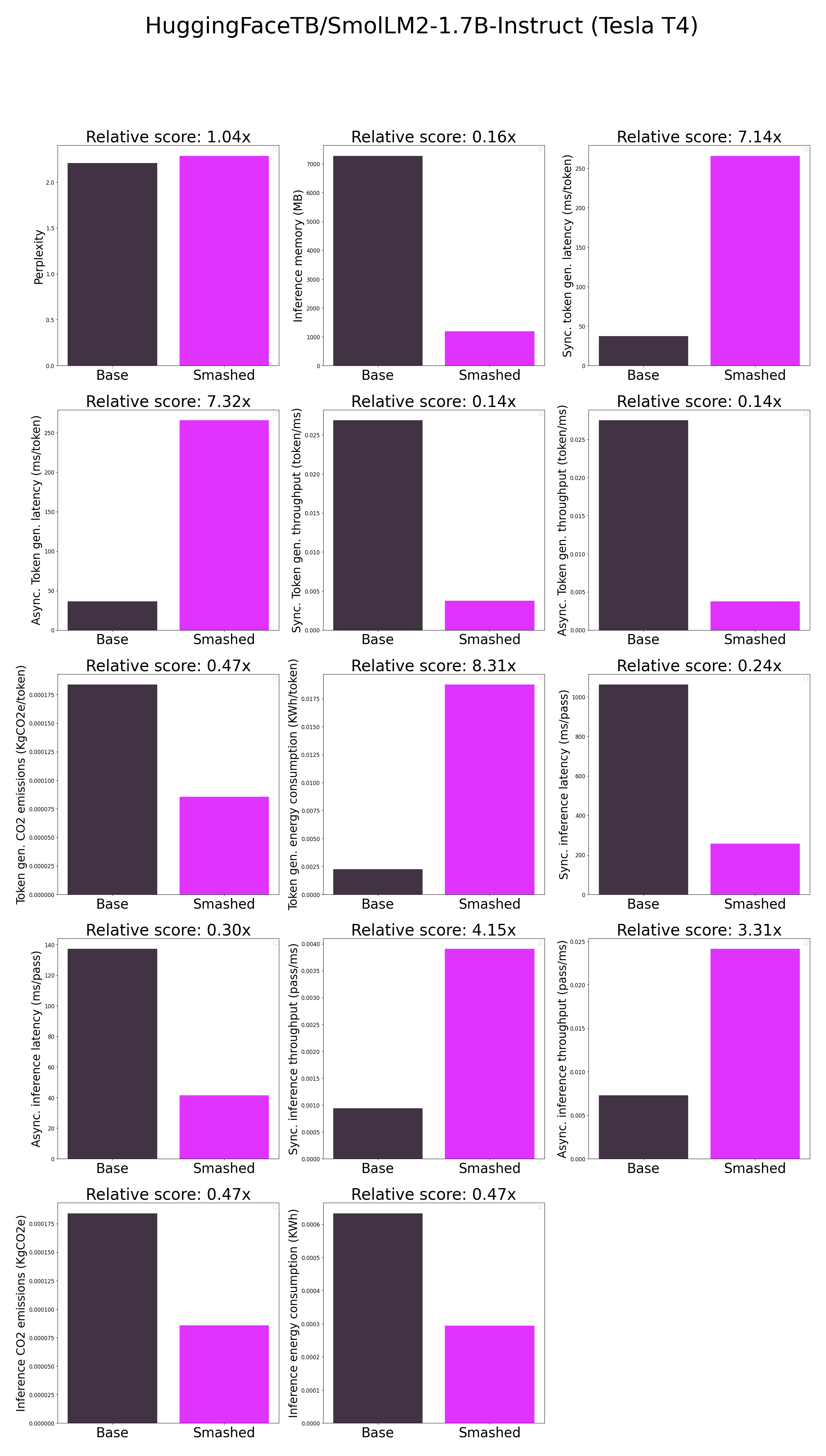

- plots.png +0 -0

- smashed_results.json +19 -0

base_results.json

ADDED

    @@ -0,0 +1,19 @@
    +{
    +    "current_gpu_type": "Tesla T4",
    +    "current_gpu_total_memory": 15095.0625,
    +    "perplexity": 2.2062017917633057,
    +    "memory_inference_first": 7276.0,
    +    "memory_inference": 7276.0,
    +    "token_generation_latency_sync": 37.20788230895996,
    +    "token_generation_latency_async": 36.32834870368242,
    +    "token_generation_throughput_sync": 0.026876025668335118,
    +    "token_generation_throughput_async": 0.027526712214657724,
    +    "token_generation_CO2_emissions": 0.00018383593221655306,
    +    "token_generation_energy_consumption": 0.0022573257956992913,
    +    "inference_latency_sync": 1062.5710464477538,
    +    "inference_latency_async": 137.16118335723877,
    +    "inference_throughput_sync": 0.0009411135409185738,
    +    "inference_throughput_async": 0.007290692421306122,
    +    "inference_CO2_emissions": 0.00018406364335004676,
    +    "inference_energy_consumption": 0.0006330725272366455
    +}
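In `base_results.json`, each throughput field appears to be the reciprocal of the matching latency field (for example, 1 / 37.20788 ≈ 0.026876). The short sketch below checks that relationship on values copied from the file. Neither file states units, so the check assumes only throughput = 1 / latency. The helper name `reciprocal_pairs` and the key-pairing rule are illustrative assumptions, not part of any tool that produced these files.

```python
import math

# Values copied from base_results.json (units are not stated in the file).
base = {
    "token_generation_latency_sync": 37.20788230895996,
    "token_generation_latency_async": 36.32834870368242,
    "token_generation_throughput_sync": 0.026876025668335118,
    "token_generation_throughput_async": 0.027526712214657724,
    "inference_latency_sync": 1062.5710464477538,
    "inference_latency_async": 137.16118335723877,
    "inference_throughput_sync": 0.0009411135409185738,
    "inference_throughput_async": 0.007290692421306122,
}


def reciprocal_pairs(results: dict) -> dict:
    """Map each latency key to whether its throughput equals 1 / latency.

    Hypothetical helper. Assumption: "<prefix>_throughput_<mode>" pairs with
    "<prefix>_latency_<mode>", inferred only from the field names above.
    """
    checks = {}
    for key, latency in results.items():
        if "_latency_" not in key:
            continue
        tp_key = key.replace("_latency_", "_throughput_")
        if tp_key in results:
            # Loose tolerance: the stored floats may be rounded differently.
            checks[key] = math.isclose(results[tp_key], 1.0 / latency, rel_tol=1e-6)
    return checks


print(reciprocal_pairs(base))
```

The pairing by string substitution is only a convenience for this sketch. It relies on the field names staying consistent between the latency and throughput entries.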

plots.png

ADDED


smashed_results.json

ADDED

    @@ -0,0 +1,19 @@
    +{
    +    "current_gpu_type": "Tesla T4",
    +    "current_gpu_total_memory": 15095.0625,
    +    "perplexity": 2.2858734130859375,
    +    "memory_inference_first": 1178.0,
    +    "memory_inference": 1194.0,
    +    "token_generation_latency_sync": 265.68286819458007,
    +    "token_generation_latency_async": 265.86400624364614,
    +    "token_generation_throughput_sync": 0.0037638858944703305,
    +    "token_generation_throughput_async": 0.0037613214896173967,
    +    "token_generation_CO2_emissions": 8.567858013593123e-05,
    +    "token_generation_energy_consumption": 0.01875942725794068,
    +    "inference_latency_sync": 256.0767112731934,
    +    "inference_latency_async": 41.45829677581787,
    +    "inference_throughput_sync": 0.003905079829509206,
    +    "inference_throughput_async": 0.024120624284384207,
    +    "inference_CO2_emissions": 8.569058627578585e-05,
    +    "inference_energy_consumption": 0.00029453419207313414
    +}
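The two result files share the same keys, so one way to compare them is to take smashed/base ratios of matching fields. The sketch below uses a few values copied from `base_results.json` and `smashed_results.json`. Because neither file states its units, it computes only unitless ratios. The helper name `ratios` is hypothetical.

```python
# Selected values copied from base_results.json and smashed_results.json.
# Units are not stated in either file, so only unitless ratios are computed.
base = {
    "perplexity": 2.2062017917633057,
    "memory_inference": 7276.0,
    "token_generation_latency_sync": 37.20788230895996,
    "inference_latency_sync": 1062.5710464477538,
}
smashed = {
    "perplexity": 2.2858734130859375,
    "memory_inference": 1194.0,
    "token_generation_latency_sync": 265.68286819458007,
    "inference_latency_sync": 256.0767112731934,
}


def ratios(new: dict, old: dict) -> dict:
    """Hypothetical helper: return new/old for every key present in both dicts."""
    return {k: new[k] / old[k] for k in new.keys() & old.keys()}


for key, r in sorted(ratios(smashed, base).items()):
    print(f"{key}: smashed/base = {r:.3f}")
```

With the reported numbers, the smashed model's `memory_inference` is roughly a sixth of the base value (about 0.164) and its `inference_latency_sync` is about 0.24 of the base value. Its `token_generation_latency_sync` is about 7.1 times higher, and its perplexity is about 3.6% higher.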