---

License: apache-2.0

language:

- en

pipeline_tag: text-generation

base_model: nvidia/Llama-3.1-Minitron-4B-Width-Base

Tags:

- Chat

license: agpl-3.0

datasets:

- anthracite-org/kalo-opus-instruct-22k-no-refusal

- PJMixers/lodrick-the-lafted_OpusStories-ShareGPT

- NewEden/Gryphe-3.5-16k-Subset

- Epiculous/Synthstruct-Gens-v1.1-Filtered-n-Cleaned

tags:

- chat

---

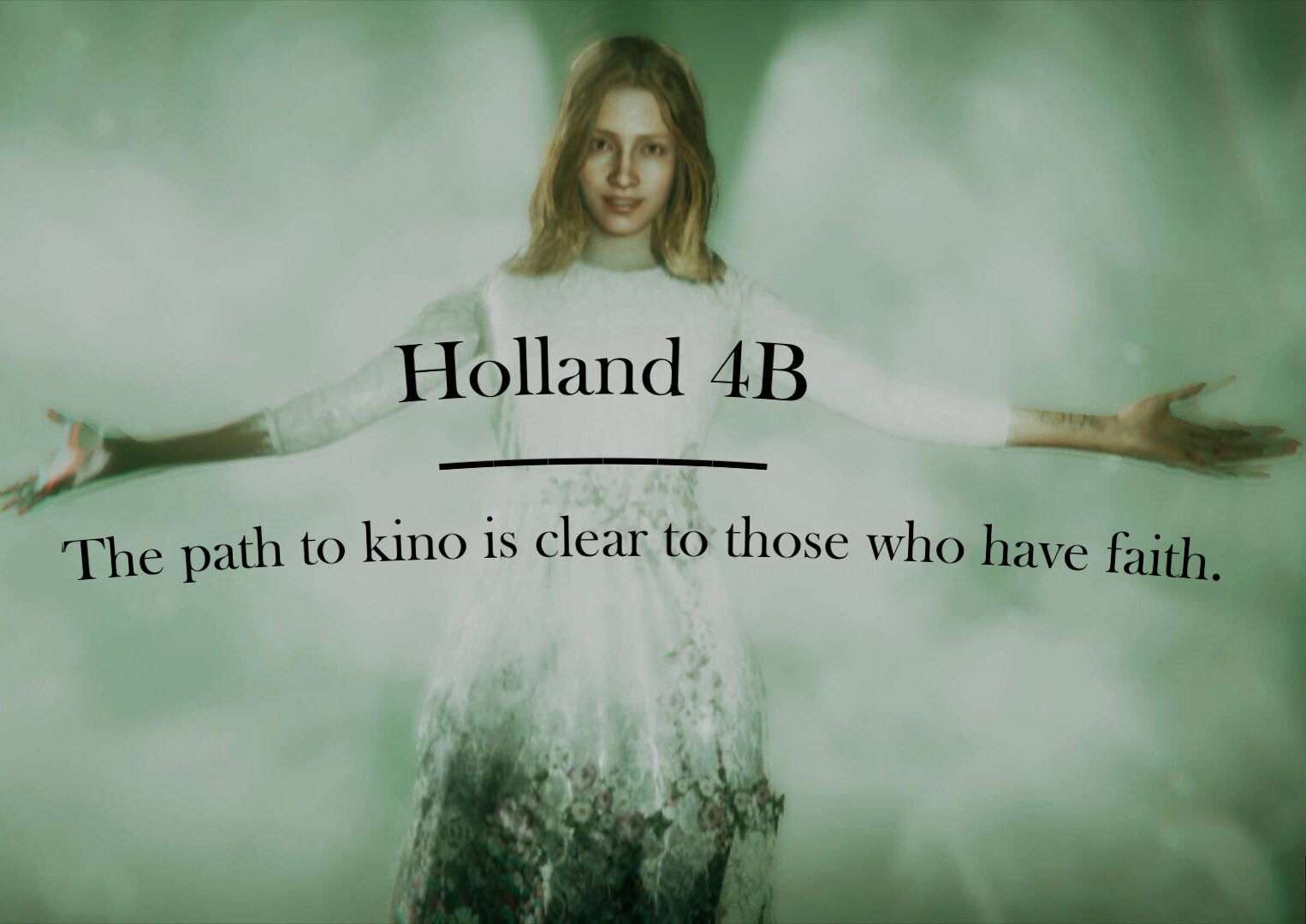

A model made to continue my previous work on [Magnum 4B](https://huggingface.co./anthracite-org/magnum-v2-4b): a small model for creative writing and general assistant tasks, finetuned on top of [IntervitensInc/Llama-3.1-Minitron-4B-Width-Base-chatml](https://huggingface.co./IntervitensInc/Llama-3.1-Minitron-4B-Width-Base-chatml). This model is meant to be more coherent and generally better than the 4B at both writing and assistant tasks.

# Quants (Thanks Lucy <3)

GGUF: https://huggingface.co./NewEden/Holland-4B-gguf

EXL2: https://huggingface.co./NewEden/Holland-4B-exl2

## Prompting

The model has been instruct-tuned with ChatML formatting. A typical input looks like this:

```py
"""<|im_start|>system
system prompt<|im_end|>
<|im_start|>user
Hi there!<|im_end|>
<|im_start|>assistant
Nice to meet you!<|im_end|>
<|im_start|>user
Can I ask a question?<|im_end|>
<|im_start|>assistant
"""
```
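If you are assembling prompts by hand rather than through a chat template, the layout above can be sketched as a small helper. This is a minimal illustration, not part of the model's tooling: the function name `build_chatml_prompt` and the `add_generation_prompt` flag are hypothetical names chosen for this example.

```python
from typing import Dict, List


def build_chatml_prompt(
    messages: List[Dict[str, str]], add_generation_prompt: bool = True
) -> str:
    """Render {"role", "content"} dicts as a single ChatML prompt string."""
    parts = []
    for message in messages:
        # Each turn is wrapped as <|im_start|>role\ncontent<|im_end|>\n
        parts.append(
            f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n"
        )
    if add_generation_prompt:
        # Leave an open assistant turn for the model to complete.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


messages = [
    {"role": "system", "content": "system prompt"},
    {"role": "user", "content": "Hi there!"},
    {"role": "assistant", "content": "Nice to meet you!"},
    {"role": "user", "content": "Can I ask a question?"},
]

prompt = build_chatml_prompt(messages)
print(prompt)
```

Printing `prompt` should reproduce the example input shown above, ending with an open `<|im_start|>assistant` turn.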

## Support

## No longer needed as llama.cpp (LCPP) has merged support - just update.

To run inference on this model, you'll need to use Aphrodite, vLLM or EXL2/tabbyAPI, as llama.cpp hasn't yet merged the required pull request to fix the Llama 3.1 rope_freqs issue with custom head dimensions.

However, you can work around this by quantizing the model yourself to create a functional GGUF file. Note that until [this PR](https://github.com/ggerganov/llama.cpp/pull/9141) is merged, the context will be limited to 8k tokens.

To create a working GGUF file, make the following adjustments:

1. Remove the `"rope_scaling": {}` entry from `config.json`

2. Change `"max_position_embeddings"` to `8192` in `config.json`

These modifications should allow you to use the model with llama.cpp, albeit with the mentioned context limitation.
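The two `config.json` adjustments can also be scripted. The sketch below is one possible way to do it with the Python standard library. The helper names `patch_config_for_gguf` and `patch_config_file` are hypothetical, and the values in `example` are illustrative placeholders rather than the model's real configuration.

```python
import json
from pathlib import Path


def patch_config_for_gguf(config: dict, max_positions: int = 8192) -> dict:
    """Return a copy of the config with the two adjustments applied."""
    patched = dict(config)
    # Step 1: drop the rope_scaling entry if it is present.
    patched.pop("rope_scaling", None)
    # Step 2: cap the context length at 8192 positions.
    patched["max_position_embeddings"] = max_positions
    return patched


def patch_config_file(path: str) -> None:
    """Apply the adjustments to a config.json file in place."""
    config_path = Path(path)
    config = json.loads(config_path.read_text(encoding="utf-8"))
    patched = patch_config_for_gguf(config)
    config_path.write_text(json.dumps(patched, indent=2) + "\n", encoding="utf-8")


# Illustrative placeholder values, not the model's actual config.
example = {
    "rope_scaling": {"rope_type": "llama3"},
    "max_position_embeddings": 131072,
    "model_type": "llama",
}
patched_example = patch_config_for_gguf(example)
print(patched_example)
```

Calling `patch_config_file("config.json")` inside a local copy of the model directory would rewrite the file. Keeping a backup of the original is advisable, since the change is only needed for the GGUF workaround.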

## Axolotl config

<details><summary>See axolotl config</summary>

Axolotl version: `0.4.1`

```yaml
base_model: IntervitensInc/Llama-3.1-Minitron-4B-Width-Base-chatml
model_type: AutoModelForCausalLM
tokenizer_type: AutoTokenizer

load_in_8bit: false
load_in_4bit: false
strict: false

datasets:
  - path: NewEden/Gryphe-3.5-16k-Subset
    type: sharegpt
    conversation: chatml
  - path: Epiculous/Synthstruct-Gens-v1.1-Filtered-n-Cleaned
    type: sharegpt
    conversation: chatml
  - path: anthracite-org/kalo-opus-instruct-22k-no-refusal
    type: sharegpt
    conversation: chatml
  - path: PJMixers/lodrick-the-lafted_OpusStories-ShareGPT
    type: sharegpt
    conversation: chatml

chat_template: chatml

val_set_size: 0.01
output_dir: ./outputs/out

adapter:
lora_r:
lora_alpha:
lora_dropout:
lora_target_linear:

sequence_len: 16384
# sequence_len: 32768
sample_packing: true
eval_sample_packing: false
pad_to_sequence_len: true

plugins:
  - axolotl.integrations.liger.LigerPlugin
liger_rope: true
liger_rms_norm: true
liger_swiglu: true
liger_fused_linear_cross_entropy: true

wandb_project:
wandb_entity:
wandb_watch:
wandb_name:
wandb_log_model:

gradient_accumulation_steps: 32
micro_batch_size: 1
num_epochs: 2
optimizer: adamw_bnb_8bit
#optimizer: paged_adamw_8bit
lr_scheduler: cosine
learning_rate: 0.00002
weight_decay: 0.05

train_on_inputs: false
group_by_length: false
bf16: auto
fp16:
tf32: true

gradient_checkpointing: true
early_stopping_patience:
resume_from_checkpoint:
local_rank:
logging_steps: 1
xformers_attention:
flash_attention: true

warmup_ratio: 0.1
evals_per_epoch: 4
eval_table_size:
eval_max_new_tokens: 128
saves_per_epoch: 1

debug:
deepspeed: /workspace/axolotl/deepspeed_configs/zero2.json
#deepspeed:
fsdp:
fsdp_config:

special_tokens:
  pad_token: <|finetune_right_pad_id|>
```

</details><br>

## Credits

- [anthracite-org/kalo-opus-instruct-22k-no-refusal](https://huggingface.co./datasets/anthracite-org/kalo-opus-instruct-22k-no-refusal)

- [NewEden/Gryphe-3.5-16k-Subset](https://huggingface.co./datasets/NewEden/Gryphe-3.5-16k-Subset)

- [Epiculous/Synthstruct-Gens-v1.1-Filtered-n-Cleaned](https://huggingface.co./datasets/Epiculous/Synthstruct-Gens-v1.1-Filtered-n-Cleaned)

- [lodrick-the-lafted/OpusStories](https://huggingface.co./datasets/lodrick-the-lafted/OpusStories)

I couldn't have made this model without the help of [Kubernetes_bad](https://huggingface.co./kubernetes-bad) and the support of [Lucy Knada](https://huggingface.co./lucyknada).

## Training

The training was done for 2 epochs. We used 2 x [RTX 6000](https://store.nvidia.com/en-us/nvidia-rtx/products/nvidia-rtx-6000-ada-generation/) GPUs, graciously provided by [Kubernetes_Bad](https://huggingface.co./kubernetes-bad), for the full-parameter fine-tuning of the model.
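For a rough sense of scale, the effective batch size implied by the axolotl config can be worked out as below. This is a back-of-the-envelope sketch, assuming both GPUs act as data-parallel ranks (which is how DeepSpeed ZeRO-2 normally runs). The token count is an upper bound, because packed sequences are padded to `sequence_len`.

```python
# Values taken from the axolotl config above.
micro_batch_size = 1
gradient_accumulation_steps = 32
sequence_len = 16384

# Assumption: both GPUs are used as data-parallel ranks.
num_gpus = 2

# Sequences consumed per optimizer step across all ranks.
sequences_per_step = micro_batch_size * gradient_accumulation_steps * num_gpus

# Upper bound on tokens per optimizer step (padding included).
tokens_per_step = sequences_per_step * sequence_len

print(sequences_per_step)  # 64
print(tokens_per_step)     # 1048576
```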

[<img src="https://raw.githubusercontent.com/OpenAccess-AI-Collective/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/OpenAccess-AI-Collective/axolotl)

## Safety

...