Update README.md

README.md

CHANGED

|

@@ -1,115 +0,0 @@

|

|

| 1 |

-

---

|

| 2 |

-

language:

|

| 3 |

-

- en

|

| 4 |

-

license: cc-by-nc-4.0

|

| 5 |

-

datasets:

|

| 6 |

-

- facebook/asset

|

| 7 |

-

- wi_locness

|

| 8 |

-

- GEM/wiki_auto_asset_turk

|

| 9 |

-

- discofuse

|

| 10 |

-

- zaemyung/IteraTeR_plus

|

| 11 |

-

- jfleg

|

| 12 |

-

- grammarly/coedit

|

| 13 |

-

metrics:

|

| 14 |

-

- sari

|

| 15 |

-

- bleu

|

| 16 |

-

- accuracy

|

| 17 |

-

widget:

|

| 18 |

-

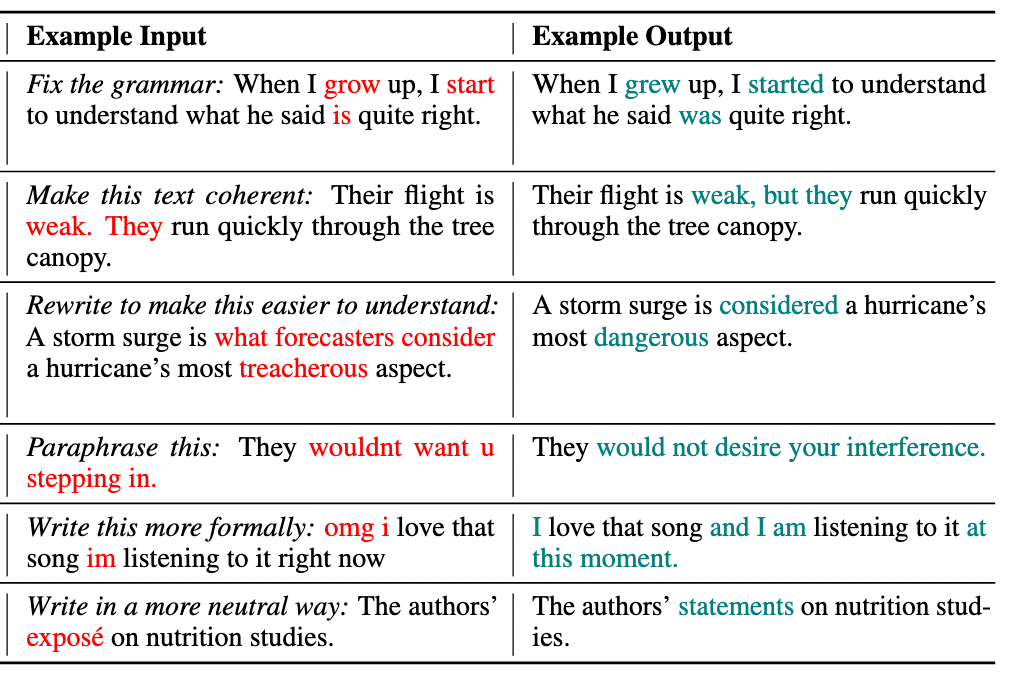

- text: 'Fix the grammar: When I grow up, I start to understand what he said is quite

|

| 19 |

-

right.'

|

| 20 |

-

example_title: Fluency

|

| 21 |

-

- text: 'Make this text coherent: Their flight is weak. They run quickly through the

|

| 22 |

-

tree canopy.'

|

| 23 |

-

example_title: Coherence

|

| 24 |

-

- text: 'Rewrite to make this easier to understand: A storm surge is what forecasters

|

| 25 |

-

consider a hurricane''s most treacherous aspect.'

|

| 26 |

-

example_title: Simplification

|

| 27 |

-

- text: 'Paraphrase this: Do you know where I was born?'

|

| 28 |

-

example_title: Paraphrase

|

| 29 |

-

- text: 'Write this more formally: omg i love that song im listening to it right now'

|

| 30 |

-

example_title: Formalize

|

| 31 |

-

- text: 'Write in a more neutral way: The authors'' exposé on nutrition studies.'

|

| 32 |

-

example_title: Neutralize

|

| 33 |

-

---

|

-# Model Card for CoEdIT-Large
-
-This model was obtained by fine-tuning the corresponding `google/flan-t5-large` model on the CoEdIT dataset. Details of the dataset can be found in our paper and repository.
-
-**Paper:** CoEdIT: Text Editing by Task-Specific Instruction Tuning
-
-**Authors:** Vipul Raheja, Dhruv Kumar, Ryan Koo, Dongyeop Kang
-
-## Model Details
-
-### Model Description
-
-- **Language(s) (NLP)**: English
-- **Finetuned from model:** google/flan-t5-large
-
-### Model Sources
-
-- **Repository:** https://github.com/vipulraheja/coedit
-- **Paper:** https://arxiv.org/abs/2305.09857
-

-## How to use
-We make available the models presented in our paper.
-
-<table>
-<tr>
-<th>Model</th>
-<th>Number of parameters</th>
-</tr>
-<tr>
-<td>CoEdIT-large</td>
-<td>770M</td>
-</tr>
-<tr>
-<td>CoEdIT-xl</td>
-<td>3B</td>
-</tr>
-<tr>
-<td>CoEdIT-xxl</td>
-<td>11B</td>
-</tr>
-</table>
-
-

-## Uses
-
-## Text Revision Task
-Given an edit instruction and an original text, our model can generate the edited version of the text.<br>
-
-
-
-## Usage
-```python
-from transformers import AutoTokenizer, T5ForConditionalGeneration
-
-tokenizer = AutoTokenizer.from_pretrained("grammarly/coedit-large")
-model = T5ForConditionalGeneration.from_pretrained("grammarly/coedit-large")
-input_text = 'Fix grammatical errors in this sentence: When I grow up, I start to understand what he said is quite right.'
-input_ids = tokenizer(input_text, return_tensors="pt").input_ids
-outputs = model.generate(input_ids, max_length=256)
-edited_text = tokenizer.decode(outputs[0], skip_special_tokens=True)
-```
-
-

-#### Software
-https://github.com/vipulraheja/coedit
-
-## Citation
-
-**BibTeX:**
-```
-@article{raheja2023coedit,
-title={CoEdIT: Text Editing by Task-Specific Instruction Tuning},
-author={Vipul Raheja and Dhruv Kumar and Ryan Koo and Dongyeop Kang},
-year={2023},
-eprint={2305.09857},
-archivePrefix={arXiv},
-primaryClass={cs.CL}
-}
-```
-
-**APA:**
-Raheja, V., Kumar, D., Koo, R., & Kang, D. (2023). CoEdIT: Text Editing by Task-Specific Instruction Tuning. ArXiv. /abs/2305.09857
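
Note on the removed usage snippet: it passes the edit instruction and the original text to the model as a single string, joined by a colon. The following is a minimal sketch of that pattern, not code from the CoEdIT repository. The helper names `build_prompt` and `edit_texts` are hypothetical, and batching several prompts with padding is an assumption that the removed README does not show.

```python
def build_prompt(instruction: str, text: str) -> str:
    """Join an edit instruction and the source text as '<instruction>: <text>'."""
    # Drop a trailing colon on the instruction so the separator is not doubled.
    return f"{instruction.strip().rstrip(':')}: {text.strip()}"


def edit_texts(prompts, model_name="grammarly/coedit-large", max_length=256):
    """Run a batch of prompts through the model (downloads weights on first call)."""
    # Imported lazily so build_prompt can be used without transformers installed.
    from transformers import AutoTokenizer, T5ForConditionalGeneration

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = T5ForConditionalGeneration.from_pretrained(model_name)
    # Padding lets prompts of different lengths share one tensor batch.
    batch = tokenizer(list(prompts), return_tensors="pt", padding=True)
    outputs = model.generate(**batch, max_length=max_length)
    return tokenizer.batch_decode(outputs, skip_special_tokens=True)


prompts = [
    build_prompt("Fix the grammar", "When I grow up, I start to understand what he said is quite right."),
    build_prompt("Paraphrase this", "Do you know where I was born?"),
]
print(prompts[1])  # Paraphrase this: Do you know where I was born?
```

Calling `edit_texts(prompts)` would return one edited string per prompt. It is left uncalled here because it downloads the model weights.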
